Apache Paimon (incubating) is a streaming data lake storage technology that provides users with high-throughput, low-latency data ingestion, streaming subscription, and real-time query capabilities. Paimon uses open data formats and technology concepts to connect with mainstream computing engines in the industry, such as Apache Flink, Spark, and Trino, to promote the popularization and development of the streaming lakehouse architecture.

As a unified computing and analysis engine for big data processing, Apache Spark not only supports high-level API use in multiple languages, but also supports a wide range of big data scenario applications including Spark SQL for structured data processing, MLlib for machine learning, GraphX for graph processing, and Structured Streaming for incremental computing and stream processing. Spark has become an essential part of the big data software stack. As a new player in the data lake field, Paimon's integration with Spark can facilitate Paimon's usability and implementation in both quasi-real-time and offline lakehouse scenarios.

Next, we will introduce the main features in the new version of Paimon that are supported by the Spark-based computing engine.

Schema evolution is a crucial feature in the data lake field. It enables users to modify the current schema of a table to fit existing data or new data that changes over time while maintaining data integrity and consistency.

In offline scenarios, you can use the ALTER TABLE syntax provided by computing engines such as Spark or Flink to modify the schema. In certain scenarios, however, it is difficult to capture schema changes in the input data accurately and in real time. Moreover, updating the schema of the current table in streaming scenarios requires the following steps, which is relatively inefficient:

(1) Stop the streaming job.

(2) Complete the schema update operation.

(3) Restart the streaming job.

Paimon allows you to automatically merge the schemas of the source data and the current table data while data are being written, and use the merged schema as the latest schema of the table. You only need to configure the write.merge-schema parameter.

    data.write
      .format("paimon")
      .mode("append")
      .option("write.merge-schema", "true")
      .save(location)

Adding columns is a common case: when you append or overwrite data, the table schema is automatically adjusted to include one or more new columns.

Assume that the schema of the original table is:

    a INT
    b STRING

The schema of the new data is:

    a INT
    b STRING
    c LONG
    d Map<String, Double>

After the operation is completed, the schema of the table is changed to:

    a INT
    b STRING
    c LONG
    d Map<String, Double>

The schema evolution of Paimon also supports data type promotion, such as promoting Int to Long and Long to Decimal. You can continue to write data based on the preceding table. Assume that the schema of the new data is:

    a Long
    b STRING
    c Decimal
    d Map<String, Double>

After the operation is completed, the schema of the table is changed to:

    a Long
    b STRING
    c Decimal
    d Map<String, Double>

As shown in the preceding example, Paimon supports promoting field types, such as widening numeric types to higher precision (from Int to Long and from Long to Decimal). Paimon also supports some forced conversions, such as converting String to Date or Long to Int, but these require explicitly enabling the write.merge-schema.explicit-cast parameter.

    data.write
      .format("paimon")
      .mode("append")
      .option("write.merge-schema", "true")
      .option("write.merge-schema.explicit-cast", "true")
      .save(location)

Assume that the schema of the original table is:

    a LONG
    b STRING // The content is in the format of 2023-08-01.

The schema of the new data is:

    a INT
    b DATE

After the operation is completed, the schema of the table is changed to:

    a INT
    b DATE

Note:

Schema evolution during data writing (appending or overwriting) does not support deleting or renaming columns, nor does it support type conversions that fall outside the scope of the implicit and explicit conversions. If a specific value cannot be converted to the target type, an error is reported and the operation is terminated to prevent data corruption.
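As a rough illustration of the merge rules in this section, the following self-contained Scala sketch models a schema as an ordered list of name/type pairs. It is a toy model under stated assumptions, not Paimon's implementation: the `SchemaMergeSketch` object, its `merge` and `mergeType` functions, and the string type names are all hypothetical, and only the promotions and explicit casts named in the text are encoded.

```scala
// Toy model of schema merging (hypothetical names; not Paimon's actual code).
object SchemaMergeSketch {
  // A schema is an ordered list of (column name, type name) pairs.
  type Schema = Vector[(String, String)]

  // Widening promotions named in the text: INT -> LONG -> DECIMAL.
  private val rank = Map("INT" -> 0, "LONG" -> 1, "DECIMAL" -> 2)

  // Conversions that the text says need write.merge-schema.explicit-cast.
  private val explicitOnly = Set("STRING" -> "DATE", "LONG" -> "INT")

  def mergeType(current: String, incoming: String, explicitCast: Boolean): Either[String, String] =
    if (current == incoming) Right(current)
    else (rank.get(current), rank.get(incoming)) match {
      case (Some(c), Some(i)) if i > c => Right(incoming) // implicit promotion
      case _ if explicitCast && explicitOnly((current, incoming)) => Right(incoming)
      case _ => Left(s"cannot convert $current to $incoming")
    }

  def merge(table: Schema, data: Schema, explicitCast: Boolean = false): Either[String, Schema] = {
    val incoming = data.toMap
    // Existing columns keep their position; their types may be promoted or cast.
    val updated = table.foldLeft[Either[String, Schema]](Right(Vector.empty)) {
      case (Left(err), _) => Left(err)
      case (Right(acc), (name, tpe)) =>
        incoming.get(name) match {
          case None    => Right(acc :+ (name -> tpe)) // column absent from the new data
          case Some(t) => mergeType(tpe, t, explicitCast).map(m => acc :+ (name -> m))
        }
    }
    // Columns that only exist in the new data are appended in their incoming order.
    val known = table.map(_._1).toSet
    updated.map(_ ++ data.filterNot { case (n, _) => known(n) })
  }
}
```

In this model, merging (a INT, b STRING) with (a LONG, b STRING, c DECIMAL) yields (a LONG, b STRING, c DECIMAL), while merging (a LONG) with (a INT) is rejected unless `explicitCast` is set to true.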

Spark Structured Streaming is a scalable and fault-tolerant stream processing engine built on the Spark SQL engine. It lets you express a streaming computation in the same way you would express a batch computation on static data. The Spark SQL engine runs it incrementally and continuously, updating the final result as streaming data continues to arrive. Structured Streaming supports streaming aggregations, event-time windows, and stream-to-batch joins. Spark achieves end-to-end exactly-once guarantees through checkpointing and write-ahead logs. In short, Structured Streaming provides fast, scalable, fault-tolerant, end-to-end exactly-once stream processing without the user having to reason about streaming.

In versions 0.5 and 0.6, Paimon gradually improved its support for reading and writing with Spark Structured Streaming, and it now provides streaming read and write capabilities based on the Spark engine.

Spark Structured Streaming defines three output modes. Paimon only supports append mode and complete mode.

    // `df` is the upstream source data.
    val stream = df
      .writeStream
      .outputMode("append")
      .option("checkpointLocation", "/path/to/checkpoint")
      .format("paimon")
      .start("/path/to/paimon/sink/table")

By combining the trigger policies supported by Spark with the streaming capabilities added by Paimon, a Paimon table can serve as a streaming source in a wide range of application scenarios.

Paimon provides several scan modes (ScanMode) that let you specify, through the corresponding parameters, the initial state of the data read from a Paimon table.

| ScanMode | Description |
| --- | --- |
| latest | Read only the data that is subsequently and continuously written. |
| latest-full | Read the data of the current snapshot and the data that is subsequently and continuously written. |
| from-timestamp | Read the data that is continuously written after the timestamp specified by the parameter scan.timestamp-millis. |
| from-snapshot | Read the data that is continuously written after the version specified by the parameter scan.snapshot-id. |
| from-snapshot-full | Read the snapshot data of the version specified by the parameter scan.snapshot-id and the data that is subsequently and continuously written. |
| default | By default, this mode is equivalent to the latest-full mode. If you specify a scan.snapshot-id, this mode is equivalent to the from-snapshot mode. If you specify a scan.timestamp-millis, this mode is equivalent to the from-timestamp mode. |
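The resolution rule in the default row can be sketched as a small pure function over the reader options. This is only an illustration: the `ScanModeSketch` object and `effectiveScanMode` function are hypothetical, and the precedence of scan.snapshot-id over scan.timestamp-millis when both are set is an assumption, since the text does not say which one wins.

```scala
// Toy resolution of the effective scan mode (hypothetical helper; not Paimon's code).
object ScanModeSketch {
  def effectiveScanMode(options: Map[String, String]): String =
    options.get("scan.mode") match {
      // An explicitly chosen mode other than "default" is used as-is.
      case Some(mode) if mode != "default" => mode
      // Otherwise the mode is derived from the other scan options.
      case _ =>
        if (options.contains("scan.snapshot-id")) "from-snapshot" // assumed to take precedence
        else if (options.contains("scan.timestamp-millis")) "from-timestamp"
        else "latest-full"
    }
}
```

For instance, passing only `scan.snapshot-id` resolves to from-snapshot in this sketch, and passing no scan options at all resolves to latest-full.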

Paimon implements Spark's SupportsAdmissionControl interface to provide flow control on the source side. This prevents streaming jobs from failing because a single batch has too much data to process. Paimon currently supports the following ReadLimit implementations.

| ReadLimit parameter | Description |
| --- | --- |
| read.stream.maxFilesPerTrigger | The maximum number of splits returned in a batch. |
| read.stream.maxBytesPerTrigger | The maximum number of bytes returned in a batch. |
| read.stream.maxRowsPerTrigger | The maximum number of rows returned in a batch. |
| read.stream.minRowsPerTrigger | The minimum number of rows returned in a batch. It is used together with maxTriggerDelayMs to form ReadMinRows. |
| read.stream.maxTriggerDelayMs | The maximum delay before a batch is triggered. It is used together with minRowsPerTrigger to form ReadMinRows. |
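To make the interplay of minRowsPerTrigger and maxTriggerDelayMs concrete, the following sketch shows one plausible reading of the combined rule: a batch fires once enough rows are pending, or once the maximum delay has elapsed while some data is waiting. The `ReadMinRowsSketch` object and `shouldTrigger` function are hypothetical, and the exact semantics (in particular, not firing an empty batch) are an assumption rather than a statement of how Paimon or Spark implements it.

```scala
// Toy decision rule for a ReadMinRows-style limit (assumed semantics; hypothetical names).
object ReadMinRowsSketch {
  def shouldTrigger(
      pendingRows: Long,       // rows available but not yet consumed
      msSinceLastBatch: Long,  // time elapsed since the previous batch
      minRows: Long,           // read.stream.minRowsPerTrigger
      maxTriggerDelayMs: Long  // read.stream.maxTriggerDelayMs
  ): Boolean =
    // Fire when enough rows have accumulated, or when the delay is exhausted
    // and there is at least one row to process.
    pendingRows >= minRows || (pendingRows > 0 && msSinceLastBatch >= maxTriggerDelayMs)
}
```

Under these assumptions, a source with 100 pending rows and a 500-row minimum waits until the delay expires, so a slow trickle of data is still processed within a bounded time.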

The following two examples illustrate how to use Paimon with Spark Structured Streaming.

Example 1:

A common streaming incremental ETL scenario.

    import java.util.concurrent.TimeUnit
    import org.apache.spark.sql.functions.{avg, col, window}
    import org.apache.spark.sql.streaming.Trigger
    import spark.implicits._ // enables the $"..." column syntax

    // The schema of the Paimon source table is time LONG, stockId INT, price DOUBLE
    val query = spark.readStream
      .format("paimon")
      .option("scan.mode", "latest")
      .load("/path/to/paimon/source/table")
      .selectExpr("CAST(time AS timestamp) AS timestamp", "stockId", "price")
      .withWatermark("timestamp", "10 seconds")
      .groupBy(window($"timestamp", "5 seconds"), col("stockId"))
      // groupBy must be followed by an aggregation; the average price is assumed here.
      .agg(avg("price").as("avg_price"))
      .writeStream
      .format("console")
      .trigger(Trigger.ProcessingTime(180, TimeUnit.SECONDS))
      .start()

This example reads the data subsequently written to the Paimon table at an interval of 3 minutes, performs the ETL transformation, and then synchronizes the incremental result downstream.

Example 2:

This approach is suitable for data backfill scenarios. It reads data from a specified snapshot of a Paimon table in streaming mode. Data written to the Paimon table after the job starts is not read, and the approximate data size of each batch is limited.

    val query = spark.readStream
      .format("paimon")
      .option("scan.mode", "from-snapshot")
      .option("scan.snapshot-id", 345)
      .option("read.stream.maxBytesPerTrigger", "134217728")
      .load("/path/to/paimon/source/table")
      .writeStream
      .format("console")
      .trigger(Trigger.AvailableNow())
      .start()

In the sample code, Trigger.AvailableNow() is specified so that only the data available in Paimon when the streaming job starts is read. The from-snapshot scan mode is used to read the data written after snapshot ID 345. With maxBytesPerTrigger set to 134217728 bytes (128 MB), Spark Structured Streaming splits the data to be consumed into batches of approximately 128 MB each, so the available data is consumed over multiple batches.
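The effect of a byte limit on batching can be sketched with a simple greedy grouping of split sizes. This is an illustrative model only: the `MaxBytesSketch` object and `planBatches` function are hypothetical, and the greedy strategy (including giving an oversized split a batch of its own) is an assumption, not a description of how Paimon actually plans batches.

```scala
// Toy batch planner for a maxBytesPerTrigger-style limit (hypothetical; not Paimon's code).
object MaxBytesSketch {
  // 128 MB expressed in bytes, the value used in the example above.
  val maxBytesPerTrigger: Long = 128L * 1024 * 1024

  // Greedily groups split sizes (in bytes) into consecutive batches that stay within maxBytes.
  // A split larger than maxBytes still gets a batch of its own so that progress is always made.
  def planBatches(splitSizes: Seq[Long], maxBytes: Long): Vector[Vector[Long]] =
    splitSizes.foldLeft(Vector.empty[Vector[Long]]) { (batches, size) =>
      batches.lastOption match {
        case Some(last) if last.sum + size <= maxBytes => batches.init :+ (last :+ size)
        case _                                         => batches :+ Vector(size)
      }
    }
}
```

With splits of 100 MB, 20 MB, 50 MB, 200 MB, and 10 MB and a 128 MB limit, this model produces four batches: the first two splits together, then one batch for each remaining split.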

Insert Overwrite is a common SQL syntax used to rewrite the entire table or a specified partition in a table. This feature is also supported in the new version of Paimon, which includes both static and dynamic modes.

Overwrite the entire table: You can use the following SQL statement to overwrite the data in the original table with new data, regardless of whether the table is partitioned.

For more information about how to use Paimon in the Spark environment, refer to the Paimon documentation.

    USE paimon;
    CREATE TABLE T (a INT, b STRING) TBLPROPERTIES('primary-key'='a');

    INSERT OVERWRITE T VALUES (1, "a"), (2, "b");
    ----------
    1 a
    2 b
    ----------

    INSERT OVERWRITE T VALUES (1, "a2"), (3, "c");
    ----------
    1 a2
    3 c
    ----------

Overwrite the specified table partition:

    USE paimon;
    CREATE TABLE T (dt STRING, a INT, b STRING)
    TBLPROPERTIES('primary-key'='dt,a')
    PARTITIONED BY(dt);

    INSERT OVERWRITE T VALUES ("2023-10-01", 1, "a"), ("2023-10-02", 2, "b");
    ----------------
    2023-10-01 1 a
    2023-10-02 2 b
    ----------------

    INSERT OVERWRITE T PARTITION (dt = "2023-10-02") VALUES (2, "b2"), (4, "d");
    ----------------
    2023-10-01 1 a
    2023-10-02 2 b2
    2023-10-02 4 d
    ----------------

By default, Insert Overwrite runs in static mode: to overwrite only certain partitions, you must specify them explicitly. You can enable dynamic mode by setting the spark.sql.sources.partitionOverwriteMode parameter to DYNAMIC. In dynamic mode, Paimon automatically determines which partitions the source data touches and overwrites only those partitions.

To use dynamic partition overwrite (DPO), you need to specify the Paimon extension when starting the Spark session:

    --conf spark.sql.extensions=org.apache.paimon.spark.extensions.PaimonSparkSessionExtensions

    USE paimon;
    CREATE TABLE T (dt STRING, a INT, b STRING)
    TBLPROPERTIES('primary-key'='dt,a')
    PARTITIONED BY(dt);

    INSERT OVERWRITE T VALUES ("2023-10-01", 1, "a"), ("2023-10-02", 2, "b");
    ----------------
    2023-10-01 1 a
    2023-10-02 2 b
    ----------------

    SET spark.sql.sources.partitionOverwriteMode=DYNAMIC;
    INSERT OVERWRITE T VALUES ("2023-10-02", 2, "b2"), ("2023-10-02", 4, "d");
    ----------------
    2023-10-01 1 a
    2023-10-02 2 b2
    2023-10-02 4 d
    ----------------

After you set spark.sql.sources.partitionOverwriteMode=DYNAMIC, you no longer need to specify the partition to overwrite (dt="2023-10-02"). Only the partitions that appear in the new data are overwritten, which implements dynamic data overwriting.
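The difference between the overwrite variants can be modeled with a table represented as a map from partition value to rows. This is a toy model for intuition only: the `OverwriteSketch` object, its function names, and the in-memory representation are hypothetical and do not reflect how Paimon stores or rewrites data.

```scala
// Toy model of INSERT OVERWRITE semantics on a table partitioned by dt (hypothetical names).
object OverwriteSketch {
  type Row = (String, Int, String)      // (dt, a, b)
  type Table = Map[String, Vector[Row]] // partition value -> rows in that partition

  private def byPartition(rows: Seq[Row]): Table =
    rows.toVector.groupBy(_._1)

  // Static mode without a PARTITION clause: the whole table is replaced.
  def overwriteAll(table: Table, rows: Seq[Row]): Table =
    byPartition(rows)

  // Static mode with PARTITION (dt = ...): only the named partition is replaced.
  def overwriteStatic(table: Table, dt: String, rows: Seq[(Int, String)]): Table =
    table.updated(dt, rows.toVector.map { case (a, b) => (dt, a, b) })

  // Dynamic mode: every partition present in the new rows is replaced; all others are kept.
  def overwriteDynamic(table: Table, rows: Seq[Row]): Table =
    table ++ byPartition(rows)
}
```

In this model, a dynamic overwrite with rows that all belong to dt = 2023-10-02 gives the same result as a static overwrite that names that partition explicitly, and the 2023-10-01 partition is left untouched in both cases.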

In addition to the common SQL syntax provided by the Spark framework (DDL, DML, queries, and some table information queries), Paimon needs additional SQL syntax that exposes custom operations so that users can manage and explore Paimon tables. The Call Procedure syntax provides framework-level support for this scenario.

Syntax of the procedure:

    CALL procedure_name(table => 'table_identifier', arg1 => '', ...);

Currently, Paimon has implemented three procedures:

| Procedure | Description | Usage |
| --- | --- | --- |
| create_tag | Create a tag for a specified snapshot | CALL create_tag(table => 'T', tag => 'test_tag', snapshot => 2) |
| delete_tag | Delete a created tag | CALL delete_tag(table => 'T', tag => 'test_tag') |
| rollback | Roll back a table to a specified tag or version | CALL rollback(table => 'T', version => '2') |
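When procedures are invoked from application code, the CALL statement with named arguments can be assembled by a small helper. The sketch below is a convenience under stated assumptions: the `CallSketch` object and its `call` function are hypothetical and not part of Paimon, and the quoting rule (strings in single quotes, other values printed as-is) simply mirrors the usage column above.

```scala
// Hypothetical helper that renders a CALL statement with named arguments.
object CallSketch {
  // Strings are single-quoted; other values (such as snapshot IDs) are printed as-is.
  private def render(value: Any): String = value match {
    case s: String =>
      require(!s.contains("'"), "single quotes are not supported in this sketch")
      s"'$s'"
    case other => other.toString
  }

  def call(procedure: String, args: (String, Any)*): String =
    args
      .map { case (name, value) => s"$name => ${render(value)}" }
      .mkString(s"CALL $procedure(", ", ", ")")
}
```

For example, `CallSketch.call("create_tag", "table" -> "T", "tag" -> "test_tag", "snapshot" -> 2)` produces the create_tag statement shown in the table, which could then be passed to `spark.sql` in a session that has the Paimon extension enabled.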

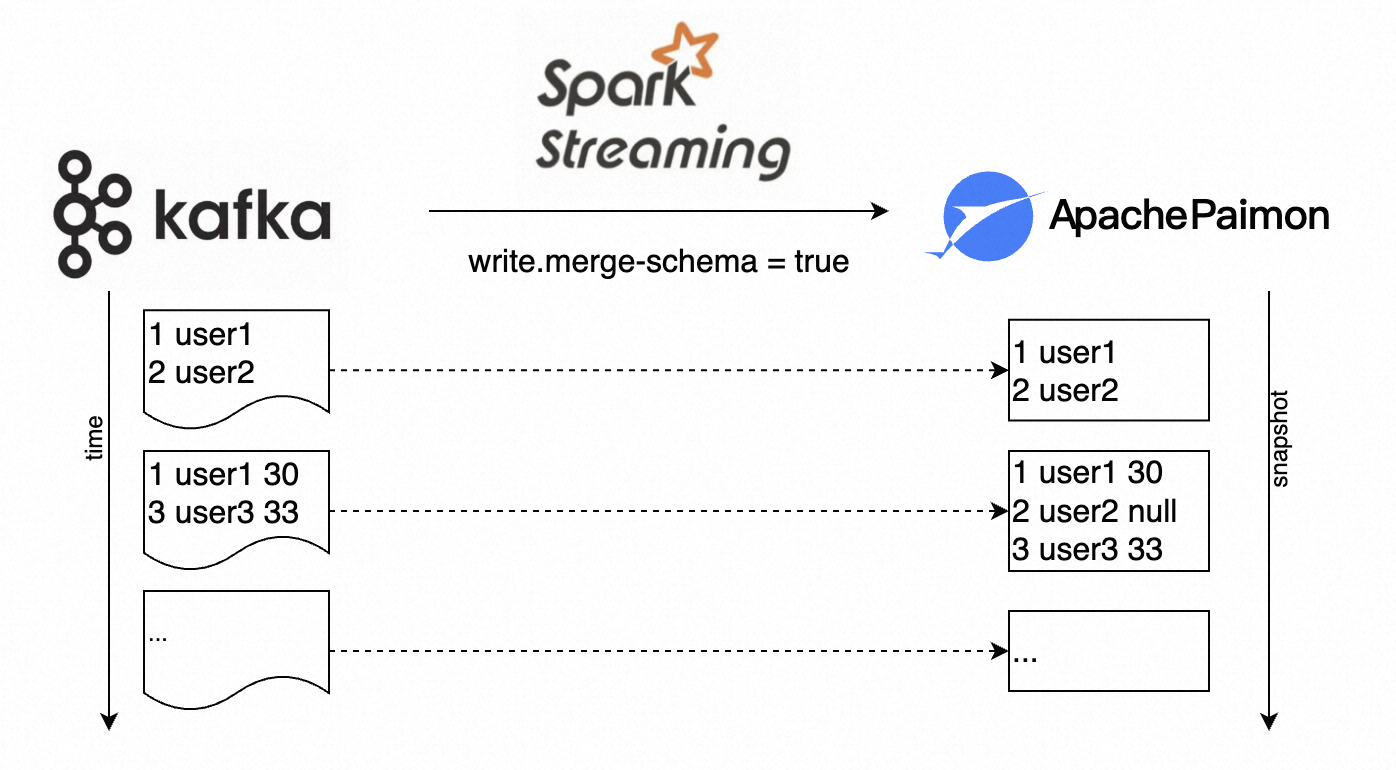

The following example shows how to use schema evolution in streaming mode. Real-time input data is synchronized to a user table that originally has only the userId and name columns. At a certain point, the input data gains the age attribute. With schema evolution, you can merge the metadata and write the new data without stopping the streaming job.

    import org.apache.spark.sql.SQLContext
    import org.apache.spark.sql.execution.streaming.MemoryStream
    import spark.implicits._ // provides the encoder for the tuple type

    // MemoryStream needs an implicit SQLContext.
    implicit val sqlContext: SQLContext = spark.sqlContext

    // The definition of the original table.
    // CREATE TABLE T (userId INT, name STRING) TBLPROPERTIES ('primary-key'='userId');
    // -- Assumed data of the original table that are written in streaming mode --
    // 1 user1
    // 2 user2
    // -------------------------

    // Use MemoryStream to simulate upstream streaming data.
    val inputData = MemoryStream[(Int, String, Int)]
    val stream = inputData
      .toDS()
      .toDF("userId", "name", "age")
      .writeStream
      .option("checkpointLocation", "/path/to/checkpoint")
      .option("write.merge-schema", "true")
      .format("paimon")
      .start("/path/to/user_table")

    inputData.addData((1, "user1", 30), (3, "user3", 33))
    stream.processAllAvailable()

    // -- Table data after the batch data are written --
    // 1 user1 30
    // 2 user2 null
    // 3 user3 33
    // ---------------------------

Paimon was incubated in the Flink community and originated from streaming data warehouses, but it is much more than that. Paimon will continue to focus on deep integration with other engines like Apache Spark and support for scenarios like offline lakehouses. In the future, the community will gradually expand its support for the Spark engine to include more Spark SQL syntax, such as Update and Merge Into. Additionally, there will be further optimization in terms of read/write performance.
