By miHoYo Big Data Development Team

In recent years, the maturation of various cloud-native technologies like containers, microservices, and Kubernetes has led more companies to adopt cloud-native approaches for deploying and running enterprise applications such as AI and big data. Taking Spark as an example, running it on the cloud leverages the elastic resources, operational control, and storage services of the public cloud, leading to numerous successful Spark on Kubernetes implementations in the industry.

During the 2023 Apsara Conference, Anming Du, a big data technology expert from miHoYo's Data Platform Group, discussed the objectives, explorations, and practices involved in upgrading miHoYo's big data architecture to be cloud-native. He explained how the Spark on Kubernetes architecture, built on Alibaba Cloud Container Service for Kubernetes (ACK), delivers elastic computing, cost savings, and decoupled storage and computation.

miHoYo's business has seen rapid growth, resulting in a swift increase in big data offline storage and computation tasks. The early big data offline architecture could no longer meet the emerging scenarios and demands.

To address the original architecture's lack of flexibility, complicated operations and maintenance, and low resource utilization, we began researching how to make our big data infrastructure cloud-native in the second half of 2022. We ultimately implemented the Spark on Kubernetes + OSS-HDFS solution on Alibaba Cloud. It has been running stably in the production environment for about a year, yielding three major benefits: elastic computing, cost savings, and decoupled storage and computing.

1. Elastic computing

With periodic version updates, new in-game events, and game launches, demand for offline computing resources can fluctuate dramatically, sometimes reaching tens or even hundreds of times the usual level. When Spark tasks are scheduled on Kubernetes, the cluster's inherent elasticity easily absorbs these consumption peaks.

2. Cost savings

Thanks to the strong elasticity of ACK clusters, all computing resources are requested on demand and released when idle. By customizing Spark components and making full use of ECI spot instances, we have cut costs by up to 50% for the same computing tasks and resource consumption.

3. Decoupled storage and computing

Spark running on Kubernetes utilizes the computing resources of the Kubernetes cluster, with data access gradually transitioning from HDFS and OSS to OSS-HDFS. Celeborn is employed for shuffle read and write operations, and the architecture effectively decouples computation from storage, making it easy to maintain and scale.

As we all know, the Spark engine can support and run on a variety of resource managers, such as YARN, Kubernetes, and Mesos. In big data scenarios, most companies in China still run Spark tasks on YARN clusters.

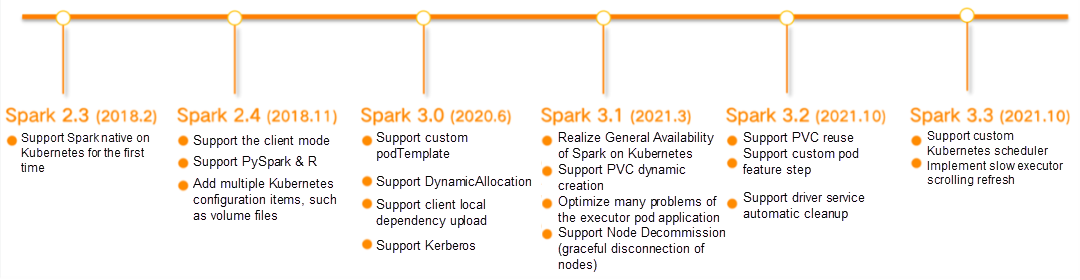

Spark first supported Kubernetes in version 2.3, and that support became generally available only in version 3.1, released in March 2021, much later than Spark's YARN support. Although Spark on Kubernetes still lags somewhat in maturity and stability, it delivers clear benefits such as elastic computing and cost savings. Major companies therefore keep exploring it, and the architecture for running Spark on Kubernetes continues to evolve in the process.

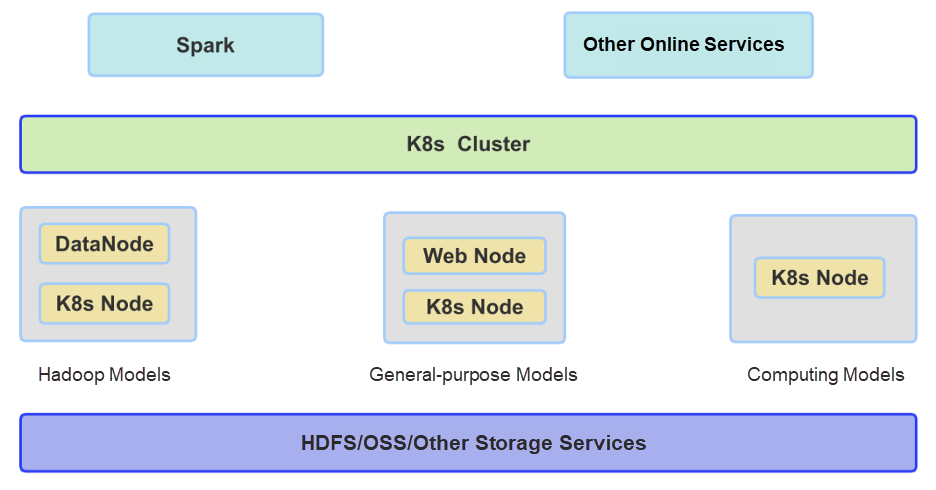

Currently, most companies still run Spark tasks on Kubernetes using a hybrid (co-located) deployment. This design relies on different business systems peaking at different times: offline big data workloads typically peak from midnight to 9 a.m., whereas web-facing application microservices and BI systems usually peak during the day. Outside their peak hours, the nodes of these business systems can be added to the Kubernetes namespace used by Spark. As shown in the following figure, Spark and other online application services are deployed on the same Kubernetes cluster.

The advantage of this architecture is that co-locating offline services and running them off-peak improves machine utilization and reduces costs. However, the drawbacks are equally clear: the architecture is complex to implement and relatively expensive to maintain. In addition, strict resource isolation is hard to achieve, especially at the network level, so services inevitably interfere with one another. Moreover, we believe this approach does not fit the cloud-native philosophy or future trends.

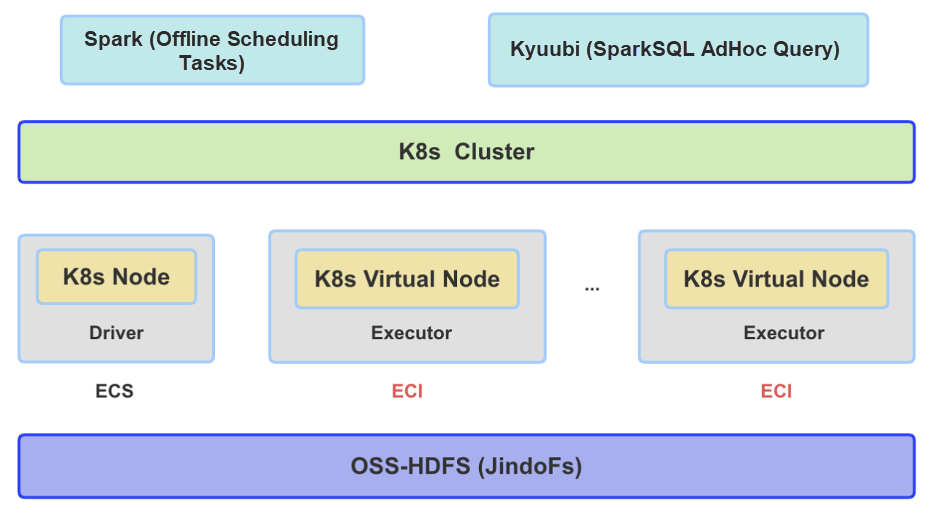

Considering these drawbacks of hybrid deployment, we designed and adopted a new, more cloud-native architecture. We use OSS-HDFS (JindoFS) as the underlying storage and Alibaba Cloud ACK for the computing cluster, and we chose Spark 3.2.3, a feature-rich and stable release.

OSS-HDFS fully complies with the HDFS protocol and offers advantages such as unlimited capacity and support for hot and cold data storage. It also supports directory atomicity and millisecond-level rename operations, making it highly suitable for offline data warehouses and capable of replacing existing HDFS and OSS.

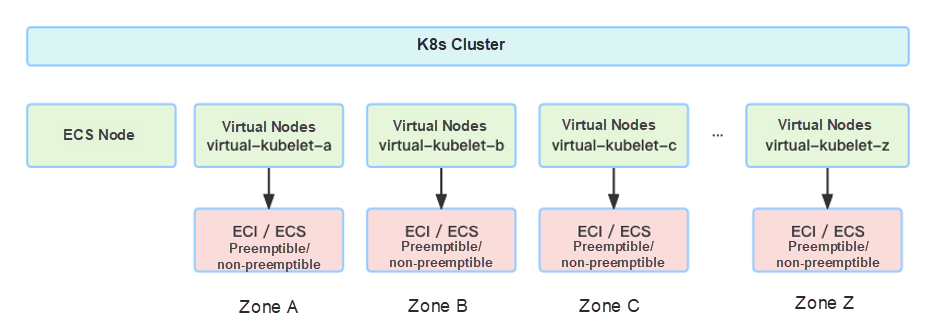

ACK provides a high-performance, scalable container application management service that supports lifecycle management of enterprise-grade containerized applications on Kubernetes. Elastic Compute Service (ECS) is Alibaba Cloud's well-known virtual server offering, while Elastic Container Instance (ECI) is a serverless container runtime service whose instances can be provisioned and released within seconds.

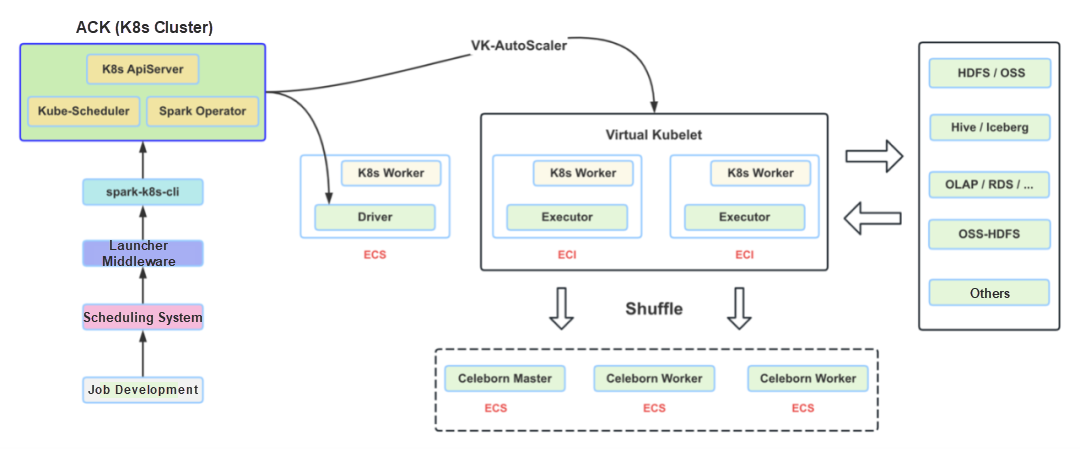

This architecture is simple and easy to maintain. Leveraging the elastic capabilities of ECI at the underlying layer enables Spark tasks to handle peak traffic with ease. Spark executors are scheduled to run on ECI nodes, maximizing the elasticity of computing tasks and reducing costs. The overall architecture is illustrated in the following figure.

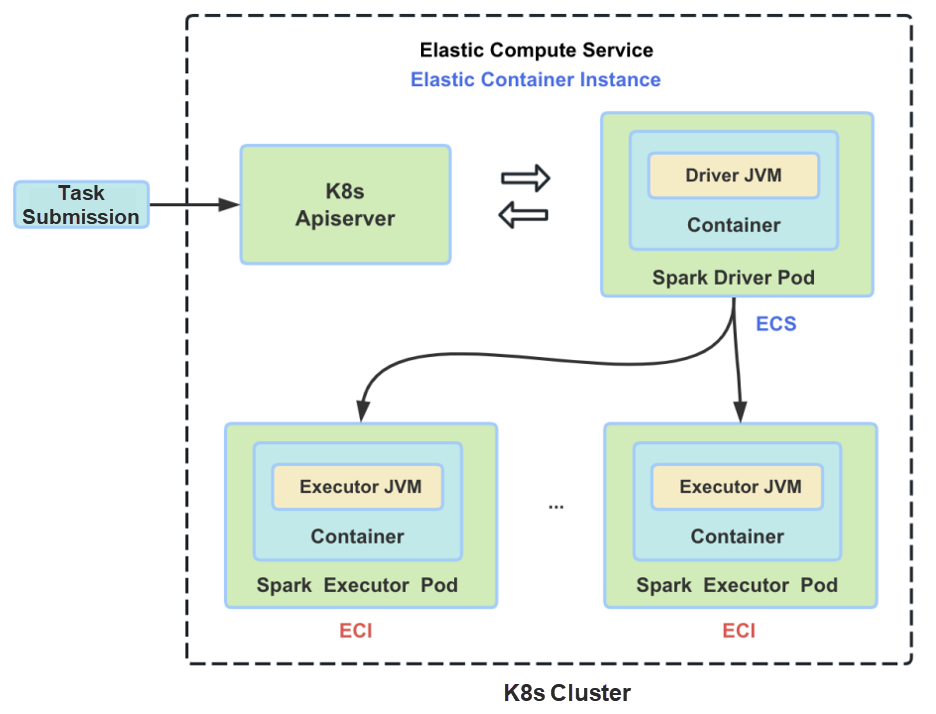

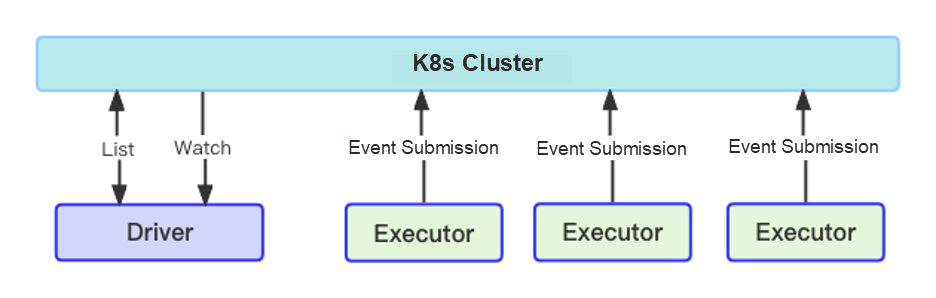

Before diving into the specifics, here is a quick overview of how Spark runs on Kubernetes. In Kubernetes, a pod is the smallest deployable unit. The Spark driver and each executor run as separate pods, and each pod gets a unique IP address. A pod may contain one or more containers, and the driver and executor Java Virtual Machine (JVM) processes start, run, and terminate inside these containers.

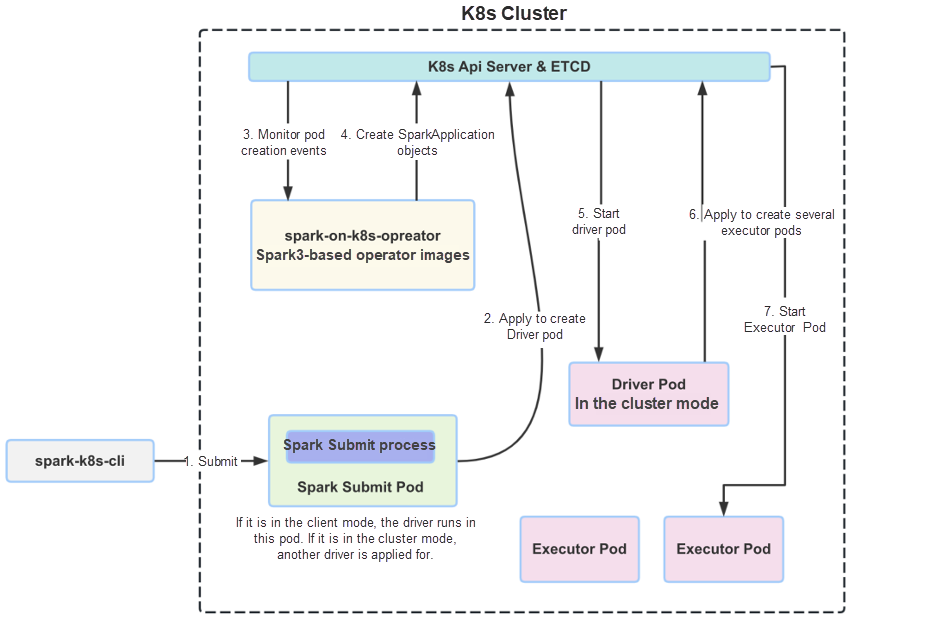

Once a Spark job is submitted to the Kubernetes cluster, the driver pod launches first. The driver then requests executor pods from the Apiserver as needed, and the executors run the assigned tasks. When the job completes, the driver is responsible for deleting all executor pods. Below is a simplified diagram of this relationship.

The entire execution process of a task is depicted in the figure below. After a user develops a Spark job, it is released to the scheduling system together with the necessary runtime parameters. On schedule, the scheduling system passes the job to our in-house launcher middleware, spark-k8s-cli, which submits it to the Kubernetes cluster. After submission, the Spark driver pod starts first and requests executor pods from the cluster. While running their tasks, the executors interact with external big data components such as Hive, Iceberg, OLAP databases, and OSS-HDFS, and Celeborn handles data shuffling between executors.

Different companies have their unique ways of submitting Spark jobs to Kubernetes clusters. First, let's look at the common current methods before delving into the task submission and management approach we use in production.

The first method is to submit jobs directly with the spark-submit command, which Spark supports natively. It is simple to integrate and matches users' habits. However, it offers no convenient way to track and manage job status, automatically configure the Spark UI service and ingress, or clean up resources after completion, so it is not ideal for production environments.
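For reference, a native submission to Kubernetes in cluster mode looks roughly like the command assembled below. This minimal Python sketch only builds the argv list; the API server address, image, namespace, and jar path in the usage are placeholders, while `--master k8s://...`, `--deploy-mode cluster`, `spark.kubernetes.container.image`, `spark.kubernetes.namespace`, and `spark.executor.instances` are standard Spark options.

```python
from typing import Dict, List, Optional


def build_spark_submit(
    api_server: str,
    image: str,
    namespace: str,
    main_class: str,
    app_jar: str,
    executors: int = 2,
    extra_conf: Optional[Dict[str, str]] = None,
) -> List[str]:
    """Return the argv for a native spark-submit to Kubernetes in cluster mode."""
    conf = {
        "spark.kubernetes.container.image": image,
        "spark.kubernetes.namespace": namespace,
        "spark.executor.instances": str(executors),
    }
    conf.update(extra_conf or {})
    argv = [
        "spark-submit",
        "--master", f"k8s://{api_server}",  # e.g. k8s://https://<host>:<port>
        "--deploy-mode", "cluster",         # the driver itself runs in a pod
        "--class", main_class,
    ]
    for key, value in sorted(conf.items()):
        argv += ["--conf", f"{key}={value}"]
    argv.append(app_jar)
    return argv
```

The list can be handed to `subprocess.run`. Nothing in it tracks job status or cleans up afterwards, which is exactly the gap described above.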

Another common submission method involves the pre-installation of spark-operator on Kubernetes. Clients submit YAML files via kubectl to run Spark jobs. Essentially, this extends the native submission mode, with the actual submission still occurring via spark-submit. Additional features include job management, service/ingress creation and cleanup, task monitoring, and pod enhancement. Although suitable for production, this method lacks seamless integration with big data scheduling platforms and can be complex for those unfamiliar with Kubernetes.
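The YAML a client applies with kubectl describes a `SparkApplication` custom resource. The Python sketch below builds a minimal one as a dict following the `sparkoperator.k8s.io/v1beta2` schema of the open-source spark-operator; the resource sizes, the `spark` service account, and the paths are illustrative assumptions.

```python
import json
from typing import Any, Dict


def spark_application(
    name: str,
    namespace: str,
    image: str,
    main_class: str,
    app_file: str,
    executors: int = 2,
) -> Dict[str, Any]:
    """Build a minimal SparkApplication resource for spark-operator (v1beta2)."""
    return {
        "apiVersion": "sparkoperator.k8s.io/v1beta2",
        "kind": "SparkApplication",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "Scala",
            "mode": "cluster",
            "image": image,
            "mainClass": main_class,
            "mainApplicationFile": app_file,
            "sparkVersion": "3.2.3",
            "restartPolicy": {"type": "Never"},
            # Sizes and service account are illustrative, not recommendations.
            "driver": {"cores": 1, "memory": "2g", "serviceAccount": "spark"},
            "executor": {"cores": 2, "instances": executors, "memory": "4g"},
        },
    }


def to_manifest(app: Dict[str, Any]) -> str:
    """Serialize for `kubectl apply -f -` (kubectl accepts JSON as well as YAML)."""
    return json.dumps(app, indent=2)
```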

In our production environment, we submit jobs using spark-k8s-cli, an executable that we refactored, enhanced, and deeply customized from Alibaba Cloud's emr-spark-ack submission tool.

The spark-k8s-cli merges the advantages of both spark-submit and spark-operator, ensuring all tasks are manageable by spark-operator. It supports interactive spark-shell sessions and local dependency submissions, operating identically to the native spark-submit syntax.

Initially, the spark-submit JVM processes of all tasks ran inside shared gateway pods. Over time we noticed stability issues: if a gateway pod ran into a problem, every Spark task running in it failed. Managing the log output of individual Spark tasks was also difficult. To resolve this, we changed spark-k8s-cli to use a separate submit pod for each task, so that tasks are independent of one another. Each submit pod requests the launch of its task's driver. Like driver pods, submit pods run on dedicated ECS nodes and are released automatically when the task completes. The diagram below illustrates how spark-k8s-cli submits and runs tasks.

Beyond basic task submission, we also made the following enhancements and customizations to spark-k8s-cli:

• Submitting tasks to multiple Kubernetes clusters in the same region, providing load balancing and failover between clusters.

• YARN-like automatic queuing when resources are insufficient. (Otherwise, once a Kubernetes resource quota reaches its upper limit, the task fails immediately.)

• Exception handling for Kubernetes network communication, with retries on pod creation and startup failures, providing fault tolerance against occasional cluster jitter and network exceptions.

• Throttling and control of large-scale backfill tasks by department or line of business.

• Alerts for events such as online task submission failures, container creation or startup failures, and run timeouts.
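The YARN-like queuing item can be illustrated with a small admission queue. This is a simplified Python sketch under our own assumptions, not spark-k8s-cli's code: each task declares its total CPU and memory up front, a single namespace quota is tracked locally, and tasks start in strict FIFO order only when they fit under the quota, instead of being submitted and rejected by Kubernetes. `Task` and `QuotaQueue` are hypothetical names.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List


@dataclass
class Task:
    name: str
    cpu: float        # total vCPUs requested (driver + executors)
    memory_gb: float  # total memory requested


@dataclass
class QuotaQueue:
    """Hold tasks until the quota has headroom, like a YARN FIFO queue."""
    cpu_limit: float
    mem_limit_gb: float
    cpu_used: float = 0.0
    mem_used_gb: float = 0.0
    pending: Deque[Task] = field(default_factory=deque)

    def _fits(self, task: Task) -> bool:
        return (self.cpu_used + task.cpu <= self.cpu_limit
                and self.mem_used_gb + task.memory_gb <= self.mem_limit_gb)

    def submit(self, task: Task) -> List[str]:
        """Enqueue a task and return the names of tasks started as a result."""
        self.pending.append(task)
        return self.drain()

    def finish(self, task: Task) -> List[str]:
        """Release a finished task's resources and start whatever now fits."""
        self.cpu_used -= task.cpu
        self.mem_used_gb -= task.memory_gb
        return self.drain()

    def drain(self) -> List[str]:
        # Strict FIFO: stop at the first queued task that does not fit.
        started = []
        while self.pending and self._fits(self.pending[0]):
            task = self.pending.popleft()
            self.cpu_used += task.cpu
            self.mem_used_gb += task.memory_gb
            started.append(task.name)
        return started
```

Strict FIFO keeps large tasks from starving behind a stream of small ones; a real implementation would read actual quota usage from the cluster rather than tracking it locally.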

Unlike YARN, Kubernetes does not provide automatic log aggregation and display, so users must collect driver and executor logs themselves. The common solution today is to deploy an agent on each Kubernetes node that collects logs and stores them in third-party storage such as Elasticsearch (ES) or Simple Log Service (SLS). However, this is inconvenient for users and developers who are used to clicking through to logs on the YARN page, because they have to go to a third-party system to retrieve and view them.

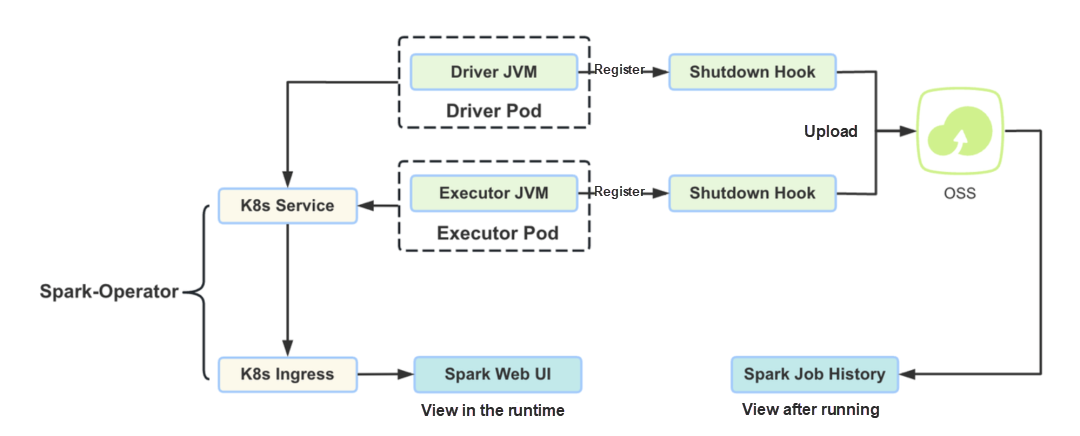

To make Spark task logs on Kubernetes easy to view, we modified the Spark code so that driver and executor logs are ultimately written to OSS. Users can then open log files directly from the Spark UI and the Spark job history.

The figure above outlines how logs are collected and displayed. When a Spark task starts, both the driver and the executors register a shutdown hook. When the task completes and the JVM shuts down, the hook uploads the full log to OSS. To display complete logs, we also modified the Spark job history code to show stdout and stderr on the history page. When a user clicks a log, the corresponding driver or executor log file is fetched from OSS and rendered in the browser. For running tasks, we provide a Spark Running Web UI: after a task is submitted, spark-operator automatically creates a service and ingress so users can view running details, and logs are retrieved by pulling the corresponding pod's running log through the Kubernetes API.
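The routing decision behind the log link can be summarized in a few lines. This Python sketch is illustrative only: it assumes a hypothetical OSS key layout (`oss://<bucket>/spark-logs/<app id>/<role>/<pod>/<stream>`) for logs uploaded by the shutdown hook, and represents a live log simply as the pod name to read through the Kubernetes API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogLocation:
    source: str  # "k8s-api" for live pods, "oss" for logs uploaded at shutdown
    target: str  # pod name, or OSS URI of the uploaded log file


def locate_log(app_id: str, pod_name: str, role: str, running: bool,
               bucket: str, stream: str = "stdout") -> LogLocation:
    """Decide where the UI should fetch a driver or executor log from."""
    if role not in ("driver", "executor"):
        raise ValueError(f"unknown role: {role}")
    if stream not in ("stdout", "stderr"):
        raise ValueError(f"unknown stream: {stream}")
    if running:
        # The pod still exists, so its current log is read via the Kubernetes API.
        return LogLocation("k8s-api", pod_name)
    # The pod is gone; the shutdown hook uploaded the full log to OSS.
    # Hypothetical key layout, chosen for illustration only.
    return LogLocation("oss", f"oss://{bucket}/spark-logs/{app_id}/{role}/{pod_name}/{stream}")
```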

Leveraging the auto-scaling capabilities of ACK clusters and the extensive use of ECI, the total cost of running Spark tasks on Kubernetes is significantly lower than on fixed YARN clusters, also greatly enhancing resource utilization.

ECI is a serverless container service. Its main difference from ECS is that ECI is billed per second and can be provisioned and released just as quickly. This makes ECI particularly well suited to workloads with clear peaks and troughs, such as Spark.

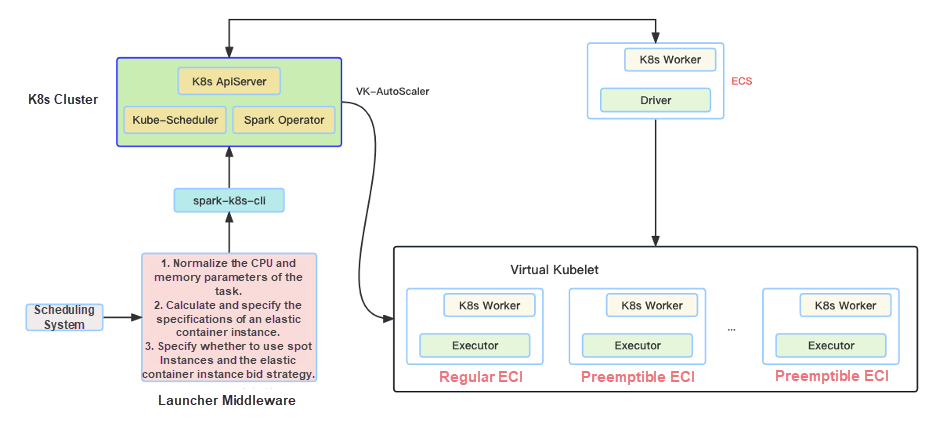

The figure above shows how Spark tasks request and use ECIs in an ACK cluster. Before doing so, users must install the ack-virtual-node component in the cluster and configure details such as the vSwitch. At runtime, executors are scheduled to a virtual node, which creates and manages the corresponding ECIs.
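One way to steer executors (but not the driver) onto the virtual node in Spark 3.2 is an executor pod template, since Spark 3.2's `spark.kubernetes.node.selector.*` applies to the driver as well and tolerations need a template anyway. The Python sketch below builds such a template as a dict to be dumped to YAML; the `type: virtual-kubelet` node selector and the `virtual-kubelet.io/provider` toleration are what the ACK virtual node documentation describes at the time of writing, so verify them against your cluster. `spark.kubernetes.executor.podTemplateFile` is a standard Spark option; the file path is a placeholder.

```python
from typing import Any, Dict


def executor_pod_template() -> Dict[str, Any]:
    """Pod template that schedules Spark executors onto the ACK virtual node (ECI)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "spec": {
            # Label and taint used by ACK virtual nodes; verify on your cluster.
            "nodeSelector": {"type": "virtual-kubelet"},
            "tolerations": [
                {"key": "virtual-kubelet.io/provider", "operator": "Exists"}
            ],
        },
    }


def executor_template_conf(path: str) -> Dict[str, str]:
    """Spark conf pointing executors at the template saved (as YAML) to `path`."""
    return {"spark.kubernetes.executor.podTemplateFile": path}
```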

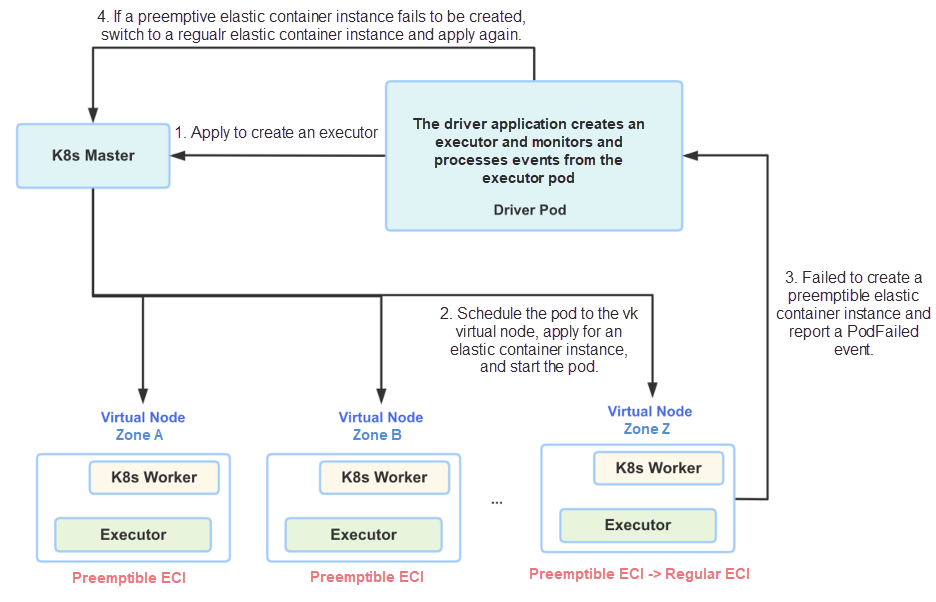

Elastic container instances come in two types: regular and preemptible. Preemptible instances are cost-effective spot instances with a default one-hour protection period, which suits most Spark batch processing scenarios. After this period, preemptible instances may be forcefully reclaimed. To further enhance cost savings and capitalize on the price benefits of preemptible instances, we've modified Spark to introduce automatic conversion between ECI instance types. Spark task executor pods are first run on preemptible ECIs, and if creation fails due to limited inventory or other reasons, they automatically switch to regular ECIs to ensure tasks proceed as expected. The diagram below details the implementation principle and conversion logic.
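The spot-first, regular-fallback conversion can be sketched as follows. This Python sketch is illustrative, not our Spark patch: `create_pod` stands in for whatever creates the executor pod, `InsufficientInventory` is a hypothetical error type, and `k8s.aliyun.com/eci-spot-strategy: SpotAsPriceGo` is the ECI pod annotation that, as we understand the ECI documentation, requests a spot instance at market price; check the current documentation before relying on it.

```python
from typing import Callable, Dict, Tuple

# ECI pod annotation requesting a spot instance at the market price
# (as documented for ECI at the time of writing; verify before use).
SPOT_ANNOTATIONS: Dict[str, str] = {"k8s.aliyun.com/eci-spot-strategy": "SpotAsPriceGo"}


class InsufficientInventory(Exception):
    """Hypothetical error: the ECI could not be created (e.g. no spot stock)."""


def create_executor(create_pod: Callable[[Dict[str, str]], str]) -> Tuple[str, str]:
    """Create an executor on a spot ECI, falling back to a regular ECI.

    `create_pod` receives the pod annotations and returns the pod name.
    Returns (instance_type, pod_name).
    """
    try:
        return "spot", create_pod(dict(SPOT_ANNOTATIONS))
    except InsufficientInventory:
        # No spot capacity: retry once without the spot annotation (regular ECI).
        return "regular", create_pod({})
```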

Kubernetes nodes have small disks and are requested on demand and released after use, so they cannot hold large amounts of Spark shuffle data. Attaching a disk to each executor pod is also problematic: the right disk size is hard to determine, and with factors such as data skew, disk utilization stays low while operation becomes complex. In addition, although Spark 3.2 introduced features such as PVC reuse, our evaluation found them incomplete and not yet stable enough.

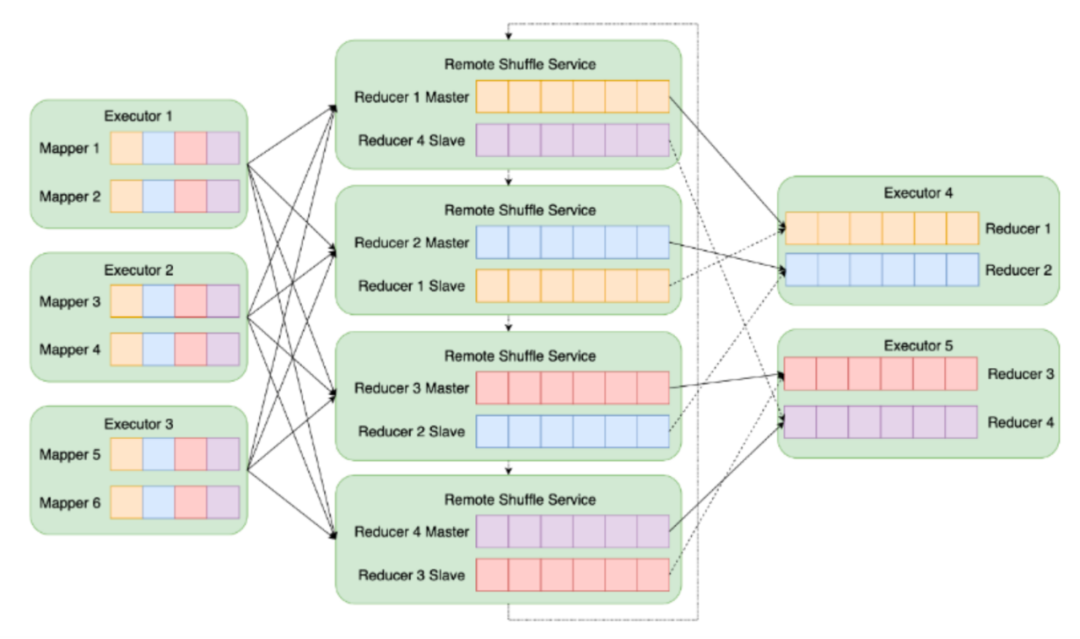

To address the shuffle problems of Spark on Kubernetes, we chose Celeborn, open-sourced by Alibaba Cloud, after thoroughly researching and comparing several open-source products. Celeborn is an independent service that stores Spark's intermediate shuffle data, so executors no longer depend on local disks, and it can be used with both Kubernetes and YARN. Celeborn uses push-based shuffle, with append-only writes and sequential reads, which improves shuffle read and write performance and efficiency.
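On the Spark side, switching shuffle to Celeborn is mostly configuration. The Python sketch below collects the client settings shown in the Apache Celeborn documentation for Celeborn 0.3 and later (older releases used a different shuffle manager class name); the master hostnames in the usage are placeholders, and 9097 is Celeborn's default master port.

```python
from typing import Dict, List


def celeborn_spark_conf(master_endpoints: List[str]) -> Dict[str, str]:
    """Spark settings that route shuffle data through a Celeborn cluster."""
    if not master_endpoints:
        raise ValueError("at least one Celeborn master endpoint is required")
    return {
        # Replace Spark's built-in sort-based shuffle with the Celeborn client.
        "spark.shuffle.manager": "org.apache.spark.shuffle.celeborn.SparkShuffleManager",
        "spark.celeborn.master.endpoints": ",".join(master_endpoints),
        "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
        # Executors no longer serve shuffle files, so the external shuffle service is off.
        "spark.shuffle.service.enabled": "false",
    }
```

Each entry becomes a `--conf key=value` argument at submission time.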

Building on the open-source Celeborn project, we have also enhanced network data transmission, enriched metrics, improved monitoring and alerting, and fixed bugs. We now maintain a stable internal version.

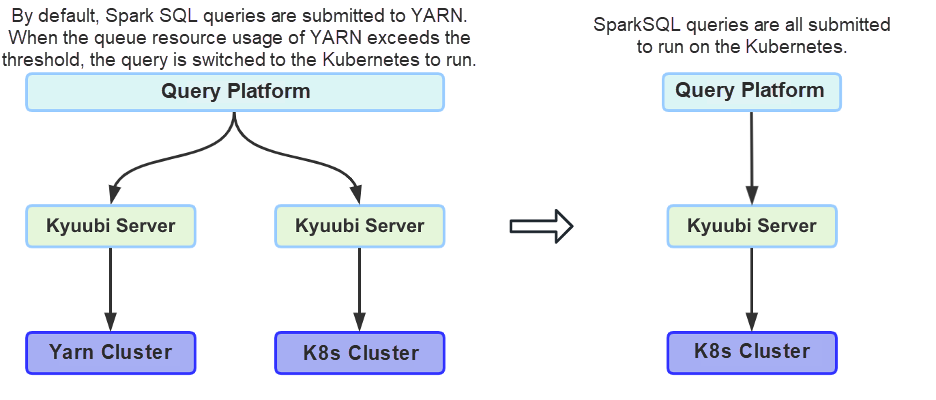

Kyuubi is a distributed, multi-tenant gateway that provides SQL and other query services for Spark, Flink, and Trino. In the early days, our Spark ad hoc queries were sent to Kyuubi for execution. Because insufficient YARN queue resources sometimes prevented users' SQL queries from being submitted or run, we added support for deploying and running the Kyuubi server on Kubernetes, so that Spark queries automatically switch to Kubernetes when YARN resources are insufficient. As the YARN cluster has been gradually scaled down, it can no longer guarantee query resources or a consistent user experience, so we now submit all Spark SQL ad hoc queries to Kubernetes for execution.

To make ad hoc queries run smoothly on Kubernetes, we also modified Kyuubi's source code: we rewrote the docker-image-tool.sh, Deployment.yaml, and Dockerfile files in the Kyuubi project, redirected logs to OSS, and added Spark Operator management support, permission control, and convenient access to the task's running UI.

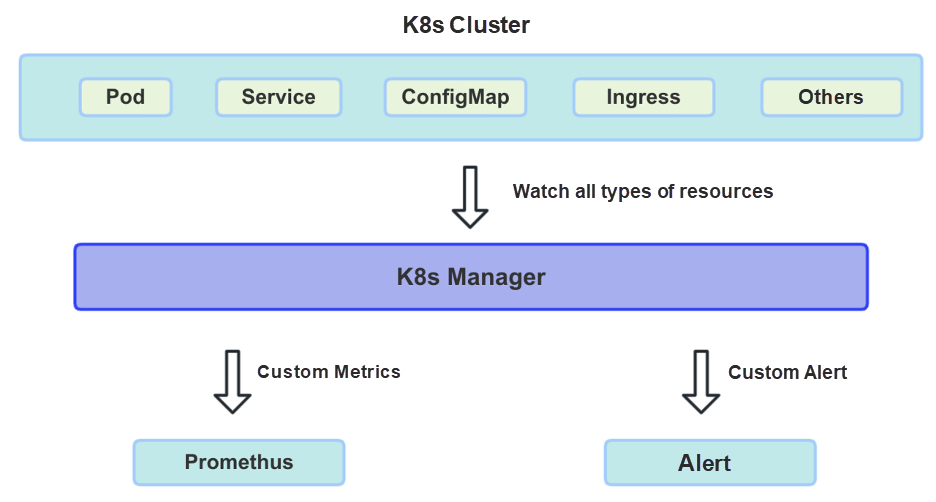

In the Spark on Kubernetes scenario, although Kubernetes is equipped with monitoring and alerting at the cluster level, it cannot fully meet our requirements. In the production environment, we pay more attention to Spark tasks, pod status, resource consumption, and ECI on the cluster. With the Kubernetes watch mechanism, we have implemented our own monitoring and alerting service, Kubernetes Manager, which is shown in the following figure.

Kubernetes Manager is a relatively lightweight Spring Boot service that we implemented internally. It monitors and summarizes resource information on each Kubernetes cluster, such as pods, quotas, services, ConfigMaps, ingresses, and roles, and from this generates and displays custom metrics and raises alerts on anomalies. The metrics include the cluster's total CPU and memory usage, the number of currently running Spark tasks, top rankings of Spark tasks by memory consumption and runtime, the total number of Spark tasks per day, the total number of pods in the cluster, pod status statistics, the distribution of ECI instance types across availability zones, and expired resources.

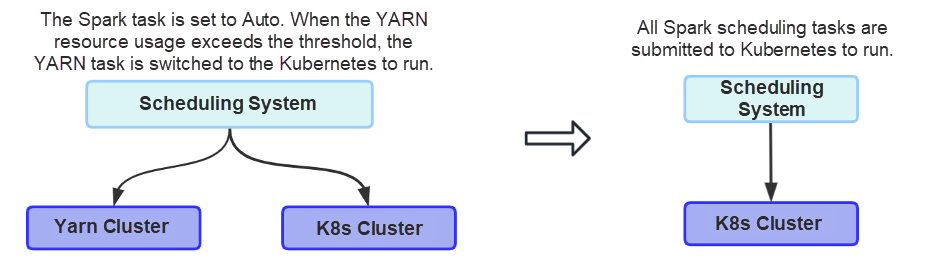
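The kind of aggregation Kubernetes Manager performs on watched pods can be sketched in a few lines. The service itself is Spring Boot; this Python version is only illustrative and assumes a hypothetical flattened pod record with `phase`, `role` (the value of Spark's `spark-role` pod label), `node_type`, and `zone` fields. It counts running Spark tasks as driver pods in the `Running` phase.

```python
from collections import Counter
from typing import Any, Dict, List, Mapping


def summarize_pods(pods: List[Mapping[str, str]]) -> Dict[str, Any]:
    """Aggregate a snapshot of pods into dashboard-style metrics.

    Each pod is a flattened record (hypothetical shape) with keys
    'phase', 'role', 'node_type' ('eci' or 'ecs') and 'zone'.
    """
    return {
        "total_pods": len(pods),
        "by_phase": dict(Counter(p["phase"] for p in pods)),
        # One running driver pod corresponds to one running Spark task.
        "running_spark_tasks": sum(
            1 for p in pods if p["role"] == "driver" and p["phase"] == "Running"
        ),
        "eci_by_zone": dict(Counter(p["zone"] for p in pods if p["node_type"] == "eci")),
    }
```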

In our scheduling system, Spark tasks support three execution policies: YARN, Kubernetes, and Auto. When a task specifies a resource manager, it runs only on that one. If the user selects Auto, where the task runs depends on the current resource utilization of its YARN queue, as shown in the following figure. Because of the large number of tasks and the ongoing migration of Hive tasks to Spark, some tasks still run on YARN clusters for now, but eventually all tasks will be hosted on Kubernetes.

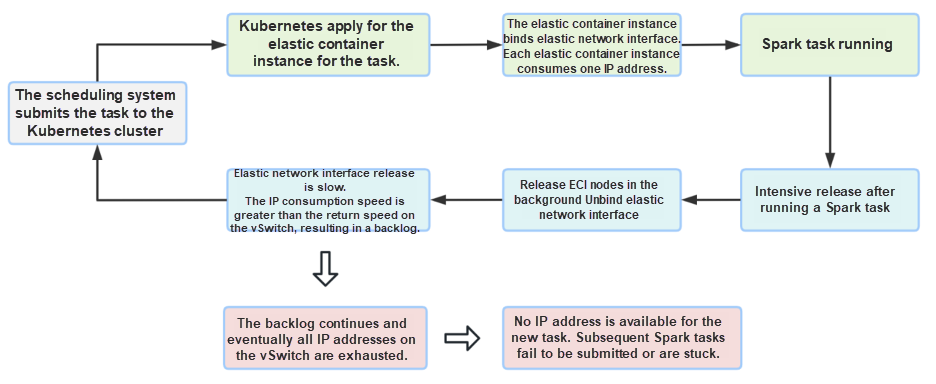
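The Auto policy boils down to a routing decision. In the Python sketch below, the function name and the 80% utilization threshold are hypothetical; obtaining the YARN queue utilization (for example, from the YARN ResourceManager REST API) is left to the caller.

```python
def choose_resource_manager(policy: str, yarn_queue_utilization: float,
                            threshold: float = 0.8) -> str:
    """Return "YARN" or "KUBERNETES" for a task's execution policy."""
    normalized = policy.strip().upper()
    if normalized in ("YARN", "KUBERNETES"):
        return normalized  # explicit choice: honor it regardless of load
    if normalized != "AUTO":
        raise ValueError(f"unknown policy: {policy}")
    if not 0.0 <= yarn_queue_utilization <= 1.0:
        raise ValueError("utilization must be a fraction between 0 and 1")
    # Auto: stay on YARN while the queue has headroom, otherwise use Kubernetes.
    return "YARN" if yarn_queue_utilization < threshold else "KUBERNETES"
```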

Spark tasks use a large number of elastic container instances (ECIs). Creating an ECI requires two things: an IP address that can be allocated, and available inventory in the current zone. The number of IP addresses a single vSwitch provides is limited, and so is the total number of preemptible instances in a single zone. Therefore, in production, whether you use regular or spot ECIs, we recommend configuring multiple zones and multiple vSwitches.
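Multiple vSwitches can be passed to executors as a pod annotation through Spark's standard `spark.kubernetes.executor.annotation.<name>` option. The Python sketch below assumes the ECI annotation `k8s.aliyun.com/eci-vswitch`, which takes a comma-separated list of vSwitch IDs according to the ECI documentation at the time of writing (vSwitches can also be configured globally for the virtual node). The IDs in the usage are placeholders; choosing vSwitches in different zones spreads both the IP and the inventory limits.

```python
from typing import Dict, List

ANNOTATION_PREFIX = "spark.kubernetes.executor.annotation."


def eci_vswitch_conf(vswitch_ids: List[str]) -> Dict[str, str]:
    """Spark conf that lets executor ECIs use any of several vSwitches."""
    if not vswitch_ids:
        raise ValueError("at least one vSwitch ID is required")
    if len(set(vswitch_ids)) != len(vswitch_ids):
        raise ValueError("duplicate vSwitch IDs")
    return {ANNOTATION_PREFIX + "k8s.aliyun.com/eci-vswitch": ",".join(vswitch_ids)}
```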

When a Spark task is submitted, the specification of each executor, such as its CPU and memory, is already known. We can therefore obtain each executor's actual runtime before the SparkContext is closed at the end of the task, and estimate the task's cost by multiplying by the unit price. Because spot ECI prices vary with the market and inventory, the cost calculated this way is an upper bound and mainly serves to show trends.
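The estimate is a sum over executors of runtime times unit price. The Python sketch below prices CPU and memory separately per hour; the per-core-hour and per-GB-hour rates are hypothetical inputs, and pricing at the regular (non-spot) rate is what makes the result an upper bound.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ExecutorUsage:
    cores: int
    memory_gb: float
    runtime_seconds: float  # measured before the SparkContext stops


def estimate_task_cost(executors: Iterable[ExecutorUsage],
                       price_per_core_hour: float,
                       price_per_gb_hour: float) -> float:
    """Approximate task cost: sum of runtime x (CPU price + memory price)."""
    total = 0.0
    for e in executors:
        hours = e.runtime_seconds / 3600.0  # ECI bills per second
        total += hours * (e.cores * price_per_core_hour + e.memory_gb * price_per_gb_hour)
    return total
```

For example, a 4-core, 16 GB executor running 30 minutes plus a 2-core, 8 GB executor running one hour, at 0.1 per core-hour and 0.01 per GB-hour, come to 0.28 + 0.28 = 0.56.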

When we first launched and the number of tasks was small, the Spark operator service ran well. As the number of tasks kept growing, however, the operator's event processing slowed down. Many resources created while tasks ran, such as ConfigMaps, ingresses, and services, were not cleaned up in time and accumulated, and the web UIs of newly submitted Spark tasks could not be opened. After identifying the problem, we tuned the number of operator worker goroutines and implemented batch processing of pod events, filtering of irrelevant events, and TTL-based deletion to address the Spark operator's insufficient performance.
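Two of those fixes, filtering irrelevant events and TTL-based deletion of leftover resources, are easy to show in isolation. The Python sketch below is illustrative only (spark-operator itself is written in Go): `LeftoverResource`, the TTL, and the flattened event shape are hypothetical, while `spark-role` is the label Spark sets on its driver and executor pods, and ADDED, MODIFIED, and DELETED are standard Kubernetes watch event types.

```python
from dataclasses import dataclass
from typing import Any, Iterable, List, Mapping, Optional

POD_EVENT_TYPES = {"ADDED", "MODIFIED", "DELETED"}


def is_relevant(event: Mapping[str, Any]) -> bool:
    """Keep only lifecycle events for Spark pods; drop everything else early."""
    labels = event.get("labels") or {}
    return event.get("type") in POD_EVENT_TYPES and "spark-role" in labels


@dataclass(frozen=True)
class LeftoverResource:
    kind: str                         # e.g. "ConfigMap", "Service", "Ingress"
    name: str
    app_finished_at: Optional[float]  # epoch seconds; None while the app runs


def expired_resources(resources: Iterable[LeftoverResource], now: float,
                      ttl_seconds: float) -> List[LeftoverResource]:
    """Resources whose Spark application finished at least `ttl_seconds` ago."""
    return [r for r in resources
            if r.app_finished_at is not None and now - r.app_finished_at >= ttl_seconds]
```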

Spark 3.2.2 uses the fabric8 Kubernetes Java client to access and operate on resources in Kubernetes clusters, with client version 5.4.1 by default. With this version, when a task ends and many executors are released at once, the driver sends a large number of pod deletion requests to the Kubernetes Apiserver. This puts pressure on the Apiserver and etcd and causes sudden spikes in Apiserver CPU usage.

Our internal Spark version now uses kubernetes-client 6.2.0, which supports batch deletion of pods. This eliminates the cluster jitter caused by floods of pod deletion requests when Spark tasks release many executors at once.
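The difference is one request per pod versus a single collection delete filtered by labels. Spark's fix lives in the Java fabric8 client; the Python sketch below only illustrates the idea with the official `kubernetes` Python client's `CoreV1Api` methods `delete_namespaced_pod` and `delete_collection_namespaced_pod`. It relies on the `spark-app-selector` and `spark-role` labels that Spark puts on executor pods, and takes the client object as a parameter so the sketch runs without a cluster.

```python
from typing import Any, Iterable


def delete_executors_one_by_one(core_v1: Any, namespace: str,
                                pod_names: Iterable[str]) -> None:
    """Old behaviour: one DELETE request per executor pod."""
    for name in pod_names:
        core_v1.delete_namespaced_pod(name, namespace)


def delete_executors_batch(core_v1: Any, namespace: str, app_id: str) -> None:
    """Batch behaviour: a single DeleteCollection request selected by labels."""
    selector = f"spark-app-selector={app_id},spark-role=executor"
    core_v1.delete_collection_namespaced_pod(namespace, label_selector=selector)
```

Against a real cluster, `core_v1` would be `kubernetes.client.CoreV1Api()` after `kubernetes.config.load_kube_config()`.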

During the design and implementation of Spark on Kubernetes, we faced a variety of issues, bottlenecks, and challenges. Below is a brief introduction to these problems and the solutions we implemented.

The slow release of elastic network interfaces was a performance bottleneck when using ECIs at scale. It could exhaust the IP addresses on a vSwitch, eventually causing Spark tasks to get stuck or fail to submit. The figure below illustrates the specific trigger and process. The Alibaba Cloud team has since addressed this issue through technical upgrades, significantly improving release speed and overall performance.

When a Spark task starts its driver, the driver creates an event watcher on executors to monitor their status in real time. For some long-running Spark tasks, however, this watcher can become invalid because of resource expiration or network exceptions. The watcher must then be re-created; otherwise, the task may not proceed correctly. We identified this as a bug in Spark, fixed it in our internal version, and submitted a PR to the Spark community.

As shown in the figure above, the driver uses both list and watch methods to monitor the executor's status. Watch serves as a passive listener, which may occasionally miss events due to network issues, though this is rare. List is an active method, where the driver can request information about all executors of its task from the Apiserver at intervals — every 3 minutes, for example.

Listing requests information about all pods of a task, so when there are many tasks, frequent listing puts significant pressure on the Kubernetes Apiserver and etcd. Initially, we disabled the scheduled list and relied solely on watch. However, when tasks run abnormally, for example when many executors hit out-of-memory (OOM) errors, the driver's watch information can be wrong. The driver then stops requesting executors even though the task is incomplete, and the task gets stuck. Our solutions are as follows:

• Enable the list mechanism alongside watch, with the list interval extended to 5 minutes.

• Modify the code related to ExecutorPodsPollingSnapshotSource so that the Apiserver serves the full pod list from its cache, reducing the load that listing places on the cluster.
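The list-plus-watch combination amounts to keeping a snapshot from watch events and periodically overwriting it with a full list. The Python sketch below models this with a hypothetical `ExecutorSnapshot` class and a 5-minute list interval; in stock Spark the polling interval is the `spark.kubernetes.executor.apiPollingInterval` setting. The test scenario shows a resync repairing an OOM failure that the watch never reported.

```python
from typing import Callable, Dict, Iterable, Tuple

ACTIVE_PHASES = ("Pending", "Running")


class ExecutorSnapshot:
    """Executor pod phases fed by watch events and corrected by periodic lists."""

    def __init__(self, list_interval_s: float = 300.0) -> None:
        self.phases: Dict[str, str] = {}
        self.list_interval_s = list_interval_s
        self._last_list = float("-inf")

    def on_watch_event(self, event_type: str, pod: str, phase: str) -> None:
        if event_type == "DELETED":
            self.phases.pop(pod, None)
        else:
            self.phases[pod] = phase

    def maybe_resync(self, now: float,
                     list_pods: Callable[[], Iterable[Tuple[str, str]]]) -> bool:
        """Replace the snapshot with a full list if the interval has elapsed."""
        if now - self._last_list < self.list_interval_s:
            return False
        self.phases = dict(list_pods())
        self._last_list = now
        return True

    def active_count(self) -> int:
        # The driver compares this with its target to decide whether to request more executors.
        return sum(1 for phase in self.phases.values() if phase in ACTIVE_PHASES)
```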

Apache Celeborn is an open-source product from Alibaba Cloud, previously known as RSS (Remote Shuffle Service). In its early stages, it showed some immaturity, particularly in its handling of network latency and packet loss, which led to extended runtimes or even failures of some Spark tasks with a large amount of shuffle data. We have addressed these issues with the following solutions:

• Optimize Celeborn in our internal version and improve the network packet transmission code.

• Tune Celeborn master and worker parameters to improve shuffle read and write performance.

• Upgrade the underlying ECI image version and fix a Linux kernel bug in ECI.

To prevent unbounded resource usage, we set quota limits on each Kubernetes cluster. Because a quota is itself a Kubernetes resource, every pod creation or release updates the quota's CPU and memory usage. When many tasks are submitted concurrently, quota lock conflicts can occur, which can block the creation of task drivers and cause tasks to fail to start.

To overcome these failures, we modified the Spark driver pod creation logic and added configurable retry parameters. If the driver pod creation fails due to quota lock conflicts, we perform a retry. Failure to create an executor pod due to quota lock conflicts does not require special handling. If the creation fails, the driver automatically attempts to create a new executor, which effectively acts as an automatic retry.
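A driver-creation retry of this kind can be sketched as a generic wrapper. In the Python sketch below, `QuotaConflict` is a hypothetical stand-in for the conflict error returned when the quota object was modified concurrently, `max_attempts` and `base_delay_s` are illustrative names for the configurable retry parameters, and exponential backoff is a choice made for the sketch rather than a description of our patch.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class QuotaConflict(Exception):
    """Hypothetical: pod creation rejected because the quota was updated concurrently."""


def create_with_retry(create: Callable[[], T], max_attempts: int = 5,
                      base_delay_s: float = 0.5,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `create`, retrying quota conflicts with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return create()
        except QuotaConflict:
            if attempt == max_attempts:
                raise  # give up: surface the last conflict to the caller
            sleep(base_delay_s * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```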

Submitting a large batch of tasks can start many submit pods simultaneously, all requesting IP addresses and elastic network interface bindings from the Terway component at once. As a result, some pods start and bind their elastic network interfaces successfully but are not yet fully ready, and their networking is not yet operational. Tasks in these pods then cannot send requests to CoreDNS, which leads to UnknownHost errors and task failure. The following measures help avoid and resolve this problem:

• Assign a Terway pod to each ECS node.

• Enable Terway's cache feature to allocate IP addresses and elastic network interfaces in advance. New pods take them directly from the cache pool and return them to the pool when they are released.

To ensure sufficient inventory, each Kubernetes cluster is set up with multiple availability zones. However, cross-zone network communication tends to be slightly less stable than within the same zone, which could lead to packet loss and unstable task runtimes. In scenarios where there is cross-zone packet loss, setting the ECI scheduling policy to VSwitchOrdered can help. This ensures that all executors of a task are generally within the same zone, avoiding unstable runtime issues caused by communication anomalies between executors in different zones.
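In practice this is one more executor annotation. The Python sketch below assumes the ECI annotation `k8s.aliyun.com/eci-schedule-strategy` with the value `VSwitchOrdered`, per the ECI multi-zone documentation at the time of writing, and adds a deliberately simplified model of ordered selection: take the first configured vSwitch that still has free IP addresses. It is not ECI's actual scheduler, but it shows why executors tend to stay in one zone until that zone's vSwitch runs out.

```python
from typing import Dict, List, Optional

# Assumed ECI annotation, passed through Spark's executor annotation option.
SCHEDULE_STRATEGY_CONF: Dict[str, str] = {
    "spark.kubernetes.executor.annotation.k8s.aliyun.com/eci-schedule-strategy": "VSwitchOrdered",
}


def pick_vswitch_ordered(vswitches: List[str], free_ips: Dict[str, int]) -> Optional[str]:
    """Simplified model: first vSwitch, in configured order, with a free IP."""
    for vswitch in vswitches:
        if free_ips.get(vswitch, 0) > 0:
            return vswitch
    return None  # no capacity anywhere; the pod cannot be created
```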

Finally, we would like to express our sincere gratitude to the members of Alibaba Cloud Container, ECI, EMR, and other related teams for their valuable suggestions and professional technical support during the implementation and actual migration of our entire technical solution.

Moving forward, we will continue to optimize and enhance the overall architecture, focusing on the following areas:
