By Juntao Ji

Since its inception, Apache RocketMQ has been widely adopted by enterprise developers and cloud providers because of its simple architecture, rich business features, and strong scalability. Over a decade of large-scale scenario refinement, RocketMQ has become the industry-standard choice for financial-grade reliable messaging and is widely applied in business scenarios across the internet, big data, mobile internet, and IoT domains. As its use cases expand and adoption grows in the industry, developers must increasingly focus on ensuring RocketMQ’s reliability and availability.

Because RocketMQ's underlying storage is log-based, well-established techniques already exist for preventing data loss and single points of failure in such systems, most notably replicating data across multiple machines. The problem then reduces to keeping data consistent across those machines in a way that withstands node failures and split-brain scenarios, which is exactly the problem a distributed consensus algorithm solves.

In open-source Apache RocketMQ, we have introduced DLedger [2] and SOFAJRaft [3] as concrete implementations of the Raft [4] algorithm to support high availability. This article explains how RocketMQ uses Raft, a simple and effective distributed consensus algorithm, to achieve high availability.

Consensus is a fundamental concept in achieving fault tolerance in distributed systems. It refers to the process by which multiple servers agree on a value; once a value has been decided, the decision is irreversible [1]. Typical consensus algorithms keep operating as long as a majority of the servers are available. For example, a cluster of five servers keeps functioning normally even if two servers fail. If more than half of the servers fail, the cluster can no longer make progress, but it never returns incorrect results.
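The majority rule reduces to simple arithmetic. The following minimal sketch (the function names are ours, chosen for illustration) computes the quorum size and the number of failures a majority-based cluster can survive:

```python
def quorum_size(n: int) -> int:
    """Smallest number of servers that forms a strict majority of n."""
    return n // 2 + 1


def max_tolerated_failures(n: int) -> int:
    """How many servers can fail while a majority is still available."""
    return n - quorum_size(n)


for n in (3, 4, 5, 7):
    print(f"{n} servers: quorum={quorum_size(n)}, tolerates {max_tolerated_failures(n)} failures")
```

Note that four servers tolerate only one failure, the same as three, which is why consensus clusters are usually deployed with an odd number of nodes.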

Paxos [12] is one of the best-known distributed consensus algorithms, but it is notoriously difficult to understand; as a result, few people master it well enough to build reliable implementations. Fortunately, Raft arrived in time: it is easy to understand and has been proven in many industrial systems, such as TiKV and etcd.

The original paper describing Raft is titled "In Search of an Understandable Consensus Algorithm" [4]. A slightly shorter version of the paper won the Best Paper Award at the 2014 USENIX Annual Technical Conference. Interestingly, the first sentence of its abstract states: "Raft is a consensus algorithm for managing a replicated log."

In English, "log" refers not only to a record of events but also to a piece of wood. A group of "replicated logs" tied together forms a raft, which matches the meaning of the word Raft. The Raft homepage even uses a raft made of three logs as its logo (Figure 1). More formally, Raft is an acronym of Re{liable | plicated | dundant} And Fault-Tolerant, reflecting the problems Raft was originally designed to address.

Figure 1: The logo in the header of the Raft homepage

Raft is a consensus algorithm designed for managing replicated logs. It decomposes the consensus problem into relatively independent sub-problems: leader election, log replication, and safety. This decomposition makes the algorithm easier to understand, prove, and implement. Like Paxos, Raft keeps the system available as long as a majority of nodes (floor(n/2) + 1 out of n) are operational.

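In the Raft algorithm, each node (server) in the cluster is in one of the following three states:

• Leader: handles client requests and replicates log entries to the other nodes.

• Follower: passively responds to requests from the leader and from candidates.

• Candidate: a transitional state used to elect a new leader.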

Raft divides time into terms to manage elections. Each term is identified by a monotonically increasing number and begins with a leader election. If a follower does not receive a heartbeat from the leader within the election timeout, it increments its term number and becomes a candidate to start a new leader election. A candidate first votes for itself and then sends vote-request RPCs to the other nodes. A receiving node grants its vote if it has not yet voted in the current term and the candidate's log is at least as up-to-date as its own.
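The vote-granting rule, together with the term check described next, can be sketched as a single handler. This is a simplified illustration following the Raft paper's RequestVote rules, not code from DLedger or SOFAJRaft; the class and field names are hypothetical, and persistence and RPC transport are omitted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VoteRequest:
    term: int
    candidate_id: str
    last_log_index: int
    last_log_term: int


@dataclass
class NodeState:
    current_term: int = 0
    voted_for: Optional[str] = None
    last_log_index: int = 0
    last_log_term: int = 0


def handle_request_vote(node: NodeState, req: VoteRequest) -> bool:
    """Return True if this node grants its vote to the candidate."""
    # Requests from an older term are stale and rejected outright.
    if req.term < node.current_term:
        return False
    # Seeing a newer term: adopt it and forget any vote cast in the old term.
    if req.term > node.current_term:
        node.current_term = req.term
        node.voted_for = None
    # "At least as up-to-date": a higher last term wins; on a tie, the longer log wins.
    log_ok = (req.last_log_term, req.last_log_index) >= (node.last_log_term, node.last_log_index)
    # At most one vote per term (re-granting to the same candidate is harmless).
    if node.voted_for in (None, req.candidate_id) and log_ok:
        node.voted_for = req.candidate_id
        return True
    return False
```

Comparing `(term, index)` tuples lexicographically is a compact way to express Raft's up-to-date check.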

If a candidate receives votes from a majority of the cluster's nodes, it becomes the leader. Term numbers are carried in all node-to-node communication so that outdated information cannot cause errors: for example, a node rejects any request that carries a smaller term number than its own.

In extreme cases, such as split-brain or network failures, the cluster may be divided into several subsets that cannot communicate with each other. However, because Raft requires a leader to win the votes of a majority of the cluster's nodes, at most one subset can elect a valid leader.
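The "at most one subset" guarantee rests on a counting argument: two disjoint groups cannot both hold a strict majority. The brute-force check below is only an illustration of that argument (the helper names are ours). It tries every two-way split of a small cluster; splitting into more subsets only makes each one smaller, and because each node votes at most once per term, two candidates also cannot both win in the same term.

```python
from itertools import combinations


def is_majority(group: set, cluster: set) -> bool:
    """True if group holds a strict majority of the cluster's nodes."""
    return len(group) > len(cluster) // 2


def disjoint_majorities_exist(cluster: set) -> bool:
    """Try every split of the cluster into two sides; can both sides be majorities?"""
    nodes = sorted(cluster)
    for size in range(len(nodes) + 1):
        for combo in combinations(nodes, size):
            side_a = set(combo)
            side_b = cluster - side_a
            if is_majority(side_a, cluster) and is_majority(side_b, cluster):
                return True
    return False


for n in range(1, 8):
    print(n, disjoint_majorities_exist(set(range(n))))  # always False
```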

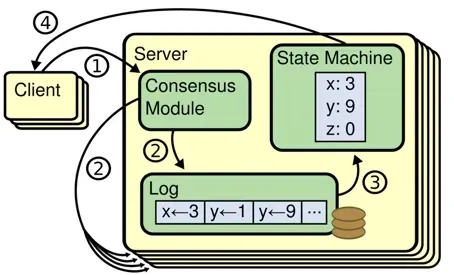

Raft maintains consistency among nodes through log replication. Consider an infinitely growing sequence a[1], a[2], a[3], ...: if every element a[i] is agreed upon through distributed consensus, the system behaves as a consistent replicated state machine. In real systems, operations arrive continuously, so reaching consensus on a single value is not enough. Instead, operations are typically recorded in a write-ahead log (WAL). As long as the WAL is identical on all replicas and each replica executes its entries in order, all replicas end up in the same state, as shown in Figure 2.
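A toy key-value state machine makes the point concrete: replicas that replay the same WAL in the same order reach the same state, while the same entries in a different order can produce a different state. The command set here is made up purely for illustration.

```python
from typing import Dict, List, Tuple

# A WAL entry: (command, key, value). "set" and "add" are illustrative commands only.
Entry = Tuple[str, str, int]


def apply_log(log: List[Entry]) -> Dict[str, int]:
    """Replay WAL entries in order against an empty key-value state machine."""
    state: Dict[str, int] = {}
    for command, key, value in log:
        if command == "set":
            state[key] = value
        elif command == "add":
            state[key] = state.get(key, 0) + value
        else:
            raise ValueError(f"unknown command: {command!r}")
    return state


wal = [("set", "x", 1), ("add", "x", 4), ("set", "y", 7)]
replica_a = apply_log(wal)
replica_b = apply_log(list(wal))                 # another replica with an identical log
reordered = apply_log([wal[1], wal[0], wal[2]])  # same entries, different order

print(replica_a == replica_b, replica_a)  # True {'x': 5, 'y': 7}
print(reordered)                          # {'x': 1, 'y': 7}
```

This is why Raft agrees on the order of log entries, not just on their contents.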

Figure 2: Ensuring data consistency between nodes through log replication. In phase one, the client sends a write request to the leader. In phase two, the leader converts the operation into a WAL entry and replicates it. In phase three, the leader receives responses from a majority of nodes and applies the operation to its state machine.

After receiving a client request, the leader appends it as a new entry to its log and replicates the entry to the other nodes in parallel. Only after a majority of nodes have written the entry does the leader commit it and apply it to its state machine; it then notifies the other nodes that the entry is committed so they can apply it as well. As a result, Raft loses no committed data even if the leader crashes or the network is partitioned: any committed log entry is guaranteed to be present in the log of every leader in later terms.
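The leader's commit decision can be sketched as follows. This follows the rule in the Raft paper and is not taken from any RocketMQ component; the function and parameter names are hypothetical. One subtlety worth showing: a leader only commits entries from its own term by counting replicas, and earlier entries become committed implicitly along with them.

```python
from typing import Dict, List


def advance_commit_index(
    commit_index: int,
    last_index: int,
    follower_match: Dict[str, int],
    log_terms: List[int],
    current_term: int,
) -> int:
    """Return the highest log index the leader may commit.

    follower_match maps follower id -> highest index known to be replicated there.
    log_terms[i - 1] is the term of log entry i (indices start at 1).
    """
    cluster_size = len(follower_match) + 1  # the followers plus the leader itself
    for n in range(last_index, commit_index, -1):
        replicas = 1 + sum(1 for match in follower_match.values() if match >= n)
        # Count replicas only for entries from the current term (Raft's safety rule).
        if replicas > cluster_size // 2 and log_terms[n - 1] == current_term:
            return n
    return commit_index


# Five-node cluster: the leader holds entries 1..5, all written in term 2.
print(advance_commit_index(0, 5, {"a": 5, "b": 4, "c": 2, "d": 1}, [2, 2, 2, 2, 2], 2))  # 4
```

Entry 4 is stored on the leader, "a", and "b" (three of five nodes), so it is committed; entry 5 is on only two nodes and must wait.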

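In short, a key feature of the Raft algorithm is its strong leader: clients send writes to the leader, and log entries flow only from the leader to the other nodes, never in the opposite direction.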

To better understand how Raft operates, we strongly recommend the animated demonstration on the following website, which visually illustrates how Raft works and how it handles issues such as split-brain scenarios: https://thesecretlivesofdata.com/raft/

Figure 3: Raft algorithm election process

After viewing the demonstration on the website above, you should have a good grasp of Raft's design principles and algorithms. Now let's get to the point and discuss the past and present of RocketMQ and Raft.

RocketMQ has been integrating the Raft algorithm for a long time, and the integration approach has evolved along the way. Today, Raft is only a small part of RocketMQ's high availability mechanism, while RocketMQ has developed its own high availability consensus protocol tailored to its needs.

This chapter mainly discusses the efforts RocketMQ has made to implement the Raft algorithm within its system and the current form and specific role of Raft in RocketMQ.

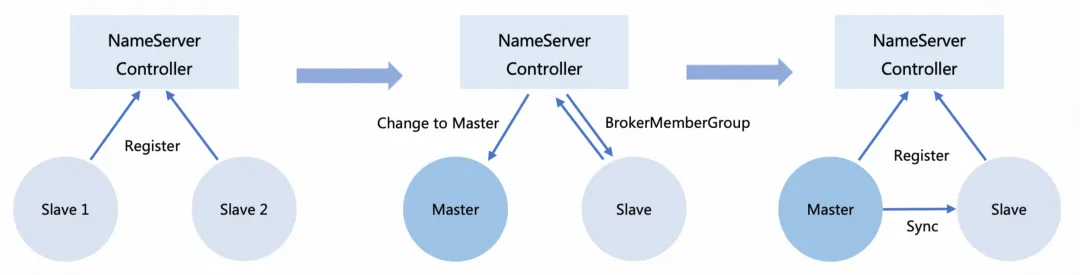

The primary reason RocketMQ introduced the Raft protocol was to improve the system's high availability and automatic fault recovery. Before version 4.5, RocketMQ supported only a Master/Slave deployment mode, in which each broker group had exactly one Master and zero or more Slaves that replicated data from the Master synchronously or asynchronously. However, this approach had some limitations:

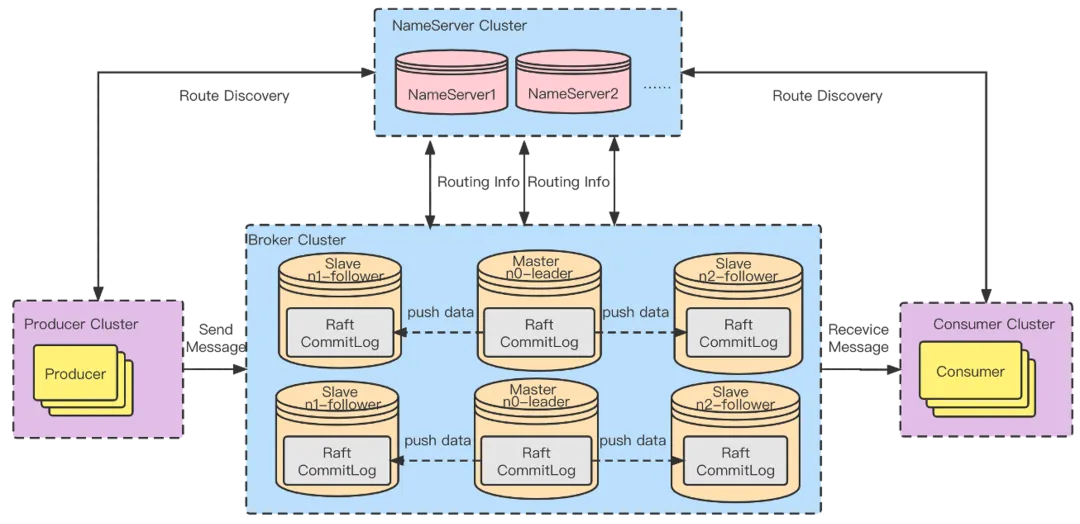

To address these issues, RocketMQ introduced DLedger, a Raft-based library [5]. DLedger is a distributed log replication technology that uses the Raft protocol to safely replicate and synchronize data across multiple replicas. Starting with RocketMQ 4.5, deployments could adopt the RocketMQ on DLedger model, in which the DLedger CommitLog replaces the original CommitLog and gives it the ability to elect leaders and replicate data. The Raft roles are then passed on to the broker roles through role transmission: the leader corresponds to the original Master, and the followers and candidates correspond to the original Slaves.

Figure 4: Deployment model of RocketMQ on DLedger, where the roles between brokers are transmitted by the Raft CommitLog.

As a result, RocketMQ brokers gained automatic failover. Within a broker group, when the Master fails, DLedger's automatic leader election selects a new leader, which becomes the new Master through role transmission. DLedger can also be used to build highly available embedded KV storage: data operations are recorded in DLedger and then replayed into a hashmap or RocksDB, depending on data volume and actual needs. The result is a consistent, highly available KV store suitable for scenarios such as metadata management.

We tested DLedger under various failure conditions, including random symmetric partitioning, randomly killing nodes, randomly pausing some nodes to simulate slow nodes, and complex asymmetric network partitions such as bridge and partition-majorities-ring. Under these failures, DLedger maintained consistency, confirming its high reliability [6].

In summary, introducing the Raft protocol strengthened RocketMQ's multi-replica architecture, improving the system's reliability and self-recovery capabilities while simplifying the overall architecture and reducing O&M complexity. This architecture resolves the single-master issue by quickly electing a new Master after a Master failure. However, because Raft's leader election and replication capabilities are embedded in the data link (the CommitLog), several issues arise: election and replication become tightly coupled, the system depends heavily on the DLedger storage library, and iterating on the design becomes very difficult.

Moreover, embedding election logic in the data link can trigger a series of problems that directly contradict the goals of high availability and stability. Consider a storage cluster of multiple nodes that must hold regular elections to decide which node manages the data flow. In this design, elections are not merely part of the control plane; they directly affect the stability of the data link. If an election fails or produces inconsistent results, the entire data path can be blocked, leading to a loss of write capability or even data loss.

By contrast, PolarStore's design philosophy separates the control plane from the data plane: data plane operations rely solely on locally cached full metadata, minimizing dependency on the control plane. The advantage of this design is that even if the control plane becomes completely unavailable, the data plane can still serve reads and writes from its local cache, so control plane elections never affect data plane availability. This separated architecture makes the storage system considerably more robust, allowing it to maintain business continuity in the face of failures.

In conclusion, decoupling election logic from the data path is crucial for ensuring the high availability and stability of storage systems. By isolating the complexity and potential failures of the control plane, it is possible to ensure that the data plane retains its core functions even in the face of control plane failures, thus providing continuous service to users. This robust design concept is essential in modern distributed storage systems, allowing the data plane to continue operating with minimal impact when the control plane encounters issues.

This example tells us that if the availability of the data plane is decoupled from the control plane, the failure of the control plane has minimal impact on the data plane. Otherwise, one must either continuously improve the availability of the control plane or accept cascading failures [7]. This is also the direction of evolution for RocketMQ discussed later.

As mentioned earlier, we introduced Raft into RocketMQ in the form of DLedger, which gave the CommitLog the ability to elect leaders. This design is simple and effective because it brings consistency directly to the most important component. However, it also couples the election and replication processes: every election and every replication depends heavily on DLedger's Raft implementation, which makes the system hard to extend in the future.

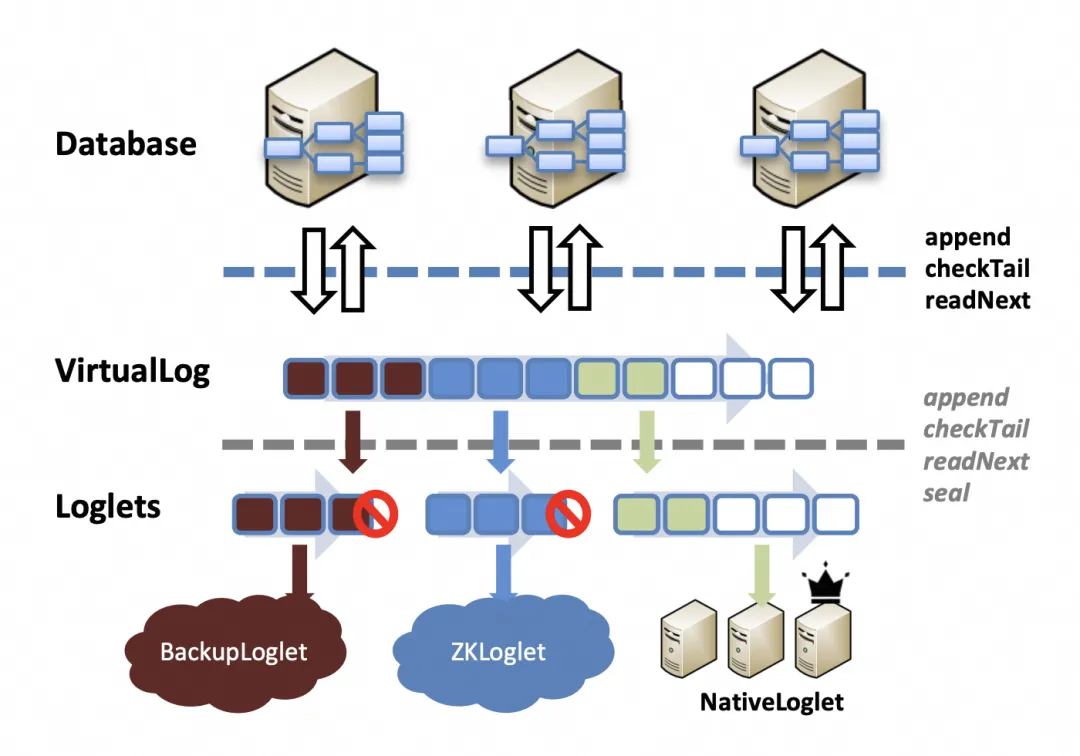

So, is there a more reliable and flexible solution? Let's look to the academic community for inspiration. At OSDI '20, the paper on Delos [8], Meta's unified control-plane metadata storage platform, received the Best Paper Award. The paper offers a new perspective on decoupling the control plane from the data plane: Virtual Consensus. It describes how consensus protocols were switched online in a production environment. The design virtualizes consensus by virtualizing the shared log API, allowing services to change consensus protocols without downtime.

The paper starts from the observation that production systems are often tightly integrated with their consensus mechanisms, so replacing the consensus protocol requires complex, deep system changes. Take the DLedger CommitLog mentioned earlier: the data flow responsible for fault tolerance and the control flow responsible for synchronizing the consensus group configuration are closely intertwined. Modifying such a tightly coupled system is extremely difficult, which makes developing and deploying new consensus protocols costly. Even minor feature updates are a significant challenge, let alone introducing more advanced consensus algorithms that may appear in the future.

In contrast, Delos proposes a new method to overcome these challenges by separating the control plane and data plane. In this architecture, the control plane handles leader elections, while the data plane that consists of VirtualLog and underlying Loglets manages the data. VirtualLog provides an abstraction layer for a shared log, connecting different Loglets which represent instances of different consensus algorithms. This abstraction layer establishes a mapping relationship between log entries in VirtualLog and individual Loglets. To switch to a new consensus protocol, simply instruct VirtualLog to hand over new log entries to the new Loglet for consensus. This design enables seamless switching of consensus protocols and significantly reduces the complexity and cost of replacing consensus protocols.
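The VirtualLog-to-Loglet mapping can be sketched in a few dozen lines. This is a toy model under stated assumptions, not Delos's actual API: each `Loglet` is just an in-memory list standing in for one consensus implementation, and the class and method names (`append`, `reconfigure`, `read`) are hypothetical. Real systems must also agree on the segment map itself, which Delos stores separately.

```python
from typing import Any, List, Tuple


class Loglet:
    """Stand-in for one consensus implementation; here simply an in-memory list."""

    def __init__(self, name: str):
        self.name = name
        self.entries: List[Any] = []
        self.sealed = False

    def append(self, entry: Any) -> None:
        if self.sealed:
            raise RuntimeError(f"loglet {self.name!r} is sealed")
        self.entries.append(entry)


class VirtualLog:
    """Maps global log positions onto a chain of loglets."""

    def __init__(self, first: Loglet):
        self.segments: List[Tuple[int, Loglet]] = [(0, first)]  # (start position, loglet)
        self.tail = 0  # next global position to assign

    def append(self, entry: Any) -> int:
        _, active = self.segments[-1]
        active.append(entry)
        position = self.tail
        self.tail += 1
        return position

    def reconfigure(self, new_loglet: Loglet) -> None:
        """Switch protocols: seal the active loglet and route future appends to new_loglet."""
        _, active = self.segments[-1]
        active.sealed = True
        self.segments.append((self.tail, new_loglet))

    def read(self, position: int) -> Any:
        if not 0 <= position < self.tail:
            raise IndexError(position)
        # The owning segment is the last one whose start is <= position.
        for start, loglet in reversed(self.segments):
            if position >= start:
                return loglet.entries[position - start]
        raise IndexError(position)


vlog = VirtualLog(Loglet("raft"))
vlog.append("a")
vlog.append("b")
vlog.reconfigure(Loglet("new-protocol"))  # switch protocols without stopping the log
vlog.append("c")
print([vlog.read(i) for i in range(3)])  # ['a', 'b', 'c']
```

Clients keep using one continuous log; only the segment map knows that positions 0 and 1 live in the old loglet and position 2 in the new one.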

Figure 5: Schematic diagram of Delos design architecture. The control plane and data plane are separated and collaborate in the form of VirtualLog.

Since this design had already been openly discussed in the academic community, RocketMQ could implement and validate it in industry. In RocketMQ 5.0, we introduced the Raft Controller: Raft and other leader election algorithms are used only in the upper-layer protocol, while lower-layer data replication is handled by a replication algorithm inside the Broker that acts on the results of the upper-layer leader election.

This design philosophy is common in the industry and has been widely validated in practice. Take Apache Kafka as an example. This high-performance messaging system uses a layered architecture. Early versions relied on ZooKeeper for the metadata control plane, which managed cluster state and metadata. Newer versions introduced the self-developed KRaft mode, bringing metadata management into Kafka itself. Meanwhile, Kafka's ISR (In-Sync Replicas) [15] protocol handles data transmission, providing high throughput and configurable replication and keeping the data plane flexible and reliable.

Similarly, Apache BookKeeper, a low-latency and high-throughput storage service, also adopts a similar architectural philosophy. It uses ZooKeeper to manage the control plane, including maintaining consistent service state and metadata information. On the data plane, BookKeeper utilizes its self-developed Quorum protocol to handle write operations and ensure low-latency performance for read operations.

With a similar design, our Brokers no longer handle their own elections. Instead, the upper-layer Controller instructs the lower layers, which then perform data replication based on those instructions, achieving the separation of election and replication.

Figure 6: From the ATA article "Interpreting the New High Availability Design of RocketMQ 5.0."

However, unlike Delos, RocketMQ has three layers of consensus: consensus among Controllers (Raft), consensus when Controllers select leaders for Brokers (Sync-State Set), and consensus among Brokers during replication (the Master-Slave confirmation replication algorithm). The extra layer of consensus among Controllers ensures strong consistency among the control nodes. The specific implementations of these three consensus algorithms are not detailed here; if you are interested, please refer to the RIP-31/32/34/44 documents in the RocketMQ community.

This design is also reflected in our paper accepted at ASE '23 [9]: the Controller component plays a core role in the failover path but does not affect the normal operation of the data link. Even if the Controller crashes, suffers hardware failures, or is cut off by network partitions, the Brokers will not lose data or become inconsistent. In subsequent tests, we simulated even more stringent scenarios, such as simultaneous crashes and hardware failures of Controllers and Brokers combined with random network partitions, and the design performed very well.

Next, we will delve deeper into the consensus protocols in RocketMQ, exploring how consensus is achieved within RocketMQ.

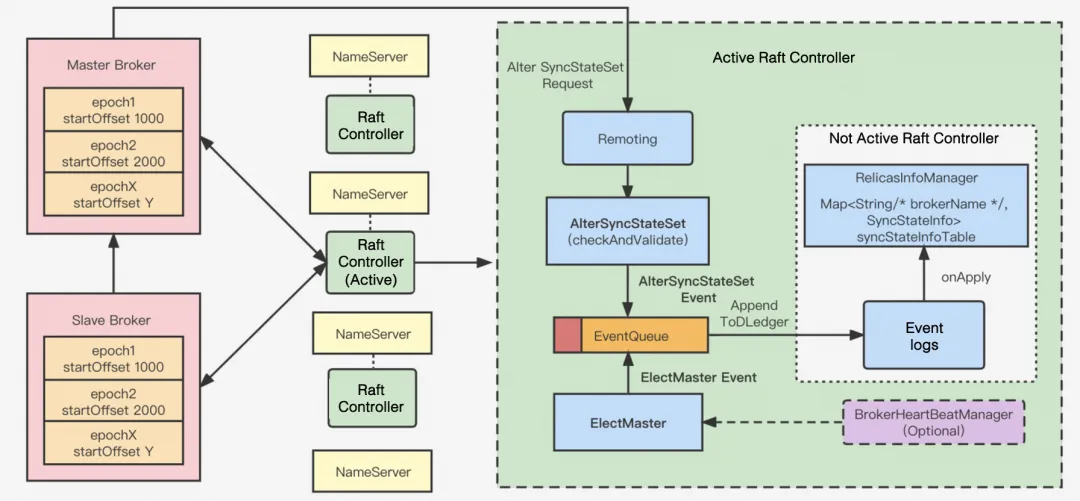

First, let's look at the figure below, which illustrates how the Raft Controller specifically achieves consensus in the control plane and data plane.

Figure 7: Detailed design architecture and operational principles of the Raft Controller

In the preceding figure, the green part represents the Controller, the control plane that RocketMQ has separated out. It contains two consensus algorithms: the Raft algorithm, which ensures consensus among Controllers, and the Sync-State Set algorithm (3S for short in the following text), which elects leaders for the Brokers. 3S is a distributed consensus protocol for data plane leader election that we developed based on the PacificA algorithm [10]. The red part is the Broker, the core of the data plane. Its other storage structures are omitted here; the figure focuses only on the epoch file, the core file for achieving consensus during replication, on which we built the data replication consensus protocol.

Below, we will elaborate separately on the consensus in the control plane and data plane.

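There are two layers of consensus in the control plane: consensus among the Controllers themselves, achieved with Raft, and consensus on Broker roles, achieved with the 3S algorithm.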

The two consensus algorithms are explained below.

Before RocketMQ 5.0, Raft existed only inside the CommitLog, and roles were passed outward through the DLedger CommitLog. As analyzed earlier, this approach had significant drawbacks: election and replication were tightly coupled, the system relied heavily on the DLedger storage library, and iteration was very difficult. We therefore moved Raft's capabilities up a layer to provide strong consistency for metadata in the control plane. This separates the election process from the log replication process and makes each election relatively cheap, since only a small amount of data needs to be synchronized.

In the Controller, we use the Raft algorithm to elect the Active Controller, which is responsible for handling elections and synchronization tasks in the data plane. Other Controllers only sync the processing results of the Active Controller. This design ensures that the Controller itself is highly available and that only one Controller is handling Broker election tasks.

In the latest RocketMQ, two implementations of distributed consensus algorithms within the Controller are provided at this level: DLedger and JRaft. These two consensus algorithms can be easily selected in the Controller’s configuration file. Essentially, both are implementations of the Raft algorithm, though their specific implementations differ:

Both Raft implementations expose very simple APIs, which lets us focus on the tasks of the Active Controller.

Setting aside the Controller's own consensus algorithm, let's focus on the role of the Active Controller in the overall process, which is the core of control plane consensus: the 3S algorithm.

The concept of Sync-State Set in the 3S algorithm is similar to Kafka’s In-Sync Replica (ISR) [17] mechanism, both of which reference Microsoft’s PacificA algorithm. Unlike ISR, which operates at the partition level, the 3S algorithm initiates elections at the entire Broker level and systematically summarizes the election scenarios needed for RocketMQ. Relatively speaking, the 3S algorithm is simpler, with more efficient elections and stronger performance in scenarios with a large number of partitions.

The 3S algorithm mainly operates in the interaction between the Controller and the Brokers. The Active Controller handles heartbeats and elections for every Broker. Like Raft, the 3S algorithm uses heartbeats to achieve a lease-like effect: when the Master Broker has not reported a heartbeat for a certain period, a re-election may be triggered. Unlike Raft, however, the 3S algorithm has a central coordinator for consensus: the Controller. This centralized design simplifies leader election in the data plane and speeds up reaching consensus.

Under this design, Brokers no longer send heartbeats to their peers (data plane) but send them upward to the Controller (control plane). When an election is requested, the Controller decides which Broker serves as the Master, and the others revert to Slaves. The Controller's selection criteria can be elaborate, such as synchronization progress and node resources, or simple and effective, such as having reached a certain data synchronization threshold, as long as the chosen node is in the Sync-State Set. This is also a form of strong leader, but it differs from Raft in that:

In Raft, it is like group work where classmates (Brokers) vote to elect a group leader, whereas in the 3S algorithm, the class teacher (Controller) directly appoints the leader based on who raises their hand fastest.
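The "teacher appoints the leader" idea can be sketched from the Controller's point of view. This is a hypothetical, heavily simplified model rather than RocketMQ's controller code: `BrokerGroup`, `elect_master`, and `lease_timeout` are illustrative names, and the tie-break (smallest broker ID) stands in for whatever criteria a real deployment uses.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class BrokerGroup:
    master: Optional[str]
    sync_state_set: Set[str]
    last_heartbeat: Dict[str, float] = field(default_factory=dict)  # broker id -> timestamp (s)


def elect_master(group: BrokerGroup, now: float, lease_timeout: float) -> Optional[str]:
    """Controller-side election: pick an alive member of the Sync-State Set as master."""
    alive = {b for b, ts in group.last_heartbeat.items() if now - ts <= lease_timeout}
    if group.master in alive:
        return group.master  # the current master's heartbeat "lease" is still valid
    # Only Sync-State Set members are eligible: they are known to hold the synchronized data.
    candidates = sorted(group.sync_state_set & alive)
    group.master = candidates[0] if candidates else None  # None: no safe choice, stay masterless
    return group.master


# b0 (master) stopped heartbeating; b2 is alive but still catching up, so it is not eligible.
group = BrokerGroup("b0", {"b0", "b1"}, {"b0": 0.0, "b1": 9.0, "b2": 9.5})
print(elect_master(group, now=10.0, lease_timeout=3.0))  # b1
```

No vote among Brokers is needed: the Controller alone decides, as long as an eligible replica is alive.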

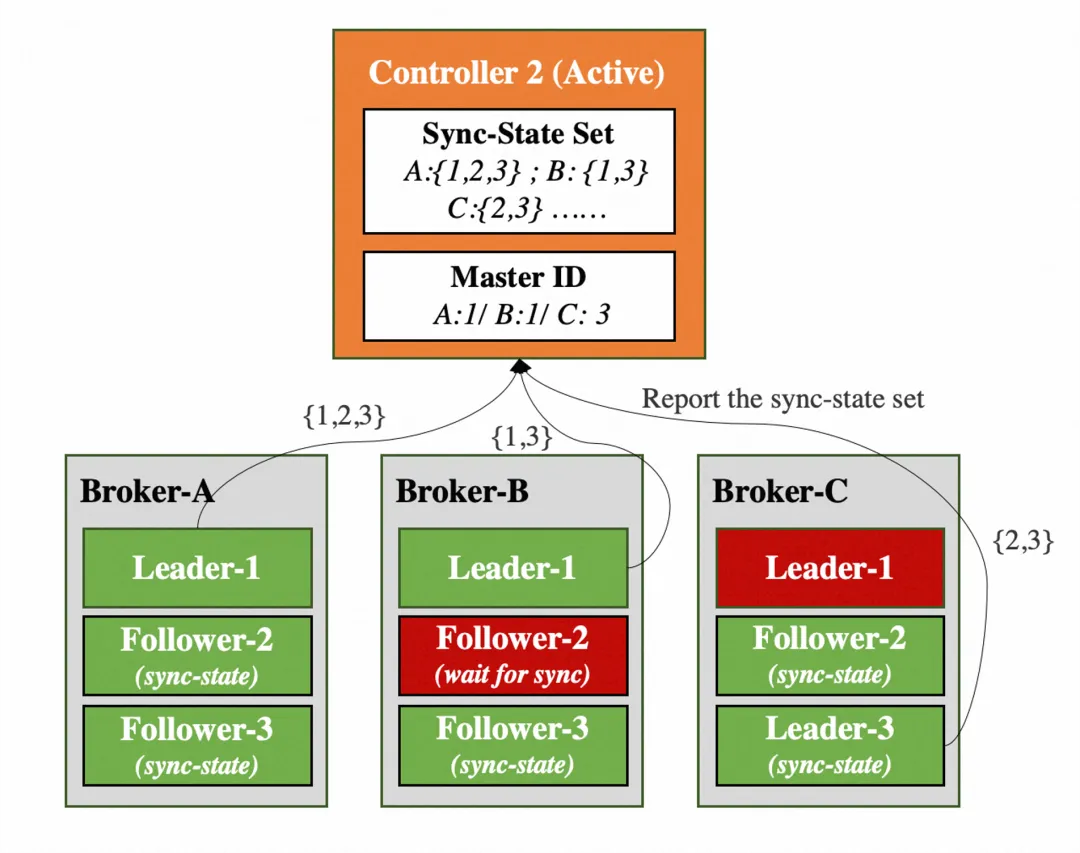

Figure 8: Multiple Broker clusters report their master-slave roles and synchronization status to the Active Controller.

As shown in the figure above, the Leaders of the three Broker clusters regularly report their synchronization status to the Active Controller:

B. Follower-2 in cluster B may have just started and still be synchronizing historical messages, so it is not in the Sync-State Set, and the Controller will not choose it if the Leader fails.

What are the benefits of this design? Raft is built on voting: peers are equal, and the majority rules. Therefore, a Raft cluster with 2n+1 nodes can tolerate at most n node failures and needs at least n+1 nodes to remain operational. The 3S algorithm, by contrast, has an election center: every election RPC goes upward to the Controller, which can elect a node without approval from other nodes. Therefore, a cluster with 2n+1 nodes can tolerate up to 2n node failures, provided that the Controller is available and the surviving node is in the Sync-State Set. In other words, the number of surviving replicas does not need to exceed half of the total, and the majority rule does not apply. In general, two replicas are sufficient, striking a balance between reliability and throughput.
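The difference in fault tolerance can be tabulated with a simplified model. It assumes, as in the paragraph above, that the Controller itself is reachable and that the surviving replica is in the Sync-State Set; the function names are ours.

```python
def raft_max_failures(total: int) -> int:
    """Raft needs a strict majority of nodes alive to elect a leader."""
    return total - (total // 2 + 1)


def three_s_max_failures(total: int) -> int:
    """Simplified 3S model: the Controller can elect any single surviving Sync-State Set member."""
    return total - 1


for n in (1, 2, 3):
    total = 2 * n + 1
    print(f"{total} replicas: Raft tolerates {raft_max_failures(total)}, "
          f"3S tolerates {three_s_max_failures(total)}")
```

For 2n+1 replicas this prints n for Raft and 2n for 3S, matching the reasoning above.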

The above example succinctly introduces the relationship and differences between the 3S algorithm and Raft. It can be said that the design concept of the 3S algorithm originates from Raft but surpasses it in specific scenarios.

Additionally, the consensus of the 3S algorithm operates at the entire Broker level. Therefore, we optimized the timing of leader elections. The following scenarios may trigger an election (the descriptions in parentheses offer more vivid analogies for easier understanding, likening the election process to selecting a group leader):

Control Plane, initiated by the Controller (initiated by the class teacher):

Data Plane, initiated by a Broker to elect itself as master (a student volunteers to be the group leader):

Through these optimizations, the 3S algorithm demonstrates powerful functionality in RocketMQ 5.0, making the Controller an indispensable component in the high-availability design paradigm. In actual use cases, the 3S algorithm includes additional detail optimizations that allow it to tolerate more severe scenarios, such as simultaneous failures in the control plane and data plane, with more than half of the nodes failing. These results will be demonstrated in the chaos experiments discussed later.

The control plane ensures role consensus among Controllers through the Raft algorithm and role consensus within Brokers through the 3S algorithm. After the Broker roles are determined, how should the data plane ensure strong data consistency based on the election results? The algorithms involved are not complex, so the design of RocketMQ will be introduced from an implementation perspective. The data plane consensus in RocketMQ primarily consists of the following two components:

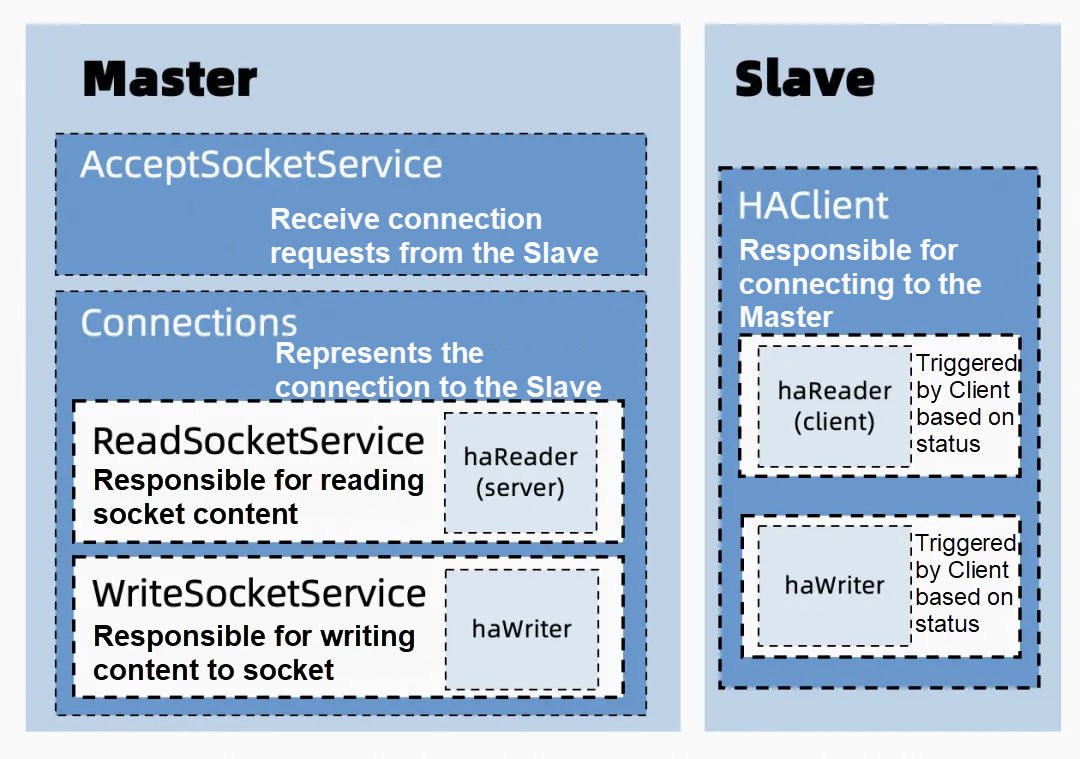

• HAClient: An essential client within each Slave’s HAService, responsible for managing read and write operations in synchronization tasks.

• HAConnection: Represents the HA connection in the Master, with each connection theoretically corresponding to one slave. This connection class stores various details of the transmission process, including the channel, transmission status, current transmission offset, and other information.

To better illustrate RocketMQ's design in this area, the following figure is provided. The core is still the data transmission process, with a dedicated Reader and Writer on each side:

Figure 9: Specific implementation of the data plane replication process, designed separately for Master and Slave, but capable of switching after elections.

How does such a simple design ensure strong consistency when writing data?

The core of the consensus lies in establishing and maintaining the HAConnection (the dark blue box in the lower-left corner of the figure). The Master node of each Broker cluster maintains connections with all Slaves and stores them in a connection table. After each replication request, a Slave reports its latest replication offset and result, which lets the Master determine the Sync-State Set to report to the Controller.
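How the Master might turn the reported offsets into a Sync-State Set can be sketched as below. This is an assumption-laden illustration, not RocketMQ's HAService code: it uses a hypothetical byte-lag threshold (`max_lag_bytes`), whereas the real implementation may use different criteria, such as how long a Slave has failed to catch up.

```python
from typing import Dict, Set


def compute_sync_state_set(master_id: str, master_max_offset: int,
                           slave_acked: Dict[str, int], max_lag_bytes: int) -> Set[str]:
    """Master-side view: which replicas are close enough to count as in sync."""
    in_sync = {master_id}  # the master is always in its own Sync-State Set
    for slave_id, acked_offset in slave_acked.items():
        # A slave is in sync if it trails the master's CommitLog by at most max_lag_bytes.
        if master_max_offset - acked_offset <= max_lag_bytes:
            in_sync.add(slave_id)
    return in_sync


print(sorted(compute_sync_state_set("m", 1000, {"s1": 1000, "s2": 950, "s3": 100}, 64)))
# ['m', 's1', 's2']
```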

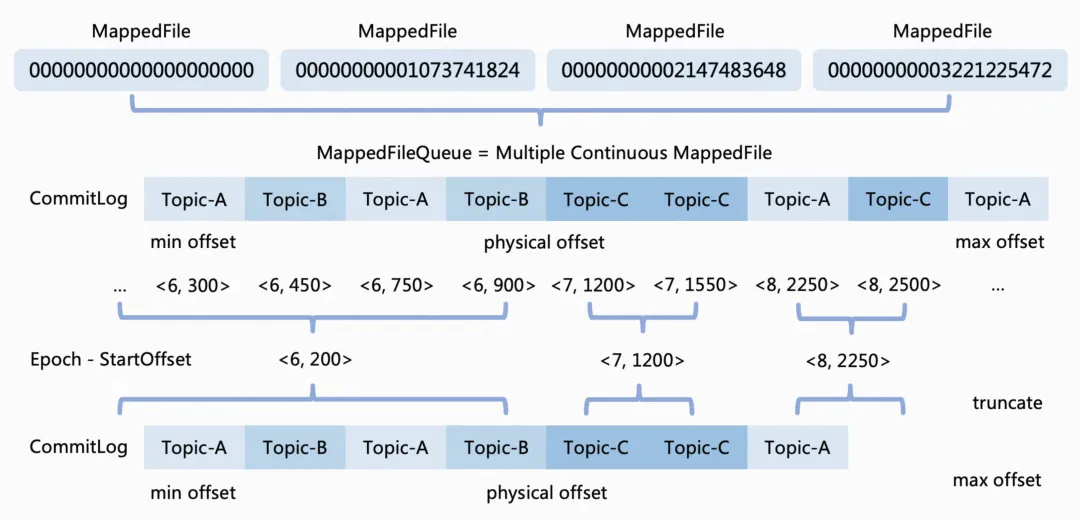

Establishing an HAConnection includes a handshake phase that ensures data consistency. In this phase, the CommitLog is truncated so that all data before the replication offset is strongly consistent. This is achieved through records in the epoch file, which captures the state of each election: after each election, the Master appends a record containing the current election term (epoch) and the start offset of that term.

This shows that the data plane consensus algorithm resembles Raft in some ways. Raft increments the term number after each election, and the term determines authority in subsequent elections. In the data plane consensus algorithm, once an election result is confirmed, it is identified by its term number, so the history of elections becomes a sequence of terms. If two Brokers have a record with the same term number and the same log offset, they agree on that election; all data before that point is therefore considered strongly consistent, and only subsequent data needs to be reconciled. The following figure illustrates this:

Figure 10: From the article "Interpreting the New High Availability Design of RocketMQ 5.0"

As in the figure above, the top rectangular bar represents MappedFile, the log storage format used by RocketMQ. The two bars below represent the CommitLogs of the Master and the Slave, respectively. The Slave searches backward for the largest epoch that matches the Master's (the same epoch with the same start offset), truncates its log at the end of the range that both sides share for that epoch, and then replicates forward from there. This replication is handled by a dedicated service in RocketMQ, which decouples replication from Master/Slave election. Only when a Slave has caught up with the Master is it included in the Sync-State Set and becomes eligible for future elections.
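The truncation-point search can be sketched as follows. This models the idea described above rather than RocketMQ's actual HA code: the epoch file is assumed to be a list of `(epoch, start_offset)` records, each epoch is assumed to end where the next one starts (the last one ending at the log's max offset), and all names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# One epoch-file record: (epoch, start_offset). Hypothetical layout for illustration.
EpochEntry = Tuple[int, int]


def epoch_end_offsets(entries: List[EpochEntry], max_offset: int) -> Dict[int, int]:
    """Each epoch ends where the next one starts; the last epoch ends at the log's max offset."""
    ends = {}
    for i, (epoch, _) in enumerate(entries):
        ends[epoch] = entries[i + 1][1] if i + 1 < len(entries) else max_offset
    return ends


def find_truncate_offset(
    master: List[EpochEntry], master_max: int,
    slave: List[EpochEntry], slave_max: int,
) -> Optional[int]:
    """Offset up to which the slave's CommitLog agrees with the master's, or None if no epoch matches."""
    master_start = dict(master)
    master_end = epoch_end_offsets(master, master_max)
    slave_end = epoch_end_offsets(slave, slave_max)
    # Walk the slave's epochs from newest to oldest, looking for the largest one
    # that the master also has with the same start offset.
    for epoch, start in reversed(slave):
        if master_start.get(epoch) == start:
            return min(master_end[epoch], slave_end[epoch])
    return None


# The slave wrote up to 280 in epoch 2, but on the master epoch 2 ended at 250.
master_epochs = [(1, 0), (2, 100), (3, 250)]
slave_epochs = [(1, 0), (2, 100)]
print(find_truncate_offset(master_epochs, 300, slave_epochs, 280))  # 250
```

The Slave would discard everything after offset 250 and replicate from there. Returning `None` here simply signals that no common epoch exists; how a real system handles that case is not covered by this sketch.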

As the saying goes, "Empty talk misleads the country; practical work strengthens it." Whether a design is advanced or redundant needs to be verified through various tests.

For systems like RocketMQ that are widely used in production, we must simulate faults that closely resemble real-world scenarios to test availability. This is where chaos engineering comes in.

The original definition of chaos engineering [13] is: Chaos engineering is the discipline of experimenting on a system in order to build confidence in the system's capability to withstand turbulent conditions in production.

From this definition, chaos engineering is a software engineering method aimed at verifying the reliability of a system by intentionally introducing chaos into the production environment. The fundamental assumption is that the production environment is complex and unpredictable, and by simulating various failures, potential issues can be discovered and resolved. This approach helps ensure the system can continue to operate effectively when faced with real-world challenges.

Anyone who has written code knows that a well-designed system can pass every test case and still run into problems after deployment. To demonstrate high availability, therefore, a system must hold up under the complex, unpredictable, and frequent failures introduced by chaos engineering, not just pass its test cases.

Below, we will detail the "torture tests" we conducted to verify the high availability of RocketMQ.

OpenChaos [14], tailored for cloud-native scenarios, is a project under OpenMessaging, which is hosted by the Linux Foundation. It currently supports chaos testing for the following platforms: Apache RocketMQ, Apache Kafka, DLedger, Redis, ZooKeeper, etcd, and Nacos.

Currently, OpenChaos supports injecting several types of faults, among which the main ones are:

In real-world scenarios, the four most common faults are network partitions, packet loss, server crashes, and system hangs. OpenChaos also supports more complex, specialized scenarios, such as Ring and Bridge. In Ring, each node can see a majority of the other nodes, but no node sees the same majority as any other node. In Bridge, the network is split into two parts, except for one central node that keeps bidirectional connectivity with both sides. The conditions for forming these scenarios are very stringent, and they prevent consensus by obstructing visibility among nodes so that no global majority can form. In theory, prolonged network partitions and packet loss can also produce these situations and even more complex ones.
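The Ring topology can be constructed directly. In the toy model below (our own construction, not OpenChaos code), node i can reach every node within circular distance r, with r chosen just large enough that each node's view is a majority. The check confirms that every view is a majority yet no two views coincide. With fewer than five nodes, every node would see the whole cluster, so the scenario needs at least five.

```python
from typing import FrozenSet, List


def ring_views(n: int) -> List[FrozenSet[int]]:
    """Node i can reach every node within circular distance r of itself (including i)."""
    majority = n // 2 + 1
    r = majority // 2  # smallest radius with 2r + 1 >= majority
    return [frozenset((i + d) % n for d in range(-r, r + 1)) for i in range(n)]


for n in (5, 7):
    views = ring_views(n)
    each_sees_majority = all(len(v) >= n // 2 + 1 for v in views)
    all_views_differ = len(set(views)) == n
    print(n, each_sees_majority, all_views_differ, sorted(views[0]))
```

Because visibility is symmetric (circular distance), this corresponds to a real link-level partition that iptables rules could produce.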

We therefore concentrated on injecting large numbers of these four common fault types, checked whether the cluster lost any messages, and recorded the fault recovery time.
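A minimal sketch of these two checks, written in Python under our own assumptions (it is not OpenChaos's actual checker): the test client is assumed to log the IDs of messages whose sends were acknowledged, the IDs of messages that consumers actually received, and, for each fault, when it was injected and when the next send succeeded. A message counts as lost only if the broker acknowledged it and no consumer ever received it; recovery time is taken here as the gap between injecting a fault and the first successful send after it.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    lost: set[str]                 # sends acknowledged by the broker but never consumed
    recovery_seconds: list[float]  # one entry per injected fault


def check_run(acked_sends, consumed, fault_windows):
    """Hypothetical post-run checker.

    acked_sends:   message IDs whose send was acknowledged as successful
    consumed:      message IDs seen by consumers (duplicates are allowed)
    fault_windows: (fault_injected_at, first_successful_send_after) pairs,
                   timestamps in seconds
    """
    lost = set(acked_sends) - set(consumed)
    recovery = [recovered - injected for injected, recovered in fault_windows]
    return CheckResult(lost=lost, recovery_seconds=recovery)


result = check_run(
    acked_sends=["m1", "m2", "m3"],
    consumed=["m1", "m3", "m3", "m4"],  # m4's ack may have timed out; not lost
    fault_windows=[(10.0, 14.5), (130.0, 133.0)],
)
print(result.lost)              # {'m2'}
print(result.recovery_seconds)  # [4.5, 3.0]
```

Only acknowledged sends are checked because a producer whose send failed already knows the message may not have been stored; counting those would report false losses.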

Our chaos testing validation environment is set up as follows:

All seven machines share the same configuration: 8 vCPUs, 16 GiB of memory, instance type ecs.c7.2xlarge, 5 Mbps of public network bandwidth, and 5 Gbps of internal network bandwidth (burstable up to 10 Gbps).

During the tests, we ran several randomized test scenarios. Each injected fault stayed active for at least 60 seconds, and at least 60 seconds elapsed between recovery and the injection of the next fault. The faults were injected as follows:

System crashes: This chaos fault is injected using the kill -9 command to terminate random processes within the specified scope.

System hangs: This chaos fault is injected using the kill -SIGSTOP command to simulate paused processes.

Packet loss: This chaos fault is injected using the iptables command to specify a certain percentage of packet loss between machines.

Network partitioning: This chaos fault is also injected using the iptables command, in this case to completely isolate nodes from one another.
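The four fault types map onto standard Linux commands. The Python sketch below only builds the command strings for each inject/recover cycle and hands them to a caller-supplied `execute` function; by default it just prints them. The PIDs, peer addresses, loss ratio, and the use of iptables' `statistic` match for percentage loss are our assumptions for illustration, not OpenChaos's exact implementation. Recovery resumes a hung process with `kill -SIGCONT` and deletes the iptables rules that were added; restarting a crashed process is left to the test harness and is not shown.

```python
import random
import time


def fault_commands(fault, pid, peer_ip, loss=0.3):
    """Return (inject, recover) shell command lists for one fault on one node.

    The PID, peer address, and loss ratio are placeholders.
    """
    if fault == "crash":
        # SIGKILL cannot be caught; recovery means restarting the process,
        # which is left to the test harness.
        return [f"kill -9 {pid}"], []
    if fault == "hang":
        # SIGSTOP freezes the process without killing it; SIGCONT resumes it.
        return [f"kill -SIGSTOP {pid}"], [f"kill -SIGCONT {pid}"]
    if fault == "loss":
        # Drop the given fraction of packets arriving from the peer.
        rules = [f"INPUT -s {peer_ip} -m statistic --mode random "
                 f"--probability {loss} -j DROP"]
    elif fault == "partition":
        # Drop all traffic in both directions between this node and the peer.
        rules = [f"INPUT -s {peer_ip} -j DROP", f"OUTPUT -d {peer_ip} -j DROP"]
    else:
        raise ValueError(f"unknown fault type: {fault}")
    return ([f"iptables -A {r}" for r in rules],
            [f"iptables -D {r}" for r in rules])


def run_schedule(cycles, duration_s=3600, inject_s=60, recover_s=60,
                 execute=print, sleep=time.sleep):
    """Repeatedly pick a random fault, keep it active for inject_s, undo it,
    and wait recover_s before the next one. Returns the number of injections."""
    injections = 0
    while (injections + 1) * (inject_s + recover_s) <= duration_s:
        inject, recover = random.choice(cycles)
        for cmd in inject:
            execute(cmd)
        sleep(inject_s)   # keep the fault active
        for cmd in recover:
            execute(cmd)
        sleep(recover_s)  # let the cluster recover before the next fault
        injections += 1
    return injections
```

With the defaults (a 60-minute run and 60-second inject and recover phases), `run_schedule` performs 30 injections. Passing `sleep=lambda s: None` gives an instant dry run that only prints the commands; a real harness would pass an `execute` function that runs each command on the target host.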

The actual test scenarios and groups were far more extensive than those listed above, but some of them overlap (for example, starting and stopping a single Broker or Controller), so they are not listed separately. We also cross-tested important Broker parameters, namely the switches transientStorePoolEnable (whether to use direct memory) and slaveReadEnable (whether slave nodes serve message reads), and tested the two Controller types (DLedger and JRaft) separately. Each scenario was tested at least five times, and each test lasted at least 60 minutes.

For all the scenarios mentioned above, the total duration of chaos testing is:

12 (scenarios) × 2 (Broker switch configurations) × 2 (Controller types) × 5 (tests per scenario) × 60 (minutes per test) = 14,400 minutes.

Since each fault is injected for one minute and then given one minute to recover, each injection cycle takes two minutes, so the total number of fault injections is at least 14,400 / 2 = 7,200. (In practice, the total test duration and the number of injections were significantly higher.)
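As a quick cross-check, here is the same arithmetic in Python. The parameter names are our own, and the defaults are the values stated above; because every figure is a stated minimum, both results are lower bounds.

```python
def chaos_budget(scenarios=12, broker_configs=2, controller_types=2,
                 runs_per_scenario=5, minutes_per_run=60,
                 inject_min=1, recover_min=1):
    """Total chaos-testing minutes and the minimum number of fault injections."""
    total_minutes = (scenarios * broker_configs * controller_types
                     * runs_per_scenario * minutes_per_run)
    # One cycle = inject_min with the fault active + recover_min of recovery.
    min_injections = total_minutes // (inject_min + recover_min)
    return total_minutes, min_injections


print(chaos_budget())  # (14400, 7200)
```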

Based on the recorded test results, the conclusions are as follows:

This article has summarized how RocketMQ’s use of Raft has evolved. RocketMQ first applied Raft faithfully to build the DLedger CommitLog; it has since evolved to separate the control plane from the data plane, both of which are based on the Raft protocol. This evolution is specific to RocketMQ. RocketMQ’s high availability has now matured: built on a three-layer consensus design, it achieves consensus among Controllers, between Controllers and Brokers, and among Brokers.

With this design, consensus on roles and consensus on data are cleanly divided across multiple layers that collaborate in an orderly way. Because election is decoupled from replication, the consensus protocol at each layer can be developed independently; for example, a better consensus algorithm can be applied directly to the Controllers without affecting the data plane.

Raft’s design has contributed a great deal to RocketMQ’s high availability: RocketMQ adopted Raft’s design principles and improved on them to suit its own needs. RocketMQ’s consensus algorithm and high availability design [9] were recognized by the academic community in 2023, when the paper was accepted at ASE '23, a CCF-A class academic conference. We look forward to seeing even better consensus algorithms in the future that can be adapted to RocketMQ’s real-world scenarios.

If you want to learn more about RocketMQ, please refer to: https://rocketmq-learning.com/

[1] Apache RocketMQ

https://github.com/apache/rocketmq

[2] OpenMessaging DLedger

https://github.com/openmessaging/dledger

[3] SOFAJRaft

https://github.com/sofastack/sofa-jraft

[4] Diego Ongaro and John Ousterhout. 2014. In search of an understandable consensus algorithm. In Proceedings of the 2014 USENIX conference on USENIX Annual Technical Conference (USENIX ATC'14). USENIX Association, USA, 305-320.

[5] OpenMessaging DLedger

https://github.com/openmessaging/dledger

[6] DLedger - a commitlog repository based on the Raft protocol

https://developer.aliyun.com/article/713017

[7] "What can we learn from Didi's system crash?"

[8] Mahesh Balakrishnan, Jason Flinn, Chen Shen, Mihir Dharamshi, Ahmed Jafri, Xiao Shi, Santosh Ghosh, Hazem Hassan, Aaryaman Sagar, Rhed Shi, Jingming Liu, Filip Gruszczynski, Xianan Zhang, Huy Hoang, Ahmed Yossef, Francois Richard, and Yee Jiun Song. 2020. Virtual consensus in delos. In Proceedings of the 14th USENIX Conference on Operating Systems Design and Implementation (OSDI'20). USENIX Association, USA, Article 35, 617-632.

[9] Juntao Ji, Rongtong Jin, Yubao Fu, Yinyou Gu, Tsung-Han Tsai, and Qingshan Lin. 2023. RocketHA: A High Availability Design Paradigm for Distributed Log-Based Storage System. In Proceedings of the 38th IEEE/ACM International Conference on Automated Software Engineering (ASE 2023), Luxembourg, September 11-15, 2023, pp. 1819-1824. IEEE.

[10] PacificA: Replication in Log-Based Distributed Storage Systems.

https://www.microsoft.com/en-us/research/wp-content/uploads/2008/02/tr-2008-25.pdf

[11] braft

https://github.com/baidu/braft

[12] Leslie Lamport. 2001. Paxos Made Simple. ACM SIGACT News (Distributed Computing Column) 32, 4 (Whole Number 121, December 2001), 51-58.

[13] Chaos Engineering

https://en.wikipedia.org/wiki/Chaos_engineering

[14] OpenMessaging OpenChaos

https://github.com/openmessaging/openchaos

[15] What does In-Sync Replicas in Apache Kafka Really Mean?

https://www.cloudkarafka.com/blog/what-does-in-sync-in-apache-kafka-really-mean.html
