By Xieyang

In distributed systems, network failures, machine downtime, and disk damage are inevitable. To provide uninterrupted and reliable services to users, the system must have redundancy and fault tolerance capabilities. RocketMQ plays a crucial role in various production scenarios, including logs, statistical analysis, online transactions, and financial transactions. Different environments have varying demands for infrastructure cost and reliability.

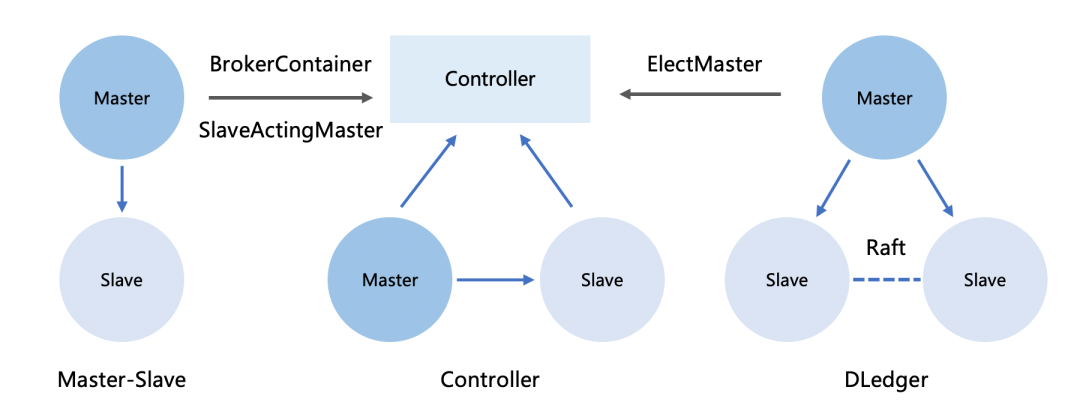

In RocketMQ v4, there are two mainstream high-availability designs: the switchless architecture in the active/standby mode and the Raft-based multi-replica architecture (shown on the left and right in the diagram). In practical production, we found that in the two-replica cold-standby mode, the secondary node has low resource utilization, and there are availability issues with special types of messages when the master node is down. However, Raft is highly serialized, and its majority-based acknowledgment mechanism lacks flexibility in expanding read-only replicas, making it challenging to support complex scenarios like peer-to-peer deployment of two data centers and multi-center setups in different locations.

In RocketMQ v5, the advantages of the previous solutions are integrated, and the DLedger Controller is proposed as the control node (shown in the middle part of the diagram). This makes the election logic a plug-in and optimizes the implementation of data replication.

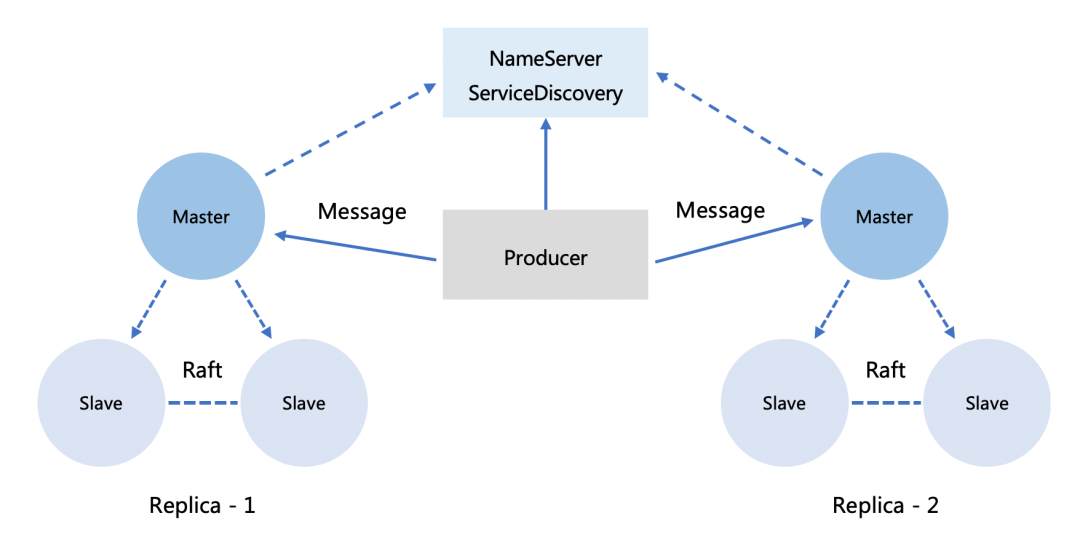

In a distributed system of the Primary-Backup architecture, data is replicated into multiple copies in order to prevent data loss. A group of nodes that handle the same data is referred to as a replica set. The replica set can operate at the file level (e.g. HDFS) or at the partition or queue level (e.g. Kafka). Each storage node can accommodate multiple replicas in different replica sets, or it can act as an exclusive node like RocketMQ. Exclusive nodes simplify data persistence by ensuring that only the primary replica of each replica set responds to read and write requests, with secondary replicas typically configured as read-only to balance the load. The election process allows one replica within the replica set to hold an exclusive write lock.

RocketMQ configures a ClusterName for each broker node that stores data. The BrokerName is used to identify the broker node for efficient resource management. Multiple nodes with the same BrokerName form a replica set. Each replica also has a unique BrokerId starting from 0 to indicate its identity. The node with BrokerId = 0 is the leader/primary/master of the replica set. In the event of a failure, the Broker numbers are reassigned through an election process. For example, if BrokerId = {0, 1, 3}, then 0 is the primary and the other two are secondary replicas.
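As a rough illustration of this naming scheme, the following sketch groups brokers by BrokerName and treats BrokerId 0 as the master. The `BrokerNode` and `ReplicaSets` names are hypothetical and exist only for this example; they are not RocketMQ classes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical model of a broker's identity (assumption, not a RocketMQ class).
final class BrokerNode {
    final String clusterName;
    final String brokerName;
    final long brokerId;

    BrokerNode(String clusterName, String brokerName, long brokerId) {
        this.clusterName = clusterName;
        this.brokerName = brokerName;
        this.brokerId = brokerId;
    }

    // BrokerId 0 marks the primary replica of a replica set.
    boolean isMaster() {
        return brokerId == 0L;
    }
}

final class ReplicaSets {
    // Nodes that share a BrokerName belong to the same replica set.
    static Map<String, List<BrokerNode>> groupByBrokerName(List<BrokerNode> nodes) {
        Map<String, List<BrokerNode>> sets = new TreeMap<>();
        for (BrokerNode n : nodes) {
            sets.computeIfAbsent(n.brokerName, k -> new ArrayList<>()).add(n);
        }
        return sets;
    }

    // Returns the master of a replica set, or null if no node holds BrokerId 0.
    static BrokerNode findMaster(List<BrokerNode> replicaSet) {
        for (BrokerNode n : replicaSet) {
            if (n.isMaster()) {
                return n;
            }
        }
        return null;
    }
}
```

With BrokerId = {0, 1, 3} under one BrokerName, `findMaster` returns the node whose BrokerId is 0, and the other two nodes are secondary replicas.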

Within a replica set, data can be shared among nodes in various ways. The degree of resource sharing can range from Shared Nothing to Shared Disk, Shared Memory, and Shared Everything. TiDB is an example of a shared-nothing architecture, where each storage node uses TiKV, based on RocksDB, for data storage. Transactions or MVCC are implemented through protocol interaction at the upper layer. TiKV offers user-friendliness and flexibility in solving issues like data hotspots and fragmentation during scaling. However, implementing transactions across multiple nodes requires multiple network communications. Shared Everything architectures, such as AWS Aurora and Alibaba Cloud PolarStore, fully run applications in user space using kernel bypass, maximizing storage reuse and reducing resource consumption. These architectures involve one primary and multiple secondary nodes sharing a reliable underlying storage system, enabling one write operation for multiple reads and fast switching.

Most key-value (KV) operations use consistent hashing to allocate nodes. However, when the primary replica set experiences storage jitter, primary-secondary switchovers, or network partitions, the keys corresponding to the affected shard cannot be updated, and some local operations may fail. In contrast, message systems operate differently. Although they handle large amounts of traffic, data interaction across replica sets is rare. Unordered messages that fail to be sent to the expected partition can be written to other replica sets (shards). The failure of one replica set does not have a significant impact on the overall system. This provides additional availability across replica sets at the service level. Furthermore, as a PaaS layer product, RocketMQ is widely deployed in various environments, such as Windows and Linux on Arm. To provide better flexibility, RocketMQ aims to reduce deep dependencies with IaaS layer products. Partial fault isolation and light dependencies are important factors in RocketMQ's choice of the Shared Nothing architecture.
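The cross-replica-set availability described here can be sketched as a simple retry loop on the sending side. This is only a minimal illustration under assumed names (`FailoverSender`, `sendUnordered`, and the `trySend` callback are hypothetical), not the RocketMQ client's actual retry logic.

```java
import java.util.List;
import java.util.function.Predicate;

// Hypothetical sketch: an unordered message may go to any replica set (shard).
final class FailoverSender {
    // Tries replica sets in round-robin order starting at startIndex and returns
    // the name of the first one that accepts the message, or null if all fail.
    static String sendUnordered(List<String> replicaSets, int startIndex,
                                Predicate<String> trySend) {
        for (int i = 0; i < replicaSets.size(); i++) {
            String target = replicaSets.get((startIndex + i) % replicaSets.size());
            if (trySend.test(target)) {
                return target;
            }
        }
        return null;
    }
}
```

Because the message does not depend on a particular key-to-shard mapping, the failure of one replica set only costs one extra attempt.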

Within a replica set, nodes process data at different speeds, which gives rise to the concept of a log water level. The master node, together with the slave nodes that lag only slightly behind it, forms a synchronous replica set (SyncStateSet). "Slightly" can be defined either as a small gap in log water level (file size) or as a slave lag time that stays within a certain range. When the master node fails, one of the remaining nodes in the synchronous replica set may be promoted to master. In some cases, system disaster recovery drills may be needed, or a replica may need to be switched to become the new master during maintenance or grayscale upgrades of certain machines. This gives rise to the concept of a priority replica. There are various principles and policies for selecting priority replicas, such as dynamically selecting the replica with the highest water level, the longest joining time, or the longest CommitLog. Static policies like rack and zone priority are also supported.
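A minimal sketch of the water-level criterion, assuming the lag is measured in bytes and that slaves never run ahead of the master. `SyncStateSetSketch` and the `maxLagBytes` threshold are illustrative names, not RocketMQ configuration items.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical helper: decides which replicas count as "in sync" with the master.
final class SyncStateSetSketch {
    // Returns the master plus every slave whose log lags by at most maxLagBytes.
    // Assumption: no slave is ahead of the master (no fork has happened).
    static Set<String> compute(String master, long masterMaxOffset,
                               Map<String, Long> slaveMaxOffsets, long maxLagBytes) {
        Set<String> inSync = new TreeSet<>();
        inSync.add(master);
        for (Map.Entry<String, Long> e : slaveMaxOffsets.entrySet()) {
            long lag = masterMaxOffset - e.getValue();
            if (lag <= maxLagBytes) {
                inSync.add(e.getKey());
            }
        }
        return inSync;
    }
}
```

A time-based criterion would look the same, with the last catch-up timestamp of each slave in place of its offset.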

From the perspective of the model, a single RocketMQ node hosts a large number of topics. If state machines were maintained at the topic/partition granularity, as in Kafka, a node going down could cause tens of thousands of state machines to switch at once. Such a thundering herd carries many potential risks. Therefore, RocketMQ v4 chooses a single broker as the minimum granularity for switching. Compared with finer-grained implementations, only the broker number needs to be reassigned when a replica's identity switches, which puts minimal pressure on metadata nodes. Because little data needs to be communicated, the primary/secondary switchover is fast. The impact of a single replica going offline is limited to its replica set, reducing management and O&M costs. This implementation has some disadvantages. For example, load across the storage nodes in a cluster cannot be balanced optimally. In addition, topics are tightly coupled to storage nodes, and horizontal scaling generally changes the total number of partitions, which requires additional processing logic at the upper layer.

To measure the availability of replica sets in a more standard and accurate manner, several academic terms have been introduced:

• RTO (Recovery Time Objective). It generally indicates the time from business interruption to recovery.

• RPO (Recovery Point Objective). It measures how much data loss the business can tolerate. For example, if a hard disk is backed up once a day, all updates made since the latest backup are lost when the disk fails.

• SLA (Service-Level Agreement). The manufacturer promises service quality to the user in the form of a contract. The higher the SLA, the higher the cost.

The number of nodes is closely related to reliability. A RocketMQ replica set may have 1, 2, 3, or 5 replicas based on different production scenarios.

How do I ensure the eventual consistency of data in a replica set? That must be achieved through data replication. Should we choose logical replication or physical replication?

Logical replication: There are many scenarios in which messages are synchronized. Various connector implementations essentially move messages from one system to another. For example, we can import and export data to systems such as Elasticsearch and Flink for analysis and select specific topics and tags for synchronization based on business needs. This is highly flexible and scalable. In this solution, as the number of topics increases, the system suffers performance losses caused by upper-layer implementations such as service discovery, offset, and heartbeat management. Therefore, in scenarios of message synchronization, RocketMQ supports data transfer in the form of message routing. Message replication is regarded as a special case of service consumption.

Physical replication: MySQL records bin logs and redo logs for operations. The bin log records the original logic of the statement, such as modifying a field in a row. The redo log belongs to a physical log and records which data page in a tablespace has been changed. In RocketMQ's scenarios, CommitLog at the storage layer abstracts an append only data stream through a linked list and the MappedFile mechanism of the kernel. The primary replica transmits uncommitted messages to other replicas in sequence (equivalent to redo log) and calculates the confirmation offset based on rules to determine whether the log stream is committed. This solution uses only one log and offset to ensure the consistency of primary-secondary write-ahead logs, simplifying replication and improving performance.

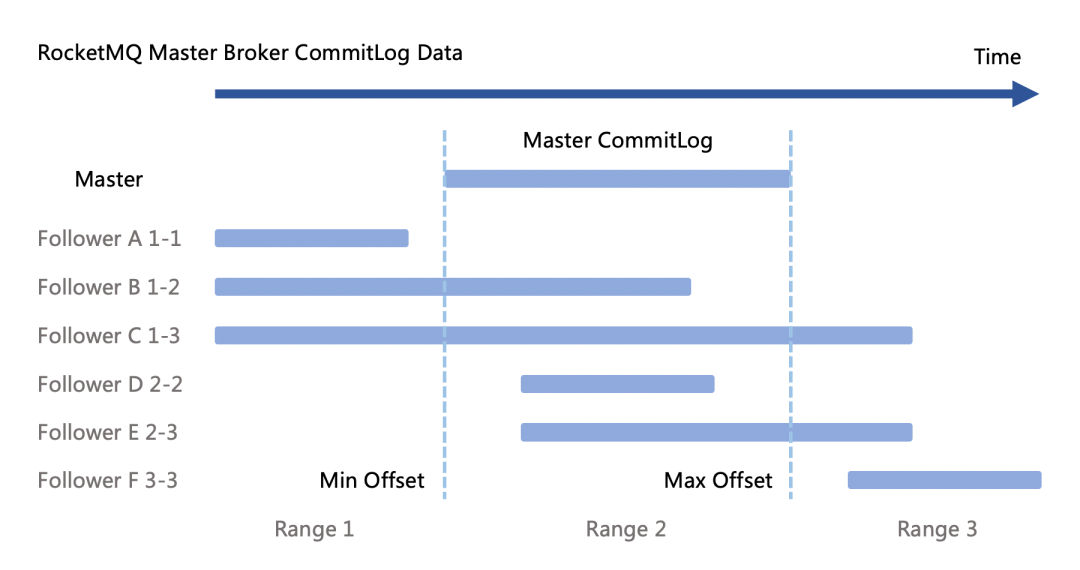

The multi-replica structure designed for availability obviously needs to replicate all the data that needs to be persisted. Choosing physical replication is more resource-saving. How does RocketMQ ensure the eventual consistency of data during physical replication? This involves data alignment. For near-FIFO systems, such as messages and streams, the more recent the message is, the higher the value is. A single node in the replica set of the message system does not retain the full data of this replica like a database system. On the one hand, the broker continuously normalizes the cold data and transfers it to the low-frequency medium to save costs. On the other hand, the data on the hot data disk is deleted from far to near. If one of the replicas in the replica set is down for a long time or in scenarios such as backup and reconstruction, complex situations such as misalignment and forking of log streams may occur. In the following figure, we use the first and last offsets of the CommitLog on the master node as reference offsets, so that we can divide three intervals. This is indicated by the blue arrow in the figure below. A simple arrangement and combination can prove that the CommitLog of the standby machine at this time must meet one of the following six conditions.

Each case is discussed and analyzed below:

• In the 1-1 case, slave Max <= master Min holds. Generally, the slave has just come online or has been offline for a long time. The existing logs are skipped, and replication starts from the master Min.

• In the 1-2 and 2-2 cases, master Min < slave Max <= master Max holds. Generally, the slave's log water level has fallen behind because of a network disconnection. The slave can catch up with the master through the HA connection.

• In the 1-3 and 2-3 cases, slave Max > master Max. This may happen because the master, configured for asynchronous disk flushing, went down and then became the master again. It could also be caused by a CommitLog fork due to dual-master writes during a network partition. Because the new master lags behind the slave, a small number of unconfirmed messages are lost. This kind of abnormal election (which RocketMQ calls an unclean election) should be avoided as much as possible.

• The 3-3 case, in theory, will not appear. The reason that the slave data is longer than the master data is that the master node data is lost, and there are abnormal elections at the same time. In this case, manual intervention is required.
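The case analysis above can be condensed into a small decision function. This is a sketch only: the `LogAlignment` class and the `Action` names are hypothetical, and the boundary handling (for example, treating slave Min > master Max as the 3-3 case) is an assumption made for illustration.

```java
// Hypothetical classifier for the slave's CommitLog relative to the master's.
final class LogAlignment {
    enum Action {
        REPLICATE_FROM_MASTER_MIN, // case 1-1
        FOLLOW_FROM_SLAVE_MAX,     // cases 1-2 and 2-2
        TRUNCATE_FORKED_DATA,      // cases 1-3 and 2-3
        MANUAL_INTERVENTION        // case 3-3
    }

    static Action decide(long masterMin, long masterMax, long slaveMin, long slaveMax) {
        if (slaveMax <= masterMin) {
            return Action.REPLICATE_FROM_MASTER_MIN;
        }
        if (slaveMax <= masterMax) {
            return Action.FOLLOW_FROM_SLAVE_MAX;
        }
        // From here on the slave is longer than the master.
        if (slaveMin > masterMax) {
            return Action.MANUAL_INTERVENTION;
        }
        return Action.TRUNCATE_FORKED_DATA;
    }
}
```

For example, with master Min = 300 and master Max = 2500, a slave whose log covers offsets 100 to 1000 simply follows the master from offset 1000.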

As mentioned earlier, only the primary replica of each replica set in RocketMQ accepts external write requests. How do we determine the identity of a node?

Distributed systems are generally divided into centralized architectures and decentralized architectures. For MultiRaft, each replica group contains three or five replicas. We can select a master in a replica set through a consensus protocol such as Paxos and Raft. In a typical centralized architecture, two replicas are deployed to save resource costs on the data plane. In this case, external components such as ZK, ETCD, and DLedger Controller are used as the central node for election. Determining the membership by an external component involves two important issues in distributed systems:

1. How to determine whether the status of a node is normal.

2. How to avoid the dual-master problem.

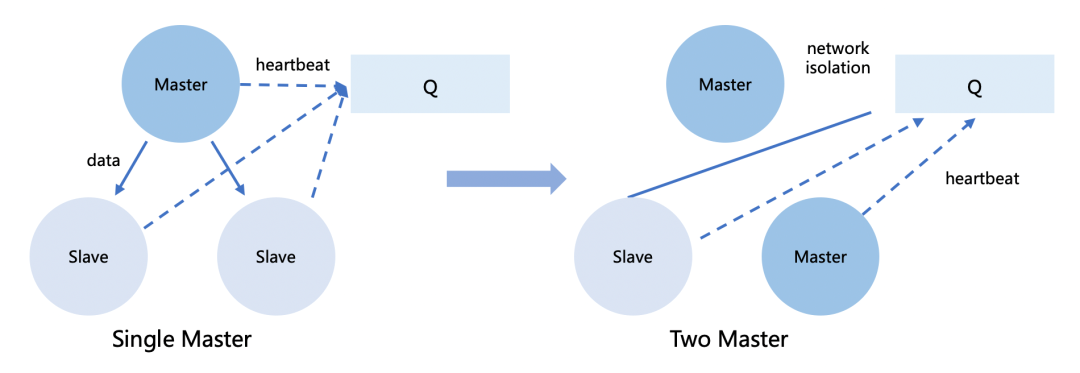

For the first problem, the solution of Kubernetes is relatively elegant. The Kubernetes health check to pods includes liveness probes and readiness probes. Liveness probes mainly detect whether the application is alive and restart the pod when it fails. Readiness probes determine and probe whether the application accepts traffic. In general, a simple heartbeat mechanism can only realize survival detection. Let's take a look at an example: Assume that there are three replicas, A, B, and C, in a replica set, and another node, Q (sentinel), is responsible for observing the node status and assuming the responsibilities of global election and status maintenance. Nodes A, B, and C periodically send heartbeats to node Q. If node Q fails to receive a heartbeat from a node for more than a period of time (usually two heartbeats), the node is considered abnormal. If the primary replica is abnormal, node Q will promote other replicas of the replica set to the primary replica and broadcast to inform other replicas.
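The heartbeat rule in this example (a node is considered abnormal after roughly two missed heartbeats) might be sketched as follows. `HeartbeatMonitor` is a hypothetical stand-in for node Q, and timestamps are passed in explicitly so the behavior is deterministic.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sentinel-side bookkeeping for heartbeats from nodes A, B, and C.
final class HeartbeatMonitor {
    private final long heartbeatIntervalMs;
    private final Map<String, Long> lastSeenMs = new HashMap<>();

    HeartbeatMonitor(long heartbeatIntervalMs) {
        this.heartbeatIntervalMs = heartbeatIntervalMs;
    }

    void onHeartbeat(String node, long nowMs) {
        lastSeenMs.put(node, nowMs);
    }

    // A node is suspected once more than two heartbeat intervals have passed
    // without a heartbeat, or if it has never reported at all.
    boolean isSuspected(String node, long nowMs) {
        Long last = lastSeenMs.get(node);
        return last == null || nowMs - last > 2 * heartbeatIntervalMs;
    }
}
```

Note that this only detects liveness as seen from the sentinel: a node can be suspected while it is still working normally.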

In engineering practice, a node going offline is generally less likely than network jitter. Assume that node A is the master of the replica set and the network between node Q and node A is interrupted. Node Q considers node A abnormal, selects node B as the new master, and informs nodes A, B, and C that the new master is node B. Meanwhile, node A itself works normally, and its network connections to nodes B and C are also normal. Since the order in which node Q's notification reaches nodes A, B, and C is unknown, if it reaches B first, the system has two working masters at the same time: nodes A and B. If both A and B accept external requests and synchronize data with node C, serious data errors occur. This "dual-master" problem arises because node Q considers node A abnormal while node A considers itself normal. When both the old master and the new master accept writes, the log stream forks. The essence of the problem is that the network partition prevents the system from agreeing on node status.

Leases are an effective means to avoid the "dual-master" problem. The typical meaning of a lease is that the central node now recognizes which node is the master and allows the node to work properly during the lease validity period. If node Q wants to switch to a new master, it only needs to wait for the lease of the previous master to expire, and then it can issue a new lease to the new master node without the dual-master problem. In this case, the system demands a high availability of node Q, which may become a performance bottleneck of the cluster. There are still many implementation details in using leases in production. For example, depending on clock synchronization, the validity period of the issuer needs to be set slightly longer than that of the receiver, and the switching of issuers is also more complicated.
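A minimal lease sketch under the assumptions in this paragraph: the issuer (node Q) keeps the lease slightly longer than the receiver does, and a new master is granted a lease only after the previous one has expired on the issuer's side. `LeaseIssuer` and `safetyMarginMs` are hypothetical names.

```java
// Hypothetical lease issuer playing the role of node Q.
final class LeaseIssuer {
    private final long leaseMs;
    private final long safetyMarginMs;
    private String holder;
    private long issuerExpiryMs;

    LeaseIssuer(long leaseMs, long safetyMarginMs) {
        this.leaseMs = leaseMs;
        this.safetyMarginMs = safetyMarginMs;
    }

    // Returns the time at which the candidate must stop acting as master,
    // or -1 if another node still holds an unexpired lease.
    long tryGrant(String candidate, long nowMs) {
        boolean free = holder == null || nowMs >= issuerExpiryMs;
        if (!free && !holder.equals(candidate)) {
            return -1L;
        }
        holder = candidate;
        // The issuer's view of the lease is longer than the receiver's,
        // which tolerates a bounded amount of clock drift.
        issuerExpiryMs = nowMs + leaseMs + safetyMarginMs;
        return nowMs + leaseMs;
    }
}
```

The current holder can renew at any time, while a different candidate has to wait until the issuer-side expiry has passed.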

In the design of RocketMQ, we hope to use a decentralized design to reduce the global risks caused by the downtime of the central node (decentralization and whether the central node exists are two things), so the lease mechanism is not introduced. During the crash recovery of the controller (corresponding to node Q), since each broker permanently caches its own identity, each master replica manages the state machine of its replica set. RocketMQ DLedger Controller can therefore ensure that the core process of sending and receiving messages is not affected even when most replicas of the sentinel component are unavailable. However, because the identity is cached permanently, the old master cannot be downgraded, so the system must tolerate dual masters when the network is partitioned. Several solutions have been developed for this. Users can preconfigure either the AP model, which prioritizes availability, or the CP model, which prioritizes consistency. The AP model allows the system to ensure service continuity through short-term forking (the following section will continue to talk about why forking is difficult to avoid in message systems). The CP model configures the minimum number of replicas so that ACKs must come from more than half of the nodes in the cluster. In this case, a message sent to the old master returns a timeout to the client because the data cannot be sent to the slave through the HA link. The client then retries against another shard. After the client goes through a service discovery cycle, it can discover the new master.

In the case of network partitioning, especially the network partitioning between the old master and slave and the controller, at this time, the old master will not be automatically downgraded because the lease mechanism is not introduced. The old master can be configured as an asynchronous double write, and each message needs to be confirmed twice by the master and slave respectively before it can be returned to the client. However, when the slave is switched to the master, it will set synchronous write disk mode that only needs a single replica to confirm. In this case, the client can still send a message to the old master in a short period of time, and the old master can return the message after double replicas confirm. Therefore, the message sent to the old master will return a SLAVE_NOT_AVAILABLE timeout response, and the message will be sent to the new node after the client retries. After a few seconds, when the client gets the new route from the NameServer and controller, the old master is removed from the client cache. At this point, the secondary node is promoted.

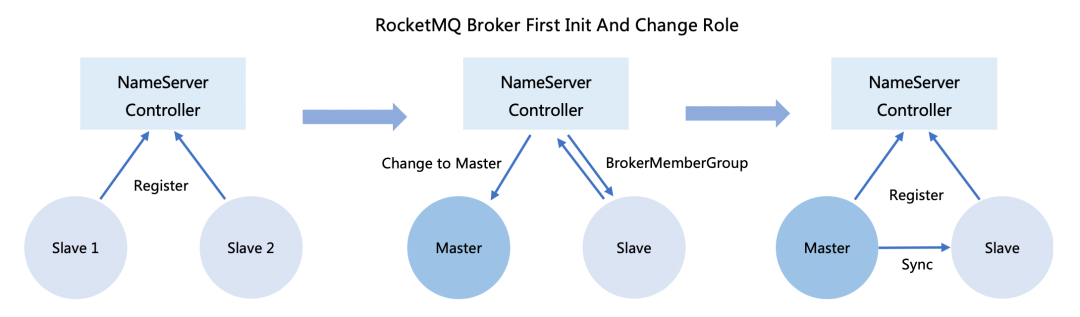

External components can assign node identities. The preceding figure shows the process of launching a two-replica replica set:

1. After an election among the controllers, with broker requests redirected accordingly, one controller becomes the master node responsible for identity allocation.

2. If multiple replicas exist in a RocketMQ replica set and need to select the primary node, the node will start as a slave by default and the secondary node will register itself to the controller.

3. The node gets the BrokerMemberGroup from the controller, which contains the description and connection information of this replica set.

4. The primary node maintains the information of the entire replica set, replicates data of the secondary node, and periodically reports the water level gap between the primary and secondary nodes and the replication speed to the controller.

The implementation of RocketMQ's weakly dependent controller does not break the assumption that each term in Raft has only one leader at most. In general, in projects, Leader Lease is used to solve dirty reads, and Leader Stickiness is used to cope with frequent switching to ensure the uniqueness of the master.

• Leader Lease: After the lease of the previous leader expires, wait for a period of time before initiating the leader election.

• Leader Stickiness: The unexpired follower rejects new leader election requests.

Note: Raft considers that only the node with the latest committed log is eligible to be a leader, while Multi-Paxos does not have this limit.

For the log continuity issue, Raft checks the log continuity through the offset check before confirming a log. If the log is not continuous, Raft rejects the log to ensure log continuity. Multi-Paxos allows holes in the log. Raft carries the commit index of the leader in AppendEntries. Once a log is in the majority, the leader updates the local commit index (corresponding to the confirm offset of RocketMQ) to complete committing. The next AppendEntries sends the new commit index to other nodes. Multi-Paxos has no log connectivity assumption and needs additional commit messages to notify other nodes.

In addition to network partitioning, many situations lead to the forked log data stream. See the following case: Three replicas use asynchronous replication and asynchronous persistence. Replica A is the old master, and replicas B and C are the slaves. At the moment of switching, the log water level of replica B is greater than that of replica C. At this time, C becomes the new master, and the data on replicas B and C will fork because replica B has an extra piece of unconfirmed data. So how does replica B judge the data fork between itself and replica C in a simple and reliable way?

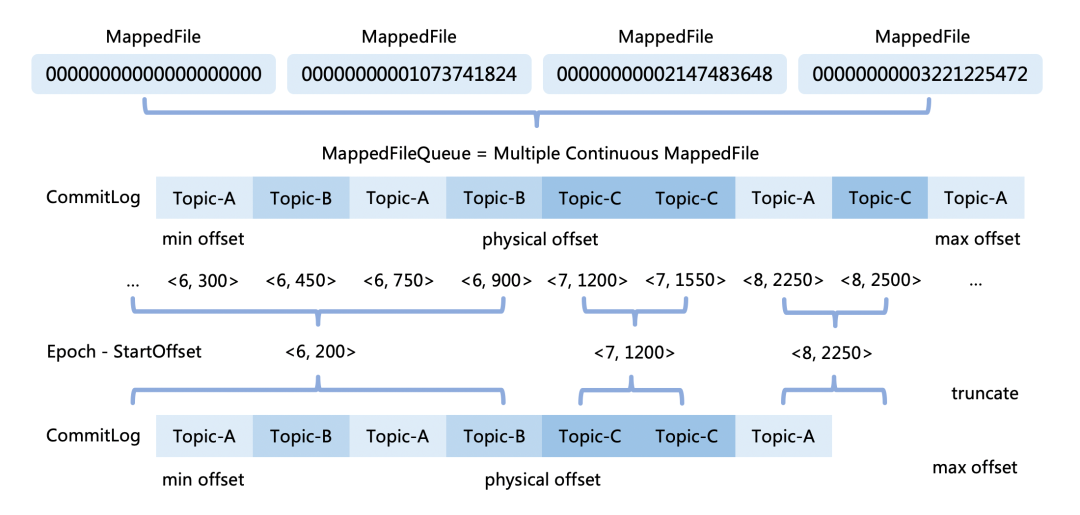

An intuitive idea is to directly compare the master and slave CommitLog from front to back byte by byte. In a general production environment, both the master and slave CommitLog have hundreds of GB of log file streams, and the scheme of reading and transmitting a large amount of data is time-consuming and laborious. Soon we found that a good way to determine whether two large files are the same is to compare the hash values of the data. The amount of data to be compared is reduced from hundreds of GB to hundreds of hash values at once. For the first different CommitLog file, local hash alignment can be adopted. There are still some computational costs. There is still room for optimization—implement a new truncation algorithm through Epoch and StartOffset. This Epoch-StartOffset satisfies the following principles:

The following is a specific case of election truncation. The idea and process of the election truncation algorithm are as follows:

Master CommitLog Min = 300, Max = 2500, EpochMap = {<6, 200>, <7, 1200>, <8, 2500>}

Slave CommitLog Min = 300, Max = 2500, EpochMap = {<6, 200>, <7, 1200>, <8, 2250>}

The implementation code is as follows:

```java
public long findLastConsistentPoint(final EpochStore compareEpoch) {
    long consistentOffset = -1L;
    // Walk the local epochs from newest to oldest.
    final Map<Long, EpochEntry> descendingMap = new TreeMap<>(this.epochMap).descendingMap();
    for (Map.Entry<Long, EpochEntry> curLocalEntry : descendingMap.entrySet()) {
        final EpochEntry compareEntry = compareEpoch.findEpochEntryByEpoch(curLocalEntry.getKey());
        // The newest epoch that starts at the same offset on both sides is the last consistent epoch.
        if (compareEntry != null &&
            compareEntry.getStartOffset() == curLocalEntry.getValue().getStartOffset()) {
            // The two logs agree up to the smaller of the two end offsets.
            consistentOffset = Math.min(curLocalEntry.getValue().getEndOffset(), compareEntry.getEndOffset());
            break;
        }
    }
    return consistentOffset;
}
```

After the failure occurs, the system will repair the fork data, and there are many small details worthy of further study and discussion.
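To make the truncation example concrete, the sketch below replays the master and slave EpochMap values given earlier through a self-contained variant of `findLastConsistentPoint`. It assumes that an epoch ends where the next epoch starts and that the last epoch ends at the CommitLog Max; `EpochTruncationDemo` and its array-based representation are hypothetical simplifications, not the RocketMQ classes.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical, simplified stand-in for the epoch store used in the example.
final class EpochTruncationDemo {
    // Builds epoch -> {startOffset, endOffset} from rows of {epoch, startOffset}.
    // Assumption: an epoch ends where the next one starts; the last ends at maxOffset.
    static TreeMap<Long, long[]> build(long[][] epochStarts, long maxOffset) {
        TreeMap<Long, long[]> map = new TreeMap<>();
        for (int i = 0; i < epochStarts.length; i++) {
            long end = (i + 1 < epochStarts.length) ? epochStarts[i + 1][1] : maxOffset;
            map.put(epochStarts[i][0], new long[] {epochStarts[i][1], end});
        }
        return map;
    }

    // Same idea as findLastConsistentPoint: scan local epochs from newest to oldest
    // and stop at the first epoch whose start offset matches on both sides.
    static long findLastConsistentPoint(TreeMap<Long, long[]> local,
                                        TreeMap<Long, long[]> remote) {
        for (Map.Entry<Long, long[]> e : local.descendingMap().entrySet()) {
            long[] other = remote.get(e.getKey());
            if (other != null && other[0] == e.getValue()[0]) {
                return Math.min(e.getValue()[1], other[1]);
            }
        }
        return -1L;
    }

    static long demo() {
        TreeMap<Long, long[]> master = build(new long[][] {{6, 200}, {7, 1200}, {8, 2500}}, 2500);
        TreeMap<Long, long[]> slave = build(new long[][] {{6, 200}, {7, 1200}, {8, 2250}}, 2500);
        return findLastConsistentPoint(slave, master);
    }
}
```

Under these assumptions, epoch 8 starts at different offsets on the two sides, epoch 7 starts at 1200 on both, and the smaller end offset of epoch 7 is 2250, so `demo()` returns 2250 and the slave would truncate its log there.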

In the process of implementing data truncation, there is a very special operation. When the slave is switched to the master, the Confirm Offset of ConsumeQueue must be promoted to the MaxPhyOffset of CommitLog, even if it is unknown whether this part of the data is committed on the master. For Raft a few years ago, when a log was transmitted to the follower, the follower confirmed that it received the message, and the master applied the log to its own state machine and notified the client and all followers to commit the log. The two things happen at the same time. If the leader first replied that the client processing was successful, the leader hung up at this time. This is because the confirm offset of other followers was generally slightly lower than the leader, and the state machine is inconsistent before the pending log is applied to the follower's state machine, resulting in the loss of the data written to the leader. Let's take a specific case. Assume that there are two replicas, one master and one slave:

1. The master's max offset = 100. The master sends the current confirm offset = 40 and the data with message buffer = [40-100] to the slave.

2. After the slave replies to the master that the confirm offset is 100, the master needs to do several things at the same time.

3. At this time, the primary machine suddenly goes down, and the confirm offset of the secondary machine may be a value from 40 to 100.

Therefore, when the slave switches to the master, if the data is directly truncated at 40, messages that the client has already sent to the server are lost; the correct water level should be raised to 100. However, the slave has not received the information that the confirm offset is 100 in 2.3, so raising the water level is equivalent to committing the pending messages. In fact, the new leader will comply with the "Leader Completeness" rule. During the switchover, the pending entries of the old leader are neither deleted nor changed. Under the new term, the new leader will directly apply a larger version to submit the pending entries together. Here, the behaviors of the primary and secondary nodes of the replica set jointly ensure the security of the replicated state machine.
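The two-replica case above can be sketched with two small functions: one for the confirm offset while a node serves as master, and one for the value it adopts when a slave is promoted. `ConfirmOffsetSketch` and its method names are hypothetical; the min-over-acknowledgments rule is an assumption made for illustration.

```java
import java.util.Collection;

// Hypothetical sketch of confirm offset handling; not the RocketMQ implementation.
final class ConfirmOffsetSketch {
    // While serving as master: the confirm offset is bounded by the master's own
    // max offset and by the lowest offset acknowledged by the in-sync slaves.
    static long computeConfirmOffset(long masterMaxOffset,
                                     Collection<Long> inSyncSlaveAckOffsets) {
        long confirm = masterMaxOffset;
        for (long ack : inSyncSlaveAckOffsets) {
            confirm = Math.min(confirm, ack);
        }
        return confirm;
    }

    // On promotion: the new master raises its confirm offset to its local max
    // offset instead of truncating at the last confirm offset it was told about.
    static long confirmOffsetOnPromotion(long lastKnownConfirmOffset, long localMaxOffset) {
        return Math.max(lastKnownConfirmOffset, localMaxOffset);
    }
}
```

In the example, the slave last heard confirm offset = 40 but holds data up to 100, so on promotion it continues from 100 and the already acknowledged messages are kept.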

What is the symbol of a successful switchover, and when can I receive new traffic from the producer? The Raft can receive new traffic from the producer once the switchover is made, but for RocketMQ, the phase will be delayed a little until the index has been completely built. To ensure the consistency of the consumer queue, RocketMQ records the StartOffset of the consume queue offset in the CommitLog. At this time, the data between the confirm offset and max offset is received by the replica as a slave. The StartOffset of this part of messages in the consumer queue has been fixed. However, due to the optimization of the pre-calculation offset of RocketMQ, when the message is actually put into the real data dispatch of CommitLog, it will be found that the consume queue at the corresponding position has been occupied when the new traffic from the producer is received, resulting in inconsistent data in the primary and secondary indexes. The essential reason is that the optimization of pre-built indexes at the RocketMQ storage layer is intrusive to logs. However, the cost of a short wait during a switchover is far less than the speed-up benefit at normal runtime.

Currently, RocketMQ separately manages metadata in memory. The standby machine synchronizes the data of the current primary node every five seconds. For example, if a temporary topic is created on the primary node and a message is received, and the topic is deleted within a synchronization cycle, the metadata of the primary node may be inconsistent with that of the secondary node. Or when the offset is updated, there are multiple consumer offset update links in the multi-replica architecture for a single queue. When the consumer pulls messages, the consumer actively updates the broker, controls the reset offset, and updates the HA link. When there are master-slave switches in the replica set, the consumer group simultaneously goes online and offline. Due to the time difference in route discovery, it may also cause two different consumers in the same consumer group to consume the same queue on the primary and secondary machines in the same replica set.

The reason is that the standby machine redoes metadata update and message flow asynchronously, which may cause dirty data. Due to the large number of topics and groups on a single RocketMQ node, there is a large amount of data to be persisted through the implementation of logs. In complex scenarios, log-based rollback depends on the snapshot mechanism, which also increases computing overhead and recovery time. This problem is very similar to databases. MySQL creates MDL locks when executing DDL to modify metadata, which prevents users' other operations from accessing tablespaces. When the secondary database synchronizes the primary database, a lock will be created. The two logs represented by the start point and end point of metadata modification are not atomic operations. This means that if the primary database goes down during metadata modification, the MDL lock held by the secondary database cannot be released. MySQL's solution is to write a special log after each crash recovery of the primary database to notify all connected secondary databases to release all MDL exclusive locks held by them. State machine replication for all operations to follow the log stream requires multiple log types at the storage layer and is more complex to implement. RocketMQ chooses to operate in another synchronous mode, that is, a two-phase protocol similar to ZAB. For example, when updating the offset, you can choose to configure LockInStrictMode to make all the secondary databases synchronize the modification. In fact, to optimize the above-mentioned offset jumping phenomenon, when the RocketMQ client is not restarted, the client will obtain messages by preferentially adopting the local offset when the server undergoes primary-secondary switchover, further reducing repeated consumption.

Synchronous replication means that a user's operation has been committed on multiple replicas. In normal cases, assume that all three replicas in a replica set must confirm the same request, which is equivalent to writing data through three replicas (referred to as 3-3 writes). 3-3 writes provide very high data reliability, but when all slave nodes are configured for synchronous replication, the interruption of any synchronous node will cause the entire replica set to fail to process the request. If the third replica is cross-zone, the long tail also causes some latency.

In asynchronous replication mode, write requests that have not been replicated to the slave node are lost. Write operations confirmed to the client cannot be guaranteed to be persisted. Asynchronous replication is a configuration mode in which the RPO is not 0 when a fault occurs. The system can continue to respond to write requests regardless of the status of the slave node. This reduces the latency and improves the throughput. In order to balance the two, usually only one of the slave nodes is in synchronous replication mode, while the other nodes are in asynchronous replication mode. Whenever a synchronous slave node becomes unavailable, or its performance degrades, another asynchronous slave node is promoted to synchronous mode. This ensures that at least two nodes (the master node and a synchronization slave node) have the latest replica of the data. This mode, called 2-3 write, helps avoid jitter, provides better delay stability, and is sometimes called semi-synchronous replication mode.

In RocketMQ, asynchronous replication is also widely used in scenarios where the message read/write ratio is extremely high, the number of slave nodes is large, or multiple replicas in different locations. Synchronous replication and asynchronous replication are configured by using the brokerRole parameter in the Broker configuration file. This parameter can be set to one of the following values: ASYNC_MASTER, SYNC_MASTER, and SLAVE. In practical applications, you must properly set the persistence mode and master-slave replication mode based on your business scenarios. Generally, the network speed is higher than the local I/O speed. Asynchronous persistence and synchronous replication are a trade-off between performance and reliability.

| Replication mode | Advantage | Disadvantage |
| --- | --- | --- |
| Synchronous replication | The written messages are not lost. It is suitable for scenarios that require high reliability. | High write latency |
| Asynchronous replication | The secondary downtime doesn't affect the primary. It is suitable for scenarios that require high performance. | Possible loss of data |

Synchronous replication means that a message is confirmed by both the master and the slave before success is returned to the user. This ensures that the message still exists on the slave after the master goes down, which suits scenarios with high reliability requirements. Synchronous replication can also bound the amount of unsynchronized data, reducing memory pressure on the HA link. The disadvantage is that a single slave in the replica group feigning death (alive but unresponsive) affects writes. Asynchronous replication does not wait for confirmation from the slave and therefore performs better than synchronous replication, but uncommitted messages may be lost during a switchover (see the earlier discussion of log forking). In scenarios with three or even five replicas and high reliability requirements, asynchronous replication cannot be used. Yet with plain synchronous replication, a response is returned only after every replica confirms, which seriously hurts efficiency when there are many replicas. For the number of replicas that must confirm a message, the RocketMQ server provides several quantitative settings that can be adjusted flexibly:

• TotalReplicas: The total number of replicas.

• InSyncReplicas: Each message must be confirmed by at least this number of brokers. (This parameter does not take effect if the master is in ASYNC_MASTER or AllAck mode.)

• MinInSyncReplicas: The minimum number of in-sync replicas. If InSyncReplicas is less than MinInSyncReplicas, the request fails fast and an error is returned to the client.

• AllAckInSyncStateSet: The master confirms that persistence succeeded. When set to true, confirmation from every slave in the SyncStateSet is also required.

RocketMQ therefore allows replica sets in synchronous replication mode to degrade adaptively (parameter name: enableAutoInSyncReplicas) to handle special scenarios. Degradation happens automatically when the number of surviving replicas in a replica set decreases or when the gap between log entries grows too large, and the number of required replicas never drops below minInSyncReplicas. For example, with two replicas configured, both replicas normally run in synchronous replication mode. When the secondary node goes offline or feigns death, adaptive degradation kicks in so that the primary node can still send and receive messages normally. This feature gives users an availability-first option.
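How these settings might combine into a per-message acknowledgment count can be sketched as below. This is a simplified model under stated assumptions rather than the broker's actual logic: the function `needed_acks` and its argument names are hypothetical, counts are assumed to include the master itself, and only the "surviving replicas" trigger for degradation is modeled (not the log gap).

```python
def needed_acks(broker_role, in_sync_replicas, min_in_sync_replicas,
                all_ack_in_sync_state_set, sync_state_set_size,
                alive_replicas, enable_auto_in_sync_replicas):
    """Return how many replicas (master included) must confirm a message.

    Returns None when the requirement cannot be met, meaning "fail fast".
    """
    if broker_role == "ASYNC_MASTER":
        return 1  # only the master's own write is awaited
    if all_ack_in_sync_state_set:
        return sync_state_set_size  # every member of the SyncStateSet must confirm
    required = in_sync_replicas
    if enable_auto_in_sync_replicas:
        # Degrade toward the number of surviving replicas, but never below the floor.
        required = max(min(required, alive_replicas), min_in_sync_replicas)
    if required > alive_replicas:
        return None  # not enough live replicas to satisfy the requirement
    return required
```

For the two-replica example, `in_sync_replicas=2` with `min_in_sync_replicas=1` yields 2 while both replicas are alive and 1 after the secondary drops out when degradation is enabled. With degradation disabled, the same failure produces a fast failure instead.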

In RocketMQ v4.x, a broker serializes all of its topics every 30 seconds and sends them to the NameServer for registration and keep-alive. Because the topic metadata on a broker is large, this incurs significant network traffic, so the registration interval cannot be made too short. At the same time, the NameServer applies a delayed isolation mechanism to brokers, so that NameServer network jitter does not instantly remove the registration information of all brokers and trigger an avalanche. By default, an abnormal master is replaced only after it has been down for more than 2 minutes, or when a slave is switched to master and re-registers. This fault tolerance design also makes broker failover slow. RocketMQ v5.0 introduces a lightweight heartbeat (parameter: liteHeartBeat) that logically separates broker registration from the heartbeat sent to the NameServer and reduces the heartbeat interval to 1 second. If the NameServer receives no heartbeat from a broker within a configurable timeout of 5 seconds, the broker is removed. This shortens self-healing in abnormal scenarios from minutes to seconds.
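The timeout-based removal can be illustrated with a small liveness table. This is a sketch only: `BrokerLivenessTable` and its methods are hypothetical names, time is passed in explicitly so the logic is deterministic, and the 5-second timeout is taken from the figure quoted above.

```python
class BrokerLivenessTable:
    """Tracks the last heartbeat time per broker (hypothetical NameServer-side model)."""

    def __init__(self, timeout_seconds: float = 5.0):
        self.timeout_seconds = timeout_seconds
        self._last_seen = {}

    def on_heartbeat(self, broker: str, now: float) -> None:
        """Record a lightweight heartbeat; no topic metadata is carried."""
        self._last_seen[broker] = now

    def evict_expired(self, now: float) -> list:
        """Remove and return brokers whose last heartbeat is older than the timeout."""
        expired = sorted(
            broker for broker, seen in self._last_seen.items()
            if now - seen > self.timeout_seconds
        )
        for broker in expired:
            del self._last_seen[broker]
        return expired

    def alive(self) -> list:
        """Return the brokers currently considered alive, sorted by name."""
        return sorted(self._last_seen)
```

A broker that keeps sending a heartbeat every second stays registered, while one that goes silent is dropped shortly after the timeout elapses.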

In its early stages, RocketMQ introduced a deployment architecture based on the Master-Slave mode, which provides a certain level of high availability. When the master node is under heavy load, read traffic can be redirected to the slave node. However, this mode has no master election mechanism, so message sending for the replica set is completely interrupted if the master node becomes unavailable. In addition, secondary messages such as delayed messages, transactional messages, and Pop messages may not be consumed or may be delayed. The standby machine's resource utilization is also low under normal operation, which wastes resources. To address these issues, the community proposed the BrokerContainer design, in which a single process runs multiple brokers. The design is similar to Flink's slot concept: the brokers run as containers inside one process and share transport layer connections, service thread pools, and other resources, so a single node can carry multiple traffic loads through master-slave cross-deployment. This design has no external dependencies and has strong self-healing capabilities. However, isolation in this mode is weaker than in native container mode, and the self-healing process becomes more complex because of the added architectural complexity.
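Master-slave cross-deployment can be pictured with a simple placement function. The ring-style layout below (master of group i on container i, its slave on the next container) is an assumption chosen for illustration, and `cross_deploy` is a hypothetical helper, not part of RocketMQ.

```python
def cross_deploy(num_containers: int) -> dict:
    """Place the master of group i on container i and its slave on the next container.

    Returns a mapping of container index to a list of (group, role) pairs.
    """
    if num_containers < 2:
        raise ValueError("cross-deployment needs at least two containers")
    layout = {container: [] for container in range(num_containers)}
    for group in range(num_containers):
        layout[group].append((f"group-{group}", "MASTER"))
        # The slave lives on a different container, wrapping around at the end.
        layout[(group + 1) % num_containers].append((f"group-{group}", "SLAVE"))
    return layout
```

Every container then serves one master and one slave, so no machine sits idle as a pure standby, and the two replicas of a group never share a container.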

Another evolution path involves switching architectures. RocketMQ first tried managing high availability state through Zookeeper's distributed lock and notification mechanism. Introducing this external dependency added complexity to the architecture, made deployment in small environments difficult, and increased deployment, operation, maintenance, and diagnostic costs. Alternatively, a master in the cluster can be elected automatically based on Raft. The replica identity in Raft is exposed and reused at the broker role level, which eliminates external dependencies. However, the strongly consistent Raft version does not support flexible degradation policies and cannot be adjusted dynamically between consistency and availability. Both switching schemes are CP designs that prioritize consistency at the expense of availability. When the primary replica goes offline, the master election policy and the scheduled route update policy still result in a long failover time. Raft's requirement for three replicas also creates significant cost pressure. Finally, certain techniques for avoiding or reducing I/O pressure, such as the native RocketMQ TransientPool and zero-copy methods, cannot be used effectively under Raft.

The RocketMQ DLedger convergence mode is a systematic solution that combines the preceding two routes in the evolution of RocketMQ 5.0. The core features are as follows:

The following table compares several implementations:

| Architecture | Mode | Advantage | Disadvantage |
| --- | --- | --- | --- |
| No switching Architecture | Master-Slave Mode | It is simple to implement, suitable for small and medium-sized users, and has strong human intervention control. | Cold backup wastes resources. Manual intervention is required to cope with faults. Messages cannot be written to the faulty replica set, so the consumption of some secondary messages is suspended, rendering high O&M costs. |
| No switching Architecture | Broker Container Mode | It is unnecessary to select a master. It has no external dependencies, and has strong self-healing capabilities. The failover time is reduced from about 30 seconds to less than 3 seconds. | Because data is replicated from the master to the slave in one direction, the mode increases the O&M complexity, making the self-healing process in secondary message scenarios complex. Therefore, it requires long-term production inspection. |
| Switching Architecture | Use Raft to implement | Automatic master-slave switchover. | The failover time is long. Raft with strong consistency does not support flexible degradation and cannot make flexible adjustments between C and A. The cost pressure of three replicas is high. Replication links do not effectively present the benefits of native storage. |
| Convergence Architecture | Use DLedger Controller to implement | A set of code supports both switchless and switching architectures, and the two modes can be switched. The replication protocol is significantly simplified and can achieve flexible degradation compared to other protocols. | This increases the complexity of NameServer deployment and still requires large-scale production environment verification. |

1. The replica storage structure abstraction differs in terms of minimum granularity, and each of the three designs has its own advantages.

2. Complex parameter configurations are consolidated on the server. Both Kafka and RocketMQ support flexible configuration of the ACK for a single message, which is a trade-off between write flexibility and reliability. As RocketMQ evolves toward cloud native, it aims to simplify the client API and configuration so that the business side only needs to care about the message itself. RocketMQ therefore chooses to configure this value on the server.

3. Replica data is synchronized in different ways. Pulsar uses star-type writing: data is written directly from the writer to multiple BookKeeper nodes (bookies). This suits scenarios where clients and storage nodes are co-located: the data path requires only 1 hop, so latency is lower. The disadvantage is that when storage and compute are separated, star-type writing needs more network bandwidth between the storage cluster and the compute cluster. RocketMQ and Kafka use Y-type writing: a client writes data to the primary replica, which then forwards the data to the other broker replicas. Although the internal bandwidth of the server side is abundant, the path requires 2 network hops, which increases latency. Y-type writing helps with the problem of multiple clients writing to the same file and, by using 2-3 writes to mask glitches, provides more stable latency.
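The latency effect of the two write paths can be illustrated with a toy model. Everything here is an assumption made for the sketch: the function names are hypothetical, the inputs are round-trip times in milliseconds, and disk time and bandwidth are ignored.

```python
def star_write_latency(client_to_replica_ms, acks_needed):
    """Star-type: the client writes to all replicas in parallel (1 hop).

    The write completes when the acks_needed fastest replicas have replied.
    """
    return sorted(client_to_replica_ms)[acks_needed - 1]


def y_write_latency(client_to_primary_ms, primary_to_follower_ms, follower_acks_needed):
    """Y-type: the client writes to the primary (hop 1), which forwards to followers (hop 2).

    The write completes when follower_acks_needed followers have confirmed to the primary.
    """
    if follower_acks_needed == 0:
        return client_to_primary_ms
    fastest = sorted(primary_to_follower_ms)
    return client_to_primary_ms + fastest[follower_acks_needed - 1]
```

Suppose the client is 2 ms from the primary and the two followers are 1 ms and 50 ms away, the second one suffering a glitch. Waiting for both followers costs 52 ms in this model. A 2-3 write waits only for the faster follower and completes in 3 ms, which is how the glitch is masked.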

If you carefully read the source code of RocketMQ, you will find that RocketMQ pays great attention to handling various complex issues. These issues include node failures, network instabilities, replica consistency, persistence, availability, and latency. RocketMQ has accumulated these competencies over the years through its experience in production environments. As distributed technologies continue to evolve, new and intriguing technologies such as replication protocols based on RDMA networks are emerging. RocketMQ aims to collaborate with the community in order to evolve into a unified platform that integrates "messages, events, and streams".

1. Paxos design

https://lamport.azurewebsites.net/pubs/paxos-simple.pdf

2. SOFA-JRaft

https://github.com/sofastack/sofa-jraft

3. Pulsar Geo Replication

https://pulsar.apache.org/zh-CN/docs/next/concepts-replication

4. Pulsar Metadata

https://pulsar.apache.org/zh-CN/docs/next/administration-metadata-store

5. Kafka Persistence

https://kafka.apache.org/documentation/#persistence

6. Kafka Balancing leadership

https://kafka.apache.org/documentation/#basic_ops_leader_balancing

7. A Highly Available Cloud Storage Service with Strong Consistency

https://azure.microsoft.com/en-us/blog/sosp-paper-windows-azure-storage-a-highly-available-cloud-storage-service-with-strong-consistency/

8. PolarDB Serverless: A Cloud Native Database for Disaggregated Data Centers

https://www.cs.utah.edu/~lifeifei/papers/polardbserverless-sigmod21.pdf
