By Qingshan Lin (Longji)

The development of message middleware has spanned over 30 years, from the emergence of the first generation of open-source message queues to the explosive growth of PC Internet, mobile Internet, and now IoT, cloud computing, and cloud-native technologies.

As digital transformation deepens, customers often encounter cross-scenario applications when using message technology, such as processing IoT messages and microservice messages simultaneously, and performing application integration, data integration, and real-time analysis. This requires enterprises to maintain multiple message systems, resulting in higher resource costs and learning costs.

In 2022, RocketMQ 5.0 was officially released, featuring a more cloud-native architecture that covers more business scenarios compared to RocketMQ 4.0. To master the latest version of RocketMQ, you need a more systematic and in-depth understanding.

Today, Qingshan Lin, who is in charge of Alibaba Cloud's messaging product line and an Apache RocketMQ PMC Member, will provide an in-depth analysis of RocketMQ 5.0's core principles and share best practices in different scenarios.

This course covers the advanced message features of RocketMQ 5.0, still focusing on business messaging scenarios. As mentioned in the RocketMQ 5.0 overview, RocketMQ can handle complex business messaging scenarios. In this course, we'll explore how RocketMQ solves complex business scenarios from a feature perspective.

In the first part, we'll learn about RocketMQ's consistency features, which are crucial for trading and finance. In the second part, we'll examine RocketMQ's features, such as SQL subscription and scheduled messages, from the perspective of large-scale and complex businesses. In the third part, we'll analyze RocketMQ from a high availability perspective, focusing on the high-level availability requirements of large companies, including zone-disaster recovery and active geo-redundancy.

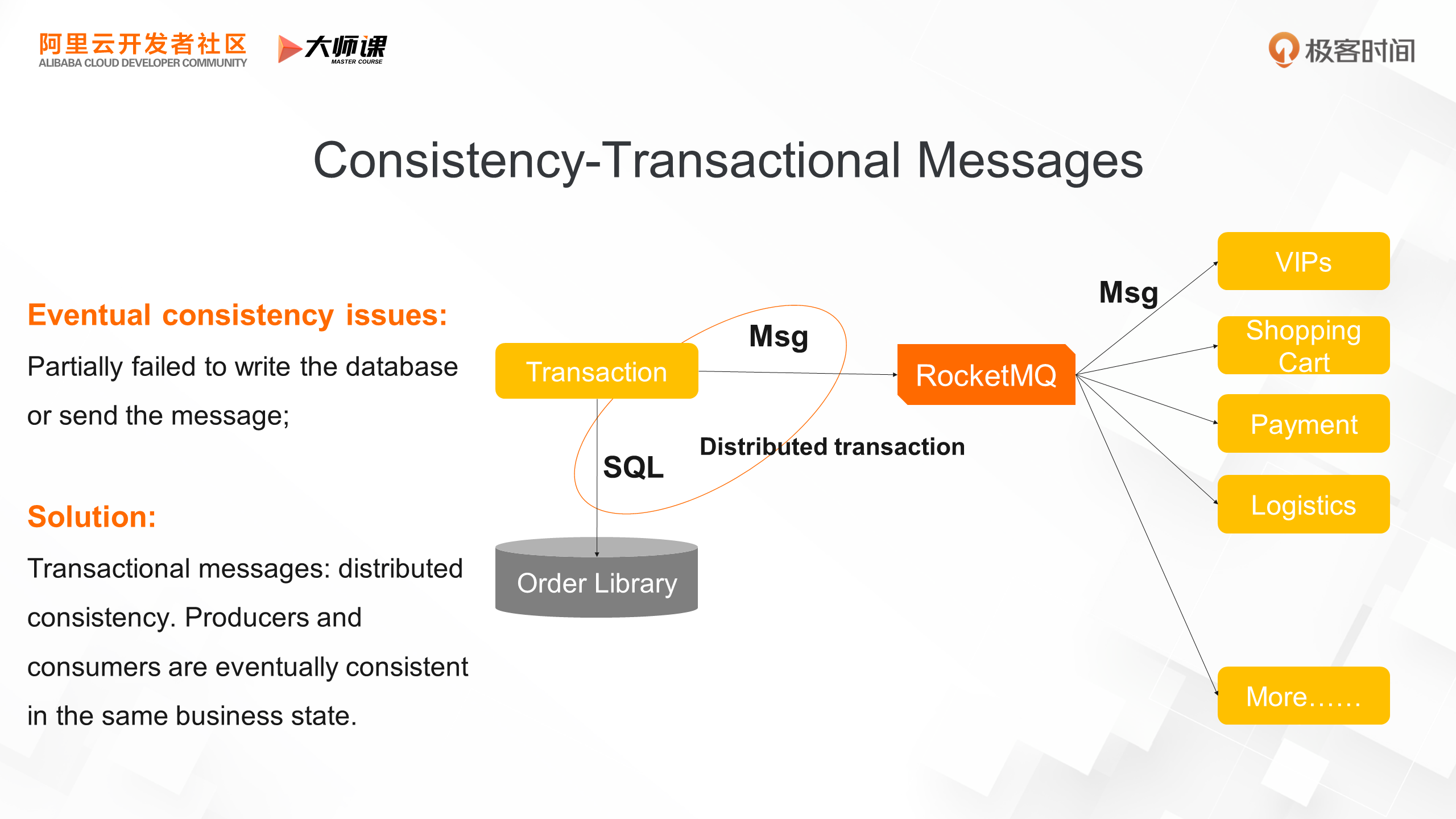

Let's start with the first feature of RocketMQ: transactional messages, which are closely tied to consistency and set RocketMQ apart from other message queues. We'll continue to use the example of a large-scale e-commerce system. As shown in the figure, when a payment succeeds, the transaction system updates the order status to Paid in the order database and then sends a message to RocketMQ. RocketMQ notifies all downstream applications that the order has been paid so that subsequent order fulfillment can proceed.

However, there's a problem with this process: the transaction system writes to the database and sends the message separately, which is not a transaction. This can lead to various exceptions, such as the database write being successful but the message failing to send. In this case, the downstream application won't receive the order status, and for e-commerce businesses, this can result in a large number of users paying but not receiving their goods. Another scenario is that the message is sent successfully first, but the database write fails, causing the downstream application to think the order has been paid and prompting the seller to ship the goods, even though the user hasn't paid successfully. These exceptions can lead to a large amount of dirty data and have disastrous business consequences.

To address this, we need RocketMQ's advanced feature: transactional messages. Transactional messages keep the producer's local transaction (such as a database write) consistent with the message send: either both take effect or neither does. Then, through the Broker's at-least-once consumption semantics, the consumer's local transaction is also guaranteed to execute successfully in the end. Ultimately, the transaction status of producers and consumers for the same business will be consistent.

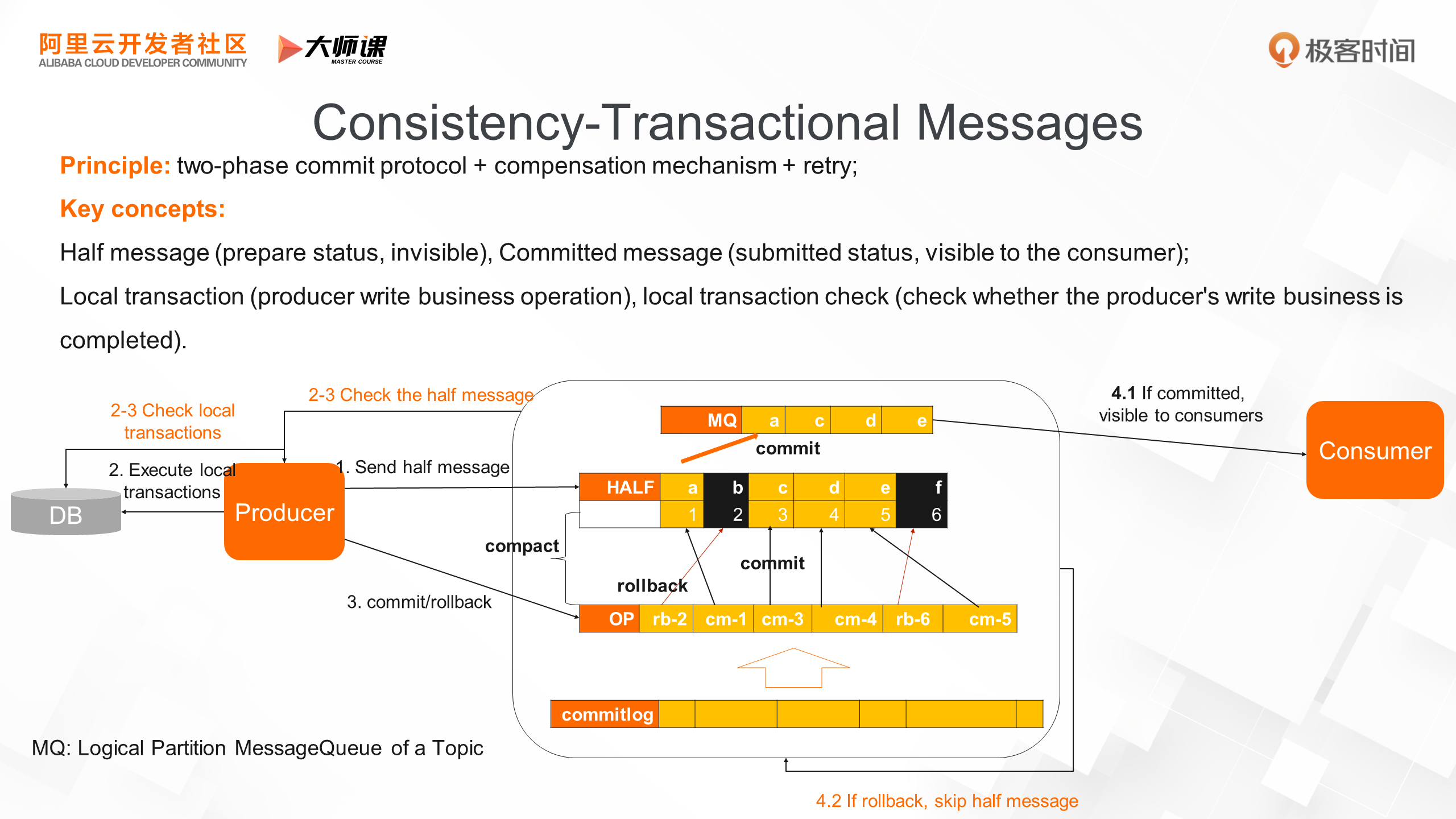

As shown in the following figure, transactional messages are implemented with a two-phase commit plus a transaction compensation mechanism.

First, the producer sends a half message, also known as a prepare message, which is stored in the Broker's queue. Next, the producer executes a local transaction, typically writing to the database. After the local transaction is complete, the producer sends a commit operation to RocketMQ. RocketMQ writes the commit operation to the OP queue, compacts it, and writes the committed message to the ConsumeQueue, making it visible to the consumer. Conversely, if a rollback operation is performed, the corresponding half message is skipped. In case of exceptions, such as the producer going down before sending a commit or rollback, the RocketMQ Broker has a compensation check mechanism that periodically checks the transaction status of the producer and continues to advance the transaction.
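
The flow above can be sketched with a toy in-memory broker. This is only an illustration under stated assumptions: `ToyBroker`, `TxState`, and their methods are hypothetical names, the queues are plain Python containers rather than RocketMQ's storage files, and compaction of the OP queue is not modeled.

```python
from dataclasses import dataclass, field
from enum import Enum


class TxState(Enum):
    COMMIT = "commit"
    ROLLBACK = "rollback"
    UNKNOWN = "unknown"


@dataclass
class ToyBroker:
    """In-memory stand-in for a broker; not RocketMQ's real storage layout."""
    half_queue: dict = field(default_factory=dict)     # msg_id -> body, hidden from consumers
    op_log: list = field(default_factory=list)         # append-only record of commit/rollback ops
    consume_queue: list = field(default_factory=list)  # messages visible to consumers

    def send_half(self, msg_id, body):
        """Phase 1: store the half (prepare) message without exposing it."""
        self.half_queue[msg_id] = body

    def end_transaction(self, msg_id, state):
        """Phase 2: record the outcome and publish or drop the half message."""
        if state is TxState.UNKNOWN or msg_id not in self.half_queue:
            return  # still undecided, or already resolved
        self.op_log.append((msg_id, state))
        body = self.half_queue.pop(msg_id)
        if state is TxState.COMMIT:
            self.consume_queue.append(body)
        # On ROLLBACK the half message is simply skipped.

    def check_pending(self, checker):
        """Compensation: ask the producer's checker about unresolved half messages."""
        for msg_id in list(self.half_queue):
            self.end_transaction(msg_id, checker(msg_id))


broker = ToyBroker()
paid_orders = {"order-1", "order-2"}  # outcome of the local transactions

broker.send_half("order-1", "order-1 paid")
broker.end_transaction("order-1", TxState.COMMIT)  # normal path
broker.send_half("order-2", "order-2 paid")        # producer crashes before committing
broker.send_half("order-3", "order-3 paid")        # local transaction failed, then crash

# The periodic check resolves the two messages that were left pending.
broker.check_pending(
    lambda msg_id: TxState.COMMIT if msg_id in paid_orders else TxState.ROLLBACK
)
print(broker.consume_queue)
```

In this sketch the consumer-visible queue only ever receives messages whose local transaction is known to have succeeded, which is the property the two phases and the check are meant to provide.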

Whether it is a Prepare message, a Commit/Rollback message, or a compact phase, the storage layer adheres to RocketMQ's design concept of sequential read/write, achieving optimal throughput.

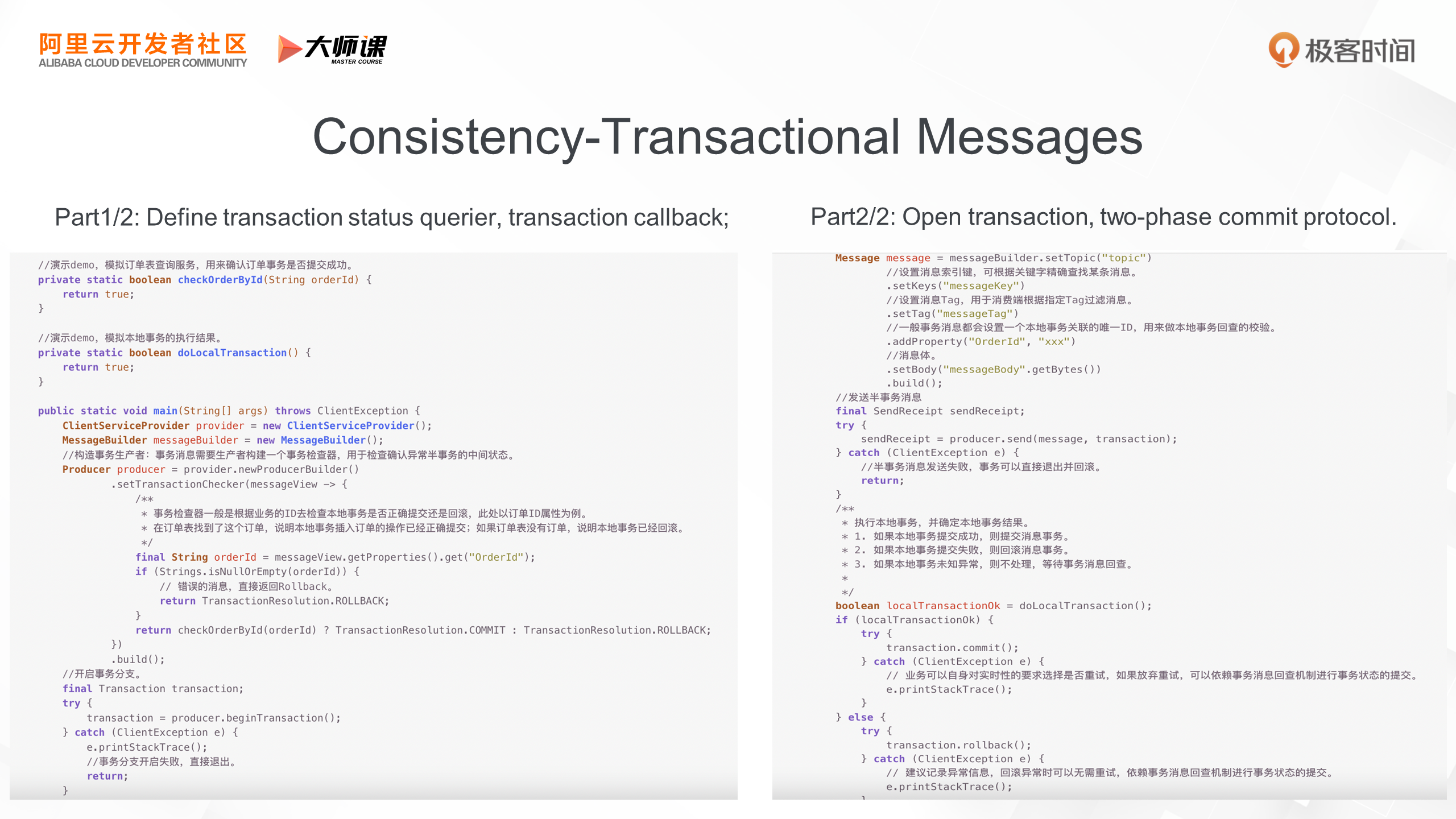

Next, let's look at a simple example of a transactional message.

Using transactional messages requires implementing a transaction checker, which is the main difference from ordinary messages. For a trading system, for example, the transaction checker might query the database by order ID to determine the status of the order, such as whether it has been created, paid, or refunded. The message production process also differs in several ways. You need to start a distributed transaction, send a prepare message, and then execute a local transaction, which typically involves database operations. If the local transaction succeeds, the entire transaction is committed and the earlier prepare message is confirmed, which allows consumers to consume the message. If an exception occurs during the local transaction, the entire transaction is rolled back, the earlier prepare message is canceled, and the whole process is reverted. The changes required by transactional messages are mainly in the producer code; consumers are used in the same way as with ordinary messages, so consumer code is not included in the demo.
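
As a rough illustration of the producer-side steps just described, the following sketch walks through prepare, local transaction, and commit or rollback against an in-memory order table. It does not use the RocketMQ client: `HalfMessageStore`, `check_order`, and `pay_order` are hypothetical names, and a Python dict stands in for the order database.

```python
class HalfMessageStore:
    """Hypothetical stand-in for the broker side of a transactional send."""

    def __init__(self):
        self.pending = {}   # prepare messages that consumers cannot see yet
        self.visible = []   # committed messages that consumers can read

    def prepare(self, key, body):
        self.pending[key] = body

    def commit(self, key):
        self.visible.append(self.pending.pop(key))

    def rollback(self, key):
        self.pending.pop(key, None)


def check_order(order_db, order_id):
    """Transaction checker: derive the outcome from the order table by order ID."""
    if order_db.get(order_id) is None:
        return "ROLLBACK"  # the local transaction never wrote the order
    return "COMMIT"


def pay_order(store, order_db, order_id, fail_local=False):
    """Producer flow: prepare message, local transaction, then commit or rollback."""
    store.prepare(order_id, f"{order_id}:PAID")  # 1. send the prepare message
    try:
        if fail_local:
            raise RuntimeError("database write failed")
        order_db[order_id] = "PAID"              # 2. execute the local transaction
    except RuntimeError:
        store.rollback(order_id)                 # 3b. cancel the prepare message
        return False
    store.commit(order_id)                       # 3a. confirm the prepare message
    return True


store, order_db = HalfMessageStore(), {}
pay_order(store, order_db, "order-1")
pay_order(store, order_db, "order-2", fail_local=True)
print(store.visible, check_order(order_db, "order-2"))
```

The checker only matters when step 3 never reaches the broker; it answers from the same order table the local transaction writes to, so both sides converge on one outcome.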

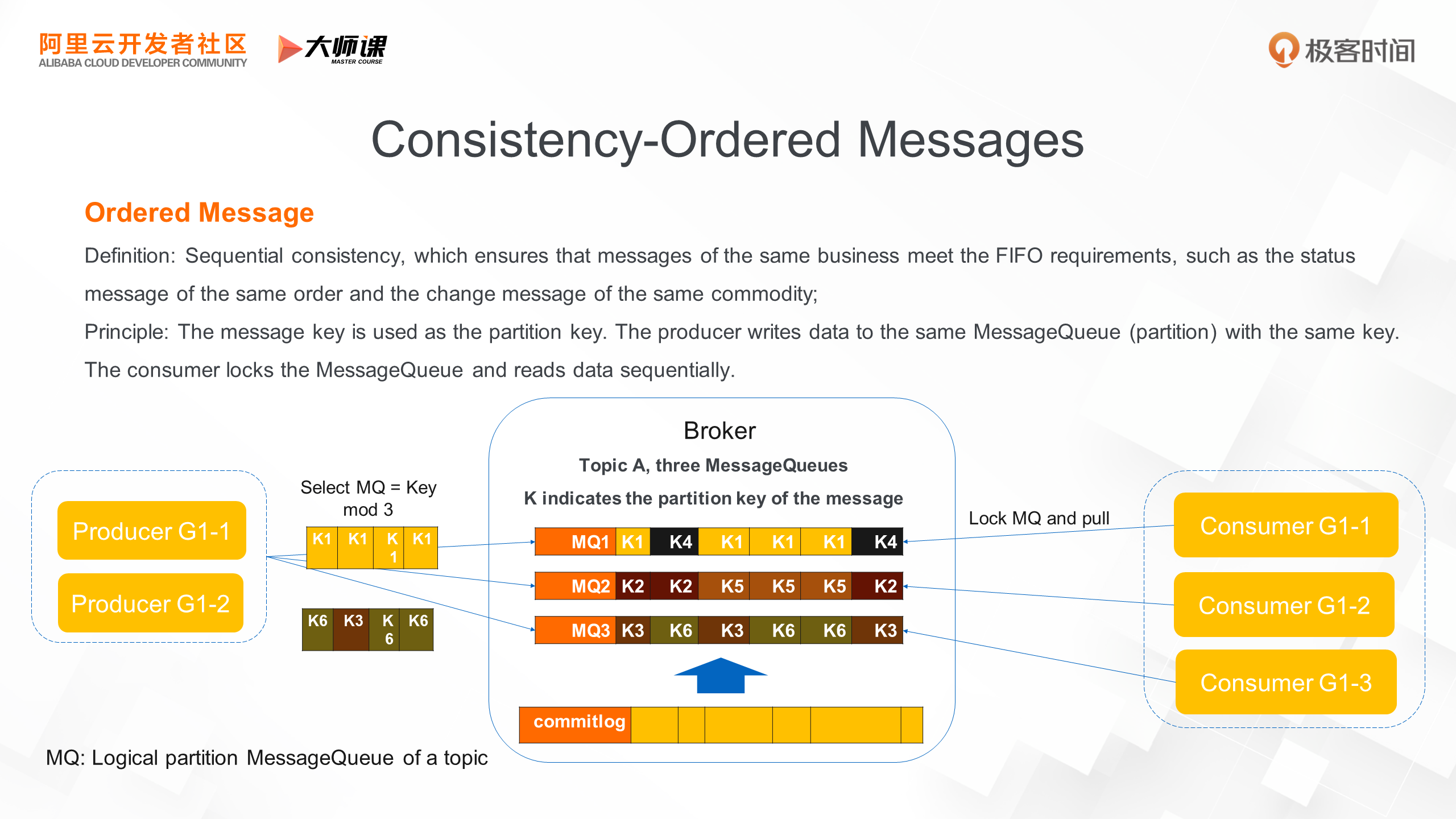

The second advanced feature is ordered messages, another distinguishing feature of RocketMQ. It solves the problem of order consistency by ensuring that messages of the same business are consumed in the same order in which they were produced. At Alibaba, one such scenario was replicating the buyer database. Because Alibaba's order database is sharded, it is split into a buyer database keyed by the buyer's primary key and a seller database keyed by the seller's primary key to serve different business scenarios. The two databases are synchronized by distributing binary logs in order. With ordered messages, binary log changes in the buyer database are replayed in the seller database in strict order, keeping the order databases consistent. Without an ordering guarantee, dirty data may appear at the database level and lead to serious business errors.

The following figure shows the implementation principle of ordered messages, which makes full use of the natural sequential reading and writing features of logs.

In the Broker storage model, each topic has a fixed set of ConsumeQueues, which can be understood as the partitions of the topic. Producers attach a business key when sending messages; in this case, the order ID can be used. Messages with the same order ID are sent to the same topic partition in sequence. Each partition is locked by only one consumer at any given time, and that consumer reads the partition's messages in sequence and consumes them serially, which achieves sequential consistency.
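
A minimal sketch of this routing idea follows, assuming a stable hash of the business key modulo the number of partitions. The hash scheme and the names `select_queue`, `ToyTopic`, and `consume_serially` are illustrative assumptions, not RocketMQ's actual implementation.

```python
import zlib
from collections import defaultdict


def select_queue(message_group, queue_count):
    """Map a business key to a partition index with a stable hash (assumed scheme)."""
    return zlib.crc32(message_group.encode("utf-8")) % queue_count


class ToyTopic:
    """A topic modeled as a fixed list of append-only partitions."""

    def __init__(self, queue_count):
        self.queues = [[] for _ in range(queue_count)]

    def send(self, message_group, body):
        index = select_queue(message_group, len(self.queues))
        self.queues[index].append((message_group, body))
        return index


def consume_serially(queue):
    """One consumer drains one partition in order and records events per key."""
    seen = defaultdict(list)
    for message_group, body in queue:
        seen[message_group].append(body)
    return dict(seen)


topic = ToyTopic(queue_count=4)
indexes = [topic.send("order-1", event) for event in ("created", "paid", "shipped")]
topic.send("order-2", "created")

# All events of order-1 share one partition, so their order is preserved.
print(indexes, consume_serially(topic.queues[indexes[0]])["order-1"])
```

`zlib.crc32` is used here instead of Python's built-in `hash()` because string hashes are randomized per process, and routing needs a value that is stable across producers.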

Next, let's look at a simple demo of ordered messages.

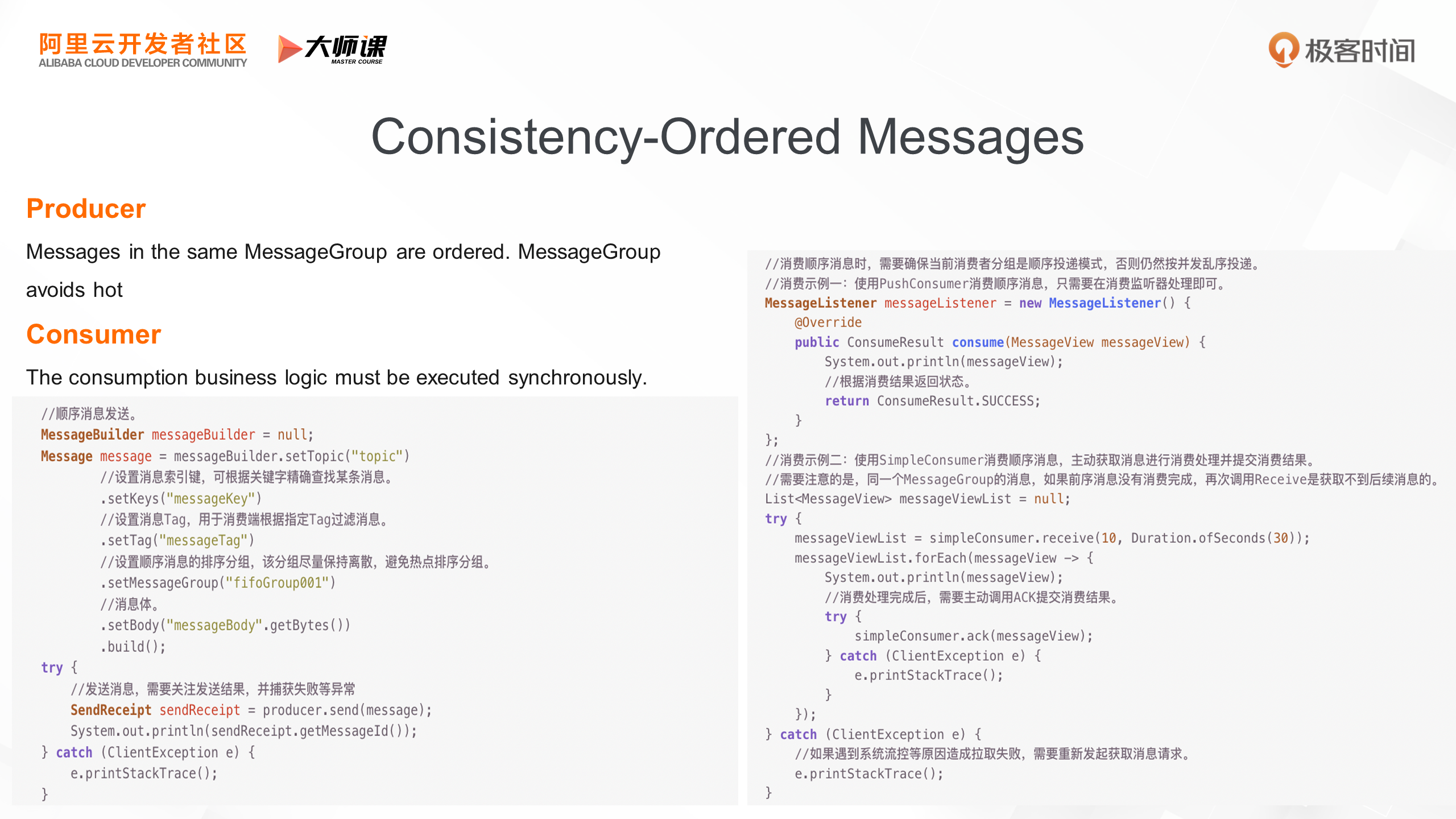

When it comes to ordered messages, both producers and consumers need to be aware of certain considerations.

In the production phase, you first define a message group. Each message can select a business ID as the message group, which should be as discrete and random as possible. Since the same business ID is assigned to the same data storage shard, production and consumption are serialized on this shard. If the business ID has a hot spot, it can cause serious data skew and local message accumulation. For example, in e-commerce transaction scenarios, order IDs are typically chosen for business message grouping because they are discrete. However, choosing seller IDs may lead to hot spots, with popular sellers experiencing much higher traffic than average sellers.
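
To make the hot-spot risk concrete, the sketch below compares how evenly messages spread over queues when grouped by order ID versus seller ID. The data is synthetic and the assumptions are loud: a hypothetical seller (`seller-hot`) owns 90% of 1,000 orders, there are 8 queues, and routing uses a CRC32 hash as a stand-in for the real scheme.

```python
import zlib


def queue_load(message_groups, queue_count):
    """Count messages per queue when each message is routed by its message group."""
    load = [0] * queue_count
    for group in message_groups:
        load[zlib.crc32(group.encode("utf-8")) % queue_count] += 1
    return load


def skew(load):
    """Busiest queue divided by the average queue; 1.0 means perfectly even."""
    return max(load) / (sum(load) / len(load))


# Synthetic workload: every order ID is unique, but one seller dominates traffic.
order_ids = [f"order-{i}" for i in range(1000)]
seller_ids = ["seller-hot"] * 900 + [f"seller-{i}" for i in range(100)]

by_order = queue_load(order_ids, 8)
by_seller = queue_load(seller_ids, 8)
print("by order ID :", by_order, round(skew(by_order), 2))
print("by seller ID:", by_seller, round(skew(by_seller), 2))
```

Because every message of the hot seller lands on one queue, that queue carries at least 900 of the 1,000 messages, while the same traffic keyed by order ID is spread across all queues.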

In the consumption phase, there are two modes similar to regular message sending and receiving: fully managed push consumer mode and semi-managed simple consumer mode. The RocketMQ SDK ensures that messages in the same group enter the business consumption logic in series. Note that your own business consumption code should also be serialized, and then return consumption success confirmation synchronously. Do not put messages in the same group into another thread pool for concurrent consumption, as this would destroy the sequential semantics.

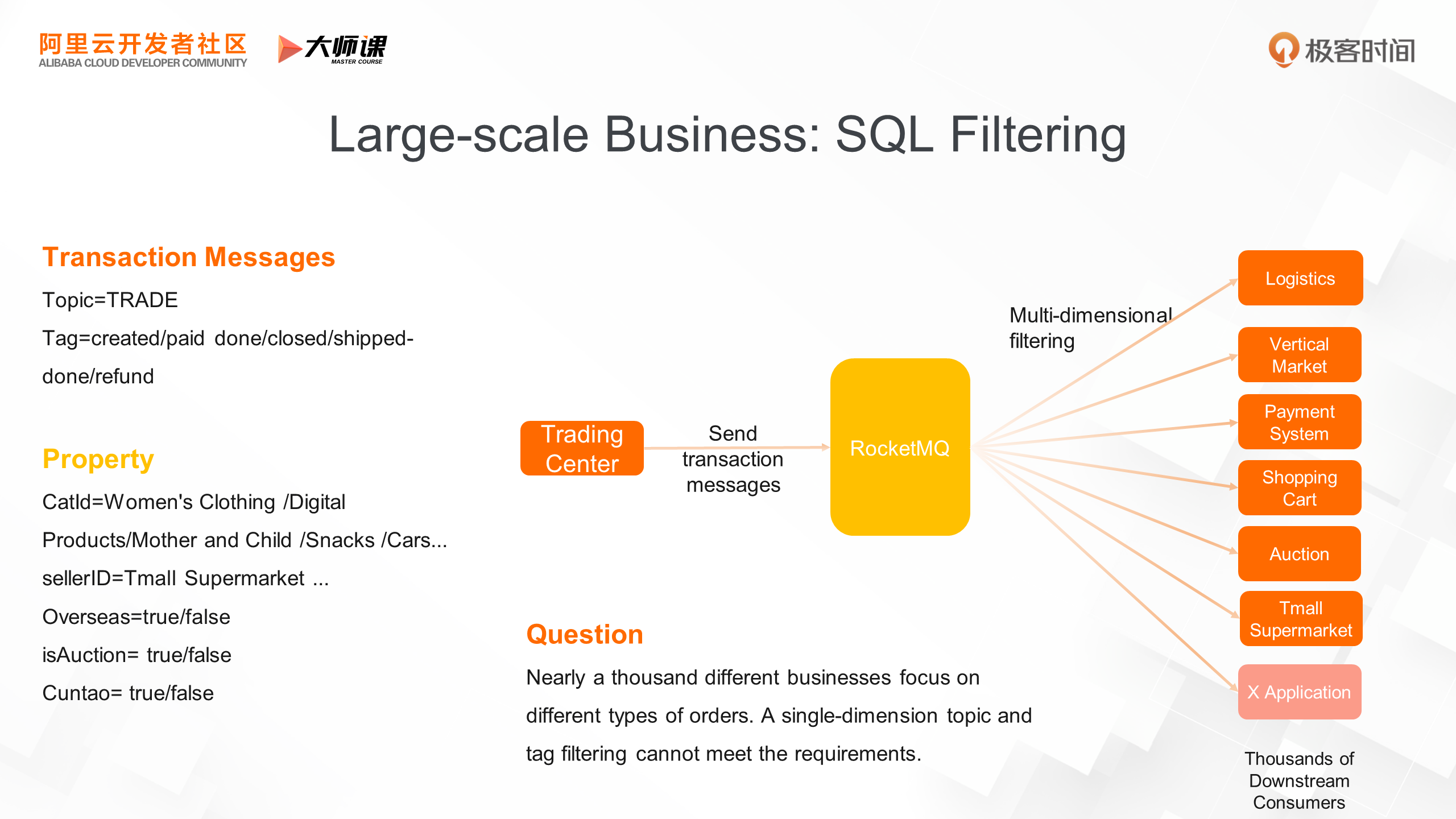

The third advanced feature is the SQL consumption mode, which is also essential in complex business scenarios. Let's revisit Alibaba's e-commerce scenario. Alibaba's entire e-commerce business revolves around transactions, with hundreds of different businesses subscribing to transaction messages. These businesses are primarily focused on specific segments and only need to process certain messages under the topic. According to traditional models, consumers typically subscribe to full transaction topics and filter them locally. However, this approach consumes a significant amount of computing and network resources, particularly during peak periods like Double 11, making it an unacceptable solution.

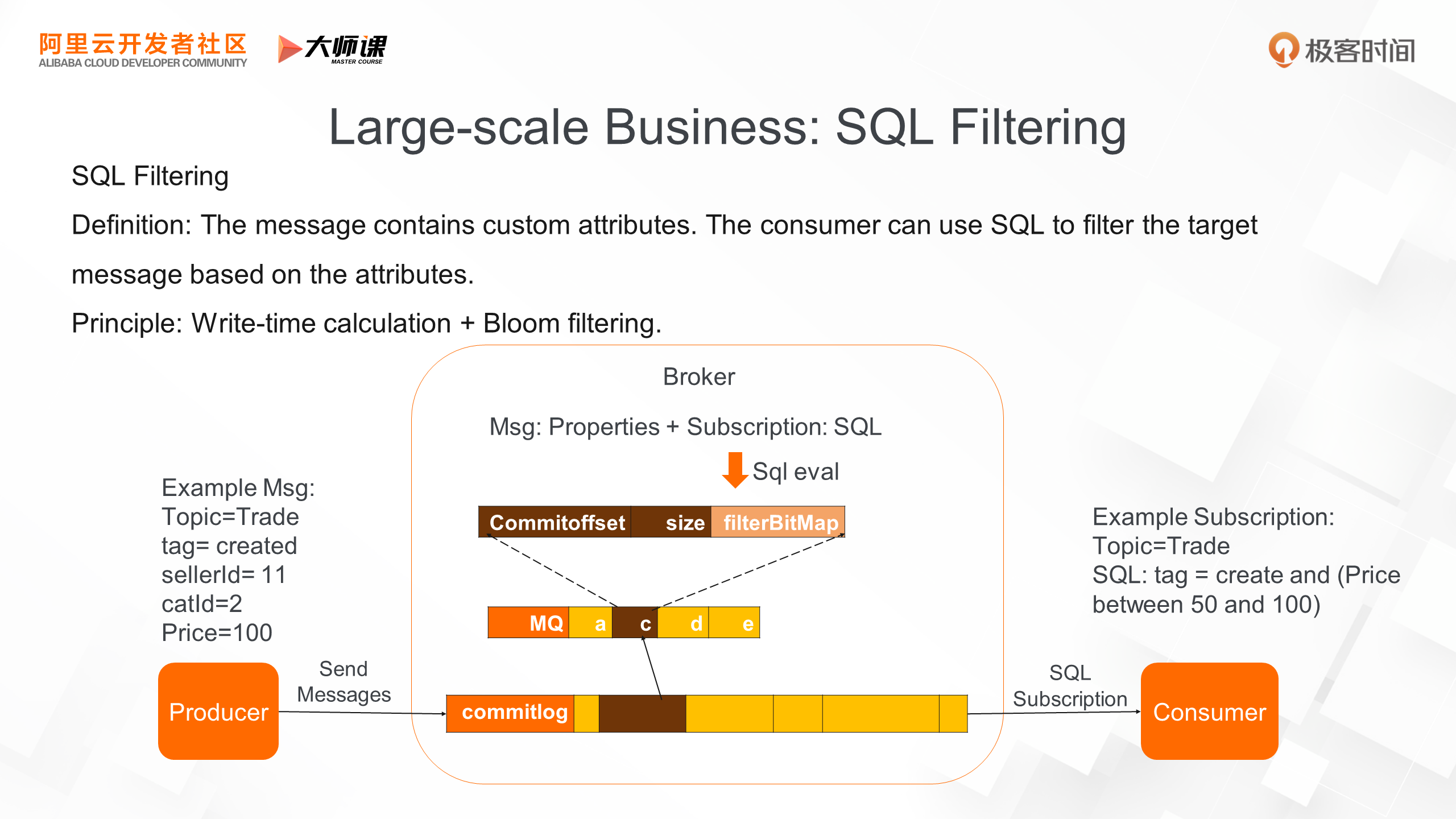

To solve this problem, RocketMQ provides an SQL consumption mode. In transaction scenarios, each order message carries business attributes in different dimensions, including seller ID, buyer ID, category, province, city, price, and order status. SQL filtering lets consumers select the messages they want with an SQL expression. As shown in the following figure, a consumer that only cares about order creation messages within a certain price range creates the subscription relationship [Topic=Trade, SQL: status= order create and (Price between 50 and 100)]. The Broker evaluates the SQL expression on the server and returns only matching messages to the consumer. To improve performance, the Broker also introduces a Bloom filter module that computes filter results in advance, when messages are written and dispatched, and writes them to bitmap filters to reduce invalid I/O. In general, filtering is moved progressively earlier in the pipeline, from local filtering on the consumer side to write-time filtering on the server side, to achieve optimal performance.

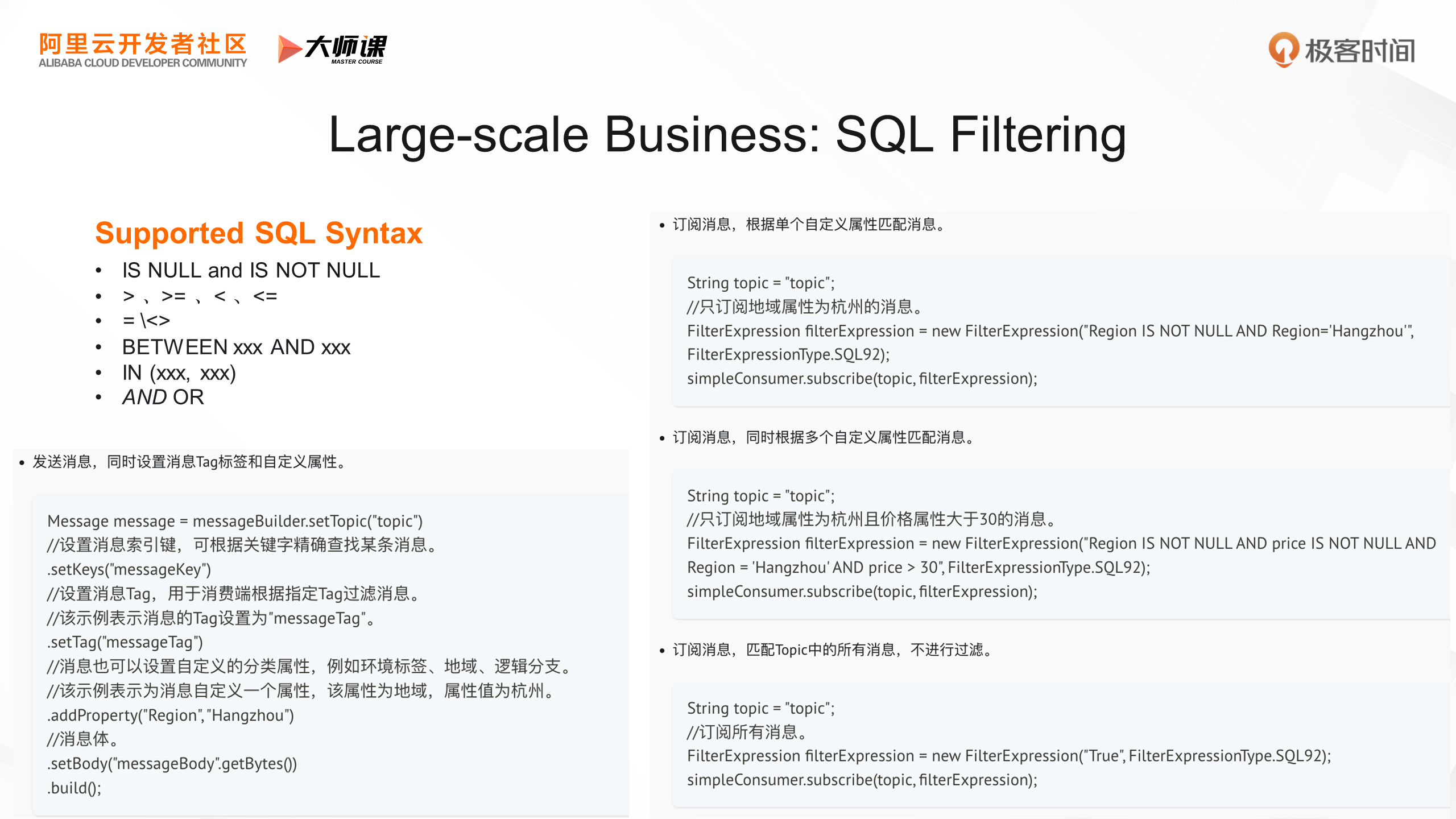

Next, let's look at an example of an SQL subscription. Currently, RocketMQ SQL filtering supports the following syntax, including attribute non-null checks, attribute value comparison, attribute range filtering, set membership checks, and logical operations, which can meet most filtering requirements.

In the message production phase, you can set custom attributes in addition to topics and tags. In this example, we set a region attribute to indicate that the message is sent from the Hangzhou region. On the consumption side, we can subscribe with an SQL filter on custom attributes. In the first case, the filter expression requires that the region field is not null and equals Hangzhou. The second case adds more conditions: for an order message, we can check both the region and the price range to decide whether to consume it. The third case is the receive-all mode, in which the expression is simply True. This subscription receives all messages under a topic without any filtering.
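
The three subscription cases can be mimicked with plain predicates over message attributes. This is a sketch of the filtering semantics only, not of the RocketMQ client or its SQL parser: the function names are hypothetical, the SQL text in the comments is an approximation of what each predicate stands for, and the price bounds are illustrative.

```python
def region_is_hangzhou(props):
    # Roughly: region IS NOT NULL AND region = 'Hangzhou'
    return props.get("region") is not None and props["region"] == "Hangzhou"


def hangzhou_in_price_range(props):
    # Roughly: region = 'Hangzhou' AND price BETWEEN 50 AND 100 (bounds are illustrative)
    price = props.get("price")
    return props.get("region") == "Hangzhou" and price is not None and 50 <= price <= 100


def accept_all(props):
    # Roughly: TRUE
    return True


def broker_side_filter(messages, predicate):
    """Return only the bodies whose custom attributes satisfy the subscription."""
    return [m["body"] for m in messages if predicate(m["properties"])]


messages = [
    {"body": "m1", "properties": {"region": "Hangzhou", "price": 80}},
    {"body": "m2", "properties": {"region": "Hangzhou", "price": 300}},
    {"body": "m3", "properties": {"region": "Beijing", "price": 60}},
    {"body": "m4", "properties": {}},
]

for predicate in (region_is_hangzhou, hangzhou_in_price_range, accept_all):
    print(predicate.__name__, broker_side_filter(messages, predicate))
```

Running the predicate where the messages are stored, rather than in each consumer, is what saves the network and compute cost described earlier: only the matching bodies leave the filter.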

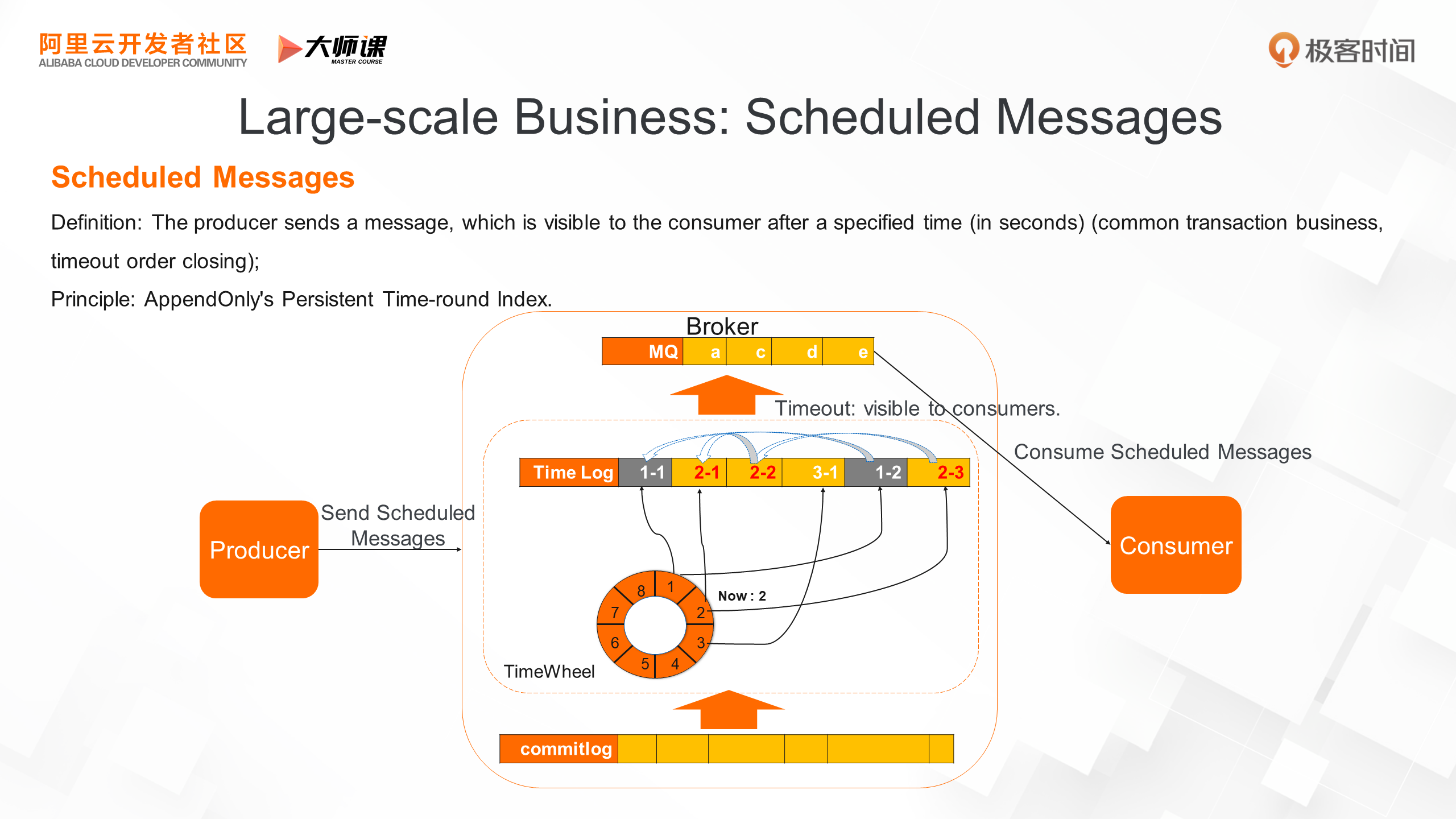

The fourth advanced feature is scheduled messages, which allow producers to specify that a message becomes visible to consumers only after a certain period of time has elapsed since it was sent. Many business scenarios require large-scale timed event triggers. For example, in a typical e-commerce scenario, an order is automatically closed if no payment is made within 30 minutes of its creation. Scheduled messages can greatly simplify this scenario.

RocketMQ's scheduled messages are implemented with a time wheel. As you may know, a time wheel simulates the rotation of a clock dial to order events by time. Each slot in the TimerWheel represents the smallest time unit, called a tick. In RocketMQ, each tick is one second, and messages due at the same time are written to the same slot. Since multiple messages may be due at the same time but are written at different times, RocketMQ also introduces the TimerLog data structure. TimerLog is written in sequential append mode, and each entry contains the physical index of the message and a pointer to the previous entry due at the same time, forming a logical linked list. Each slot of the TimerWheel maintains head and tail pointers to the linked list of messages due at that time. Like a dial, the TimerWheel has a pointer that represents the current moment and rotates around the wheel in a loop. When the pointer reaches a tick, that tick has expired, and all of its messages are dequeued together and written to the ConsumeQueue, making them visible to consumers.
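
The structure described above can be sketched as a small in-memory timer wheel. This is a simplified model under stated assumptions: `ToyTimerWheel` is a hypothetical class, each slot (grid) keeps only a pointer to its newest log entry rather than separate head and tail pointers, delays longer than one rotation are rejected, and nothing is persisted.

```python
class ToyTimerWheel:
    """Toy timer wheel with one-second ticks; not RocketMQ's on-disk format."""

    def __init__(self, slots):
        self.slots = [-1] * slots  # per slot: log position of its newest entry, -1 if empty
        self.timer_log = []        # append-only: (message, position of previous entry in the slot)
        self.now = 0               # current tick, in seconds

    def schedule(self, message, delay_seconds):
        if not 0 < delay_seconds < len(self.slots):
            raise ValueError("delay must fit within one rotation of the wheel")
        slot = (self.now + delay_seconds) % len(self.slots)
        # Link the new entry to the previous entry of the same slot.
        self.timer_log.append((message, self.slots[slot]))
        self.slots[slot] = len(self.timer_log) - 1

    def tick(self):
        """Advance one second and return the messages that became due, oldest first."""
        self.now += 1
        slot = self.now % len(self.slots)
        position, due = self.slots[slot], []
        while position != -1:  # walk the linked list backwards through the log
            message, position = self.timer_log[position]
            due.append(message)
        self.slots[slot] = -1
        return due[::-1]


wheel = ToyTimerWheel(slots=60)
wheel.schedule("close order-1", 3)
wheel.schedule("close order-2", 3)
wheel.schedule("close order-3", 5)
for _ in range(5):
    print(wheel.now + 1, wheel.tick())
```

Scheduling is a single append plus one pointer update, regardless of how many messages are already waiting, which is why this layout fits a log-structured store.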

Currently, the performance of scheduled messages in RocketMQ far exceeds that in RabbitMQ and ActiveMQ.

Next, let's discuss RocketMQ's global high-availability technical solution.

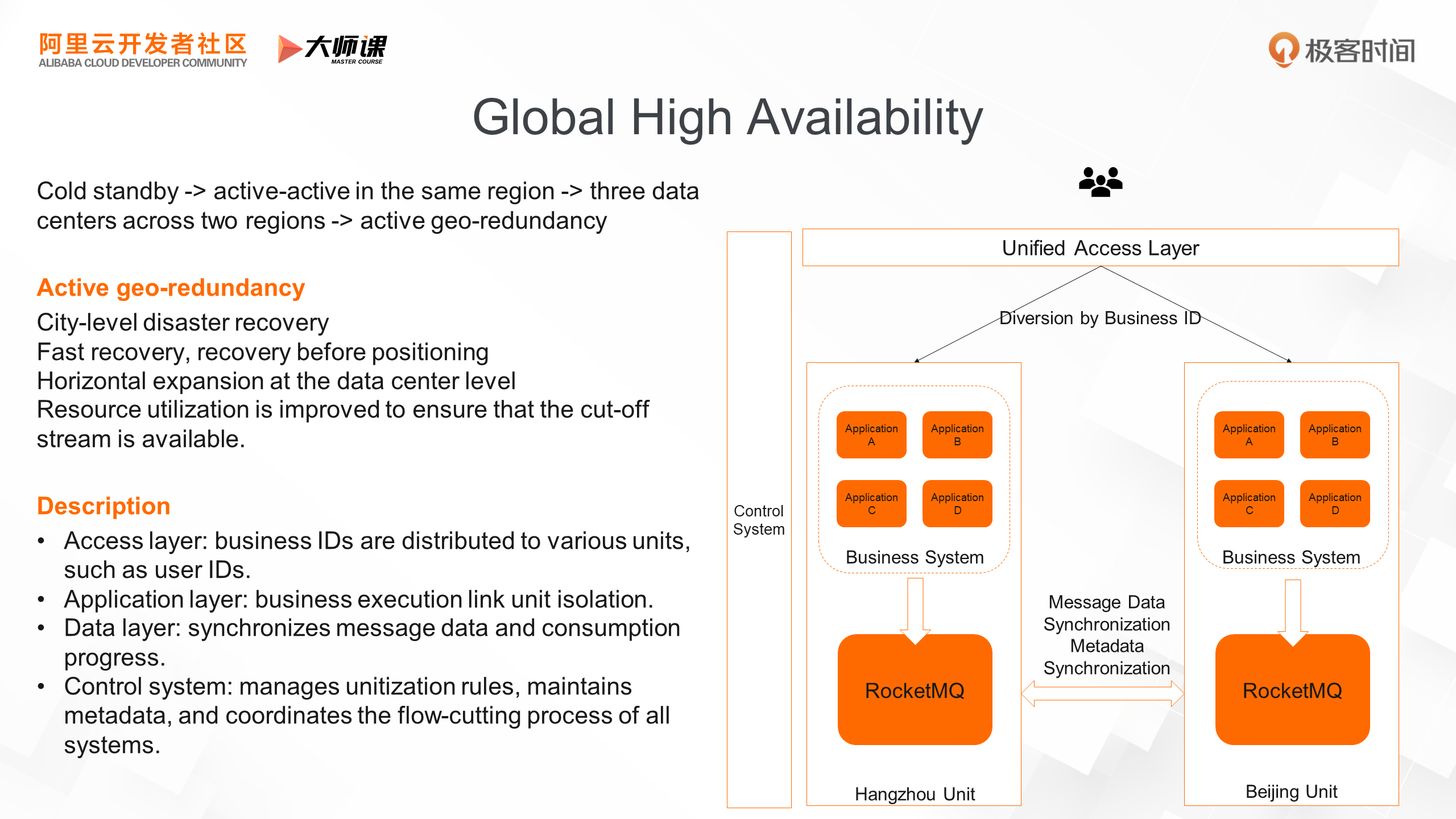

In our previous message basics course, we covered RocketMQ's high-availability architecture, which mainly refers to multiple copies of data and high-availability services within RocketMQ clusters. The high availability we're discussing today is global, which is often referred to in the industry as zone-disaster recovery, three centers in two regions, and active geo-redundancy.

Compared to cold standby, active-active in the same region, and the three data centers across two regions model, active geo-redundancy has more advantages. It can handle city-level disasters, such as earthquakes and power outages. In addition, human operations, such as a change to a basic system that introduces a new bug, can make an entire data center unavailable. With an active geo-redundancy architecture, you can switch traffic directly to an available data center to restore business continuity first, and then locate the specific problem.

Moreover, active geo-redundancy can also realize data center-level expansion. The computing and storage resources of a single data center are limited, and active geo-redundancy architecture can distribute business traffic proportionally across data centers. At the same time, the multi-active architecture ensures that all data centers are providing business services instead of being cold standbys, significantly improving resource utilization. Due to the multi-active state, availability is more guaranteed, and we can have more confidence in the face of extreme scenarios.

In the active geo-redundancy architecture, RocketMQ is responsible for the multi-active capability of the infrastructure. The multi-active architecture is divided into several modules:

• The first is the access layer. Through the unified access layer, user requests are distributed to multiple data centers according to the service ID. The service ID can generally use the user ID.

• The second is the application layer, which is generally stateless. When a request enters a data center, the entire link of the request must stay within that unit, including RPC calls, database access, and message reads and writes. This reduces access latency and ensures that system performance does not deteriorate because of the multi-active architecture.

• Further down is the data layer, which includes databases, message queues, and other stateful systems. Here, let's focus on the active geo-redundancy of RocketMQ. RocketMQ uses the connector component to synchronize message data in real time based on topics and synchronize consumption status in real time based on consumers and topics.

• Finally, a global control layer is required. The control layer must maintain the global unit rules like which traffic goes to which data centers. Meanwhile, it also manages the configuration of multi-active metadata, such as which applications or topics need to be multi-active. In addition, at the time of switching, this layer coordinates the switching process of all systems and controls the switching sequence.
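
The interaction between the access layer and the control layer can be illustrated with a small routing sketch. Everything here is a hypothetical simplification: `UnitRouter`, the data center names `dc-a` and `dc-b`, the 100-bucket hashing of user IDs, and the weight-based rule are assumptions made for the example, not the rules used in production.

```python
import zlib


class UnitRouter:
    """Hypothetical unit rule: map a user ID to a data center by traffic weight."""

    def __init__(self, weights):
        self.weights = dict(weights)  # data center -> share of 100 traffic buckets

    def route(self, user_id):
        """Access layer: a given user ID maps to one data center under the current rules."""
        bucket = zlib.crc32(user_id.encode("utf-8")) % 100
        upper = 0
        for center, weight in sorted(self.weights.items()):
            upper += weight
            if bucket < upper:
                return center
        raise RuntimeError("weights must sum to 100")

    def switch_away_from(self, failed):
        """Control layer: redistribute the failed data center's share to the others."""
        share = self.weights.pop(failed)
        survivors = sorted(self.weights)
        if not survivors:
            raise RuntimeError("no data center left to take the traffic")
        for i, center in enumerate(survivors):
            extra = share // len(survivors) + (1 if i < share % len(survivors) else 0)
            self.weights[center] += extra


router = UnitRouter({"dc-a": 50, "dc-b": 50})
users = [f"user-{i}" for i in range(10)]
print({user: router.route(user) for user in users})

router.switch_away_from("dc-a")  # dc-a becomes unavailable
print({user: router.route(user) for user in users})
```

Routing by a stable hash of the user ID keeps a user's requests, and therefore the messages they produce, inside one unit until the control layer changes the rules.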

In this course, we've covered many of RocketMQ's advanced features. First, we explored the consistency features: ordered messages for sequential consistency and transactional messages for distributed business consistency.

Next, we discussed two features that enable RocketMQ to handle large-scale and complex businesses. One is SQL filtering and subscription, which meets the filtering needs of a large number of consumers in a single massive business. The other is scheduled messages, a common scenario in many Internet transaction businesses.

Finally, we learned about RocketMQ's high-level disaster recovery capabilities and explored an active geo-redundancy solution.

In the next course, we'll delve into RocketMQ's features in other business scenarios.

