By Yinyou Gu (Alibaba Cloud Middleware Developer)

RocketMQ 5.0 is a landmark release with many new features, such as the separation of storage and computing and improved batch capabilities.

When a new version comes out, we tend to think first about changes to the server architecture and overlook the design philosophy of the client. Yet the client is essential to a messaging product, and many features work better when the client and server cooperate.

The design philosophy of the RocketMQ 5.0 client rests on three ideas: lightweight, cloud-native, and a unified messaging model.

Being lightweight means keeping the client's logic and processes simple. This reduces complexity and removes obstacles to building a multi-language client ecosystem.

The preceding figure lists the differences between RocketMQ 4.x and RocketMQ 5.0.

① Version 4.x uses JsonCodecs and RocketMQCodecs for serialization, while version 5.0 uses the standard Protobuf protocol. Non-standard protocols are a major obstacle to multi-language development. For example, RocketMQ's custom serialization forces every other language to reimplement the protocol for serialization and deserialization. JSON, by contrast, is a standard format that almost every language can serialize and deserialize. However, it has clear disadvantages, such as redundant information that inflates the payload, so it is mostly used where the frontend and backend interact (for example, in RESTful architectures). Message middleware does not need data to be human-readable in transit, which makes Protobuf a natural choice: it is mature and standardized, is supported natively in many languages, and produces compact payloads.
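To make the size argument concrete, the following minimal sketch hand-encodes a message in the Protobuf wire format (base-128 varints and field keys) using only the Python standard library and compares it with the same data as JSON. The two-field message (`topic` as field 1, `queue_id` as field 2) is a hypothetical example, not RocketMQ's actual schema; real clients use code generated from `.proto` files.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a Protobuf base-128 varint."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        byte = value & 0x7F          # take the low 7 bits
        value >>= 7
        if value:
            out.append(byte | 0x80)  # more bytes follow: set the continuation bit
        else:
            out.append(byte)         # last byte: continuation bit clear
            return bytes(out)


def field_key(field_number: int, wire_type: int) -> bytes:
    """A field key is the varint of (field_number << 3) | wire_type."""
    return encode_varint((field_number << 3) | wire_type)


def encode_message(topic: str, queue_id: int) -> bytes:
    """Hand-encode a hypothetical message: field 1 = topic, field 2 = queue_id."""
    topic_bytes = topic.encode("utf-8")
    return (
        field_key(1, 2) + encode_varint(len(topic_bytes)) + topic_bytes  # wire type 2: length-delimited
        + field_key(2, 0) + encode_varint(queue_id)                      # wire type 0: varint
    )


if __name__ == "__main__":
    binary = encode_message("OrderTopic", 300)
    text = json.dumps({"topic": "OrderTopic", "queue_id": 300}).encode("utf-8")
    print(len(binary), len(text))  # 15 40
```

Most of the savings come from replacing JSON's repeated field names with one-byte field keys and from encoding small integers in only as many bytes as they need.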

② Previously, consuming messages required computing logic on the client, such as rebalancing and processing system-level topics. In RocketMQ 5.0, all of this logic has moved to the server. The client only needs to call the Receive interface and no longer handles system-level topics, so its overall logic becomes very lightweight.

③ The 4.x client is costly to implement and maintain, so the 5.0 client was not built on top of it. One early design idea was to add the gRPC protocol directly to the 4.x client and iteratively upgrade it to 5.0. However, that would have carried the old burden forward and violated the lightweight principle, so the idea was rejected.

The RocketMQ 5.0 client reflects three cloud capabilities: elasticity, high availability, and interactive O&M. These correspond to extreme auto-scaling, low coupling, and integration between the cloud and the client.

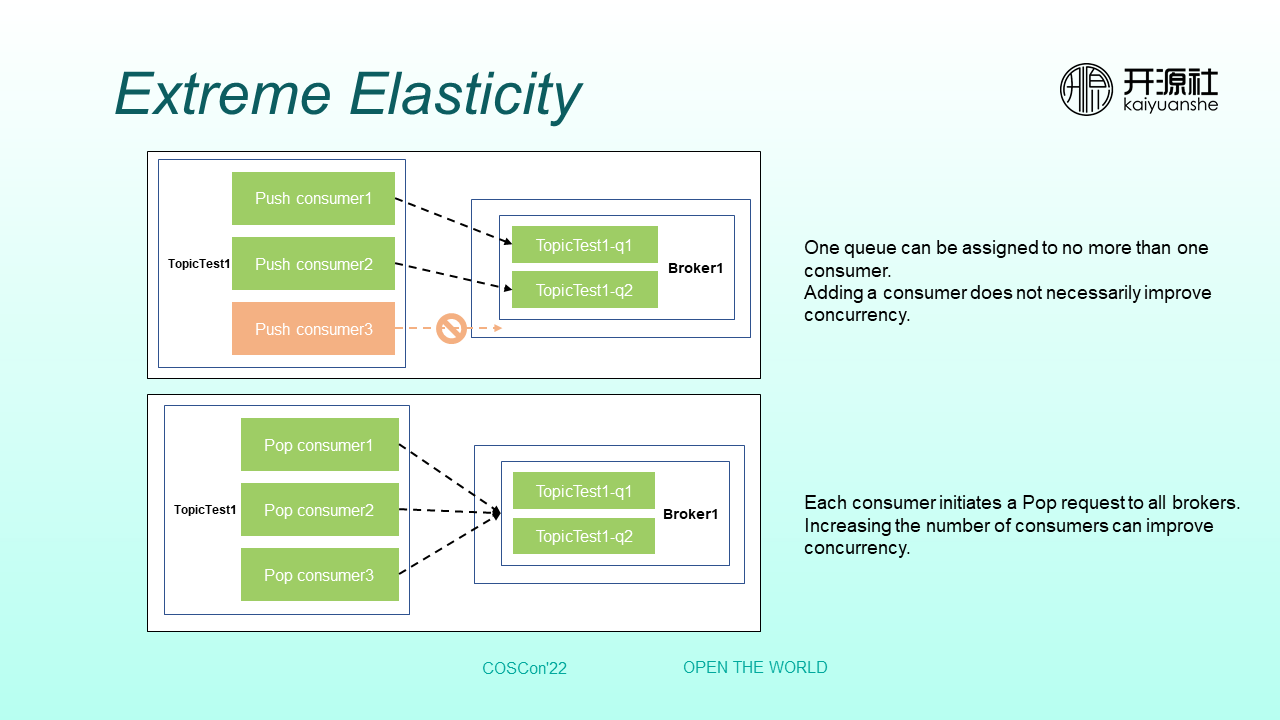

The preceding figure shows the 4.x and 5.0 clients. In version 4.x, each queue is consumed by at most one consumer, so adding consumers does not necessarily increase consumption concurrency: once consumers outnumber queues, the extra consumers sit idle. The elasticity of version 4.x therefore has an upper limit. In RocketMQ 5.0, the previous push mode is replaced by Pop requests, and each consumer can send Pop requests to all brokers, so adding consumers increases consumption concurrency. Even a single queue can be consumed by hundreds of thousands of consumers pulling its messages.
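A simplified sketch of the difference in elasticity follows, assuming 4 queues and 10 consumers. `allocate_round_robin` is an illustrative stand-in for 4.x client-side rebalancing (each queue goes to exactly one consumer), not RocketMQ's source code, and the Pop function simply counts consumers, since each may request messages from every queue.

```python
from typing import Dict, List


def allocate_round_robin(queues: List[str], consumers: List[str]) -> Dict[str, List[str]]:
    """4.x-style client-side rebalancing (simplified): every queue goes to exactly one consumer."""
    assignment: Dict[str, List[str]] = {c: [] for c in consumers}
    for i, queue in enumerate(queues):
        assignment[consumers[i % len(consumers)]].append(queue)
    return assignment


def busy_consumers_queue_model(queues: List[str], consumers: List[str]) -> int:
    """A consumer that owns no queue has nothing to consume."""
    return sum(1 for owned in allocate_round_robin(queues, consumers).values() if owned)


def busy_consumers_pop_model(queues: List[str], consumers: List[str]) -> int:
    """With Pop, any consumer can request messages from any queue."""
    return len(consumers) if queues else 0


if __name__ == "__main__":
    queues = ["q0", "q1", "q2", "q3"]
    consumers = [f"c{i}" for i in range(10)]
    print(busy_consumers_queue_model(queues, consumers))  # 4: six consumers sit idle
    print(busy_consumers_pop_model(queues, consumers))    # 10
```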

For example, suppose a shopping website sees a sudden peak in return requests. The simplest and most effective response has been to add business application instances to increase processing concurrency (for example, scaling out the core applications of the return system and the customer system). However, if there are not many queues to begin with, the queues must be scaled out first and only then the core services. Even then, scaling out only speeds up newly enqueued messages; it does nothing for queues that already have a backlog. As a result, earlier return requests may be processed after later ones, which hurts the user experience.

With the RocketMQ 5.0 Pop consumption model, you only need to add business processing nodes to solve the problem.
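A back-of-the-envelope calculation with hypothetical numbers shows why. Suppose a queue already holds a backlog of 60,000 messages, each taking 50 ms to process, and the business scales out to 20 consumers. In the 4.x queue model, the backlog is still drained by the single consumer that owns the queue; in the Pop model, all 20 consumers share it.

```python
def drain_seconds_queue_model(backlog: int, ms_per_msg: int, consumers: int) -> float:
    """Queue model: one owner drains the backlogged queue; `consumers` deliberately has no effect."""
    return backlog * ms_per_msg / 1000


def drain_seconds_pop_model(backlog: int, ms_per_msg: int, consumers: int) -> float:
    """Pop model: all consumers share the backlog (ignores network and scheduling overhead)."""
    return backlog * ms_per_msg / 1000 / consumers


if __name__ == "__main__":
    # Hypothetical surge: 60,000 stacked return requests, 50 ms each, 20 consumers.
    print(drain_seconds_queue_model(60_000, 50, 20))  # 3000.0
    print(drain_seconds_pop_model(60_000, 50, 20))    # 150.0
```

That is 50 minutes versus 2.5 minutes for the same backlog, under the idealized assumption that Pop requests add no overhead.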

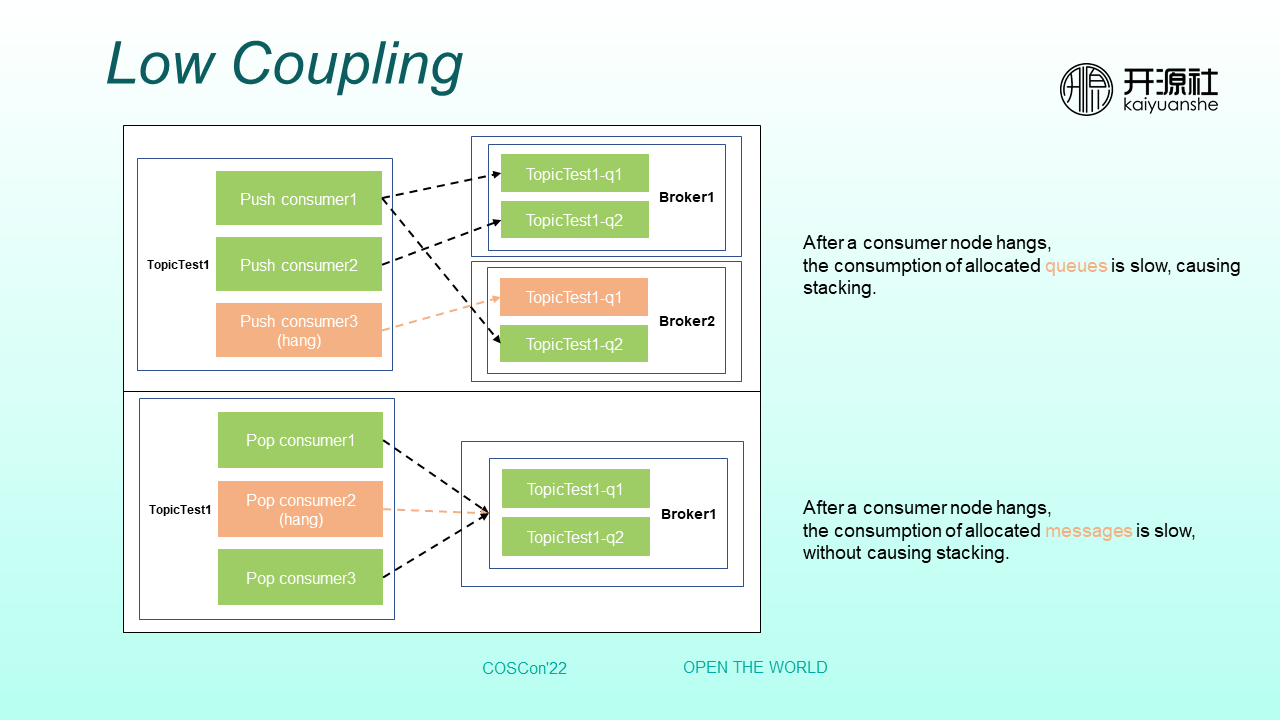

In version 4.x, scaling out cannot speed up queues that already have a backlog, and a similar problem appears when we look at coupling. For example, if a Full GC occurs on a consumer node in version 4.x, consumption slows down, but the node does not go completely offline and keeps its connection to the server. Queues therefore continue to be allocated to the node, which can cause a series of problems, such as messages piling up in those queues.

In RocketMQ 5.0, however, the allocation granularity is reduced from the queue to the individual message. If a Full GC occurs on a node, only the messages already allocated to that node are consumed slowly, and no backlog builds up.
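The toy tick-based simulation below, with entirely hypothetical parameters, illustrates this difference in allocation granularity. Consumer B stalls (as it might during a long Full GC) while consumer A keeps consuming one message per tick. With queue-level allocation, B's queues stay assigned to it, so their messages are stuck. With message-level allocation, B holds only the batch it already popped, and those messages become visible again once their invisible duration expires.

```python
from dataclasses import dataclass


def remaining_queue_model(ticks: int, msgs_per_queue: int = 5) -> int:
    """4 queues; healthy consumer A owns 2, stalled consumer B still owns the other 2."""
    a_backlog = max(0, 2 * msgs_per_queue - ticks)  # A consumes one message per tick
    b_backlog = 2 * msgs_per_queue                  # B is stalled, so its queues never drain
    return a_backlog + b_backlog


@dataclass
class Msg:
    visible_at: int = 0   # tick from which the message can be popped
    acked: bool = False


def remaining_pop_model(ticks: int, total: int = 20, batch: int = 2, invisible_ticks: int = 3) -> int:
    """B pops one batch at tick 0 and then stalls; A pops and ACKs one visible message per tick."""
    msgs = [Msg() for _ in range(total)]
    for m in msgs[:batch]:
        m.visible_at = invisible_ticks  # hidden while B holds them; B never ACKs them
    for tick in range(ticks):
        for m in msgs:
            if not m.acked and m.visible_at <= tick:
                m.acked = True  # A consumes and ACKs it in the same tick
                break
    return sum(1 for m in msgs if not m.acked)


if __name__ == "__main__":
    print(remaining_queue_model(30))  # 10: B's queues are stuck for as long as B stalls
    print(remaining_pop_model(30))    # 0: B's batch reappears after 3 ticks and A takes it
```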

These cases show the advantages of the Pop protocol in RocketMQ 5.0: extreme elasticity and low coupling. We only need to scale out when consumption capacity is insufficient and scale in when there is surplus capacity, and the failure of a single node does not affect the system as a whole.

Here, the cloud can be understood as the server side, far away in the data center, while the client is embedded in the business application as an SDK. Previously, O&M on the console could only control the server; control over the client was limited, which made the user experience fragmented. For example, you can change server configuration through the console GUI or a command-line tool and see the result right away. Changing client configuration, however, involves a business release, and problems can easily arise along the way.

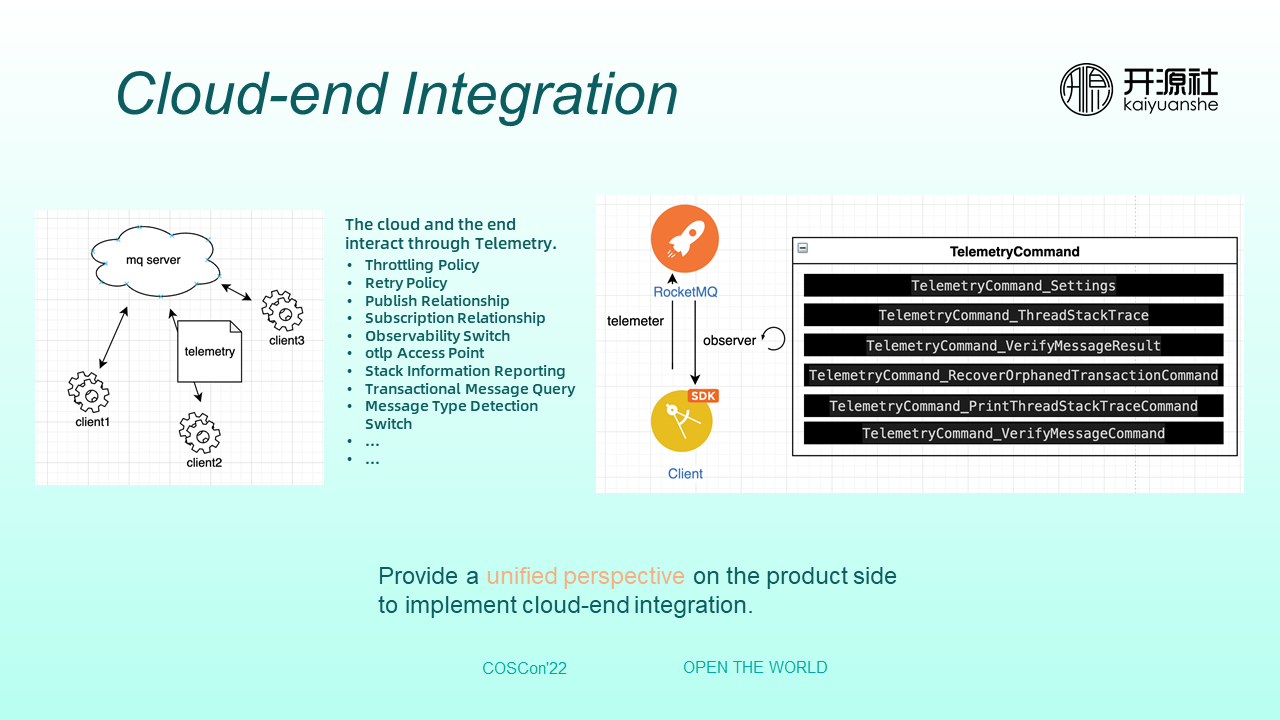

The RocketMQ 5.0 client collaborates with the server through the telemetry protocol, which enables interaction between the cloud and the client. The information exchanged includes, but is not limited to, throttling policies, retry policies, publish/subscribe relationship control, observability switches, access point information, and stack information. In practice, the SDK sends a telemetry request directly to the server and then reads and writes the commands shown in the preceding figure through a stream observer.

The commands include settings, thread stack printing, message verification, consumption, and transactional message back-checks. The most important capabilities are the observability switches and the OTLP access point information.
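As an illustration only, the client side of this exchange can be pictured as a handler that applies each command as it arrives on the stream. The command classes and fields below (`Settings`, `PrintThreadStackTrace`, `qps_limit`, `otlp_endpoint`, and so on) are hypothetical stand-ins, not RocketMQ's protobuf definitions, and the stream is simulated with a plain list.

```python
import sys
import traceback
from dataclasses import dataclass, field
from typing import List, Optional, Tuple, Union


@dataclass
class Settings:
    """Hypothetical settings command; None means 'not sent, keep the current value'."""
    max_attempts: Optional[int] = None       # retry policy
    qps_limit: Optional[int] = None          # throttling policy
    tracing_enabled: Optional[bool] = None   # observability switch
    otlp_endpoint: Optional[str] = None      # observability access point


@dataclass
class PrintThreadStackTrace:
    """Hypothetical request asking the client to report its thread stacks."""
    nonce: str


@dataclass
class ClientState:
    max_attempts: int = 3
    qps_limit: int = 1000
    tracing_enabled: bool = False
    otlp_endpoint: str = ""
    replies: List[Tuple[str, str]] = field(default_factory=list)  # messages to write back


def handle_command(state: ClientState, command: Union[Settings, PrintThreadStackTrace]) -> None:
    """Apply one command read from the telemetry stream."""
    if isinstance(command, Settings):
        # Hot update: only the fields the server sent overwrite local values.
        for name in ("max_attempts", "qps_limit", "tracing_enabled", "otlp_endpoint"):
            value = getattr(command, name)
            if value is not None:
                setattr(state, name, value)
    elif isinstance(command, PrintThreadStackTrace):
        # sys._current_frames() (CPython) maps each thread id to its topmost frame.
        dump = "\n".join(
            f"thread {tid}:\n" + "".join(traceback.format_stack(frame))
            for tid, frame in sys._current_frames().items()
        )
        state.replies.append((command.nonce, dump))


if __name__ == "__main__":
    state = ClientState()
    stream = [
        Settings(tracing_enabled=True, otlp_endpoint="proxy.example:8081"),  # hypothetical address
        Settings(qps_limit=200),
        PrintThreadStackTrace(nonce="n1"),
    ]
    for cmd in stream:  # a real client would read these from the gRPC stream
        handle_command(state, cmd)
    print(state.tracing_enabled, state.qps_limit, state.max_attempts)  # True 200 3
```

Treating unset fields as "keep the current value" is what lets the server hot-update a single policy without resending the whole configuration.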

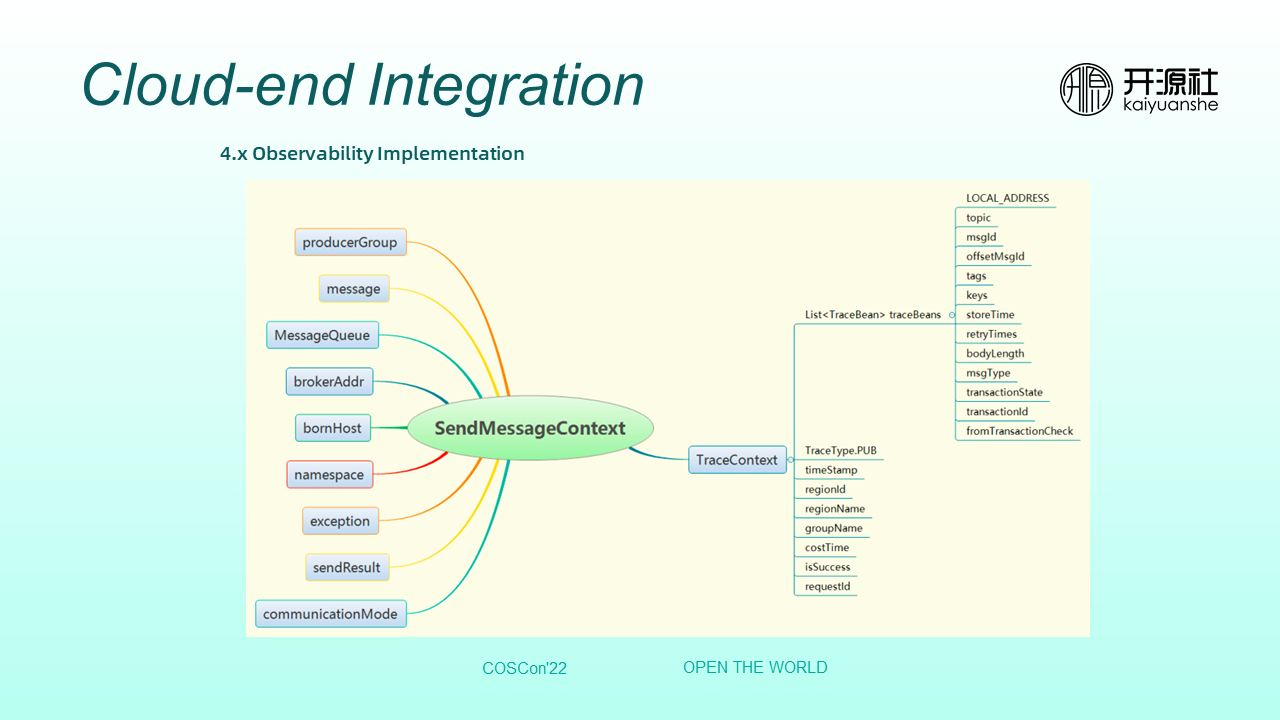

In the past, observability was implemented through message tracing. When messages are sent and consumed, parameters from the context are added to trace messages, which are sent asynchronously in batches in the background. The preceding figure shows the full context of sending a message, including MessageQueue, MessageID, and IP address.

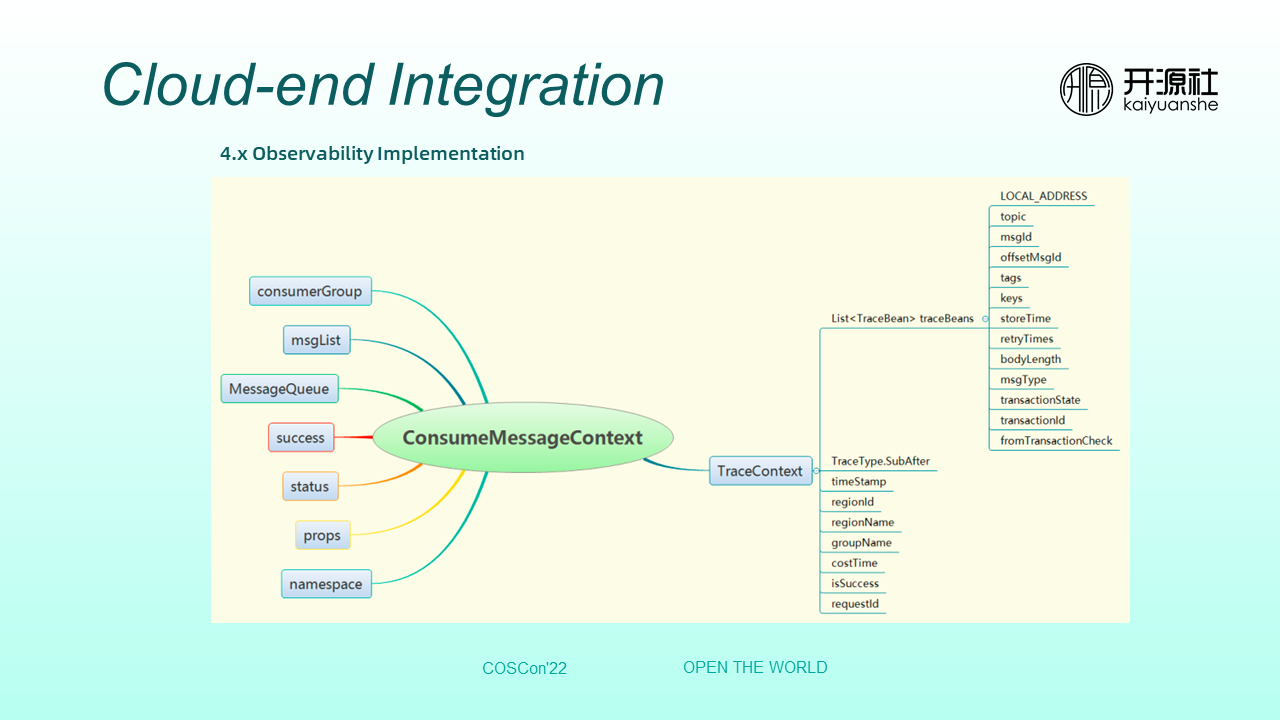

The preceding figure shows the context during consumption. End to end, three trace messages are generated for a single MessageID: after sending, before consuming, and after consuming. Because each trace message is written to the trace topic with the MessageID as its key, we can reconstruct the message's path by querying the trace data. Previously, users had to query trace messages by MessageID in the console.
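The tracing scheme described above can be sketched as follows: three trace records per MessageID, buffered and written in batches, and keyed by MessageID so the full path can be queried later. The record fields, the batch size of 3, and the in-memory `TraceStore` standing in for the trace topic are assumptions for illustration; a real client would flush asynchronously from a background thread.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class TraceRecord:
    message_id: str
    stage: str          # "after_send", "before_consume" or "after_consume"
    timestamp_ms: int
    client_ip: str


class TraceStore:
    """Stands in for the trace topic, with MessageID used as the message key."""

    def __init__(self) -> None:
        self._by_key: Dict[str, List[TraceRecord]] = defaultdict(list)

    def write_batch(self, batch: List[TraceRecord]) -> None:
        for record in batch:
            self._by_key[record.message_id].append(record)

    def query(self, message_id: str) -> List[TraceRecord]:
        """Return the trace of one message in time order."""
        return sorted(self._by_key.get(message_id, []), key=lambda r: r.timestamp_ms)


class TraceDispatcher:
    """Buffers records and writes them in batches (synchronously here, for simplicity)."""

    def __init__(self, store: TraceStore, batch_size: int = 3) -> None:
        self.store = store
        self.batch_size = batch_size
        self.buffer: List[TraceRecord] = []

    def append(self, record: TraceRecord) -> None:
        self.buffer.append(record)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.store.write_batch(self.buffer)
            self.buffer = []


if __name__ == "__main__":
    store = TraceStore()
    dispatcher = TraceDispatcher(store)
    dispatcher.append(TraceRecord("MSG1", "after_send", 100, "10.0.0.1"))
    dispatcher.append(TraceRecord("MSG1", "before_consume", 150, "10.0.0.2"))
    dispatcher.append(TraceRecord("MSG1", "after_consume", 180, "10.0.0.2"))  # triggers a flush
    print([r.stage for r in store.query("MSG1")])
    # ['after_send', 'before_consume', 'after_consume']
```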

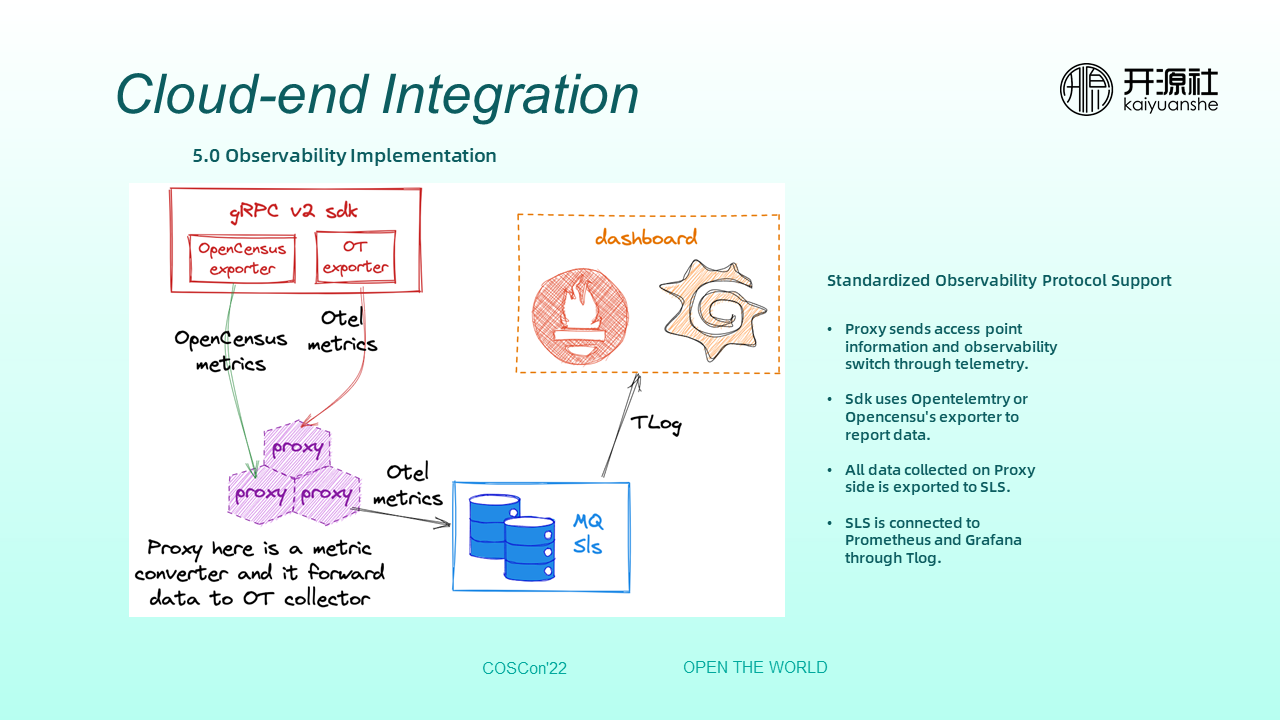

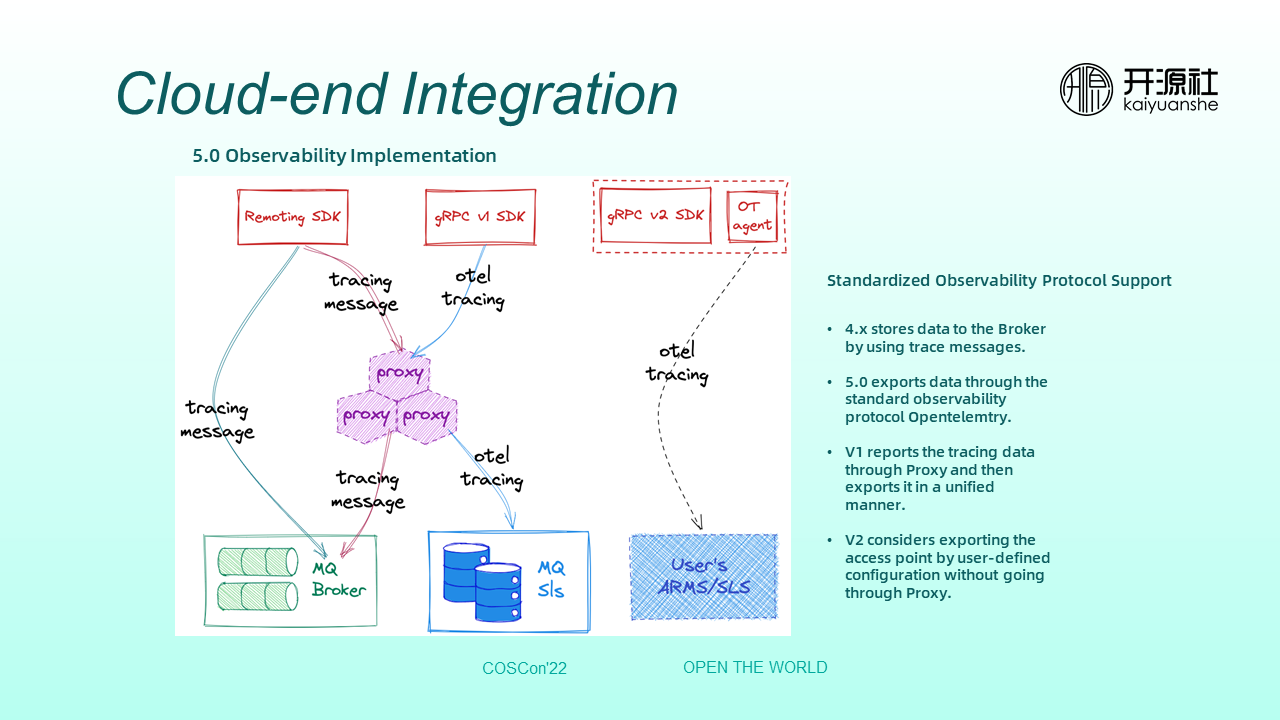

RocketMQ already had observability capabilities, but its custom approach does not integrate well with existing observability products. Version 5.0 adopts a standardized observability protocol, so richer and more professional analysis and visualization tools can be used, which makes the observability data more valuable.

The telemetry protocol delivers observability switches and access points to the client. By default, the Proxy sets the access point to itself, so all observability data reported by clients converges on the server side and is then reported to SLS through the standard OpenTelemetry and OpenCensus protocols. SLS in turn interfaces with Prometheus and Grafana through TLog.

The tracing data path is more complex because the observability data must first be sent to the Proxy. Since tracing produces a large volume of data, we once considered exporting it directly to a user-configured access point without going through the Proxy, but that causes other problems. For example, if a version 4.x user wants to use observability against a RocketMQ 5.0 server, the 4.x trace messages need to be decoded and regenerated in the standardized observability protocol.

Across languages, support for the latest OpenTelemetry is not always complete, so some older observability protocols (such as OpenCensus) are still needed. All of them, however, are standard protocols.

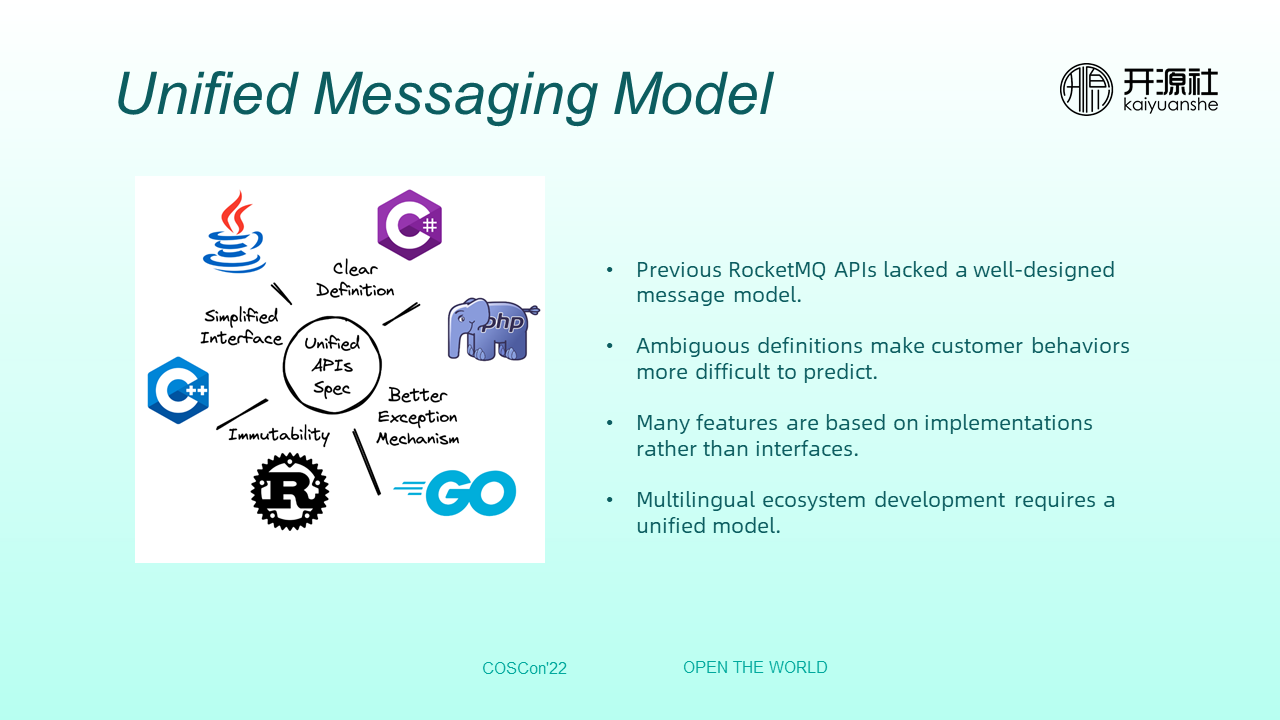

The previous RocketMQ API lacked a well-designed message model, and many concepts were not clearly defined. For example, sending a message produces two IDs: the MessageID defined on the user side and the offsetID issued by the server. During consumption, however, the offsetID issued by the server becomes the MessageID again. Such ambiguous definitions make client behavior harder to predict. In addition, the previous model was mostly implementation-oriented and not developer-friendly: many features were exposed through implementations rather than interfaces. Users therefore had to deal with many details and too many redundant, complex interfaces, which led to a high error rate.

In addition, multilingual ecosystem development requires a unified model.

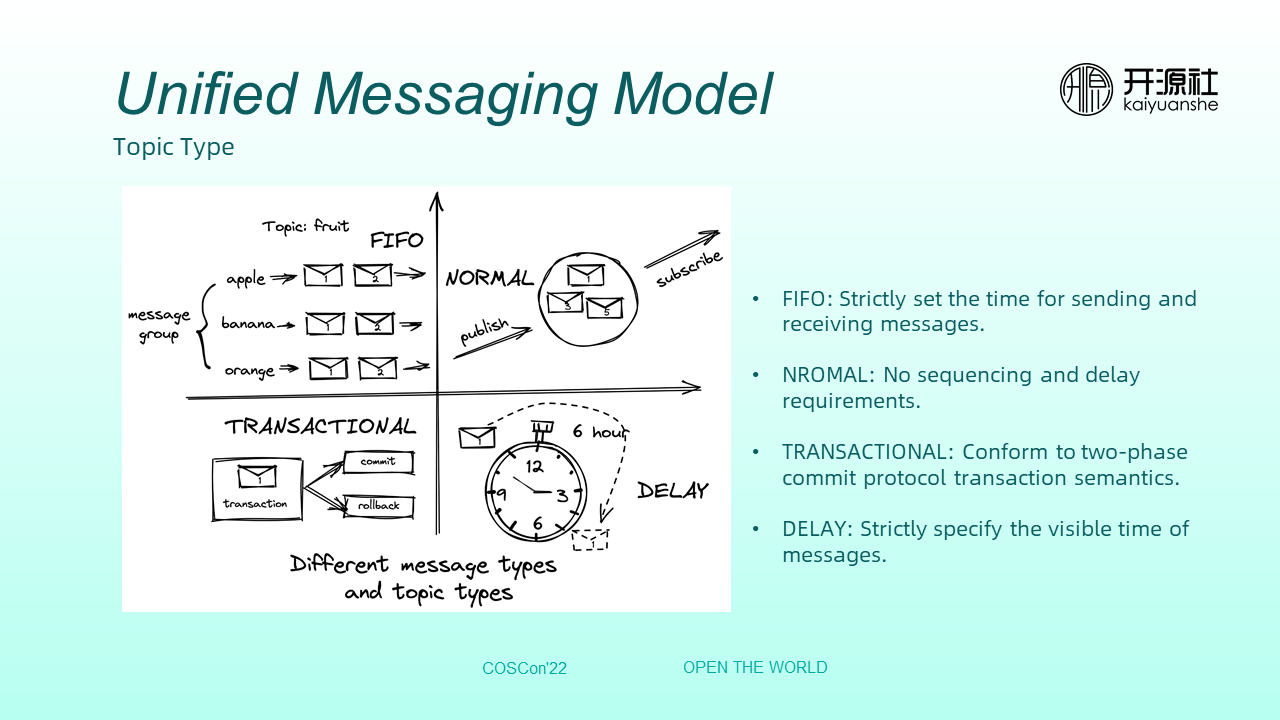

Topic types are one of the most visible changes.

Previously, the client did not verify topic types, and any type of message could be sent to any topic. In RocketMQ 5.0, topic types are verified: for example, an ordered-message topic accepts only ordered messages. Delay messages have changed as well. They used to support only a fixed set of delay levels; now they are scheduled messages that can be delivered at an arbitrary time, with precision down to the millisecond.
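Both changes can be sketched in a few lines. `check_topic_type` is a hypothetical client-side guard rather than RocketMQ's implementation, and the `MessageType` enum is illustrative. The delay table is the default `messageDelayLevel` broker setting of RocketMQ 4.x, where level 1 is 1s and level 18 is 2h; a 5.0 scheduled message instead carries an absolute delivery timestamp in milliseconds.

```python
import time
from enum import Enum
from typing import Optional


class MessageType(Enum):
    NORMAL = "NORMAL"
    FIFO = "FIFO"
    DELAY = "DELAY"
    TRANSACTION = "TRANSACTION"


def check_topic_type(topic_type: MessageType, message_type: MessageType) -> None:
    """Hypothetical 5.0-style guard: a topic accepts only messages of its own type."""
    if topic_type is not message_type:
        raise ValueError(
            f"cannot send a {message_type.value} message to a {topic_type.value} topic"
        )


# RocketMQ 4.x default broker setting `messageDelayLevel`; level N maps to entry N-1.
DELAY_LEVELS = "1s 5s 10s 30s 1m 2m 3m 4m 5m 6m 7m 8m 9m 10m 20m 30m 1h 2h".split()
UNIT_MS = {"s": 1_000, "m": 60_000, "h": 3_600_000}


def delay_level_to_ms(level: int) -> int:
    """4.x: only the predefined levels can be used."""
    if not 1 <= level <= len(DELAY_LEVELS):
        raise ValueError(f"unsupported delay level {level}")
    spec = DELAY_LEVELS[level - 1]
    return int(spec[:-1]) * UNIT_MS[spec[-1]]


def delivery_timestamp_ms(delay_ms: int, now_ms: Optional[int] = None) -> int:
    """5.0: any delay, expressed as an absolute delivery time in milliseconds."""
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    return now_ms + delay_ms


if __name__ == "__main__":
    print(delay_level_to_ms(3))                            # 10000
    print(delivery_timestamp_ms(1_234, now_ms=1_000_000))  # 1001234: no 4.x level matches 1,234 ms
    check_topic_type(MessageType.FIFO, MessageType.FIFO)   # passes silently
```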

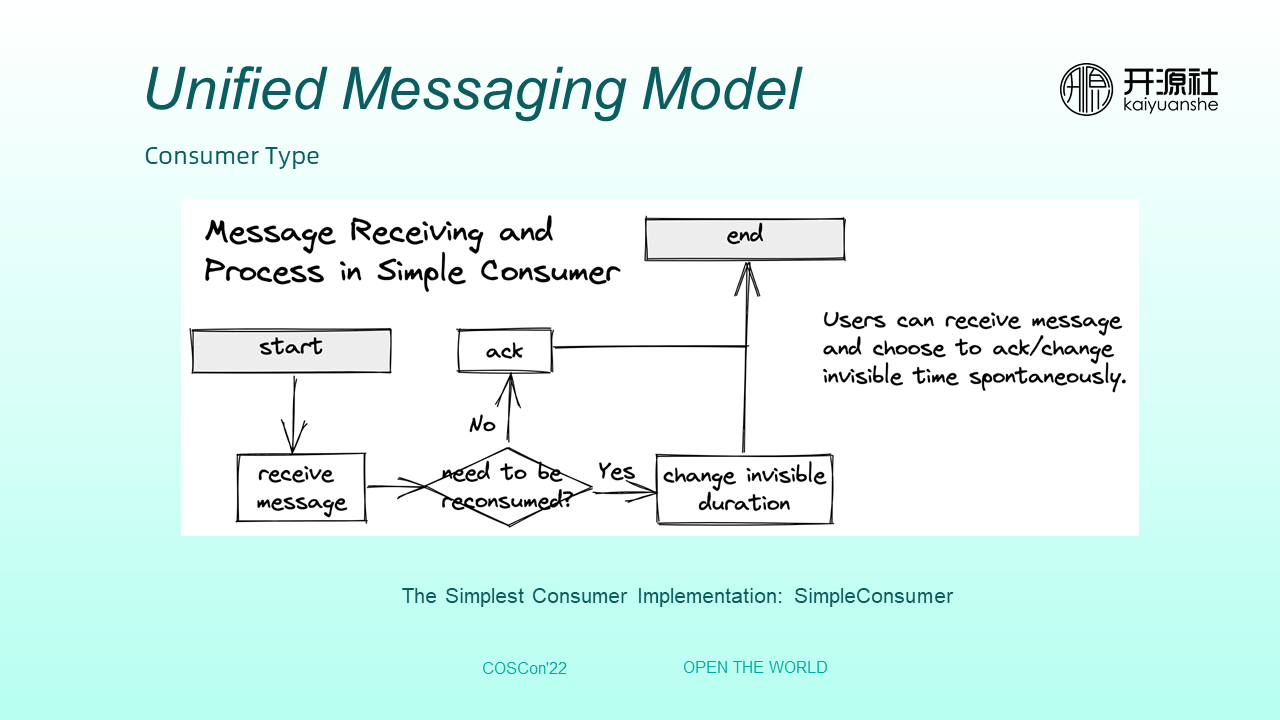

The unified model also defines several kinds of consumers with different implementations, such as PushConsumer, PullConsumer, and SimpleConsumer. The bottom layer is implemented with the Pop protocol. For example, the previous PushConsumer was actually built on pulling, whereas it now uses Pop.

SimpleConsumer is straightforward to use. You call the receive operation to obtain messages and then consume them. If a message is consumed successfully, you ACK it; if not, you can change its invisible duration so that it becomes available again later. The whole process is intuitive and defined by interfaces.
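The receive, ACK, and change-invisible-duration cycle can be illustrated with an in-memory stand-in for the server. This is a hypothetical sketch: the real Java `SimpleConsumer` exposes `receive`, `ack`, and `changeInvisibleDuration`, while the `InMemoryBroker` class, its receipt-handle scheme, and the explicit `now` clock used here are simplifications chosen so the flow can be run and tested locally.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class _Entry:
    body: str
    visible_at: float = 0.0
    receipt: Optional[int] = None   # handle from the most recent delivery
    attempts: int = 0


class InMemoryBroker:
    """Hypothetical stand-in for the server side of a SimpleConsumer session."""

    def __init__(self, bodies: List[str]) -> None:
        self._entries: Dict[int, _Entry] = {i: _Entry(b) for i, b in enumerate(bodies)}
        self._receipts = itertools.count(1)

    def receive(self, max_messages: int, invisible_duration: float, now: float) -> List[Tuple[int, int, str]]:
        """Pop up to max_messages visible messages and hide them until now + invisible_duration."""
        batch = []
        for msg_id, entry in self._entries.items():
            if len(batch) == max_messages:
                break
            if entry.visible_at <= now:
                entry.visible_at = now + invisible_duration
                entry.receipt = next(self._receipts)  # a new handle replaces the old one
                entry.attempts += 1
                batch.append((msg_id, entry.receipt, entry.body))
        return batch

    def _check(self, msg_id: int, receipt: int) -> _Entry:
        entry = self._entries.get(msg_id)
        if entry is None or entry.receipt != receipt:
            raise KeyError(f"stale or unknown receipt handle for message {msg_id}")
        return entry

    def ack(self, msg_id: int, receipt: int) -> None:
        """Consumption succeeded: remove the message for good."""
        self._check(msg_id, receipt)
        del self._entries[msg_id]

    def change_invisible_duration(self, msg_id: int, receipt: int, duration: float, now: float) -> None:
        """Consumption failed or needs more time: make the message visible again after `duration`."""
        self._check(msg_id, receipt).visible_at = now + duration

    def pending(self) -> int:
        return len(self._entries)


if __name__ == "__main__":
    broker = InMemoryBroker(["ok-1", "fail-2"])
    for msg_id, receipt, body in broker.receive(max_messages=10, invisible_duration=30, now=0):
        if body.startswith("ok"):
            broker.ack(msg_id, receipt)                                  # consumed: ACK
        else:
            broker.change_invisible_duration(msg_id, receipt, 5, now=0)  # retry after 5 time units
    print(broker.pending())               # 1
    print(broker.receive(10, 30, now=1))  # [] (still invisible)
    print(broker.receive(10, 30, now=5))  # [(1, 3, 'fail-2')]
```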

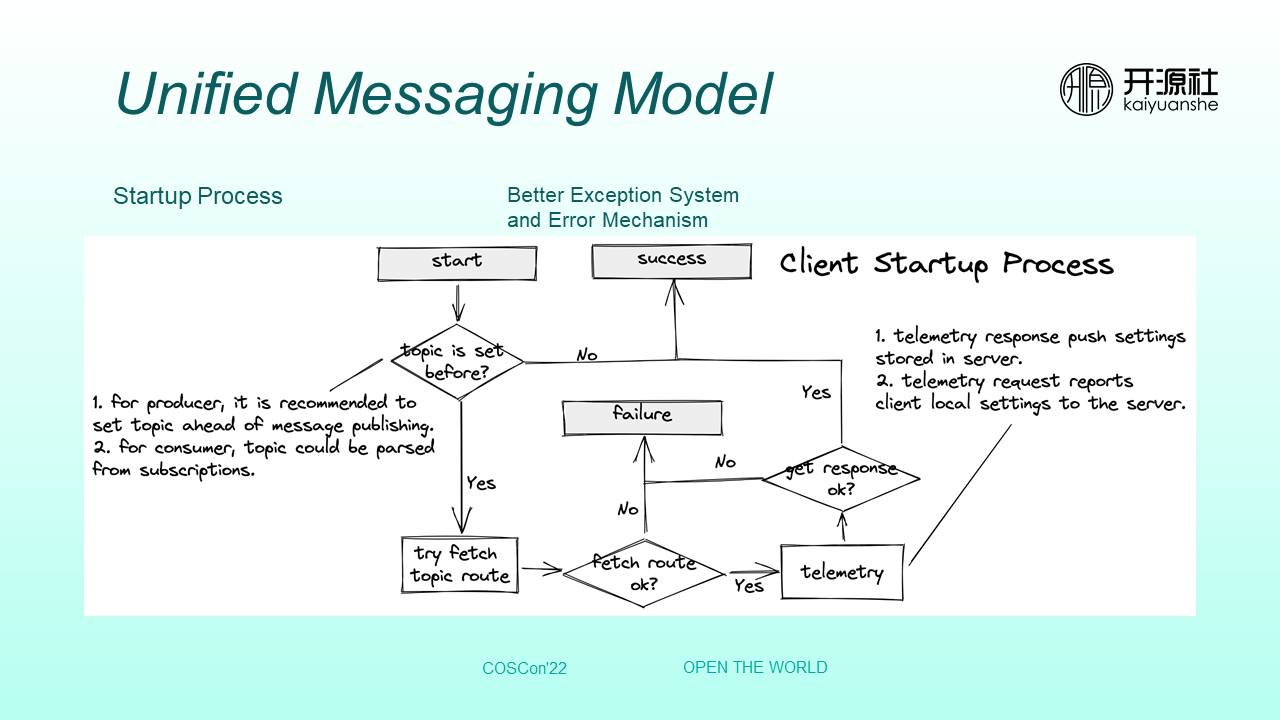

The new startup process adds preparation work so that obvious errors and exceptions are caught early. For example, the client tries to fetch settings from the server at startup and can hot-update them later; this exchange between server and client is handled through telemetry. If any preparation step fails, the client fails to start. By contrast, the previous version would forcibly start the client anyway and keep retrying.

With this preparation work, configuration and network problems can be found in advance. For example, when a client starts, the topics bound to it are checked first, so missing topic routing information is detected immediately. Each producer can declare its topics in advance, and the resulting error information determines whether the client can start.
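A hypothetical sketch of this fail-fast startup: the producer declares its topics up front, and `start()` refuses to proceed if settings cannot be fetched or if any declared topic has no route. `fetch_settings` and `query_route` are placeholder callables standing in for the real server calls, so the logic can be exercised locally.

```python
from typing import Callable, Dict, Iterable, List, Optional


class StartupError(RuntimeError):
    """Raised when any preparation step fails, so the client never starts half-configured."""


class Producer:
    """Hypothetical producer that validates its environment before it starts."""

    def __init__(
        self,
        topics: Iterable[str],
        fetch_settings: Callable[[], dict],
        query_route: Callable[[str], Optional[List[str]]],
    ) -> None:
        self.topics = list(topics)             # topics declared in advance
        self._fetch_settings = fetch_settings  # placeholder for the telemetry settings exchange
        self._query_route = query_route        # placeholder for the route lookup
        self.settings: dict = {}
        self.routes: Dict[str, List[str]] = {}
        self.running = False

    def start(self) -> None:
        """Fail fast: any preparation failure aborts startup instead of retrying in the background."""
        try:
            self.settings = self._fetch_settings()
        except ConnectionError as exc:
            raise StartupError(f"cannot fetch settings from server: {exc}") from exc
        for topic in self.topics:
            route = self._query_route(topic)
            if not route:
                raise StartupError(f"no route found for topic {topic!r}")
            self.routes[topic] = route
        self.running = True


if __name__ == "__main__":
    known_routes = {"OrderTopic": ["broker-a"]}  # hypothetical route table
    producer = Producer(["OrderTopic", "MissingTopic"], lambda: {}, known_routes.get)
    try:
        producer.start()
    except StartupError as err:
        print(err)  # no route found for topic 'MissingTopic'
```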
