By Ruoyu Liang, UC Browser Backend Engineer

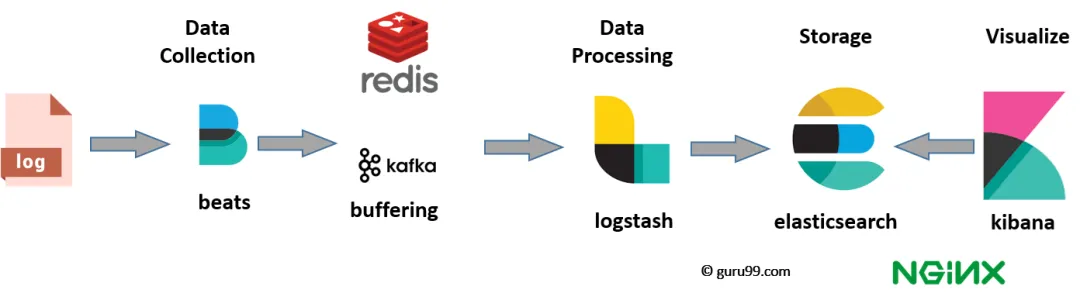

As we all know, in ELK, E stands for Elasticsearch, L for Logstash, and K for Kibana. Logstash is a powerful data processing pipeline that provides complex data transformation, filtering, and rich input and output support. Filebeat, a lightweight log file collector from the same family, is often used alongside Logstash when log volumes are large and resource consumption must stay low. A classic use case is illustrated below: Filebeat sends log files from various servers to Kafka for decoupling, Logstash then consumes and processes the log data and transfers it to Elasticsearch, and Kibana handles the visualization. This architecture balances efficiency and functionality.

Note that iLogtail is also a lightweight, high-performance data collection tool with excellent processing capabilities. More importantly, stress tests show that iLogtail is well ahead of Filebeat in performance. The Polling + Inotify mechanism is probably the most important reason for this. The community already has detailed documentation on the mechanism, so we will not delve into it here.

Let's focus on the performance test results and the actual business scenarios. The scenario that closely matches our case is the fourth row in the table, "Container File Collection with Multiple Configurations Performance." As shown, under the same traffic input, as the number of collection configurations increases, the CPU usage increase for Filebeat is twice that for iLogtail. In other scenarios, iLogtail is also far ahead in terms of CPU utilization, ranging from five times to ten times better. For specific figures, please refer to the link below.

Comparison summary

| Scenario | CPU | Memory |
|---|---|---|
| Container Standard Output Stream Collection Performance | Under the same traffic input, iLogtail performs at least ten times better than Filebeat. | The memory consumption difference between iLogtail and Filebeat is negligible. |
| Container File Collection Performance | Under the same traffic input, iLogtail performs about five times better than Filebeat. | The memory consumption difference between iLogtail and Filebeat is negligible. |
| Container Standard Output Stream Collection with Multiple Configurations Performance | Under the same traffic input, as the number of collection configurations increases, the CPU usage increase for Filebeat is three times that for iLogtail. | Both iLogtail and Filebeat experience increased memory consumption due to an increase in collection configurations, but this remains within acceptable limits. |
| Container File Collection with Multiple Configurations Performance | Under the same traffic input, as the number of collection configurations increases, the CPU usage increase for Filebeat is twice that for iLogtail. | Both iLogtail and Filebeat experience increased memory consumption due to an increase in collection configurations, but this remains within acceptable limits. |

Can iLogtail replace Filebeat and Logstash in the production environment and directly collect logs to Elasticsearch?

In the past, the answer was no. There were primarily four reasons for this.

Let's analyze them one by one.

Although the core part of iLogtail has outstanding performance, its original Elasticsearch flusher plug-in has shortcomings.

• The production environment has many collection instances but lacks a front-end page for administrators to maintain collection configurations in a user-friendly manner. Since the Config Server already provides API interfaces, this would be the easiest to implement.

• The iLogtail Agent lacks lifecycle management. When an Agent process exits, its corresponding heartbeat information remains in the Config Server database without any indication of its liveness, making it difficult to know which records should be cleaned up.

• Our thousands of application instances are deployed in groups, and each group may have different collection configurations. Therefore, the Config Server must also support managing Agents in groups based on tags.

All nodes in the production environment require multi-instance deployment, including the Config Server. However, the current LevelDB storage solution makes it stateful, necessitating a switch to a relational database like MySQL, which has mature disaster recovery solutions.

When the Agent is running, it needs to report information such as CPU and memory usage to the Config Server in an appropriate manner, allowing administrators to monitor its load status easily.

Aside from adding a front-end interface for the Config Server, we have made comprehensive optimizations to address the five major issues in the four areas described above, ultimately achieving our goal. Below, using the OKR methodology, we introduce the solutions to these five major problems. It is worth noting that the community has recently started discussing changes to the Config Server communication protocol. Our solution was implemented on top of the earlier protocol and might differ from the final community solution.

The solution consists of three parts:

• Use the BulkRequest interface of esapi to send log data to the backend in batches. By batching, a single request can send hundreds or even thousands of log entries, reducing the number of requests by two or three orders of magnitude.

• Before the flush stage, the Agent aggregates the logs and generates a random pack ID. This pack ID is used as the routing parameter so that logs from the same batch are routed to the same shard. Otherwise, Elasticsearch routes each document by its auto-generated document ID and scatters the batch across shards. Routing by pack ID reduces unnecessary computation and I/O operations, lowers the load, and increases throughput.

• Enable a goroutine pool to send log data to the backend concurrently.
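The three parts above can be sketched together in a few dozen lines. This is a minimal illustration using only the Go standard library, not the actual flusher code: the function names `newPackID`, `buildBulkBody`, and `sendAll`, the index name, and the `send` callback (a stand-in for the real HTTP call to `/_bulk`) are all assumptions made for this example. It also assumes an Elasticsearch version whose Bulk API accepts a `routing` field in the action line.

```go
package main

import (
	"bytes"
	"crypto/rand"
	"encoding/hex"
	"encoding/json"
	"fmt"
	"sync"
)

// newPackID returns a random hex string used as the routing value of one batch.
func newPackID() (string, error) {
	b := make([]byte, 8)
	if _, err := rand.Read(b); err != nil {
		return "", err
	}
	return hex.EncodeToString(b), nil
}

// buildBulkBody renders one batch as a Bulk API body (NDJSON): an action line
// followed by a document line for every log entry. Every action line carries
// the same routing value, so the whole batch targets a single shard.
func buildBulkBody(index, packID string, logs []map[string]string) ([]byte, error) {
	var buf bytes.Buffer
	enc := json.NewEncoder(&buf) // Encode appends '\n' after each value
	for _, doc := range logs {
		action := map[string]map[string]string{
			"index": {"_index": index, "routing": packID},
		}
		if err := enc.Encode(action); err != nil {
			return nil, err
		}
		if err := enc.Encode(doc); err != nil {
			return nil, err
		}
	}
	return buf.Bytes(), nil
}

// sendAll pushes batches through a fixed-size pool of goroutines and
// collects the errors returned by send (hypothetical transport callback).
func sendAll(workers int, batches [][]byte, send func([]byte) error) []error {
	jobs := make(chan []byte)
	var (
		wg   sync.WaitGroup
		mu   sync.Mutex
		errs []error
	)
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for body := range jobs {
				if err := send(body); err != nil {
					mu.Lock()
					errs = append(errs, err)
					mu.Unlock()
				}
			}
		}()
	}
	for _, b := range batches {
		jobs <- b
	}
	close(jobs)
	wg.Wait()
	return errs
}

func main() {
	packID, err := newPackID()
	if err != nil {
		panic(err)
	}
	logs := []map[string]string{{"content": "line 1"}, {"content": "line 2"}}
	body, err := buildBulkBody("app-logs", packID, logs)
	if err != nil {
		panic(err)
	}
	errs := sendAll(4, [][]byte{body}, func(b []byte) error {
		fmt.Printf("would POST %d bytes to /_bulk\n", len(b))
		return nil
	})
	fmt.Println("failed batches:", len(errs))
}
```

In a real flusher the `send` callback would issue the bulk request through the Elasticsearch client and inspect the per-item results in the response, which this sketch leaves out.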

The solution is modeled on HAProxy's health checks, which change a server's state only after several consecutive successes or failures, to minimize the impact of network jitter.

• The Config Server considers an Agent online only after receiving its heartbeat for a specified number of consecutive periods.

• An Agent is considered offline only if the server does not receive a heartbeat for a specified number of consecutive periods.

• After an Agent has been offline for a certain duration, the residual heartbeat information for that Agent can be automatically cleaned up.
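The three rules above amount to a small state machine per Agent. The sketch below is one possible shape for it, not the Config Server's actual code: the `Tracker` type, its `Rise` and `Fall` thresholds (named after the HAProxy parameters), and the period-based `Expired` check are assumptions made for illustration.

```go
package main

import "fmt"

// Tracker holds the liveness state of one Agent (hypothetical type).
type Tracker struct {
	Rise int // consecutive heartbeats needed to go online
	Fall int // consecutive missed periods needed to go offline

	online     bool
	hits       int // consecutive periods with a heartbeat
	misses     int // consecutive periods without a heartbeat
	offlineFor int // missed periods counted since the Agent went offline
}

// Observe records whether a heartbeat arrived in the latest period and
// reports whether the Agent is considered online afterwards.
func (t *Tracker) Observe(received bool) bool {
	if received {
		t.hits++
		t.misses = 0
		t.offlineFor = 0
		if !t.online && t.hits >= t.Rise {
			t.online = true
		}
		return t.online
	}
	t.misses++
	t.hits = 0
	if t.online {
		if t.misses >= t.Fall {
			t.online = false
			t.offlineFor = 0
		}
	} else {
		t.offlineFor++
	}
	return t.online
}

// Expired reports whether the Agent has been offline for at least
// ttlPeriods, so that its residual heartbeat record can be cleaned up.
func (t *Tracker) Expired(ttlPeriods int) bool {
	return !t.online && t.offlineFor >= ttlPeriods
}

func main() {
	tr := &Tracker{Rise: 2, Fall: 3}
	for _, hb := range []bool{true, true, false, true, false, false, false} {
		fmt.Printf("heartbeat=%-5v online=%v\n", hb, tr.Observe(hb))
	}
}
```

With `Rise: 2` and `Fall: 3`, a single lost heartbeat between two good ones leaves the Agent online, which is the jitter tolerance the design is after.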

This solution requires modifying the communication protocol. The relevant part of the original protocol is as follows:

```protobuf
message AgentGroupTag {
  string name = 1;
  string value = 2;
}

message AgentGroup {
  string group_name = 1;
  ...
  repeated AgentGroupTag tags = 3;
}

message Agent {
  string agent_id = 1;
  ...
  repeated string tags = 4;
  ...
}
```

As you can see, both the Agent Group and the Agent of iLogtail have tags. However, the data structures of the two attributes are different. The Agent Group is a name-value array, and the Agent is a string array.

The solution unifies them to use a name-value array and adds operators to the Agent Group to express two semantics: "AND" and "OR". This way, an Agent Group has an "expression" composed of tags and operators. If this expression matches the tags held by an Agent, then the Agent is considered to belong to that group. For example:

• If an Agent Group's tags are defined as cluster: A and cluster: B, and the operator is defined as "OR", then all Agents holding either the cluster: A tag or the cluster: B tag belong to this group.

• If an Agent Group's tags are defined as cluster: A and group: B, and the operator is defined as "AND", then only Agents holding both the cluster: A tag and the group: B tag belong to this group.
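The matching rule in these two examples can be written down directly. The following is a minimal sketch of the semantics only: the Go types `Tag`, `Operator`, and `AgentGroup` and the `Matches` method are hypothetical names, not the modified protocol's generated code, and treating a group without tags as matching nothing is an assumption.

```go
package main

import "fmt"

// Tag is a name-value pair, the unified tag shape for Agents and Agent Groups.
type Tag struct{ Name, Value string }

// Operator selects how a group's tags are combined.
type Operator int

const (
	Or Operator = iota
	And
)

// AgentGroup carries the tag "expression": a list of tags plus an operator.
type AgentGroup struct {
	Name string
	Tags []Tag
	Op   Operator
}

// Matches reports whether an Agent holding agentTags belongs to the group.
func (g AgentGroup) Matches(agentTags []Tag) bool {
	if len(g.Tags) == 0 {
		return false // assumption: a group without tags matches no Agent
	}
	held := make(map[Tag]bool, len(agentTags))
	for _, t := range agentTags {
		held[t] = true
	}
	for _, t := range g.Tags {
		if held[t] {
			if g.Op == Or {
				return true // OR: one matching tag is enough
			}
		} else if g.Op == And {
			return false // AND: one missing tag rules the Agent out
		}
	}
	// Reaching this point means OR found no match, or AND found every tag.
	return g.Op == And
}

func main() {
	orGroup := AgentGroup{Name: "a-or-b", Op: Or,
		Tags: []Tag{{"cluster", "A"}, {"cluster", "B"}}}
	andGroup := AgentGroup{Name: "a-and-b", Op: And,
		Tags: []Tag{{"cluster", "A"}, {"group", "B"}}}

	agent := []Tag{{"cluster", "A"}}
	fmt.Println(orGroup.Matches(agent), andGroup.Matches(agent))
}
```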

The Config Server already provides a set of Database storage interfaces. By implementing all methods of these interfaces, persistence can be achieved. The LevelDB storage solution serves as a good example.

```go
type Database interface {
	Connect() error
	GetMode() string // store mode
	Close() error

	Get(table string, entityKey string) (interface{}, error)
	Add(table string, entityKey string, entity interface{}) error
	Update(table string, entityKey string, entity interface{}) error
	Has(table string, entityKey string) (bool, error)
	Delete(table string, entityKey string) error
	GetAll(table string) ([]interface{}, error)
	GetWithField(table string, pairs ...interface{}) ([]interface{}, error)
	Count(table string) (int, error)

	WriteBatch(batch *Batch) error
}
```

To achieve this, we implemented all methods of the provided interface one by one. Each method basically obtains the entity and primary key of the table through reflection, and then calls GORM's Create, Save, Delete, Count, and Find methods to complete CRUD operations. This approach enables persistence to MySQL, PostgreSQL, SQLite, and SQL Server, and also supports reading data from the database.
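To make the reflection step concrete, here is a small standard-library sketch of locating an entity's primary key field. It is not the code we run in production and it does not call GORM: the `Agent` struct, the `primaryKeyOf` helper, and the convention of marking the key with a `gorm:"primaryKey"` struct tag are assumptions chosen for this example.

```go
package main

import (
	"fmt"
	"reflect"
	"strings"
)

// Agent is a hypothetical entity; the struct tag marks its primary key.
type Agent struct {
	AgentID string `gorm:"primaryKey"`
	IP      string
}

// primaryKeyOf returns the name and value of the first exported field whose
// gorm tag contains the primaryKey option. It accepts a struct or a pointer
// to a struct.
func primaryKeyOf(entity interface{}) (string, interface{}, error) {
	v := reflect.Indirect(reflect.ValueOf(entity))
	if v.Kind() != reflect.Struct {
		return "", nil, fmt.Errorf("expected a struct or pointer to struct, got %T", entity)
	}
	t := v.Type()
	for i := 0; i < t.NumField(); i++ {
		f := t.Field(i)
		if !f.IsExported() {
			continue // unexported fields cannot be read through reflection
		}
		// A gorm tag may hold several options separated by ';'.
		for _, opt := range strings.Split(f.Tag.Get("gorm"), ";") {
			if strings.EqualFold(strings.TrimSpace(opt), "primaryKey") {
				return f.Name, v.Field(i).Interface(), nil
			}
		}
	}
	return "", nil, fmt.Errorf("no primaryKey field on %s", t.Name())
}

func main() {
	name, value, err := primaryKeyOf(&Agent{AgentID: "agent-1", IP: "10.0.0.1"})
	if err != nil {
		panic(err)
	}
	fmt.Println(name, value)
}
```

With the key in hand, a generic `Get` or `Delete` can build its lookup condition without knowing the concrete entity type, which is what lets one implementation serve every table.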

The Database interface defines the WriteBatch method, which is intended to process heartbeat requests in batches to improve database write throughput. For MySQL, the corresponding approach would be to use database transactions. In practice, however, our DBAs found that updating a batch of heartbeat data within one transaction could make the transaction too large and lead to database deadlocks. Discontinuing the use of WriteBatch resolved the issue. By default, the heartbeat interval is 10 seconds. Even if each heartbeat request performs one write, the TPS (Transactions Per Second) stays in the hundreds at a scale of thousands of Agents: for example, 3,000 Agents divided by a 10-second interval gives about 300 writes per second. Therefore, the database pressure is not particularly high.

This solution is perhaps the simplest and most direct. The Agent reports its CPU usage and memory consumption in bytes within the extras field of the heartbeat request. The Config Server then saves this information to a MySQL database. Additionally, a scheduled task is set up to take snapshots. Multiple snapshots form a time series, which can be visualized for trend analysis, aiding in problem identification and resolution.

The above solutions address how to store a large volume of logs in Elasticsearch, fulfilling half of the user requirements. The remaining half is to provide query services to users, which necessitates a user interface.

Theoretically, Kibana can offer data visualization capabilities, but it is a general-purpose tool designed primarily for administrators, not one tailored for log services. It suits small teams and single clusters, and falls short of some core enterprise needs, such as internal system integration, multi-user role-based access control, Logstore configuration, and management of multiple Elasticsearch clusters. Therefore, to provide an enterprise-level unified interface, a custom minimal log query platform needs to be developed to complement the log collection process, closing the loop and forming a complete solution. However, this part is largely unrelated to iLogtail and will be skipped here.

After addressing the five major issues involved in replacing Logstash with iLogtail, we can confidently state that iLogtail now forms a small closed loop of its own across configuration management, collection, and feedback. This means it can operate with minimal dependency on other tools and stands out technologically as the best alternative to Filebeat and Logstash.

The version of iLogtail we are using is based on the open-source 1.8.0. Since its official launch at the end of February 2024, it has been running stably for over three months, handling peak loads of more than ten billion logs per hour, with data volumes reaching the terabyte level. The service supports thousands of business application pods and utilizes over a hundred Elasticsearch storage nodes.

Currently, these code changes involve modifications to the communication protocol in some implementation details, which require ongoing communication with community leaders. We hope that some of these changes will be merged into the main branch of iLogtail in the near future.

Of the five major issues, aside from the first one related to the Elasticsearch flusher, the other four are deeply intertwined with the Config Server. Therefore, I once said in the community DingTalk group: "Config Server is the pearl on the crown", which means that it holds significant user value. I hope that community members will continue to work together in this area.
