By Dumin

As the requirements for observable data collection continue to evolve, diverse data input and output options, customizable data processing, and high-throughput processing have become prerequisites for a top-tier observable data collector. However, for historical reasons, the existing iLogtail architecture and collection configuration structure can no longer meet these requirements and have gradually become a bottleneck restricting the rapid evolution of iLogtail:

▶ iLogtail was initially designed for scenarios where file logs are collected to Simple Log Service:

(1) It simply divides logs into multiple formats. Each format of logs only supports one processing method (such as parsing in regex mode and JSON parsing).

(2) Function implementation is strongly bound to concepts related to Simple Log Service (such as Logstore).

Based on this design concept, the existing iLogtail architecture leans towards a monolith, which results in tight coupling between modules, poor scalability and generality, and difficulty in cascading multiple processing steps.

▶ The introduction of the Golang plug-in system greatly expands the input and output channels of iLogtail, improving the processing capability to a certain extent. However, due to the implementation of the C++ part, the ability to combine I/O with the processing modules is still severely limited:

(1) The native high-performance processing capabilities of the C++ part are still limited to scenarios where log files are collected and delivered to Simple Log Service.

(2) The processing capabilities of the C++ part cannot be combined with those of the plug-in system. Only one of the two can be used at a time, which lowers performance in complex log processing scenarios.

▶ The existing iLogtail collection configuration structure mirrors the iLogtail architecture: it also uses a flat (tiled) structure, which lacks the concept of processing pipelines and cannot express the semantics of cascaded processing.

For these reasons, on the 10th anniversary of iLogtail, Simple Log Service started to upgrade iLogtail, aiming to make it easier to use, more scalable, and better performing, so as to better serve our users.

After more than half a year of reconstruction and optimization, iLogtail 2.0 is ready to be released. Let's dive into the new features of iLogtail 2.0!

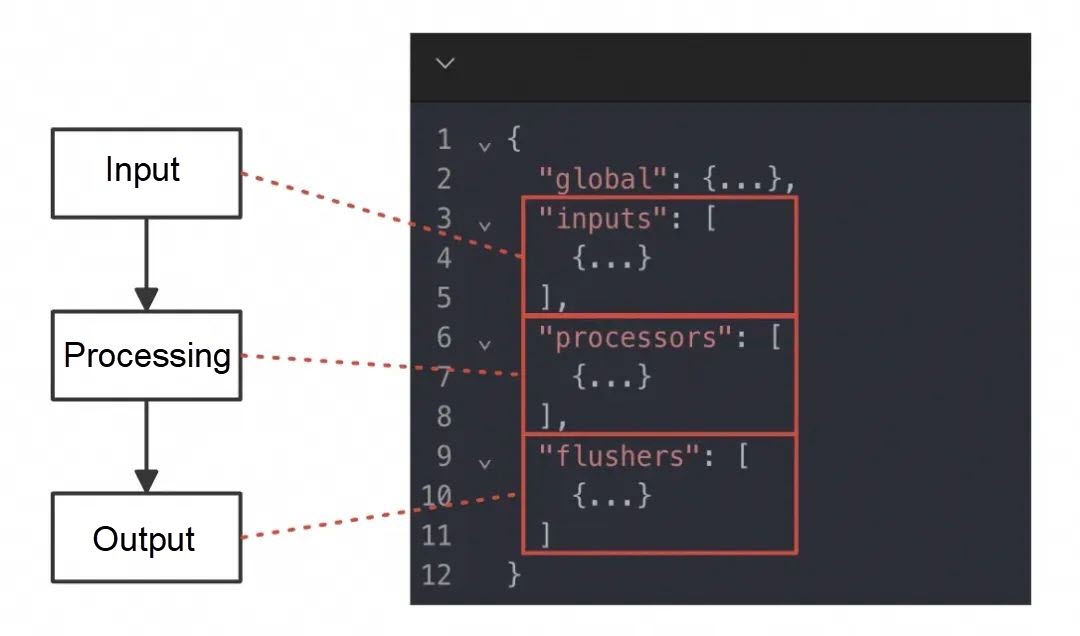

To address the limitation of the previous flat (tiled) structure in expressing complex collection behaviors, iLogtail 2.0 introduces a new pipeline configuration. Each configuration corresponds to a processing pipeline, consisting of an input module, a processing module, and an output module. Each module comprises multiple plugins with the following functions:

A JSON object can be used to represent a pipeline configuration:

The inputs, processors, and flushers represent the input module, processing module, and output module. Each element {...} in the list represents a plug-in. Global represents some configurations of the pipeline. For more information about the pipeline configuration structure, see iLogtail pipeline configuration structure [3].
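As a rough illustration of the structure described above, the following Python sketch assembles a pipeline configuration as a plain dictionary and prints it as JSON. The helper `build_pipeline_config` is hypothetical, the plug-in names are the ones that appear elsewhere in this article, and the lowercase `global` key is an assumption; see the pipeline configuration structure [3] for the authoritative field names.

```python
import json


def build_pipeline_config(name, inputs, processors, flushers, global_options=None):
    """Assemble a pipeline configuration as a plain dict (hypothetical helper)."""
    config = {
        "configName": name,
        "inputs": list(inputs),          # input module: list of input plug-ins
        "processors": list(processors),  # processing module: ordered list of plug-ins
        "flushers": list(flushers),      # output module: list of output plug-ins
    }
    if global_options:
        # Assumption: pipeline-level settings live under a "global" key.
        config["global"] = dict(global_options)
    return config


config = build_pipeline_config(
    name="test-config",
    inputs=[{"Type": "input_file", "FilePaths": ["/var/log/test.log"]}],
    processors=[{"Type": "processor_parse_json_native", "SourceKey": "content"}],
    flushers=[{"Type": "flusher_sls", "Logstore": "test_logstore"}],
)
print(json.dumps(config, indent=2))
```

Each list element is one plug-in, so cascading another processing step only means appending one more dictionary to `processors`.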

Example: Collect test.log in the /var/log directory, parse the logs in JSON format, and send the logs to Simple Log Service. The old and new configurations for this collection requirement are as follows. The new configuration is far more concise, and the operations it performs are clear at a glance.

Old configuration:

    {
      "configName": "test-config",
      "inputType": "file",
      "inputDetail": {
        "topicFormat": "none",
        "priority": 0,
        "logPath": "/var/log",
        "filePattern": "test.log",
        "maxDepth": 0,
        "tailExisted": false,
        "fileEncoding": "utf8",
        "logBeginRegex": ".*",
        "dockerFile": false,
        "dockerIncludeLabel": {},
        "dockerExcludeLabel": {},
        "dockerIncludeEnv": {},
        "dockerExcludeEnv": {},
        "preserve": true,
        "preserveDepth": 1,
        "delaySkipBytes": 0,
        "delayAlarmBytes": 0,
        "logType": "json_log",
        "timeKey": "",
        "timeFormat": "",
        "adjustTimezone": false,
        "logTimezone": "",
        "filterRegex": [],
        "filterKey": [],
        "discardNonUtf8": false,
        "sensitive_keys": [],
        "mergeType": "topic",
        "sendRateExpire": 0,
        "maxSendRate": -1,
        "localStorage": true
      },
      "outputType": "LogService",
      "outputDetail": {
        "logstoreName": "test_logstore"
      }
    }

New configuration:

    {
      "configName": "test-config",
      "inputs": [
        {
          "Type": "file_log",
          "FilePaths": "/var/log/test.log"
        }
      ],
      "processors": [
        {
          "Type": "processor_parse_json_native",
          "SourceKey": "content"
        }
      ],
      "flushers": [
        {
          "Type": "flusher_sls",
          "Logstore": "test_logstore"
        }
      ]
    }

If further processing is required after JSON parsing, you only need to add another processing plug-in to the pipeline configuration. The old configuration cannot express this requirement at all.

For more information about the compatibility between the new pipeline configuration and the old configuration, see the Compatibility Description section at the end of this article.

To support the new pipeline configuration and distinguish it from the old configuration structure, we provide new APIs for managing pipeline configuration, including:

For more information about these interfaces, see OpenAPI documentation[4].

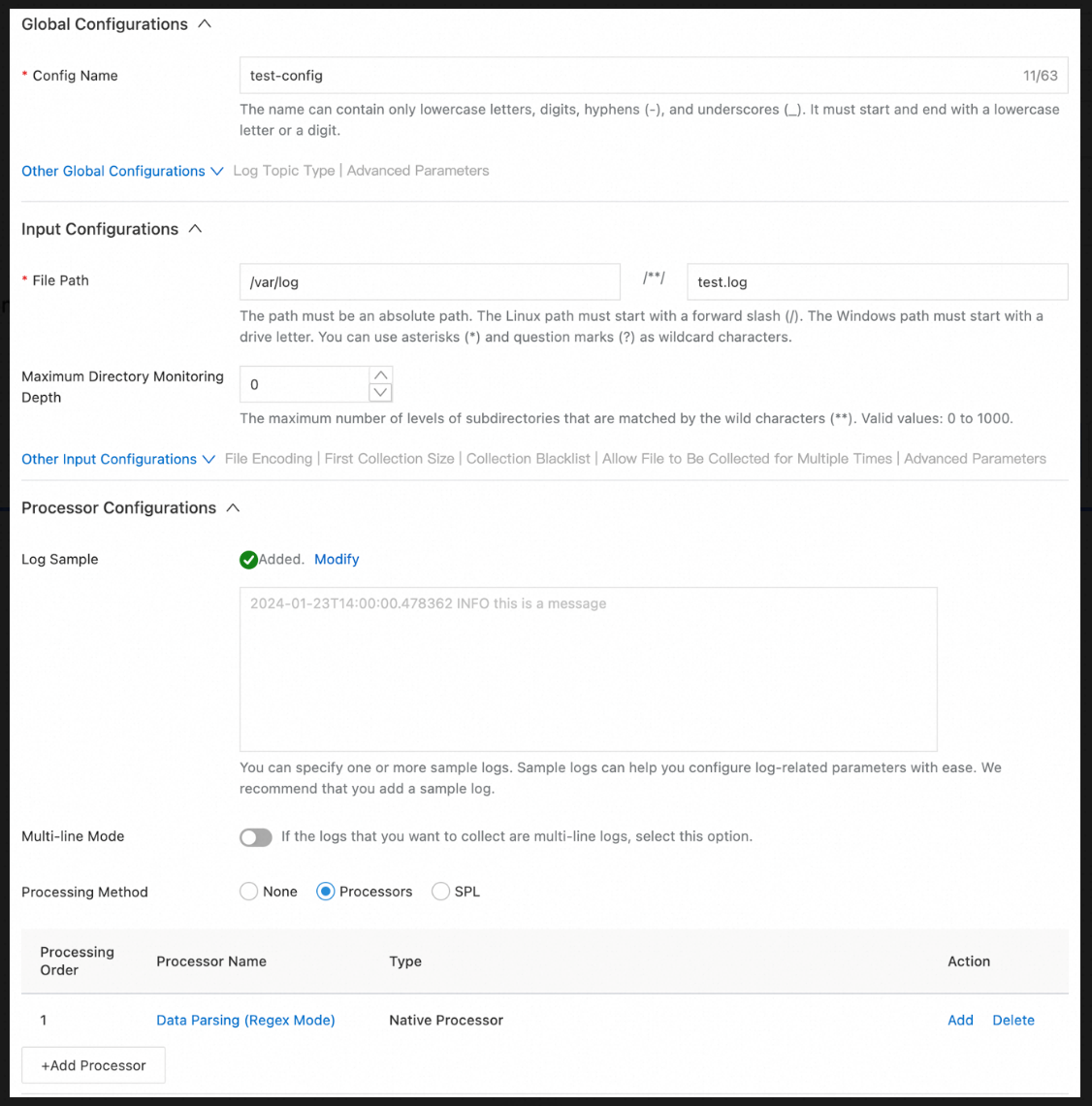

To match the pipeline collection configuration structure, the front-end console has also been upgraded. It is now divided into global configuration, input configuration, processing configuration, and output configuration.

Compared with the old console, the new console has the following features:

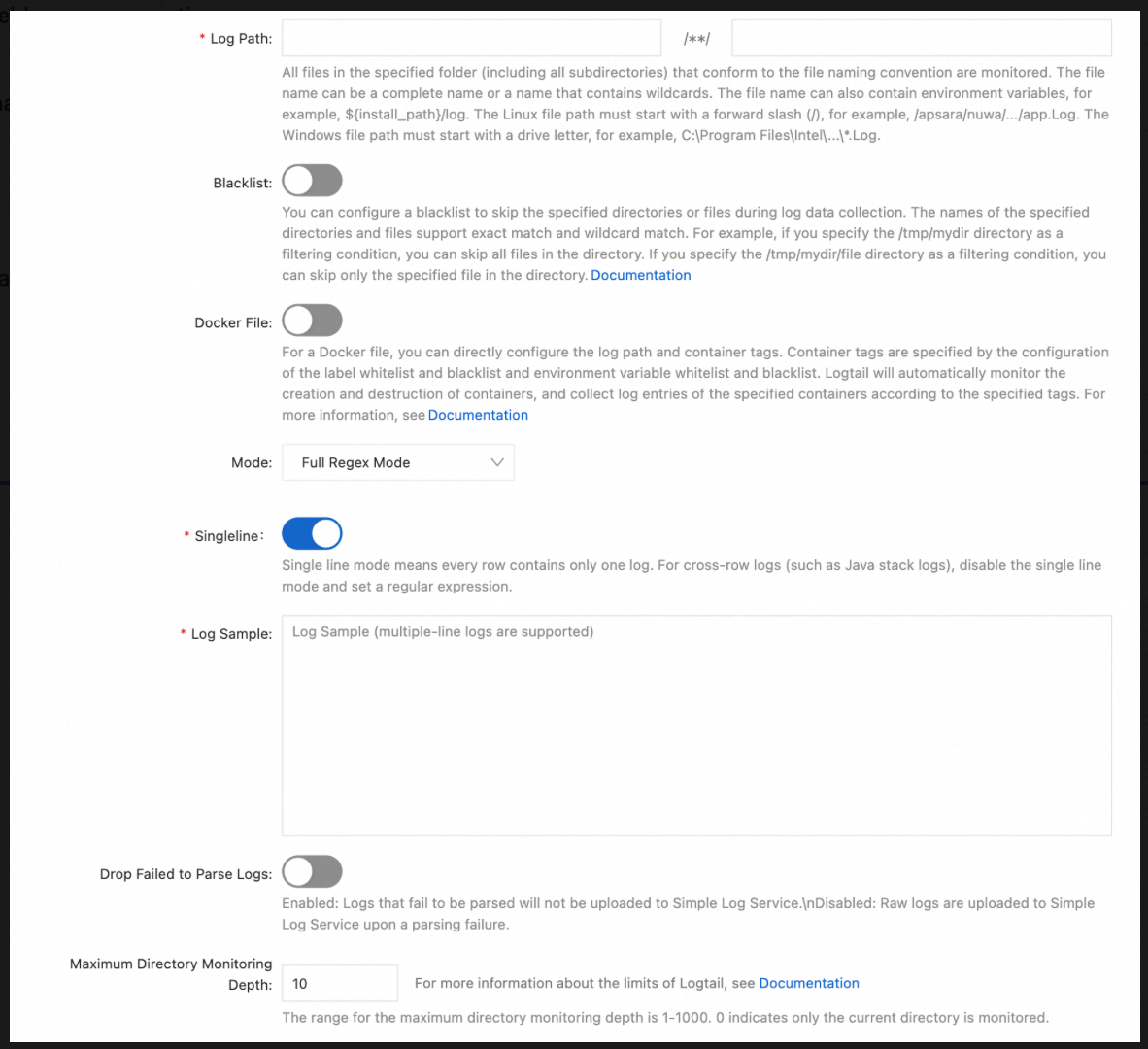

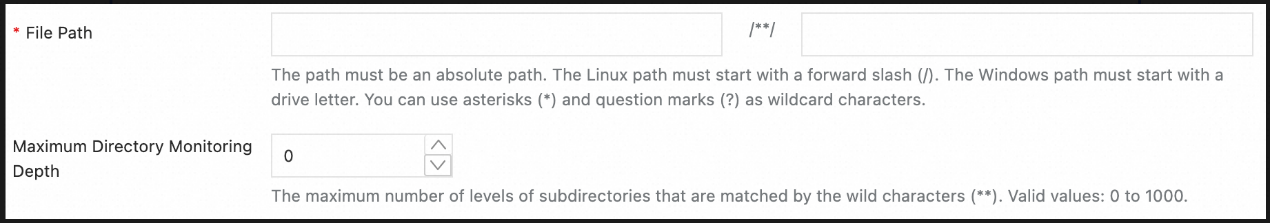

Parameter cohesion: Parameters related to a feature are displayed together, which avoids the missed settings caused by scattered parameters in the old console.

Example: The maximum directory monitoring depth is closely related to the ** in the log path. In the old console, these two parameters are far apart, so one of them is easy to forget. In the new console, they are placed together and are easier to understand.

All parameters are valid: In the old console, some console parameters become invalid after plug-in processing is enabled, which causes unnecessary misunderstanding. All parameters in the new console are valid.

Similarly, corresponding to the new collection configuration, the CRD resources corresponding to the collection configuration in the Kubernetes scenario are also upgraded. Compared with the old CRDs, the new CRDs have the following features:

    apiVersion: log.alibabacloud.com/v1alpha1
    kind: ClusterAliyunLogConfig
    metadata:
      name: test-config
    spec:
      project:
        name: test-project
      logstore:
        name: test-logstore
      machineGroup:
        name: test-machine_group
      config:
        inputs:
          - Type: input_file
            FilePaths:
              - /var/log/test.log
        processors:
          - Type: processor_parse_json_native
            SourceKey: content

In text log collection scenarios, when your logs are complex and need to be parsed multiple times, are you confused because you can only use extended processing plug-ins? Are you worried about the performance loss and various inconsistencies caused by this?

Upgrade to iLogtail 2.0, and the above problems will be a thing of the past!

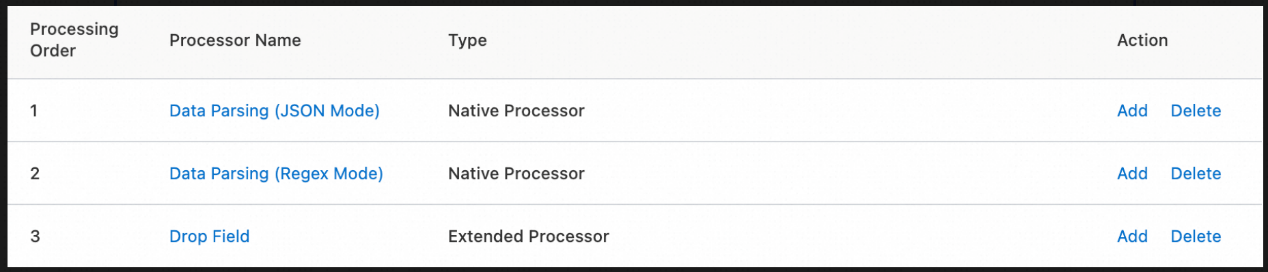

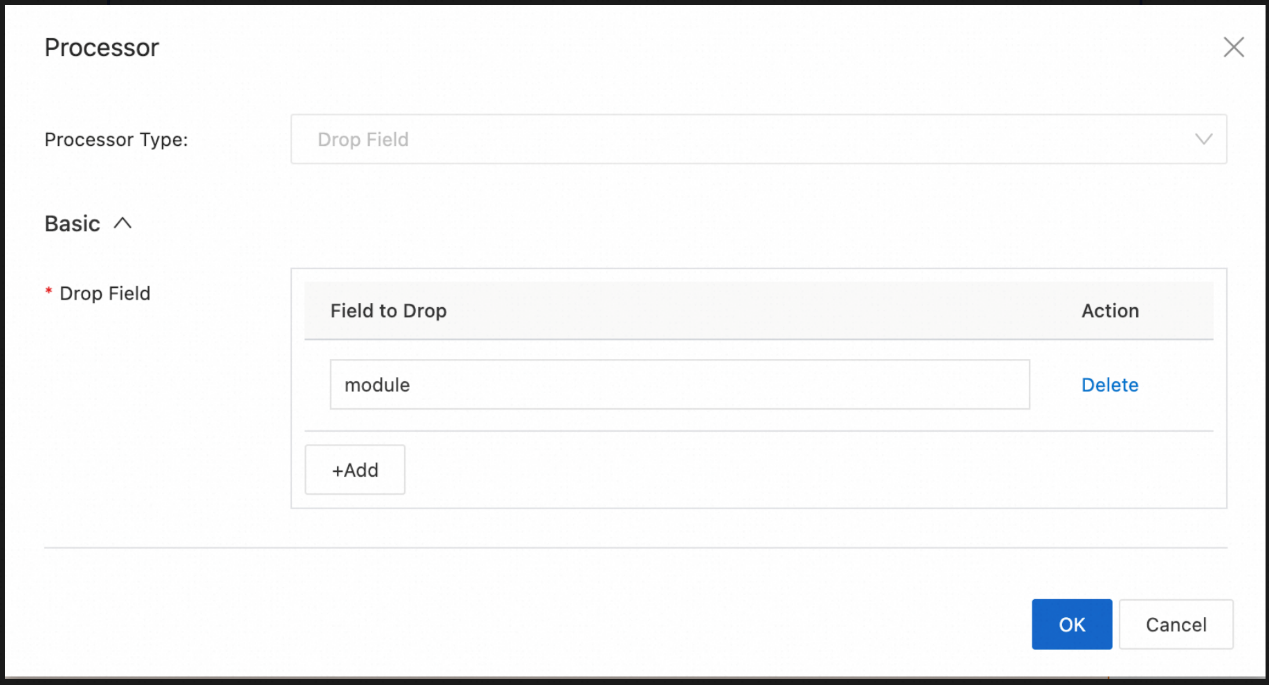

The processing pipeline of iLogtail 2.0 supports the new cascade mode. Compared with the 1.x series, iLogtail 2.0 has the following capabilities:

Note: An extended processing plug-in can only appear after all native processing plug-ins, but not before any native processing plug-in.
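The ordering rule in this note can be checked mechanically before a configuration is submitted. The sketch below is only an illustration, not part of iLogtail: it assumes that native plug-ins can be recognized by the `_native` suffix that the plug-in names in this article share, and the function names are hypothetical.

```python
def is_native(plugin):
    """Assumption: native plug-ins are identified by a "_native" suffix in Type."""
    return plugin["Type"].endswith("_native")


def first_misplaced_native(processors):
    """Return the index of the first native plug-in that follows an extended
    plug-in, or None if the processor list respects the ordering rule."""
    seen_extended = False
    for index, plugin in enumerate(processors):
        if is_native(plugin):
            if seen_extended:
                return index
        else:
            seen_extended = True
    return None


valid = [
    {"Type": "processor_parse_json_native"},
    {"Type": "processor_parse_regex_native"},
    {"Type": "processor_drop"},
]
invalid = [
    {"Type": "processor_drop"},
    {"Type": "processor_parse_json_native"},
]
print(first_misplaced_native(valid))    # no violation
print(first_misplaced_native(invalid))  # index of the offending native plug-in
```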

Example: If your text log is as follows:

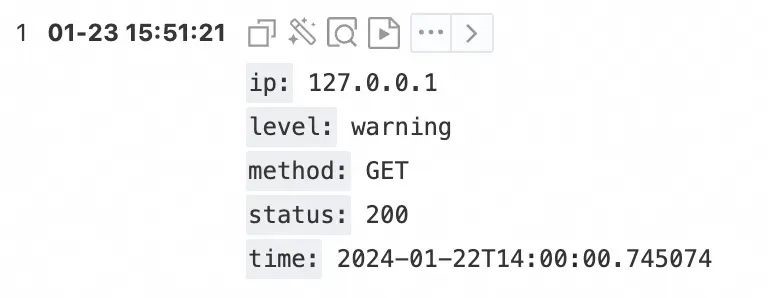

{"time": "2024-01-22T14:00:00.745074", "level": "warning", "module": "box", "detail": "127.0.0.1 GET 200"}

You need to parse the time, level, and module fields. You also need to apply regex parsing to the detail field to split it into the ip, method, and status fields, and then discard the module field. In this case, you can use the Data Parsing (JSON Mode) native plug-in, the Data Parsing (Regex Mode) native plug-in, and the Drop Field extended plug-in in sequence to meet the requirements.

[Commercial Edition]

[Open Source Edition]

    {
      "configName": "test-config",
      "inputs": [...],
      "processors": [
        {
          "Type": "processor_parse_json_native",
          "SourceKey": "content"
        },
        {
          "Type": "processor_parse_regex_native",
          "SourceKey": "detail",
          "Regex": "(\\S+)\\s(\\S+)\\s(.*)",
          "Keys": [
            "ip",
            "method",
            "status"
          ]
        },
        {
          "Type": "processor_drop",
          "DropKeys": [
            "module"
          ]
        }
      ],
      "flushers": [...]
    }

The results are as follows:

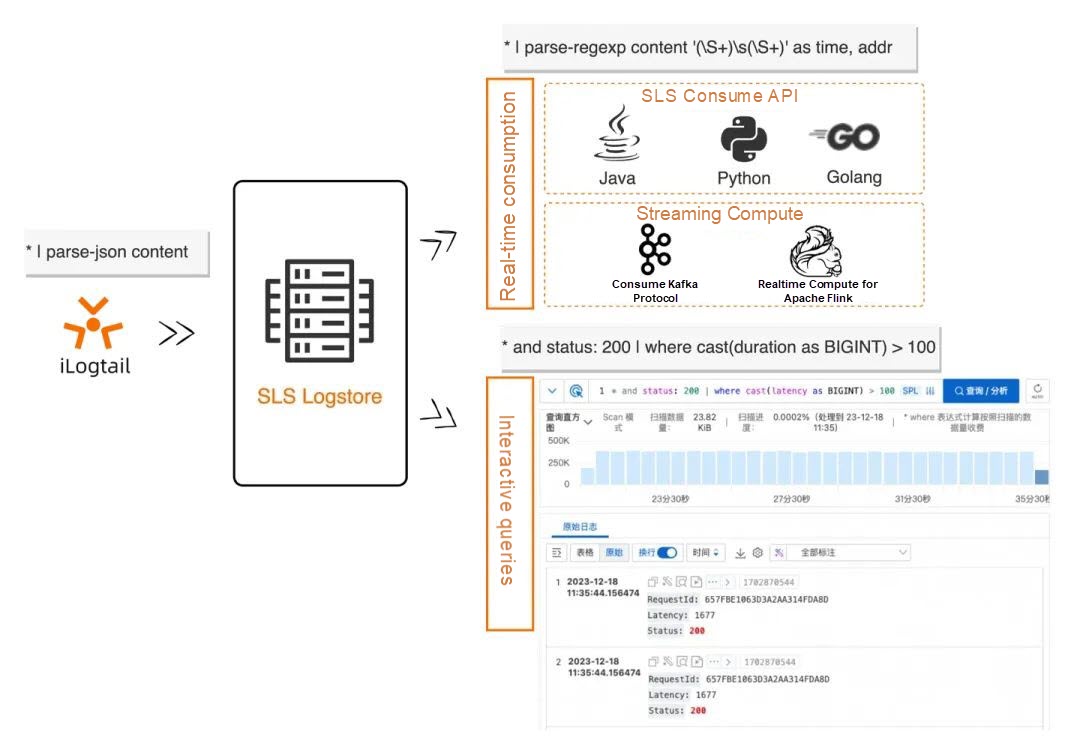
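To make the cascade easier to follow, here is a minimal Python simulation of the three steps applied to the sample log. It is not iLogtail code. The helper functions are hypothetical, the regex places the `+` quantifier inside each capture group so that whole tokens are captured, and dropping the source field after a successful parse is an assumption about the default behavior.

```python
import json
import re


def parse_json(event, source_key):
    """Expand the JSON text in source_key into top-level fields."""
    fields = json.loads(event[source_key])
    rest = {k: v for k, v in event.items() if k != source_key}
    rest.update(fields)
    return rest


def parse_regex(event, source_key, pattern, keys):
    """Split source_key into named fields using the regex capture groups."""
    match = re.fullmatch(pattern, event[source_key])
    if match is None:
        return event  # leave the event untouched when the regex does not match
    rest = {k: v for k, v in event.items() if k != source_key}
    rest.update(zip(keys, match.groups()))
    return rest


def drop_fields(event, drop_keys):
    """Remove the listed fields from the event."""
    return {k: v for k, v in event.items() if k not in drop_keys}


raw = ('{"time": "2024-01-22T14:00:00.745074", "level": "warning", '
       '"module": "box", "detail": "127.0.0.1 GET 200"}')
event = {"content": raw}
event = parse_json(event, "content")
event = parse_regex(event, "detail", r"(\S+)\s(\S+)\s(.*)", ["ip", "method", "status"])
event = drop_fields(event, ["module"])
print(event)
```

After the three steps, the event holds time, level, ip, method, and status. The module and detail fields are gone.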

In addition to combining processing plug-ins, iLogtail 2.0 adds the SPL (SLS Processing Language) processing mode. SPL is the unified syntax that Simple Log Service provides for query, client-side processing, and data processing, and iLogtail uses it here to process data on the client. The advantages of using the SPL processing mode are:

    <data-source>
    | <spl-cmd> -option=<option> -option ... <expression>, ... as <output>, ...
    | <spl-cmd> ...
    | <spl-cmd> ...

    *
    | extend latency=cast(latency as BIGINT)
    | where status='200' AND latency>100

    *
    | project-csv -delim='^_^' content as time, body
    | project-regexp body, '(\S+)\s+(\w+)' as msg, user

For native parsing plug-ins, iLogtail 2.0 provides finer-grained parsing control, including the following parameters:

Example: If you want to retain this field in the log and rename it as raw when the log field content fails to be parsed, you can configure the following parameters:
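The behavior this example asks for can be modeled in a few lines of Python. This is a sketch under assumptions rather than iLogtail's implementation: `keep_source_on_fail` stands in for the KeepingSourceWhenParseFail parameter, `renamed_source_key` stands in for a rename parameter (assumed here to be called RenamedSourceKey, a name that does not appear in this article), and a failed parse without either option is modeled as simply dropping the source field.

```python
import json


def parse_json_with_fallback(event, source_key,
                             keep_source_on_fail=False,
                             renamed_source_key=None):
    """Parse event[source_key] as a JSON object; on failure, optionally keep
    the original text, stored under renamed_source_key if one is given."""
    raw = event[source_key]
    rest = {k: v for k, v in event.items() if k != source_key}
    try:
        fields = json.loads(raw)
        if not isinstance(fields, dict):
            raise ValueError("top-level JSON value is not an object")
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        if keep_source_on_fail:
            rest[renamed_source_key or source_key] = raw
        return rest
    rest.update(fields)
    return rest


bad = {"content": "not a json line"}
print(parse_json_with_fallback(bad, "content",
                               keep_source_on_fail=True,
                               renamed_source_key="raw"))
```

With both options set, the unparsable text survives under the raw field instead of being lost.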

In iLogtail 1.x, if you want to extract the time field to nanosecond precision, Simple Log Service can only add the nanosecond timestamp field to your logs. In iLogtail 2.0, nanosecond information is directly appended to the log collection time (__time__) without the need to add additional fields. This not only reduces unnecessary log storage space but also facilitates the sorting of logs based on nanosecond time precision in the Simple Log Service console.

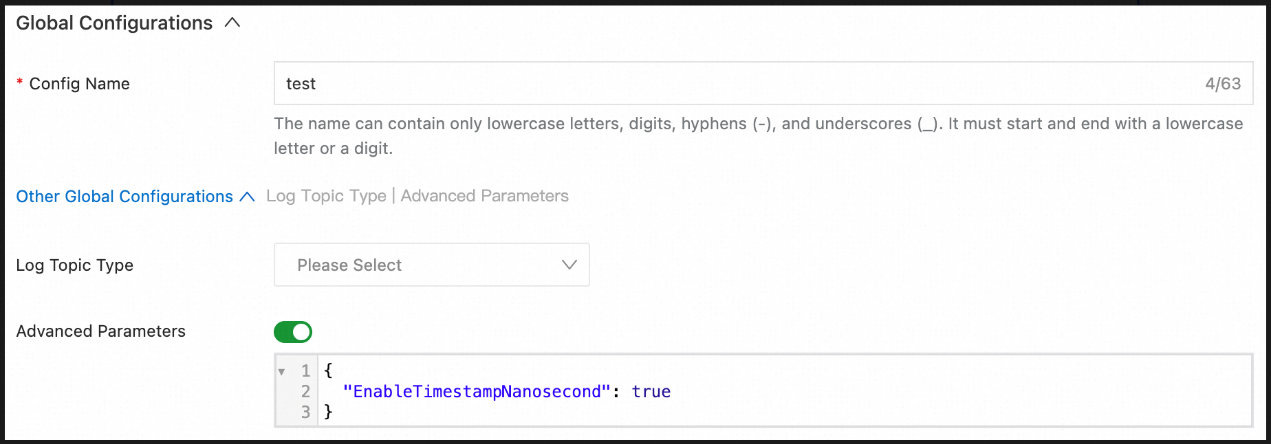

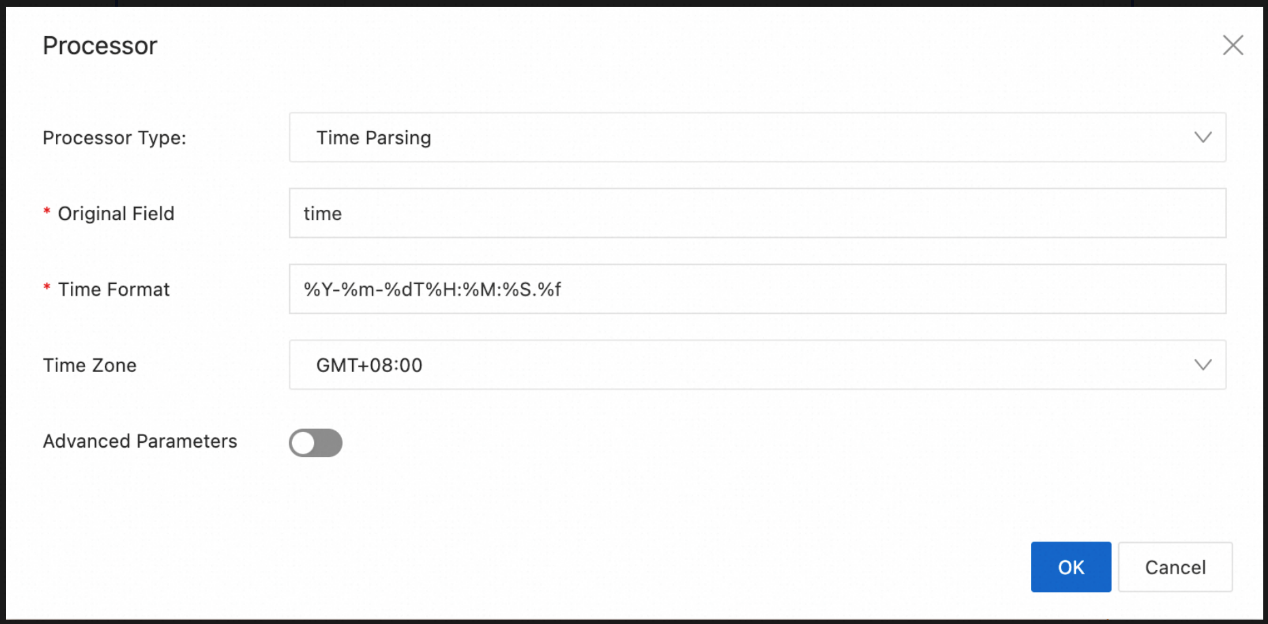

To extract time fields from logs to nanosecond precision in iLogtail 2.0, you need to first configure the native time parsing plug-in. Next, add .%f to the end of SourceFormat. Then, add "EnableTimestampNanosecond": true to the global parameters.

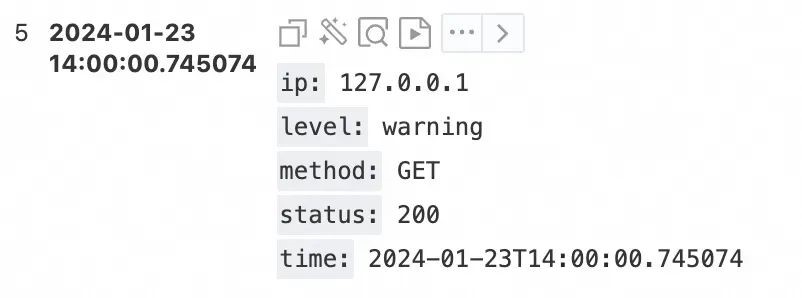

Example: A log contains the time field, whose value is 2024-01-23T14:00:00.745074, and the time zone is UTC+8. You need to parse the time to nanosecond precision and set __time__ to this value.

The results are as follows:

Note: iLogtail 2.0 no longer supports the nanosecond timestamp extraction method used in versions 1.x. If you used that feature in versions 1.x, you must manually enable the new nanosecond timestamp extraction feature after you upgrade to iLogtail 2.0. For more information, see the Compatibility Description at the end of this article.
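To see what nanosecond-precision parsing yields for the sample value above, the following Python sketch converts 2024-01-23T14:00:00.745074 in UTC+8 into whole seconds since the Unix epoch plus a nanosecond part. It only illustrates the arithmetic. The function `parse_time_ns` is hypothetical, and Python's `%f` directive reads at most six fractional digits, so the nanosecond part here is the microsecond value scaled by 1000.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def parse_time_ns(value, source_format, utc_offset_hours):
    """Return (seconds since epoch, nanosecond part) for a local timestamp."""
    local = datetime.strptime(value, source_format)
    aware = local.replace(tzinfo=timezone(timedelta(hours=utc_offset_hours)))
    delta = aware - EPOCH
    # Integer arithmetic avoids the rounding that float timestamps introduce.
    seconds = delta.days * 86400 + delta.seconds
    nanoseconds = delta.microseconds * 1000
    return seconds, nanoseconds


seconds, nanoseconds = parse_time_ns(
    "2024-01-23T14:00:00.745074", "%Y-%m-%dT%H:%M:%S.%f", 8
)
print(seconds, nanoseconds)  # 1705989600 745074000
```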

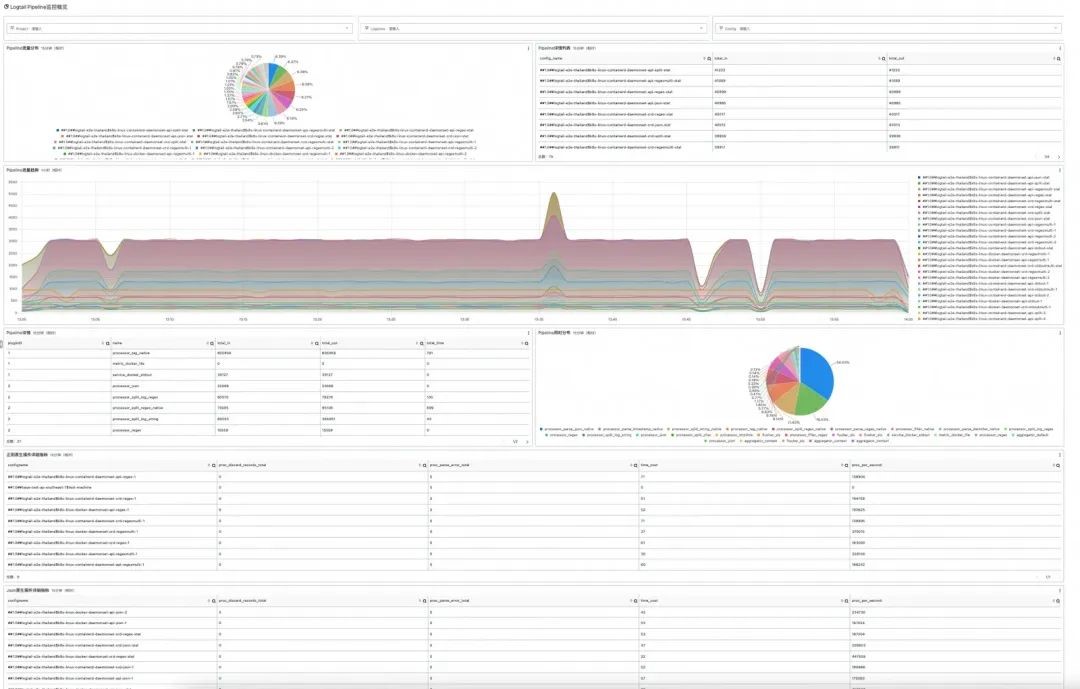

Compared with the simple metrics exposed by iLogtail 1.x, iLogtail 2.0 greatly improves its observability:

iLogtail 2.0 supports C++17 syntax. The C++ compiler is upgraded to GCC 9, and the versions of the C++ dependency libraries are updated. This makes iLogtail faster and more secure.

Table: Performance of iLogtail 2.0 in single-threaded log processing (the length of a single log is 1 KB in this example)

| Scenario | CPU (cores) | Memory (MB) | Processing Rate (MB/s) |
| --- | --- | --- | --- |
| Single-line Log Collection | 1.06 | 33 | 400 |
| Multi-line Log Collection | 1.04 | 33 | 150 |

There are a few incompatibilities between the new collection configuration and the old collection configuration. For more information, see iLogtail 2.0 collection configuration incompatibility change description [5].

When using extended plugins to process logs, iLogtail 1.x stored some tags in common log fields for implementation reasons. This caused inconvenience when using features like query, search, and consumption in the Simple Log Service console. To resolve this, iLogtail 2.0 will return all tags to their original locations by default. If you still want to maintain the 1.x behavior, add "UsingOldContentTag": true to the global parameters in the configuration.

Version 2.0 no longer supports the PreciseTimestampKey and PreciseTimestampUnit parameters from versions 1.x. After upgrading to iLogtail 2.0, the previous nanosecond timestamp extraction function will no longer work. If you still need to parse nanosecond timestamps, manually update the configuration based on the Log Time Parsing Supports Nanosecond Accuracy section (2.5).

The native Apsara parsing plugin in version 2.0 no longer supports the AdjustingMicroTimezone parameter from versions 1.x. By default, the microsecond timestamp is adjusted to the correct time zone based on the configured time zone.

For native parsing plugins, in addition to the three parameters mentioned in the Finer-grained Log Parsing Control section (2.4), there is also the CopyingRawLog parameter. This parameter is only valid when both KeepingSourceWhenParseFail and KeepingSourceWhenParseSucceed are true. It adds an additional raw_log field to the log when parsing fails, containing the content that failed to be parsed.

This parameter is included for compatibility with earlier configurations. After upgrading to iLogtail 2.0, it is recommended to delete this parameter to reduce unnecessary duplicate log uploads.
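The condition under which CopyingRawLog takes effect can be written down as a small predicate. The sketch below simply restates the rule described above in Python. The function names are hypothetical, and treating every parameter as false when it is absent is an assumption.

```python
def copying_raw_log_applies(params, parse_failed):
    """CopyingRawLog only has an effect when both Keeping* flags are true
    and the current log failed to parse."""
    return bool(
        params.get("CopyingRawLog", False)
        and params.get("KeepingSourceWhenParseFail", False)
        and params.get("KeepingSourceWhenParseSucceed", False)
        and parse_failed
    )


def add_raw_log(event, raw, params, parse_failed):
    """Return a copy of the event with a raw_log field when the rule applies."""
    result = dict(event)
    if copying_raw_log_applies(params, parse_failed):
        result["raw_log"] = raw
    return result


params = {
    "CopyingRawLog": True,
    "KeepingSourceWhenParseFail": True,
    "KeepingSourceWhenParseSucceed": True,
}
print(add_raw_log({"content": "oops"}, "oops", params, parse_failed=True))
```

Because the unparsed text is then uploaded twice, once in the kept source field and once in raw_log, removing the parameter after the upgrade avoids the duplicate.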

The goal of Simple Log Service is to provide users with a comfortable and convenient user experience. Compared to iLogtail 1.x, the changes in iLogtail 2.0 are more noticeable, but they are just the beginning of iLogtail's journey towards a modern observable data collector. We highly recommend trying iLogtail 2.0 if feasible. You may experience some initial discomfort during the transition, but we believe that you will soon be drawn to the more powerful features and improved performance of iLogtail 2.0.

[1] Input plug-ins

https://www.alibabacloud.com/help/en/sls/user-guide/overview-19

[2] Processing plug-ins

https://www.alibabacloud.com/help/en/sls/user-guide/overview-22

[3] iLogtail pipeline configuration structure

https://next.api.aliyun.com/struct/Sls/2020-12-30/LogtailPipelineConfig?spm=api-workbench.api_explorer.0.0.65e61a47jWtoir

[4] OpenAPI documentation

https://next.api.aliyun.com/document/Sls/2020-12-30/CreateLogtailPipelineConfig?spm=api-workbench.api_explorer.0.0.65e61a47jWtoir

[5] iLogtail 2.0 collection configuration incompatibility change description

https://github.com/alibaba/ilogtail/discussions/1294
