By Weilong Pan (Huolang)

Simple Log Service (SLS) is a cloud-native observability and analysis platform that provides large-scale, low-cost, real-time services for log, metric, and trace data. Once system logs and business logs are ingested into SLS through its data access features, they can be stored and analyzed there. Alibaba Cloud Realtime Compute for Apache Flink is a big data analysis platform that Alibaba Cloud built on Apache Flink. It is widely used for real-time data analysis and risk detection. Realtime Compute for Apache Flink supports the SLS Connector, which lets you use SLS as a source table or a result table on the platform.

The SLS Connector for Alibaba Cloud Realtime Compute for Apache Flink structures log data: through configuration, it maps SLS log fields directly to the columns of a Flink SQL table. However, a significant portion of business logs is not fully structured. For example, all log content might be written into a single field, so structured fields have to be extracted with methods such as regular expressions and delimiter splitting. This article describes how to configure SLS SPL (SLS Processing Language) in the SLS Connector to cleanse log data and standardize its format.

Log data often comes from multiple sources in various formats and usually lacks a fixed schema. Therefore, before processing and analyzing the data, we need to cleanse and standardize it to ensure a consistent format. The data can be in different formats such as JSON strings, CSV, or irregular Java stack logs.

Flink SQL is a real-time computing model that supports SQL syntax and enables analysis of structured data. However, it requires a fixed schema for the source data, including fixed field names, types, and quantities. This requirement serves as the foundation of the SQL computing model.

There exists a gap between the weakly structured nature of log data and the structured analysis capability of Flink SQL. Consequently, a middle layer is necessary to bridge the gap and perform data cleansing and standardization. Several options are available for this middle layer. In the following section, we will briefly compare different solutions and propose a new approach based on SLS SPL to address the lightweight data cleansing and standardization needs.

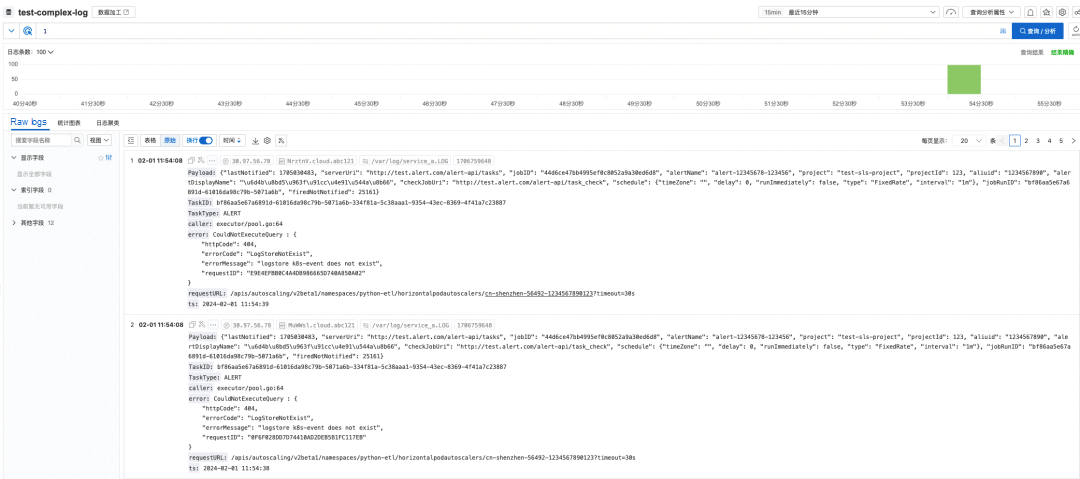

The following is an example of a log. Its format is complex: the Payload field is a JSON string, and the error field mixes plain text with an embedded JSON string.

    {
      "Payload": "{\"lastNotified\": 1705030483, \"serverUri\": \"http://test.alert.com/alert-api/tasks\", \"jobID\": \"44d6ce47bb4995ef0c8052a9a30ed6d8\", \"alertName\": \"alert-12345678-123456\", \"project\": \"test-sls-project\", \"projectId\": 123, \"aliuid\": \"1234567890\", \"alertDisplayName\": \"\\u6d4b\\u8bd5\\u963f\\u91cc\\u4e91\\u544a\\u8b66\", \"checkJobUri\": \"http://test.alert.com/alert-api/task_check\", \"schedule\": {\"timeZone\": \"\", \"delay\": 0, \"runImmediately\": false, \"type\": \"FixedRate\", \"interval\": \"1m\"}, \"jobRunID\": \"bf86aa5e67a6891d-61016da98c79b-5071a6b\", \"firedNotNotified\": 25161}",
      "TaskID": "bf86aa5e67a6891d-61016da98c79b-5071a6b-334f81a-5c38aaa1-9354-43ec-8369-4f41a7c23887",
      "TaskType": "ALERT",
      "__source__": "11.199.97.112",
      "__tag__:__hostname__": "iabcde12345.cloud.abc121",
      "__tag__:__path__": "/var/log/service_a.LOG",
      "caller": "executor/pool.go:64",
      "error": "CouldNotExecuteQuery : {\n \"httpCode\": 404,\n \"errorCode\": \"LogStoreNotExist\",\n \"errorMessage\": \"logstore k8s-event does not exist\",\n \"requestID\": \"65B7C10AB43D9895A8C3DB6A\"\n}",
      "requestURL": "/apis/autoscaling/v2beta1/namespaces/python-etl/horizontalpodautoscalers/cn-shenzhen-56492-1234567890123?timeout=30s",
      "ts": "2024-01-29 22:57:13"
    }

Data cleansing is required to extract more valuable information from such logs. First, we need to extract important fields and then perform data analysis on these fields. This article focuses on the extraction of important fields. The analysis can still be performed in Flink.
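A minimal Python sketch, using an abbreviated copy of the sample log, shows why the Payload and error fields need dedicated extraction steps. Python is used here only for local illustration. It is not how SLS or Flink processes the data, and the variable names are assumptions.

```python
import json

# Abbreviated copy of the sample log: every value arrives as a plain string.
log = {
    "Payload": '{"project": "test-sls-project", "schedule": {"type": "FixedRate", "interval": "1m"}}',
    "error": 'CouldNotExecuteQuery : {\n "httpCode": 404,\n "errorCode": "LogStoreNotExist"\n}',
}

# Payload is a JSON document serialized into a string, so it needs its own decode step.
payload = json.loads(log["Payload"])
schedule_type = payload["schedule"]["type"]

# error is not valid JSON as a whole: a text prefix precedes the JSON body.
try:
    json.loads(log["error"])
    error_is_json = True
except json.JSONDecodeError:
    error_is_json = False

# Dropping everything before the first brace leaves a parseable JSON object.
error_body = json.loads(log["error"][log["error"].index("{"):])

print(schedule_type, error_is_json, error_body["errorCode"])
```

The nested `schedule.type` value and the fields inside the error body only become addressable after these extra steps, which is the work that the cleansing layer has to take on.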

Assume that the specific requirements for extracting fields are as follows:

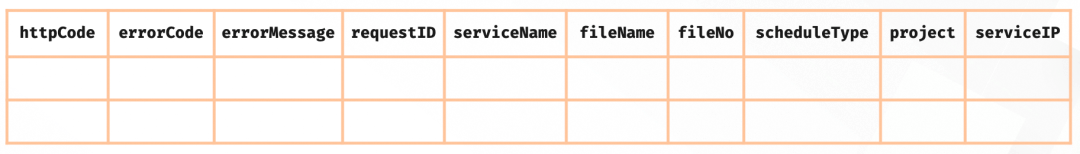

The final required fields are listed as follows. With such a table model, we can easily use Flink SQL for data analysis.

There are many ways to implement such data cleansing. Here are several solutions based on SLS and Flink. There is no absolute best solution. Different solutions are used in different scenarios.

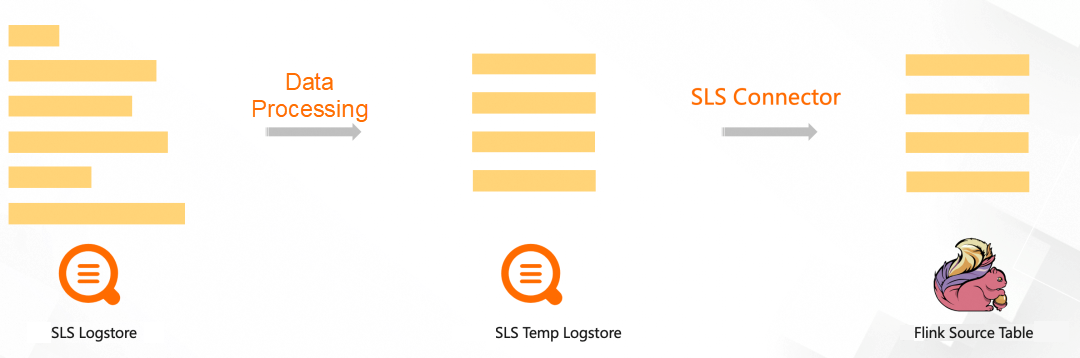

Data processing solution: Create a destination Logstore in the SLS console and create a data processing job to clean data.

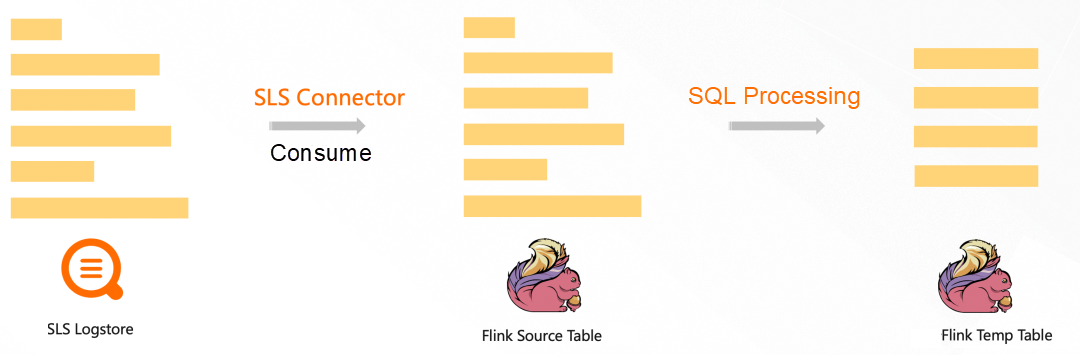

Flink solution: Specify error and Payload as source table fields, use SQL regular expression functions and JSON functions to parse the fields, write the parsed fields to a temporary table, and then analyze the temporary table.

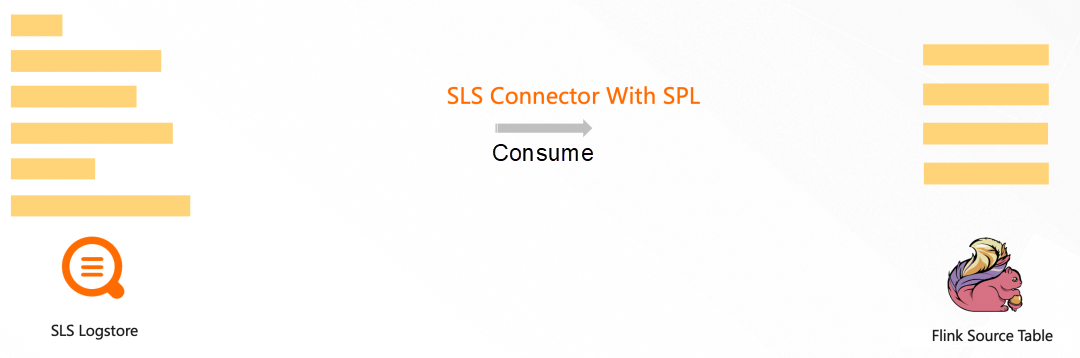

SPL solution: Configure SPL statements in the Flink SLS Connector to cleanse the data. In Flink, source table fields are defined as the cleansed data structure.

We can conclude from the principles of the preceding three solutions that in scenarios where data cleansing is required, configuring SPL in SLS Connector is a solution that is lightweight, easy to maintain, and easy to scale.

In scenarios where log data is weakly structured, the SPL solution avoids creating the intermediate Logstore that the first solution requires and the temporary table in Flink that the second solution requires. The SPL solution cleanses the data closer to the data source, so the computing platform can focus on business logic. Therefore, the separation of duties is clearer.

Next, a weakly structured log is used as an example to describe how to use SLS SPL with Flink. For better demonstration, we configure the SLS source table in the Flink console and then start a continuous query to observe the effect. In actual use, you only need to modify the configurations of the SLS source table to complete data cleansing and field standardization.

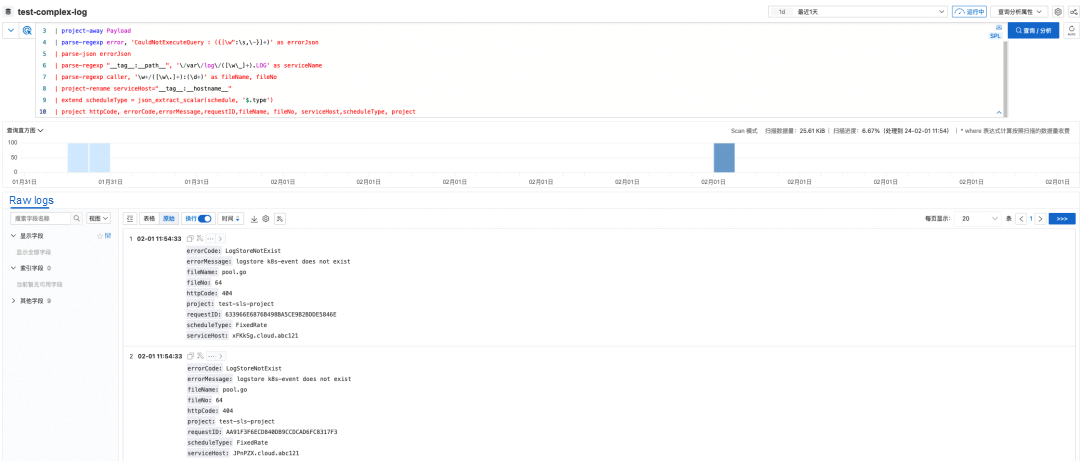

You can enable the scan mode in the Logstore. The SLS SPL pipeline syntax uses the | delimiter to separate commands. Each time you add a command, you can view its results in real time. You can then add more pipeline stages to reach the final results in a progressive and exploratory manner.

A brief description of the SPL in the preceding figure:

* | project Payload, error, "__tag__:__path__", "__tag__:__hostname__", caller

| parse-json Payload

| project-away Payload

| parse-regexp error, 'CouldNotExecuteQuery : ({[\w":\s,\-}]+)' as errorJson

| parse-json errorJson

| parse-regexp "__tag__:__path__", '\/var\/log\/([\w\_]+).LOG' as serviceName

| parse-regexp caller, '\w+/([\w\.]+):(\d+)' as fileName, fileNo

| project-rename serviceHost="__tag__:__hostname__"

| extend scheduleType = json_extract_scalar(schedule, '$.type')

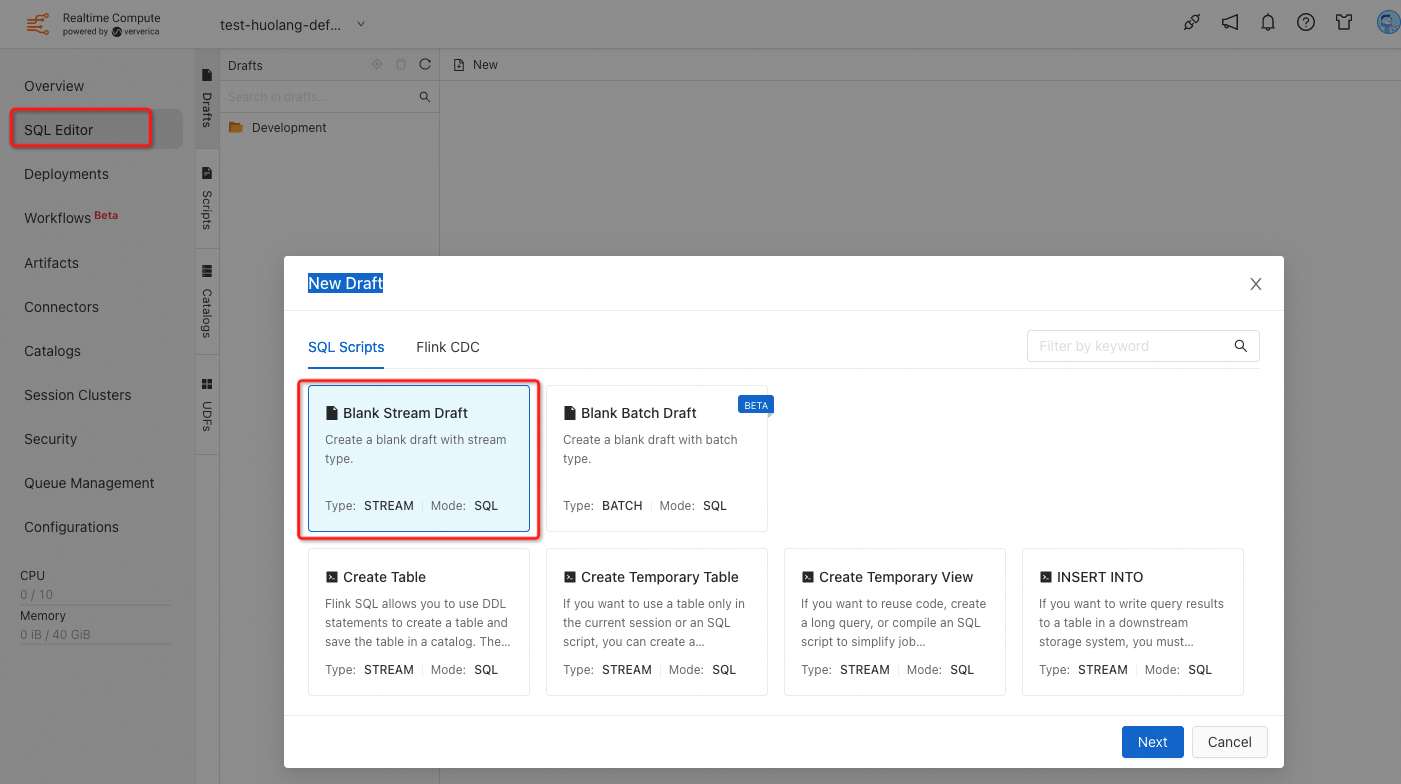

| project httpCode, errorCode,errorMessage,requestID,fileName, fileNo, serviceHost,scheduleType, projectCreate a blank SQL stream job draft in the Realtime Compute for Apache Flink console. Click Next to write the job.

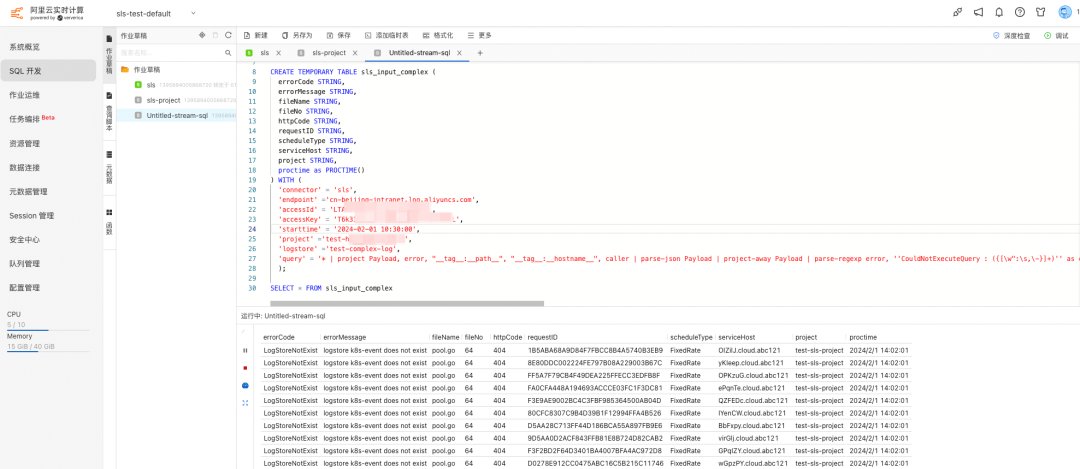

Enter the following statements to create a temporary table in the job draft:

    CREATE TEMPORARY TABLE sls_input_complex (
      errorCode STRING,
      errorMessage STRING,
      fileName STRING,
      fileNo STRING,
      httpCode STRING,
      requestID STRING,
      scheduleType STRING,
      serviceHost STRING,
      project STRING,
      proctime as PROCTIME()
    ) WITH (
      'connector' = 'sls',
      'endpoint' ='cn-beijing-intranet.log.aliyuncs.com',
      'accessId' = '${ak}',
      'accessKey' = '${sk}',
      'starttime' = '2024-02-01 10:30:00',
      'project' ='${project}',
      'logstore' ='${logstore}',
      'query' = '* | project Payload, error, "__tag__:__path__", "__tag__:__hostname__", caller | parse-json Payload | project-away Payload | parse-regexp error, ''CouldNotExecuteQuery : ({[\w":\s,\-}]+)'' as errorJson | parse-json errorJson | parse-regexp "__tag__:__path__", ''\/var\/log\/([\w\_]+).LOG'' as serviceName | parse-regexp caller, ''\w+/([\w\.]+):(\d+)'' as fileName, fileNo | project-rename serviceHost="__tag__:__hostname__" | extend scheduleType = json_extract_scalar(schedule, ''$.type'') | project httpCode, errorCode,errorMessage,requestID,fileName, fileNo, serviceHost,scheduleType,project'
    );

Enter the analysis statement in the job and view the result data:

    SELECT * FROM sls_input_complex

Click the Debug button in the upper-right corner to debug. You can view the values of each column in the table, which correspond to the results of SPL processing.
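To anticipate the column values before debugging, the steps of the SPL pipeline can be mirrored locally. The following Python sketch is a hypothetical stand-in applied to an abbreviated copy of the sample log. The `cleanse` function and the simplified regular expressions are assumptions made for illustration, not an SLS or Flink API, and SPL itself may differ in details such as value types.

```python
import json
import re

# Sample log from this article, abbreviated to the fields the pipeline reads.
log = {
    "Payload": '{"project": "test-sls-project", "schedule": {"type": "FixedRate"}}',
    "error": 'CouldNotExecuteQuery : {\n "httpCode": 404,\n "errorCode": "LogStoreNotExist",\n'
             ' "errorMessage": "logstore k8s-event does not exist",\n'
             ' "requestID": "65B7C10AB43D9895A8C3DB6A"\n}',
    "__tag__:__path__": "/var/log/service_a.LOG",
    "__tag__:__hostname__": "iabcde12345.cloud.abc121",
    "caller": "executor/pool.go:64",
}

COLUMNS = ["httpCode", "errorCode", "errorMessage", "requestID",
           "fileName", "fileNo", "serviceHost", "scheduleType", "project"]


def cleanse(raw):
    """Hypothetical local stand-in for the SPL pipeline (not an SLS API)."""
    row = dict(raw)
    # parse-json Payload, then project-away Payload
    row.update(json.loads(row.pop("Payload")))
    # parse-regexp error ... as errorJson, then parse-json errorJson
    error_json = re.search(r'CouldNotExecuteQuery : (\{[\w":\s,\-}]+)', row["error"]).group(1)
    row.update(json.loads(error_json))
    # parse-regexp "__tag__:__path__" ... as serviceName
    row["serviceName"] = re.search(r'/var/log/(\w+)\.LOG', row["__tag__:__path__"]).group(1)
    # parse-regexp caller ... as fileName, fileNo
    row["fileName"], row["fileNo"] = re.search(r'\w+/([\w.]+):(\d+)', row["caller"]).groups()
    # project-rename serviceHost="__tag__:__hostname__"
    row["serviceHost"] = row.pop("__tag__:__hostname__")
    # extend scheduleType = json_extract_scalar(schedule, '$.type')
    row["scheduleType"] = row["schedule"]["type"]
    # Final project: keep only the table columns, as strings to match the STRING types.
    return {name: str(row[name]) for name in COLUMNS}


row = cleanse(log)
for name, value in row.items():
    print(f"{name}: {value}")
```

Each key of the returned dictionary corresponds to one column of `sls_input_complex`, so the printed values can be compared with the columns shown in the debug result.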

To meet the requirements of weakly structured log data, the Flink SLS Connector has been upgraded. You can now configure SPL (SLS Processing Language) directly in the Connector, so that data cleansing is pushed down to the SLS data source. Particularly when you extract fields by using regular expressions, JSON, or CSV, this solution is lighter-weight than the original data processing solution and the original Flink SLS Connector solution, and it simplifies the data cleansing task.

Furthermore, performing data cleansing at the data source end reduces network transmission traffic, ensuring that the data arriving in Flink is already standardized. This enables you to focus more on business data analysis in Flink.
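As a rough illustration of the traffic reduction, the following Python sketch compares the encoded size of an abbreviated raw log with the size of the cleansed row. The JSON encoding is only a proxy chosen for this example and is an assumption. It is not the wire format that SLS or the Connector uses, and the records are shortened copies of the sample in this article.

```python
import json

# Abbreviated raw log (shortened copy of the sample in this article).
raw_log = {
    "Payload": '{"project": "test-sls-project", "alertName": "alert-12345678-123456", '
               '"schedule": {"type": "FixedRate", "interval": "1m"}}',
    "TaskType": "ALERT",
    "error": 'CouldNotExecuteQuery : {"httpCode": 404, "errorCode": "LogStoreNotExist"}',
    "caller": "executor/pool.go:64",
    "ts": "2024-01-29 22:57:13",
}

# The same record after cleansing: only columns that the Flink job reads.
cleansed_row = {
    "httpCode": "404",
    "errorCode": "LogStoreNotExist",
    "fileName": "pool.go",
    "fileNo": "64",
    "scheduleType": "FixedRate",
    "project": "test-sls-project",
}


def encoded_size(record):
    """Size in bytes of the record when serialized as UTF-8 JSON."""
    return len(json.dumps(record).encode("utf-8"))


raw_bytes = encoded_size(raw_log)
cleansed_bytes = encoded_size(cleansed_row)
print(f"raw: {raw_bytes} bytes, cleansed: {cleansed_bytes} bytes")
```

The gap widens for real logs, because fields that the analysis never reads, such as the full Payload, are dropped before the data leaves the source.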

In addition, the SLS scan-based query now supports SPL queries, making SPL verification and testing easier. You can view the real-time execution results of the SPL pipeline syntax.

[1] SLS Overview

https://www.alibabacloud.com/help/en/sls/product-overview/what-is-log-service

[2] SPL Overview

https://www.alibabacloud.com/help/en/sls/user-guide/spl-overview

[3] Alibaba Cloud Realtime Compute for Apache Flink Connector SLS

https://www.alibabacloud.com/help/en/flink/developer-reference/log-service-connector

[4] SLS Scan-Based Query Overview

https://www.alibabacloud.com/help/en/sls/user-guide/scan-based-query-overview
