By Zikui

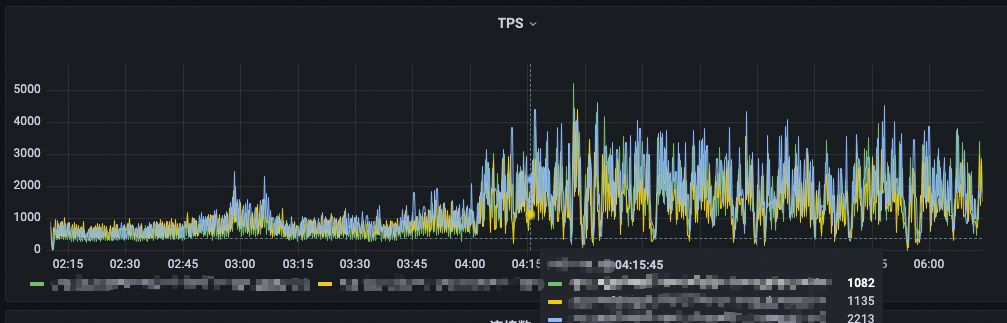

In the daily use of ZooKeeper, node disk capacity is a recurring source of trouble. An excessively high TPS or an inappropriate cleanup strategy can cause data and log files to accumulate in the cluster until the disk fills up and the server goes down. Recently, an online check revealed that a user's cluster experienced a sudden surge in TPS within a certain period.

This resulted in a large number of snapshot and transaction log files on the disk.

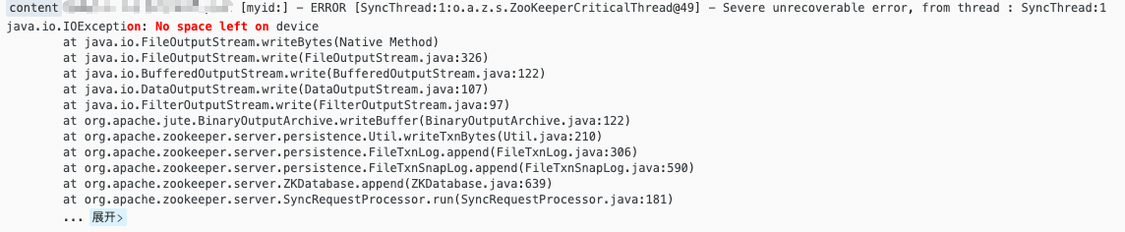

Ultimately, the disk space was exhausted and the node service became unavailable.

This article explores the best practice for solving ZooKeeper disk issues by delving into the mechanism of ZooKeeper data file generation and the parameters related to data file generation in ZooKeeper.

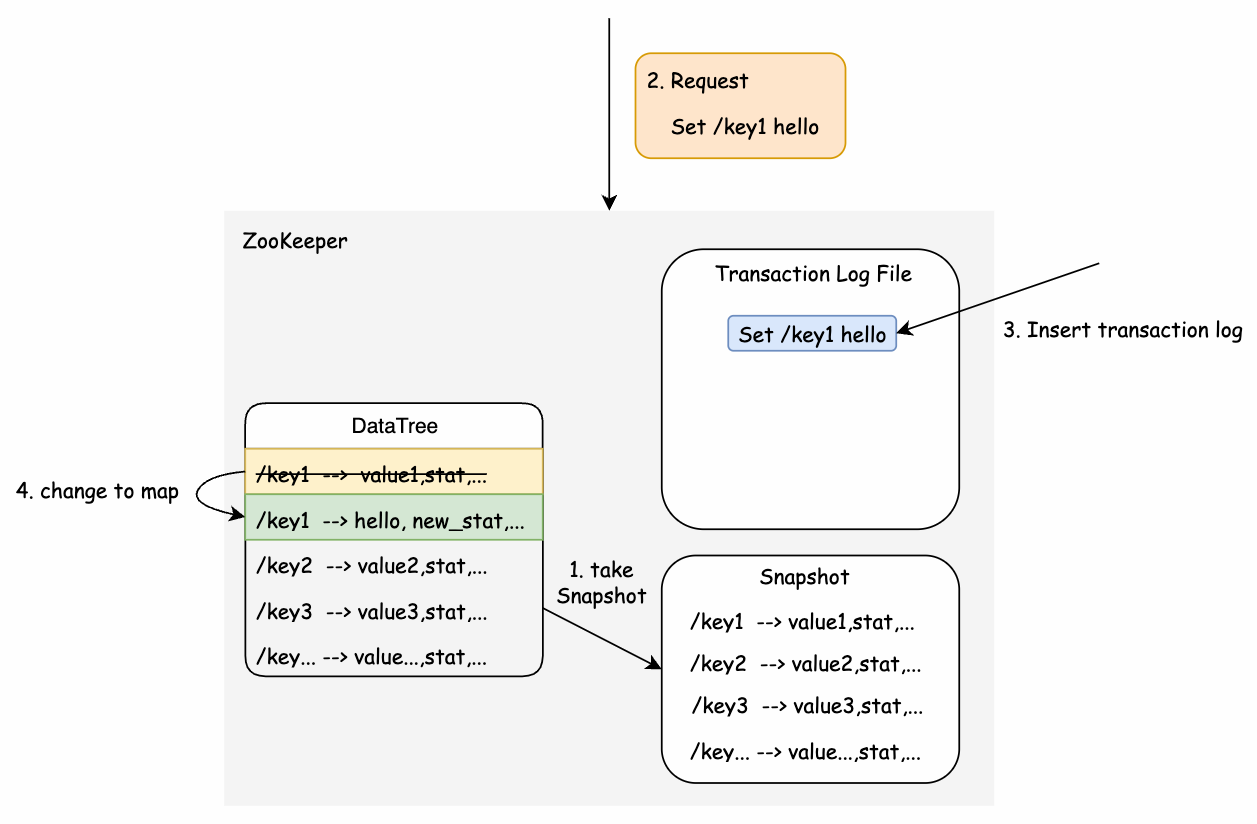

First, we need to explore the basic principle of data persistence in ZooKeeper. To ensure that no data change is lost, ZooKeeper uses a state machine to record and restore data.

Simply put, ZooKeeper has a large map that stores all Znodes, where the key is the path of Znodes and the value is the data, ACL, and states on Znodes. ZooKeeper serializes the large map in memory at a certain time point to obtain a snapshot of the data in memory at this time.

At the same time, another file is used to store the modification operations on the data state in this snapshot after this time point.

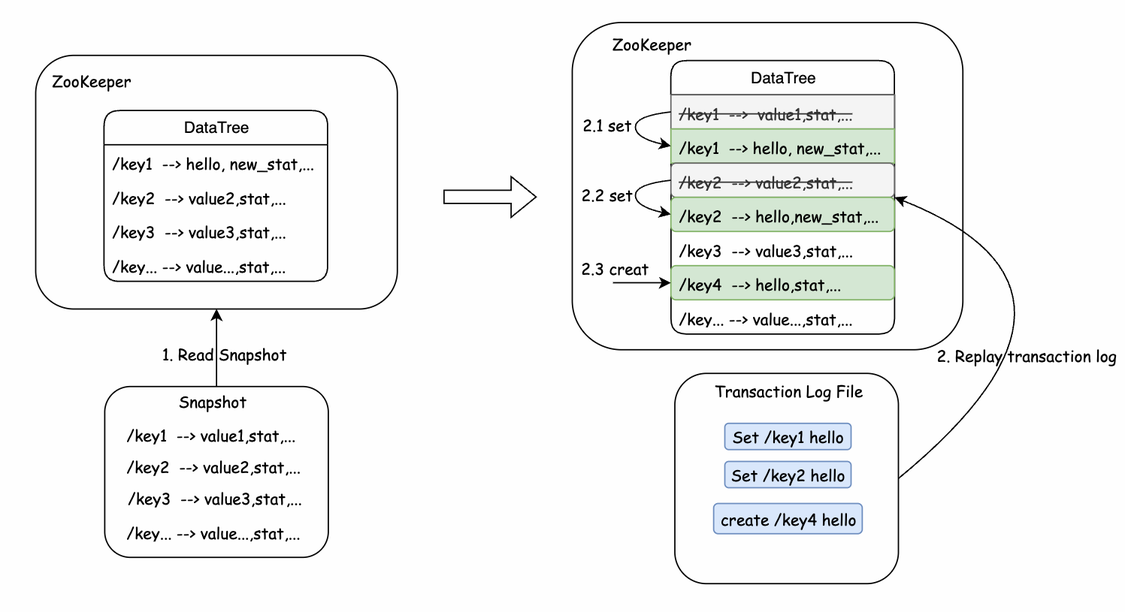

When a ZooKeeper node is restarted, data is restored by using existing snapshots and transaction logs.

This method can ensure that the changed data in memory will not be lost, and the write performance will not be significantly impacted. Even if a node is down, the data can be completely restored.
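The snapshot-plus-log idea described above can be illustrated with a minimal Java sketch. It is a toy model, not ZooKeeper's implementation: the class `SnapshotLogSketch` and its `Txn` and `Snapshot` types are hypothetical names introduced only for illustration, and the map holds plain strings instead of data, ACLs, and stats.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Toy model of snapshot-plus-log persistence. Hypothetical names, not ZooKeeper's classes. */
final class SnapshotLogSketch {

    /** One logged change: set path to value, or delete path when value is null. */
    static final class Txn {
        final long zxid;
        final String path;
        final String value;

        Txn(long zxid, String path, String value) {
            this.zxid = zxid;
            this.path = path;
            this.value = value;
        }
    }

    /** Point-in-time copy of the map, tagged with the last zxid it includes. */
    static final class Snapshot {
        final long lastZxid;
        final Map<String, String> nodes;

        Snapshot(long lastZxid, Map<String, String> nodes) {
            this.lastZxid = lastZxid;
            this.nodes = nodes;
        }
    }

    private final Map<String, String> nodes = new TreeMap<>();
    private final List<Txn> log = new ArrayList<>();
    private long lastZxid = 0;

    /** Appends the change to the log first, then applies it to memory. */
    void write(String path, String value) {
        Txn txn = new Txn(++lastZxid, path, value);
        log.add(txn);
        apply(nodes, txn);
    }

    Snapshot takeSnapshot() {
        return new Snapshot(lastZxid, new TreeMap<>(nodes));
    }

    List<Txn> log() {
        return new ArrayList<>(log);
    }

    Map<String, String> current() {
        return new TreeMap<>(nodes);
    }

    static void apply(Map<String, String> target, Txn txn) {
        if (txn.value == null) {
            target.remove(txn.path);
        } else {
            target.put(txn.path, txn.value);
        }
    }

    /** Loads the snapshot, then replays only the transactions newer than it. */
    static Map<String, String> restore(Snapshot snapshot, List<Txn> log) {
        Map<String, String> restored = new TreeMap<>(snapshot.nodes);
        for (Txn txn : log) {
            if (txn.zxid > snapshot.lastZxid) {
                apply(restored, txn);
            }
        }
        return restored;
    }

    public static void main(String[] args) {
        SnapshotLogSketch db = new SnapshotLogSketch();
        db.write("/app", "v1");
        Snapshot snapshot = db.takeSnapshot();
        db.write("/app", "v2");
        // Prints the state rebuilt from the snapshot plus the newer log entries.
        System.out.println(restore(snapshot, db.log()));
    }
}
```

In this sketch, every write costs only a log append, while a snapshot bounds how much of the log has to be replayed on restart. That is the trade-off the rest of the article revolves around.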

From this point of view, ZooKeeper generates two main types of data files: snapshot files of the in-memory data and transaction log files that record changes. The directories that hold them are specified by dataDir and dataLogDir respectively in the configuration file, and these are also the two file types that occupy a large amount of disk space in ZooKeeper.

When ZooKeeper stores an excessive amount of data or the data changes very frequently, the snapshot files serialized from memory and the transaction log files generated by data changes can become numerous and large. If appropriate cleanup strategies and parameters are not configured, disk issues will bring cluster nodes down and can even make the service unavailable.

```java
public class ZooKeeperServer implements SessionExpirer, ServerStats.Provider {
    ...
    public void takeSnapshot(boolean syncSnap) {
        long start = Time.currentElapsedTime();
        try {
            txnLogFactory.save(zkDb.getDataTree(), zkDb.getSessionWithTimeOuts(), syncSnap);
        } catch (IOException e) {
            LOG.error("Severe unrecoverable error, exiting", e);
            // This is a severe error that we cannot recover from,
            // so we need to exit
            ServiceUtils.requestSystemExit(ExitCode.TXNLOG_ERROR_TAKING_SNAPSHOT.getValue());
        }
        ...
    }
    ...
}
```

As the takeSnapshot method above shows, when the server tries to write the in-memory data to a full disk, an IOException is thrown and the server process exits directly.

For these problems, ZooKeeper officially provides some parameters, so you can effectively reduce the stress on disk by configuring appropriate parameters. Next, let's analyze the relevant parameters in detail.

• autopurge.snapRetainCount: (No Java system property) New in 3.4.0

• autopurge.purgeInterval: (No Java system property) New in 3.4.0

First of all, ZooKeeper officially supports regular cleanup of data files. autopurge.snapRetainCount specifies how many of the most recent snapshots, together with their corresponding transaction logs, are retained during the cleanup (the default and minimum value is 3), and autopurge.purgeInterval specifies the cleanup interval.

Note that to enable this feature in ZooKeeper, you must set autopurge.purgeInterval to a value greater than 0. This value indicates the interval (unit: hour) at which cleanup tasks are run.
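To make the retention rule concrete, here is a simplified Java sketch of how a purge pass could decide which files to delete. It is not ZooKeeper's own purge code: `PurgeSketch`, `zxidOf`, and `filesToDelete` are hypothetical names, and the sketch assumes the usual `snapshot.<hex zxid>` and `log.<hex zxid>` file naming and that a log file named with zxid Z contains transactions starting at Z.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified retention logic for illustration. Hypothetical names, not ZooKeeper's purge code. */
final class PurgeSketch {

    /** Parses the hexadecimal zxid suffix of names such as "snapshot.1a" or "log.1b". */
    static long zxidOf(String fileName) {
        return Long.parseLong(fileName.substring(fileName.indexOf('.') + 1), 16);
    }

    /** Returns the files a purge would delete while keeping the newest retainCount snapshots. */
    static List<String> filesToDelete(List<String> snapshots, List<String> logs, int retainCount) {
        if (retainCount < 3) {
            // Mirrors the documented minimum for autopurge.snapRetainCount.
            throw new IllegalArgumentException("retainCount must be at least 3");
        }
        List<String> newestFirst = new ArrayList<>(snapshots);
        newestFirst.sort((a, b) -> Long.compare(zxidOf(b), zxidOf(a)));

        List<String> doomed = new ArrayList<>();
        if (newestFirst.size() <= retainCount) {
            return doomed; // nothing is old enough to purge
        }
        doomed.addAll(newestFirst.subList(retainCount, newestFirst.size()));

        // The oldest retained snapshot still needs the log file that covers its zxid:
        // the log with the largest starting zxid that is not greater than the snapshot's.
        long oldestKept = zxidOf(newestFirst.get(retainCount - 1));
        long firstNeededLog = -1;
        for (String log : logs) {
            long zxid = zxidOf(log);
            if (zxid <= oldestKept && zxid > firstNeededLog) {
                firstNeededLog = zxid;
            }
        }
        for (String log : logs) {
            if (zxidOf(log) < firstNeededLog) {
                doomed.add(log);
            }
        }
        return doomed;
    }
}
```

The point of the sketch is that transaction logs are only removable once no retained snapshot depends on them, so a larger autopurge.snapRetainCount keeps more log files on disk as well as more snapshots.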

In general, configuring these two parameters to enable the scheduled cleanup can greatly reduce the stress on the disk capacity. However, the minimum interval for the scheduled cleanup tasks is 1 hour, which cannot avoid the disk issue in some special scenarios.

For example, a large number of data change requests during the interval between two disk cleanups will generate a large number of transaction log files and snapshot files. Since the cleanup event interval has not been reached, the data accumulates and eventually fills up the disk before the next disk cleanup. To better understand other parameters, let's first explore when ZooKeeper generates snapshot files and transaction log files.

Tracing the callers of the ZooKeeperServer.takeSnapshot method in the code shows that a new snapshot file is generated in the following situations:

• The node has been started and the data files have been loaded.

• A new leader is elected in the instance.

• A certain condition is met in SyncRequestProcessor (specified by the shouldSnapshot method).

Since the first two cases are not highly frequent, let's focus on the third case. The takeSnapshot method is called in the run method of SyncRequestProcessor, and before that, the shouldSnapshot method has been called to make a judgment:

```java
private boolean shouldSnapshot() {
    int logCount = zks.getZKDatabase().getTxnCount();
    long logSize = zks.getZKDatabase().getTxnSize();
    return (logCount > (snapCount / 2 + randRoll))
           || (snapSizeInBytes > 0 && logSize > (snapSizeInBytes / 2 + randSize));
}
```

Here, logCount is the number of transactions in the current transaction log file and logSize is the size of that file, while snapCount and snapSizeInBytes are values configured through Java system properties. randRoll is a random number (0 ≤ randRoll < snapCount / 2), and randSize is also a random number (0 ≤ randSize < snapSizeInBytes / 2). The logic of this condition can be summarized as follows:

A snapshot file is written when the number of transactions in the current transaction log file exceeds a random value related to snapCount that is generated at runtime (snapCount/2 ≤ value < snapCount), or when the size of the current transaction log file exceeds a random value related to snapSizeInBytes that is generated at runtime (snapSizeInBytes/2 ≤ value < snapSizeInBytes). Moreover, from the code, it can be seen that writing a snapshot flushes the current transaction log file to disk and creates a new transaction log file.

```java
public void run() {
    if (shouldSnapshot()) {
        resetSnapshotStats();
        // Roll transaction log files
        zks.getZKDatabase().rollLog();
        // take a snapshot
        if (!snapThreadMutex.tryAcquire()) {
            LOG.warn("Too busy to snap, skipping");
        } else {
            new ZooKeeperThread("Snapshot Thread") {
                public void run() {
                    try {
                        zks.takeSnapshot();
                    } catch (Exception e) {
                        LOG.warn("Unexpected exception", e);
                    } finally {
                        snapThreadMutex.release();
                    }
                }
            }.start();
        }
    }
}
```

The two variables snapCount and snapSizeInBytes are set through the following parameters, which can be specified in the ZooKeeper configuration file (snapSizeInBytes is derived from snapSizeLimitInKb):

• snapCount : (Java system property: zookeeper.snapCount)

• snapSizeLimitInKb : (Java system property: zookeeper.snapSizeLimitInKb)

It can be seen that the frequency of generating a snapshot file can be controlled by configuring snapCount and snapSizeLimitInKb in the configuration file.
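The effect of snapCount on snapshot frequency can be checked with a small simulation of the count-based half of the condition described above. This is only a sketch: `SnapshotThresholdSketch` and `countSnapshots` are hypothetical names, the size-based branch is omitted, and the random threshold is re-rolled after every snapshot on the assumption that this is what resetSnapshotStats does.

```java
import java.util.Random;

/** Simulates the count-based snapshot trigger. Hypothetical helper, not ZooKeeper code. */
final class SnapshotThresholdSketch {

    /** Count half of the condition: logCount > snapCount / 2 + randRoll. */
    static boolean shouldSnapshot(int logCount, int snapCount, int randRoll) {
        return logCount > (snapCount / 2 + randRoll);
    }

    /** Counts how many snapshots totalTxns consecutive writes would trigger (snapCount >= 2). */
    static int countSnapshots(long totalTxns, int snapCount, Random random) {
        int snapshots = 0;
        int logCount = 0;
        int randRoll = random.nextInt(snapCount / 2);
        for (long i = 0; i < totalTxns; i++) {
            logCount++;
            if (shouldSnapshot(logCount, snapCount, randRoll)) {
                snapshots++;
                logCount = 0; // the log is rolled, so counting starts over
                randRoll = random.nextInt(snapCount / 2); // assumed re-roll per snapshot
            }
        }
        return snapshots;
    }

    public static void main(String[] args) {
        Random random = new Random();
        for (int snapCount : new int[] {100_000, 200_000, 400_000}) {
            System.out.println("snapCount=" + snapCount + " -> "
                    + countSnapshots(1_000_000L, snapCount, random) + " snapshots per million txns");
        }
    }
}
```

Because each snapshot fires after more than snapCount/2 and at most snapCount transactions, one million writes with snapCount set to 100000 produce between 10 and 19 snapshots in this model, and doubling snapCount roughly halves that number.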

From the above analysis, we know that the generation and cleanup of ZooKeeper's data files can be configured through 4 parameters.

• autopurge.snapRetainCount : (No Java system property) New in 3.4.0

• autopurge.purgeInterval : (No Java system property) New in 3.4.0

• snapCount : (Java system property: zookeeper.snapCount)

• snapSizeLimitInKb : (Java system property: zookeeper.snapSizeLimitInKb)

The first two configuration items specify the scheduled cleanup policy of ZooKeeper while the last two parameters set the frequency of generating snapshot files in ZooKeeper.

According to the previous analysis, increasing snapCount and snapSizeLimitInKb reduces the snapshot generation frequency. However, if these two values are too large, a restarting node has to load more data before it can serve requests, which increases the service recovery time and the business risk.

The minimum effective value of autopurge.purgeInterval is 1, so cleanup can run at most once per hour, which cannot mitigate the risk in high-frequency write scenarios.
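A rough back-of-the-envelope estimate shows why an hourly cleanup may not be enough. The Java sketch below bounds how many snapshot files can appear within one purge interval and how much disk they could take. `DiskGrowthSketch` is a hypothetical helper, and the TPS, snapshot size, and average transaction size used in `main` are made-up inputs rather than measurements; the estimate also ignores transaction log preallocation and snapshots skipped because an earlier one is still running.

```java
/** Rough disk growth estimate for one purge interval. Hypothetical helper with made-up inputs. */
final class DiskGrowthSketch {

    /** Fewest snapshots: one per snapCount transactions. */
    static long minSnapshots(long tps, long intervalSeconds, long snapCount) {
        return (tps * intervalSeconds) / snapCount;
    }

    /** Upper bound on snapshots: one per snapCount / 2 transactions. */
    static long maxSnapshots(long tps, long intervalSeconds, long snapCount) {
        return (tps * intervalSeconds) / (snapCount / 2);
    }

    /** Upper-bound bytes written in the interval: snapshot files plus logged transactions. */
    static long worstCaseBytes(long tps, long intervalSeconds, long snapCount,
                               long snapshotBytes, long avgTxnBytes) {
        long txns = tps * intervalSeconds;
        return maxSnapshots(tps, intervalSeconds, snapCount) * snapshotBytes + txns * avgTxnBytes;
    }

    public static void main(String[] args) {
        long tps = 10_000;                        // assumed write rate
        long interval = 3_600;                    // one hour, the smallest purge interval
        long snapCount = 100_000;                 // assumed configured value
        long snapshotBytes = 500L * 1024 * 1024;  // assumed 500 MiB per snapshot
        long avgTxnBytes = 200;                   // assumed average logged transaction size

        System.out.println("snapshots per hour: " + minSnapshots(tps, interval, snapCount)
                + " to " + maxSnapshots(tps, interval, snapCount));
        System.out.println("worst-case bytes per hour: "
                + worstCaseBytes(tps, interval, snapCount, snapshotBytes, avgTxnBytes));
    }
}
```

With these assumed inputs, the number of snapshot files written between two cleanups runs into the hundreds, so the disk needs enough headroom for a full interval of writes regardless of how small autopurge.snapRetainCount is.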

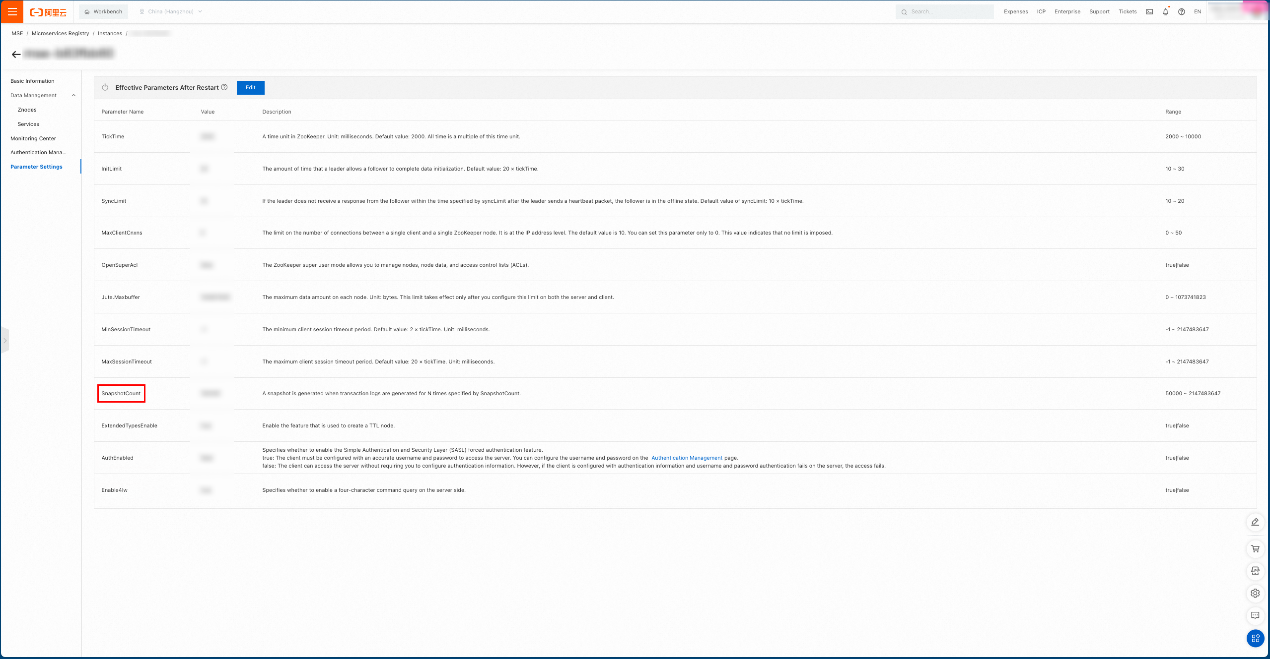

For the ZooKeeper disk issues analyzed above, MSE ZooKeeper ships with appropriate parameter settings, and users can conveniently change the related parameters to suit their business scenarios. In addition to ZooKeeper's own data cleanup mechanism, MSE ZooKeeper provides additional safeguards to ensure that the cluster is not affected by disk issues.
