By Xuanyang

Let's cut to the chase:

• SLS: Alibaba Cloud Simple Log Service provides one-stop data collection, processing, query and analysis, visualization, alerting, consumption, and delivery. It improves your digital capabilities in R&D, O&M, operations, and security scenarios.

• iLogtail: An observability data collector provided by SLS. It is lightweight, high-performance, and supports automatic configuration. It can be deployed on physical machines, virtual machines, and Kubernetes to collect observability data such as logs, traces, and metrics.

• iLogtail collection configuration: The data collection pipeline of iLogtail is defined by the collection configuration. A collection configuration contains information about data input, processing, and output.

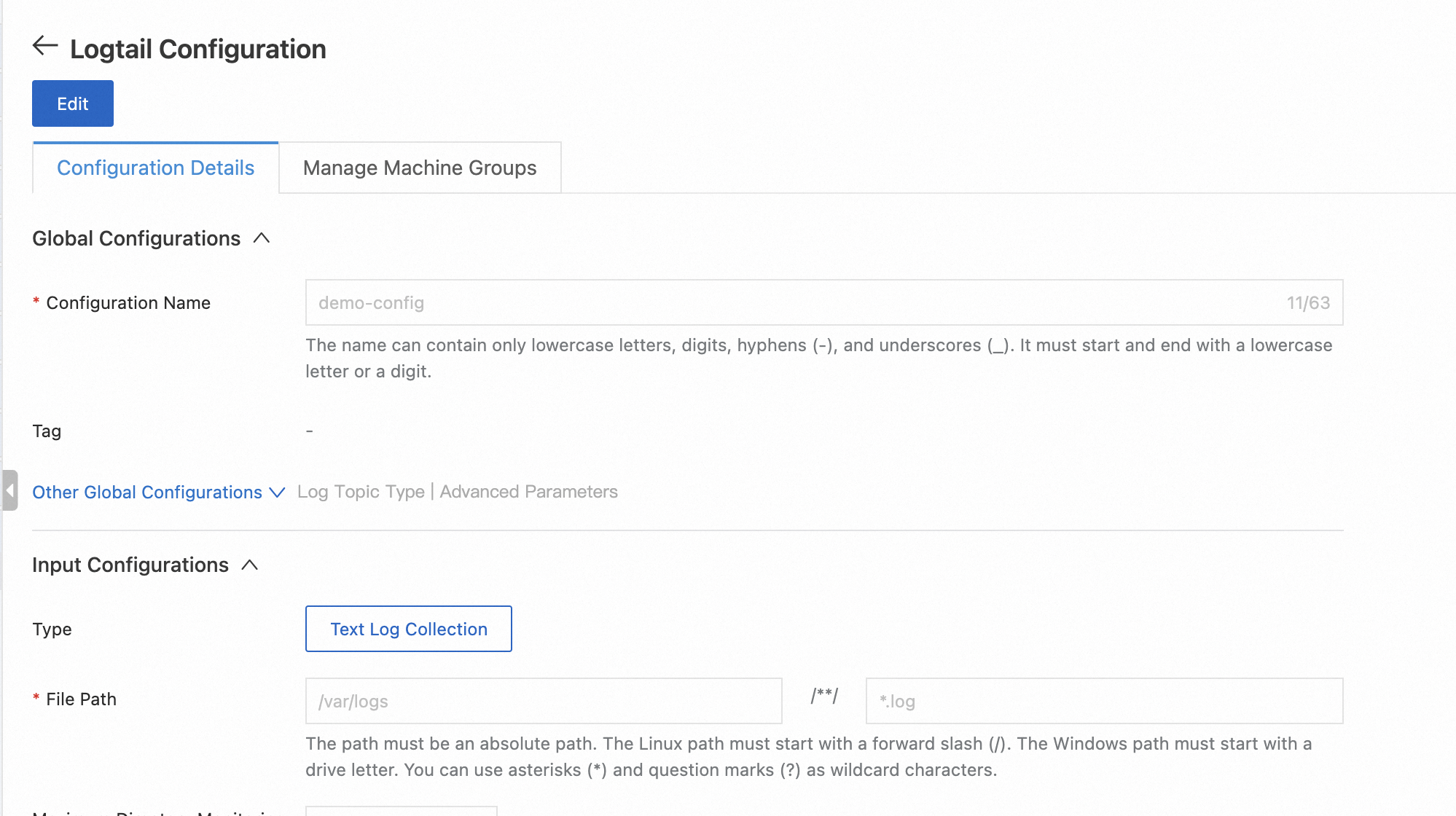

In the SLS console, a simple collection configuration is as follows:

Managing collection configurations through the console isn't automated enough.

Imagine having to manually modify a batch of collection configurations in the console for every deployment. That would be tedious, not to mention error-prone.

In the cloud-native era, we need a more convenient way to manage collection configurations. Ideally, this approach should be flexible, scalable, and decoupled from business logic. It should support centralized management and monitoring to simplify O&M, and it should integrate easily with automated deployment to reduce deployment complexity.

CustomResourceDefinitions (CRDs) meet these requirements.

CRDs extend the Kubernetes API so that users can define and manage their own resource types. With a CRD, collection configurations can be managed as Kubernetes objects, just like pods, services, and deployments.

Looks great, so let's implement a CRD!

In fact, we already have one: AliyunLogConfig.

Let's start with a simple AliyunLogConfig configuration example:

```yaml
apiVersion: log.alibabacloud.com/v1alpha1
kind: AliyunLogConfig
metadata:
  # Set the name of the resource. The name must be unique in the current Kubernetes cluster.
  name: example-k8s-file
  namespace: kube-system
spec:
  # [Optional] Target project.
  project: k8s-log-xxxx
  # Set the name of the Logstore. If the specified Logstore does not exist, SLS automatically creates it.
  logstore: k8s-file
  # [Optional] The number of shards in the Logstore.
  shardCount: 10
  # [Optional] Logstore data retention period, in days.
  lifeCycle: 30
  # [Optional] Logstore hot storage period, in days.
  logstoreHotTTL: 7
  # Set the iLogtail collection configuration.
  logtailConfig:
    # Set the type of the data source. To collect text logs, set the value to file.
    inputType: file
    # Set the name of the iLogtail collection configuration, which must be the same as the resource name (metadata.name).
    configName: example-k8s-file
    inputDetail:
      # Collect text logs in simple mode.
      logType: common_reg_log
      # Set the path to the log file.
      logPath: /data/logs/app_1
      # Set the name of the log file. Wildcard characters (* and ?) are supported. Example: log_*.log.
      filePattern: test.LOG
      # To collect text logs from containers, set dockerFile to true.
      dockerFile: true
      # Set container filter conditions.
      advanced:
        k8s:
          K8sNamespaceRegex: ^(default)$
          K8sPodRegex: '^(demo-0.*)$'
          K8sContainerRegex: ^(demo)$
          IncludeK8sLabel:
            job-name: "^(demo.*)$"
```

AliyunLogConfig has a built-in default project. If you use the default project, you only need to specify the target Logstore and write the collection configuration to start ingesting data. As AliyunLogConfig evolved, new features were added. These include defining Logstore creation parameters, specifying a target project, and basic cross-region and cross-account support.

AliyunLogConfig writes the result of processing the CR back to its Status field, which contains the statusCode and status fields:

```yaml
status:
  statusCode: 200
  status: OK
```

In general, AliyunLogConfig meets the basic functional requirements of a CRD for managing collection configurations, and it supports some complex scenarios.

As SLS has continued to evolve, however, the limitations of AliyunLogConfig have become increasingly apparent.

Structural Disorder

Incomplete Functionality

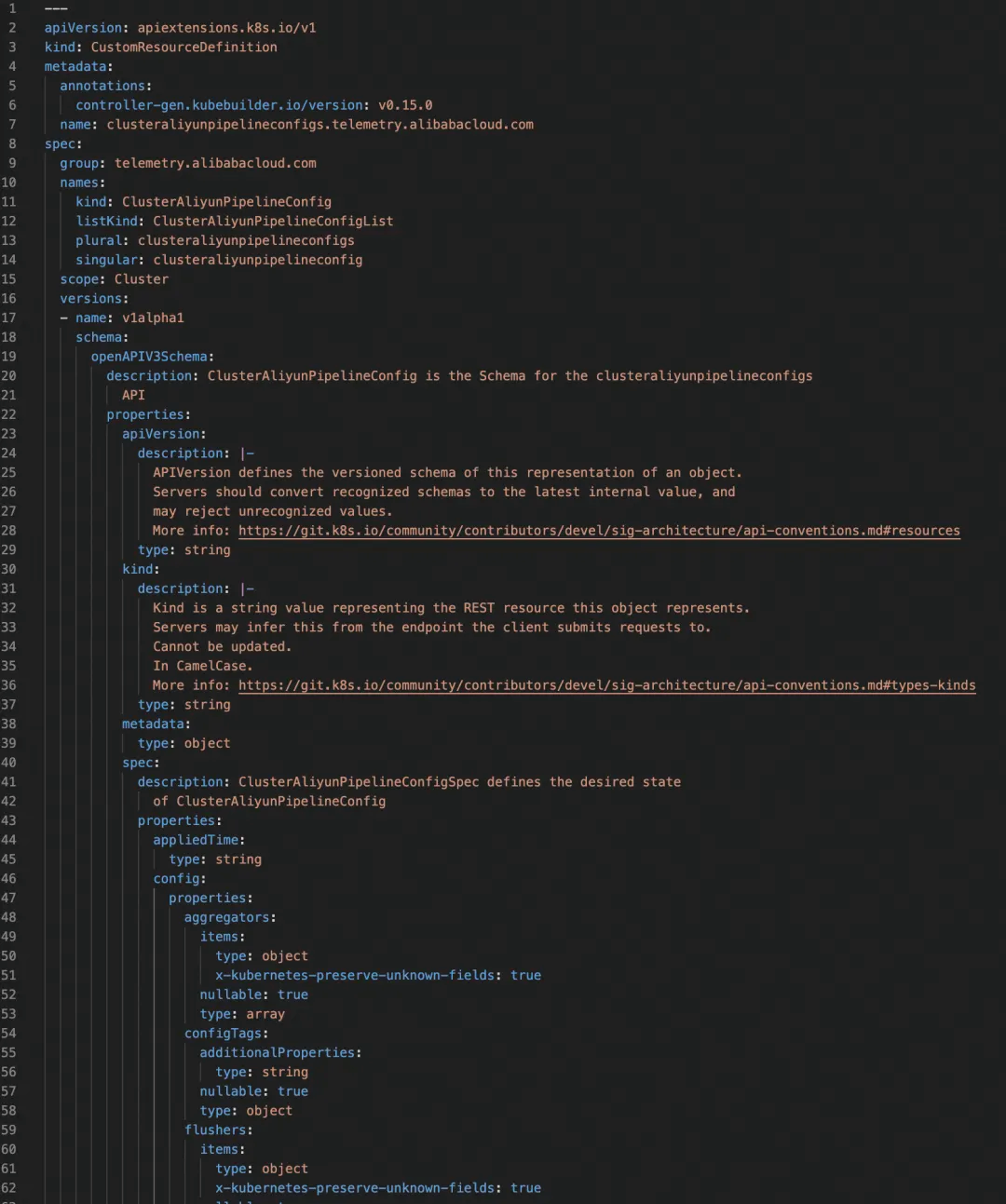

Here comes our brand-new CRD: AliyunPipelineConfig!

Compared with AliyunLogConfig, AliyunPipelineConfig brings major improvements to both the configuration format and the processing logic, with a focus on flexibility, simplicity, and stability.

| Item | AliyunPipelineConfig (new) | AliyunLogConfig |
| --- | --- | --- |
| API group/version | telemetry.alibabacloud.com/v1alpha1 | log.alibabacloud.com/v1alpha1 |
| CRD resource name | ClusterAliyunPipelineConfig | AliyunLogConfig |
| Scope | Cluster | Default Cluster |
| Collection configuration format | Basically equivalent to LogtailPipelineConfig in the SLS API | Basically equivalent to LogtailConfig in the SLS API |
| Cross-region capability | Yes | Yes |
| Cross-account capability | Yes | Yes |
| Webhook parameter validation | Yes | No |
| Configuration conflict detection | Yes | No |
| Configuration difficulty | Relatively low | Relatively high |
| Configuration observability | Status contains error details, the update time, the last successfully applied configuration, and the last successful application time. | Status contains the error code and error message. |

The overall structure of the AliyunPipelineConfig is as follows:

```yaml
apiVersion: telemetry.alibabacloud.com/v1alpha1
kind: ClusterAliyunPipelineConfig
metadata:
  name: test-config
spec:
  project:                    # The target project.
    name: k8s-your-project    # The name of the project.
    uid: "11111"              # [Optional] Account (a string).
    endpoint: cn-hangzhou-intranet.log.aliyuncs.com  # [Optional] Endpoint.
  logstores:                  # [Optional] Logstores to create; parameters basically match the CreateLogstore API.
    - name: your-logstore     # The name of the Logstore.
      ttl: 30                 # [Optional] Data retention period, in days.
      shardCount: 10          # [Optional] The number of shards.
      ...                     # [Optional] Other Logstore parameters.
  machineGroups:              # [Optional] Machine groups to associate with the collection configuration.
    - name: machine-group-1   # The name of the machine group.
    - name: machine-group-2   # The name of the machine group.
  config:                     # The collection configuration; the format basically matches the CreateLogtailPipelineConfig API.
    sample:                   # [Optional] Log sample.
    global:                   # [Optional] Global configuration.
    inputs:                   # Input plug-ins. Exactly one is allowed.
      ...
    processors:               # [Optional] Processing plug-ins, which can be freely combined.
      ...
    aggregators:              # [Optional] Aggregation plug-ins.
      ...
    flushers:                 # Output plug-ins. Only one flusher_sls is allowed.
      ...
  enableUpgradeOverride: false  # [Optional] Used to upgrade from AliyunLogConfig.
```

The AliyunPipelineConfig format has the following characteristics:

Clear Structure

Fewer Required Fields

Aligned with the API

Next, let's look at each field under spec in detail.

The project field contains the following parameters:

| Parameter | Data Type | Required | Description |
| --- | --- | --- | --- |
| name | string | Yes | The name of the target project. |
| description | string | No | The description of the target project. |
| endpoint | string | No | The service endpoint of the target project. Specify it only for cross-region delivery. |
| uid | string | No | The UID of the target account. Specify it only for cross-account delivery. |

Here are some points to note:

• The project field cannot be modified after the CR is created. If you need to switch projects, you need to create a new CR.

• The name field is required. Collected logs are sent to this project.

• Specify the endpoint field only for cross-region delivery. Otherwise, the project defaults to the region where the cluster is located.

• The uid field is specified only when there are cross-account requirements. This parameter is not required in other scenarios.

• If the specified project does not exist, the system attempts to create the project. If you specify the endpoint and uid when creating the project, the system creates the project in the corresponding region or account.
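To make the defaulting and immutability rules above concrete, here is a minimal Python sketch. It is not alibaba-log-controller's code. `ProjectSpec`, `resolve_target`, and `project_update_allowed` are hypothetical names. The cluster's default endpoint and UID are passed in as assumptions rather than looked up.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class ProjectSpec:
    """Mirrors the spec.project fields: name is required, the rest are optional."""
    name: str
    description: Optional[str] = None
    endpoint: Optional[str] = None  # set only for cross-region delivery
    uid: Optional[str] = None       # set only for cross-account delivery


def resolve_target(project: ProjectSpec, cluster_endpoint: str, cluster_uid: str) -> Tuple[str, str]:
    """Return the (endpoint, uid) that logs would be delivered to.

    Omitted fields fall back to the cluster's own region endpoint and account,
    both supplied by the caller (hypothetical inputs).
    """
    if not project.name:
        raise ValueError("spec.project.name is required")
    return (project.endpoint or cluster_endpoint, project.uid or cluster_uid)


def project_update_allowed(old: ProjectSpec, new: ProjectSpec) -> bool:
    """spec.project is immutable once the CR exists; switching projects needs a new CR."""
    return old == new
```

Comparing the whole frozen dataclass treats any change to the project block as a modification. This matches the rule that switching projects requires a new CR.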

The config field contains the following parameters:

| Parameter | Data Type | Required | Description |
| --- | --- | --- | --- |
| sample | string | No | A sample log. |
| global | object | No | Global configuration. |
| inputs | object list | Yes | List of input plug-ins. |
| processors | object list | No | List of processing plug-ins. |
| aggregators | object list | No | List of aggregation plug-ins. |
| flushers | object list | Yes | List of output plug-ins. |
| configTags | map | No | Custom tags that label the iLogtail collection configuration. |

To keep the user experience consistent and the feature set complete, the fields and plug-in parameters of config are fully consistent with the request parameters of the SLS CreateLogtailPipelineConfig API, and all of its features are supported.

Note:

• You can configure only one input plug-in (an API-level limit).

• You can configure only one flusher plug-in, and its type must be flusher_sls (an API-level limit).

• configTags are tags attached to the collection configuration itself, not tags written to the logs.
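The following minimal Python sketch checks the two API-level limits listed above against a parsed config dict. In practice the webhook and the SLS API enforce these limits. The function name `validate_pipeline_config` is hypothetical, and the check for processors and aggregators being lists is an illustrative extra based on the "object list" type in the table.

```python
from typing import Any, Dict, List


def validate_pipeline_config(config: Dict[str, Any]) -> List[str]:
    """Return the violations of the documented config limits (empty list if valid)."""
    errors: List[str] = []
    inputs = config.get("inputs") or []
    flushers = config.get("flushers") or []
    if len(inputs) != 1:
        errors.append(f"exactly one input plug-in is required, got {len(inputs)}")
    if len(flushers) != 1:
        errors.append(f"exactly one flusher plug-in is required, got {len(flushers)}")
    elif flushers[0].get("Type") != "flusher_sls":
        errors.append(f"flusher Type must be flusher_sls, got {flushers[0].get('Type')!r}")
    # processors and aggregators are optional, but if present they are object lists.
    for key in ("processors", "aggregators"):
        value = config.get(key)
        if value is not None and not isinstance(value, list):
            errors.append(f"{key} must be a list of plug-ins")
    return errors
```

For example, a config with an input_file input and a single flusher_sls flusher returns an empty list. A config with no inputs and a flusher_stdout flusher returns two errors.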

The logstores field accepts a list of Logstores. Each Logstore entry supports the following parameters:

| Parameter | Data Type | Required | Description |
| --- | --- | --- | --- |
| name | string | Yes | The name of the Logstore to create. |
| queryMode | string | No | The type of the target Logstore. Default value: standard. Valid values: query (query Logstore) and standard (standard Logstore). |
| ttl | int | No | The data retention period of the target Logstore, in days. Default value: 30. Valid values: 1 to 3650. A value of 3650 means data is stored permanently. |
| hotTtl | int | No | The hot storage retention period of the target Logstore, in days. Default value: 0. The value must be less than ttl and greater than or equal to 7. |
| shardCount | int | No | The number of shards in the target Logstore. Default value: 2. Valid values: 1 to 100. |
| maxSplitShard | int | No | The maximum number of shards that automatic splitting can produce in the target Logstore. Default value: 64. Valid values: 1 to 256. |
| autoSplit | bool | No | Whether automatic shard splitting is enabled for the target Logstore. Default value: true. |
| telemetryType | string | No | The type of observability data in the target Logstore. Default value: None. Valid values: None (logs) and Metrics (metrics). |
| appendMeta | bool | No | Whether recording of public IP addresses is enabled for the target Logstore. Default value: true. |
| enableTracking | bool | No | Whether Web Tracking is enabled for the target Logstore. Default value: false. |
| encryptConf | object | No | The encryption configuration of the target Logstore. |
| meteringMode | string | No | The billing method of the target Logstore. Default value: null. Valid values: ChargeByFunction (pay-by-feature) and ChargeByDataIngest (pay-by-ingested-data). |

The Logstore parameters here fully support those of the SLS CreateLogstore API, and you can also set the billing method.

Here are the key points:

• In most scenarios, you only need to enter the name to create a Logstore. You can leave the other parameters unset; their default values are the same as when you create a Logstore in the console.

• All parameters take effect only when a Logstore is created. Existing Logstores are not modified by these parameters.
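To show how the defaults and value ranges in the logstores table fit together, here is a minimal Python sketch that fills defaults for one entry and reports range violations. It is illustrative only and assumes that hotTtl = 0 (the default) means hot storage is not configured, so the 7-day minimum is not checked in that case. `LOGSTORE_DEFAULTS` and `normalize_logstore` are hypothetical names.

```python
from typing import Any, Dict, List, Tuple

# Defaults as listed in the logstores table.
LOGSTORE_DEFAULTS: Dict[str, Any] = {
    "queryMode": "standard",
    "ttl": 30,
    "hotTtl": 0,
    "shardCount": 2,
    "maxSplitShard": 64,
    "autoSplit": True,
    "telemetryType": "None",
    "appendMeta": True,
    "enableTracking": False,
}


def normalize_logstore(spec: Dict[str, Any]) -> Tuple[Dict[str, Any], List[str]]:
    """Fill defaults for one logstores entry and check the documented value ranges."""
    if not spec.get("name"):
        return dict(spec), ["name is required"]
    merged = {**LOGSTORE_DEFAULTS, **spec}  # user values override defaults
    errors: List[str] = []
    if merged["queryMode"] not in ("standard", "query"):
        errors.append("queryMode must be standard or query")
    if not 1 <= merged["ttl"] <= 3650:
        errors.append("ttl must be between 1 and 3650")
    hot = merged["hotTtl"]
    # Assumption: 0 means "no hot storage"; any other value must be >= 7 and < ttl.
    if hot != 0 and not 7 <= hot < merged["ttl"]:
        errors.append("hotTtl must be >= 7 and less than ttl")
    if not 1 <= merged["shardCount"] <= 100:
        errors.append("shardCount must be between 1 and 100")
    if not 1 <= merged["maxSplitShard"] <= 256:
        errors.append("maxSplitShard must be between 1 and 256")
    if merged["telemetryType"] not in ("None", "Metrics"):
        errors.append("telemetryType must be None or Metrics")
    return merged, errors
```

An entry with only a name comes back with console-equivalent defaults and no errors. This matches the point above that, in most cases, the name is all you need.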

The machineGroups field accepts a list of machine groups. Each entry supports the following parameter:

| Parameter | Data Type | Required | Description |
| --- | --- | --- | --- |
| name | string | Yes | The name of the machine group to associate with the iLogtail collection configuration. |

Machine group configuration is simplified: you only need to enter a name. A custom-identifier machine group is then created, and its custom identifier is the same as the machine group name.

Note:

• In most cases you can leave machineGroups empty; the machine group that the log component creates automatically is sufficient.

• The attributes of an existing machine group are not overwritten. For example, if an IP-based machine group named A already exists and you list A here, A is not converted into a custom-identifier machine group.

• The machine groups bound to the collection configuration always exactly match the list configured here. To add a machine group, edit this parameter.
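The three rules above can be expressed as a small planning function. This is a minimal Python sketch, not the controller's implementation. `plan_machine_groups` and its `default_group` parameter are hypothetical. The sketch assumes that an empty machineGroups list means the configuration is bound only to the log component's default group.

```python
from typing import Dict, List, Set


def plan_machine_groups(
    desired: List[str],
    existing_groups: Dict[str, str],
    currently_bound: Set[str],
    default_group: str,
) -> Dict[str, List[str]]:
    """Plan machine-group changes for one collection configuration.

    desired:         names listed in spec.machineGroups (may be empty)
    existing_groups: name -> type ("ip", "custom", ...) of groups already in the project
    currently_bound: groups currently applied to the collection configuration
    default_group:   group created by the log component (hypothetical parameter)
    """
    # Assumption: an empty list means "use the log component's default group".
    wanted = set(desired) or {default_group}
    return {
        # Only missing groups are created (as custom-identifier groups);
        # existing groups keep their type, e.g. an IP group stays an IP group.
        "create": sorted(name for name in wanted if name not in existing_groups),
        "bind": sorted(wanted - currently_bound),
        # The bound set must end up exactly equal to the configured list.
        "unbind": sorted(currently_bound - wanted),
    }
```

In this sketch, an existing IP-based group A that is listed in the spec appears only under "bind", never under "create". Its type is therefore left alone.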

We have all been there: faced with an unfamiliar configuration, you fill in some parameters and submit it, only to find that it does not work and there is no error message to tell you why.

With AliyunPipelineConfig, you won't run into this frustration. You just fill in the parameters, and AliyunPipelineConfig takes care of the rest.

AliyunPipelineConfig comes with a detailed CRD schema and a validating webhook, so format problems are surfaced immediately.

The webhook also validates the values of specific parameters. For example:

AliyunPipelineConfig provides comprehensive assistance for your work.

Even with value validation, parameters can still be filled in incorrectly. A Logstore has many creation parameters, so it is easy to get one wrong. The machine group quota may be exhausted, so a machine group cannot be created. Or the uid may be wrong, so cross-account permission is not granted.

All of these situations are reported in the Status field of AliyunPipelineConfig. In the following collection configuration, the specified project belongs to another user, so Status reports an error:

```yaml
apiVersion: telemetry.alibabacloud.com/v1alpha1
kind: ClusterAliyunPipelineConfig
metadata:
  finalizers:
    - finalizer.pipeline.alibabacloud.com
  name: example-k8s-file
# Expected configuration.
spec:
  config:
    flushers:
      - Endpoint: cn-hangzhou.log.aliyuncs.com
        Logstore: k8s-file
        Region: cn-hangzhou
        TelemetryType: logs
        Type: flusher_sls
    inputs:
      - EnableContainerDiscovery: true
        FilePaths:
          - /data/logs/app_1/**/test.LOG
        Type: input_file
  logstores:
    - encryptConf: {}
      name: k8s-file
  project:
    name: k8s-log-clusterid
# The application status of the CR.
status:
  # Whether the CR was applied successfully.
  success: false
  # The current status of the CR. If the application fails, the error details are displayed.
  message: |-
    {
      "httpCode": 401,
      "errorCode": "Unauthorized",
      "errorMessage": "The project does not belong to you.",
      "requestID": "xxxxxx"
    }
  # The time when the current status was updated.
  lastUpdateTime: '2024-06-19T09:21:34.215702958Z'
  # The last successfully applied configuration, that is, the configuration actually in effect
  # after default values are filled in. This application failed, so it is empty here.
  lastAppliedConfig:
    config:
      flushers: null
      global: null
      inputs: null
    project:
      name: ""
```

With these error messages, you can quickly locate the problem.
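If you check CR health from a script, the status block shown above is easy to condense. The following is a minimal Python sketch. It assumes the status has already been loaded as a dict, for example from the JSON output of kubectl. It also assumes that `message` holds a JSON object on failure, as in this example, and falls back to the raw text otherwise. `summarize_status` is a hypothetical helper.

```python
import json
from typing import Any, Dict


def summarize_status(status: Dict[str, Any]) -> str:
    """Condense the status block of a ClusterAliyunPipelineConfig into one line."""
    if status.get("success"):
        return "applied"
    raw = status.get("message", "")
    try:
        detail = json.loads(raw)
    except (json.JSONDecodeError, TypeError):
        detail = None
    if not isinstance(detail, dict):
        # Assumption: messages that are not JSON objects are shown verbatim.
        return f"failed: {raw}"
    return (
        f"failed: HTTP {detail.get('httpCode')} {detail.get('errorCode')}: "
        f"{detail.get('errorMessage')}"
    )
```

For the status above, this yields "failed: HTTP 401 Unauthorized: The project does not belong to you."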

AliyunPipelineConfig also retries failures automatically. If an error is temporary (for example, the project's Logstore quota is insufficient), it keeps retrying until it succeeds, with exponentially increasing intervals between attempts.
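Retrying "until successful, with exponentially increasing intervals" is a standard exponential backoff. Below is a minimal Python sketch of that pattern. The base delay (1 s), factor (2), and cap (300 s) are illustrative assumptions, not the controller's real settings. `backoff_delay` and `retry_until_success` are hypothetical names.

```python
import itertools
from typing import Callable, Optional


def backoff_delay(attempt: int, base: float = 1.0, factor: float = 2.0, cap: float = 300.0) -> float:
    """Seconds to wait after failed attempt number `attempt` (0-based)."""
    # Clamp the exponent so factor ** attempt cannot overflow a float on long outages.
    return min(cap, base * factor ** min(attempt, 64))


def retry_until_success(
    op: Callable[[], bool],
    sleep: Callable[[float], None],
    max_attempts: Optional[int] = None,
) -> int:
    """Call op() until it returns True, sleeping with exponential backoff between failures.

    Returns the number of calls made. max_attempts=None retries forever, mirroring
    "retry until successful"; otherwise RuntimeError is raised once attempts run out.
    """
    for attempt in itertools.count():
        if op():
            return attempt + 1
        if max_attempts is not None and attempt + 1 >= max_attempts:
            raise RuntimeError(f"gave up after {attempt + 1} attempts")
        sleep(backoff_delay(attempt))
    raise AssertionError("unreachable: itertools.count() never ends")
```

In real use you would pass `time.sleep` as `sleep`. Kubernetes controllers typically get the same effect by requeueing the object through a rate-limited work queue rather than sleeping in place.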

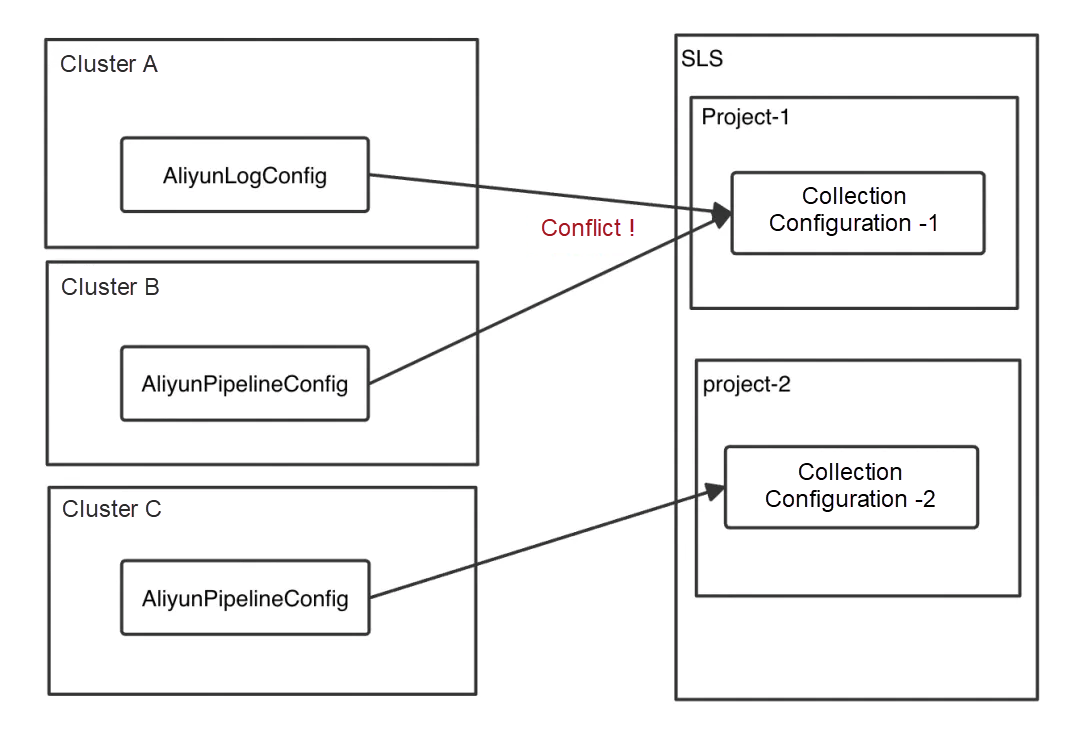

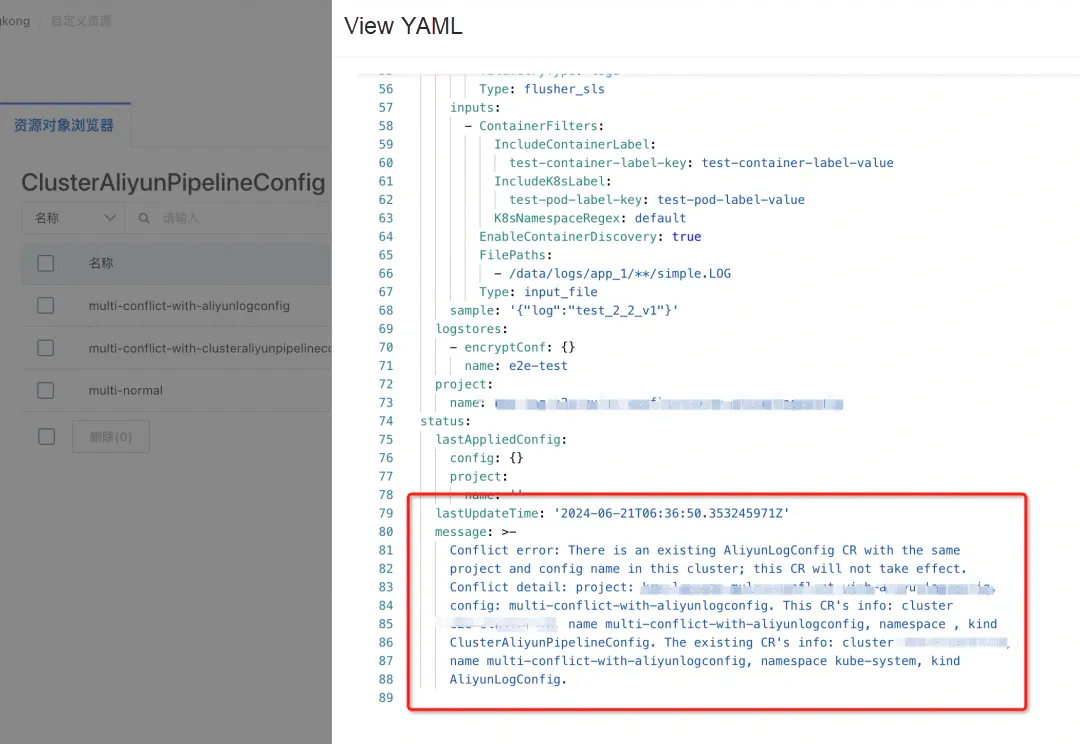

In the era of AliyunLogConfig, we might encounter issues where inadvertently several CRs point to the same project/config, resulting in overlapping configurations and affecting the log collection.

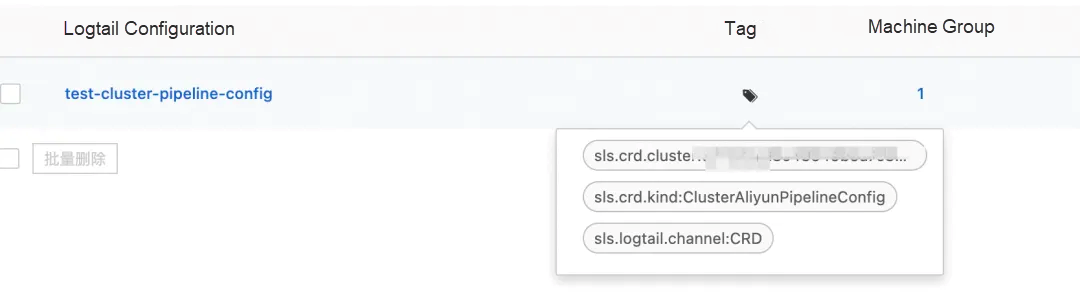

AliyunPipelineConfig will help you solve this problem! When you create a collection configuration, the AliyunPipelineConfig labels the collection configuration and records the cluster, type, and namespace of the CR.

For a tagged collection configuration, only the creator can modify it. Requests from other CRs will be rejected. Of course, the rejected CR will also write the rejection information to Status, telling you that it conflicts with xx CR named xx in xx cluster xx namespace xx type, so that the problem can be quickly located and solved.
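The ownership rule can be sketched as a comparison between the owner recorded in the configuration's tags and the CR making the request. This is a minimal Python sketch under stated assumptions. The tag keys in `TAG_KEYS` are hypothetical; the keys alibaba-log-controller actually writes are not given here. Treating an untagged configuration as claimable is also an assumption.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical tag keys; the keys alibaba-log-controller actually writes may differ.
TAG_KEYS = ("owner.cluster", "owner.namespace", "owner.kind", "owner.name")


@dataclass(frozen=True)
class CROwner:
    cluster: str
    namespace: str  # empty for cluster-scoped CRs such as ClusterAliyunPipelineConfig
    kind: str
    name: str

    def to_tags(self) -> Dict[str, str]:
        return dict(zip(TAG_KEYS, (self.cluster, self.namespace, self.kind, self.name)))


def check_ownership(config_tags: Dict[str, str], requester: CROwner) -> Optional[str]:
    """Return None if `requester` may modify the collection configuration,
    otherwise a conflict message suitable for the CR's Status."""
    if not all(key in config_tags for key in TAG_KEYS):
        return None  # assumption: an untagged configuration can be claimed
    owner = CROwner(*(config_tags[key] for key in TAG_KEYS))
    if owner == requester:
        return None
    return (
        f"conflicts with {owner.kind} {owner.name!r} in cluster {owner.cluster!r}, "
        f"namespace {owner.namespace!r}"
    )
```

Two CRs with the same name in different clusters count as different owners here. This is why the same manifest applied to a second cluster is rejected rather than silently overwriting the first.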

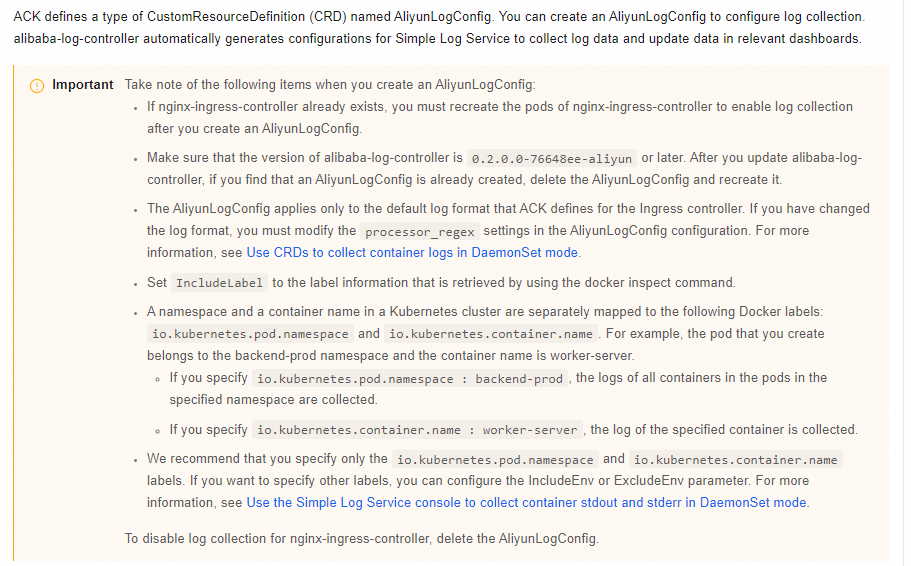

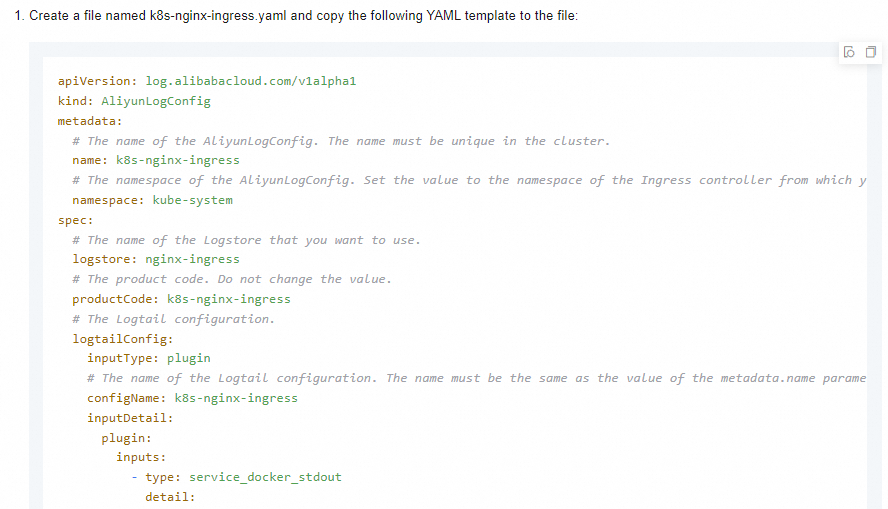

Take the log collection documentation for the ACK Ingress component as an example. It configures a complex AliyunLogConfig CR and needs a lengthy explanation:

We now implement the same thing with AliyunPipelineConfig:

• Replace <your-project-name> with your actual project.

• Replace <your-endpoint> with your actual endpoint, for example, cn-hangzhou.log.aliyuncs.com.

• Replace <your-region> with your actual region, for example, cn-hangzhou.

```yaml
apiVersion: telemetry.alibabacloud.com/v1alpha1
kind: ClusterAliyunPipelineConfig
metadata:
  name: k8s-nginx-ingress
spec:
  # Specify the target project.
  project:
    name: <your-project-name>
  logstores:
    # Create a Logstore to store Ingress logs.
    - name: nginx-ingress
      # The product code. Do not change the value.
      productCode: k8s-nginx-ingress
  # Add a collection configuration.
  config:
    # Define the input plug-in.
    inputs:
      # Use the service_docker_stdout plug-in to collect the container's stdout and stderr.
      - Type: service_docker_stdout
        Stdout: true
        Stderr: true
        # Filter the target container by its container label.
        IncludeLabel:
          io.kubernetes.container.name: nginx-ingress-controller
    # Define the parsing plug-in.
    processors:
      - Type: processor_regex
        KeepSource: false
        Keys:
          - client_ip
          - x_forward_for
          - remote_user
          - time
          - method
          - url
          - version
          - status
          - body_bytes_sent
          - http_referer
          - http_user_agent
          - request_length
          - request_time
          - proxy_upstream_name
          - upstream_addr
          - upstream_response_length
          - upstream_response_time
          - upstream_status
          - req_id
          - host
          - proxy_alternative_upstream_name
        NoKeyError: true
        NoMatchError: true
        # The regular expression used to extract fields. For each log that matches, the content
        # of each capture group is mapped to the corresponding key above.
        Regex: ^(\S+)\s-\s\[([^]]+)]\s-\s(\S+)\s\[(\S+)\s\S+\s"(\w+)\s(\S+)\s([^"]+)"\s(\d+)\s(\d+)\s"([^"]*)"\s"([^"]*)"\s(\S+)\s(\S+)+\s\[([^]]*)]\s(\S+?(?:,\s\S+?)*)\s(\S+?(?:,\s\S+?)*)\s(\S+?(?:,\s\S+?)*)\s(\S+?(?:,\s\S+?)*)\s(\S+)\s*(\S*)\s*\[*([^]]*)\]*.*
        SourceKey: content
    # Define the output plug-in.
    flushers:
      # Use the flusher_sls plug-in to write data to a specified Logstore.
      - Type: flusher_sls
        Logstore: nginx-ingress
        Endpoint: <your-endpoint>
        Region: <your-region>
        TelemetryType: logs
```

This sets up the Ingress log parsing configuration with a much clearer structure.

First, keep in mind that data collection depends on three things:

• Data Source

• Collectors

• Collection Configuration

To deliver data across regions and accounts, you need to modify two sides: the side that generates collection configurations (alibaba-log-controller) and the side that collects data (iLogtail).

Let's walk through an example of the cross-region and cross-account capabilities.

Assume that the data source is in the Hangzhou region of Account A and you want to deliver the data to the Shanghai region of Account B.

First, you need to modify the startup parameters of iLogtail.

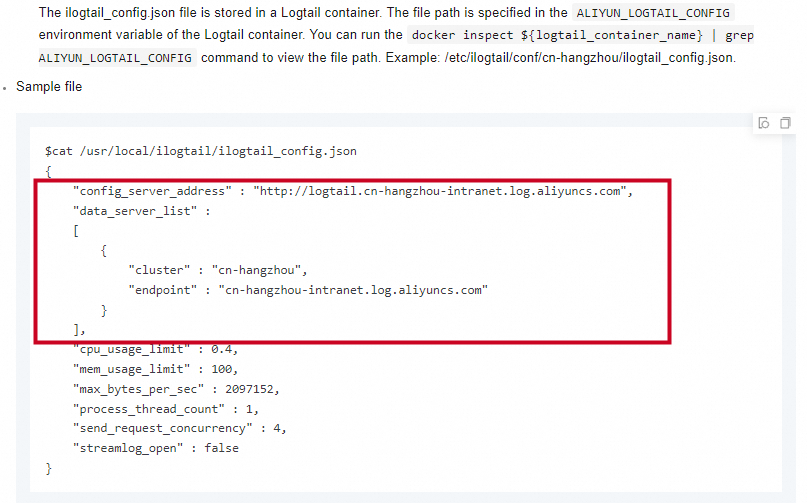

The iLogtail startup parameters are described in the documentation. Here we only need to look at two parameters in the ilogtail_config.json file: config_server_address and data_server_list.

• config_server_address specifies the address from which configurations are pulled. iLogtail uses this address to pull configurations from the corresponding region.

• data_server_list specifies the addresses to which data is sent. iLogtail can send data to these regions.

By default, the startup parameter file contains only one region, as described in the documentation.

1. To support cross-region delivery, add the Shanghai region information. The modified configuration is as follows:

```json
{
  "config_server_address": "http://logtail.cn-hangzhou.log.aliyuncs.com",
  "config_server_address_list": [
    "http://logtail.cn-shanghai.log.aliyuncs.com",
    "http://logtail.cn-hangzhou.log.aliyuncs.com"
  ],
  "data_server_list": [
    {
      "cluster": "cn-shanghai",
      "endpoint": "cn-shanghai.log.aliyuncs.com"
    },
    {
      "cluster": "cn-hangzhou",
      "endpoint": "cn-hangzhou.log.aliyuncs.com"
    }
  ]
}
```

2. Save this file in a ConfigMap and mount it into the DaemonSet named logtail-ds in the kube-system namespace. In this example, the path in the container is /etc/ilogtail/ilogtail_config.json.

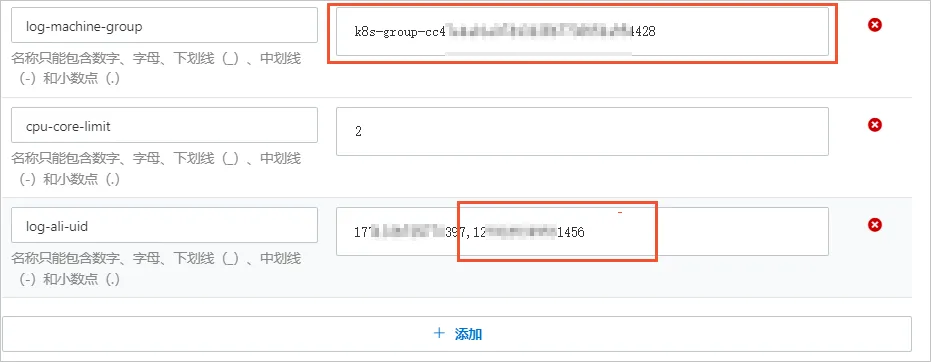

3. In the kube-system namespace of the cluster, find the ConfigMap named alibaba-log-configuration.

4. Edit the value of log-ali-uid to add the UID of account B. Separate multiple UIDs with commas, for example, 17397,12456.

5. Change the value of log-config-path to the mounted path /etc/ilogtail/ilogtail_config.json.

6. Record the value of the log-machine-group configuration item.

7. Restart the DaemonSet named logtail-ds in the kube-system namespace.

iLogtail can now pull collection configurations from the Shanghai region and send data to Shanghai, and it carries the user ID of account B.
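Hand-editing ilogtail_config.json makes it easy to drop or add a comma and produce invalid JSON. The following minimal Python sketch generates the same structure as the modified file in step 1 and updates the comma-separated log-ali-uid value from step 4. The public endpoint URL patterns are copied from the example. Whether you need intranet endpoints instead is an assumption you should verify for your environment. `build_ilogtail_config` and `merge_ali_uids` are hypothetical helpers.

```python
import json
from typing import Any, Dict, List


def build_ilogtail_config(home_region: str, extra_regions: List[str]) -> Dict[str, Any]:
    """Build an ilogtail_config.json body for cross-region delivery.

    home_region stays in config_server_address; every region (extra ones first,
    then the home region) is listed in config_server_address_list and data_server_list.
    """
    regions = [r for r in extra_regions if r != home_region] + [home_region]
    return {
        "config_server_address": f"http://logtail.{home_region}.log.aliyuncs.com",
        "config_server_address_list": [f"http://logtail.{r}.log.aliyuncs.com" for r in regions],
        "data_server_list": [{"cluster": r, "endpoint": f"{r}.log.aliyuncs.com"} for r in regions],
    }


def merge_ali_uids(current: str, *new_uids: str) -> str:
    """Append UIDs to the comma-separated log-ali-uid value, skipping duplicates."""
    uids = [u.strip() for u in current.split(",") if u.strip()]
    for uid in new_uids:
        if uid not in uids:
            uids.append(uid)
    return ",".join(uids)


if __name__ == "__main__":
    # Prints the file content to store in the ConfigMap.
    print(json.dumps(build_ilogtail_config("cn-hangzhou", ["cn-shanghai"]), indent=2))
```

Because the output is produced by `json.dumps`, it is always syntactically valid JSON, whichever regions you add.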

Modifications to alibaba-log-controller are relatively simple:

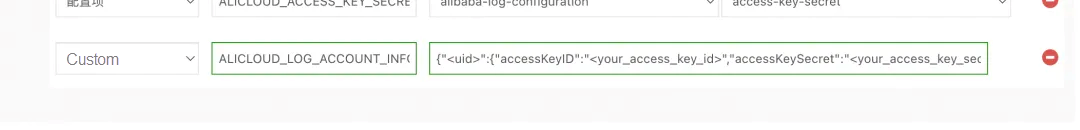

1. In the kube-system namespace, find the Deployment named alibaba-log-controller.

2. Add the environment variable ALICLOUD_LOG_ACCOUNT_INFOS={"<uid>":{"accessKeyID":"<your_access_key_id>","accessKeySecret":"<your_access_key_secret>"}}, where <uid> is the UID of account B and the AccessKey pair has the required SLS permissions.

3. Restart alibaba-log-controller. It now has permission to create SLS resources (Logstores, collection configurations, and so on) under account B.
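The value of ALICLOUD_LOG_ACCOUNT_INFOS is a JSON object keyed by UID. Building it with a JSON serializer avoids quoting mistakes. This is a minimal Python sketch. `account_infos_env` is a hypothetical helper. Listing several UIDs in one object is an assumption inferred from the keyed format, not something the steps above confirm.

```python
import json
from typing import Dict, Tuple


def account_infos_env(accounts: Dict[str, Tuple[str, str]]) -> str:
    """Serialize {uid: (access_key_id, access_key_secret)} into the compact JSON
    shape used for ALICLOUD_LOG_ACCOUNT_INFOS."""
    return json.dumps(
        {uid: {"accessKeyID": ak, "accessKeySecret": sk} for uid, (ak, sk) in accounts.items()},
        separators=(",", ":"),
    )
```

Because the value contains an AccessKey secret, consider storing it in a Kubernetes Secret and referencing it from the Deployment with `valueFrom.secretKeyRef` rather than writing it inline.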

Editing the AliyunPipelineConfig CR is the simplest step. You only need to add uid and endpoint to the project field. Remember to also update Endpoint and Region in flusher_sls.

```yaml
apiVersion: telemetry.alibabacloud.com/v1alpha1
kind: ClusterAliyunPipelineConfig
metadata:
  name: k8s-nginx-ingress
spec:
  # Specify the target project.
  project:
    # The project of account B.
    name: <your-project-name>
    # The UID of account B.
    uid: <uid-user-b>
    # The target endpoint.
    endpoint: cn-shanghai.log.aliyuncs.com
  # Add a collection configuration.
  config:
    # Define the input plug-in.
    inputs:
      # Omitted here.
      ...
    # Define the output plug-in.
    flushers:
      # Use the flusher_sls plug-in to write data to a specified Logstore.
      - Type: flusher_sls
        Logstore: nginx-ingress
        Endpoint: cn-shanghai.log.aliyuncs.com
        Region: cn-shanghai
        TelemetryType: logs
```

The collection configuration is then created in the project of account B, and data is collected as expected.

Remember the one AliyunPipelineConfig parameter we skipped earlier? That's enableUpgradeOverride!

When the following conditions are met, the AliyunLogConfig can be directly upgraded to AliyunPipelineConfig:

1. AliyunLogConfig and AliyunPipelineConfig are in the same cluster.

a) If they are not in the same cluster, the AliyunPipelineConfig fails to be applied because of a configuration conflict.

2. AliyunLogConfig and AliyunPipelineConfig point to the same collection configuration:

a) The target project is the same.

b) The target iLogtail collection configuration name is the same.

3. The enableUpgradeOverride switch is turned on in the AliyunPipelineConfig.
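The three conditions above can be read as a small decision function. This Python sketch is illustrative only. `ConfigRef` and `can_upgrade` are hypothetical names. The "conflict" result when the switch is off is an assumption drawn from the conflict-detection rule (only the creator may modify a tagged configuration), not from the upgrade rules themselves.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfigRef:
    cluster: str      # cluster that hosts the CR
    project: str      # target SLS project
    config_name: str  # target iLogtail collection configuration name


def can_upgrade(log_config: ConfigRef, pipeline_config: ConfigRef, enable_upgrade_override: bool) -> str:
    """Classify what happens when a ClusterAliyunPipelineConfig meets an existing AliyunLogConfig."""
    same_target = (log_config.project, log_config.config_name) == (
        pipeline_config.project,
        pipeline_config.config_name,
    )
    if not same_target:
        return "unrelated"  # condition 2 fails: different collection configurations
    if log_config.cluster != pipeline_config.cluster:
        return "conflict"   # condition 1 fails
    if not enable_upgrade_override:
        return "conflict"   # assumption: without the switch, the existing owner wins
    return "upgrade"        # all three conditions hold
```

For example, an AliyunLogConfig and an AliyunPipelineConfig in the same cluster, targeting the same project and the k8s-nginx-ingress configuration with the switch on, yield "upgrade".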

```yaml
apiVersion: telemetry.alibabacloud.com/v1alpha1
kind: ClusterAliyunPipelineConfig
metadata:
  name: k8s-nginx-ingress
spec:
  # Turn on this switch to overwrite and upgrade the AliyunLogConfig that points to
  # project <your-project-name> and collection configuration k8s-nginx-ingress.
  enableUpgradeOverride: true
  project:
    name: <your-project-name>
  config:
    inputs:
      ...
    flushers:
      ...
```

If these conditions are met, the CRD manager, alibaba-log-controller, performs the following operations:

In this way, AliyunLogConfig can be upgraded to AliyunPipelineConfig.

The introduction to AliyunPipelineConfig ends here. Are you ready to use it now?

AliyunPipelineConfig will first be available in the log component for self-managed Kubernetes clusters, and will then be officially launched on ACK and other container services. You are welcome to try it out and share your feedback; we will keep improving it based on your suggestions.
