By Yuanxiao Zhao

Known for its simplicity, high efficiency, and strong concurrency support, Go is widely recognized as an ideal choice for building microservices. As the primary programming language of Kubernetes and Docker, Go is not only used extensively in cloud-native infrastructure components but is also increasingly adopted by developers to build business applications on microservice architectures.

Through modular design, microservice architecture enhances system flexibility, agility, and scalability, shortens development cycles, and improves resource utilization. This is one of the main reasons why more and more companies adopt it. However, as the business evolves and iterates, the complexity introduced by microservices also makes operations and maintenance (O&M) harder, which in turn affects development efficiency and system stability.

To keep the system stable while enjoying the advantages of microservices, we must continuously address the problems and risks they bring.

In the Go ecosystem, mainstream microservice frameworks currently focus on building microservice applications quickly and on solving inter-service communication, but they still lack microservice governance capabilities. Mainstream governance components such as Sentinel-Golang and OpenTelemetry do address traffic protection and observability, but they require developers to manually instrument business code with SDKs. This distracts developers from implementing business logic and inevitably reduces development efficiency to some extent.

To better govern Go microservices, improve development efficiency, and enhance system stability, this article introduces the microservice governance solution of Microservices Engine (MSE), which provides the governance capabilities described above without any changes to business code.

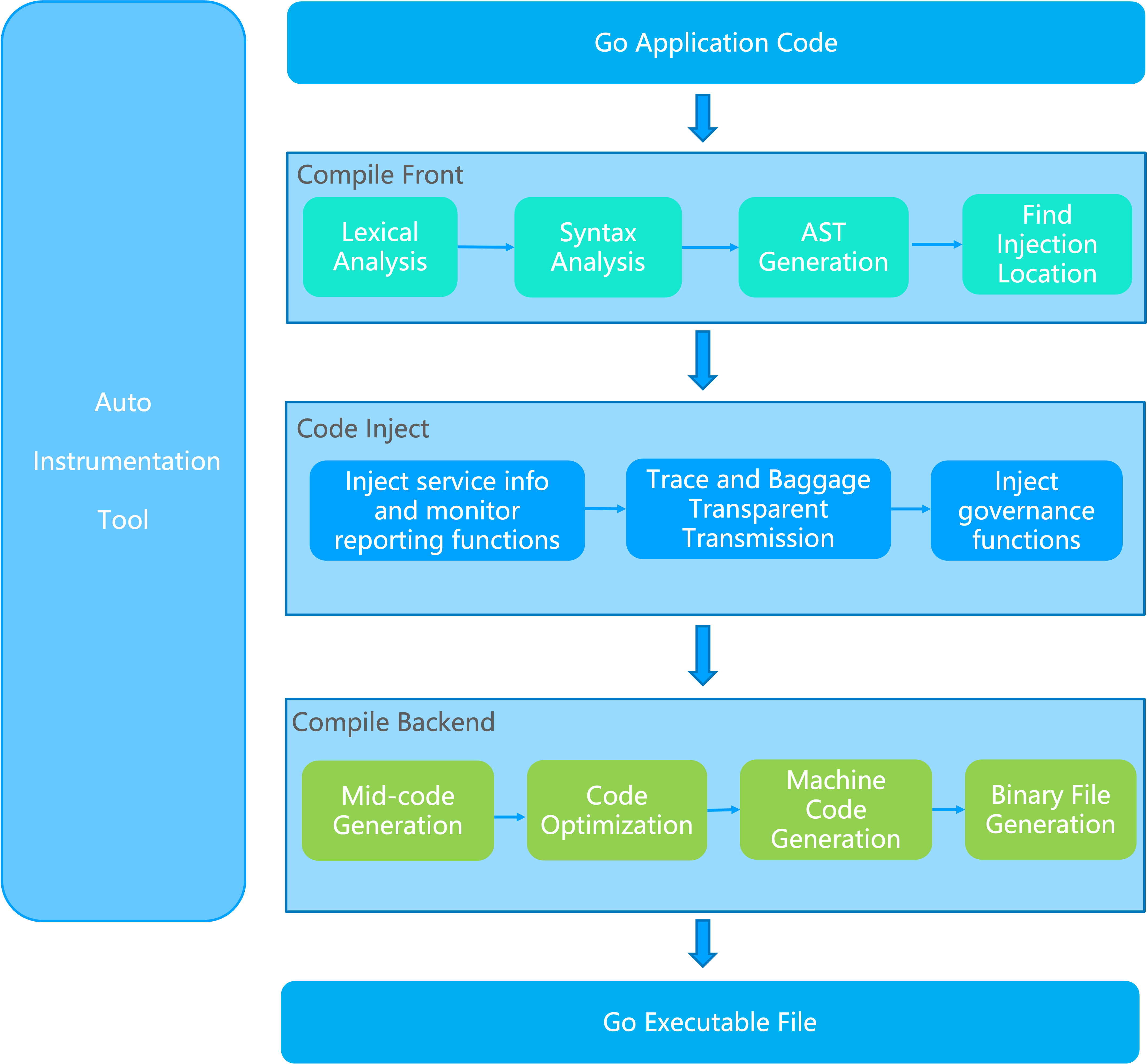

Unlike Java, Go is a statically compiled language: the compiler translates source code directly into machine code, with no virtual machine and no bytecode. This approach cannot shield programs from the underlying operating system, but it delivers higher performance because the code runs directly on the hardware. It also means that the bytecode enhancement used by Java agents is not available to Go, so this solution instruments code at compile time instead.

We use the native -toolexec mechanism of the go build tool to intercept and rewrite code during compilation. Instrumentation is injected into specific Go packages (such as framework SDKs and Go's built-in runtime and net/http packages) to enhance them, thereby giving microservice applications governance capabilities.
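To make the hook point concrete, here is a minimal, hypothetical `-toolexec` wrapper. It is not the opentelemetry-go-auto-instrumentation implementation and performs no instrumentation; it only shows where such a tool sits. With `go build -toolexec=/abs/path/to/wrapper`, the go command runs the wrapper with the real tool's path followed by that tool's arguments, so the wrapper can inspect (and, in a real tool, rewrite) what is passed to `compile` before delegating to it. The helper names and the logging behavior are assumptions for illustration.

```go
// A hypothetical -toolexec wrapper: logs each package passed to the compiler,
// then runs the real tool unchanged.
package main

import (
	"errors"
	"fmt"
	"os"
	"os/exec"
	"path/filepath"
	"strings"
)

// toolName returns the base name of a Go tool binary, e.g. "compile" or "link".
func toolName(path string) string {
	return strings.TrimSuffix(filepath.Base(path), ".exe")
}

// packagePath returns the import path passed to the compiler via "-p", or "".
func packagePath(args []string) string {
	for i, a := range args {
		if a == "-p" && i+1 < len(args) {
			return args[i+1]
		}
	}
	return ""
}

func main() {
	if len(os.Args) < 2 {
		fmt.Fprintln(os.Stderr, "usage: wrapper <tool> [args...]")
		os.Exit(2)
	}
	tool, args := os.Args[1], os.Args[2:]

	// A real instrumentation tool would rewrite the source files handed to
	// "compile" for selected packages (e.g. net/http) at this point.
	if toolName(tool) == "compile" {
		if pkg := packagePath(args); pkg != "" {
			fmt.Fprintf(os.Stderr, "toolexec: compiling %s\n", pkg)
		}
	}

	// Delegate to the real tool and propagate its exit code.
	cmd := exec.Command(tool, args...)
	cmd.Stdin, cmd.Stdout, cmd.Stderr = os.Stdin, os.Stdout, os.Stderr
	if err := cmd.Run(); err != nil {
		var exitErr *exec.ExitError
		if errors.As(err, &exitErr) {
			os.Exit(exitErr.ExitCode())
		}
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
}
```

Because build results are cached, add `-a` (for example, `go build -a -toolexec=$PWD/wrapper ./...`) to force every package through the compiler, and therefore through the wrapper.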

The compile-time injection framework is now open source, and we welcome participation in community discussions and contributions. For more details, see opentelemetry-go-auto-instrumentation.

To show more intuitively how to govern Go microservices, we walk through a demo that covers the entire process of integrating and using microservice governance, from start to finish.

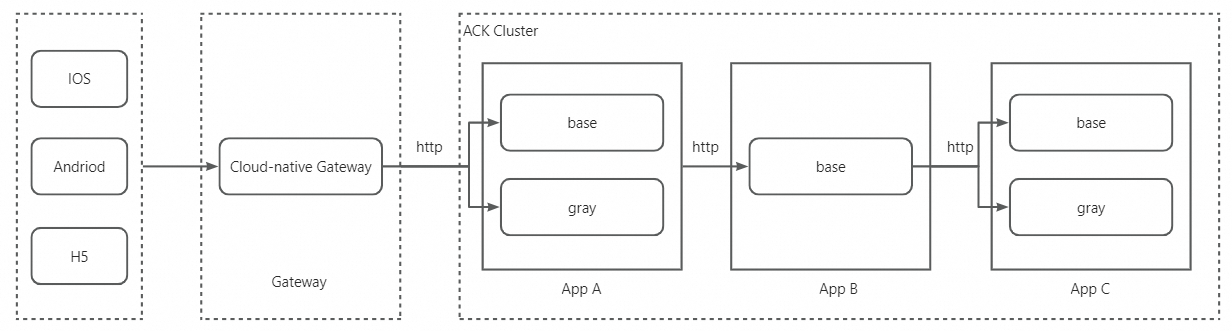

The demo services are deployed in an Alibaba Cloud Container Service for Kubernetes (ACK) cluster. The call chain is Gateway -> A -> B -> C, where the gateway is an MSE Cloud Native Gateway. The applications call each other over HTTP, and service discovery relies on CoreDNS, the Kubernetes standard. Applications A and C each also have a canary version deployed, which is used for canary verification during the iterative release of a requirement.
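Because service discovery relies on CoreDNS, a caller such as application A needs no registry SDK: it simply addresses the downstream Kubernetes Service by its DNS name over plain HTTP. The sketch below assumes the default `cluster.local` cluster domain; the Service name `go-kratos-demo-b`, the namespace, port, and path are hypothetical and not taken from the demo source.

```go
package main

import (
	"context"
	"fmt"
	"io"
	"net/http"
	"time"
)

// serviceURL builds the in-cluster URL of a Kubernetes Service. CoreDNS
// resolves <service>.<namespace>.svc.<cluster-domain>; the default
// "cluster.local" domain is assumed here.
func serviceURL(service, namespace string, port int, path string) string {
	return fmt.Sprintf("http://%s.%s.svc.cluster.local:%d%s", service, namespace, port, path)
}

// callDownstream issues a plain HTTP GET; no service-registry client is involved.
func callDownstream(ctx context.Context, client *http.Client, url string) (string, error) {
	req, err := http.NewRequestWithContext(ctx, http.MethodGet, url, nil)
	if err != nil {
		return "", err
	}
	resp, err := client.Do(req)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return "", err
	}
	if resp.StatusCode != http.StatusOK {
		return "", fmt.Errorf("downstream returned %d: %s", resp.StatusCode, body)
	}
	return string(body), nil
}

func main() {
	// Hypothetical Service name, namespace, port, and path for application B.
	url := serviceURL("go-kratos-demo-b", "default", 8000, "/greet")
	ctx, cancel := context.WithTimeout(context.Background(), 2*time.Second)
	defer cancel()
	body, err := callDownstream(ctx, http.DefaultClient, url)
	if err != nil {
		// Outside a cluster, resolving the Service name is expected to fail.
		fmt.Println("call failed:", err)
		return
	}
	fmt.Println(body)
}
```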

For the source code of the demo services, see mse-go-demo/multiframe.

| Application | Go Version | Microservice Framework and Version | Client Call Method | Service Discovery Method |
|---|---|---|---|---|
| A | Go 1.20 | Gin 1.8.1 | HTTP | CoreDNS |
| B | Go 1.19 | Kratos 2.7.1 | HTTP | CoreDNS |
| C | Go 1.19 | go-zero 1.5.1 | N/A (no downstream calls) | CoreDNS |

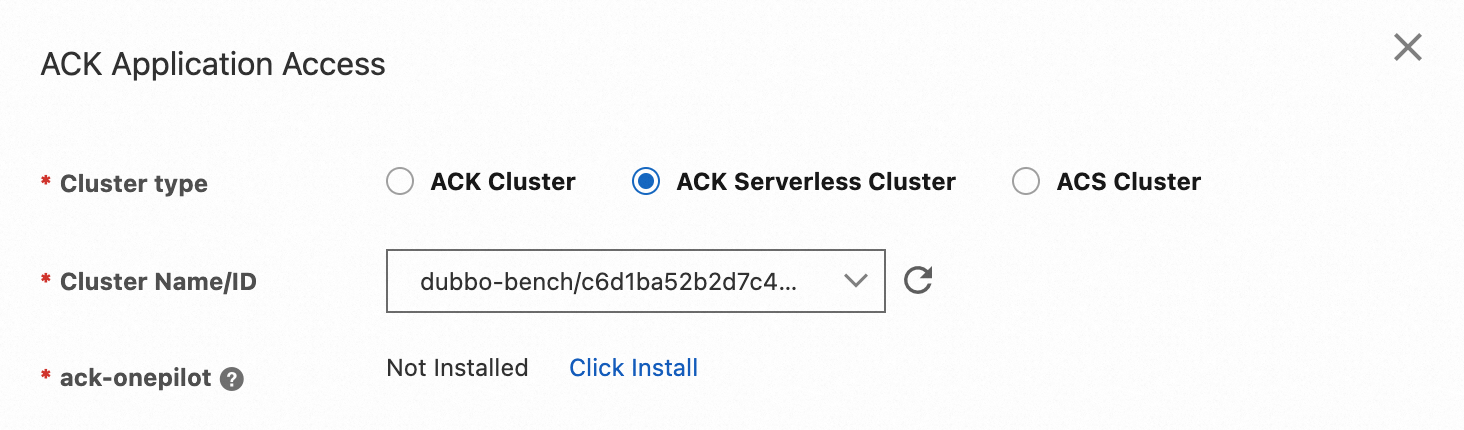

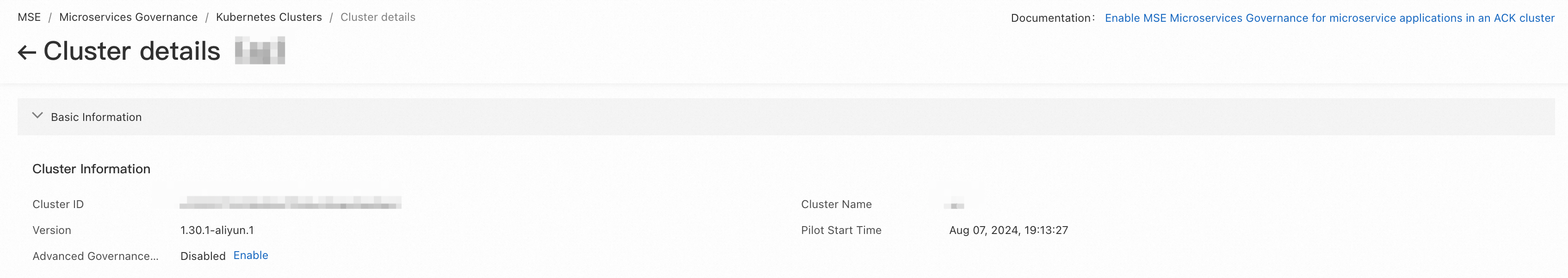

Integrating a Go application with the MSE Service Governance Center takes just the following four steps. Steps 1 and 2 are performed only once, during the initial integration.

1. Install the ACK-Onepilot component for the ACK cluster in the MSE Service Governance Center.

2. Enable advanced governance for the ACK cluster in the MSE Service Governance Center.

3. Download the Instgo tool we provide and use it in place of the go build command to compile the Go application into a binary executable.

```shell
# Generate an executable for the current operating system
./instgo build --mse --licenseKey="{licenseKey}"

# Cross-compilation, for example, generating a Linux executable on macOS
# amd64
CGO_ENABLED=0 GOOS=linux GOARCH=amd64 ./instgo build --mse --licenseKey="{licenseKey}"

# arm64
CGO_ENABLED=0 GOOS=linux GOARCH=arm64 ./instgo build --mse --licenseKey="{licenseKey}"
```

4. After packaging the application into an image and before deploying it to the cluster, add the following labels to the spec.template.metadata.labels section of the application's Deployment YAML file to complete the integration.

```yaml
spec:
  template:
    metadata:
      labels:
        # required
        msePilotAutoEnable: "on" # Enable MSE microservice governance
        mseNamespace: your-namespace # Identify the microservice governance space to which the application belongs
        msePilotCreateAppName: "your-app-name" # Identify the application name
        aliyun.com/app-language: golang # Identify the application as a Golang application
        # optional
        alicloud.service.tag: pod-tag # Identify canary nodes in end-to-end canary release, such as gray, blue, and others.
```

As you can see, the entire integration process involves no changes to business code. Compared with manually modifying frameworks or introducing SDK instrumentation, this approach is clearer and simpler.
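Because the integration is driven entirely by these labels, a typo in a label key is easy to miss. The following hypothetical helper, which is not part of MSE or Instgo, sketches a pre-deployment check of a pod template's label map against the labels marked "required" above. The value checks for `msePilotAutoEnable` and `aliyun.com/app-language` are assumptions based on the snippet's comments.

```go
package main

import "fmt"

// requiredLabels are the pod-template labels marked "required" in the Deployment snippet.
var requiredLabels = []string{
	"msePilotAutoEnable",
	"mseNamespace",
	"msePilotCreateAppName",
	"aliyun.com/app-language",
}

// checkMSELabels returns the problems found in a pod template's labels.
// An empty result means every required label is present with an expected value.
func checkMSELabels(labels map[string]string) []string {
	var problems []string
	for _, key := range requiredLabels {
		if labels[key] == "" {
			problems = append(problems, "missing label "+key)
		}
	}
	if v := labels["msePilotAutoEnable"]; v != "" && v != "on" {
		problems = append(problems, `msePilotAutoEnable should be "on" to enable governance`)
	}
	if v := labels["aliyun.com/app-language"]; v != "" && v != "golang" {
		problems = append(problems, `aliyun.com/app-language should be "golang" for Go applications`)
	}
	return problems
}

func main() {
	labels := map[string]string{
		"msePilotAutoEnable":      "on",
		"mseNamespace":            "mse-go-agent-demo",
		"msePilotCreateAppName":   "go-gin-demo-a",
		"aliyun.com/app-language": "golang",
		"alicloud.service.tag":    "gray", // optional canary tag
	}
	problems := checkMSELabels(labels)
	if len(problems) == 0 {
		fmt.Println("all required MSE labels present")
		return
	}
	for _, p := range problems {
		fmt.Println(p)
	}
}
```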

For more detailed guidance on each step, see Integrating ACK Microservice Applications with MSE Governance Center (Golang Edition).

After completing the integration steps in Section 3.2, you can see the application and service details in the MSE Governance Center console and configure the corresponding service governance rules. This section demonstrates the procedure and its effects in three common governance scenarios: application observation and management, traffic governance, and end-to-end canary release.

Integrate the three Go applications A, B, and C described in Section 3.1 into the MSE Governance Center and set the following:

● mseNamespace=mse-go-agent-demo

● Application A: msePilotCreateAppName=go-gin-demo-a

● Application B: msePilotCreateAppName=go-kratos-demo-b

● Application C: msePilotCreateAppName=go-zero-demo-c

After integration, you can view the details of the corresponding applications under the mse-go-agent-demo namespace in the MSE Governance Center.

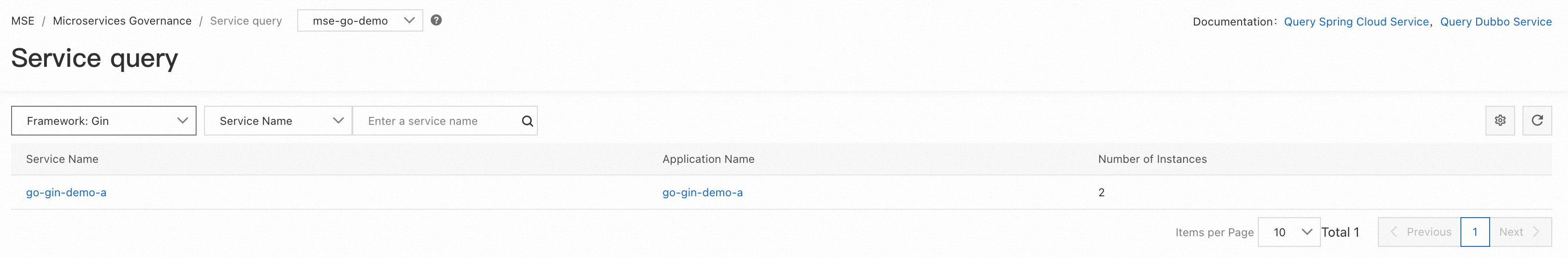

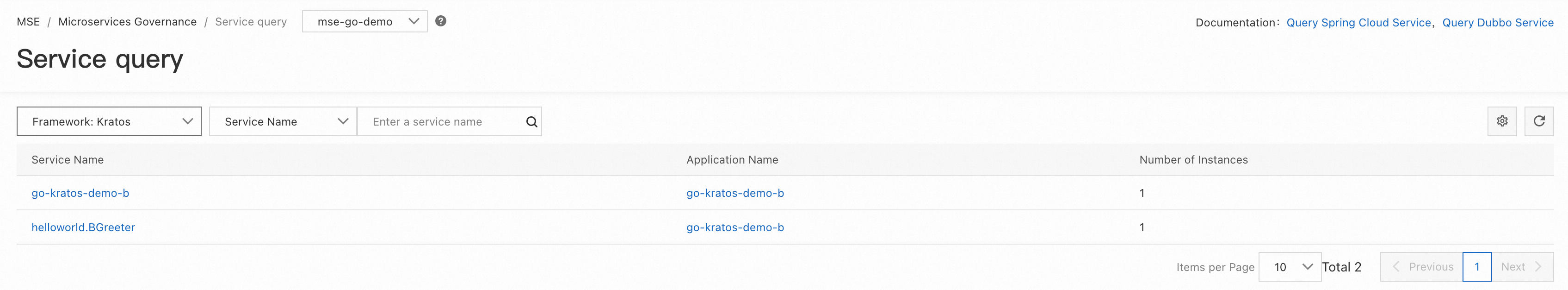

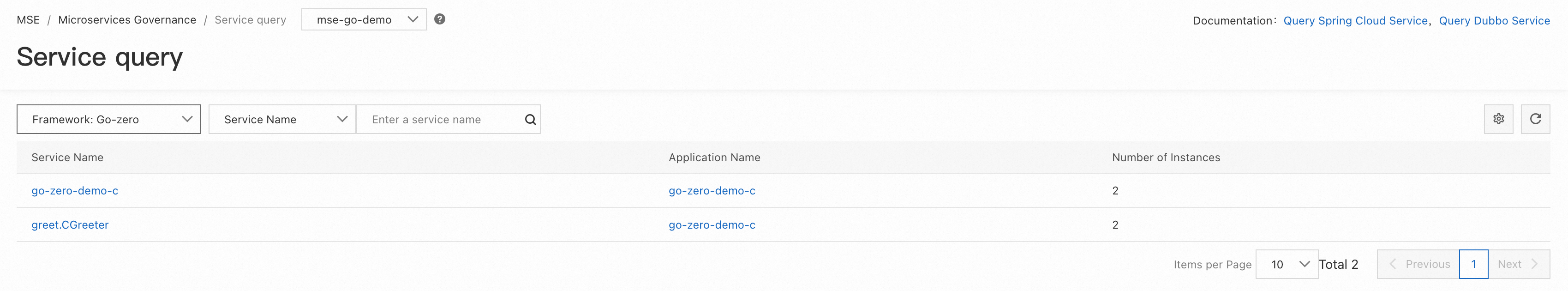

Go to the MSE Service Governance Center and click Service Query to view service information, including the HTTP and RPC services created in each application and their associated metadata, such as interface metadata and service metadata.

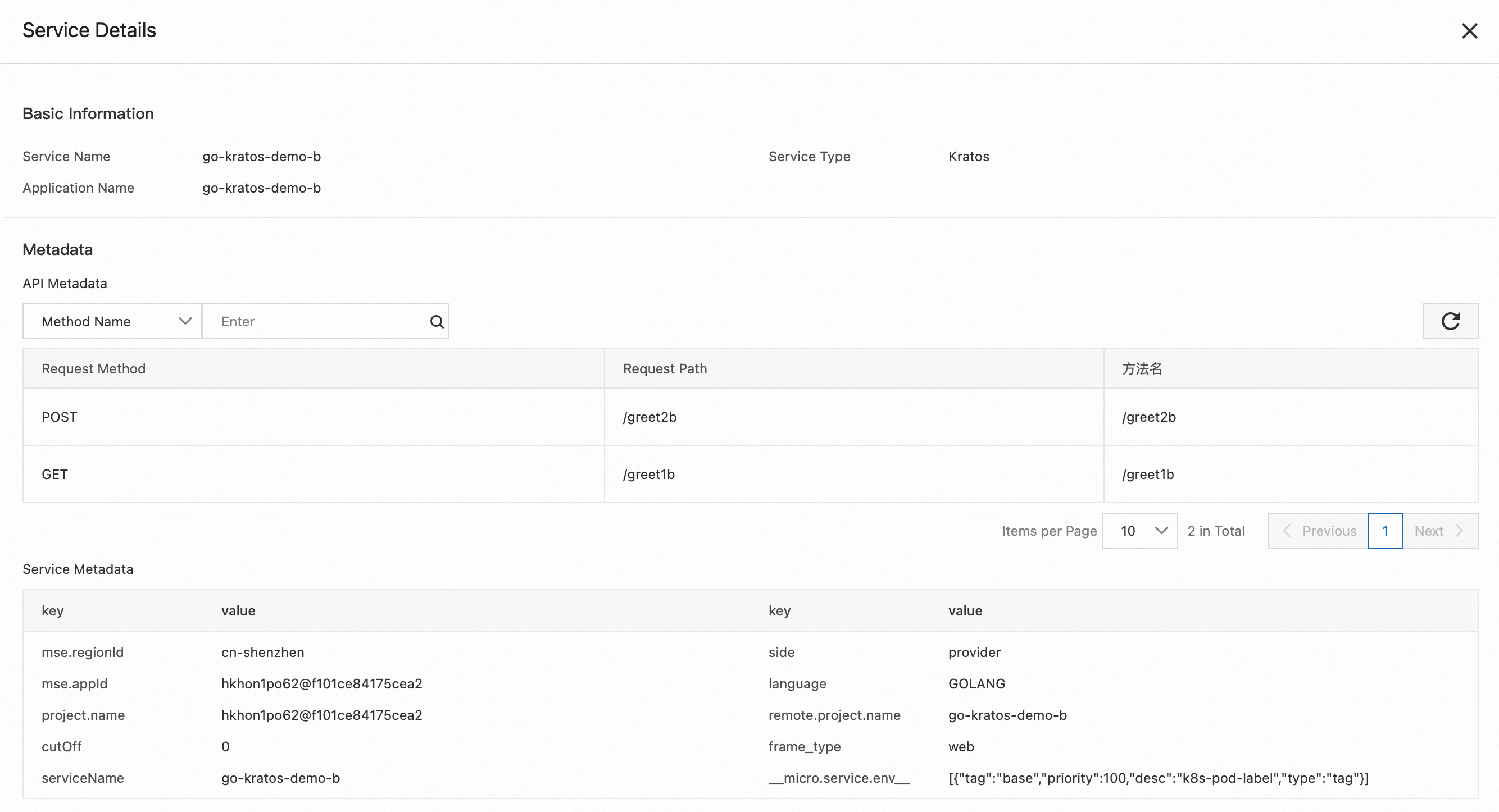

Select Gin, Kratos, or Go-zero in the upper-left corner to view the services of applications A, B, and C, respectively. Because applications B and C each create one HTTP server and one gRPC server to handle different types of requests, the console shows two services for each of them.

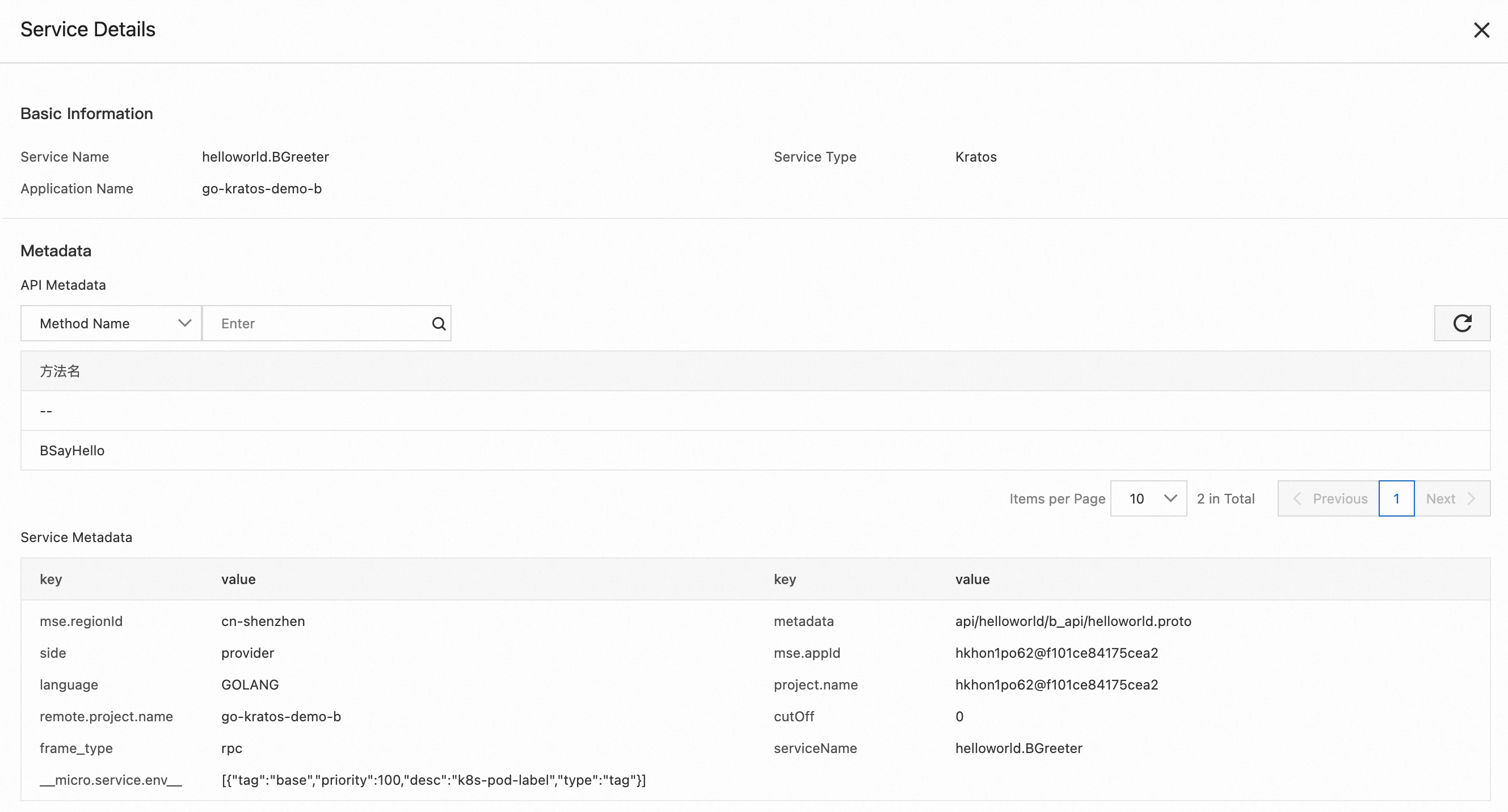

Click the corresponding service to view its detailed metadata. For example, the following is the HTTP service and gRPC service information for application B.

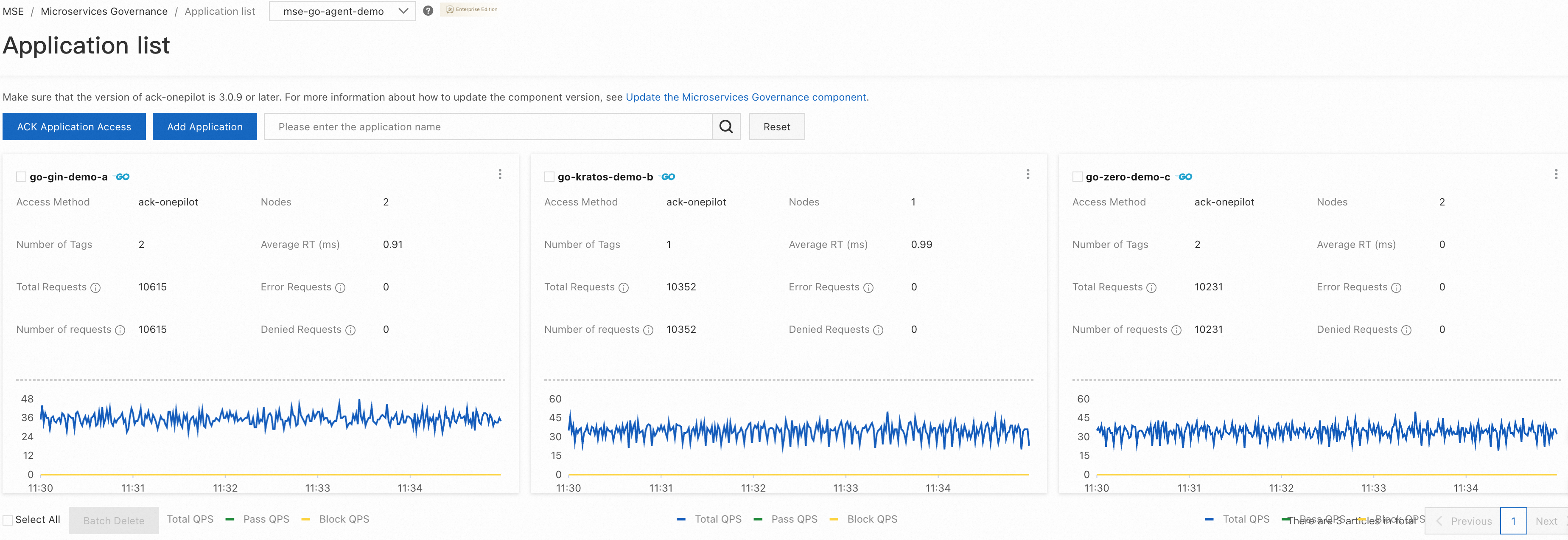

Go to the MSE Service Governance Center and click Application Governance to view operational and system data along different dimensions, such as applications, interfaces, and nodes.

In the application list, you can view the number of nodes, tags, requests, and QPS of different applications.

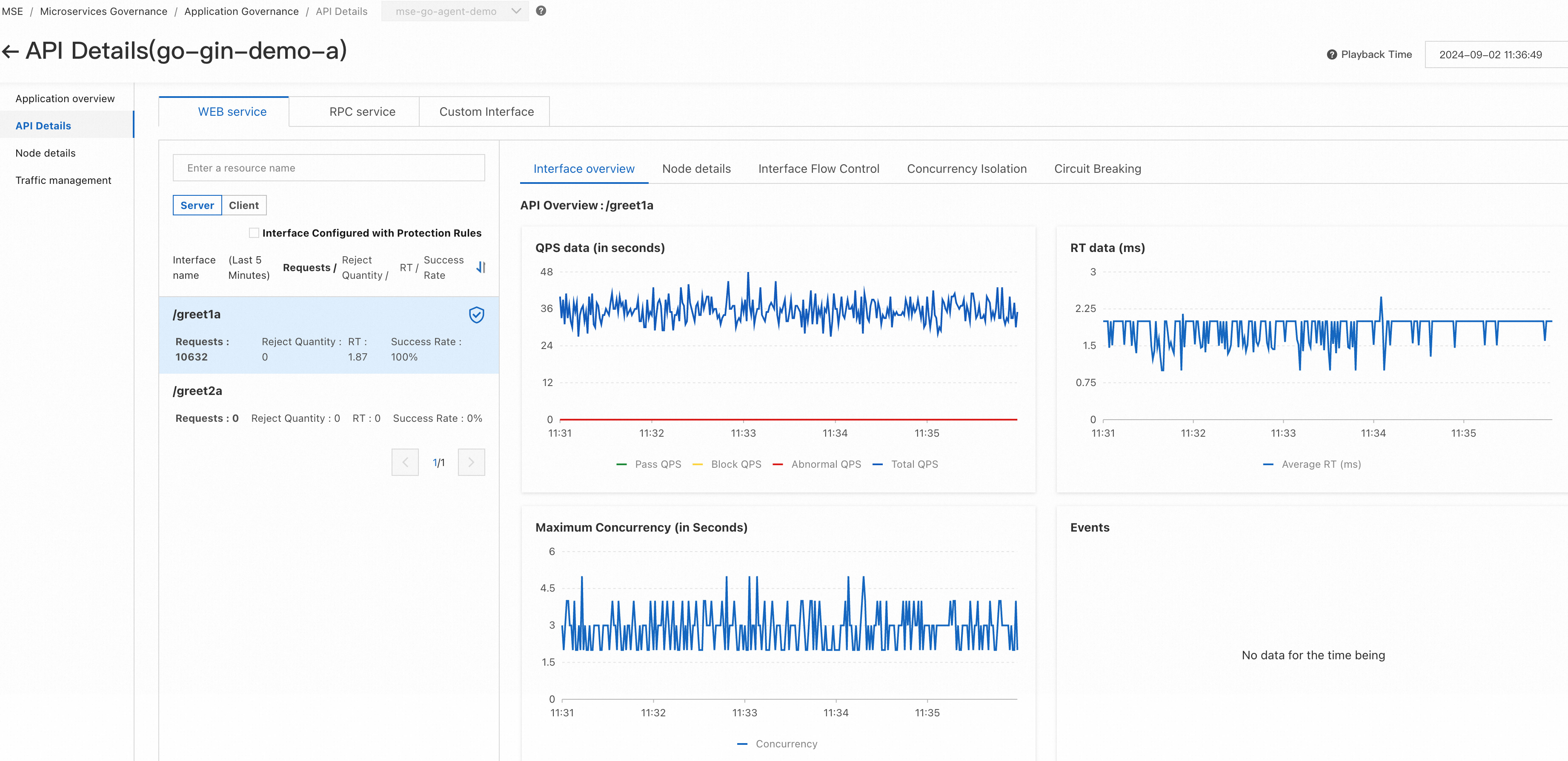

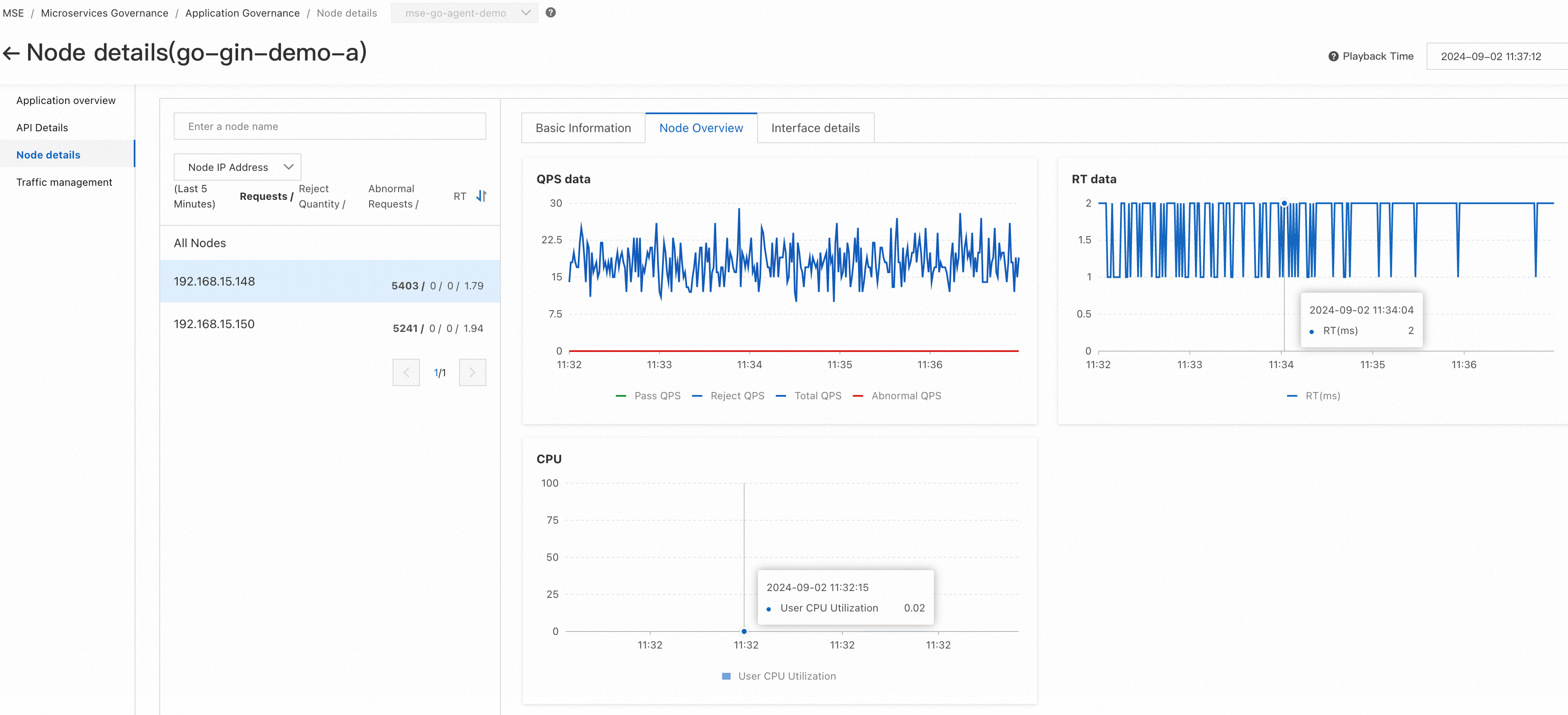

Click the card corresponding to an application to view more detailed information from various perspectives, including overall application data, interface data, and node data.

1) Overall application data

2) Interface data

3) Node data

The following traffic management capabilities are currently available. They cover different scenarios and can be enabled or disabled quickly by configuring rules in the console:

● Interface Flow Control: Set the maximum QPS for a single interface. Requests that exceed this threshold are rejected or placed in a waiting queue.

● Concurrency Isolation: Set the maximum number of concurrent goroutines for a single interface. Requests that exceed this threshold are rejected.

● Circuit Breaking: Configure circuit breaking rules for interfaces, with thresholds based on the slow call ratio or the failure rate. Once a threshold is reached, the circuit breaker trips and requests fail fast for the duration of the break. When the break ends, the interface recovers either through a single probe request or through a progressive strategy.

● Hotspot Parameter Protection: Unlike interface flow control, which applies to an interface as a whole, these rules are defined at the parameter level. For example, you can cap at 10 the concurrency of requests that carry the same value of the Nth parameter of an interface; requests that exceed the threshold fail fast.

● Behavioral Degradation: After interface flow control is triggered, you can customize the protective behavior, for example, returning a specific status code and content, or redirecting.

● Adaptive Overload Protection: CPU usage serves as the measure of instance load, and traffic protection at the entry point is adjusted adaptively to prevent service crashes caused by maxed-out CPU resources.

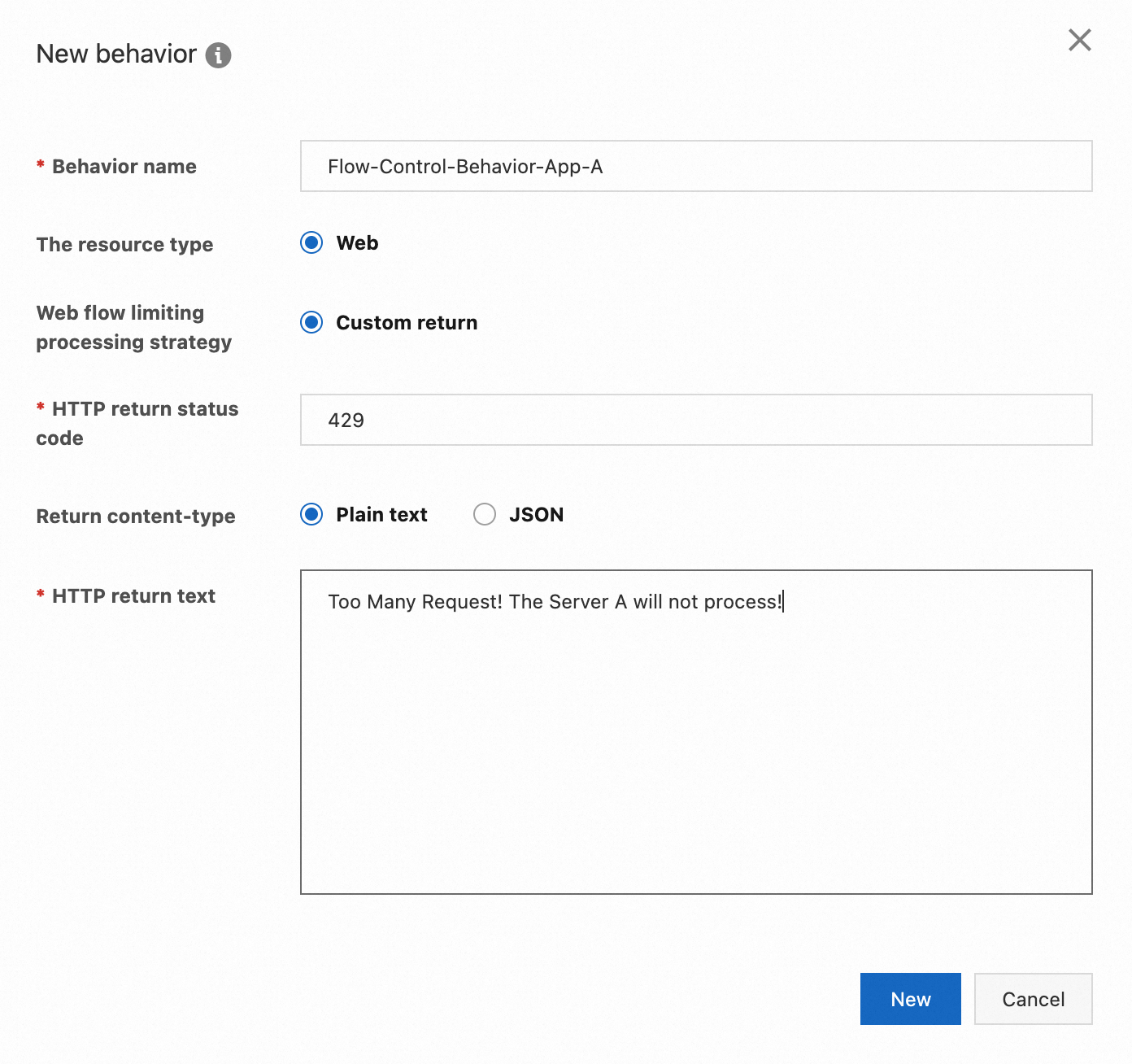

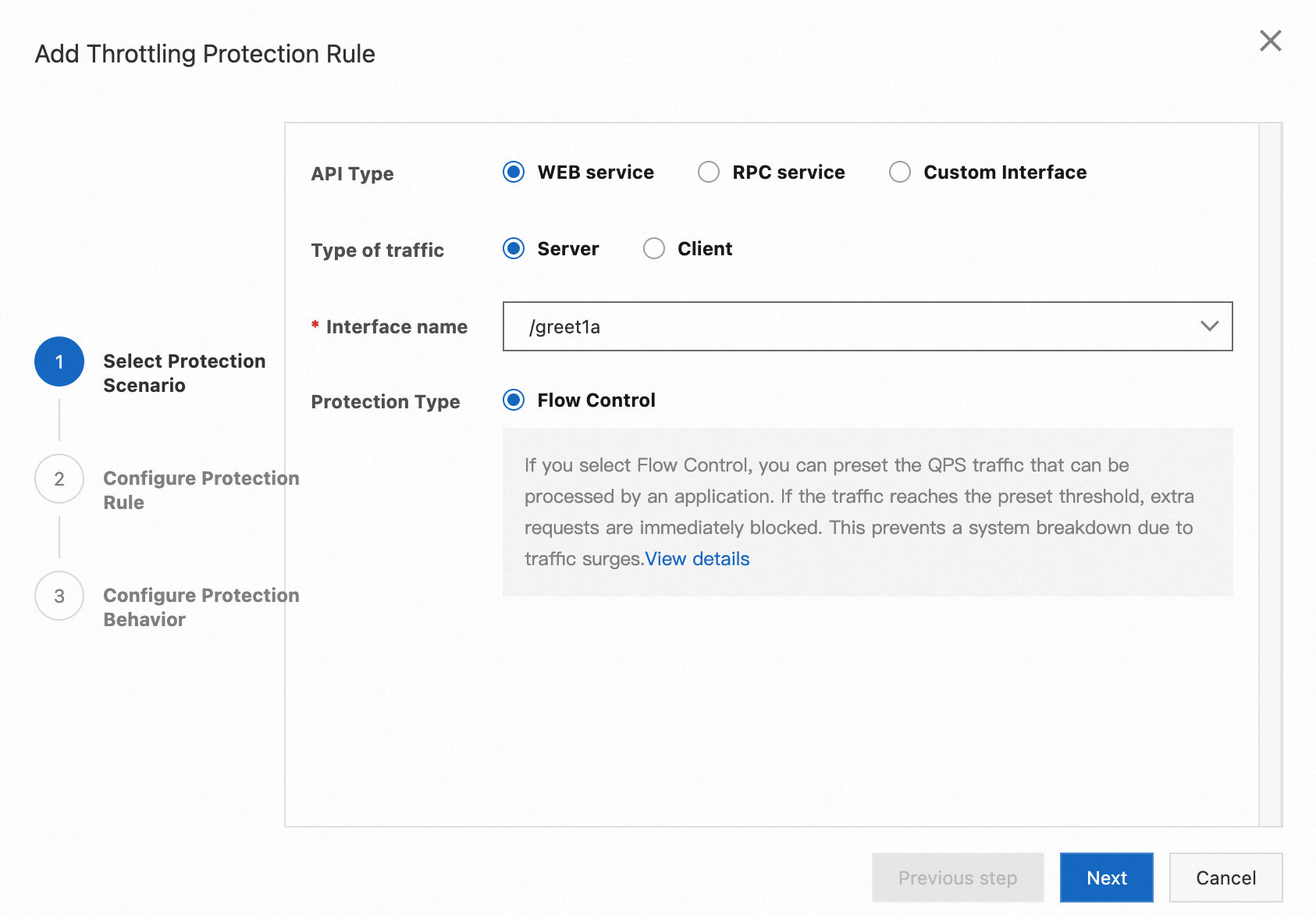

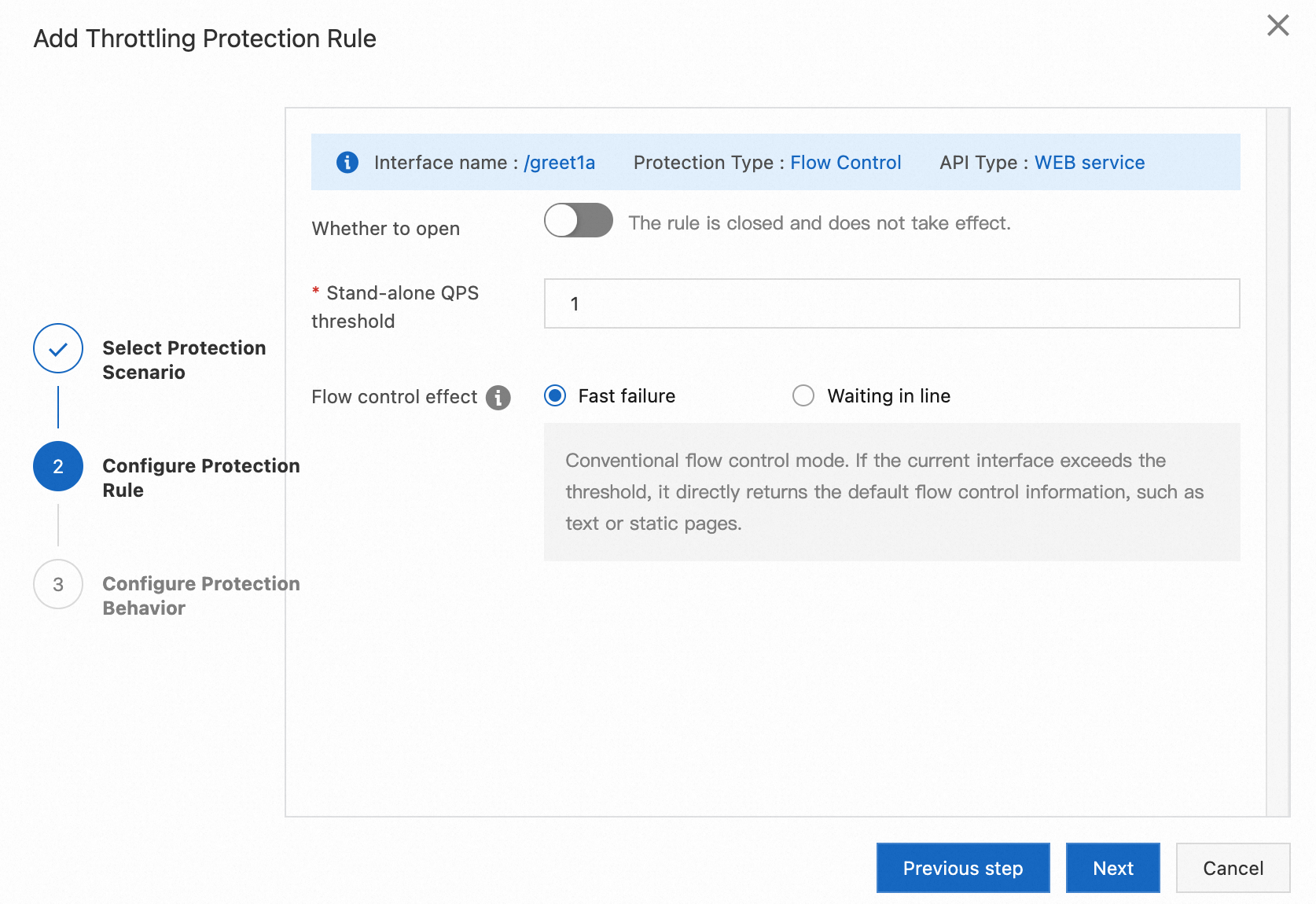

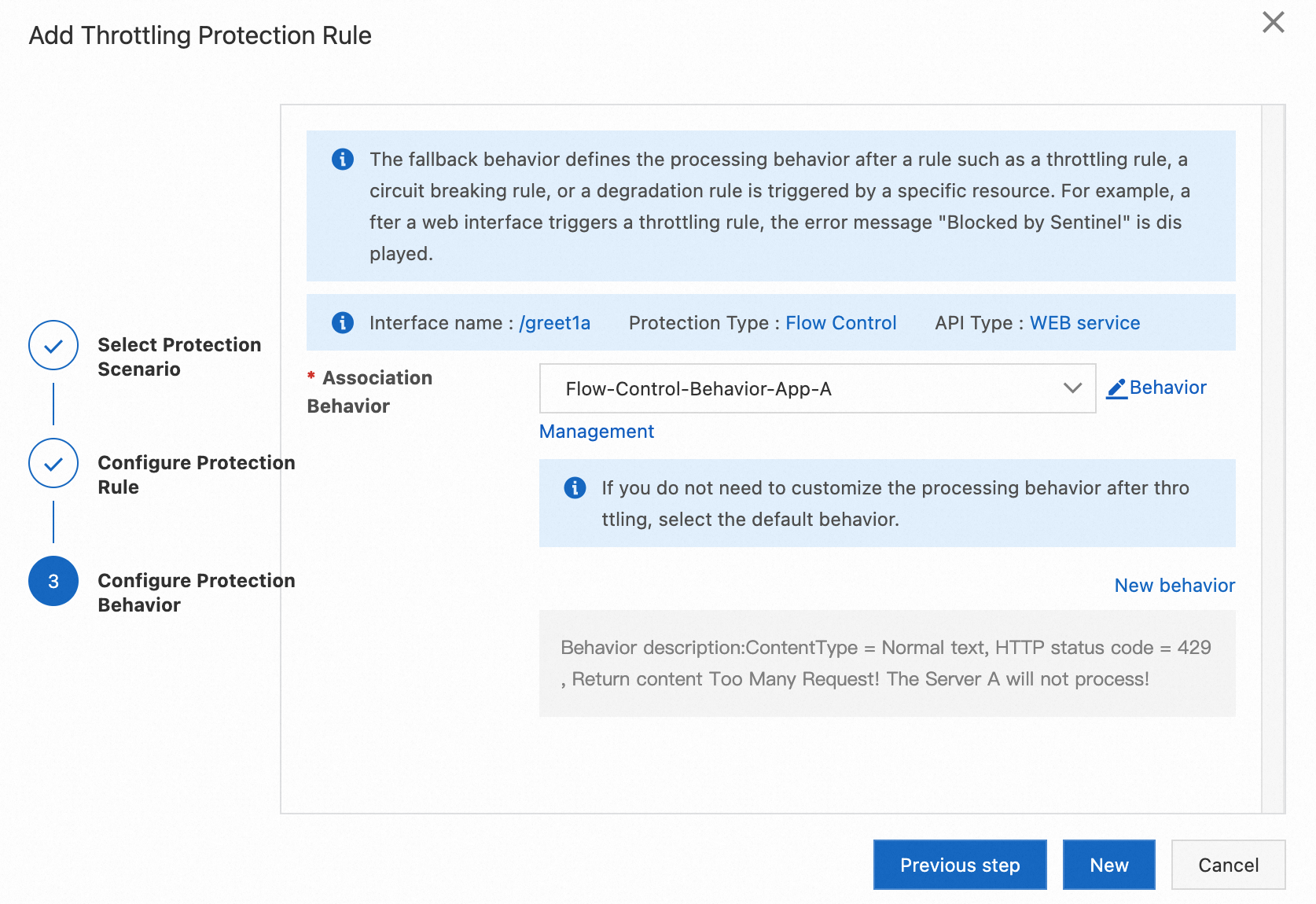

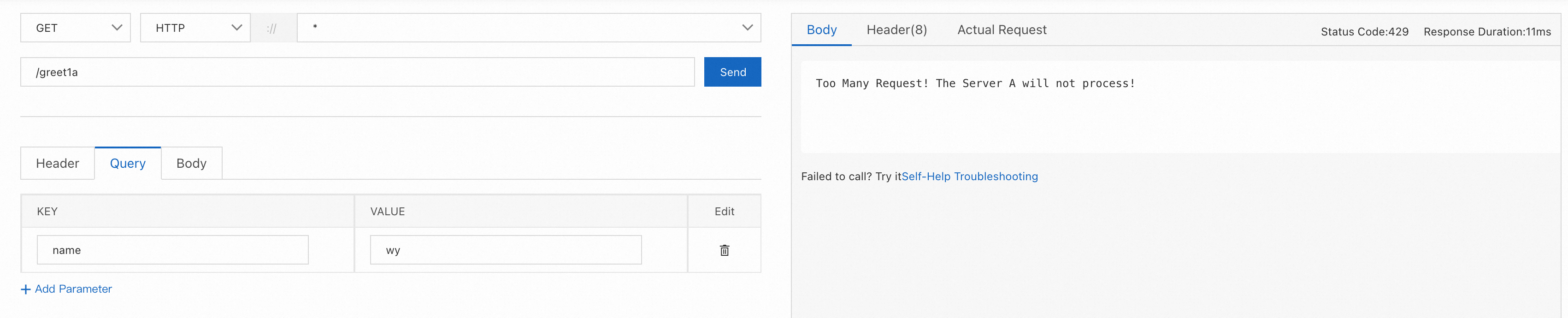

Take application A as an example. Suppose we want to set an interface flow control rule for the /greet1a endpoint with a single-machine QPS threshold of 1. When the threshold is reached, requests should fail immediately and return HTTP status code 429 with the content "Too Many Requests! The Server A will not process!".

To achieve this effect, you can configure the following directly in the MSE Governance Center:

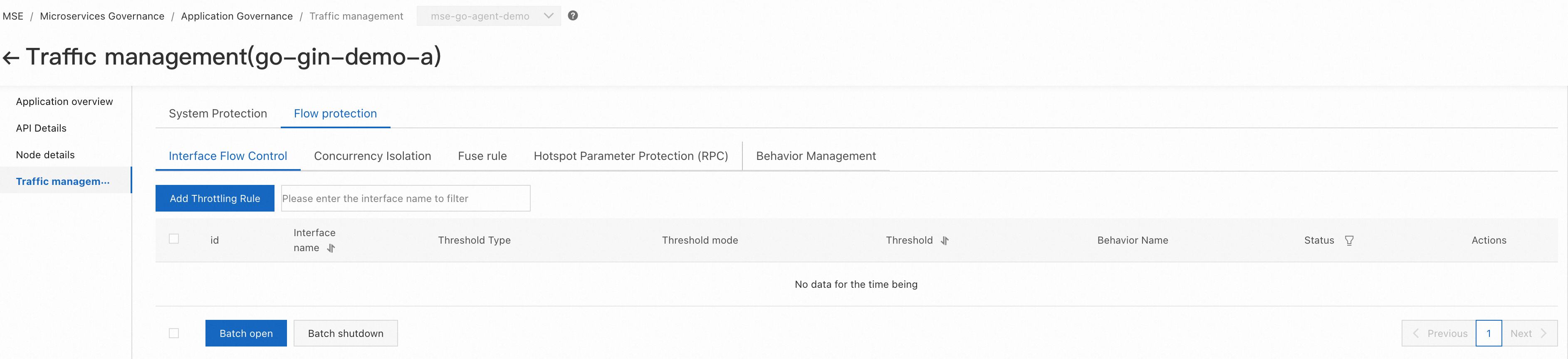

1. Click Application Governance, then click go-gin-demo-a application card, and click Traffic Management

2. Click Flow Protection, and then Behavior Management, and finally click Add Behavior. Configure the behavior as shown below, and click Create.

3. Click Flow Protection, and then Interface Flow Control. Configure flow protection rules as shown in the steps below, and click Add.

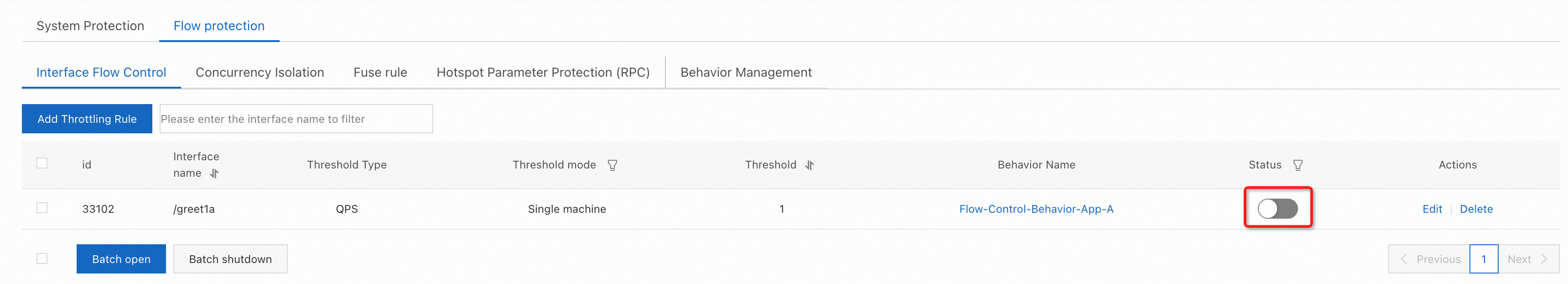

4. In the rule list, click to set the rule status as enabled, and the rule will take effect.

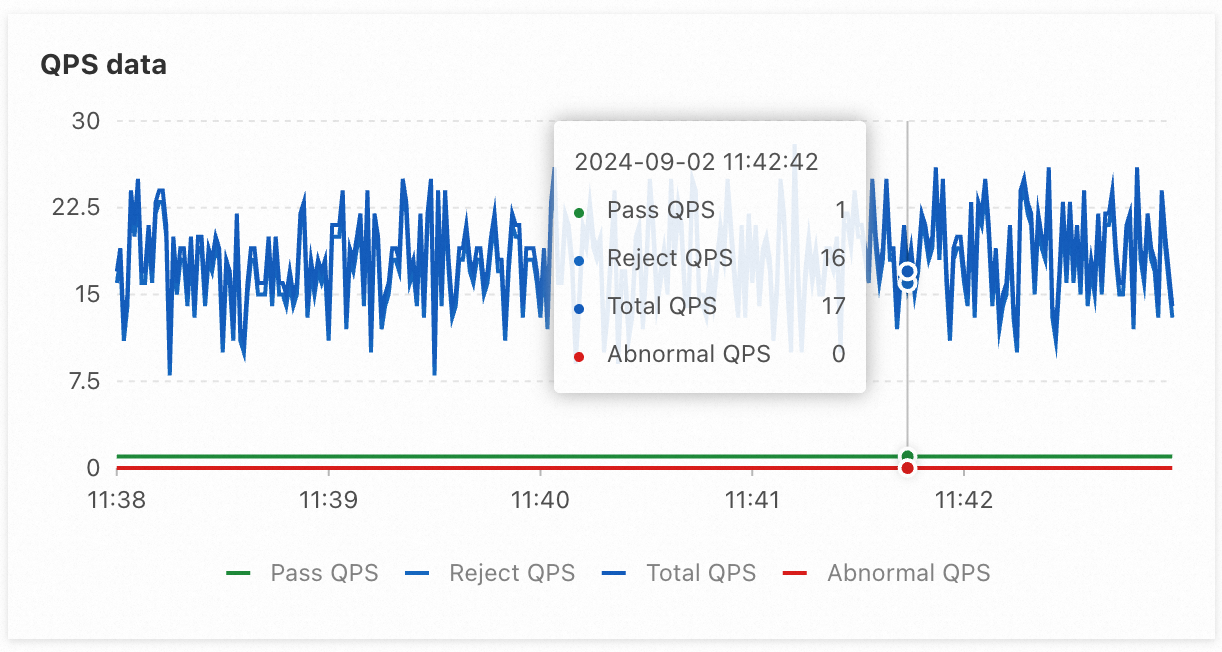

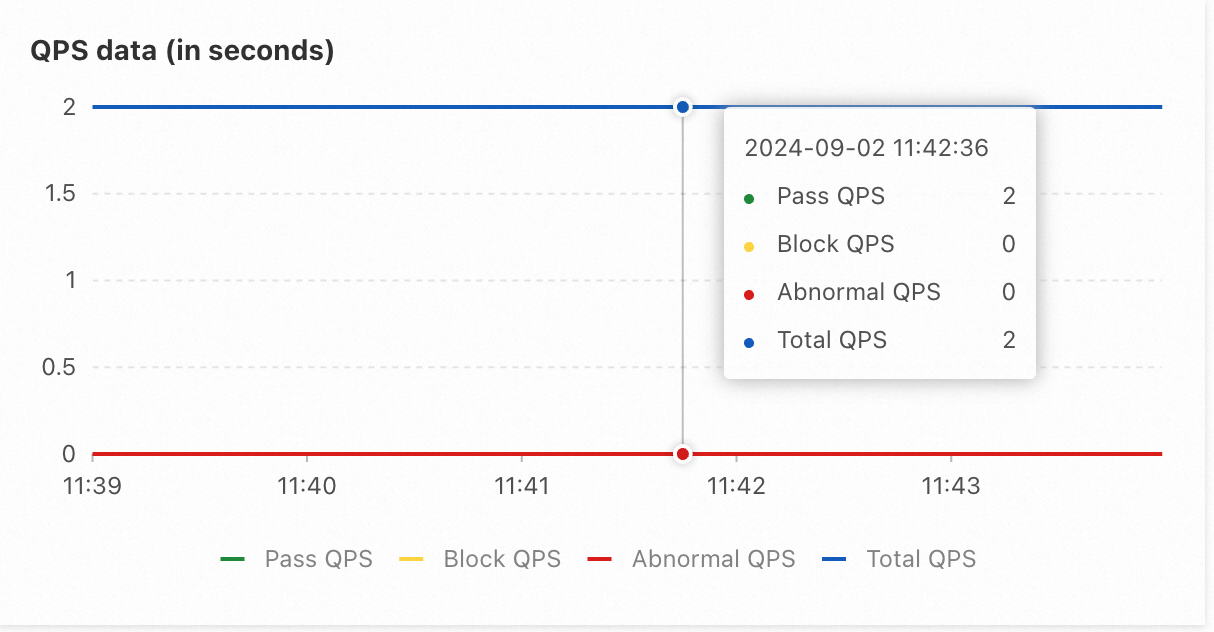

After a while, the QPS passing through each node of application A stabilizes at 1, and requests that exceed the threshold are rejected. The QPS of application B stabilizes at 2: application A has two nodes, each admitting at most 1 request per second, so at most 2 QPS reach application B.

1) Single-node QPS data for application A

2) Overall QPS data for application B

When you call the /greet1a endpoint of application A through the cloud-native gateway, you can see that, because flow control is triggered, the interface returns the status code and content we defined.

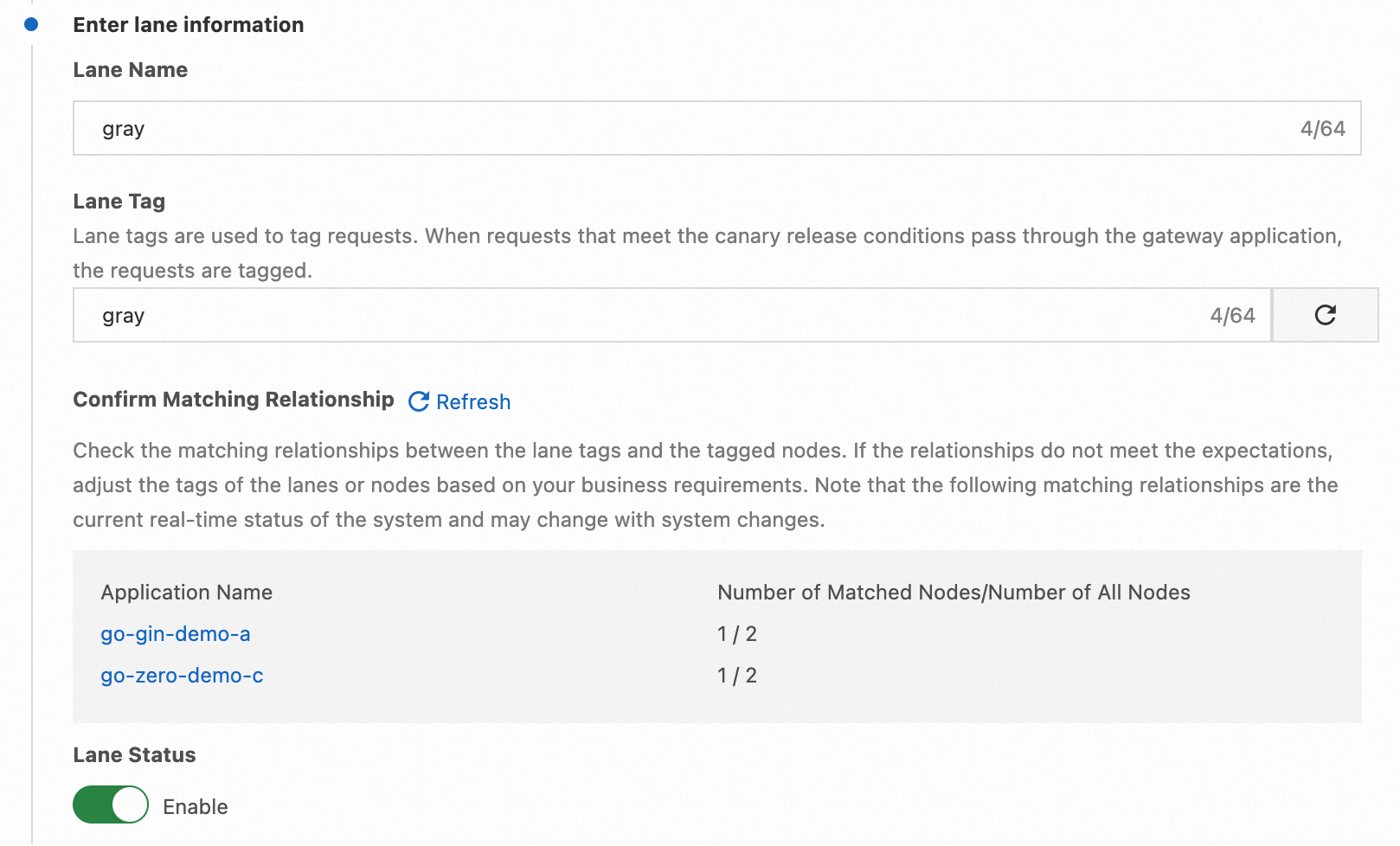

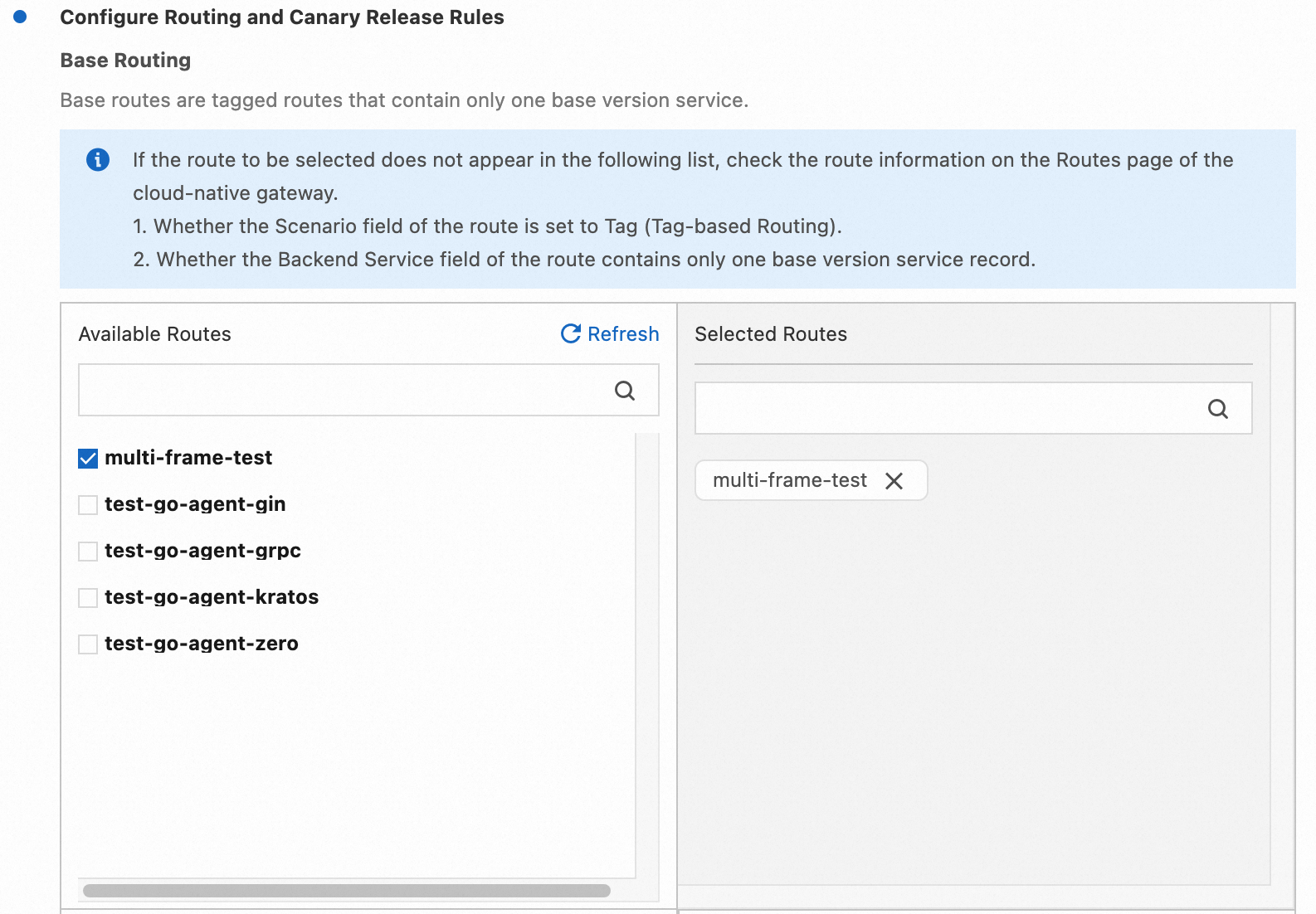

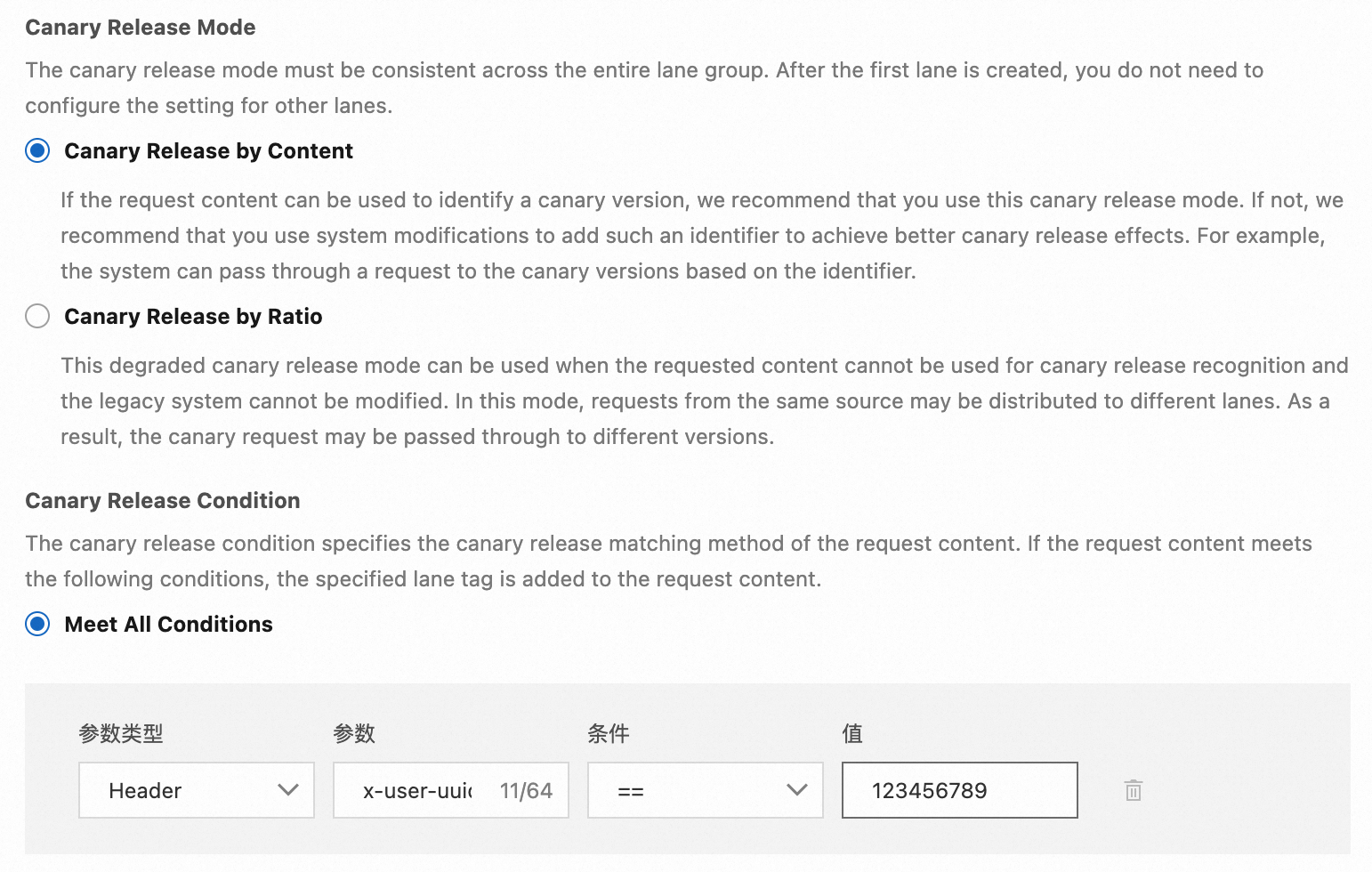

Assume that in a certain iteration we changed part of the logic in applications A and C and want to verify the changes through a canary release. We want requests whose x-user-uuid header is 123456789 to be routed to canary nodes, while all other requests continue to be routed to regular nodes.

To achieve this effect, we can follow these steps for configuration:

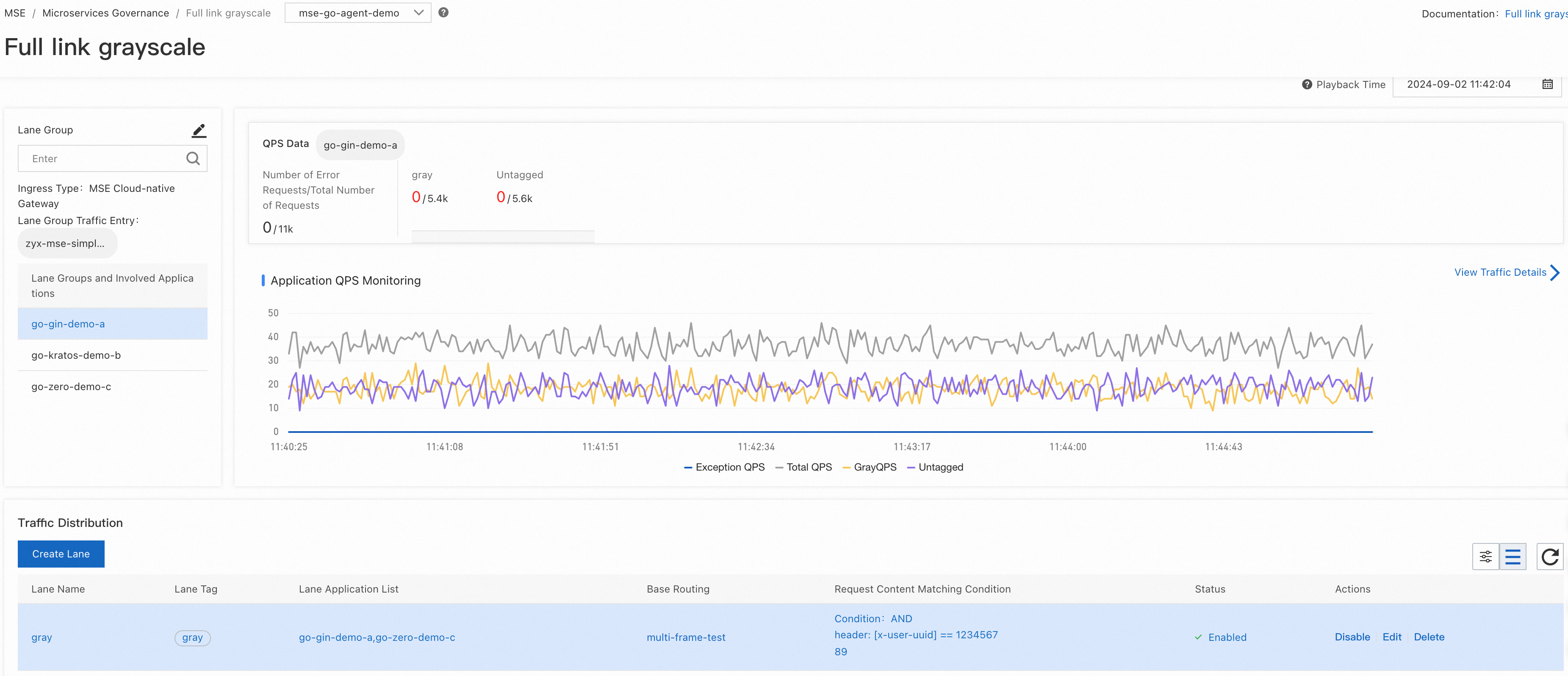

Once the lane group and lanes are created, end-to-end canary release is configured. As shown in the following image, the traffic entry point of the lane group is the MSE Cloud Native Gateway.

Next, we send requests through that gateway. The demo application's response prints the call chain, along with the tag and IP of the node each hop is routed to, which makes the routing easy to observe.

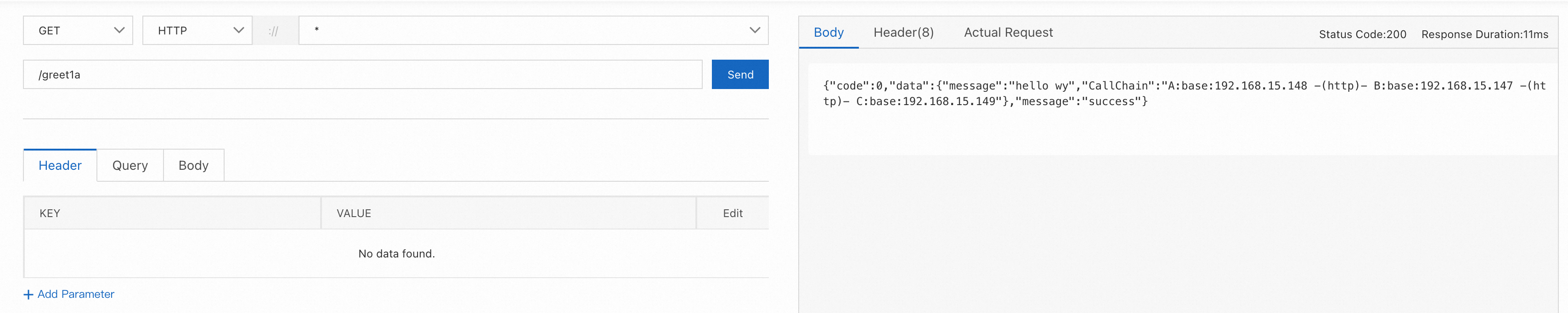

● Send a request without the x-user-uuid header: it is routed to the baseline nodes.

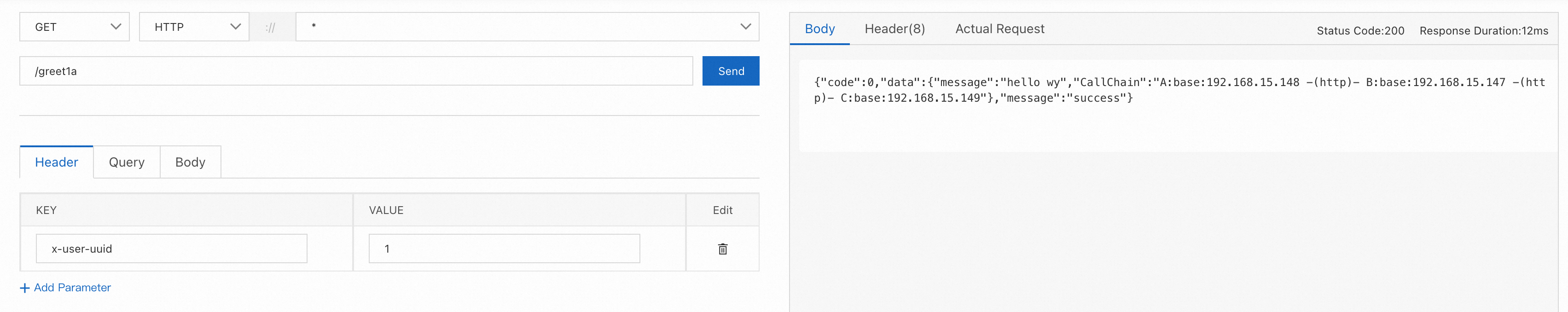

● Send a request with the header x-user-uuid=1: it is routed to the baseline nodes.

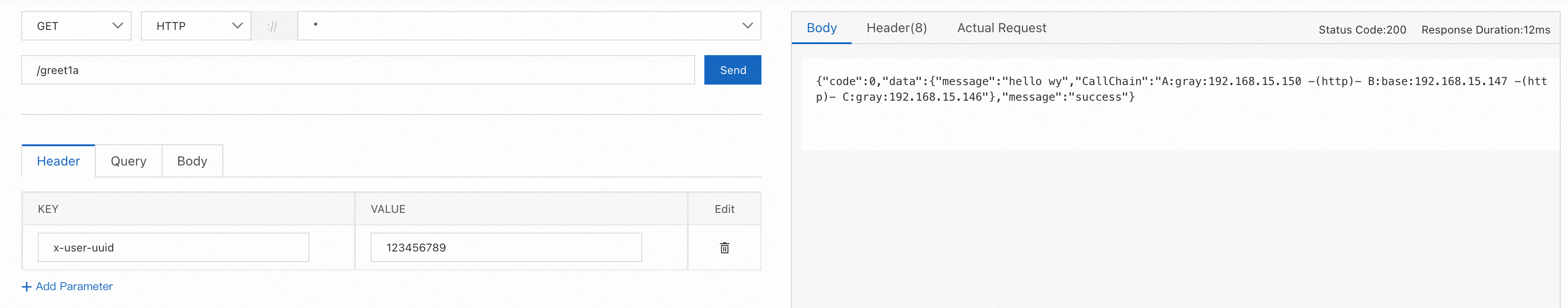

● Send a request with the header x-user-uuid=123456789: it is routed to the canary nodes of A and C. Because application B has no canary node, that hop is routed to B's baseline node.

This verifies that the end-to-end canary release rules have taken effect.
