By Jin Jixiang (Apache RocketMQ PMC Member and Senior Technical Expert from Alibaba Cloud Intelligence)

First of all, let's take a look at the problems that prompted us to explore a new RocketMQ architecture with stateless proxies.

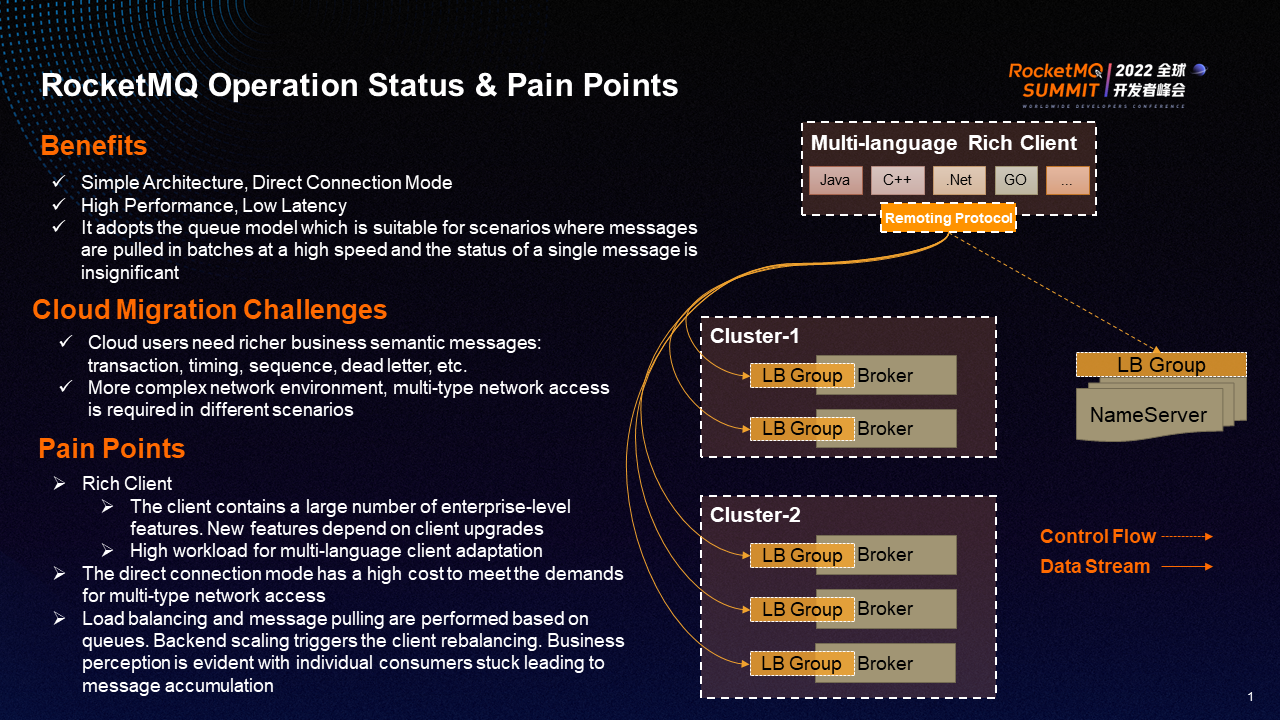

RocketMQ's original architecture is simple. A multi-language client establishes long-lived TCP connections with the backend NameServer and Broker over a custom remoting protocol, performs route discovery, and then sends and receives messages. The advantages are simplicity and performance: the client and the Broker are connected directly over TCP, so throughput is high and latency is low. The architecture also adopts the queue model, which suits scenarios where messages are pulled from queues in batches at high speed.

However, RocketMQ faces several challenges when it migrates to the cloud.

First, cloud users need messages with richer business semantics, including transactional, scheduled, ordered, and dead-letter messages. In the original architecture, each such feature must be developed separately on the client side and the Broker side, and users must upgrade their clients before they can use new features.

Second, the network environment is more complex in the cloud, and different scenarios require different types of network access. Some users require public access points for convenience, so that producers and consumers in different regions can connect to the same message service. Others require private access points for security, to keep out illegitimate network requests. Meeting these multi-type network access demands is relatively costly in the original architecture, because every network type must cover every NameServer and Broker machine. For example, when Brokers are scaled out and the existing Brokers serve multiple network types, each newly added Broker must also be bound to access points of every type before it can be put into service.

In summary, facing the challenge of cloud migration, the previous architecture gradually exposed the following pain points:

① Rich client: The client contains a large number of enterprise-level features. Users must upgrade their clients to use new features, which is a long process. In addition, each feature must be implemented in every language version of the client, so the workload is huge.

② Costly multi-type network access: Because the client connects directly to every Broker node, satisfying multi-type network access demands is expensive.

③ Queue-based consumption: When consumers perform load balancing and pull messages by queue, scaling the backend triggers a client rebalance, which causes message delays or duplicate consumption that consumers clearly notice. The queue-based model is also prone to a problem that plagues users: when a single consumer gets stuck, the queues assigned to it are no longer consumed, and a large number of messages accumulate on the server side.

RocketMQ 5.0 proposes a stateless proxy mode to address the preceding pain points.

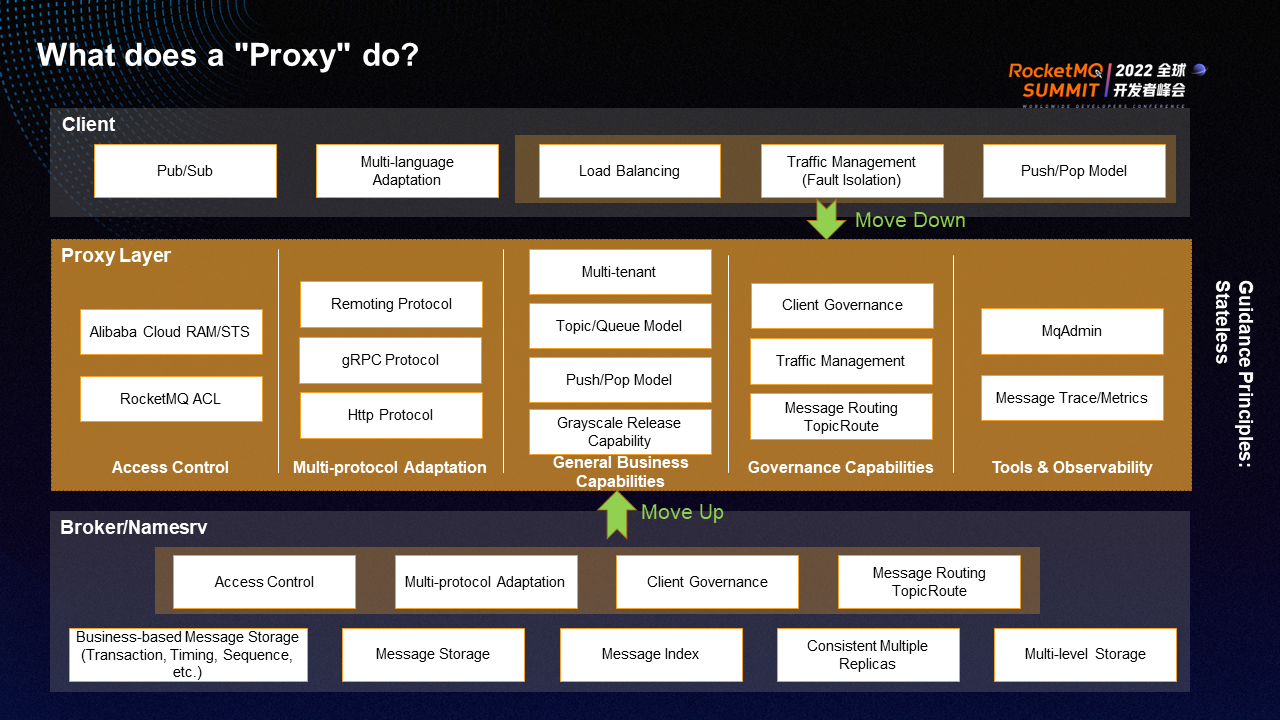

The new architecture inserts a proxy layer between the original client and the Broker. The strategy is to move as many stateless features as possible from the client down to the proxy layer, and as many stateless features as possible from the Broker side up to the proxy layer. Specifically, the client's load balancing, fault isolation, and push/pop consumption modes move down, while the Broker-side access control, multi-protocol adaptation, client governance, and NameServer message routing capabilities move up. The result is a proxy layer with rich capabilities, including access control, multi-protocol adaptation, general business capabilities, governance capabilities, and observability.

We must adhere to one principle when building the proxy layer: a capability of the client or the Broker can be migrated to the proxy layer only if it is stateless. This ensures that the proxy layer can be scaled dynamically with the traffic it receives.

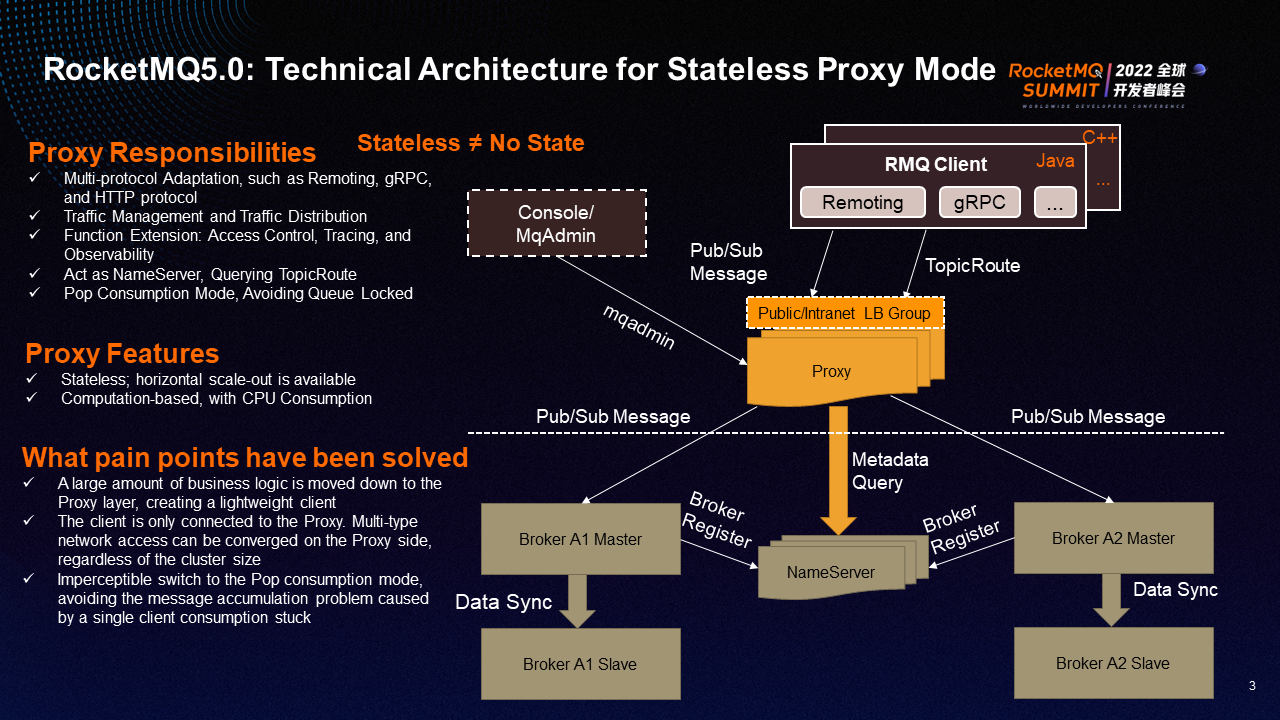

After the stateless proxy layer is introduced, the original direct-connection architecture of RocketMQ clients and Brokers evolves into the stateless proxy architecture shown in the preceding figure.

In terms of traffic, the proxy layer receives all traffic from the client side. The Broker and NameServer are no longer directly exposed to users. The only component that users can see is the Proxy.

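Here, the Proxy takes on the responsibilities described earlier: access control, multi-protocol adaptation, general business capabilities, governance, and observability.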

At the same time, we can see that Proxy has the following two major features:

① Stateless: It can be scaled horizontally based on user and client traffic.

② Compute-intensive: The Proxy mainly consumes CPU, so allocate as much CPU to it as possible during deployment.

In summary, the stateless proxy mode solves multiple pain points of the original architecture:

① A large amount of business logic moves from the client down to the Proxy, which makes the client lightweight. In addition, the standardization of the gRPC protocol and the automatic generation of transport-layer code make it quick to support clients in multiple languages.

② The client connects only to the Proxy, so the network types that users need can be bound to the Proxy alone. Because Brokers and NameServers are no longer directly exposed to the client, they only need to be reachable from the Proxy over the Intranet, and the demand for multi-type network access is contained entirely within the Proxy layer. The stateless nature of the Proxy also ensures that multi-type network access is independent of the cluster size.

③ The consumption mode is switched transparently. Whether the client selects the Pop or the Push consumption mode, the request is ultimately served by the Pop consumption mode between the Proxy and the Broker, which avoids the long-standing problem of messages accumulating on the server side when a single client gets stuck.

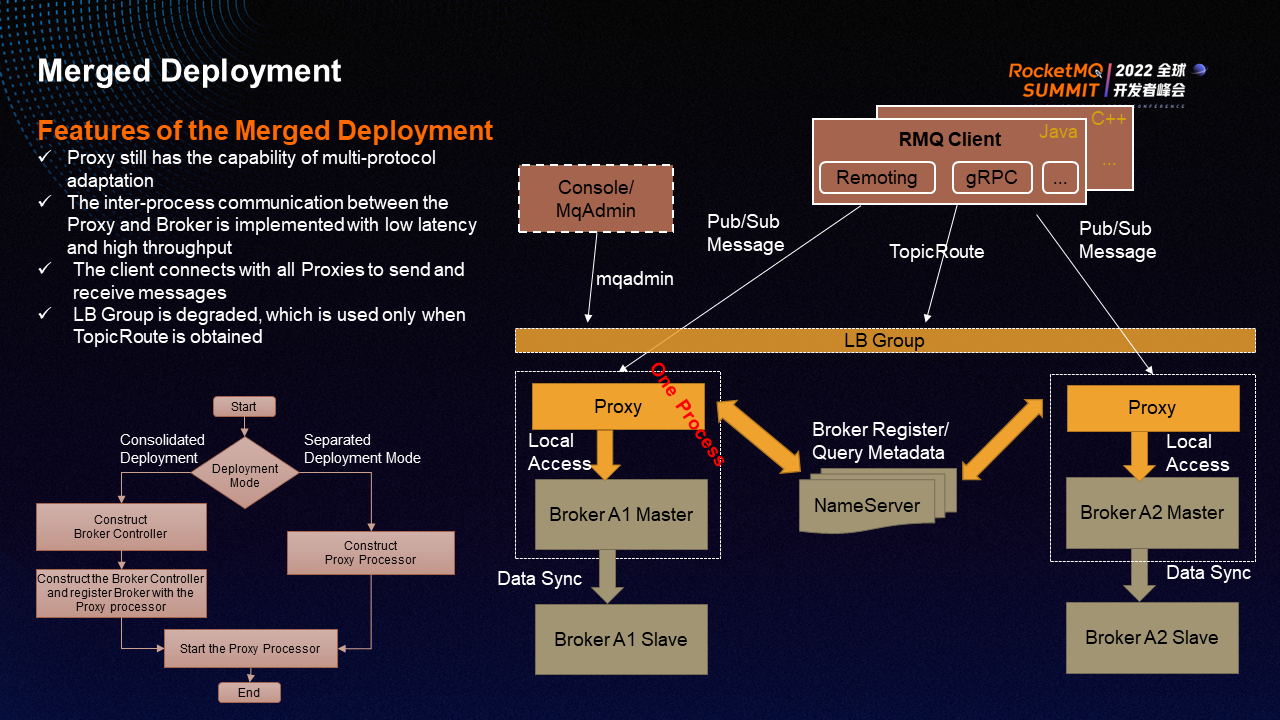

The new architecture introduces a Proxy layer between the client and the Broker, so all client traffic takes one more network hop and goes through one more round of serialization and deserialization. This is unfriendly to users who are sensitive to end-to-end message latency. Therefore, we designed the merged deployment mode.

In the merged deployment mode, the Proxy and the Broker are deployed as 1:1 peers and communicate within the same process, which meets the demands of low latency and high throughput. The Proxy still adapts to multiple protocols. Clients connect to every Proxy to send and receive messages, which ensures that all messages can be consumed.

In terms of code implementation, the deployment mode is selected at construction time, and users can choose either mode. In the merged deployment mode, the Broker Controller is constructed first and registered with the Proxy processor when the Proxy processor is built, which tells the Proxy processor to send subsequent requests to that Broker Controller. In the separated deployment mode, the Proxy processor is started directly without constructing a Broker Controller.
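The construction logic described above can be sketched as follows. This is a minimal illustration, not RocketMQ's actual code: the class names `BrokerController`, `RemoteBrokerClient`, and `ProxyProcessor` and the function `build_proxy` are simplified, hypothetical stand-ins.

```python
from typing import Optional


class BrokerController:
    """Hypothetical stand-in for a Broker running in the same process."""

    def handle(self, request: str) -> str:
        return f"local broker handled {request}"


class RemoteBrokerClient:
    """Hypothetical stand-in for a network client to a separately deployed Broker."""

    def handle(self, request: str) -> str:
        return f"remote broker handled {request}"


class ProxyProcessor:
    def __init__(self, broker_controller: Optional[BrokerController] = None):
        if broker_controller is not None:
            # Merged mode: a Broker Controller was registered, so requests
            # are handed to it directly.
            self.mode = "merged"
            self.backend = broker_controller
        else:
            # Separated mode: nothing was registered, so requests are sent
            # to a Broker over the network.
            self.mode = "separated"
            self.backend = RemoteBrokerClient()

    def process(self, request: str) -> str:
        return self.backend.handle(request)


def build_proxy(mode: str) -> ProxyProcessor:
    if mode == "merged":
        # Construct the Broker Controller first, then register it.
        return ProxyProcessor(BrokerController())
    if mode == "separated":
        return ProxyProcessor()
    raise ValueError(f"unknown deployment mode: {mode}")


print(build_proxy("merged").process("send"))
print(build_proxy("separated").process("send"))
```

The point of the sketch is that the request-handling code in `process` is identical in both modes. Only the object chosen at construction time differs.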

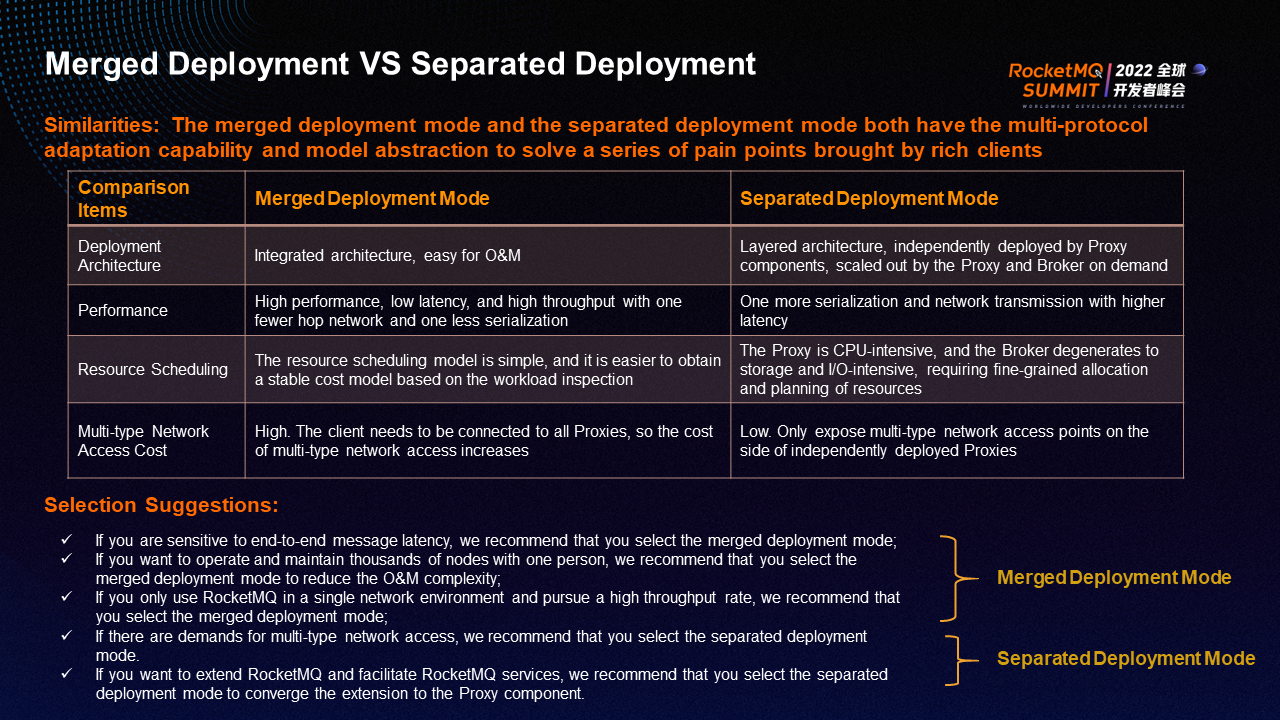

Let's compare the two deployment modes. Both support multi-protocol adaptation and can parse multi-protocol requests from users and clients. Both also provide model abstraction, which resolves the pain points brought by rich clients.

In terms of deployment architecture, the merged deployment is an integrated architecture that is easy to operate and maintain. The separated deployment is a layered architecture in which the Proxy is deployed independently, and the Proxy and the Broker are scaled separately according to their respective workloads.

In terms of performance, the merged deployment saves one network hop and one round of serialization, so latency is lower and throughput is higher. The separated deployment adds one network hop and one round of serialization, which increases overhead and latency. How much latency it adds depends primarily on the network latency between the Proxy and the Broker.

In terms of resource scheduling, the merged deployment mode makes it easy to obtain a stable cost model because the component structure is simple. In the separated deployment mode, the Proxy is CPU-intensive, while the Broker and NameServer gradually become storage- and I/O-intensive and need more memory and disk space. Fine-grained resource allocation and planning are therefore required to maximize resource utilization in the separated deployment mode.

In terms of multi-type network access, the cost is high in the merged deployment mode. The client must connect to every Proxy replica to send and receive messages, so whenever Proxies are scaled out, every network type must be bound to each new Proxy. The cost is low in the separated deployment mode. Access points only need to be bound at the independently deployed Proxy layer, and multiple Proxies can share the same access point for a given network type.
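As a rough illustration of this cost difference, the sketch below counts network bindings under a deliberately simplified model. The function names and the counting rule are assumptions made for illustration: in merged mode each Proxy is assumed to need one binding per network type, and in separated mode one shared access point per network type is assumed to front the whole Proxy layer.

```python
def bindings_merged(proxy_count: int, network_types: int) -> int:
    # Clients connect to every Proxy, so each Proxy needs every network type.
    return proxy_count * network_types


def bindings_separated(proxy_count: int, network_types: int) -> int:
    # One shared access point per network type serves all Proxies, so the
    # count does not depend on how many Proxies are running.
    return network_types


for proxies in (3, 6):
    print(proxies, bindings_merged(proxies, 2), bindings_separated(proxies, 2))
```

Under this model, doubling the number of Proxies doubles the bindings in merged mode and leaves them unchanged in separated mode.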

Suggestions for Business Selection:

If you are sensitive to end-to-end latency, want to use less manpower to maintain large-scale RocketMQ deployments, or only need to use RocketMQ in a single network environment (such as the internal network access), we recommend using the merged deployment mode.

If you need multi-type network access (such as both Intranet and public network access) or need to customize RocketMQ, we recommend using the separated deployment mode. You can encapsulate both the multi-type network access points and your customizations in the Proxy layer.

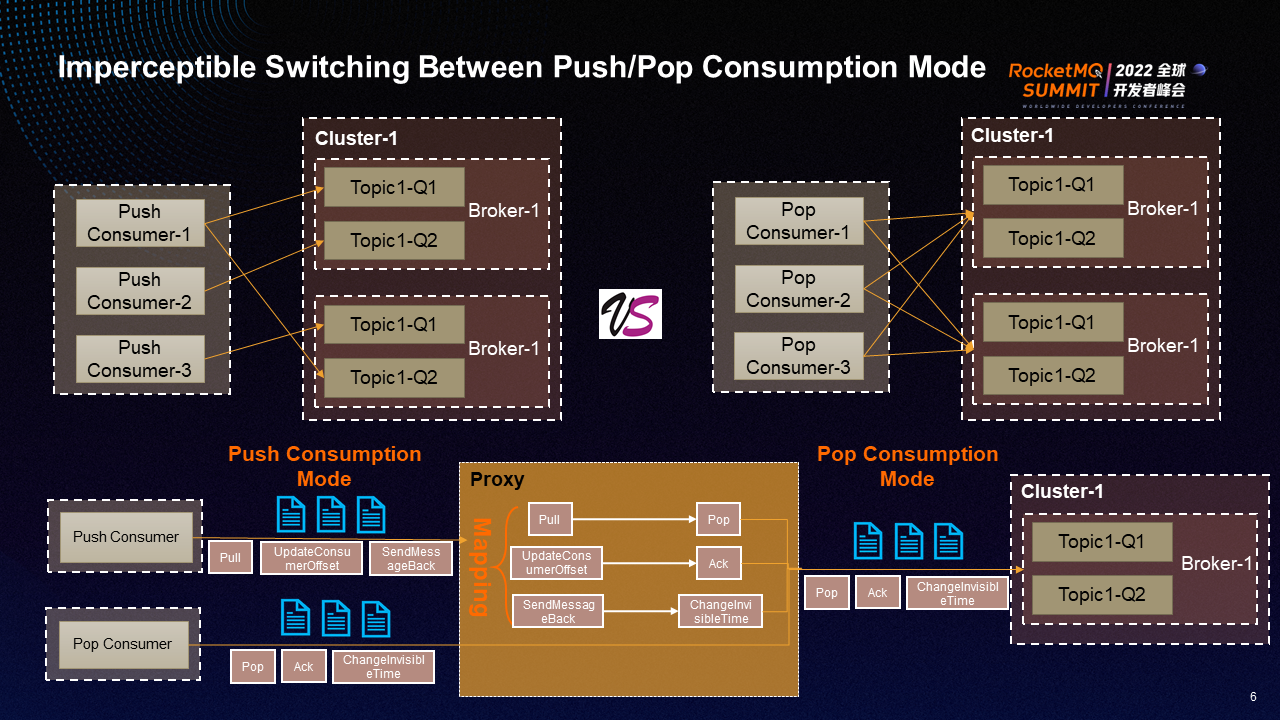

There is a big difference between the new PopConsumer consumption model and the original PushConsumer consumption model. PushConsumer is based on the queue model, which leaves some long-standing problems. For example, if a single PushConsumer gets stuck, the messages in its queues accumulate on the server side. The new Pop consumption model is message-based. A PopConsumer attempts to connect to all Brokers and consume messages, so even if one PopConsumer is stuck, the other healthy PopConsumers can still pull messages from all Brokers, and no messages accumulate.
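The difference between the two models can be illustrated with a toy simulation. It is a simplified sketch, not RocketMQ code: the function names, queue names, and consumer names are made up, and it ignores messages that a stuck consumer has already fetched (which the Pop model makes visible again after the invisible time elapses).

```python
def backlog_queue_model(queues, assignment, stuck):
    """Queue-based model: each queue is owned by exactly one consumer."""
    backlog = {}
    for queue, messages in queues.items():
        owner = assignment[queue]
        # A stuck owner consumes nothing, so its whole queue stays on the server.
        backlog[queue] = len(messages) if owner in stuck else 0
    return backlog


def backlog_pop_model(queues, consumers, stuck):
    """Message-based model: any healthy consumer may pop from any queue."""
    healthy = [c for c in consumers if c not in stuck]
    backlog = {}
    for queue, messages in queues.items():
        # As long as one healthy consumer exists, every queue keeps draining.
        backlog[queue] = 0 if healthy else len(messages)
    return backlog


queues = {f"q{i}": [f"msg-{i}-{n}" for n in range(5)] for i in range(4)}
consumers = ["c1", "c2"]
assignment = {"q0": "c1", "q1": "c1", "q2": "c2", "q3": "c2"}

print(backlog_queue_model(queues, assignment, stuck={"c2"}))
print(backlog_pop_model(queues, consumers, stuck={"c2"}))
```

With consumer `c2` stuck, the queue-based model leaves `q2` and `q3` undrained, while the message-based model drains all four queues through `c1`.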

The Proxy layer adapts seamlessly to both PushConsumer and PopConsumer. It maps both consumption models to the Pop consumption model and establishes consumption connections with the backend Brokers. Specifically, a pull request from PushConsumer is converted into a pop request. An UpdateConsumeOffset request, which commits an offset, is converted into a message-level ACK request. A SendMessageBack request is converted into a request that modifies the invisible time of the message so that it can be consumed again.
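The three conversions can be written down as a small lookup table. This is only a sketch of the mapping described in the text: the string labels are illustrative names, not RocketMQ's actual request codes, and `translate` is a hypothetical helper.

```python
# Push-side request -> Pop-side request, as described in the text.
PUSH_TO_POP = {
    "PullMessage": "PopMessage",                  # pull becomes pop
    "UpdateConsumeOffset": "AckMessage",          # offset commit becomes per-message ACK
    "SendMessageBack": "ChangeInvisibleTime",     # retry becomes an invisible-time change
}


def translate(request_type: str) -> str:
    """Return the Pop-side request; Pop-native requests pass through unchanged."""
    return PUSH_TO_POP.get(request_type, request_type)


for name in ("PullMessage", "UpdateConsumeOffset", "SendMessageBack", "PopMessage"):
    print(name, "->", translate(name))
```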

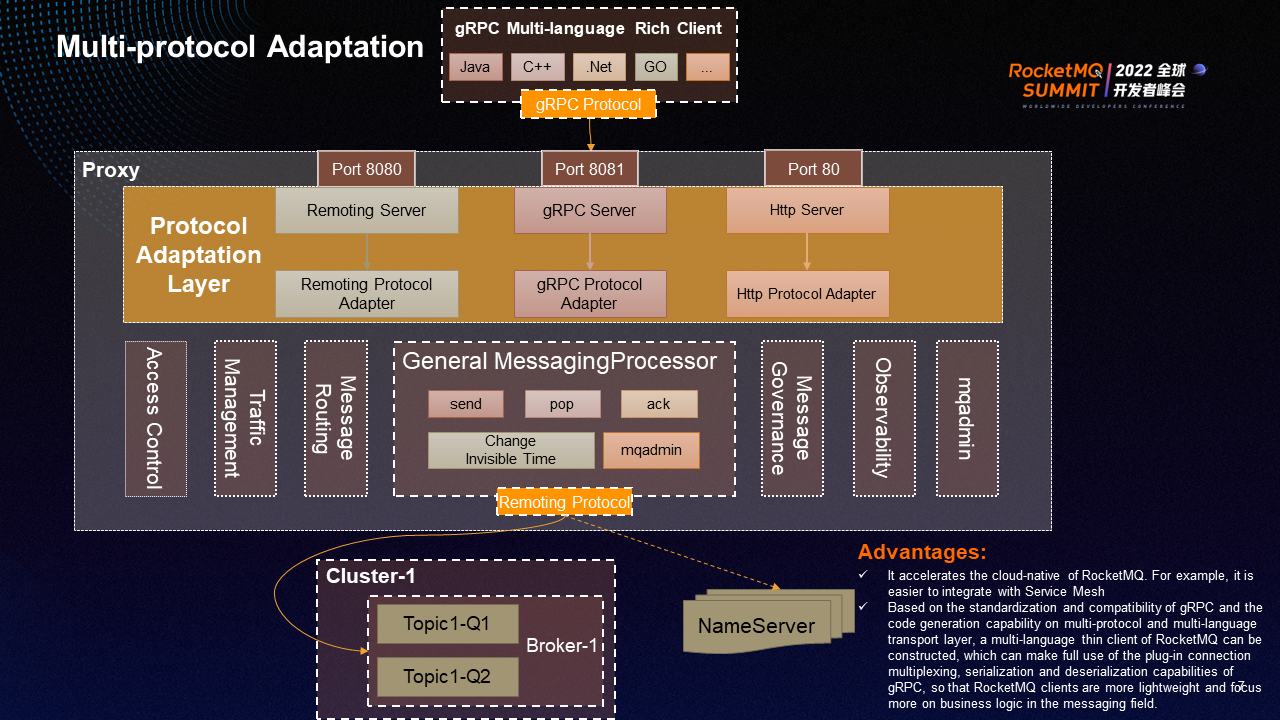

The top layer of the Proxy is the protocol adaptation layer, which exposes services to clients on different ports, one per protocol. When a request arrives on a given port, the protocol adaptation layer adapts it and passes it to the common MessagingProcessor module. Requests such as send, pop, ack, and ChangeInvisibleTime are converted into backend remoting-protocol requests, and the Proxy then communicates with the backend Brokers and NameServer to send and receive messages.
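The layering can be sketched as one adapter per protocol in front of a shared processor. This is a minimal illustration under stated assumptions: the `ProtocolAdapter` class, the request names in the two maps, and the port numbers are all illustrative, and the `MessagingProcessor` here is a toy stand-in that returns a normalized request instead of contacting a Broker.

```python
class MessagingProcessor:
    """Common entry point shared by all protocol adapters (simplified stand-in)."""

    OPERATIONS = {"send", "pop", "ack", "change_invisible_time"}

    def handle(self, operation: str, topic: str) -> dict:
        if operation not in self.OPERATIONS:
            raise ValueError(f"unsupported operation: {operation}")
        # A real Proxy would now issue a remoting-protocol request to a Broker.
        # This sketch only returns the normalized backend request.
        return {"protocol": "remoting", "operation": operation, "topic": topic}


class ProtocolAdapter:
    """Translates one client protocol's request names into common operations."""

    def __init__(self, name: str, port: int, name_map: dict,
                 processor: MessagingProcessor):
        self.name = name
        self.port = port
        self.name_map = name_map
        self.processor = processor

    def on_request(self, request_name: str, topic: str) -> dict:
        operation = self.name_map[request_name]
        return self.processor.handle(operation, topic)


processor = MessagingProcessor()

# Request names and port numbers below are illustrative assumptions.
grpc_adapter = ProtocolAdapter(
    "grpc", 8081,
    {"SendMessage": "send", "ReceiveMessage": "pop",
     "AckMessage": "ack", "ChangeInvisibleDuration": "change_invisible_time"},
    processor,
)
remoting_adapter = ProtocolAdapter(
    "remoting", 8080,
    {"SEND_MESSAGE": "send", "POP_MESSAGE": "pop",
     "ACK_MESSAGE": "ack", "CHANGE_INVISIBLE_TIME": "change_invisible_time"},
    processor,
)

print(grpc_adapter.on_request("ReceiveMessage", "TopicA"))
print(remoting_adapter.on_request("POP_MESSAGE", "TopicA"))
```

Both calls produce the same backend request, which is the purpose of the shared processor: adding a protocol means adding an adapter, not touching the backend path.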

The advantages of multi-protocol adaptation are listed below:

① It accelerates RocketMQ's move to cloud native. For example, it becomes easier to integrate with Service Mesh.

② A multi-language thin client for RocketMQ can be built on the standardization and compatibility of gRPC and its ability to generate transport-layer code for multiple languages. The thin client makes full use of gRPC's pluggable connection multiplexing, serialization, and deserialization, so RocketMQ clients become more lightweight and can focus on the business logic of messaging.

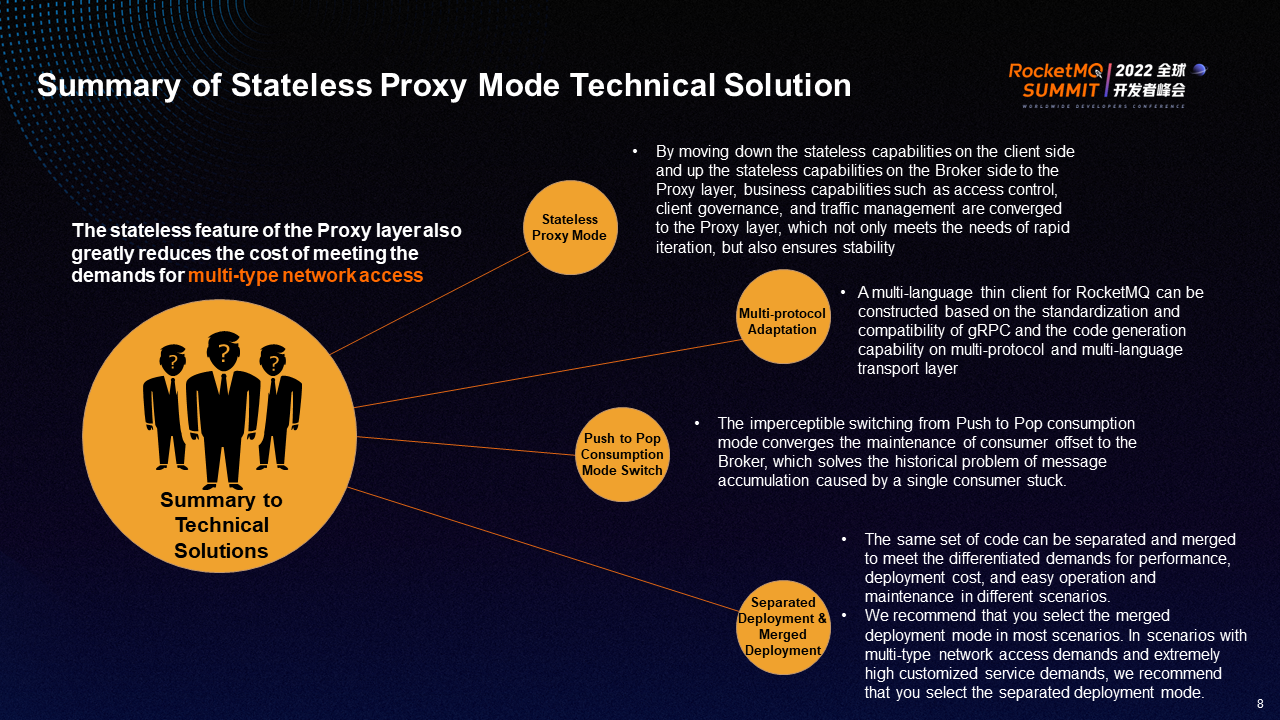

The stateless proxy mode moves the stateless capabilities of the client down and the stateless capabilities of the Broker up to the Proxy layer, converging services such as access control, client governance, and traffic management there. This supports rapid iteration, confines changes to one layer, and protects the stability of the overall architecture. With the layered architecture, business logic development focuses on the Proxy layer, while the Broker and NameServer in the layer below tend to be stable and can focus on storage features. The Proxy is released much more frequently than the underlying Brokers, so confining changes to the Proxy keeps the storage layer stable.

Multi-protocol adaptation builds a multi-language thin client for RocketMQ on the standardization and compatibility of gRPC and its ability to generate transport-layer code for multiple languages.

The transparent switch from the Push to the Pop consumption mode moves the maintenance of consumer offsets to the Broker, which solves the long-standing problem of message accumulation caused by a single stuck consumer.

In addition, we have explored a deployment approach in which the same code base can run in either the separated or the merged deployment mode, meeting different demands for performance, deployment cost, and ease of operation and maintenance. We still recommend the merged deployment mode in most scenarios. If you need multi-type network access or heavy customization of RocketMQ, we recommend the separated deployment mode for better scalability.

The stateless feature of the Proxy layer significantly reduces the cost of adapting to multi-type network access demands.

In the future, we first hope that the underlying Brokers and NameServers of RocketMQ will focus more on storage features, such as storing messages with business semantics (transactional, scheduled, and ordered messages), building message indexes quickly, maintaining consistent multiple replicas to improve message reliability, and implementing multi-level storage to provide larger storage capacity.

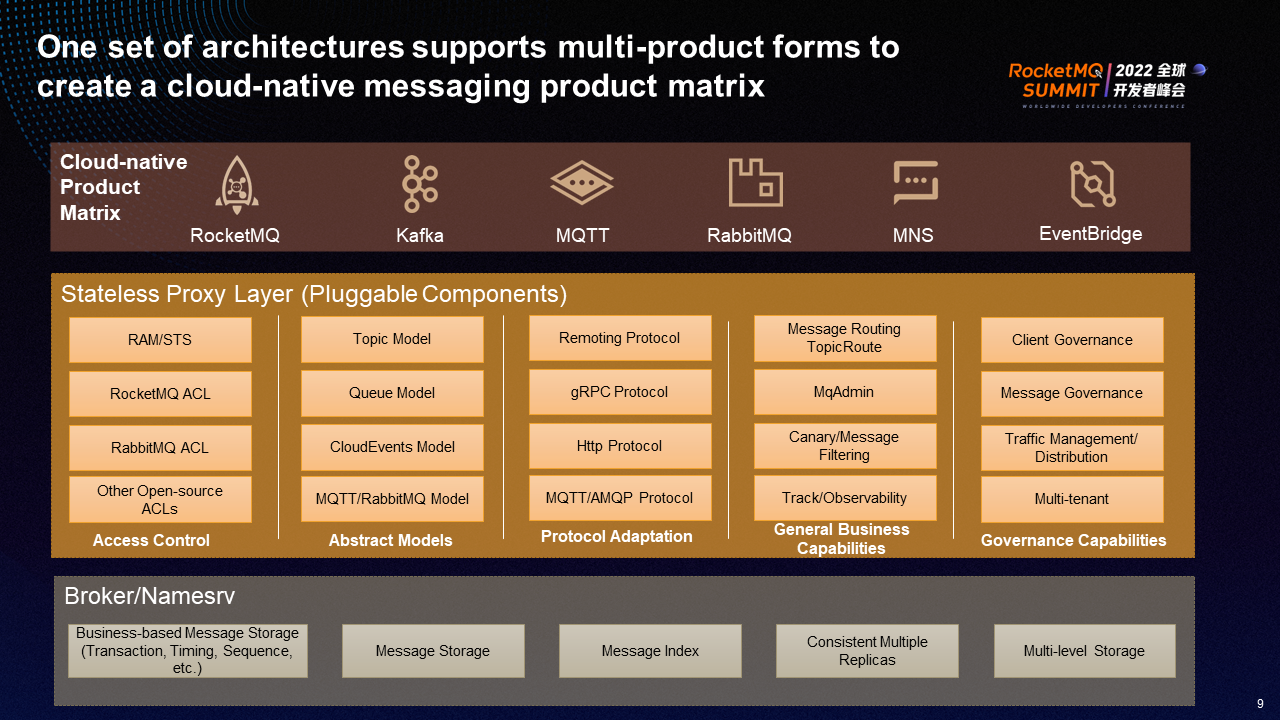

Secondly, we plan to develop the stateless Proxy layer as a set of pluggable modules. For example, access control, abstract models, protocol adaptation, general business capabilities, and governance capabilities can each be split into a module, so that the semantics are richer and components can be plugged in as different scenarios require.

Finally, we hope that this architecture can support the multiple product forms of Alibaba Cloud. We are committed to building a rich matrix of cloud-native messaging products.

Join the Apache RocketMQ community: https://github.com/apache/rocketmq
