By Tao Liu, Technology Expert in Alibaba Cloud Intelligence

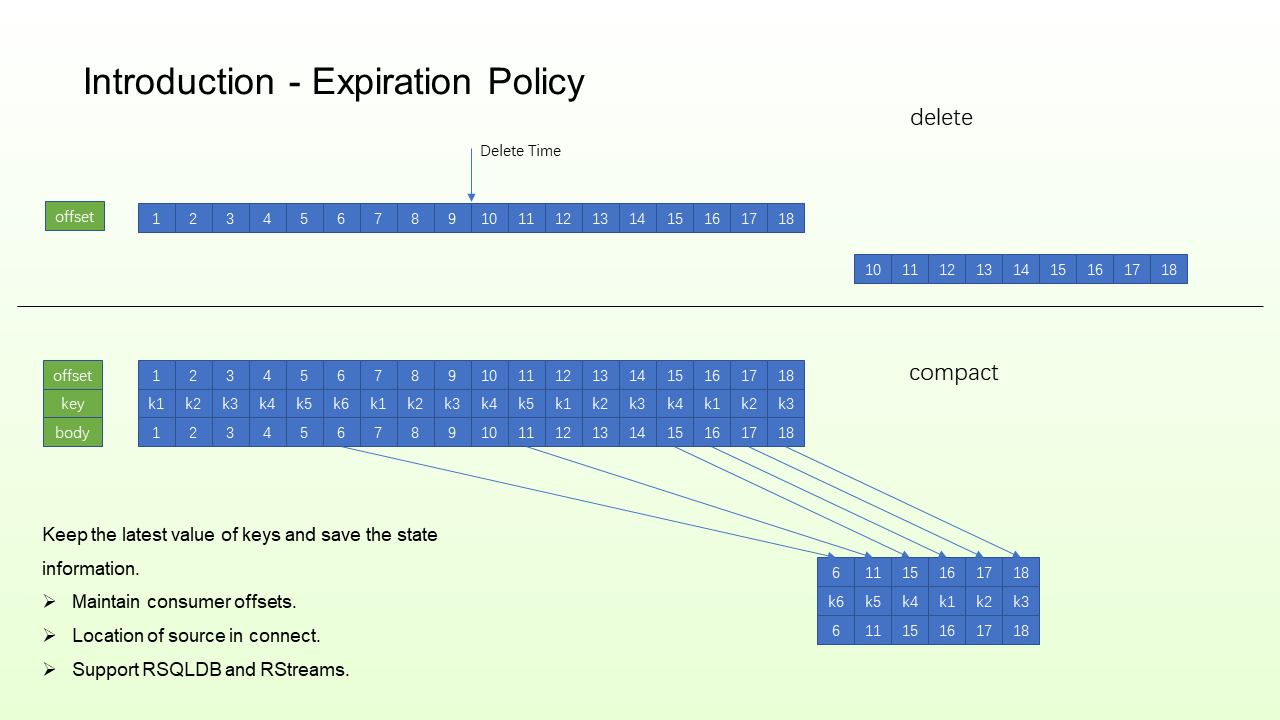

In general, a message queue provides several data expiration mechanisms, such as time-based and size-based expiration. The former cleans up data that has been stored longer than a given period, and the latter cleans up data once the data in a partition reaches a given size.

Compaction Topic is a key-based data expiration mechanism: for messages with the same key, only the latest value is retained.

This feature is mainly used to maintain state information. When a KV structure is required, key-value information can be saved directly to MQ through a Compaction Topic, which removes the dependence on an external database. For example, to maintain consumer offsets, you can use the consumer group and partition as the key and the consumer offset as the value, and send them to MQ as messages. After compaction, consumption returns the latest offset for each key. In addition, source information in connect (such as the binlog parsing position and other position information) can be stored in a Compaction Topic, as can the checkpoint information of RSQLDB and RStreams.

The following issues must be solved during compaction:

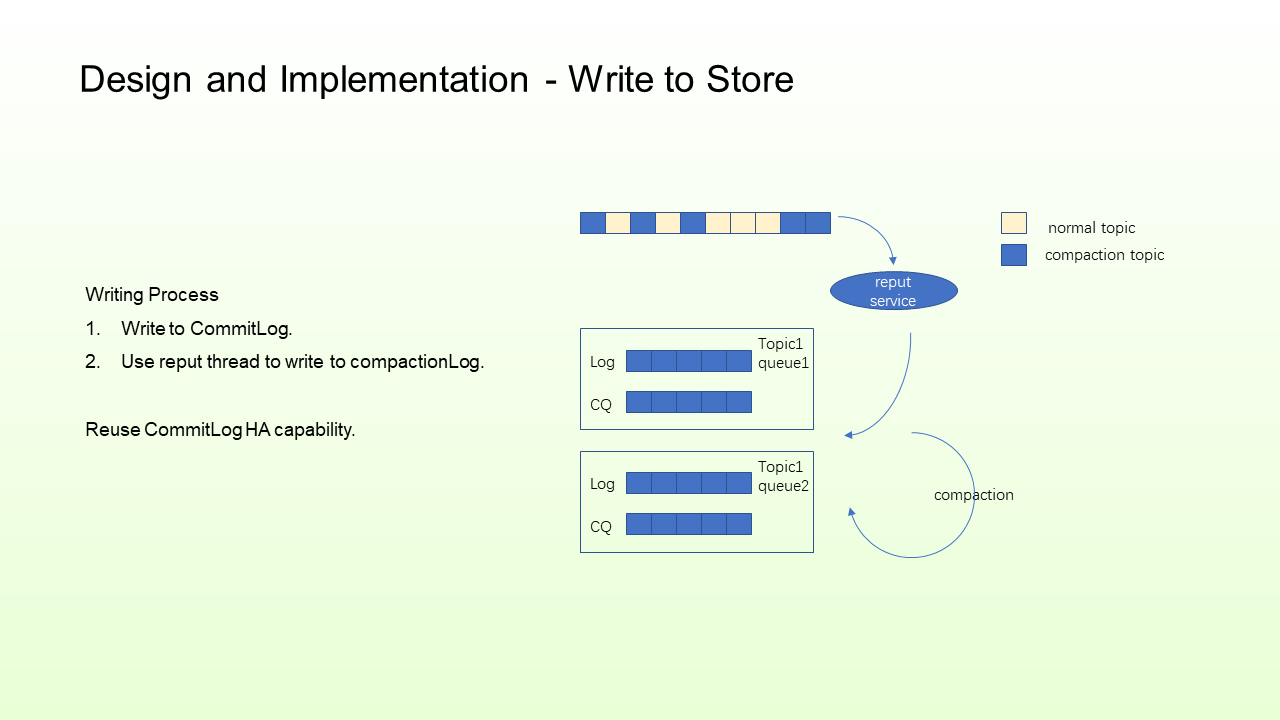

First, how the data is written.

Data is first written to CommitLog, mainly to reuse the HA capability of CommitLog. Reput threads then split the CommitLog messages into different files by Topic + partition, order the messages within each partition, and generate an index. As a result, the final messages are organized at the granularity of Topic + partition.
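The write path can be illustrated with a small in-memory sketch. This is not RocketMQ's reput implementation: `Message`, `dispatch`, and the list-based logs are hypothetical names chosen for illustration, and the sketch assumes queue offsets are assigned in arrival order within each Topic + partition.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple


class Message(NamedTuple):
    topic: str
    queue_id: int
    key: str
    value: str


def dispatch(commit_log: List[Message]) -> Dict[Tuple[str, int], List[Tuple[int, Message]]]:
    """Split one shared log into per-(topic, queue) logs, each with its own offsets."""
    partitions: Dict[Tuple[str, int], List[Tuple[int, Message]]] = defaultdict(list)
    for msg in commit_log:
        partition_log = partitions[(msg.topic, msg.queue_id)]
        queue_offset = len(partition_log)  # next offset within this partition
        partition_log.append((queue_offset, msg))
    return dict(partitions)


commit_log = [
    Message("state", 0, "k1", "v1"),
    Message("state", 1, "k2", "v1"),
    Message("state", 0, "k1", "v2"),
]
partitions = dispatch(commit_log)
```

Each per-partition list stands in for the files that compaction later works on.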

During compaction, why do we organize the data by partition instead of compacting the original CommitLog directly? There are three reasons:

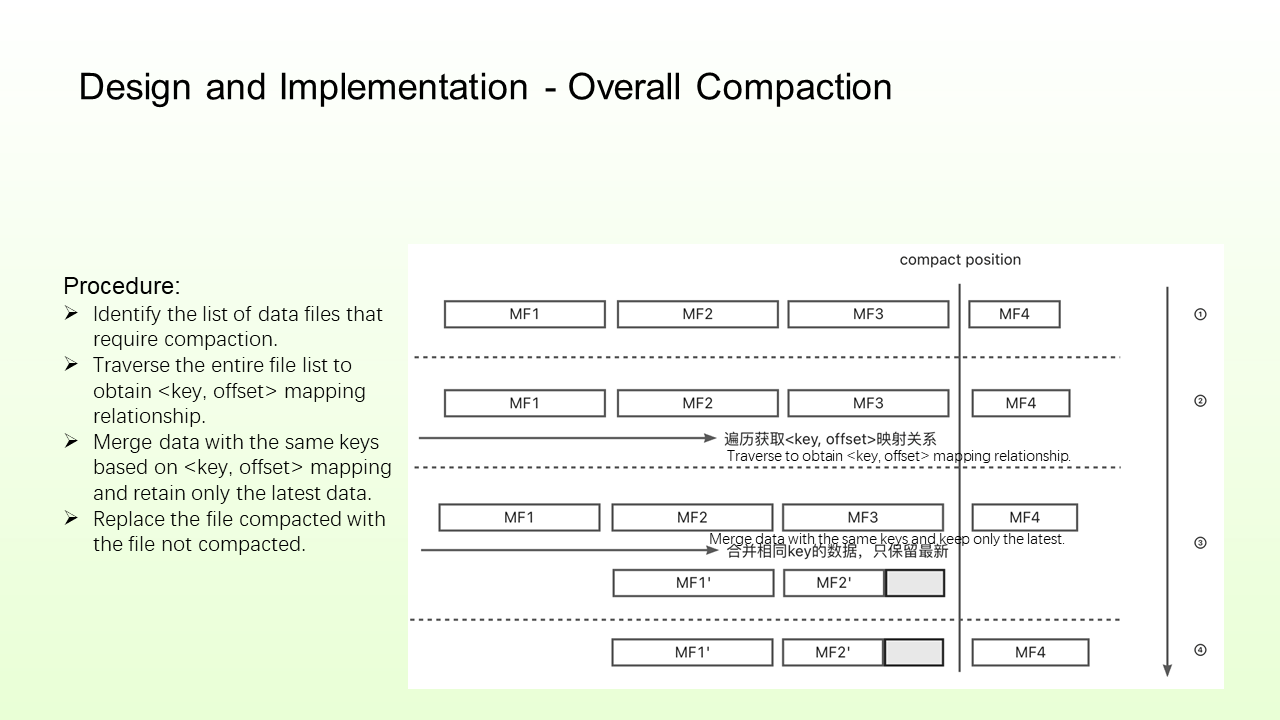

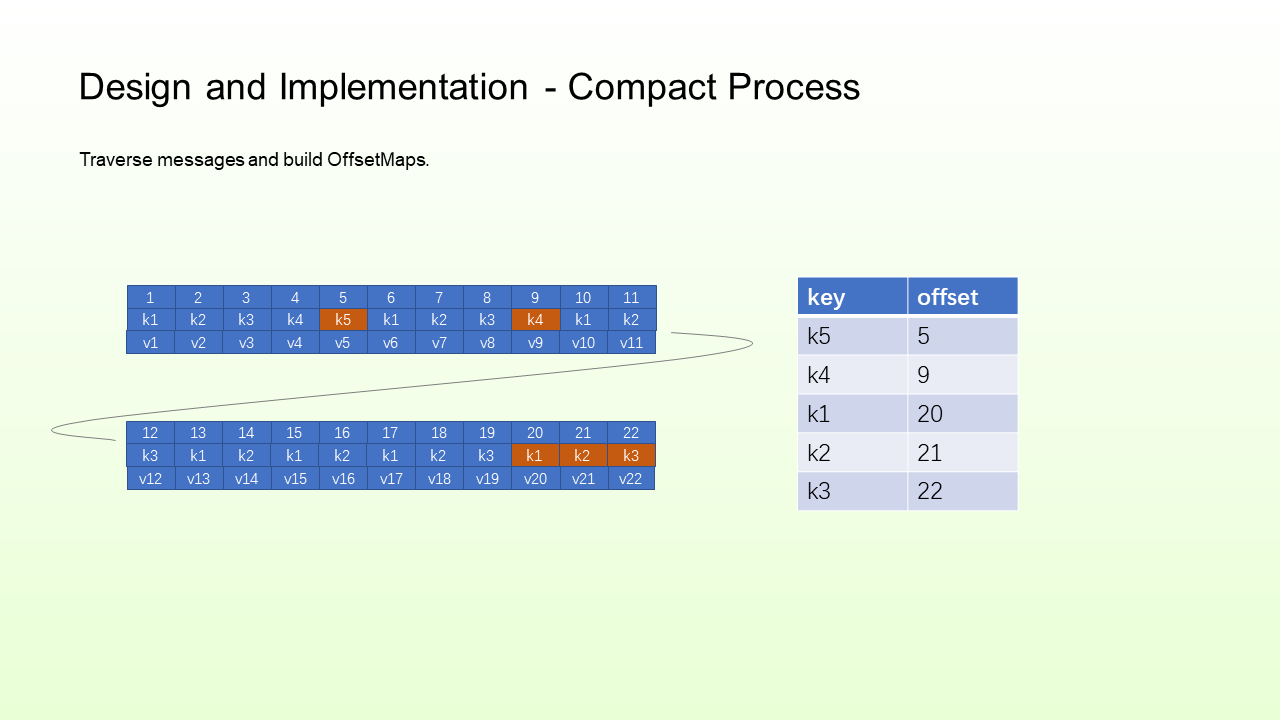

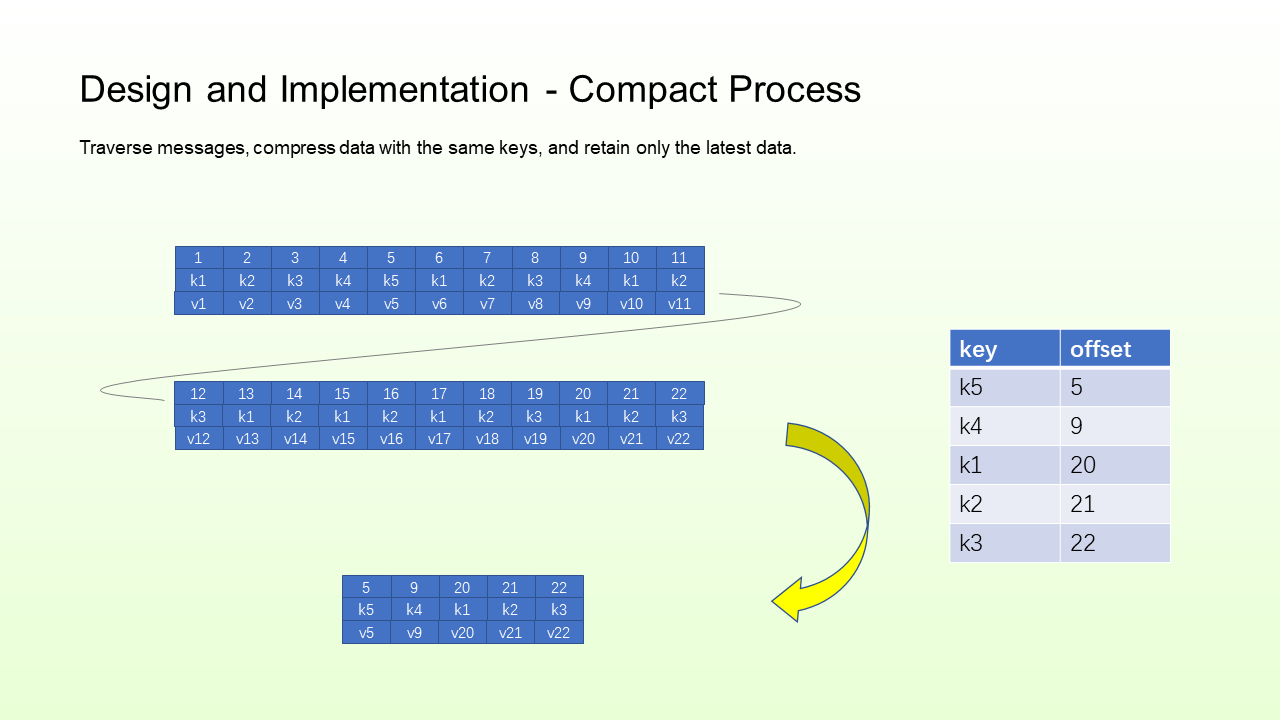

The compaction process is listed below:

The main purpose of building the OffsetMap in the second step is to determine which files need to be retained, which need to be deleted, and how the files are ordered relative to each other. With this information, the write layout can be decided up front, and the data to be retained is then appended to a new file.

The map records key-to-offset information rather than key-to-value information, because a value body may be long and take up a lot of space. In contrast, an offset has a fixed length, and the order of messages can be determined from their offsets. In addition, key lengths are not fixed, so storing the original keys directly in the map is not appropriate. Therefore, the MD5 digest of the key is used as the map key. If two MD5 digests are the same, the keys are considered the same.
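A minimal sketch of such a map, using Python's standard `hashlib`. The names `key_digest` and `build_offset_map` and the `(offset, key)` record shape are assumptions made for illustration, not RocketMQ's actual classes.

```python
import hashlib
from typing import Dict, Iterable, Tuple


def key_digest(key: str) -> bytes:
    """Fixed-length (16-byte) stand-in for a variable-length message key."""
    return hashlib.md5(key.encode("utf-8")).digest()


def build_offset_map(records: Iterable[Tuple[int, str]]) -> Dict[bytes, int]:
    """Map each key digest to the largest offset seen for that key."""
    offset_map: Dict[bytes, int] = {}
    for offset, key in records:
        digest = key_digest(key)
        if offset > offset_map.get(digest, -1):
            offset_map[digest] = offset
    return offset_map


offset_map = build_offset_map([(0, "order-1"), (1, "order-2"), (2, "order-1")])
```

Every entry costs a 16-byte digest plus one integer, regardless of how long the original key or value is.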

During compaction, all messages are traversed, and any message whose offset is smaller than the offset recorded for its key in the OffsetMap is deleted. The compacted data file is thus derived from the original data and the map.
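The traversal can be sketched as a single filtering pass. This is an in-memory illustration under assumed names (`Record`, `compact`); the real process works on files, not Python lists.

```python
import hashlib
from typing import Dict, List, Tuple

Record = Tuple[int, str, str]  # (queue offset, key, value)


def digest(key: str) -> bytes:
    return hashlib.md5(key.encode("utf-8")).digest()


def compact(records: List[Record]) -> List[Record]:
    """Keep only the record with the largest offset for each key."""
    # Pass 1: remember the latest offset per key digest.
    offset_map: Dict[bytes, int] = {}
    for offset, key, _ in records:
        offset_map[digest(key)] = max(offset, offset_map.get(digest(key), -1))
    # Pass 2: drop every record that is older than the recorded offset.
    return [r for r in records if r[0] >= offset_map[digest(r[1])]]


log = [(0, "a", "1"), (1, "b", "1"), (2, "a", "2"), (3, "b", "2"), (4, "c", "1")]
compacted = compact(log)
```

The surviving records keep their original offsets, so the compacted log has gaps in its offset sequence.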

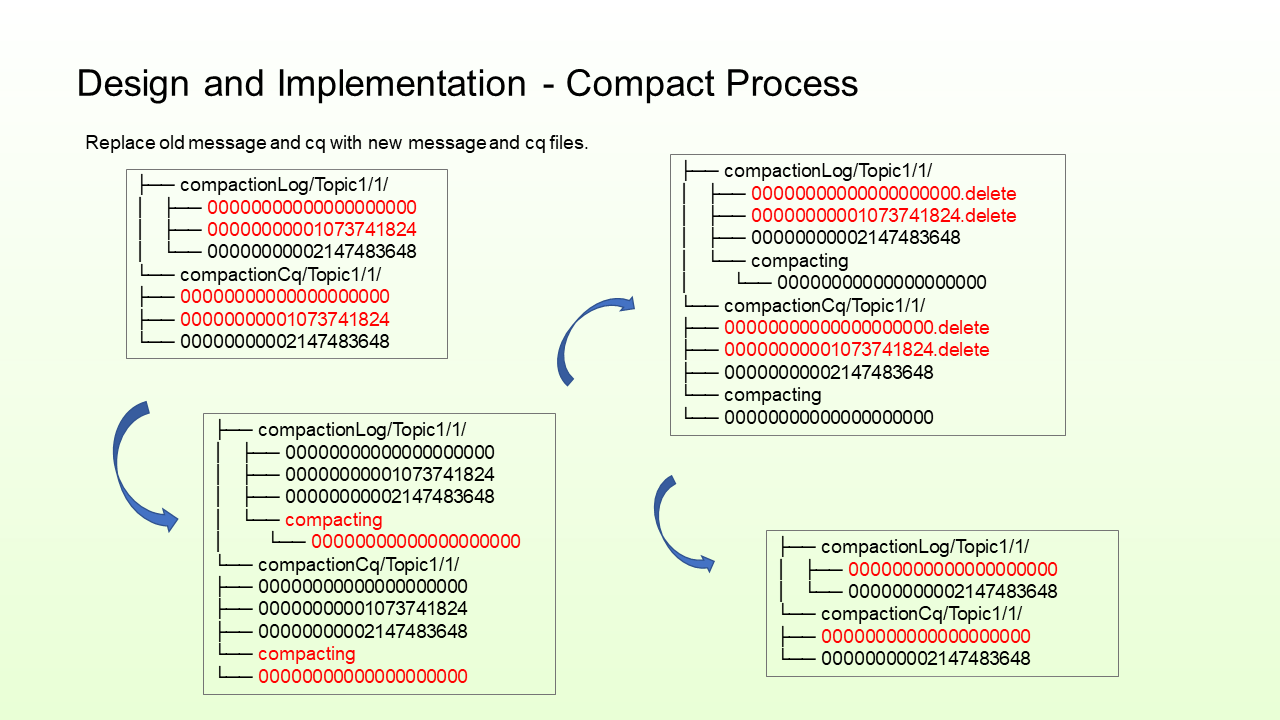

The preceding figure shows the directory structure. The upper part shows the data files, and the lower part shows the indexes. The two files to be compacted are marked in red. Compacted files are first written to a subdirectory. The old files are then marked as deleted, and the files and CQ in the subdirectory are moved to the original root directory at the same time. Note that each data file has the same name as its CQ file, so the two can be deleted together.

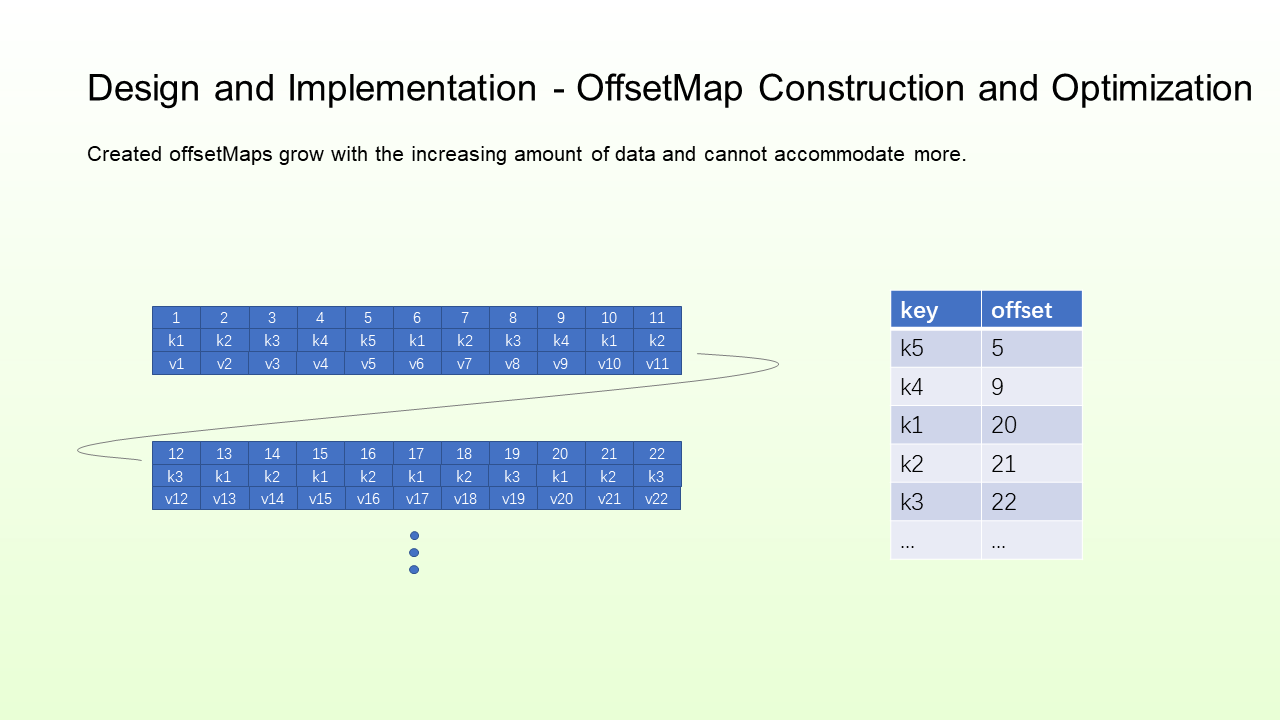

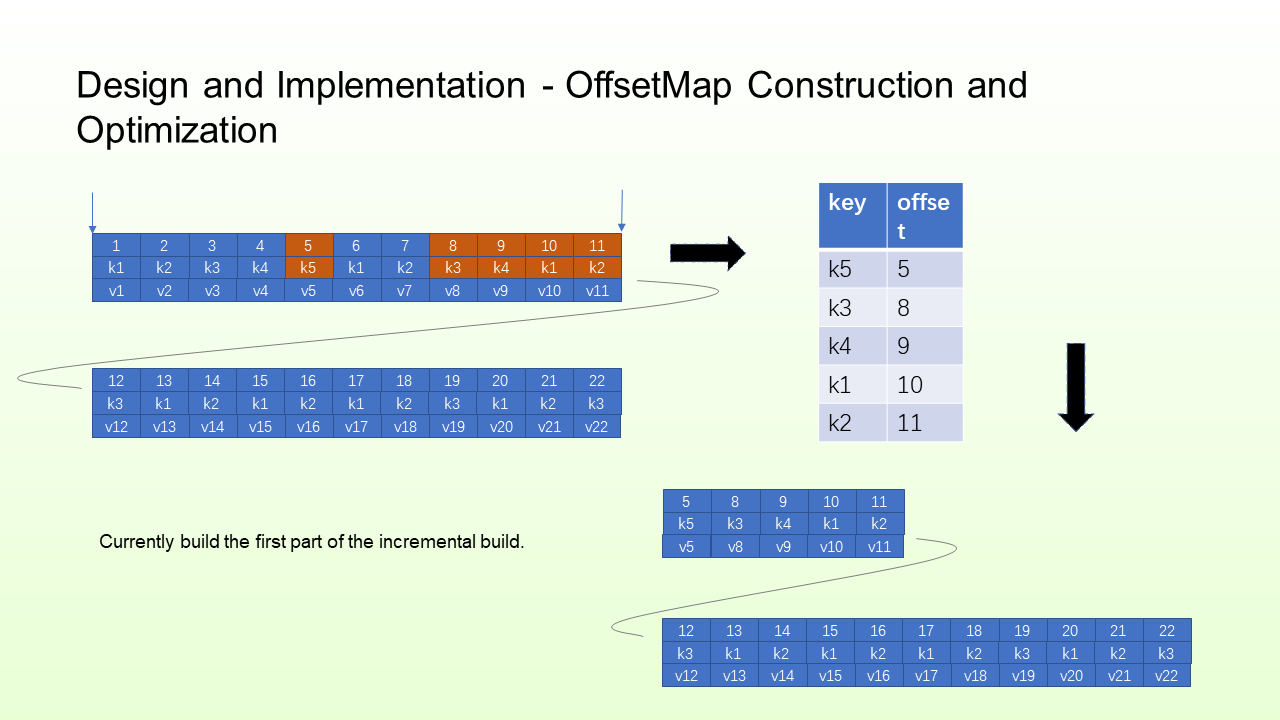

The OffsetMap grows as the amount of data increases, and eventually it can no longer hold all the entries.

Therefore, a full build, in which the OffsetMap for all files to be compacted is built at once, is not feasible. Instead, the build must be incremental, which requires minor changes to the build logic.

First-Round Build: As shown in the preceding figure, build an OffsetMap for the upper part and then traverse the files. A message whose offset is smaller than the offset recorded for its key in the OffsetMap is deleted, and a message whose offset is equal is kept. Messages in the lower part necessarily have offsets larger than those in the OffsetMap, so they are retained.

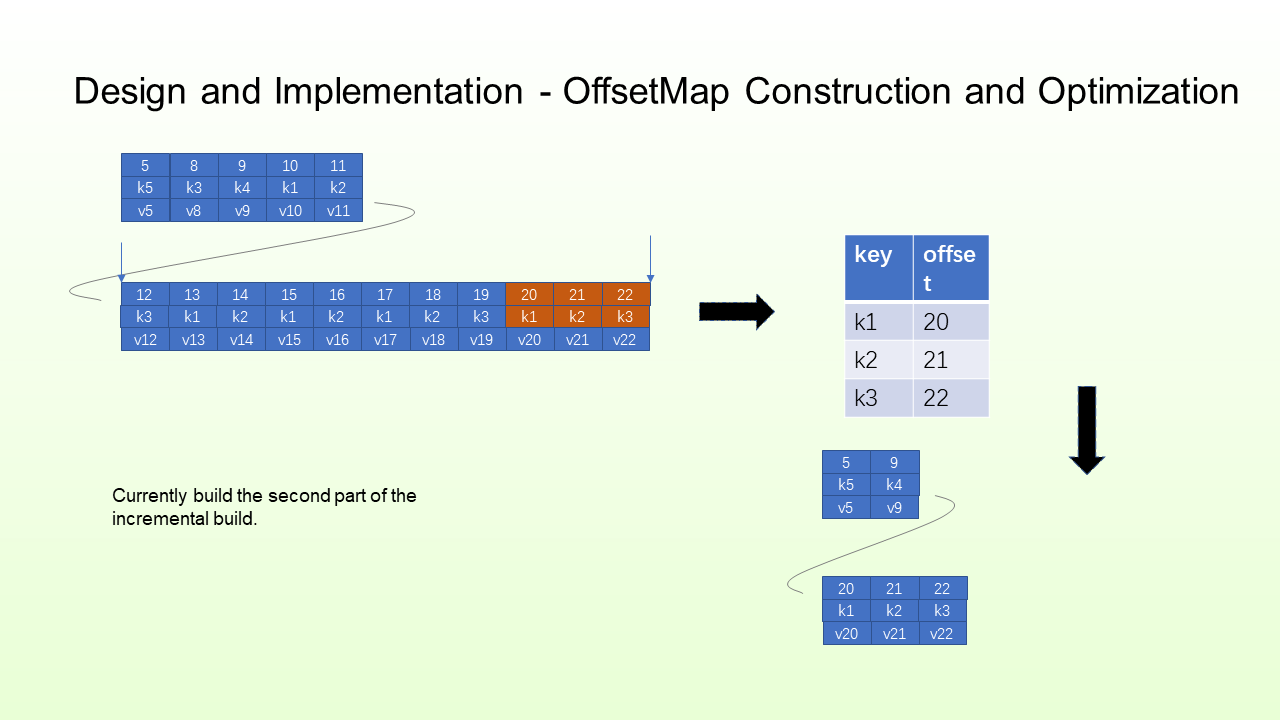

Second-Round Build: Build from where the previous round ended. If a key from the previous round does not appear in the new round, its previous value is retained. If it does appear, the same rule applies: a message whose offset is smaller than the offset recorded for its key in the OffsetMap is deleted, and a message whose offset is equal to or larger than the recorded offset is kept.

After changing the one-round build to a two-round build, the size of OffsetMap and the amount of data built are both significantly reduced.
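The round-by-round rule can be sketched as follows. This is a simplified in-memory model, not the actual implementation: `compact_incrementally` and `batch_size` are hypothetical names, and each round builds a map over only one slice of the log so that the map stays bounded.

```python
import hashlib
from typing import Dict, List, Tuple

Record = Tuple[int, str, str]  # (queue offset, key, value)


def digest(key: str) -> bytes:
    return hashlib.md5(key.encode("utf-8")).digest()


def compact_incrementally(log: List[Record], batch_size: int) -> List[Record]:
    """Compact in rounds; each round's map covers at most batch_size records."""
    survivors = list(log)
    for start in range(0, len(log), batch_size):
        # Build the map for this round only, continuing where the last round ended.
        offset_map: Dict[bytes, int] = {}
        for offset, key, _ in log[start:start + batch_size]:
            offset_map[digest(key)] = offset  # offsets increase, so the last write wins
        # Keys absent from this round's map are retained as they are;
        # otherwise smaller offsets are dropped and equal or larger ones are kept.
        survivors = [
            r for r in survivors
            if r[0] >= offset_map.get(digest(r[1]), r[0])
        ]
    return survivors


log = [(0, "a", "1"), (1, "b", "1"), (2, "a", "2"),
       (3, "c", "1"), (4, "b", "2"), (5, "a", "3")]
result = compact_incrementally(log, batch_size=3)
```

With `batch_size=3`, each round holds at most three map entries, yet the result matches what a single full build would produce.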

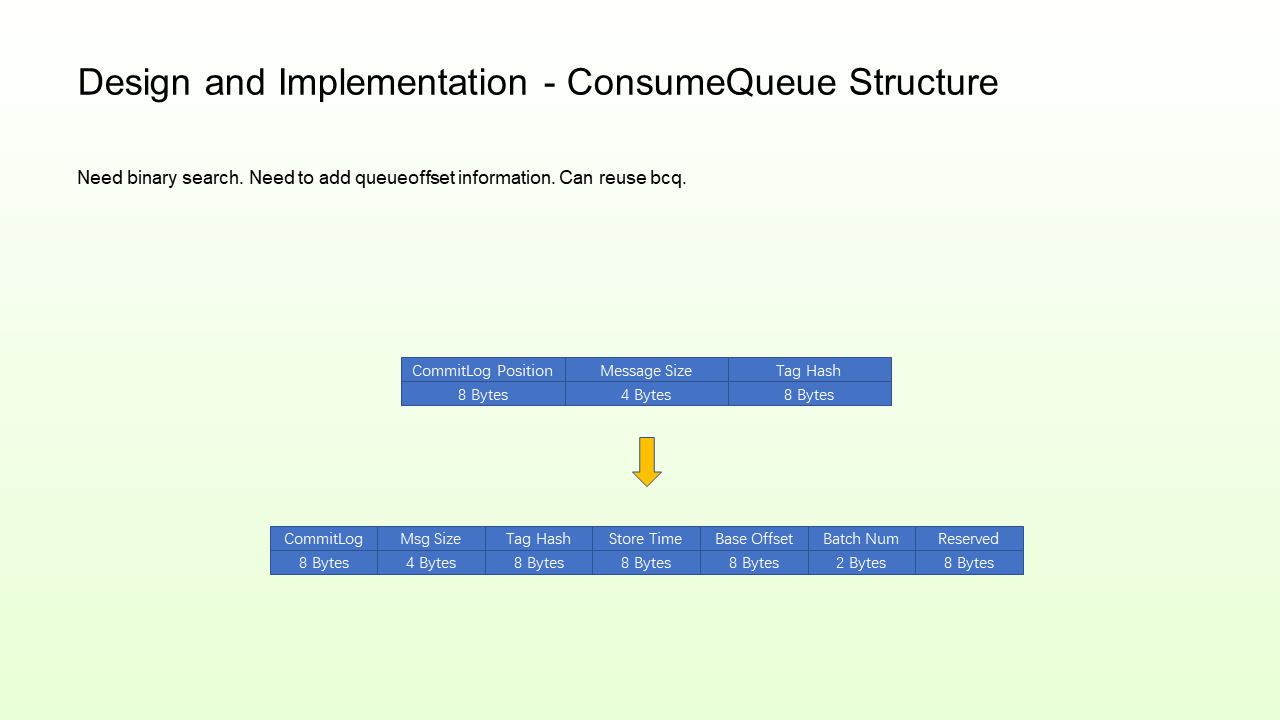

The original index consisted of CommitLog Position, Message Size, and Tag Hash. Now, the bcq structure is reused. Because the data is no longer contiguous after compaction, the physical location of a message cannot be found directly as before. However, since queueoffset is monotonically increasing, binary search can be used to find the index entry.

Binary search requires queueoffset information, so the index structure has to change. bcq contains queueoffset information, so the bcq structure can be reused.

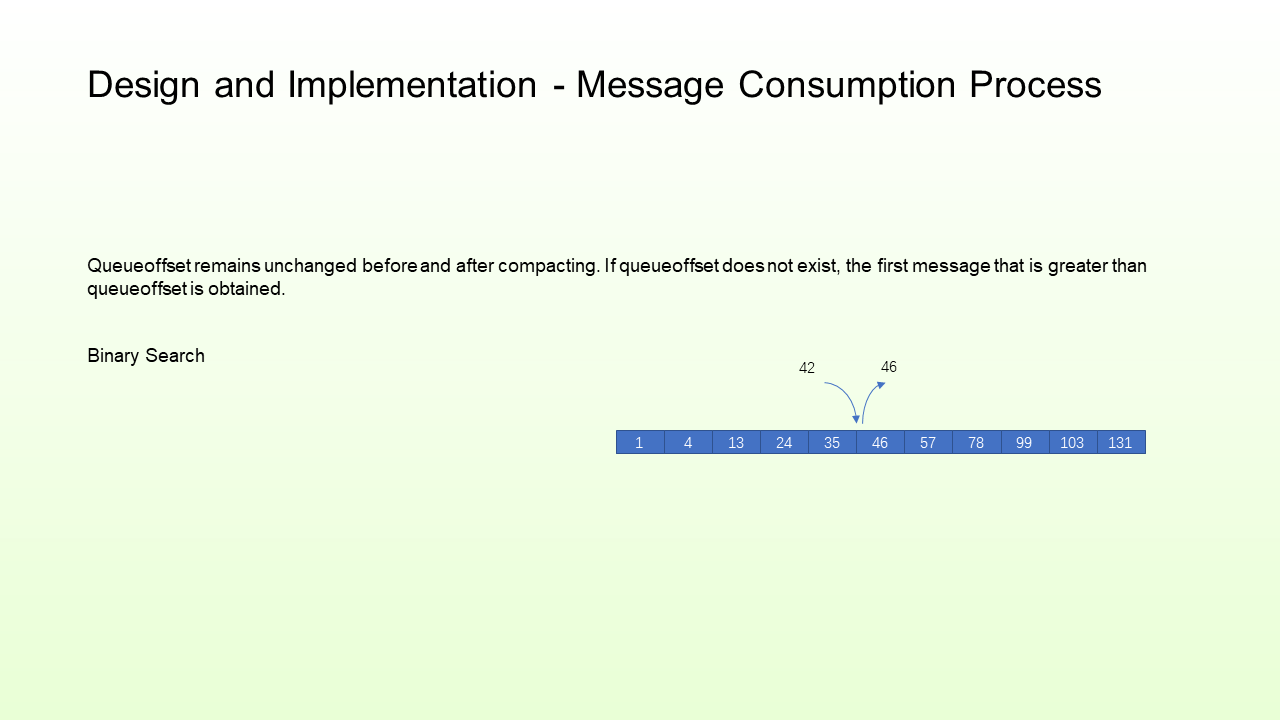

Queueoffset remains unchanged before and after compaction. If the requested queueoffset no longer exists, the first message with a greater queueoffset is located, and data is sent to the client starting from that message.
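The lookup described above can be sketched as a lower-bound binary search over index entries sorted by queueoffset. `IndexEntry` and `find_entry` are hypothetical names, and the three fields are an assumption about the minimum an entry needs for this lookup, not the actual bcq layout.

```python
from typing import List, NamedTuple, Optional


class IndexEntry(NamedTuple):
    queue_offset: int  # logical offset, unchanged by compaction
    position: int      # physical position in the compacted data file
    size: int          # message size in bytes


def find_entry(index: List[IndexEntry], wanted: int) -> Optional[IndexEntry]:
    """Return the first entry whose queue_offset is >= wanted, or None if there is none."""
    lo, hi = 0, len(index)
    while lo < hi:
        mid = (lo + hi) // 2
        if index[mid].queue_offset < wanted:
            lo = mid + 1
        else:
            hi = mid
    return index[lo] if lo < len(index) else None


# Offsets 3, 7, 8, and 12 survived compaction; everything else was removed.
index = [IndexEntry(3, 0, 100), IndexEntry(7, 100, 80),
         IndexEntry(8, 180, 120), IndexEntry(12, 300, 90)]
hit = find_entry(index, 7)
fallback = find_entry(index, 9)
```

Here `hit` is the entry for offset 7, while a request for the removed offset 9 falls forward to the entry for offset 12.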

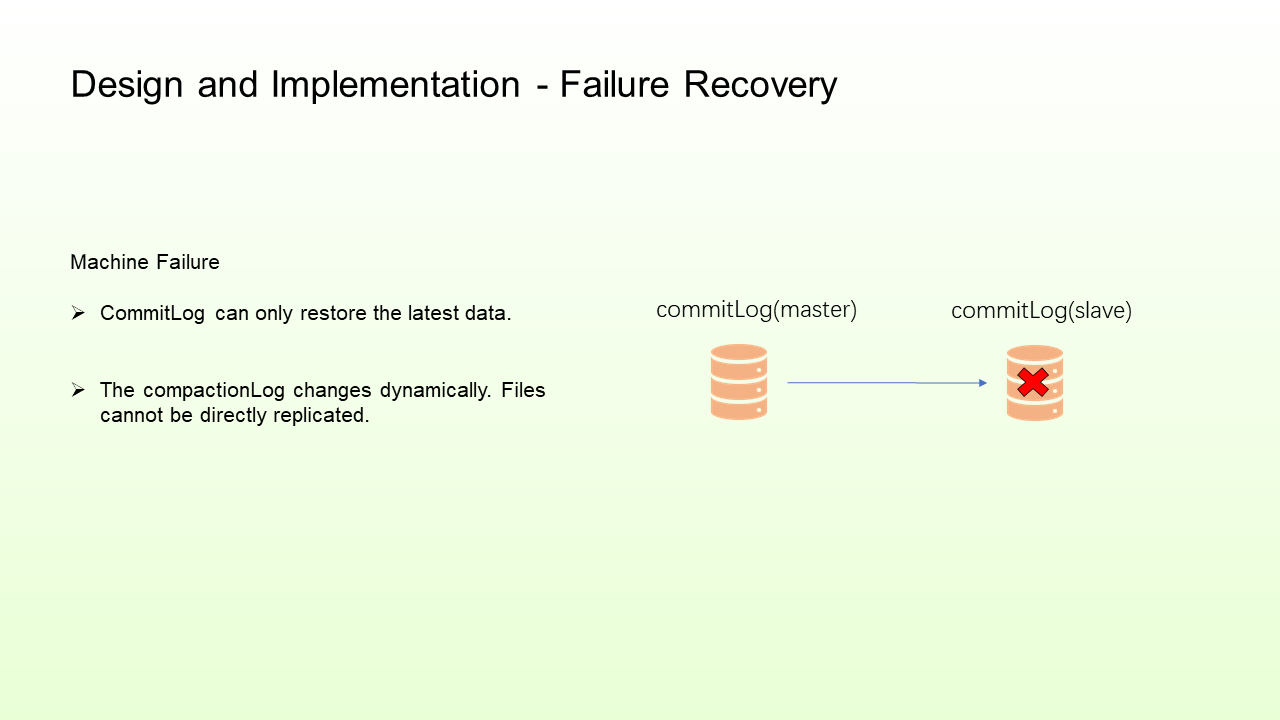

If messages are lost due to a machine failure, the standby machine needs to be rebuilt, because CommitLog can only restore the most recent data, while CompactionLog also requires old data. In the previous HA mode, data files may be deleted during compaction, so masters and slaves cannot be synchronized by replicating files.

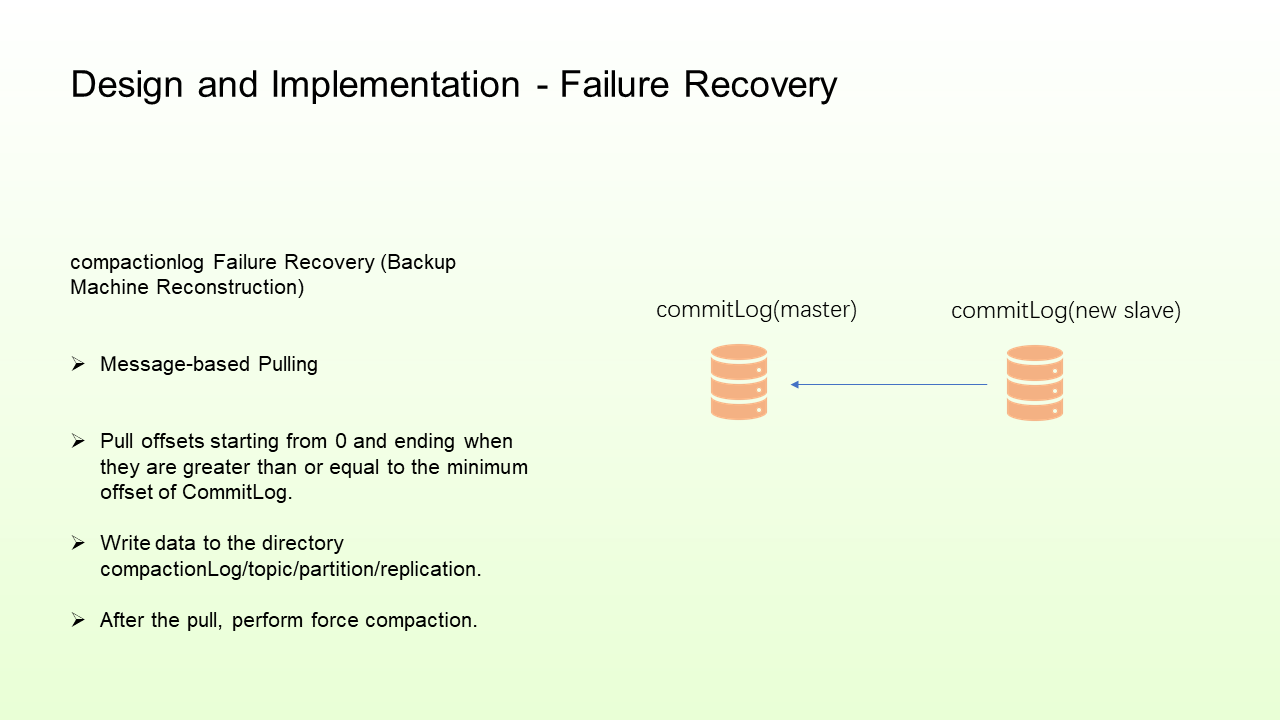

Therefore, message-based replication was implemented: the standby simulates consumption requests to pull messages from the master. Pulling generally starts from offset 0 and ends when the pull offset is greater than or equal to the minimum offset of CommitLog. After the pull completes, a forced compaction is performed to compact the CommitLog data together with the restored data, which ensures that the retained data is compacted. The subsequent process remains unchanged.
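A rough sketch of the pull loop, assuming a hypothetical `pull(start_offset, max_count)` callable that returns the master's surviving records at or after `start_offset`. The names, the record shape, and the choice to discard records at or beyond the CommitLog minimum offset are assumptions of this sketch, not the actual HA protocol.

```python
from typing import Callable, List, Tuple

Record = Tuple[int, str, str]  # (queue offset, key, value)


def make_pull(master_log: List[Record]) -> Callable[[int, int], List[Record]]:
    """Simulate the master: return up to max_count records with offset >= start."""
    def pull(start: int, max_count: int) -> List[Record]:
        return [r for r in master_log if r[0] >= start][:max_count]
    return pull


def restore_from_master(pull: Callable[[int, int], List[Record]],
                        commit_log_min_offset: int,
                        batch_size: int = 32) -> List[Record]:
    """Pull from offset 0 until the pull offset reaches the CommitLog minimum offset."""
    restored: List[Record] = []
    next_offset = 0
    while next_offset < commit_log_min_offset:
        batch = pull(next_offset, batch_size)
        if not batch:
            break
        # Records at or beyond the minimum offset are covered by CommitLog itself.
        restored.extend(r for r in batch if r[0] < commit_log_min_offset)
        next_offset = batch[-1][0] + 1  # the compacted log has gaps, so jump past the last record
    return restored


master = [(0, "a", "1"), (3, "b", "2"), (4, "a", "5"), (9, "c", "1"), (12, "b", "7")]
restored = restore_from_master(make_pull(master), commit_log_min_offset=9, batch_size=2)
```

The forced compaction that follows would then merge `restored` with the data replayed from CommitLog.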

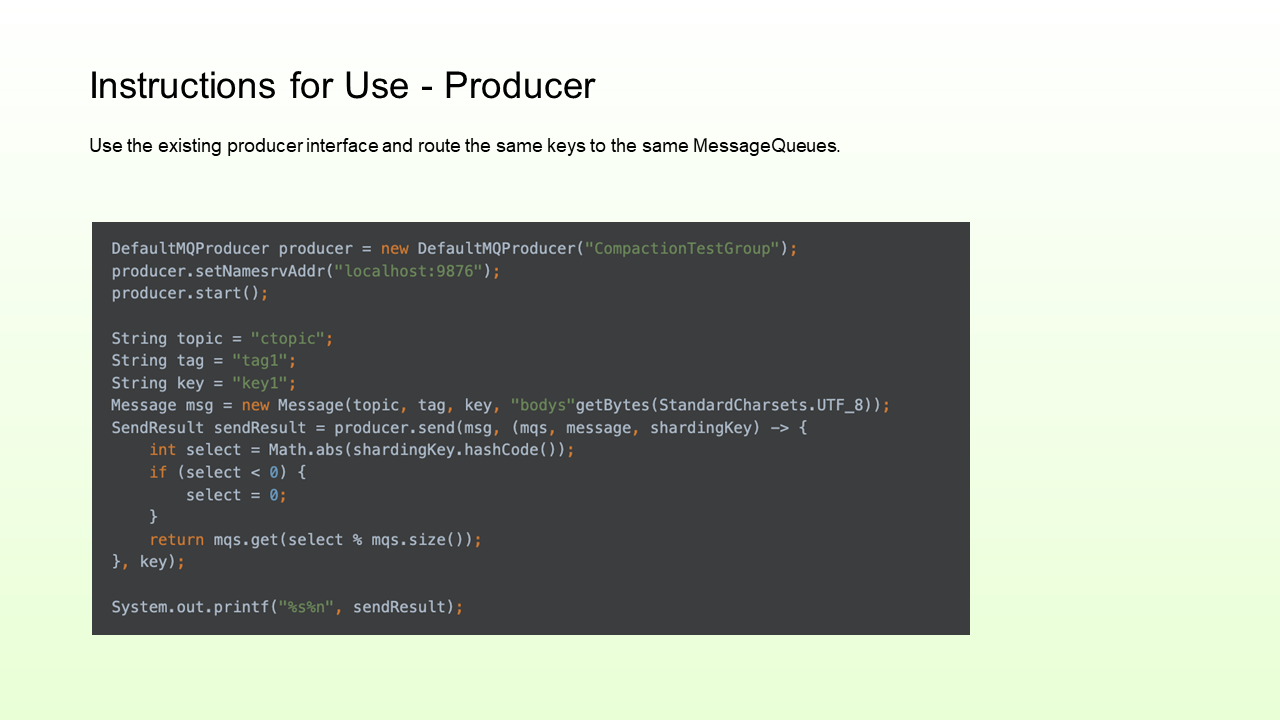

The producer side uses the existing producer interface. Because compaction is performed per partition, messages with the same key must be routed to the same MessageQueue, and you need to implement the routing algorithm yourself.
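One possible routing rule is a stable hash of the key modulo the number of queues. The sketch below only shows the selection logic; `select_queue` is a hypothetical helper, and CRC32 is one arbitrary choice of stable hash. Python's built-in `hash()` is avoided because its result for strings can differ between processes.

```python
import zlib


def select_queue(key: str, queue_count: int) -> int:
    """Pick a queue index so that equal keys always land on the same queue."""
    if queue_count <= 0:
        raise ValueError("queue_count must be positive")
    return zlib.crc32(key.encode("utf-8")) % queue_count


first = select_queue("group-a/partition-0", 8)
second = select_queue("group-a/partition-0", 8)
```

The mapping is only stable while the queue count stays the same. If the number of queues changes, the same key may be routed to a different queue.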

The consumer side uses the existing consumer interface. After consuming messages, store them in a local Map-like structure before use. In most scenarios, data is pulled from the beginning, so you need to reset the consumer offset to 0 first. After pulling, write the message keys and values into the local KV structure, and read from that structure whenever the data is needed.
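The consumer-side pattern can be sketched as a small local view that replays pulled batches into a dictionary. `LocalKvView` and the `(offset, key, value)` record shape are hypothetical; the actual pulling would go through the existing consumer interface.

```python
from typing import Dict, Iterable, Optional, Tuple

Record = Tuple[int, str, str]  # (queue offset, key, value)


class LocalKvView:
    """Local key-value view rebuilt by consuming a compacted queue from offset 0."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}
        self.next_offset = 0  # start from the beginning of the queue

    def apply(self, batch: Iterable[Record]) -> None:
        """Apply pulled messages in order; a later value overwrites an earlier one."""
        for offset, key, value in batch:
            self._store[key] = value
            self.next_offset = offset + 1

    def get(self, key: str) -> Optional[str]:
        return self._store.get(key)


view = LocalKvView()
view.apply([(2, "a", "2"), (3, "b", "2")])
view.apply([(4, "c", "1"), (6, "a", "3")])
```

Because later messages overwrite earlier ones, the view stays correct even when the queue still contains not-yet-compacted duplicates of a key.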
