By Xieyang

RocketMQ implements a flexible multi-partition and multi-replica mechanism, which prevents a single point of failure in the cluster from affecting overall service availability. Storage mechanisms and high availability policies are the core of RocketMQ stability. The analysis and discussion of the current storage implementation of RocketMQ have long been a hot topic in the community. Recently, I have been responsible for building the multi-replica and high availability capabilities of RocketMQ messages, and I would like to share some interesting ideas.

This article focuses on which complex problems this storage implementation solves from different perspectives. I deleted the redundant code detail analysis from the original article to analyze the defects and optimization direction of the storage mechanism step by step.

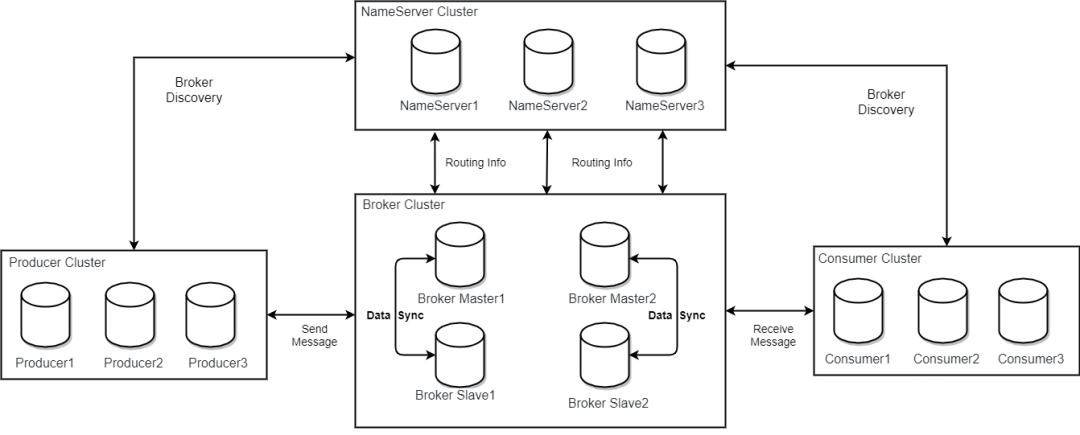

First, I will briefly introduce the architecture model of RocketMQ. RocketMQ is a typical publish-subscribe system that decouples upstream and downstream through Broker nodes, which transfer and persist data. A Broker is a node that stores data. Brokers are organized into multiple replica groups that are deployed horizontally but are not necessarily peers. Data on different nodes in a single replica group reaches eventual consistency. A single replica group has only one read-write Master and several read-only Slaves at any time. If the Master fails, an election is required to tolerate this single point of failure, and in the meantime the replica group is readable but not writable.

NameServer is an independent stateless component that accepts metadata registration from Brokers and maintains some mapping relationships dynamically while providing service discovery capabilities for clients. In this model, we use different topics to distinguish different types of information flows and set up subscription groups for consumers for better management and load balancing.

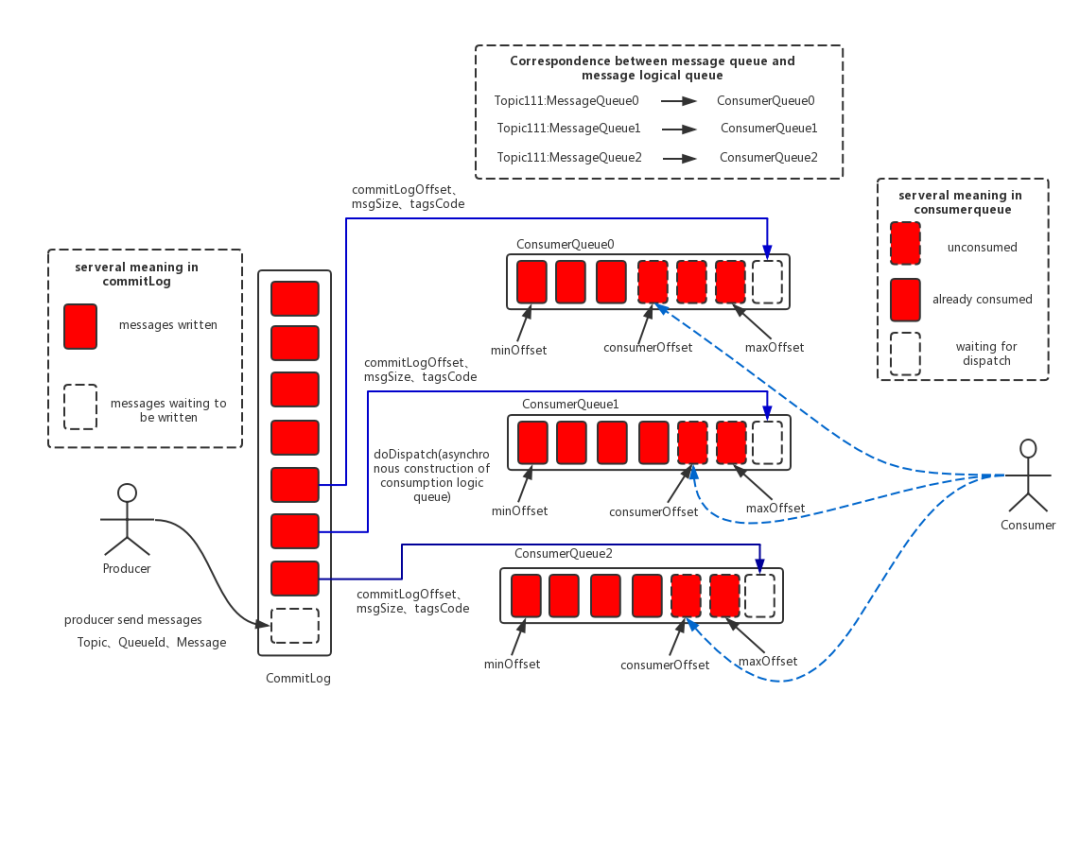

As shown in the middle part of the following figure:

The current storage implementation of RocketMQ can be divided into several parts:

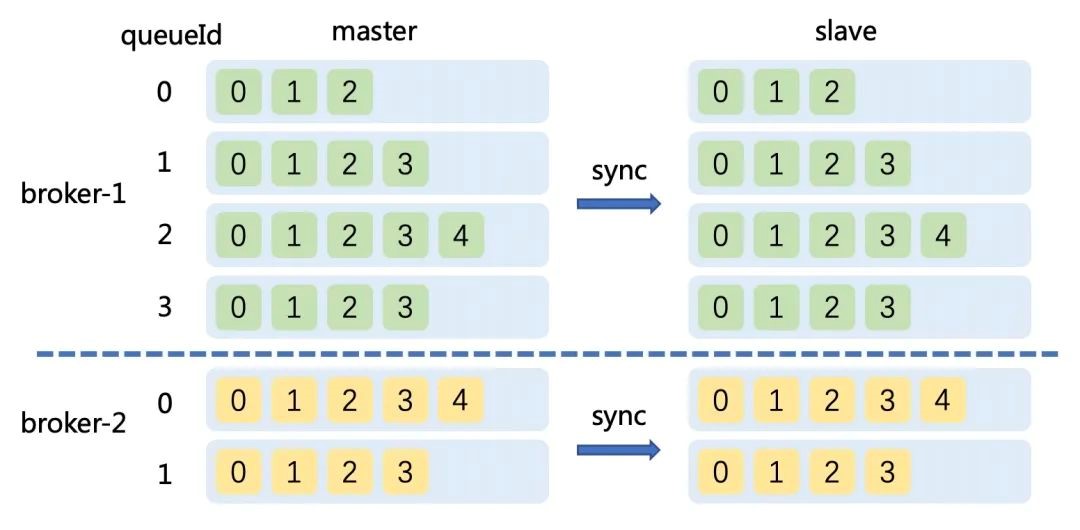

The RocketMQ server creates multiple logical partitions for a single topic to improve overall throughput and provide high availability across replica groups. These partitions are called message queues, and each replica group maintains a subset of them. Within one replica group, the number of queues for the same topic is the same on every replica, and the queues are numbered consecutively from 0. The number of queues on different replica groups can differ.

For example, topic-a can have four queues on the broker-1 primary replica, numbered from 0 to 3 (Queue ID). It is the same on the broker-1 secondary replica. However, there may be only two queues on broker-2, numbered from 0 to 1. The way metadata is organized and managed on the Broker matches this model. The TopicConfig of each topic contains several core attributes: the name, the numbers of read and write queues, permissions, and many metadata identifiers. This model is similar to the StatefulSet of Kubernetes, in which queues are numbered from 0 and are expanded or shrunk at the end. (For example, when 24 queues are reduced to 16, the remaining queues are numbered from 0 to 15.) This simplifies the implementation of partitions because we do not have to maintain a separate state machine for each partition, as Kafka does.

The storage node maintains a Map in memory that maps TopicName directly to its specific parameters. This simple design implies some defects. For example, it does not implement a native Namespace mechanism to isolate metadata at the storage level in a multi-tenant environment. This is an important evolution direction as RocketMQ 5.0 moves towards the cloud-native era.

When the Broker receives an external control command (such as creating or deleting some topics), the memory map will update or delete a KV pair, which needs to be serialized and overwritten to the disk immediately. Otherwise, the update will be lost. In a single-tenant scenario, the number of topics (key) does not exceed a few thousand, and the file size is only a few hundred KB, which is fast.

However, the topic metadata of a storage node can reach more than 10 MB in most cloud-based scenarios. Each time a KV pair is changed, this large file is fully rewritten to the disk. The overhead of this operation is high, especially when data needs to be migrated across clusters and nodes. In emergency situations, synchronous file writing severely prolongs the response time of external control commands, which is one of the severe challenges of the shared cloud mode. Therefore, two solutions have emerged: a batch update interface and an incremental update mechanism.

In addition to the most important topic information, the Broker manages group information, the consumption progress (ConsumerOffset) of each consumer group, and multiple configuration files. Group changes are similar to topic changes: persistence is required only when a group is created or deleted. ConsumerOffset maintains the consumption progress of each subscription group in a nested Map (keyed by topic and group, then by queue). From the perspective of file function and data structure, topics and groups are numerous but change infrequently. Offsets, by contrast, are committed and persisted all the time, so their map is updated in real time. However, an offset that has since been overwritten (the previous last commit offset) is useless at the current time, so losing a small number of updates is acceptable.

Therefore, for offsets, RocketMQ does not write the file whenever data changes (as it does for topics and groups). Instead, RocketMQ uses a scheduled task to perform a CheckPoint on the map. The period is five seconds by default. When the server switches between the primary and secondary or is upgraded normally, messages from the last few seconds may be consumed again.

Is there room for optimization? Most subscription groups are offline at any given time, so we only need to update the subscription groups whose offsets have changed. We adopt a differential optimization strategy. (Those who have taken part in ACM programming contests should be familiar with it. Search for differential Data Transmission.) Only the changed content is updated when the primary and secondary synchronize offsets or persist them. What if we need a historical record of offset commits in addition to the current offset? Use a built-in system topic to save each commit. (In a sense, Kafka uses an internal topic to save the offset.) The consumption progress can then be traced by replaying or searching for messages. Because RocketMQ supports a large number of topics, its metadata is larger in scale, and the current implementation has a lower overhead.
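The differential idea can be sketched as a tracker that remembers which keys changed since the last checkpoint and hands out only that delta. This is a minimal sketch, not RocketMQ's actual code: the class `OffsetDeltaTracker`, its method names, and the `topic@group` key format are assumptions made for illustration.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/** Hypothetical sketch: keeps consume offsets and exposes only entries changed since the last drain. */
final class OffsetDeltaTracker {
    // key: "topic@group" (assumed format), value: queueId -> committed offset
    private final Map<String, Map<Integer, Long>> offsets = new HashMap<>();
    private final Set<String> dirtyKeys = new HashSet<>();

    /** Records a committed offset and marks the key dirty only if the value actually changed. */
    synchronized void commit(String topicAtGroup, int queueId, long offset) {
        Map<Integer, Long> queues = offsets.computeIfAbsent(topicAtGroup, k -> new HashMap<>());
        Long previous = queues.put(queueId, offset);
        if (previous == null || previous != offset) {
            dirtyKeys.add(topicAtGroup);
        }
    }

    /** Returns a copy of the changed entries (what a checkpoint or sync would send) and clears the dirty set. */
    synchronized Map<String, Map<Integer, Long>> drainDelta() {
        Map<String, Map<Integer, Long>> delta = new HashMap<>();
        for (String key : dirtyKeys) {
            delta.put(key, new HashMap<>(offsets.get(key)));
        }
        dirtyKeys.clear();
        return delta;
    }

    public static void main(String[] args) {
        OffsetDeltaTracker tracker = new OffsetDeltaTracker();
        tracker.commit("topic-a@group-1", 0, 10L);
        System.out.println(tracker.drainDelta());
    }
}
```

A periodic task would call `drainDelta()` and persist or replicate only the returned entries, so idle subscription groups cost nothing per cycle.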

Implementation is determined by the needs and is flexible. In RocketMQ metadata management, another problem is how to ensure the consistency of data on multiple replica groups in a distributed environment, which will be discussed in subsequent articles.

Many articles have mentioned that the core of RocketMQ storage is an extremely optimized sequential disk write, which appends new messages to the end of the file in the form of append only.

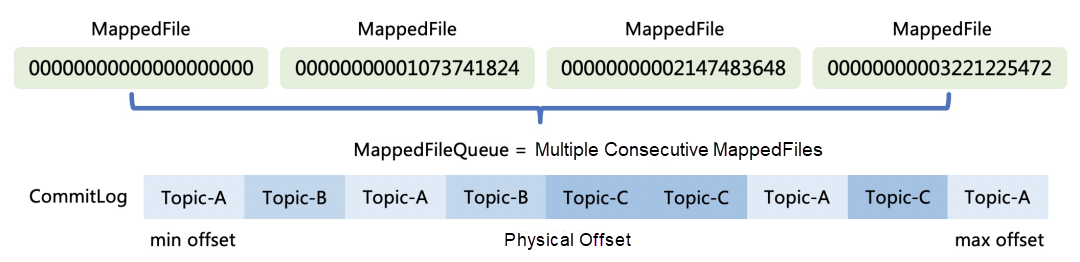

RocketMQ uses memory-mapped files (MappedByteBuffer) to map a file into the address space of a process, associating the disk address of the file with a virtual address of the process. The mapping is created through the FileChannel model in NIO. After this binding, the user process can write to the file through pointers (offsets) without making read or write system calls, which reduces the overhead of copying data between buffers. This kernel mechanism has some limitations. A single mmap file cannot be too large. (RocketMQ chose 1 GB.) Multiple mmap files are therefore connected in a linked list to form a logical queue (called MappedFileQueue), which can logically hold all messages regardless of length.
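The following sketch shows the general technique with plain JDK calls: map a fixed-size file, append at a tracked write position, and translate a global offset into a file index plus a position inside that file. The class `MappedAppendSketch` and its methods are hypothetical and much simpler than RocketMQ's MappedFile and MappedFileQueue.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

/** Hypothetical sketch of an append-only, memory-mapped file of fixed size. */
final class MappedAppendSketch {
    private final MappedByteBuffer buffer;
    private int writePosition = 0;

    MappedAppendSketch(Path file, int fileSize) {
        MappedByteBuffer mapped;
        try (FileChannel channel = FileChannel.open(file,
                StandardOpenOption.CREATE, StandardOpenOption.READ, StandardOpenOption.WRITE)) {
            // The mapping remains valid after the channel is closed.
            mapped = channel.map(FileChannel.MapMode.READ_WRITE, 0, fileSize);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        this.buffer = mapped;
    }

    /** Appends data at the write position; returns the start offset, or -1 if the file is full. */
    int append(byte[] data) {
        if (writePosition + data.length > buffer.capacity()) {
            return -1; // a real queue would roll over to the next mapped file here
        }
        int start = writePosition;
        buffer.position(start);
        buffer.put(data);
        writePosition += data.length;
        return start;
    }

    /** Reads through a duplicate view so the write position is not disturbed. */
    byte[] read(int offset, int length) {
        byte[] out = new byte[length];
        ByteBuffer view = buffer.duplicate();
        view.position(offset);
        view.get(out);
        return out;
    }

    /** Asks the OS to write the mapped region back to the storage device. */
    void flush() {
        buffer.force();
    }

    /** Maps a global offset to {file index, position in file} for fixed-size files. */
    static long[] locate(long globalOffset, long fileSize) {
        return new long[] { globalOffset / fileSize, globalOffset % fileSize };
    }

    public static void main(String[] args) throws IOException {
        MappedAppendSketch file = new MappedAppendSketch(Files.createTempFile("commitlog", ".bin"), 1024);
        System.out.println(file.append("hello".getBytes()));
        file.flush();
    }
}
```

With 1 GB files, `locate` is all that is needed to find which file in the list holds a given global offset, which is why fixed-size files keep the logical queue simple.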

Messages of different topics are directly written by append only, which improves the performance compared with random writes. Note: The write amplification of the mixed writing here is low. When we look back at the theoretical model of BigTable implemented by Google, various LSM trees and their variants convert the original direct maintenance tree into incremental writing to ensure writing performance. Then, superimpose periodic asynchronous merging to reduce the number of files. This action is called compaction.

RocksDB and LevelDB incur several to dozens of times of overhead in write amplification, read amplification, and space amplification. Because messages are immutable, and because in scenarios without message accumulation the data is consumed quickly by the downstream once it is written to the intermediate broker, we do not need to maintain a memTable at write time, which avoids data redistribution and reconstruction. Compared with the storage engines of various databases, a FIFO-like message implementation can save a lot of resources and reduce the complexity of CheckPoint. Data replication between multiple replicas in the same replica group is managed entirely by the storage layer. This design is similar to BigTable and GFS and is also known as a Layered Replication Hierarchy.

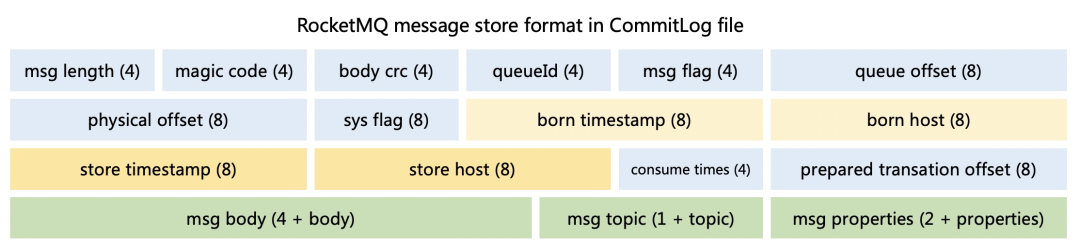

RocketMQ has a complex set of message storage encodings used to serialize the message object. Then, it drops non-fixed-length data to the file mentioned above. Notably, the storage format includes the number and location of the index queue.

During storage, the metadata of a single message occupies a fixed 91 bytes plus a variable-length part, while the payload of a message is typically larger than 2 KB, which means the additional storage overhead caused by metadata is only about 5-10%. The larger a single message, the smaller the proportion of additional storage overhead. However, if you need to send a very large message, for example a serialized image (a large binary object) in the body, the current implementation makes it more appropriate to save only a reference in the message, store the real data in another component, and resolve the reference (such as a file name or unique key) during consumption.

As mentioned before, the message data of different topics is mixed and appended to the CommitLog (MappedFileQueue) and then dispatched to other backend threads. RocketMQ's mechanism of managing the CommitLog and metadata separately is similar to PacificA and achieves strong consistency in a simple way.

Strong consistency refers to the persistence sequencing of all messages in the Master Broker (corresponding to the primary of PacificA) and achieving Linearizability through total order broadcast. These implementations need to solve two similar problems: how to implement sequential writing under a single machine and how to speed up writing.

If the replica group uses asynchronous replication with multiple writers (for high performance and reliability), the logs of the primary and secondary may fork when a log is not up to date (below the high watermark). In RocketMQ 5.0, the primary and secondary use a version-based negotiation mechanism and ensure data consistency by rolling back and truncating uncommitted data.

The logical queue solution is implemented in RocketMQ 5.0 to solve the problem of global partition changes. This is similar to some optimization strategies in PacificA that add replica groups and shard merge to the computing layer reading through new-seal. Please refer to the design solution for details.

How can we ensure the order of single-machine storage and writing CommitLog? The intuitive idea is to add exclusive lock protection to writing. Only one thread is allowed to lock at the same time. What kind of lock implementation is appropriate? RocketMQ currently implements two methods: AQS-based ReentrantLock and CAS-based SpinLock.
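As a rough illustration of the two lock styles, the sketch below guards an append position with either a CAS-based spin lock or a ReentrantLock behind a common interface. The names `AppendLock`, `SpinAppendLock`, `ParkingAppendLock`, and `LockDemo` are hypothetical, and the spin loop is deliberately bare.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantLock;

/** Hypothetical common interface for the lock that serializes appends. */
interface AppendLock {
    void lock();
    void unlock();
}

/** CAS-based spin lock: a failed attempt retries immediately instead of sleeping. */
final class SpinAppendLock implements AppendLock {
    private final AtomicBoolean free = new AtomicBoolean(true);

    public void lock() {
        while (!free.compareAndSet(true, false)) {
            // busy-wait: burns CPU, but avoids a context switch when the holder exits quickly
        }
    }

    public void unlock() {
        free.set(true);
    }
}

/** AQS-based lock: a thread that fails to acquire is parked until it is woken up. */
final class ParkingAppendLock implements AppendLock {
    private final ReentrantLock lock = new ReentrantLock();

    public void lock() {
        lock.lock();
    }

    public void unlock() {
        lock.unlock();
    }
}

final class LockDemo {
    /** Runs concurrent "appends" that each advance a shared position under the given lock. */
    static long appendConcurrently(AppendLock lock, int threads, int perThread) {
        long[] position = {0};
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    lock.lock();
                    try {
                        position[0]++; // critical section: must be very short for spinning to pay off
                    } finally {
                        lock.unlock();
                    }
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return position[0];
    }

    public static void main(String[] args) {
        System.out.println(appendConcurrently(new SpinAppendLock(), 4, 10_000));
        System.out.println(appendConcurrently(new ParkingAppendLock(), 4, 10_000));
    }
}
```

Both variants give the same result; they differ only in what a waiting thread does, which is the trade-off that matters when choosing between them.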

When is SpinLock selected, and when is ReentrantLock selected? Recall the implementation of the two locks. With ReentrantLock, a thread that fails to acquire the lock through the underlying AQS is put to sleep. SpinLock, however, keeps trying to grab the lock, which causes noticeable CPU usage. When SpinLock fails in tryLock, the expectation is that the thread holding the lock will exit the critical section quickly, so busy-waiting in a loop is likely to be more efficient than suspending the thread. This is why the first mode (ReentrantLock) is used to keep CPU usage stable under high concurrency, and the second mode (SpinLock) is used to reduce overhead in scenarios where the time spent on a single request is short. The two implementations suit scenarios with different holding times inside the lock, so the question becomes: what does the thread need to do after it gets the lock?

Therefore, many articles recommend using ReentrantLock for synchronous persistence and SpinLock for asynchronous persistence. Is there any room for optimization? A newer mechanism, futex, can be considered as a replacement for SpinLock. Futex maintains a kernel-level waiting queue and many SpinLock linked lists.

To acquire the lock, a thread first tries a CAS modification. If it succeeds, the lock is obtained. Otherwise, the hash of the current thread's uaddr is used to place it into a waiting queue, which spreads the contention across waiting queues and reduces the length of any single queue. It is similar to ConcurrentHashMap and LongAdder. The core idea is the same: scatter the contention.

Limited by disk I/O, the response of block storage is slow, so it is impossible to require immediate persistence of every request. Most systems cache operation logs in memory to improve performance. For example, background threads persist the operation logs periodically, once the amount of data in the log buffer exceeds a certain size or a certain time has passed since the last flush to disk.

This practice of group commit means that if the storage system fails unexpectedly, the last part of the update operations is lost. For example, a database engine always requires that the operation log be flushed to the disk (the redo log is written first) before the data in memory can be updated. If the power is cut off and the system restarts, the transaction can be rolled back and discarded through the undo log.

There are some subtle differences in the implementation of a message system. The reliability requirements for messages differ between scenarios. In the Finance Cloud scenario, it may be required that messages are synchronously persisted on both the primary and the secondary before they are visible to the downstream. The log scenario, however, needs the lowest latency and tolerates a small amount of loss in a failure. For such cases, RocketMQ can be configured with a single primary and asynchronous persistence to improve performance and reduce costs. In a failure, the storage layer may then lose the last few messages that were not yet persisted, even though downstream consumers have already received them. When a downstream consumer later resets the offset to an earlier time and replays to the same offset, it reads only newly written messages, not the previously consumed ones. (Messages with the same offset are not the same.) This is a form of read uncommitted.

What does this cause? For normal messages, since the message has already been processed downstream, the worst effect is that it cannot be consumed again when the offset is reset. However, a stream computing framework (such as Flink) that uses RocketMQ as a source achieves high availability by replaying data from the offset of the most recent CheckPoint up to the present. Non-repeatable reads prevent the computing system from achieving exactly-once consumption, so the computing result will be incorrect. One of the corresponding solutions is to build the index visible to consumers only after a majority of the replica group has confirmed the message. At a macro level, this increases write latency, which can be interpreted from another perspective as the cost of raising the isolation level.

We can optimize the problem of weighing latency and throughput by speeding up the primary/secondary replication and changing the replication protocol. Here, you can look at SIGMOD 2022's paper entitled KafkaDirect: Zero-copy Data Access for Apache Kafka over RDMA Networks, which discusses Kafka running on RDMA networks to reduce latency.

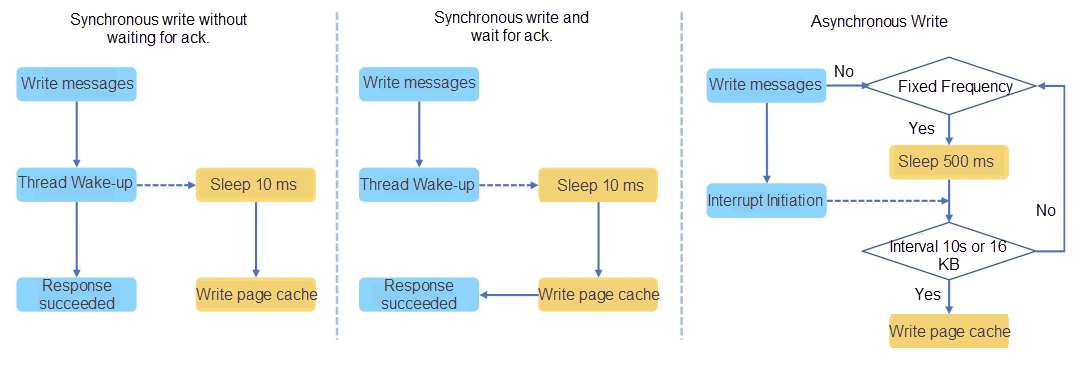

This topic is widely discussed in the community, and many articles refer to the persistence mechanism as disk flushing. The term is not accurate. RocketMQ provides three persistence methods, corresponding to three different thread implementations, and only one is selected in actual use.

The flush thread for synchronous disk flushing is GroupCommitService. The write thread is only responsible for waking up the flush thread and handing the message over to it, rather than returning immediately after the message is stored. My understanding of this design is that the message-writing thread can be regarded as an I/O thread relative to the storage thread. The real storage thread has to be woken up to perform batch persistence, so the handover takes some time.

In the implementation of synchronous disk flushing, the flush thread checks every 10 ms. If any data has not been persisted, it flushes the data in the page cache to the disk. If the operating system crashes or loses power at this time, does the loss of data that has not reached the disk affect the producer? The producer only needs to use reliable sending (non-oneway RPC calls). If the sender does not receive a successful response, the client retries and writes the message to another available node.

The thread corresponding to asynchronous persistence is FlushRealTimeService, which has a fixed-frequency and a non-fixed-frequency implementation. The core difference is whether the thread responds to interrupts. In the fixed-frequency mode, the thread does not respond to the wake-up sent when a new message arrives and instead flushes every 500 ms (configurable). If it finds that there is not enough unflushed data (16 KB by default), it goes straight to the next cycle. There is also a time-based rule: if the thread finds that a long time has passed since the last write (ten seconds by default), it also executes a flush.

However, neither the FileChannel nor the MappedByteBuffer force() method can precisely control the amount of data written, and the write behavior here is only a suggestion to the kernel. In the non-fixed-frequency implementation, a wake-up signal is sent every time a new message arrives. When the data volume is large, these frequent wake-ups cause a performance loss. When the number of messages is small, this approach gives good real-time behavior and saves resources. In production, the choice of persistence implementation depends on the specific scenario. Whether to write synchronously or asynchronously across multiple replicas to ensure the reliability of data storage is essentially a trade-off between read/write latency and cost.
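The flush decision described here can be sketched as a small policy object: flush when enough unflushed data has accumulated, or when too much time has passed since the last flush. The class `FlushPolicySketch` is hypothetical; the two constants come from the defaults quoted in the text, and resetting the timer on every flush is a simplification.

```java
/** Hypothetical sketch of the asynchronous flush decision made on each cycle of the flush thread. */
final class FlushPolicySketch {
    static final long LEAST_DIRTY_BYTES = 16 * 1024;   // "16 KB by default" in the text
    static final long THOROUGH_INTERVAL_MS = 10_000;   // "ten seconds by default" in the text

    private long lastFlushMs;

    FlushPolicySketch(long nowMs) {
        this.lastFlushMs = nowMs;
    }

    /** Returns true if this cycle should flush; otherwise the thread goes to the next cycle. */
    boolean shouldFlush(long dirtyBytes, long nowMs) {
        if (dirtyBytes <= 0) {
            return false; // nothing to write
        }
        boolean enoughData = dirtyBytes >= LEAST_DIRTY_BYTES;
        boolean tooLongSinceLastFlush = nowMs - lastFlushMs >= THOROUGH_INTERVAL_MS;
        if (enoughData || tooLongSinceLastFlush) {
            lastFlushMs = nowMs; // simplification: any flush resets the timer
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        FlushPolicySketch policy = new FlushPolicySketch(System.currentTimeMillis());
        System.out.println(policy.shouldFlush(32 * 1024, System.currentTimeMillis()));
    }
}
```

Passing the clock in as a parameter keeps the policy deterministic, which makes the size-based and time-based branches easy to exercise separately.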

Broadly speaking, the term has two different meanings:

For the second meaning, when the Broker is configured with asynchronous persistence and the buffer pool is enabled, the asynchronous flush thread that is started is CommitRealTimeService. We know that the operating system usually triggers a flush (controlled by some vm parameters, such as dirty_background_ratio and dirty_ratio) after a large number of dirty pages accumulate in the page cache.

An interesting point here is that CPU cache consistency is maintained by hardware, while the page cache needs to be maintained by software (also known as syncable). This asynchronous write may cause high disk pressure when dirty pages are flushed, resulting in write latency spikes. A read/write splitting implementation was introduced to solve this problem.

When RocketMQ is started, five off-heap buffers (DirectByteBuffer) are initialized by default (determined by the parameter transientStorePoolSize). Because the off-heap memory is reused, this solution is also a form of pooling. The advantages and disadvantages of pooling are listed below:

Downtime is usually caused by underlying hardware problems. If the disk is not permanently faulty after RocketMQ goes down, you only need to restart it in place. The Broker first restores the storage status, loads the CommitLog and ConsumeQueue into memory, completes HA negotiation, and initializes the Netty Server to provide services. The current implementation initializes the user-visible network layer services last. An alternative is to initialize the network library first and register topics to NameServer in batches so that normal upgrades have less impact on users.

There are many software engineering details in the recovery process, such as checking the CRC of each message to detect errors when loading from the block device, and dispatching the last small piece of unconfirmed messages. By default, recovery starts from the third-to-last CommitLog file and loads messages into the page cache. (This assumes unpersisted data is smaller than 3 GB.) This prevents threads from being blocked by page faults when a message requested by a client is not in memory. It is also necessary to maintain the consistency of stored data in distributed scenarios, which involves issues such as log truncation, synchronization, and postback. I will discuss this in the high availability section.

Having covered how messages are produced and saved, let's discuss the lifecycle of a message. As long as the disk is not full, a message can be kept for a long time. As mentioned earlier, RocketMQ stores messages in the CommitLog. For a FIFO-like system (such as messages and streams), the more recent the message, the higher its value. Therefore, by default the oldest messages are deleted first, rolling from front to back. A scheduled task that triggers file cleanup runs every ten seconds by default. When the task fires, multiple physical files may have exceeded the expiration time and be eligible for deletion. Therefore, when deleting files, RocketMQ must not only determine whether a file is still in use but also wait an interval (parameter deletePhysicFilesInterval) before deleting the next file. Deleting a file is an I/O-consuming operation, which may cause storage jitter and add latency to writing and consuming new messages. Therefore, a scheduled deletion capability is provided: deleteWhen configures the time of day for the operation (4 am by default).

We call deletion caused by insufficient disk space passive behavior. Since high-speed media are expensive, we will asynchronously and actively transfer hot data to secondary media for cost reasons. In some special scenarios, it may be necessary to wipe the disk safely to prevent data recovery while deleting it.

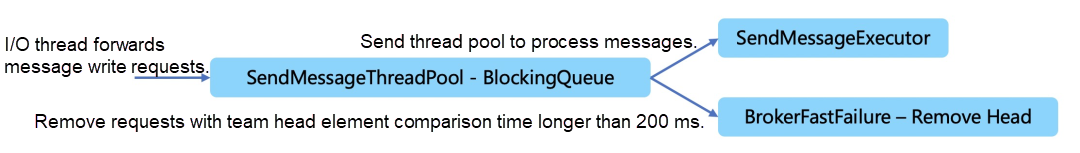

After a request is read by the Netty I/O thread of the server, it enters a blocking queue. However, a single Broker node sometimes stalls on storage writes for a short time because of GC, I/O jitter, and other factors. If requests cannot be processed in time, the queued requests accumulate and can eventually cause OOM. From the perspective of the client, the time between sending a request and receiving the server response grows, and the send eventually times out. RocketMQ introduces a fast failure mechanism to alleviate this jitter problem: a scanning thread continuously checks the request at the head of the queue. If the request has been queued for more than 200 ms, the thread removes it and immediately returns a failure to the client. The client then retries against other replica groups (the client has some circuit-breaking and isolation mechanisms) to achieve high availability of the overall service.

RocketMQ has designed many simple and effective algorithms for proactive estimation. For example, when a message is written, RocketMQ wants to determine whether the page cache of the operating system is busy, but the JVM does not provide a monitoring tool to evaluate how busy the page cache is. Instead, RocketMQ uses the processing time of a write and checks whether it exceeds one second. If it does, new requests fail fast. As another example, when a client consumes data, the Broker determines whether memory usage on the current primary is high, that is, whether the data to be read exceeds 40% of physical memory. If so, the Broker suggests that the client pull messages from the secondary.
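The fast failure scan can be sketched as follows: look only at the head of the queue and reject requests that have waited too long. The class `FastFailureSketch` and its members are hypothetical names; the 200 ms limit is the value quoted in the text, and returning IDs stands in for sending a failure response to the client.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical sketch of a fast-failure scan over a FIFO request queue. */
final class FastFailureSketch {
    static final long MAX_WAIT_MS = 200; // queuing limit quoted in the text

    /** A queued request with the time at which it entered the queue. */
    static final class Pending {
        final String id;
        final long enqueuedAtMs;

        Pending(String id, long enqueuedAtMs) {
            this.id = id;
            this.enqueuedAtMs = enqueuedAtMs;
        }
    }

    private final Deque<Pending> queue = new ArrayDeque<>();

    synchronized void enqueue(String id, long nowMs) {
        queue.addLast(new Pending(id, nowMs));
    }

    /**
     * Removes requests from the head while they have waited at least MAX_WAIT_MS.
     * The queue is FIFO, so the scan can stop at the first request that is still fresh.
     */
    synchronized List<String> expireStale(long nowMs) {
        List<String> rejected = new ArrayList<>();
        while (true) {
            Pending head = queue.peekFirst();
            if (head == null || nowMs - head.enqueuedAtMs < MAX_WAIT_MS) {
                break;
            }
            queue.pollFirst();
            rejected.add(head.id); // a real broker would reply with a "busy" error here
        }
        return rejected;
    }

    synchronized int size() {
        return queue.size();
    }

    public static void main(String[] args) {
        FastFailureSketch sketch = new FastFailureSketch();
        sketch.enqueue("req-1", 0);
        System.out.println(sketch.expireStale(500));
    }
}
```

Because only the head is inspected, each scan is cheap even when the queue is long, which matters precisely when the broker is already under pressure.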

In order to quickly switch physical files after CommitLog is fully written, the background uses a thread to create new files and lock the memory asynchronously. It requires a lot of work to design an additional file preheating switch (configure warmMapedFileEnable). There are two main reasons:

There are also disadvantages. After preheating, the time for writing files is shorter, but preheating will bring some write amplification. On the whole, this can improve the stability of the response time and reduce glitches to a certain extent. It is not recommended to open it when the I/O is under high pressure.

RocketMQ is suitable for business message scenarios with a large number of topics. Therefore, RocketMQ uses a zero-copy solution that is different from Kafka's. Kafka uses blocking I/O to send files, which is suitable for large files with high throughput (such as system log messages). RocketMQ chooses mmap + write with non-blocking I/O (based on multiplexing) as its zero-copy mode. This is because RocketMQ is positioned for the small data blocks and high-frequency I/O of business-level messages. When you want lower latency, mmap is the more appropriate choice.

When the kernel allocates memory and free memory is insufficient, for example because the process generates a large number of new allocation requests or page faults, the kernel has to reclaim memory through its eviction algorithm. At this time, jitter and short latency spikes may occur in writing.

There may be two scenarios for RocketMQ to read cold data:

In the first case, in earlier versions of the RocketMQ source code, when a large amount of CommitLog data must be copied (for example, after a secondary disk failure or when a new secondary machine is launched), the primary by default uses DMA copy to send data to the secondary over the network. The I/O thread is blocked by a large number of page faults, which affects Netty's ability to process new requests. In the implementation, the internal communication between some components uses the second port provided by fastRemoting. A temporary solution to this problem is to have a service thread load the data back into memory without using zero copy, but this approach does not fundamentally solve the blocking problem. For cold copies, you can use madvise to give the OS read hints and avoid affecting message writes on the primary, or you can copy the data from another secondary.

The second case is a challenge for every storage product. When a client consumes messages, all hot data is in the page cache, while reads of cold data degrade to random reads. (The system has a read-ahead mechanism for sequential reads through the page cache.) Consumers that need data from more than a few hours ago are generally running data analysis or offline tasks. Here, the downstream goal is throughput first rather than latency. There are two better solutions for RocketMQ: forward read requests to the secondary, in a way similar to a redirect, to share the read pressure, or read from the secondary media to which the data has been dumped. After the data is dumped, the data storage format of RocketMQ changes.

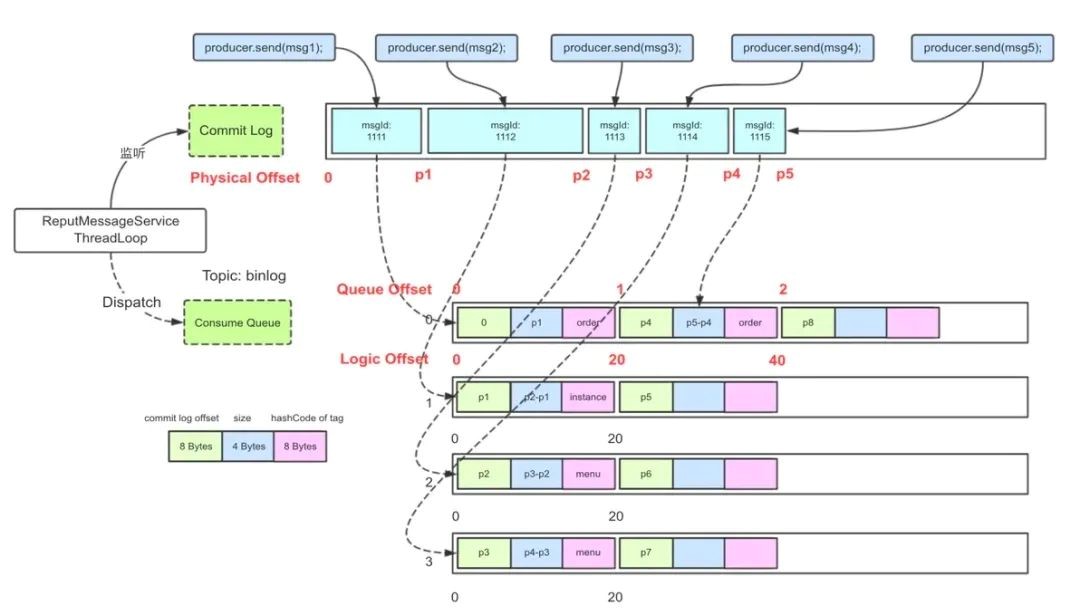

After the MessageStore on the server writes messages to the CommitLog, a backend ReputMessageService (the dispatch thread) asynchronously builds various indexes to meet different read requirements.

In the RocketMQ model, the logical queue of a message is called a MessageQueue, and the corresponding physical index file is called a ConsumeQueue. In a sense, MessageQueue = Multiple consecutive ConsumeQueue indexes + CommitLog files.

ConsumeQueue is more lightweight than CommitLog. The dispatch thread continuously retrieves messages from the CommitLog and takes out each message's physical offset in the CommitLog (its position in the file storage). This offset, together with the message length and tag hash, forms the index of a single message and is dispatched to the corresponding consumption queue. The offset + length constitutes a reference (Ref) to the CommitLog. This Ref mechanism takes only 20 B per message, which reduces the index storage overhead. The actual implementation of ConsumeQueue writing differs from that of CommitLog. CommitLog offers many selectable storage policies and stores topics mixed together, whereas a ConsumeQueue stores only the indexes of one partition of a topic. FileChannel is used for persistence by default. If mmap is used here instead, it is friendlier to requests with small data volumes because no interruption is involved.
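A 20-byte index entry can be encoded with a plain ByteBuffer. The sketch below assumes the layout is an 8-byte CommitLog offset, a 4-byte message length, and an 8-byte tag hash, which matches the 20 B total mentioned above; the class and method names are hypothetical.

```java
import java.nio.ByteBuffer;

/** Hypothetical sketch of a fixed-size ConsumeQueue index entry (assumed layout: 8 + 4 + 8 bytes). */
final class ConsumeQueueEntrySketch {
    static final int UNIT_SIZE = 20;

    /** Encodes one index entry: CommitLog offset, message length, tag hash. */
    static byte[] encode(long commitLogOffset, int messageLength, long tagHash) {
        return ByteBuffer.allocate(UNIT_SIZE)
                .putLong(commitLogOffset)
                .putInt(messageLength)
                .putLong(tagHash)
                .array();
    }

    /** Decodes an entry into {commitLogOffset, messageLength, tagHash}. */
    static long[] decode(byte[] unit) {
        ByteBuffer buffer = ByteBuffer.wrap(unit);
        return new long[] { buffer.getLong(), buffer.getInt(), buffer.getLong() };
    }

    /** Because entries are fixed-size, the n-th entry of a queue sits at byte n * 20. */
    static long bytePosition(long queueOffset) {
        return queueOffset * UNIT_SIZE;
    }

    public static void main(String[] args) {
        byte[] unit = encode(1024L, 200, 42L);
        System.out.println(unit.length + " bytes, offset=" + decode(unit)[0]);
    }
}
```

The fixed entry size is what makes a consumer's logical offset directly addressable: no search is needed to find the entry, only a multiplication.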

The pull request from the client is sent to the server to perform the following process to query messages:

By default, RocketMQ specifies that each consumption queue file stores 300,000 indexes, and one index occupies 20 bytes. The size of each file is therefore about 5.72 MB (300,000×20/1,024/1,024). Why is the number of messages stored in a consumption queue file set to 300,000? This empirical value suits scenarios with a large number of messages, but it is too large for most scenarios. The real usage of valid data is low, resulting in a high empty rate in ConsumeQueue.

Let's look at what happens if the value is too big or too small. Messages always have an expiration time (such as three days). If the consumption queue file is set too large, a single file may contain the message indexes of the past month. By then, the original data has already been rolled out, so a lot of space is wasted. However, the value should not be too small either. Otherwise, ConsumeQueue consists of a large number of small files, which reduces read and write performance. Here is a non-rigorous derivation of the empty rate:

Let's assume the number of topics on a single node is 5,000 and the number of queues for a single topic on a single node is eight, so the number of partitions is 40,000. Let's take 1 TB of message data as an example, with each message 4 KB in size. The number of indexes, which equals the number of messages, is about 268 million (1,024×1,024×1,024/4). The number of fully used ConsumeQueue files would be 895 (number of indexes/300,000), yet each of the 40,000 partitions needs at least one file, so the actual usage (valid data volume) is only a little over 2% (895/40,000). As the ConsumeQueue offset atomically increases and files roll over, the disk space occupied by the heads of ConsumeQueue files grows because of invalid data. According to the situation on the public cloud, non-zero data accounts for about 5%, and actual valid data accounts for only 1%. For index files (such as ConsumeQueue), we can use RocksDB or persistent memory (such as Optane) to store them, or implement a dedicated user-space file system for ConsumeQueue. Several such solutions can reduce the overall index file size and improve access performance. This point will be discussed in the optimization of the storage mechanism later.
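The arithmetic in this derivation can be reproduced in a few lines. The sketch below assumes, as a simplification, that every partition occupies exactly one ConsumeQueue file of 300,000 entries; the class and method names are hypothetical. Under these assumptions the usage ratio comes out a little above 2%.

```java
/** Hypothetical worked example of the ConsumeQueue usage estimate. */
final class ConsumeQueueUsageSketch {
    static final long ENTRIES_PER_FILE = 300_000;
    static final long ENTRY_BYTES = 20;

    /** Size of one ConsumeQueue file in MiB: 300,000 * 20 / 1,024 / 1,024. */
    static double fileSizeMiB() {
        return ENTRIES_PER_FILE * ENTRY_BYTES / 1024.0 / 1024.0;
    }

    /** Number of messages (and index entries) for a data volume and message size, both in KiB. */
    static long messageCount(long totalKiB, long messageKiB) {
        return totalKiB / messageKiB;
    }

    /** Number of files that would be needed if all entries were packed densely (rounded up). */
    static long denseFileCount(long entries) {
        return (entries + ENTRIES_PER_FILE - 1) / ENTRIES_PER_FILE;
    }

    /** Share of allocated slots that hold valid entries, assuming one file per partition. */
    static double usage(long entries, long partitions) {
        return (double) entries / (partitions * ENTRIES_PER_FILE);
    }

    public static void main(String[] args) {
        long partitions = 5_000L * 8;                           // 40,000 partitions
        long entries = messageCount(1024L * 1024 * 1024, 4);    // 1 TB of 4 KB messages
        System.out.printf("file size: %.2f MiB%n", fileSizeMiB());
        System.out.printf("entries: %d, dense files: %d%n", entries, denseFileCount(entries));
        System.out.printf("usage: %.2f%%%n", usage(entries, partitions) * 100);
    }
}
```

Changing `ENTRIES_PER_FILE` in this sketch shows directly how a smaller file size raises the usage ratio at the cost of more files.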

The CommitLog-ConsumeQueue-Offset relationship is fixed from the moment a message is written. If messages must remain readable in scenarios such as migrating topics across replica groups or taking replica groups offline, the data has to be replicated, and only message-level copy can be used. Simply moving a partition from replica group A to replica group B is not possible. When facing this scenario, some messaging products replicate data by partition. That approach may immediately generate a large amount of data to transmit (partition rebalance), whereas traffic switching in RocketMQ generally takes effect within seconds.

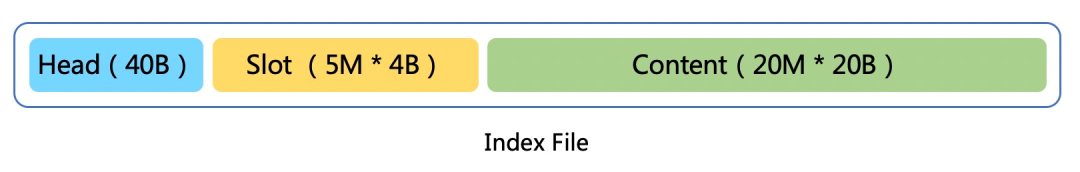

RocketMQ is the first choice for business messages. In addition to building the index of the consumption queue, the ReputMessageService thread builds the index to IndexFile for each message according to the ID and key. This is generated by the convenience of quickly locating target messages. This ability to build random indexes can be degraded. The structure of the IndexFile file is listed below:

IndexFile is fixed-length. In terms of the data structure of a single file, it is a simple hash table that resolves collisions by chaining (the "zipper" method). When a new message index arrives, the hash of its key selects one of the 5 million slots (the yellow part). If the slot is already occupied, the conflict is resolved by chaining: the new index entry records the position of the previous entry in that slot as its next pointer. The index entry itself is appended to the end of the file (the green part), so each slot forms a linked list ordered from newest to oldest. This can be seen as an improvement on LSM compaction under the message model, which reduces write amplification.
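The slot-plus-chain idea can be illustrated with a small in-memory sketch. This is not the on-disk IndexFile format: the class name, the CRC32 hash, and the tuple layout are assumptions made for illustration, and a dictionary stands in for the fixed slot table.

```python
import zlib


class IndexFileSketch:
    """In-memory sketch: a slot table plus an append-only index area."""

    def __init__(self, slot_count=5_000_000):
        self.slot_count = slot_count
        self.slots = {}    # slot number -> position of the newest entry in that slot
        self.entries = []  # append-only list of (key_hash, commitlog_offset, prev_position)

    def _hash(self, key):
        # Deterministic stand-in hash (an assumption, not RocketMQ's hash function).
        return zlib.crc32(key.encode("utf-8"))

    def put(self, key, commitlog_offset):
        key_hash = self._hash(key)
        slot = key_hash % self.slot_count
        prev = self.slots.get(slot, -1)                 # -1 marks the end of the chain
        self.entries.append((key_hash, commitlog_offset, prev))
        self.slots[slot] = len(self.entries) - 1        # slot now points at the newest entry

    def get(self, key):
        key_hash = self._hash(key)
        pos = self.slots.get(key_hash % self.slot_count, -1)
        offsets = []
        while pos != -1:                                # walk the chain, newest first
            entry_hash, offset, prev = self.entries[pos]
            if entry_hash == key_hash:
                offsets.append(offset)
            pos = prev
        return offsets


idx = IndexFileSketch(slot_count=4)
idx.put("order-1", 100)
idx.put("order-2", 200)
idx.put("order-1", 300)
print(idx.get("order-1"))  # [300, 100]
```

Writes only append an entry and overwrite one slot pointer, so no existing index data is rewritten. Lookups pay for this by walking the chain and filtering out entries from other keys that share the slot.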

The storage design of RocketMQ is built around a simple and reliable queue model. This abstraction leads to some defects, for which corresponding optimization solutions are provided.

RocketMQ implements backoff retry for individual business messages. In production practice, we found that some users return messages as failed when client-side consumption is throttled. When the number of retry messages is large, they cannot be load balanced well across all clients because the original implementation has a limited number of retry queues. At the same time, messages are transmitted back and forth between the server and the client, which increases the overhead on both sides. Instead, users should let the consuming thread wait for a while when consumption is throttled.

From the point of view of storage services, this is a deficiency of the queue model: one queue can only be held by one consumer. RocketMQ proposes a new concept, pop consumption, which allows messages in a single queue to be consumed by multiple clients. This requires the server to lock and unlock individual messages, and the KV model fits this scenario well. In the long run, scheduled messages and transactional messages can also have more native implementations based on KV, which is one of the directions of RocketMQ's future efforts.
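To make per-message locking concrete, here is a minimal sketch of how a key-value map could track which messages are temporarily invisible. It is not RocketMQ's pop implementation: the class, the `invisible_seconds` parameter, and the use of an explicit `now` argument are all assumptions chosen to keep the example deterministic.

```python
class PopQueueSketch:
    """Sketch: several clients pop from one queue using per-message locks."""

    def __init__(self, messages, invisible_seconds=30.0):
        self.messages = list(messages)
        self.invisible_seconds = invisible_seconds
        self.locks = {}     # offset -> time at which the lock expires (the KV part)
        self.acked = set()  # offsets that have been consumed successfully

    def pop(self, now, max_count=1):
        taken = []
        for offset, body in enumerate(self.messages):
            if len(taken) == max_count:
                break
            if offset in self.acked:
                continue
            if self.locks.get(offset, 0.0) > now:
                continue    # still locked by another client
            self.locks[offset] = now + self.invisible_seconds
            taken.append((offset, body))
        return taken

    def ack(self, offset):
        self.acked.add(offset)
        self.locks.pop(offset, None)


queue = PopQueueSketch(["a", "b", "c"], invisible_seconds=10.0)
first = queue.pop(now=0.0)                 # client 1 locks offset 0
second = queue.pop(now=1.0)                # client 2 skips the locked message
queue.ack(1)                               # client 2 finishes; client 1 never acks
later = queue.pop(now=11.0, max_count=2)   # the lock on offset 0 has expired
print(first, second, later)
```

A message whose lock expires without an acknowledgement becomes visible again, so any client can pick it up rather than only the single owner of the queue.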

Compression is the classic trade-off of time for space: the hope is to spend a small amount of CPU to reduce disk usage or network I/O. Currently, with latency in mind, the RocketMQ client compresses only individual messages larger than 4 KB before they are stored. There are many reasons why the server does not immediately compress received messages. For example, data must be written to disk in a timely manner, and when messages are sparse there is little to batch, so compression is ineffective and the body is stored uncompressed. For most business topics, however, message bodies are largely similar to one another and can be compressed to a fraction (a few tenths) of the original size.
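The effect of batching on compression can be seen with the standard `zlib` module. This is a sketch under stated assumptions: the sample order messages are made up, zlib stands in for whatever codec is actually used, and `maybe_compress` only mimics the 4 KB client-side threshold described above.

```python
import json
import zlib

COMPRESS_THRESHOLD = 4 * 1024  # 4 KB, the client-side threshold mentioned in the text


def maybe_compress(body: bytes):
    """Compress only bodies larger than the threshold; return (payload, compressed_flag)."""
    if len(body) > COMPRESS_THRESHOLD:
        return zlib.compress(body), True
    return body, False


# Hypothetical business messages that share the same structure.
bodies = [
    json.dumps({"orderId": i, "status": "PAID", "currency": "CNY", "channel": "app"}).encode()
    for i in range(1000)
]

raw_size = sum(len(b) for b in bodies)
per_message = sum(len(zlib.compress(b)) for b in bodies)  # each body compressed alone
batched = len(zlib.compress(b"\n".join(bodies)))          # similar bodies compressed together

print(raw_size, per_message, batched)
```

Small bodies compressed one at a time gain little, because each message is too short to contain much redundancy on its own. Compressed together, the shared field names and values are encoded once and referenced afterwards, which is why dense, asynchronous batches are a better opportunity for compression.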

Storage generally includes high-speed (high-frequency) media and low-speed media. Hot data is stored on high-frequency media (such as ESSD and SSD), and cold data is stored on low-frequency media (such as NAS and OSS) to meet the requirements of low-cost storage for longer-term data. When data moves from a high-frequency medium to lower-frequency NAS or OSS, a data copy is inevitable. Because spare resources are plentiful at that point, we can reorganize the data asynchronously during this process.

Why not simply copy the data directly with zero copy?

Although low-frequency media are cheap and offer large capacity, they usually have lower IOPS and throughput. The data that RocketMQ needs to reorganize consists of the indexes and the messages in CommitLog, which means that the message storage format on the high-frequency medium and on the low-frequency medium can be different. When hot messages are downgraded to secondary storage, the data is dense and the process is asynchronous, which makes it a suitable opportunity for compression and reorganization. There are also cases in the industry that accelerate storage compression with FPGAs. We will continue to work in this direction.

I want to talk about the hard disk scheduling algorithm. In cost-sensitive scenarios, the storage mechanism of RocketMQ allows us to store the index files on SSD and the messages on HDD. Since hot messages are always in the page cache, I/O scheduling prioritizes writes over reads. For consumers without accumulation, the consumed data is copied from the page cache to the socket and then transmitted to users, so real-time performance is high. However, users who consume cold data (data from a few hours or a few days ago) generally want to obtain the messages as soon as possible, so the server could choose to satisfy their pull requests as quickly as it can. Because this produces a large amount of random I/O, the disk then suffers serious response time (RT) jitter.

On closer consideration, what these users really want is as much throughput as possible. Suppose a cold read takes 200 milliseconds. If the server delays the cold read and returns the data after 500 milliseconds instead, the user sees no significant difference. Within those 500 milliseconds, a large number of I/O operations can be merged inside the server, and we can use the madvise system call to advise the kernel to read ahead. This merging brings high benefits: it reduces the impact on hot data writes and improves performance.
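The merging step can be sketched as coalescing the read requests collected during the delay window into a few larger sequential ranges. This is an illustrative sketch only: the `(offset, length)` request format and the `max_gap` parameter are assumptions, not part of RocketMQ.

```python
def merge_reads(requests, max_gap=0):
    """Coalesce (offset, length) read requests into larger sequential ranges."""
    merged = []
    for offset, length in sorted(requests):
        end = offset + length
        if merged and offset <= merged[-1][1] + max_gap:
            # Overlapping, adjacent, or close enough: extend the previous range.
            merged[-1][1] = max(merged[-1][1], end)
        else:
            merged.append([offset, end])
    return [(start, end - start) for start, end in merged]


# Cold read requests gathered within one delay window, in arrival order.
pending = [(8192, 4096), (0, 4096), (4096, 4096), (1_000_000, 4096)]
print(merge_reads(pending))  # [(0, 12288), (1000000, 4096)]
```

Four random reads become two sequential ones here. Each merged range could then be passed to the kernel as a read-ahead hint, for example through madvise with `MADV_WILLNEED` on a memory-mapped file, on platforms that support it.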

To solve the problem of inefficient random reads, we can design a user-space file system so that all I/O calls bypass the kernel.

There are several directions:

After years of development, the RocketMQ storage system has improved its basic functions and features. It has solved the problems in the distributed storage system through a series of innovative technologies and stably served Alibaba Group and a large number of cloud users.

RocketMQ has encountered more interesting scenarios and challenges as it evolves in the cloud-native era. This is a complex project that requires full-link tuning. We will continue focusing on enterprise-level features (such as scale, stability, and multi-active disaster recovery), cost, and elasticity to build RocketMQ into an integrated platform for messages, events, and streams. At the same time, we will continue to contribute to open source and create value for society.

