By Qingshan Lin (Longji)

The development of message middleware has spanned over 30 years, from the emergence of the first generation of open-source message queues to the explosive growth of PC Internet, mobile Internet, and now IoT, cloud computing, and cloud-native technologies.

As digital transformation deepens, customers often encounter cross-scenario applications when using message technology, such as processing IoT messages and microservice messages simultaneously, and performing application integration, data integration, and real-time analysis. This requires enterprises to maintain multiple message systems, resulting in higher resource costs and learning costs.

In 2022, RocketMQ 5.0 was officially released, featuring a more cloud-native architecture that covers more business scenarios compared to RocketMQ 4.0. To master the latest version of RocketMQ, you need a more systematic and in-depth understanding.

Today, Qingshan Lin, who is in charge of Alibaba Cloud's messaging product line and an Apache RocketMQ PMC Member, will provide an in-depth analysis of RocketMQ 5.0's core principles and share best practices in different scenarios.

Today, we're going to explore the stream database of RocketMQ 5.0. In our previous course, we discussed the stream storage capabilities provided by RocketMQ for data integration. By combining stream storage with mainstream distributed stream computing engines like Flink and Spark, RocketMQ can offer users comprehensive stream processing capabilities. However, in some scenarios, we have the opportunity to provide users with a more simplified stream processing solution, without the need to maintain multiple sets of distributed systems. With RocketMQ 5.0, we can provide integrated stream processing.

In the first part of this course, you'll gain a conceptual and macro understanding of what stream processing is. The second part will focus on RocketMQ 5.0, where we'll learn about the lightweight stream processing engine RStreams provided by RocketMQ and understand its features and principles. The third part will introduce RocketMQ's stream database RSQLDB. Through the in-depth combination of stream storage and stream computing, we'll see how it can further reduce the difficulty of using stream processing.

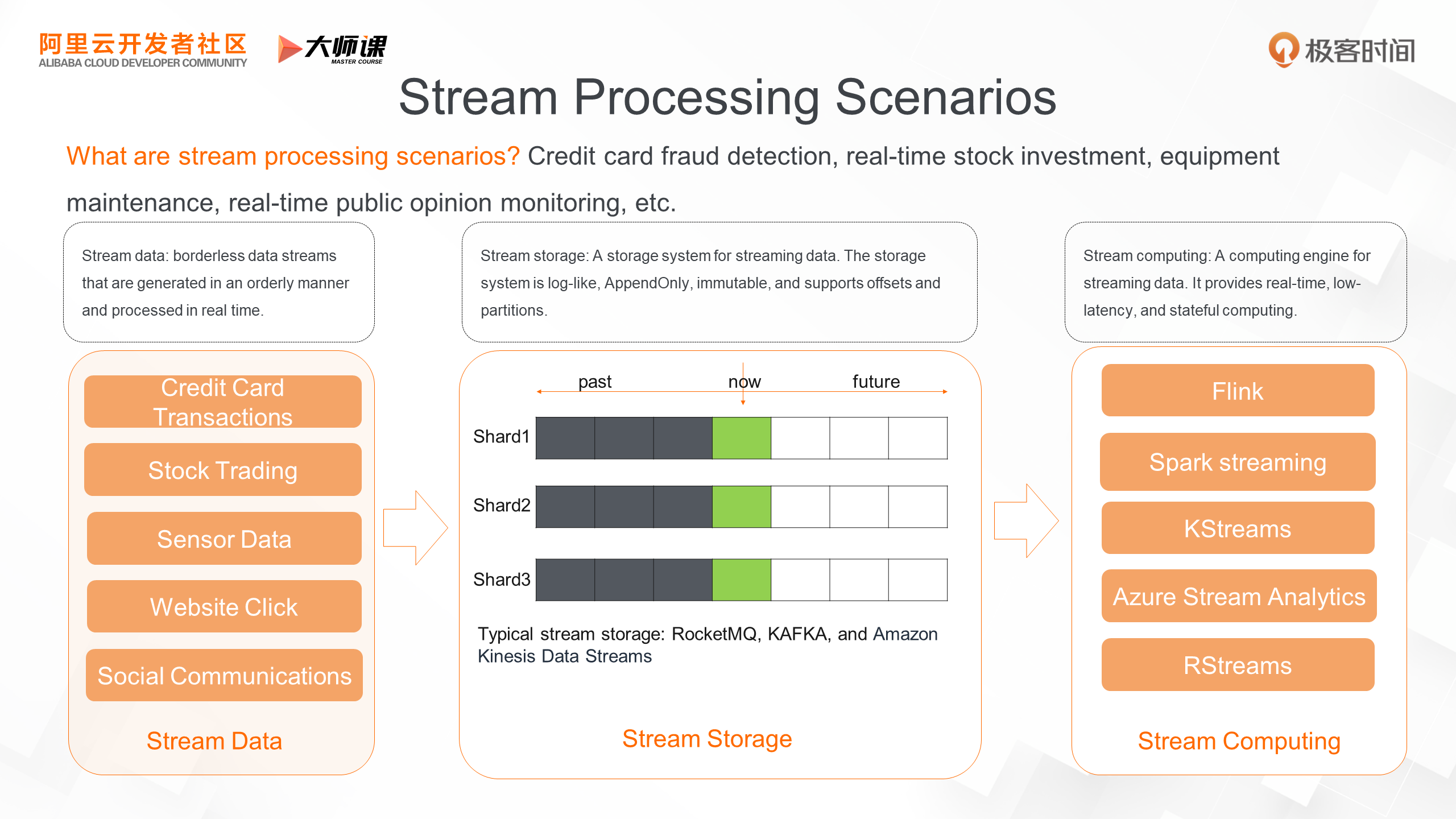

Let's first understand what stream processing is. Stream processing involves stream data ingestion, stream data storage, and stream computing.

The first concept is stream data, which is distinct from batch data and offline data. It's characterized by the continuous generation of data in a certain order, forming a boundless data stream similar to a river. Examples of stream data include credit card transactions, stock transactions, and IoT device sensing data.

The second concept is stream storage, which we covered in the previous lesson and will review briefly here. Stream storage is a storage system deeply optimized for streaming data. It supports reading and writing data by partition and offset, and the data is continuously appended and immutable. Typical stream storage systems include RocketMQ, Kafka, and Kinesis Data Streams.
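
To make the partition-and-offset model concrete, here is a minimal in-memory sketch in Python. The `StreamLog` class and its method names are hypothetical and exist only for illustration; they are not part of any RocketMQ API.

```python
class StreamLog:
    """Toy append-only log: records are addressed by (partition, offset)."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def append(self, partition, record):
        # Records are only ever appended; existing entries are never modified.
        log = self.partitions[partition]
        log.append(record)
        return len(log) - 1  # offset of the record that was just written

    def read(self, partition, offset, max_records=10):
        # Reading does not remove data, so any offset can be read again later.
        return self.partitions[partition][offset:offset + max_records]


log = StreamLog(num_partitions=2)
log.append(0, "txn-1")
log.append(0, "txn-2")
last_offset = log.append(0, "txn-3")
replayed = log.read(0, offset=1)
```

Because reads are non-destructive, a consumer that remembers only an offset can re-read from that point at any time, which is the property the later fault-tolerance discussion relies on.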

The third concept is stream computing, a computing engine for streaming data. Its main features are real-time computing, low latency, and the ability to implement stateful computing. Examples of stream computing engines include Flink, Spark Streaming, and Kafka Streams.

When do you use stream processing? Compared to batch processing, stream processing focuses more on scenarios that require real-time responses.

The entire stream processing process involves stream data ingestion, stream data storage, and stream computing. Since ingestion and stream storage are not the focus of this course, we'll concentrate on the technical capabilities required for stream computing.

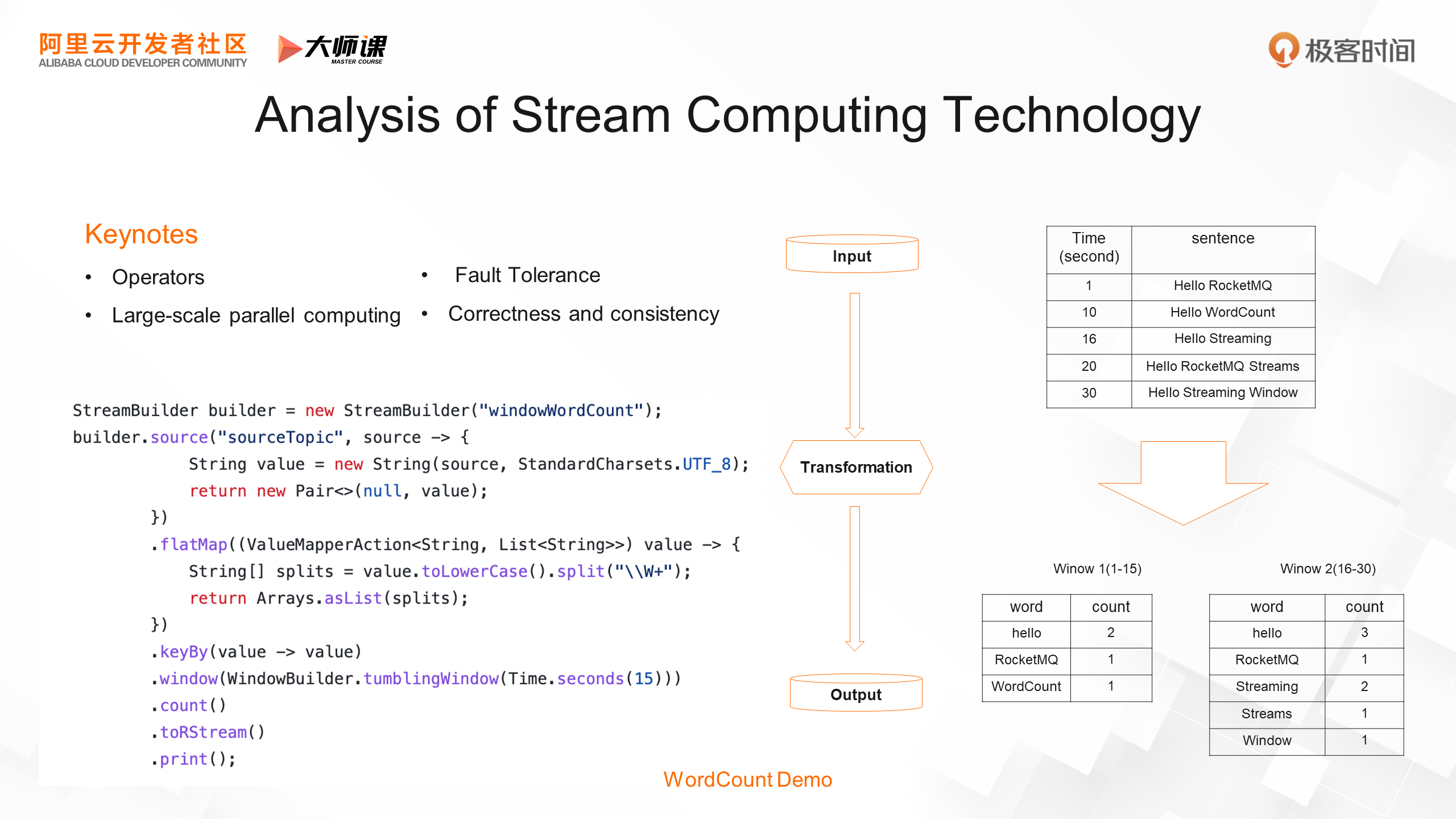

The data stream abstraction in stream computing consists of three steps: data input, data transformation, and data output. Let's use a simple example, WordCount, to illustrate the technical points of stream computing. As shown in the figure on the right, the data input is a stream of statements generated in real-time. We want to count the number of occurrences of each word according to the time window and output the statistical results by time. To achieve this, we only need to write a small amount of code based on the stream computing engine, as shown in the figure in the lower left corner. From this example, we can identify some of the capabilities necessary for a stream computing engine.
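
As a rough illustration of the WordCount logic described here, the following Python sketch counts words per time window. It is not the code from the figure: the fixed-size (tumbling) window, the 10-second window size, and the `(timestamp, sentence)` input shape are all assumptions made for this example.

```python
from collections import Counter, defaultdict

WINDOW_SECONDS = 10  # assumed window size for this illustration


def windowed_word_count(events, window_seconds=WINDOW_SECONDS):
    """events: iterable of (timestamp_seconds, sentence) pairs."""
    counts = defaultdict(Counter)
    for timestamp, sentence in events:
        # Assign the event to the fixed-size window that contains its timestamp.
        window_start = (timestamp // window_seconds) * window_seconds
        for word in sentence.lower().split():
            counts[window_start][word] += 1
    return {start: dict(counter) for start, counter in counts.items()}


events = [(1, "hello stream"), (4, "hello rocketmq"), (12, "hello again")]
result = windowed_word_count(events)
```

Here the first two sentences fall into the window starting at 0 and the third into the window starting at 10, so "hello" is counted twice in the first window and once in the second.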

First, a stream computing engine should support a wide range of reusable operators and leverage functional programming to improve development efficiency. Second, it needs fault tolerance: when a computing node fails, the engine must be able to resume computing, either by restarting the node or by having another node take over its work. Third, stream data is often large-scale, such as IoT sensor data, and exceeds the computing power of a single machine, so the engine must support large-scale parallel computing. Finally, the results of stream computing are often used for key business decisions, so the engine must ensure the correctness of the results even under large-scale parallelism, fault-tolerant switching, and resource scheduling.

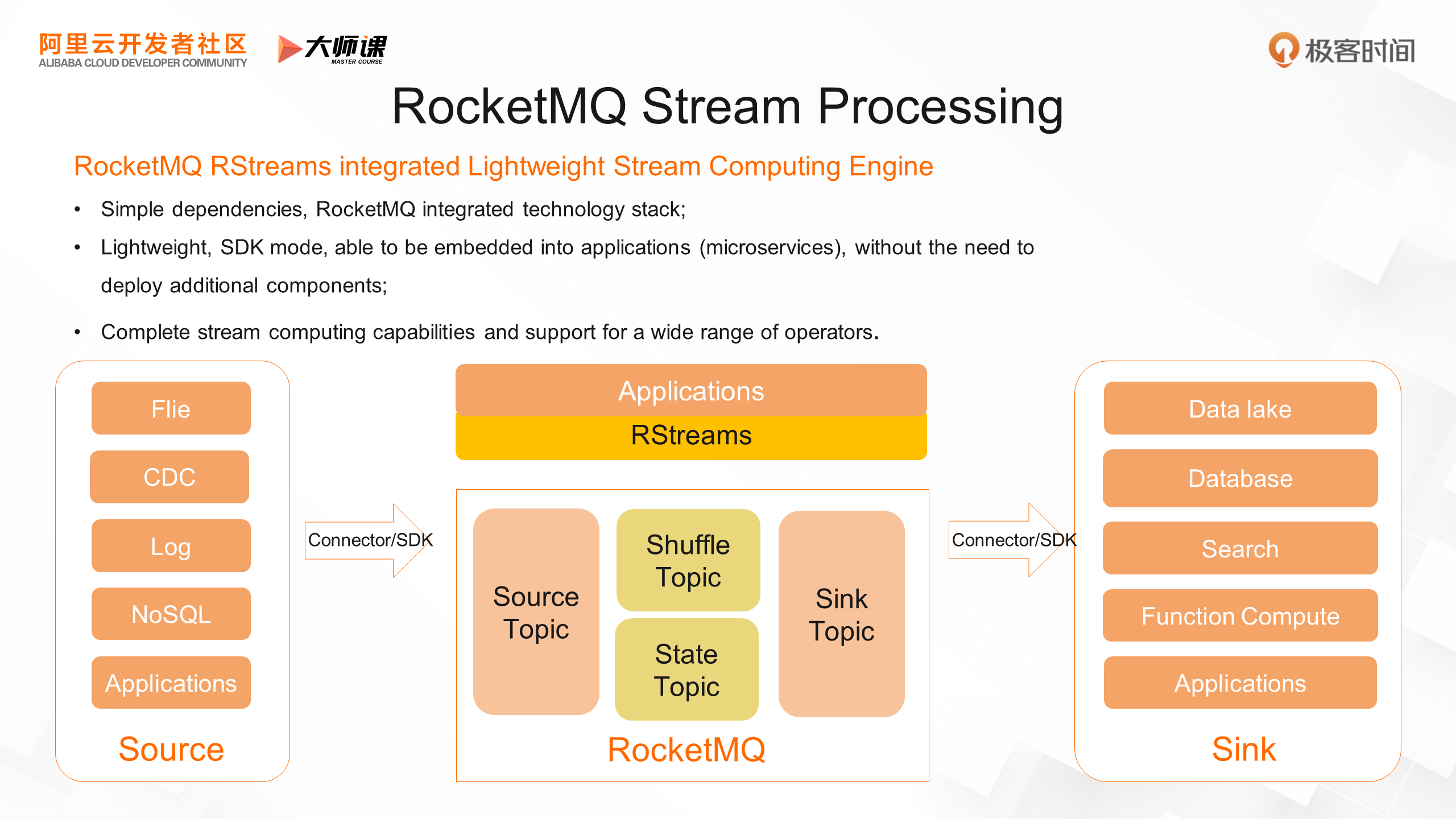

For stream processing scenarios, RocketMQ 5.0 provides the native lightweight stream computing engine RStreams, which has three key features.

First, RStreams only relies on RocketMQ's native technology stack to process data streams based on different types of topics in RocketMQ, making it suitable for lightweight output and edge computing scenarios.

Second, its usage is also lightweight, as it doesn't require building a stream computing platform, and users have no additional O&M burden. They can directly use the RStreams SDK to write stream computational logic and embed it into business applications (or microservices).

Finally, RStreams covers all operators in mainstream scenarios and has complete stream computing capabilities, including stateless operators like filtering and mapping, as well as stateful operators like aggregate computing and window computing.
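
The difference between stateless and stateful operators can be sketched with a small fluent wrapper in Python. The `Stream` class below is hypothetical and is not the RStreams SDK; it only shows that `filter`, `map`, and `flat_map` look at one record at a time, while the aggregation has to remember counts across records.

```python
class Stream:
    """Hypothetical fluent wrapper used for illustration; not the RStreams SDK."""

    def __init__(self, records):
        self._records = records

    def filter(self, predicate):
        # Stateless: each record is kept or dropped on its own.
        return Stream(r for r in self._records if predicate(r))

    def map(self, fn):
        # Stateless: each record is transformed independently.
        return Stream(fn(r) for r in self._records)

    def flat_map(self, fn):
        # Stateless: each record expands into zero or more records.
        return Stream(item for r in self._records for item in fn(r))

    def count_by_key(self):
        # Stateful: the running counts must be kept between records.
        counts = {}
        for key in self._records:
            counts[key] = counts.get(key, 0) + 1
        return counts


sentences = ["Hello stream", "", "hello RocketMQ"]
counts = (
    Stream(sentences)
    .filter(bool)          # drop empty sentences
    .flat_map(str.split)   # sentence -> words
    .map(str.lower)        # normalize case
    .count_by_key()
)
```

Only the last step needs state management, which is why the state mechanisms discussed later matter for aggregation and window operators but not for filtering or mapping.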

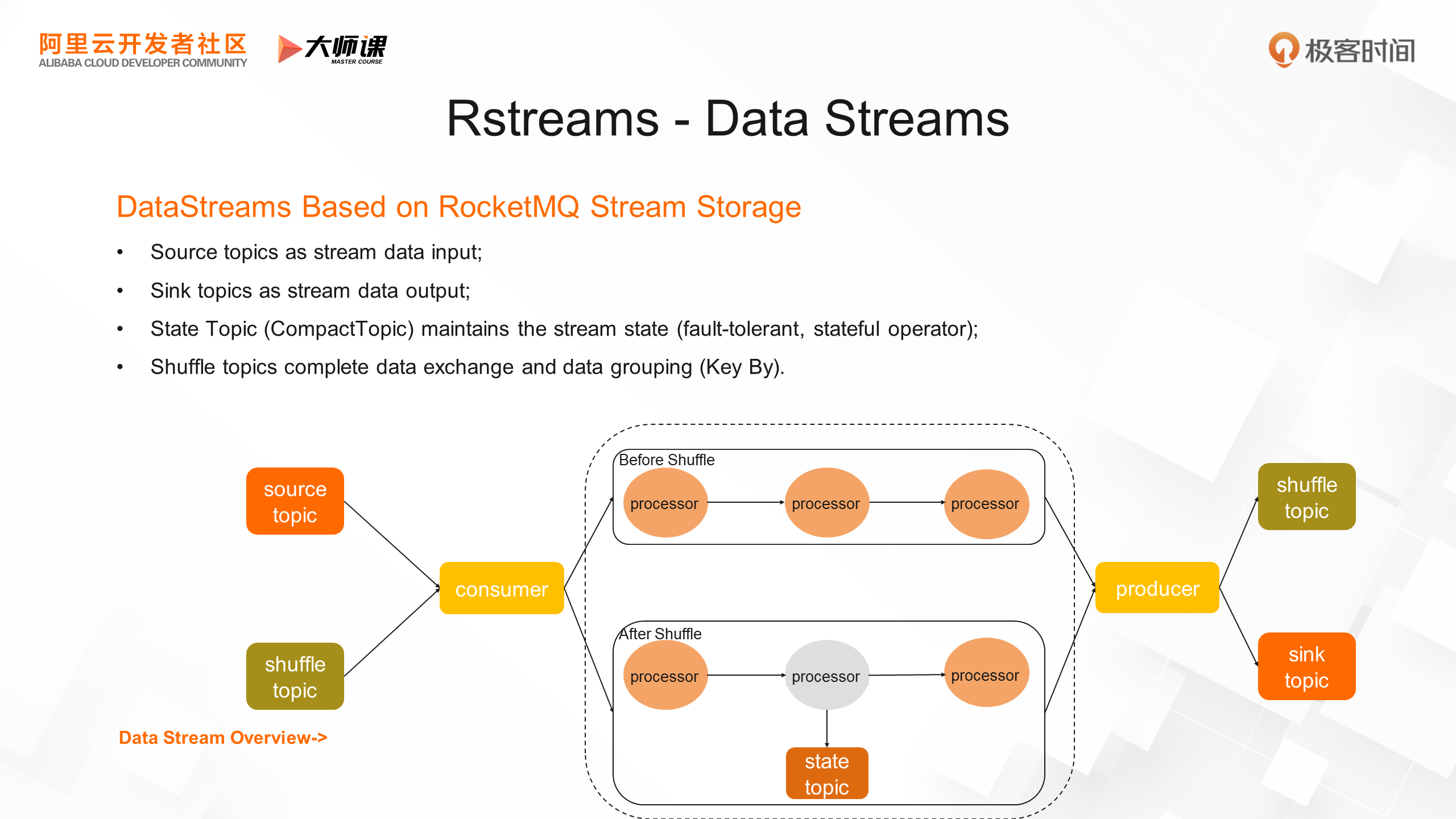

For a stream computing engine, understanding the entire data stream is crucial. From a user's perspective, stream computing appears to involve a single input, a transformation, and a single output. The actual implementation, however, chains many finer-grained operators, each with its own input, computation, and output, which results in a complex data stream graph. RStreams implements data streams based on RocketMQ's stream storage capability. The user-facing input and output correspond to the Source Topic and Sink Topic, respectively. The intermediate computing process maintains the state of stream computing in the State Topic (a CompactTopic). Data exchange may also be necessary during computing, for example when the KeyBy operator regroups words to calculate word frequency. RStreams implements this exchange with the Shuffle Topic.

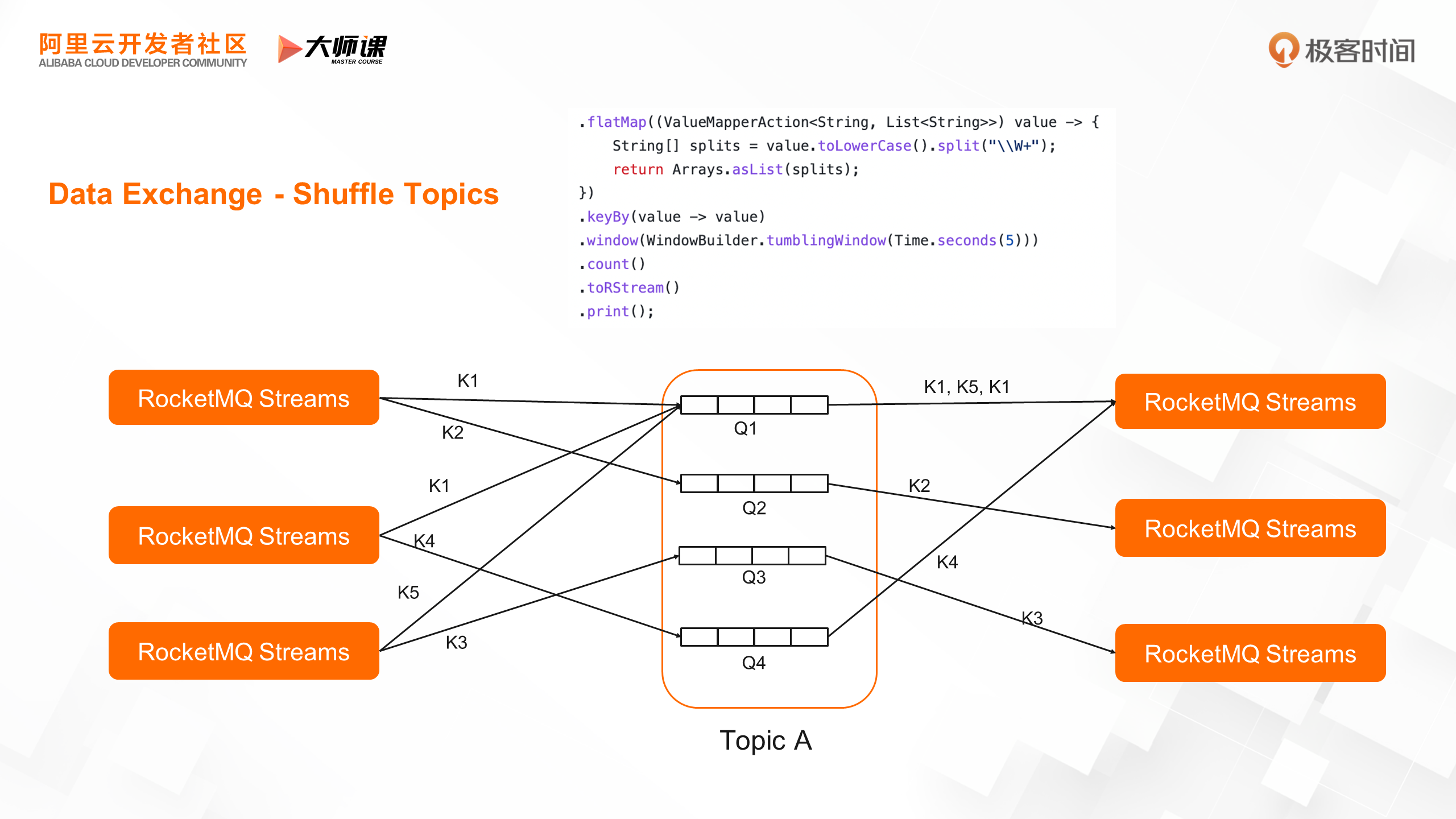

Let's take a closer look at the shuffle topic, using WordCount as an example. After each sentence is split into words, we need to count the frequency of each word, which requires sending all occurrences of the same word to the same computing instance. RStreams hashes each word as a key so that the same word always lands in the same queue of the shuffle topic, and RocketMQ's consumer load balancing algorithm ensures that each queue is consumed by a single computing instance. This is the data exchange mechanism of RStreams.
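
The key-to-queue routing can be sketched in a few lines of Python. The choice of CRC32 as the hash function and the queue count of 4 are assumptions for this example; the article does not say which hash RStreams uses.

```python
import zlib
from collections import Counter


def queue_for_key(key, num_queues):
    # A stable hash (CRC32 is an assumption here) so that every producer
    # maps the same word to the same queue.
    return zlib.crc32(key.encode("utf-8")) % num_queues


def shuffle(words, num_queues):
    queues = [[] for _ in range(num_queues)]
    for word in words:
        queues[queue_for_key(word, num_queues)].append(word)
    return queues


words = "to be or not to be".split()
queues = shuffle(words, num_queues=4)

# Each queue is consumed by one instance, which counts only what it receives.
per_queue_counts = [Counter(queue) for queue in queues]
```

Since every occurrence of a word ends up in one queue, the per-queue counts never need to be merged across instances: the count for a given word is complete on whichever instance owns its queue.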

Let's look at another key technical point of RStreams: state management.

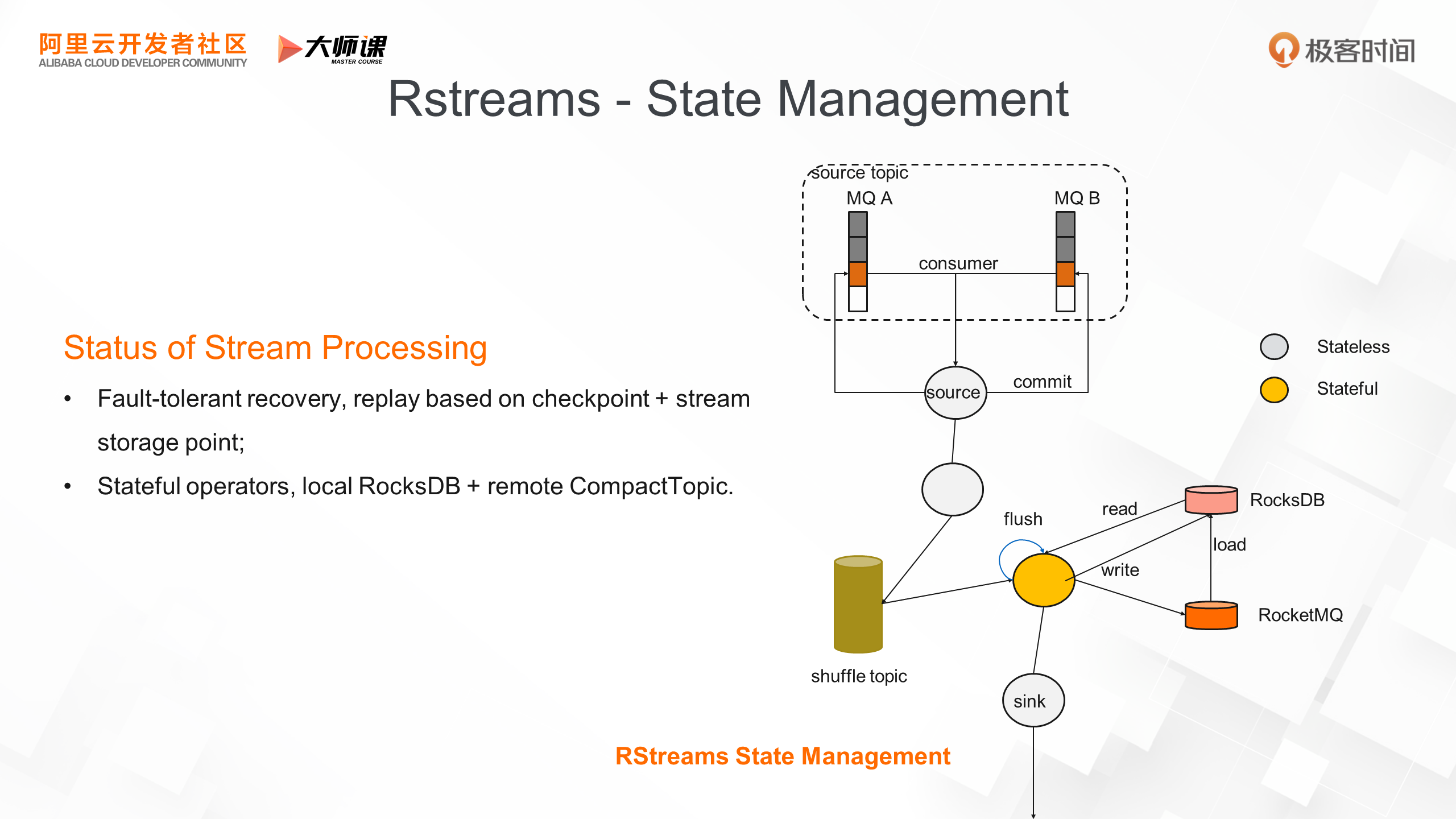

There are two scenarios for state management. One is the fault-tolerant scenario, where RStreams only needs to rely on RocketMQ's ability to replay a queue from a given offset to implement a checkpoint mechanism and restore the computing state.
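
The checkpoint-and-replay idea can be illustrated with a short Python simulation. The `process` function, its checkpoint interval, and the simulated crash are hypothetical and only show the principle: a checkpoint pairs the next offset to read with a snapshot of the state, and recovery replays the queue from that offset.

```python
def process(records, start_offset, state, checkpoint_every=2, fail_at=None):
    """Count records per key from start_offset. Returns (checkpoint, finished)."""
    checkpoint = (start_offset, dict(state))
    for offset in range(start_offset, len(records)):
        if offset == fail_at:
            # Simulated crash: work done since the last checkpoint is lost.
            return checkpoint, False
        key = records[offset]
        state[key] = state.get(key, 0) + 1
        if (offset + 1) % checkpoint_every == 0:
            # Save the next offset to read together with a state snapshot.
            checkpoint = (offset + 1, dict(state))
    return (len(records), dict(state)), True


records = ["a", "b", "a", "c", "a"]

# First run crashes before processing offset 3.
checkpoint, finished = process(records, 0, {}, fail_at=3)

# Recovery: restore the snapshot and replay the queue from the saved offset.
resume_offset, saved_state = checkpoint
final, finished_after_recovery = process(records, resume_offset, dict(saved_state))
```

The record at offset 2 is processed twice (once before the crash and once during replay), but because the state is rolled back to the snapshot, it is counted only once in the final result.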

The other scenario is the maintenance of intermediate computing results for stateful computing. RStreams uses RocksDB as a local state manager to provide high-performance, low-latency state reads and writes. It also maintains the remote state based on RocketMQ's CompactTopic and synchronizes with the local state regularly. In this way, when the disk of the local node is damaged or the computing node is rescheduled, the state can be recovered from a unified data storage center, thereby improving the reliability of the state data.
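
A simplified Python model of this local-plus-remote arrangement is shown below. The `StateStore` class is hypothetical: a dict stands in for RocksDB, and a list of `(key, value)` updates stands in for the CompactTopic. The `compact` function models the retention behavior of a compacted topic, which keeps only the latest value per key.

```python
class StateStore:
    """Local key-value state plus a changelog standing in for the remote topic."""

    def __init__(self, changelog=None):
        self.local = {}  # stands in for the local RocksDB instance
        self.changelog = changelog if changelog is not None else []
        self.pending = []  # updates not yet synchronized to the remote side

    def put(self, key, value):
        self.local[key] = value
        self.pending.append((key, value))

    def sync(self):
        # Periodic synchronization: flush local updates to the remote changelog.
        self.changelog.extend(self.pending)
        self.pending.clear()

    @classmethod
    def recover(cls, changelog):
        # Replaying the changelog in order leaves the latest value for each key.
        store = cls(changelog)
        for key, value in changelog:
            store.local[key] = value
        return store


def compact(changelog):
    # Keep only the most recent value per key, as a compacted topic would.
    latest = {}
    for key, value in changelog:
        latest[key] = value
    return list(latest.items())


store = StateStore()
store.put("hello", 1)
store.put("hello", 2)
store.put("stream", 1)
store.sync()
store.put("hello", 3)  # not yet synchronized when the node is lost

compacted = compact(store.changelog)
recovered = StateStore.recover(compacted)
```

The recovered store reflects the last synchronized state, so the update made after the final `sync` has to be recomputed by replaying the source queue from the matching checkpoint.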

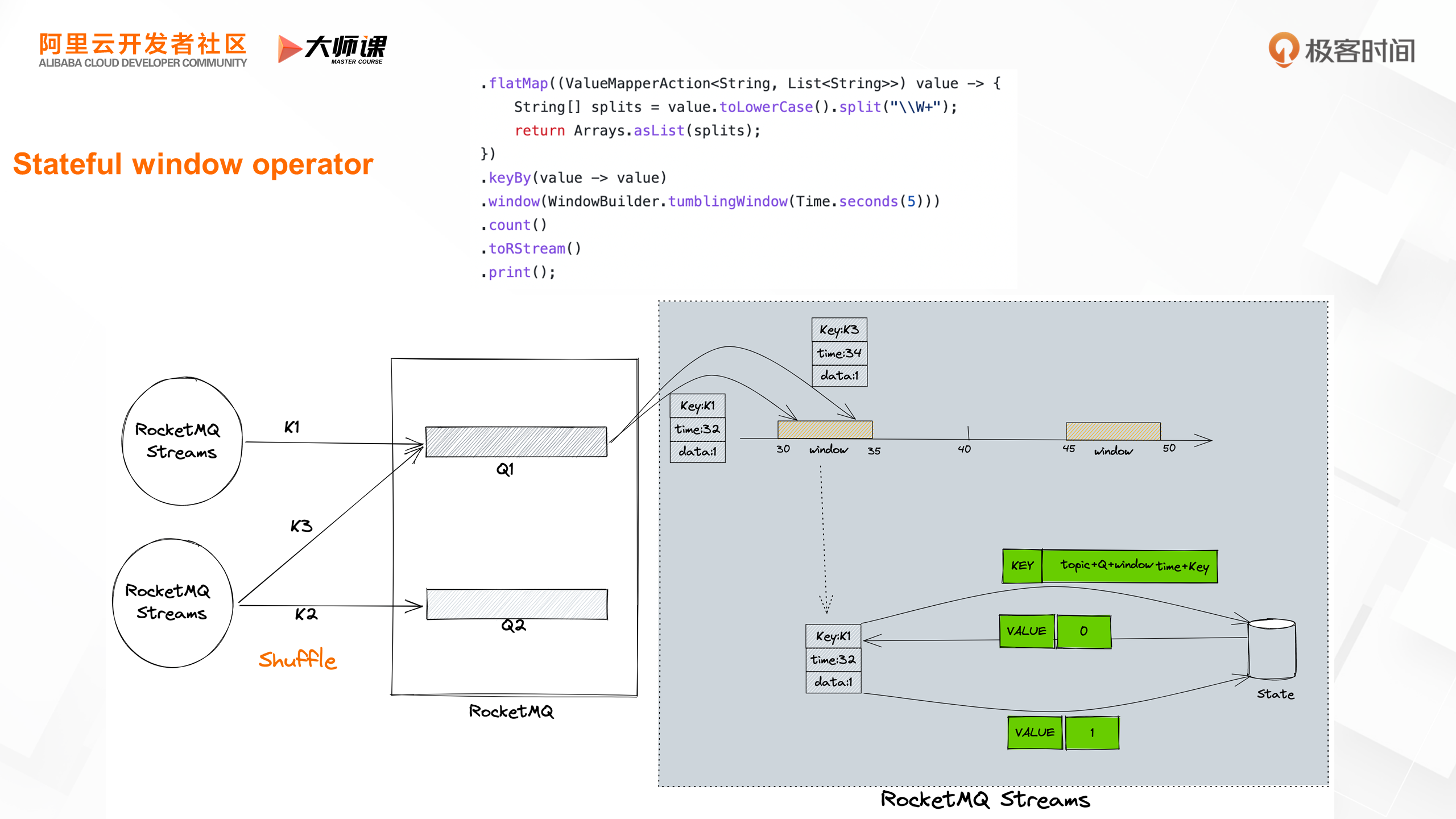

Let's use the window computing example in the WordCount case to illustrate how stateful operators in RStreams maintain their state.

In this example, we first group words using a shuffle topic, and then refresh the word frequency statistics according to the time window. The key used for state maintenance is topic + queue + window time + word, and the value is the count, which is periodically updated in RStreams' state storage. When a failure occurs and fault-tolerant recovery is performed, the data in the window doesn't need to be recalculated, which preserves the real-time performance of stream computing.
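
The composite state key can be sketched as follows in Python. The field names, the topic name `shuffle-topic`, and the 10-second window are assumptions for this illustration; the article only specifies the four components of the key.

```python
from collections import namedtuple

# Field names are illustrative; the article only names the four components.
StateKey = namedtuple("StateKey", ["topic", "queue_id", "window_start", "word"])

WINDOW_SECONDS = 10  # assumed window size


def add_word(state, topic, queue_id, timestamp, word):
    window_start = (timestamp // WINDOW_SECONDS) * WINDOW_SECONDS
    key = StateKey(topic, queue_id, window_start, word)
    state[key] = state.get(key, 0) + 1
    return key


state = {}
add_word(state, "shuffle-topic", 0, 3, "hello")
add_word(state, "shuffle-topic", 0, 7, "hello")

# Simulated failover: the new owner of queue 0 starts from the saved counts
# and continues the same window instead of recounting it from scratch.
restored = dict(state)
key = add_word(restored, "shuffle-topic", 0, 9, "hello")
```

Because the window start is part of the key, counts from different windows never collide, and a recovering node can pick up a partially filled window exactly where the saved state left off.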

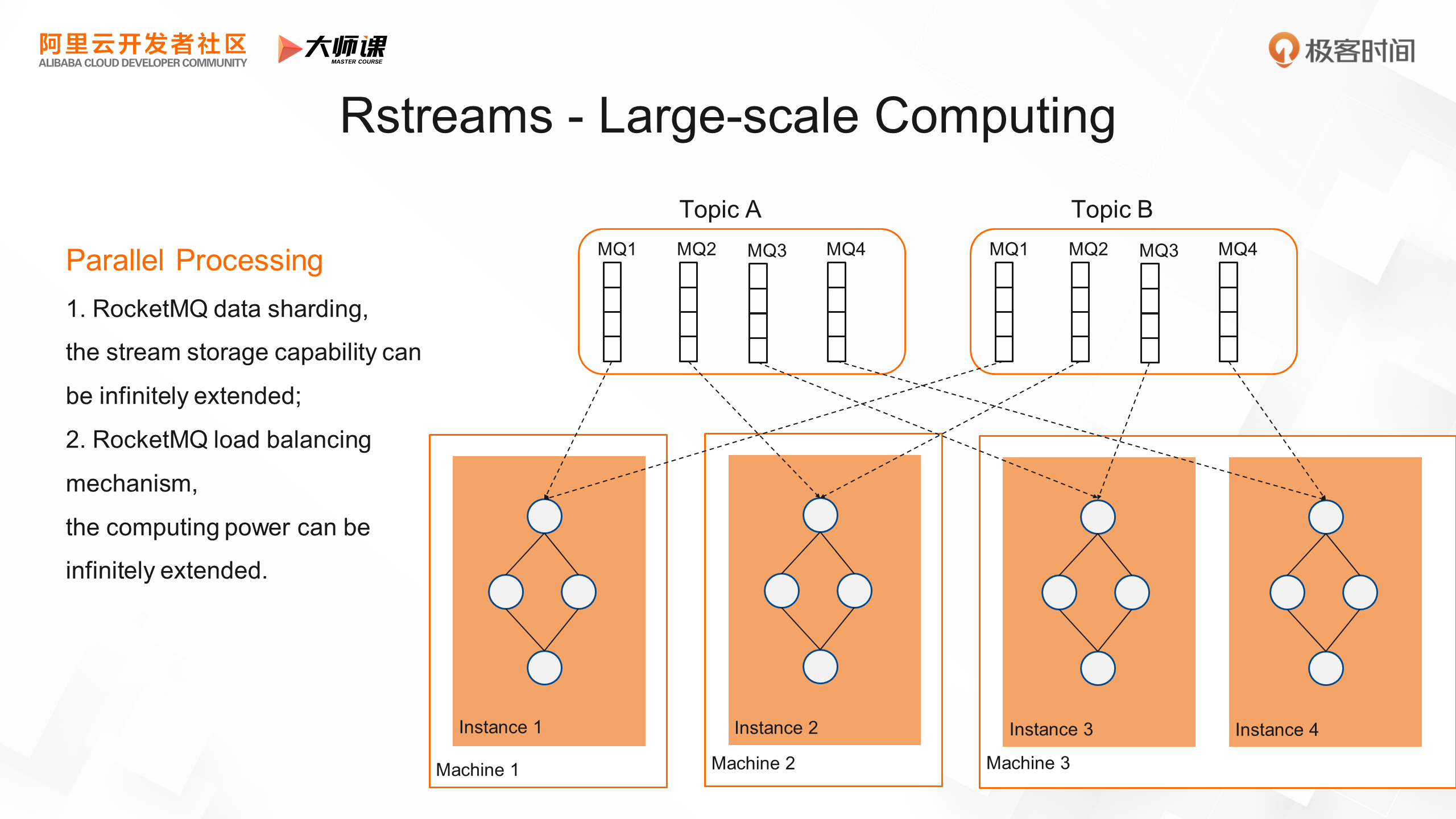

RStreams' large-scale parallel computing leverages RocketMQ's unlimited scalability and load balancing mechanism. For instance, data sharding based on RocketMQ enables unlimited scaling of stream storage nodes, while the sharding load consumption mode based on RocketMQ allows unlimited scaling of stream computing nodes.

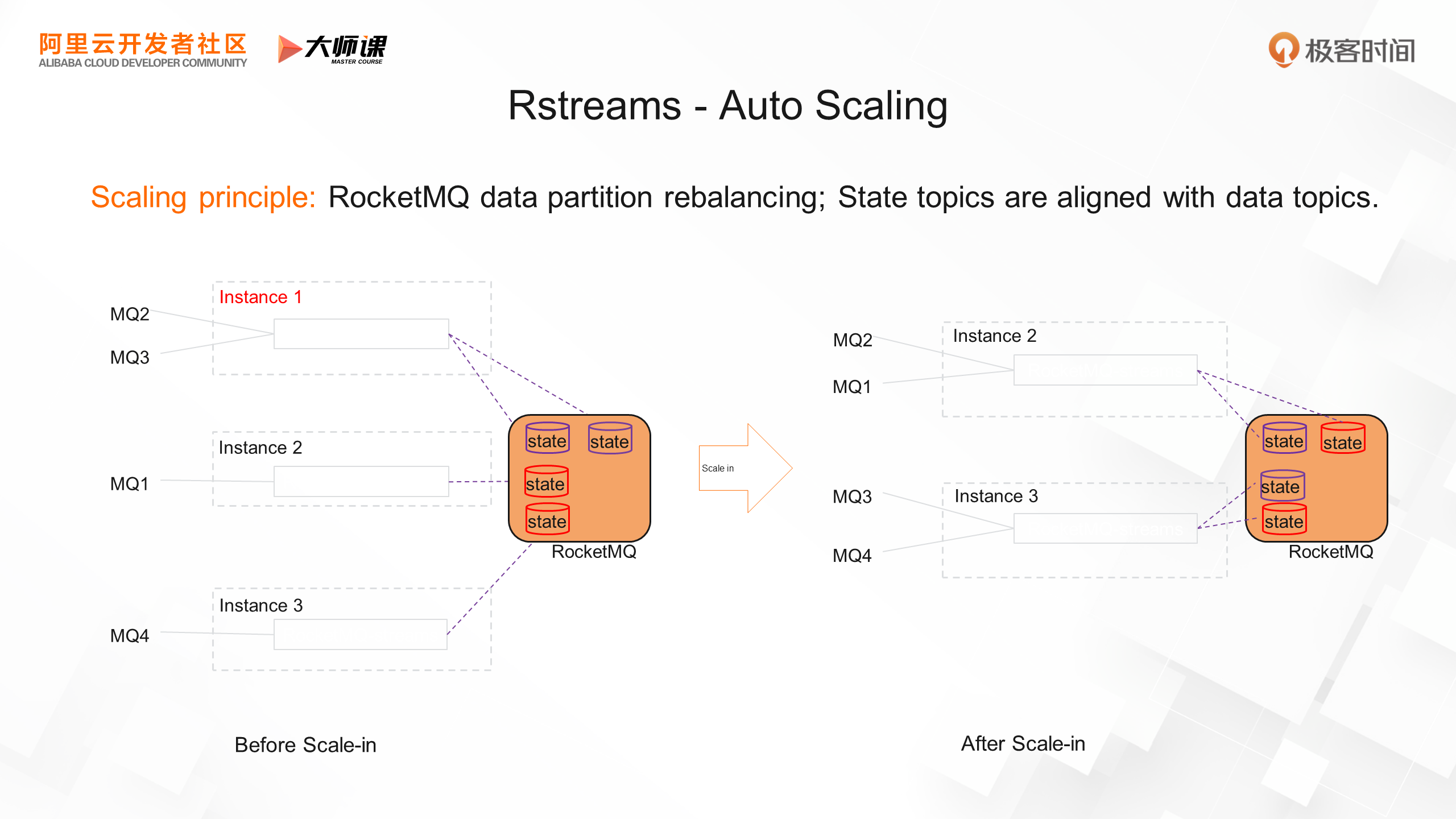

Let's take a closer look at the RStreams auto-scaling process. As mentioned in the previous course, the computing scheduling of RStreams mainly depends on the consumer queue load balancing mechanism of RocketMQ. Each data shard of the data source is read and computed by only one RStreams instance. When scale-in occurs, the data shards are reassigned among the remaining compute nodes based on the load-balancing algorithm. In addition, when stateful computing is involved, RStreams also relies on Compact topics to maintain the state. The queue distribution of the Compact topic should be consistent with that of the Source topic, so that a data shard and its corresponding state storage are loaded by the same RStreams computing node. For example, in the following figure, when scale-in occurs, the data and state of Source topic queue 2 are scheduled to RStreams instance 2, which restores the state from the checkpoint and resumes computing.
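
The reassignment during scale-in can be modeled with a small Python function. The even, contiguous split used here is an assumption chosen for illustration, and the instance names are hypothetical; the point is that applying the same deterministic assignment to the source queues and the state queues keeps each shard and its state on the same instance.

```python
def assign_queues(queue_ids, instance_ids):
    """Split queues evenly across instances; each queue has exactly one owner."""
    queues = sorted(queue_ids)
    instances = sorted(instance_ids)
    assignment = {}
    base, extra = divmod(len(queues), len(instances))
    start = 0
    for index, instance in enumerate(instances):
        # The first `extra` instances take one additional queue each.
        size = base + (1 if index < extra else 0)
        assignment[instance] = queues[start:start + size]
        start += size
    return assignment


source_queues = [0, 1, 2]
state_queues = [0, 1, 2]  # the state topic uses the same queue layout

before = assign_queues(source_queues, ["instance-1", "instance-2", "instance-3"])

# Scale-in: instance-3 is removed and its queue moves to a remaining instance.
after = assign_queues(source_queues, ["instance-1", "instance-2"])
state_after = assign_queues(state_queues, ["instance-1", "instance-2"])
```

After scale-in, every queue still has exactly one owner, and the source and state assignments are identical, so the instance that takes over a queue also takes over the state it needs to resume from the checkpoint.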

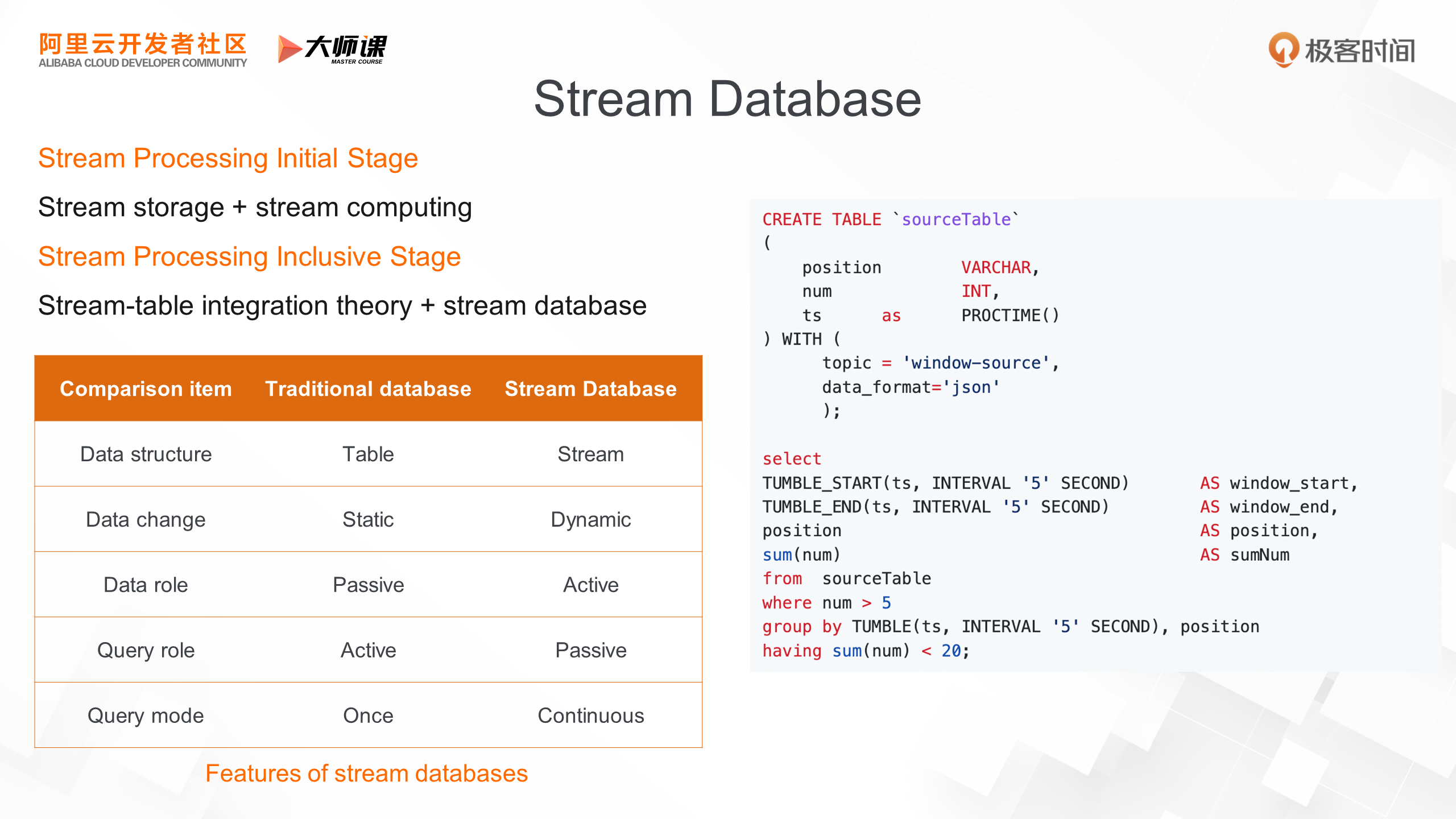

Let's move on to the third part, RSQLDB, the stream database of RocketMQ. First, let's learn about what a stream database is. Stream databases have evolved as stream processing technology has matured and become more inclusive. In the early stages of stream processing, stream storage and stream computing were separate, mainly implemented through SDK API programming. Now, in the popularization stage of stream processing, the technology has been further simplified, and the stream-table integration theory has matured, allowing users to complete stream processing of business data based on traditional database concepts and declarative SQL statements, reducing the learning difficulty and improving efficiency.

What's the difference between stream databases and traditional databases? Let's take a look at the following table. Traditional databases operate on tables: an external action initiates a one-time query over static data, so the query is active and the data is passive. In contrast, stream databases operate on continuous streams of data, and newly arriving data triggers continuous queries. Here the data is dynamic and active, while the query is passive.

In typical use of a stream database, a single SQL statement can express stream filtering, window computing, aggregation computing, and other capabilities.
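
The contrast between a one-time query and a continuous query can be sketched in Python, independent of any SQL syntax. Both function names and the sample records are hypothetical and only illustrate the two execution models.

```python
def one_time_query(table, predicate):
    # Traditional database: the query runs once over the data that exists now.
    return [row for row in table if predicate(row)]


def continuous_query(stream, predicate):
    # Stream database: the query is registered once and emits a result
    # each time a newly arriving record matches.
    for record in stream:
        if predicate(record):
            yield record


def is_large(order):
    return order["amount"] >= 100


table = [{"id": 1, "amount": 50}, {"id": 2, "amount": 150}]
snapshot_result = one_time_query(table, is_large)

# An iterator stands in for an unbounded stream of arriving records.
arrivals = iter([{"id": 3, "amount": 200}, {"id": 4, "amount": 20}, {"id": 5, "amount": 300}])
emitted = [order["id"] for order in continuous_query(arrivals, is_large)]
```

The one-time query returns a fixed result for the rows present when it ran, while the continuous query keeps producing output for as long as records arrive.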

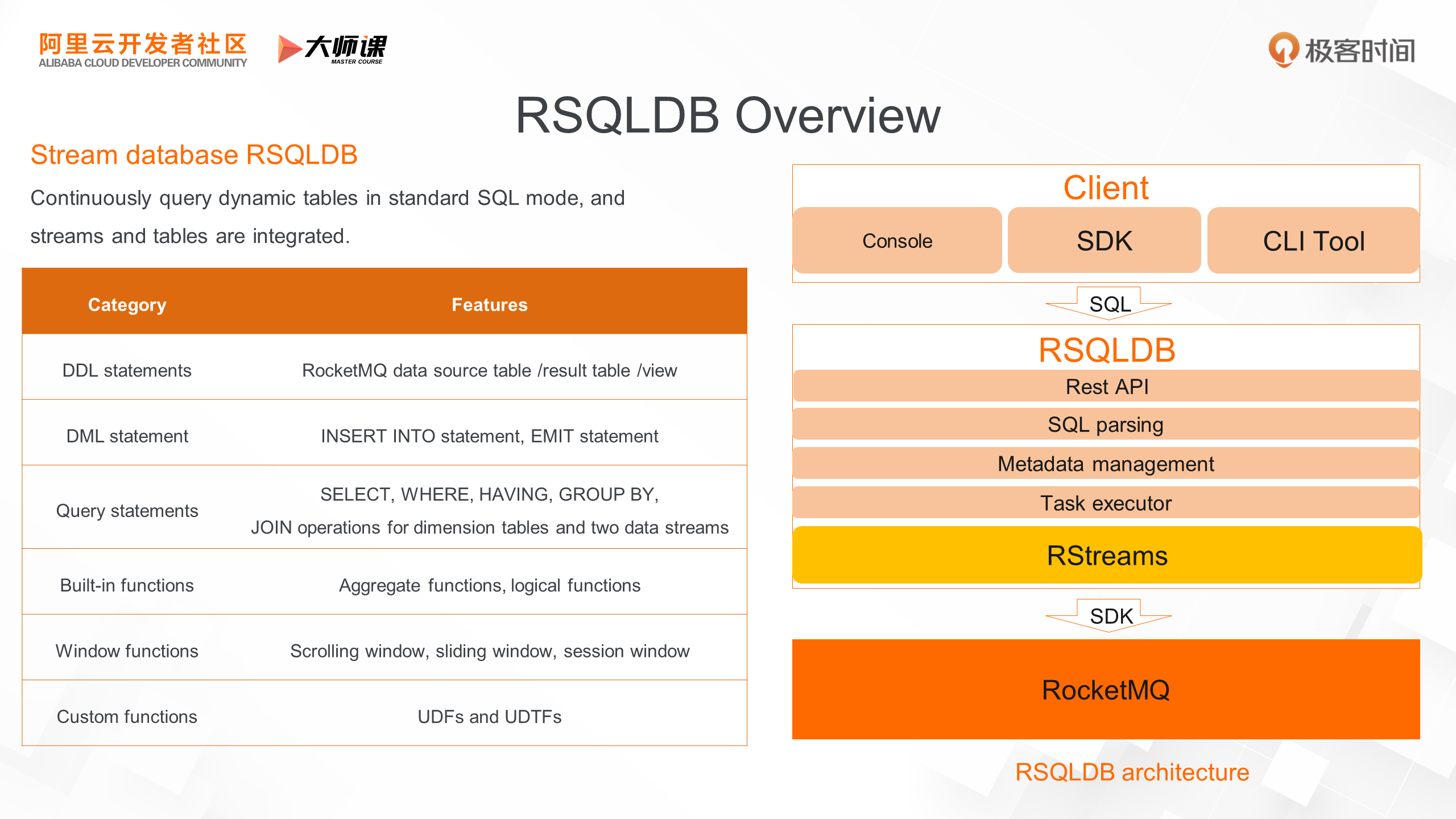

Following this trend, RocketMQ 5.0 launched RSQLDB, a stream database that uses standard SQL to continuously query dynamic tables. It supports a large number of traditional database usage patterns, including DDL, DML, queries, and various functions. The following figure shows the architecture of RSQLDB, which is also an integrated technology based on RocketMQ. The underlying layer consists of the stream storage of RocketMQ and the atomic stream computing capabilities of RStreams. On top of these capabilities, an SQL parser converts user SQL into a physical stream processing plan. The top layer provides multiple types of clients, including SDKs, consoles, and command line tools.

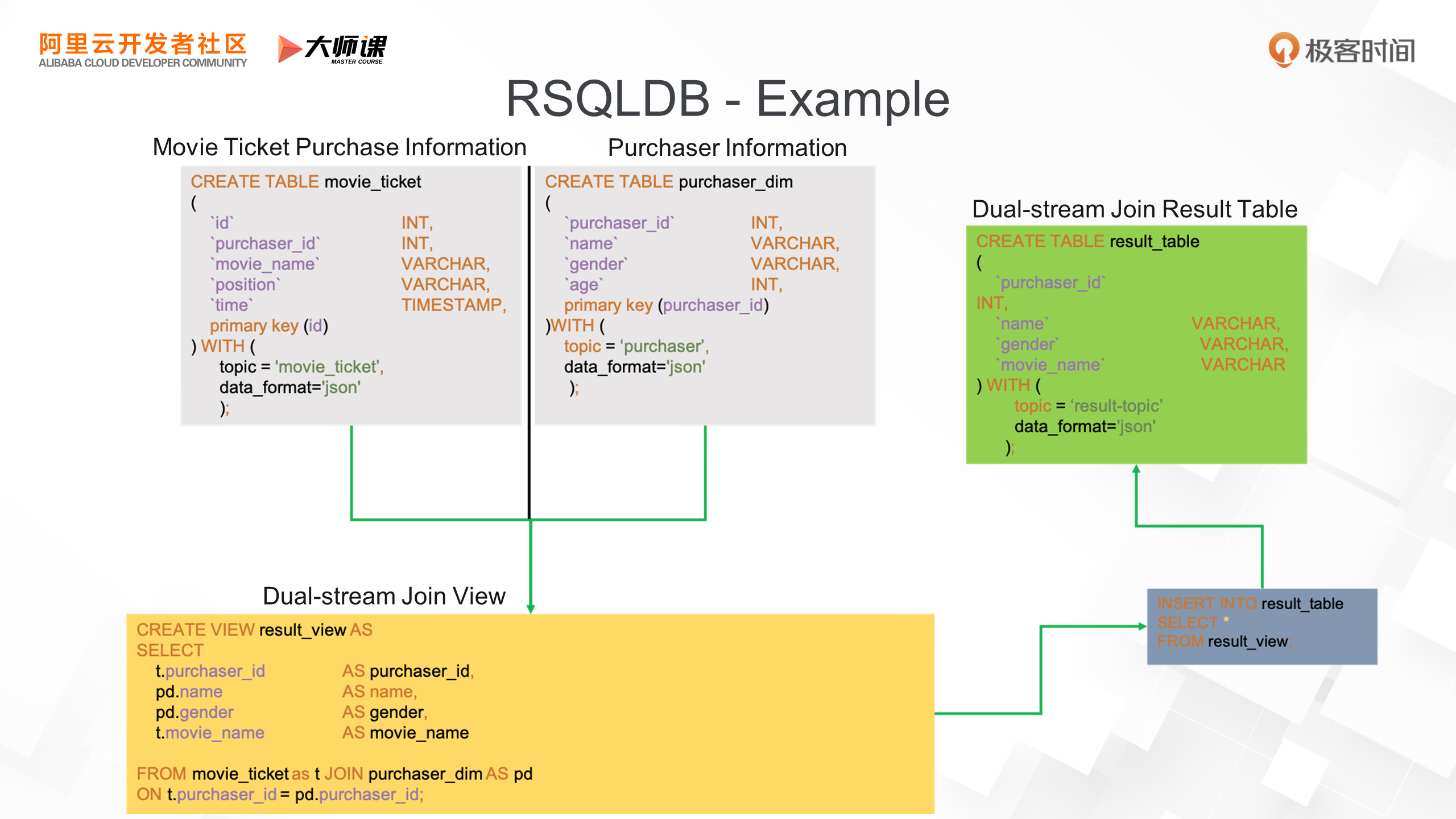

Finally, let's consider a typical example of RSQLDB. In this case, we want to merge the movie ticket purchase information stream and the buyer information stream, ultimately outputting a complete stream of buyer information plus movie ticket information. To do this, we use RSQLDB to create two tables and convert the topic data stream into table abstractions. Next, we create a view that merges the two streams based on the buyer ID. Finally, we create a result table and write the merged view to this output table.

In this way, we can complete a dual-stream join stream processing task using simple declarative SQL statements.
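
To show what a dual-stream join does underneath the SQL, here is a minimal Python sketch of a symmetric hash join on buyer ID. It is not RSQLDB syntax or its implementation. The stream names, the record fields (`buyer_id`, `name`, `movie`), and the sample data are hypothetical, and the sketch keeps all records in memory, whereas a real engine would bound this state, for example with windows.

```python
from collections import defaultdict


def join_streams(events):
    """events: iterable of (stream_name, record) pairs in arrival order.

    Each record is a dict with a 'buyer_id' field (a hypothetical schema).
    """
    buyers = defaultdict(list)   # buyer records seen so far, by buyer_id
    tickets = defaultdict(list)  # ticket records seen so far, by buyer_id
    for stream_name, record in events:
        buyer_id = record["buyer_id"]
        if stream_name == "buyers":
            buyers[buyer_id].append(record)
            # Match the new buyer record against tickets that arrived earlier.
            for ticket in tickets[buyer_id]:
                yield {**record, **ticket}
        elif stream_name == "tickets":
            tickets[buyer_id].append(record)
            # Match the new ticket against buyer records that arrived earlier.
            for buyer in buyers[buyer_id]:
                yield {**buyer, **record}


events = [
    ("tickets", {"buyer_id": 1, "movie": "Hero"}),
    ("buyers", {"buyer_id": 1, "name": "Ann"}),
    ("tickets", {"buyer_id": 1, "movie": "Ip Man"}),
    ("tickets", {"buyer_id": 2, "movie": "Up"}),
]
joined = list(join_streams(events))
```

Each side is matched against what the other side has already delivered, so the result does not depend on which stream arrives first. The ticket for buyer 2 produces no output because no buyer record with that ID has arrived.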

In this course, we systematically explored stream processing, covering data ingestion, storage, and computing. The advantage of stream processing is that it enhances the real-time response capability of digital businesses. We also learned about RStreams, RocketMQ's native lightweight stream computing framework, which is built on RocketMQ's stream storage and makes integrated stream processing possible. Finally, we saw how RSQLDB further integrates stream storage and stream computing, providing a stream database usage model that reduces the difficulty of using stream processing.

In the next course, we will explore the IoT technology of RocketMQ 5.0.
