By Liu Zhendong (Apache RocketMQ PMC Member)

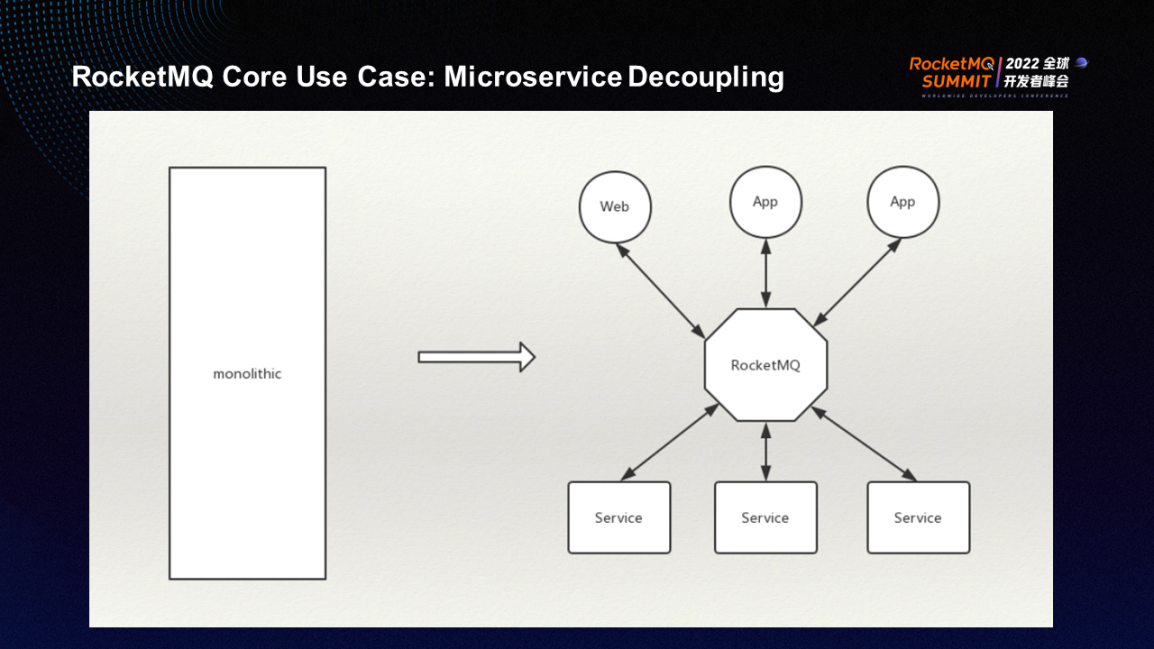

RocketMQ was created to solve the problem of microservice decoupling, that is, splitting large traditional services into distributed microservices.

After the split, a new problem arises: services need to communicate with each other to work together as a complete system. There are two types of communication: RPC (synchronous communication) and asynchronous communication (for example, messaging through RocketMQ).

The widespread use of RocketMQ shows that asynchronous communication has major advantages, the most notable of which is asynchronous decoupling. Decoupling means that one service does not need to know that another service exists. For example, when service A is developed, other services may need its data, but service A does not need to know about them or depend on their releases. Adding new services does not affect service A either, so the teams themselves are decoupled: each team owns a microservice, and other teams do not need to know about it. They only need to communicate asynchronously through RocketMQ using a pre-agreed data format. This approach has greatly improved development productivity and, as a natural result, driven the widespread adoption of RocketMQ.

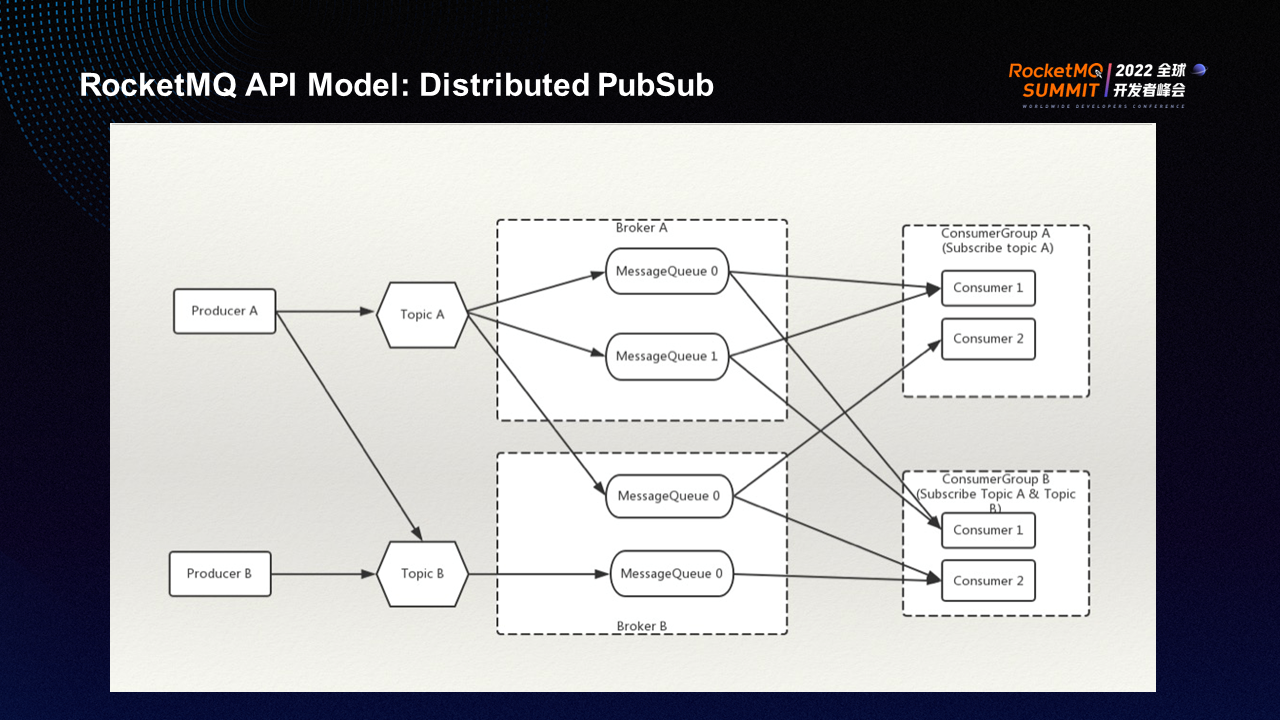

In asynchronous decoupling, some components produce messages while others consume them. RocketMQ's API model is an abstraction of this asynchronous decoupling process. Its two ends are the two most fundamental concepts in RocketMQ: the producer (which sends messages or data) and the consumer (which consumes messages).

In addition, the topic is an important concept in the API model. Because of asynchronous decoupling, data sent by the producer does not travel directly to the consumer. There is an abstraction layer in between, called the topic, which acts as a logical address. A topic is like a warehouse: when data is sent to a topic, the topic stores it so that other components can retrieve it whenever they need it.

RocketMQ is distributed message middleware, so a topic is essentially a logical concept. The corresponding physical concept is the message queue, which resides on a broker. A topic can have many message queues spread across many brokers, so it can scale out horizontally as brokers are added. This is a basic feature of the topic.

Another important feature of a topic is that the messages it receives are immutable, which allows them to be read repeatedly. With consumer groups, different groups read the same messages without affecting one another: data in a topic does not disappear after a consumer reads it, so a message sent to one place can be consumed by many consumers. For example, if an order team in an organization sends messages to an order topic, every other team in the organization can read those messages directly, and one team's reading does not affect any other team's reading. Reads are therefore independent.
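The read independence described above can be illustrated with a toy Python model, assuming each consumer group simply tracks its own read position over an append-only list. The names `Topic`, `send`, and `poll` are hypothetical and are not the RocketMQ client API; a real broker also splits a topic into message queues and tracks offsets per group and per queue.

```python
from collections import defaultdict


class Topic:
    """Toy topic: an append-only log that several consumer groups read independently."""

    def __init__(self):
        self._log = []                    # messages are only appended, never modified or removed
        self._offsets = defaultdict(int)  # next offset to read, kept separately for each consumer group

    def send(self, message):
        """Append a message and return the offset assigned to it."""
        self._log.append(message)
        return len(self._log) - 1

    def poll(self, group, max_messages=10):
        """Return up to max_messages unread messages for `group`, advancing only that group's offset."""
        start = self._offsets[group]
        batch = self._log[start:start + max_messages]
        self._offsets[group] = start + len(batch)
        return batch


orders = Topic()
orders.send("order-1")
orders.send("order-2")
print(orders.poll("billing"))   # ['order-1', 'order-2']
print(orders.poll("shipping"))  # ['order-1', 'order-2'], unaffected by billing's read
print(orders.poll("billing"))   # [] because billing has already read everything
```

Because reading never removes data, adding a new consumer group costs only one more offset entry.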

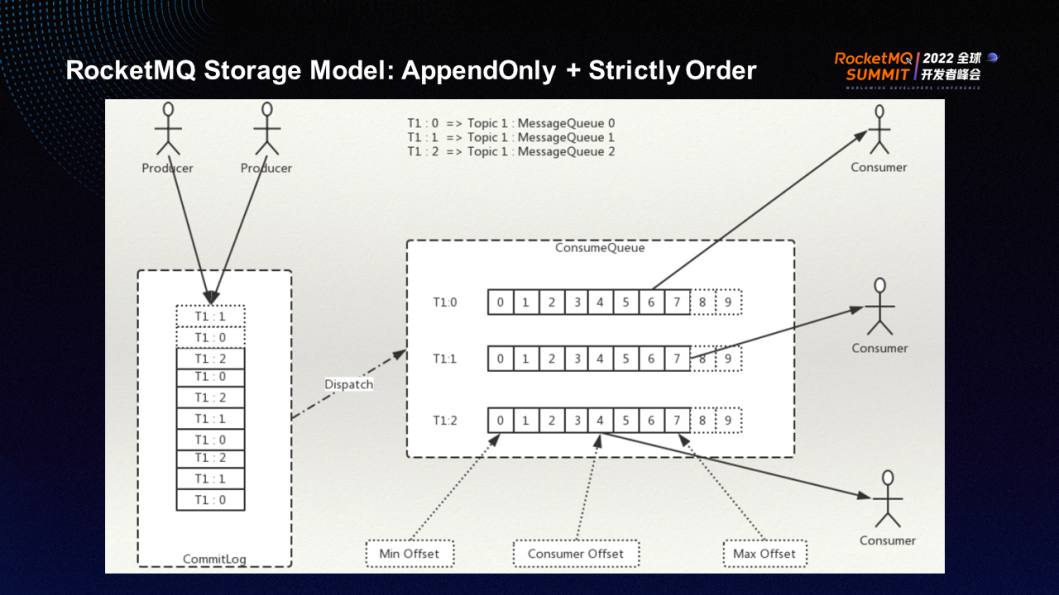

An important feature of MQ is asynchronous decoupling, which often goes hand in hand with load shifting (peak shaving) in the ultra-high-traffic scenarios common on the Internet. Load shifting requires data persistence. MQ acts as a storage engine that temporarily holds data from senders: if a consumer cannot process the data right away, the data accumulates in MQ and is read once the consumer has enough capacity.
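A minimal Python sketch of load shifting, assuming a fixed consumer capacity per time step. The function name `simulate_load_shifting` and the numbers in the example are illustrative only.

```python
def simulate_load_shifting(arrivals, capacity):
    """Return the backlog held in MQ after each time step.

    arrivals[i] is the number of messages produced in step i; the consumer can
    process at most `capacity` messages per step. The producer is never blocked:
    anything the consumer cannot handle yet simply waits in MQ.
    """
    backlog = 0
    history = []
    for arrived in arrivals:
        backlog += arrived                  # producer writes are persisted in MQ
        backlog -= min(backlog, capacity)   # consumer drains only what it can handle
        history.append(backlog)
    return history


print(simulate_load_shifting([50, 50, 0, 0, 0], capacity=30))  # [20, 40, 10, 0, 0]
```

The burst of 100 messages is absorbed as backlog and drained over the following steps, while the producer is never throttled.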

Persistence also strengthens asynchronous decoupling: even if the consumer is offline, the producer is unaffected. Persistence is therefore an essential capability of MQ, and because messages are written and read in order, the MQ engine is a sequential storage engine.

RocketMQ's design differs slightly from that of other message engines. It stores the messages of all topics centrally and builds separate indexes for each topic and queue. This design is optimized specifically for microservice scenarios: it supports synchronous disk flushing and keeps write latency stable even when there are many topics. This is one of the key features that make RocketMQ competitive with other message engines.
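As a rough illustration of this centralized layout (in RocketMQ the shared log is the CommitLog and the per-queue indexes are the ConsumeQueue files), the toy model below appends every message to one shared log and records only its position and size in a per-(topic, queue) index. It is a simplification: there are no files, flushing, or tags, and `CentralizedStore` is a hypothetical name.

```python
from collections import defaultdict


class CentralizedStore:
    """Toy model: one shared append-only log plus a small index per (topic, queue)."""

    def __init__(self):
        self.commit_log = bytearray()   # messages of all topics, in arrival order
        self.index = defaultdict(list)  # (topic, queue_id) -> [(position, size), ...]

    def append(self, topic, queue_id, body: bytes):
        """Write the body sequentially to the shared log and return its offset within the queue."""
        position = len(self.commit_log)
        self.commit_log += body         # always an append, however many topics exist
        entries = self.index[(topic, queue_id)]
        entries.append((position, len(body)))
        return len(entries) - 1

    def read(self, topic, queue_id, offset):
        """Look up the index entry, then read the bytes from the shared log."""
        position, size = self.index[(topic, queue_id)][offset]
        return bytes(self.commit_log[position:position + size])


store = CentralizedStore()
store.append("orders", 0, b"o1")
store.append("payments", 1, b"p1")
store.append("orders", 0, b"o2")
print(bytes(store.commit_log))     # b'o1p1o2'
print(store.read("orders", 0, 1))  # b'o2'
```

Because every write lands at the end of the same log, adding topics adds index entries rather than new random-write hot spots, which is the intuition behind the stable write latency described above.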

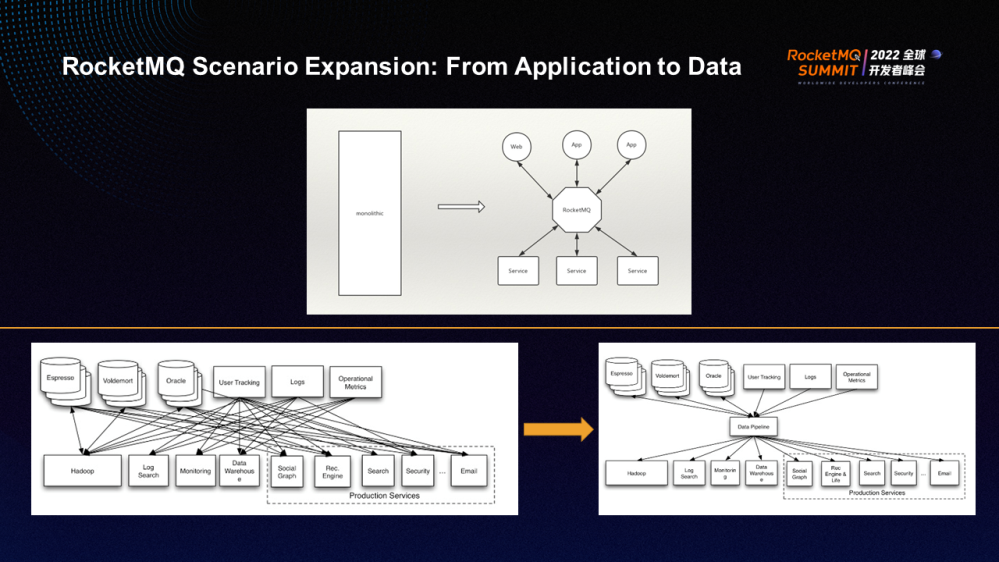

Stream processing was first applied to user behavior analysis, which infers user preferences from user behavior logs. Services in recommendation systems, such as search, recommendation, and advertising, are the most typical stream processing scenarios.

The first step in a stream processing scenario is to centrally import user behavior data generated by various systems (including logs and database records) into analysis engines. This process involves many data sources and many analysis engines, both offline and real-time.

An MQ-like component is introduced to reduce this complexity. Data sources no longer interact directly with data consumers; instead, they send data to MQ first. As a result, the connection complexity of the whole system drops from O(N²) to O(N).
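The complexity claim can be made concrete with a simple count, assuming N_src data sources and N_sink analysis engines where every source must reach every engine:

```latex
\begin{aligned}
C_{\text{direct}} &= N_{\text{src}} \cdot N_{\text{sink}}, & C_{\text{MQ}} &= N_{\text{src}} + N_{\text{sink}} \\
N_{\text{src}} = N_{\text{sink}} = N \;\Rightarrow\; C_{\text{direct}} &= N^{2} = O(N^{2}), & C_{\text{MQ}} &= 2N = O(N)
\end{aligned}
```

For example, 5 sources and 4 engines need 5 × 4 = 20 direct connections, but only 5 + 4 = 9 connections through MQ.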

Looking at the role MQ plays and the IT architecture it requires from the perspective of user behavior analysis or stream processing, we find that it closely resembles the microservice decoupling architecture. Concepts on the two sides map to each other directly, such as consumer, producer, topic, message queue, and shard. Therefore, judged by its features alone, RocketMQ can already support stream processing scenarios.

Currently, many companies use RocketMQ for stream processing. However, RocketMQ can be further optimized for solving stream processing problems.

There are three characteristics of the stream processing scenario:

All in all, throughput becomes more important in the stream processing scenario.

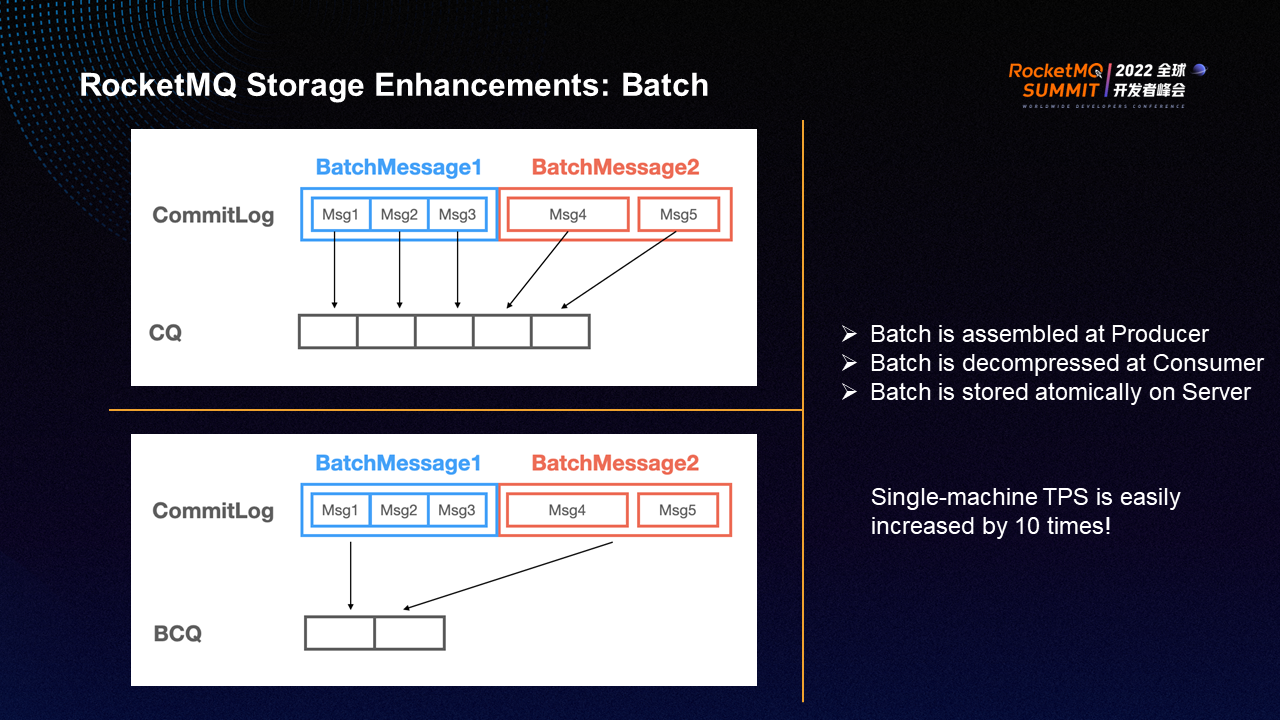

When RocketMQ was originally designed for microservice decoupling, it was built around individual records, so its throughput was not high. RocketMQ 5.0 introduces Batch, a new feature for improving throughput.

In traditional RocketMQ, each message is stored as one record with one index entry. This design keeps latency stable, but it limits throughput: the communication layer handles too many RPCs, which consumes too much CPU. RocketMQ 5.0 launched the Batch feature to address this issue.

The basic logic of Batch is as follows: the client automatically combines multiple messages by topic and queue and sends them to the server as a single request. The server generally does not decompress the received batch; it stores it directly. The consumer fetches one batch at a time, brings all of its messages to the local client, and decompresses them there. If each batch contains ten messages, TPS can easily increase tenfold: without Batch, each message requires its own remote request, whereas with Batch, one remote request carries ten messages.
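The Batch flow can be sketched in Python as a conceptual model, not RocketMQ's wire format: JSON plus `zlib` stand in for the real encoding and compression, and `build_batches`, `Broker`, and `consume` are hypothetical names.

```python
import json
import zlib
from collections import defaultdict


def build_batches(messages):
    """Client side: group messages by (topic, queue) and pack each group into one compressed payload.

    Each message is a dict with the hypothetical keys "topic", "queue", and "body".
    """
    groups = defaultdict(list)
    for msg in messages:
        groups[(msg["topic"], msg["queue"])].append(msg["body"])
    # One payload (and therefore one request) per group instead of one per message.
    return {
        key: zlib.compress(json.dumps(bodies).encode("utf-8"))
        for key, bodies in groups.items()
    }


class Broker:
    """Server side: stores each batch as an opaque blob and never decompresses it."""

    def __init__(self):
        self.stored = defaultdict(list)  # (topic, queue) -> list of compressed batches
        self.requests = 0

    def receive(self, key, payload):
        self.requests += 1
        self.stored[key].append(payload)  # no decoding here, so server CPU cost stays low


def consume(payload):
    """Consumer side: decompress one fetched batch locally into individual message bodies."""
    return json.loads(zlib.decompress(payload).decode("utf-8"))


messages = [{"topic": "clicks", "queue": 0, "body": f"event-{i}"} for i in range(10)]
broker = Broker()
for key, payload in build_batches(messages).items():
    broker.receive(key, payload)
print(broker.requests)                               # 1 request instead of 10
print(consume(broker.stored[("clicks", 0)][0])[:3])  # ['event-0', 'event-1', 'event-2']
```

The design choice mirrors the text: encoding work moves to the clients, and the broker only appends opaque payloads.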

Because the server does not decompress batches, server CPU consumption rises very little. Merging and decompression are delegated to the clients, so server resources do not easily become a bottleneck, and TPS can be improved easily.

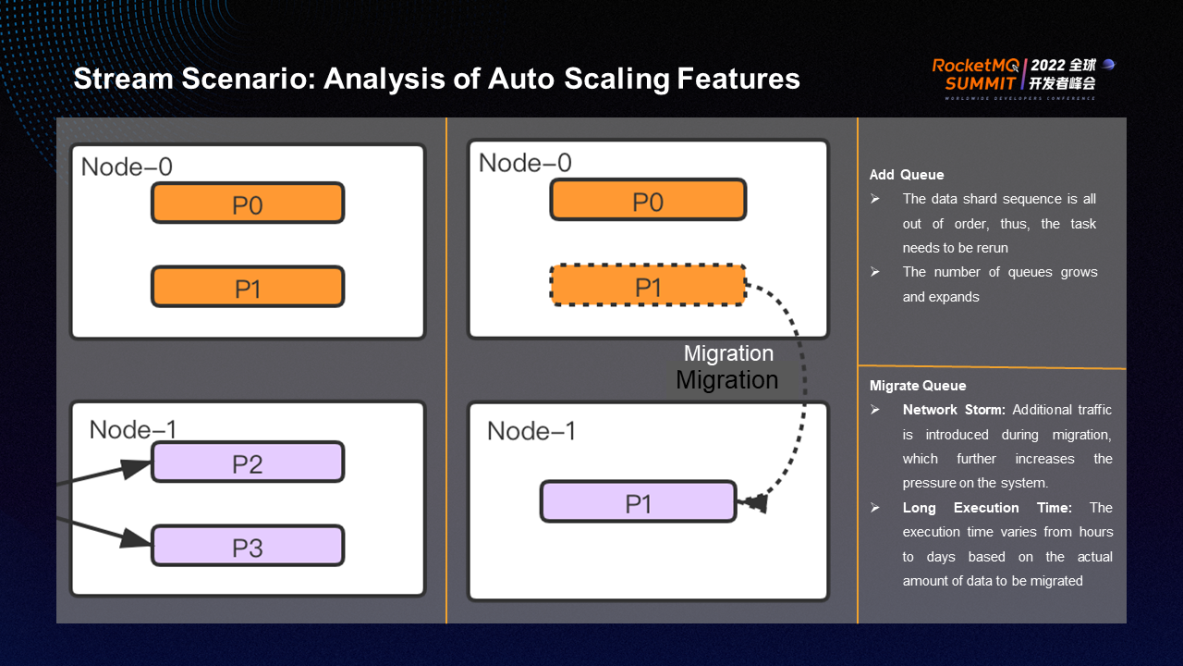

Another typical problem in stream processing scenarios is scale-out and the resulting data rebalancing. In microservice scenarios, traffic is usually small, so scale-out is not a prominent issue. In stream processing scenarios, however, the traffic on each machine is inherently high, and once capacity is scaled out, the data rebalancing caused by the scale-out is hard to ignore. For example, when one node is scaled out to two nodes, data has to be rebalanced between them.

There are usually two solutions to the problem:

Therefore, implementing scale-out for big data storage engines through replication is very difficult, and it is hard to balance availability against reliability.

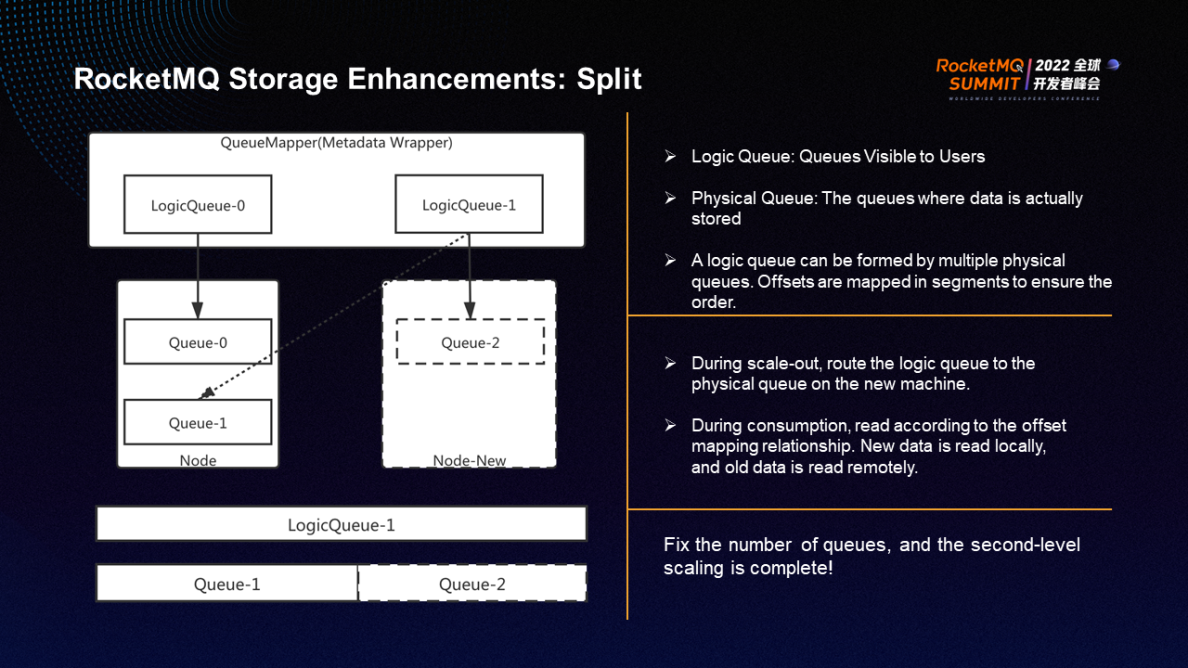

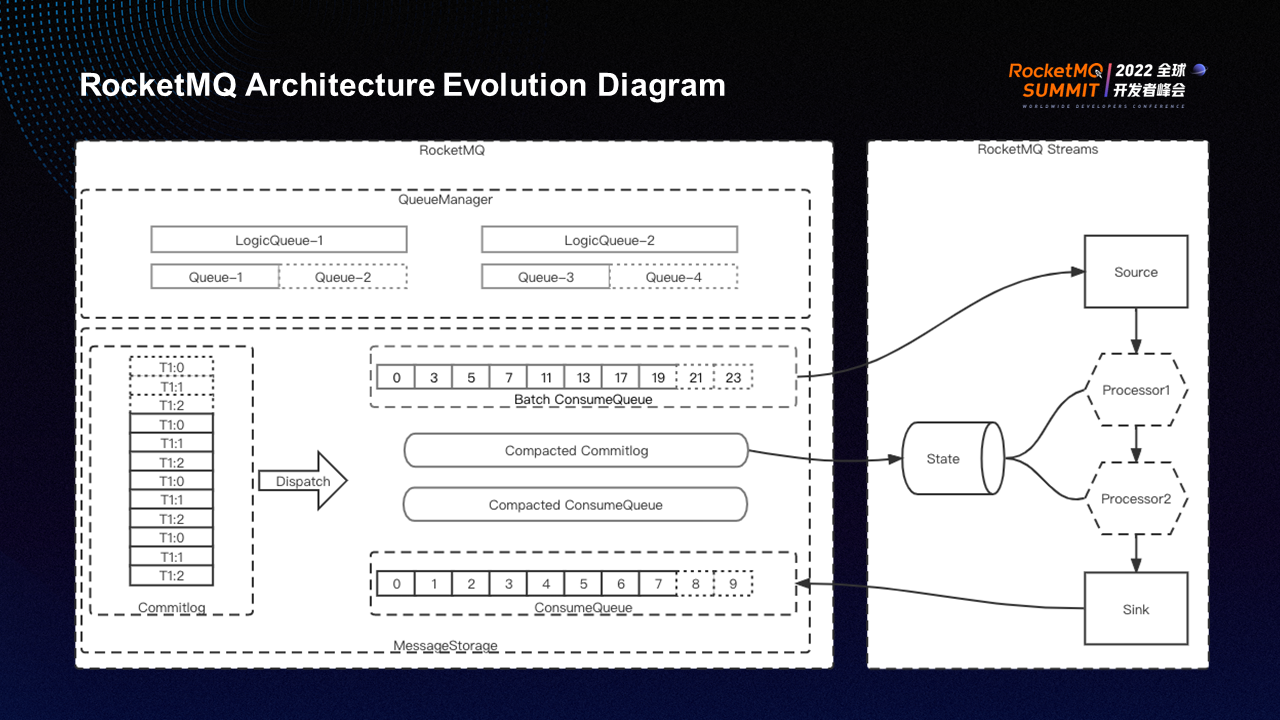

RocketMQ provides the logic queue as its solution to this problem. A logic queue is the queue exposed to clients, while a physical queue resides on a single node and stores the data. A logic queue combines multiple physical queues through offset mapping.

The principle of offset mapping is listed below:

Assume that LogicQueue-1 consists of Queue-1 and Queue-2, where Queue-1 holds offsets 0 to 100 and Queue-2 holds offsets 101 to 200. Queue-1 and Queue-2 can then be mapped to LogicQueue-1, whose overall offset range is 0 to 200.

With this mechanism, scale-out only requires modifying the mapping so that the logic queue points to a queue on the new node.
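The offset mapping can be sketched as an ordered list of segments, each mapping a range of logical offsets to a physical queue on some node. This Python model assumes that each physical queue numbers its own messages from 0 and that a segment ends where the next one starts; `Segment`, `LogicQueue`, and the broker names are hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class Segment:
    node: str   # broker that holds this physical queue
    queue: str  # physical queue name
    start: int  # first logical offset served by this physical queue


class LogicQueue:
    """Maps one continuous logical offset range onto several physical queues (segments)."""

    def __init__(self):
        self.segments = []  # kept in ascending order of `start`

    def add_segment(self, node, queue, start):
        """Scale-out: append a new mapping entry; existing data is not moved."""
        if self.segments and start <= self.segments[-1].start:
            raise ValueError("segments must start at increasing logical offsets")
        self.segments.append(Segment(node, queue, start))

    def resolve(self, logical_offset):
        """Translate a logical offset into (node, physical queue, physical offset)."""
        starts = [s.start for s in self.segments]
        i = bisect_right(starts, logical_offset) - 1
        if i < 0:
            raise ValueError(f"offset {logical_offset} precedes the first segment")
        seg = self.segments[i]
        return seg.node, seg.queue, logical_offset - seg.start

    def write_target(self):
        """New messages always go to the newest physical queue."""
        return self.segments[-1]


lq = LogicQueue()
lq.add_segment("broker-a", "Queue-1", 0)
lq.add_segment("broker-b", "Queue-2", 101)  # scale-out: only the mapping changes
print(lq.resolve(50))           # ('broker-a', 'Queue-1', 50): older data, still on broker-a
print(lq.resolve(150))          # ('broker-b', 'Queue-2', 49): newer data on the new node
print(lq.write_target().node)   # broker-b
```

Clients keep addressing LogicQueue-1 by logical offset, so the number of shards they see never changes.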

When data is written, new data goes to the new node, which balances the load on the write side. Reads work differently: the latest data is read locally on the new node, while older data is read remotely from the original node.

Throughout the lifecycle of stream computing, data is continuously produced and consumed. Therefore, as long as no messages have accumulated, the amount of data read remotely is small and those reads complete almost instantly, after which both writes and reads happen on the new node and the scale-out is complete. This approach has two advantages: no data needs to be moved, and the number of shards does not change. As a result, upstream and downstream clients do not need to be restarted or modified, and data processing tasks remain complete and correct.

Strictly speaking, RocketMQ is a streaming storage engine. However, RocketMQ 5.0 also introduced RocketMQ Streams, a lightweight stream computing engine.

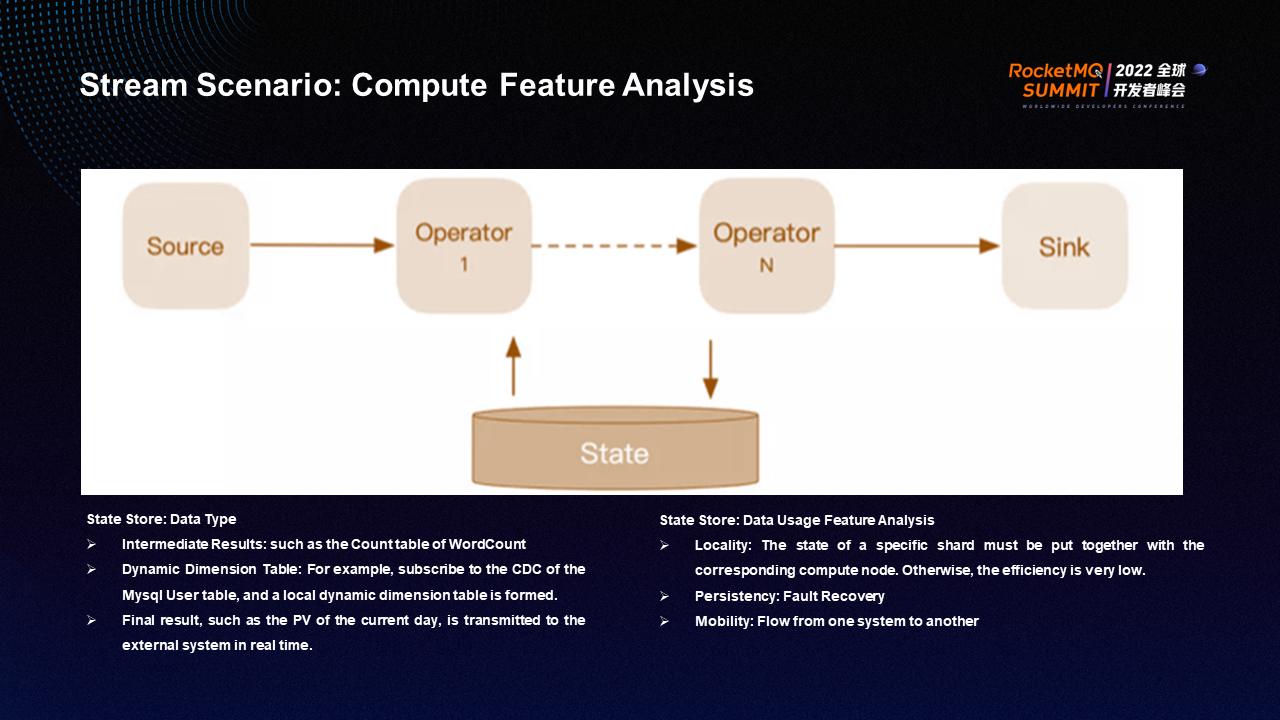

In RocketMQ Streams, the Source is the data source, operators perform the processing in the middle, and the data finally flows into the Sink. Operators are usually stateful; word count is a typical example. Each time a record arrives containing a word, the operator takes the word's previous count and adds 1 to produce the new count. This intermediate data is kept in what is called the state store.
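A minimal Python sketch of the stateful word count, assuming the state store is a plain dict. `word_count` is a hypothetical function and is not part of the RocketMQ Streams API.

```python
def word_count(source, state_store=None):
    """Stateful operator: for each word, read its previous count from the state store,
    add 1, write the new count back, and emit (word, count) to the sink."""
    state = {} if state_store is None else state_store
    sink = []
    for line in source:                       # Source: each record is a line of text
        for word in line.split():             # operator logic
            new_count = state.get(word, 0) + 1
            state[word] = new_count           # update the state store
            sink.append((word, new_count))    # Sink: downstream output
    return state, sink


state, sink = word_count(["a b", "a"])
print(sink)   # [('a', 1), ('b', 1), ('a', 2)]
print(state)  # {'a': 2, 'b': 1}
```

Passing an existing `state_store` lets the operator continue from previously saved counts, which is exactly what recovery after a failure requires.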

The characteristics of the state store include:

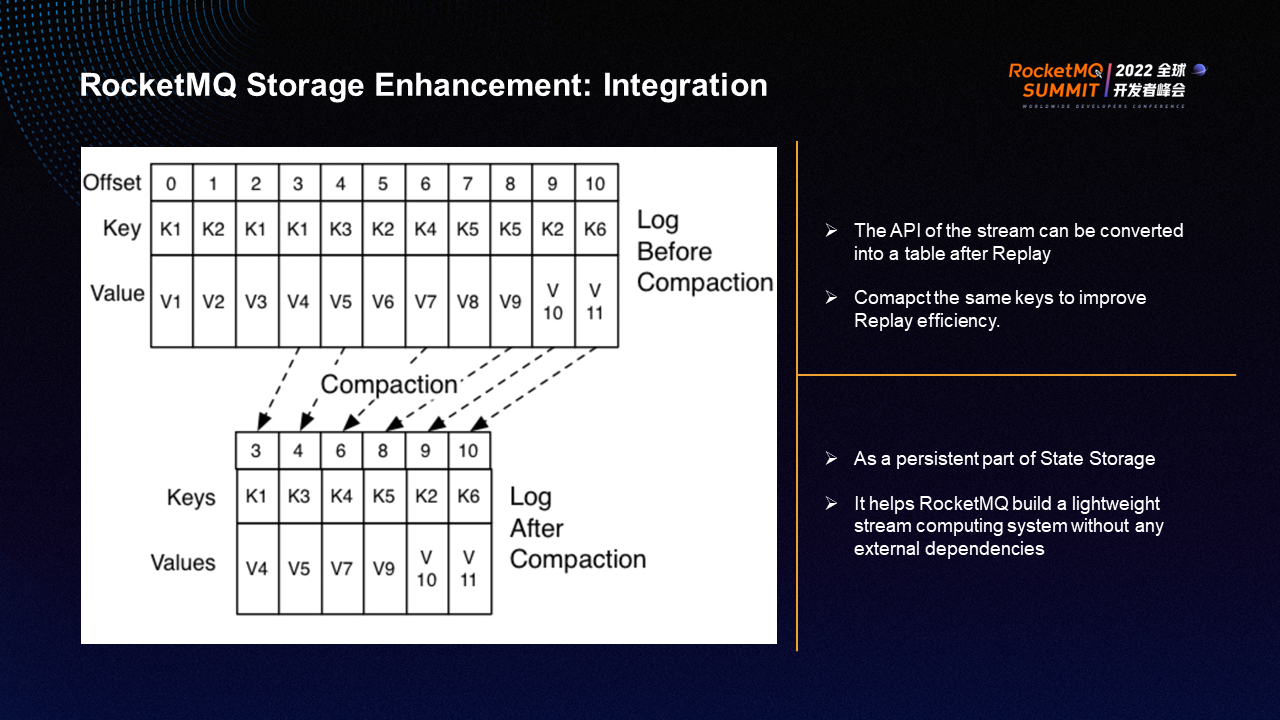

RocketMQ provides a new type of topic, the compacted topic, to address the three characteristics above. A compacted topic is stored and used in the same way as a normal topic. The only difference is that the server compacts records that share the same key, keeping only the latest one.

For example, in the figure, the record K1V4 at offset 3 supersedes the earlier records K1V1 at offset 0 and K1V3 at offset 2, so only K1V4 remains for K1 after compaction. The advantage of this design is that very little data needs to be read during recovery.
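Compaction can be sketched as keeping, for each key, only the record with the highest offset, while surviving records keep their original offsets. In this Python sketch the record at offset 1 (`K2`, `V2`) is a hypothetical filler rather than the figure's actual content, and deletion markers (tombstones) are not modeled.

```python
def compact(records):
    """Keep only the newest record for each key.

    `records` is a list of (offset, key, value) tuples in offset order.
    Surviving records keep their original offsets.
    """
    latest_offset = {}
    for offset, key, _value in records:
        latest_offset[key] = offset           # a later offset overwrites an earlier one
    survivors = set(latest_offset.values())
    return [record for record in records if record[0] in survivors]


log = [(0, "K1", "V1"), (1, "K2", "V2"), (2, "K1", "V3"), (3, "K1", "V4")]
print(compact(log))  # [(1, 'K2', 'V2'), (3, 'K1', 'V4')]
```

Recovery then reads two records instead of four, and the saving grows with how often keys are updated.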

The state store is a NoSQL table. Word count, for example, is a KV structure in which the key is a word and the value is its count. Because the counts change constantly, the table needs to be converted into a stream: every change to the table is recorded, and the sequence of these change records forms a stream. This process is called table-to-stream conversion. With this approach, RocketMQ can easily store a dynamic table.

A compacted topic is equivalent to a dynamic table in the form of a stream, so it has the characteristics of both a stream and a dynamic table. This special topic can serve as the storage layer, that is, the persistence layer, of the state store.

The state store itself is a table whose keys and values change constantly, and it must be persisted for disaster recovery. All modifications to the table form a stream that is stored in a RocketMQ compacted topic, which persists the state store. If compute node A crashes and compute node B takes over its task, node B can read the topic through the ordinary consumer API and restore the state store locally. This enables disaster recovery for stream computing tasks and allows RocketMQ to build a lightweight stream computing engine without any external dependencies.
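The persist-and-recover cycle can be sketched in Python, assuming a shared list stands in for the compacted topic. `ChangelogStateStore` and `compacted` are hypothetical helpers; a real deployment would read the topic through the RocketMQ consumer API instead.

```python
class ChangelogStateStore:
    """Local key-value state store; every update is also appended to a changelog.

    The changelog list stands in for a RocketMQ compacted topic (an assumption of this sketch).
    """

    def __init__(self, changelog):
        self.table = {}
        self.changelog = changelog

    def put(self, key, value):
        self.table[key] = value
        self.changelog.append((key, value))  # table -> stream: record every change

    @classmethod
    def restore(cls, changelog):
        """Rebuild the table by replaying the changelog in order (stream -> table)."""
        store = cls(changelog)
        for key, value in changelog:
            store.table[key] = value          # replay without re-appending to the changelog
        return store


def compacted(changelog):
    """Keep only the latest value per key, ordered by each key's last update."""
    latest = {}
    for key, value in changelog:
        latest.pop(key, None)  # move the key to the end so order follows its last update
        latest[key] = value
    return list(latest.items())


log = []
node_a = ChangelogStateStore(log)
node_a.put("apple", 1)
node_a.put("pear", 1)
node_a.put("apple", 2)
# Node A crashes; node B recovers the state from the compacted changelog.
node_b = ChangelogStateStore.restore(compacted(log))
print(node_b.table)  # {'pear': 1, 'apple': 2}
```

Replaying the compacted changelog yields the same table as replaying the full one, only with fewer records to read.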

Batch, logic queue, and compacted topic are three basic storage features that address throughput, elasticity, and the state store, respectively. Combining them with RocketMQ Streams yields a lightweight stream computing solution: only RocketMQ and RocketMQ Streams are needed to build a general-purpose stream computing and storage engine.

With RocketMQ 5.0, RocketMQ's role has expanded from microservice decoupling to stream storage. Its original features, such as asynchronous decoupling and load shifting, can still be fully used in the new scenarios.

In addition, RocketMQ 5.0 adds three major features for stream storage scenarios: Batch, which improves performance and can increase throughput tenfold; logic queue, which enables scaling in seconds without moving data or changing the number of shards; and compacted topic, which serves as the state store for stream computing scenarios.

These enhancements push RocketMQ one step further in its evolution toward a stream storage engine.

Join the Apache RocketMQ community: https://github.com/apache/rocketmq
