By Lingsheng

In system development and O&M, logs are among the most critical sources of information, and their primary advantage is that they are simple and direct. However, throughout the log lifecycle there is a fundamental tension that is difficult to reconcile: log output and collection should be simple and convenient, whereas log analysis requires formatted data and on-demand storage.

• To address the former for service stability and efficiency, various high-performance data pipeline solutions are proposed, such as cloud services like Alibaba Cloud SLS and open-source middleware like Kafka.

• For the latter, standardized and complete data needs to be provided to downstream systems for use in business analysis and other scenarios. This demand can be met by the SLS data transformation feature.

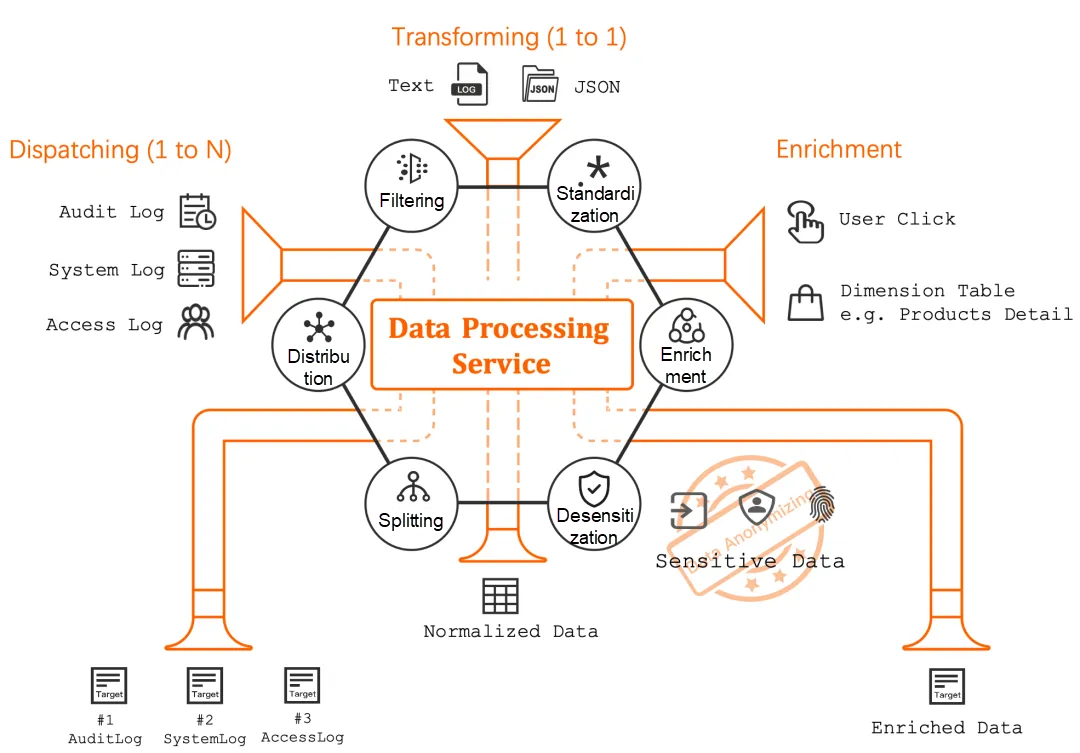

As shown in the preceding figure, common scenarios of SLS data transformation include:

• Standardization: This is the most common scenario, such as extracting key information from text log data and transforming it into standardized data.

• Enrichment: For example, user click data may contain only product IDs, which must be associated with the detailed product information in a database during analysis.

• Desensitization: Since China has been improving the laws and regulations on information security, the requirements for handling sensitive data such as personal information are increasingly strict.

• Splitting: During data output, multiple data records are often combined for performance and convenience reasons, and they should be split into individual data items before analysis.

• Distribution: Different types of data are written to specific targets separately, enabling customized use by downstream systems.
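As a rough illustration of the five scenarios above, the following Python sketch implements each one as a small function. It is not SLS code: the log layout, the product table, the phone field, and the Logstore names are all hypothetical and chosen only for illustration.

```python
import json
import re

# Hypothetical access-log layout; a real format depends on the application.
LINE_RE = re.compile(r"(?P<ip>\S+) (?P<method>[A-Z]+) (?P<path>\S+) (?P<status>\d{3})")

# Hypothetical dimension table that would normally live in a database.
PRODUCTS = {"p1": {"name": "keyboard", "price": 49.0}}


def standardize(raw):
    """Standardization: extract named fields from a free-text log line."""
    match = LINE_RE.match(raw)
    return match.groupdict() if match else {"unparsed": raw}


def enrich(event):
    """Enrichment: attach product details looked up by product ID."""
    return {**event, **PRODUCTS.get(event.get("product_id"), {})}


def mask_phone(event):
    """Desensitization: keep only the last four digits of a phone number."""
    phone = event.get("phone", "")
    return {**event, "phone": "*" * max(len(phone) - 4, 0) + phone[-4:]}


def split(batch):
    """Splitting: turn a JSON array of combined records into single records."""
    return json.loads(batch)


def distribute(event):
    """Distribution: pick a destination by event type (names are illustrative)."""
    return "error-logstore" if event.get("level") == "ERROR" else "default-logstore"
```

In practice these steps are chained, for example splitting a batch first and then standardizing, enriching, and masking each record before choosing its destination.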

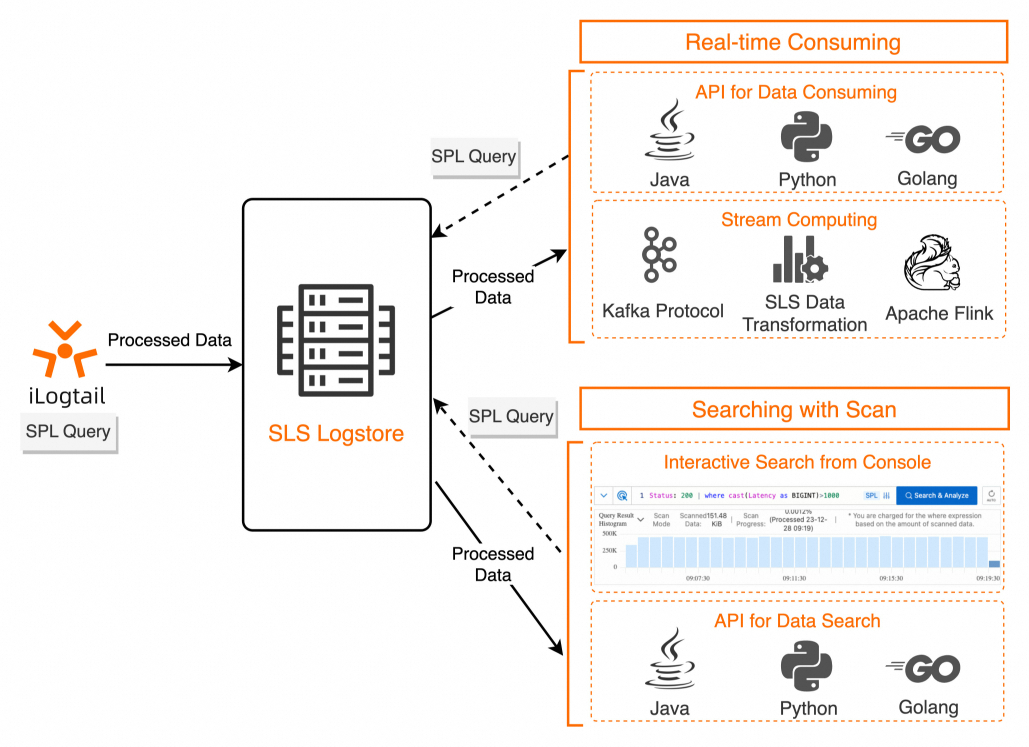

• Integrate SPL for Unified Syntax

SPL is a unified data processing syntax provided by SLS for scenarios such as log collection, interactive search, stream consuming, and data transformation. For more information about the syntax, see SPL syntax. Line-by-line debugging and code prompts are supported during SPL development, which provides an experience similar to Integrated Development Environment (IDE) coding.

• Improve Performance Over 10 Times and Handle Large Data Volumes and Data Spikes More Smoothly

In scenarios involving the processing of unstructured log data, the data transformation (new version) offers a performance improvement of over 10 times compared with the old version for the same processing complexity and supports a higher data throughput. Additionally, with the upgrade of the scheduling system, the new version can more flexibly scale compute resources concurrently during data spikes that are thousands of times greater than normal traffic, thus minimizing backlogs caused by such spikes.

• More Cost-Effective: One Third the Cost of the Old Version

Thanks to technological upgrades, data transformation in the new version costs only one third as much as in the old version. Therefore, if the new version supports the features that you require, we recommend that you use Data Transformation (New Version).

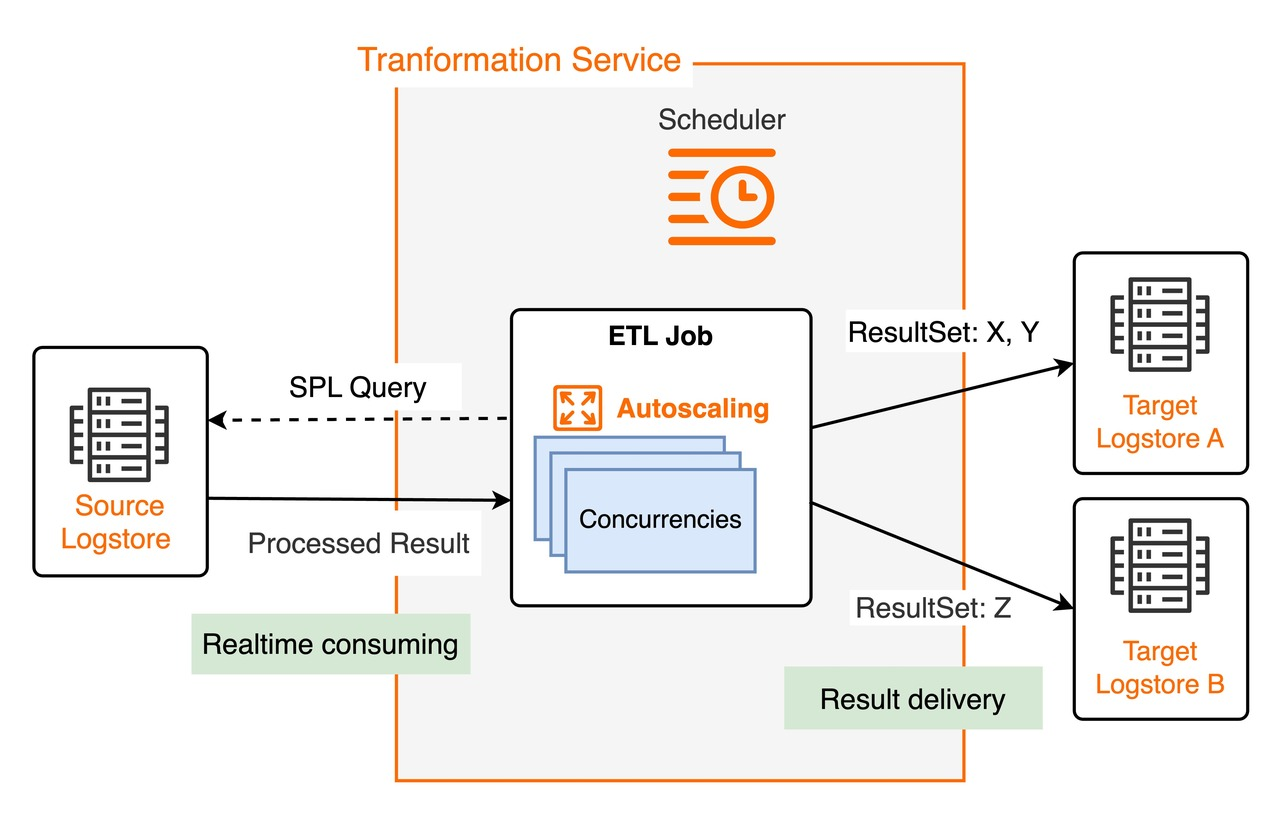

The data transformation (new version) feature processes log data in real time by combining hosted data consumption jobs with rule-based consumption in SLS Processing Language (SPL), as shown in the following figure.

• Scheduling Mechanism

The transformation service has a scheduler that starts one or more instances to concurrently process a transformation job. Each running instance works as a consumer to consume one or more shards of the source Logstore. The scheduler dynamically scales instances based on the instance resource usage and consumption progress. The maximum concurrency for a single job is the number of shards in the source Logstore.
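The concurrency limit described above can be sketched in Python as follows. This is only an illustration: the article does not say how the scheduler assigns shards, so the round-robin strategy and the `plan_instances` function are assumptions.

```python
def plan_instances(shard_ids, desired_instances):
    """Assign shards to instances, capped at one instance per shard.

    Round-robin assignment is an assumption made for this sketch; the
    real scheduler also considers resource usage and consumption progress.
    """
    if not shard_ids:
        return []
    # Concurrency can never exceed the number of source shards.
    count = max(1, min(desired_instances, len(shard_ids)))
    assignment = [[] for _ in range(count)]
    for index, shard in enumerate(shard_ids):
        assignment[index % count].append(shard)
    return assignment
```

For a source Logstore with two shards, requesting eight instances still yields only two, each consuming one shard.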

• Running Instances

Each running instance uses the SPL rules of the job to consume source log data from the shards that the data transformation service allocates to it. The processed results are distributed based on the SPL rules and the configurations of the destination Logstore, and are written to the corresponding destination Logstore. While an instance is running, the consumer offsets of its shards are saved automatically, so that when the job is stopped and then restarted, consumption resumes from the point at which it was interrupted.
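The offset-saving behavior can be pictured with a toy consumer in Python. This is a minimal sketch under stated assumptions: the `ShardConsumer` class, the in-memory `checkpoints` dictionary that stands in for durable offset storage, and the per-record save interval are all hypothetical and do not reflect the service's implementation.

```python
class ShardConsumer:
    """Toy consumer that processes one shard and checkpoints its offset."""

    def __init__(self, shard, checkpoints):
        self.shard = shard              # list of log records in this shard
        self.checkpoints = checkpoints  # dict standing in for durable offset storage

    def run(self, shard_id, transform, sink, max_records=None):
        offset = self.checkpoints.get(shard_id, 0)  # resume from the saved offset
        processed = 0
        while offset < len(self.shard):
            if max_records is not None and processed >= max_records:
                break  # simulate the job being stopped
            sink.append(transform(self.shard[offset]))
            offset += 1
            processed += 1
            self.checkpoints[shard_id] = offset  # save progress after each record
        return processed
```

If a first run is stopped after two records, a second consumer created with the same `checkpoints` dictionary continues from the third record instead of starting over.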

SPL in the new version is easier to use than DSL in the old version. The following items compare the two:

1. As a subset of the Python syntax, DSL rules are developed in a similar way to functions and have more syntax symbols that complicate use. In contrast, SPL uses shell-style commands to minimize the use of syntax symbols. For example:

2. SPL allows you to maintain the type of a temporary field during processing. For more information, see Type retention. In contrast, in DSL, field values are fixed to strings, and intermediate results of type conversion are not retained. See the following examples. For DSL in the old version, the ct_float function must be invoked twice:

e_set("ms", ct_float(v("sec"))*1000)

e_keep(ct_float(v("ms")) > 500)

For SPL in the new version, the type conversion does not need to be performed twice, which makes the rule more concise:

| extend ms=cast(sec as double)*1000

| where ms>1024

3. In addition, SPL reuses the SQL functions of Simple Log Service. This way, you can understand and use SPL rules more easily. For more information, see Function overview.
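The difference described in item 2 can be mimicked in plain Python. This is only an analogy, not DSL or SPL: the two functions below are hypothetical, and a single threshold of 500 is used for both so that the results are comparable.

```python
def string_typed_flow(event):
    """Old-style flow: every field value is stored back as a string."""
    fields = dict(event)
    fields["ms"] = str(float(fields["sec"]) * 1000)        # first conversion, result saved as text
    return fields if float(fields["ms"]) > 500 else None   # second conversion before comparing


def type_retaining_flow(event):
    """New-style flow: the temporary field keeps its numeric type."""
    fields = dict(event)
    fields["ms"] = float(fields["sec"]) * 1000             # converted once, stays a float
    return fields if fields["ms"] > 500 else None          # compared directly
```

Both flows keep or drop the same events; the type-retaining one simply avoids converting `ms` a second time.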

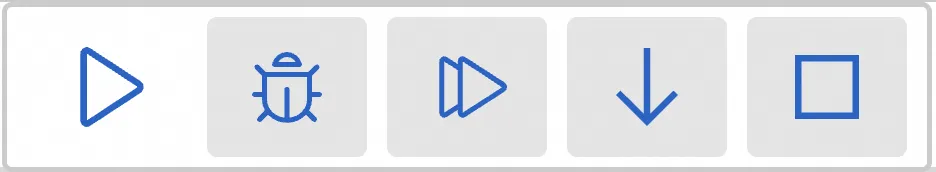

This section describes the SPL debugging menu and buttons in the menu.

• Run: runs the entire SPL rule in the edit box.

• Debug: starts the debugging mode and runs the rule until the first checkpoint. You can perform line-by-line or checkpoint-based debugging.

• Next checkpoint: continues debugging until the next checkpoint.

• Next line: continues debugging until the next line.

• Stop debugging: stops the debugging process.

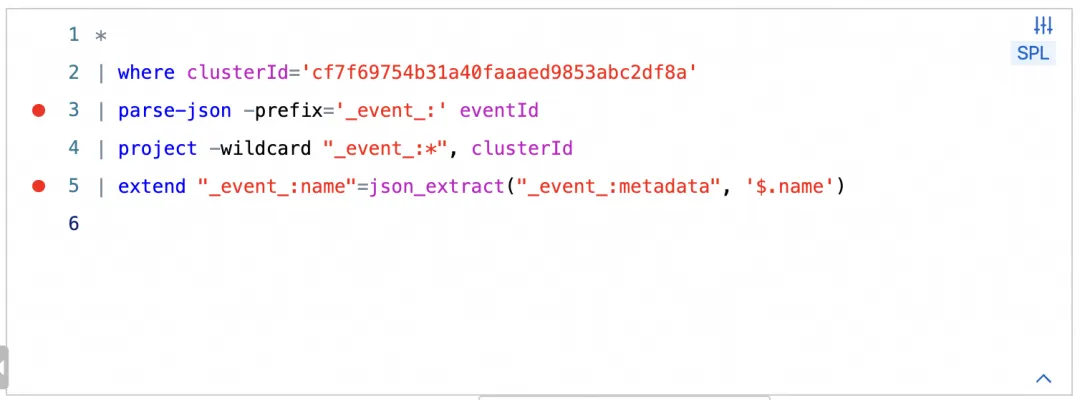

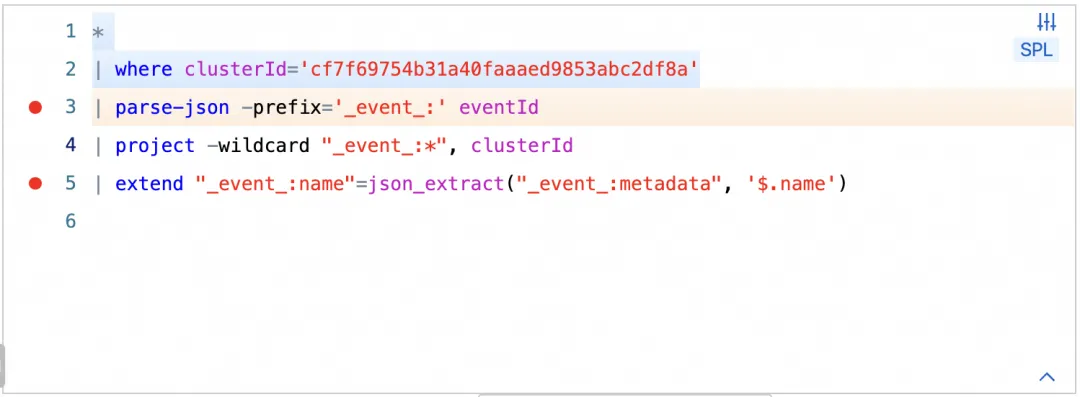

The blank area before the line numbers in the code editor is the checkpoint area. You can click in the checkpoint area to add a debugging checkpoint to a line. The following figure shows an example. You can click an added debugging checkpoint to remove it.

1. Prepare test data and write the SPL rule.

2. Add a checkpoint to the line to be debugged.

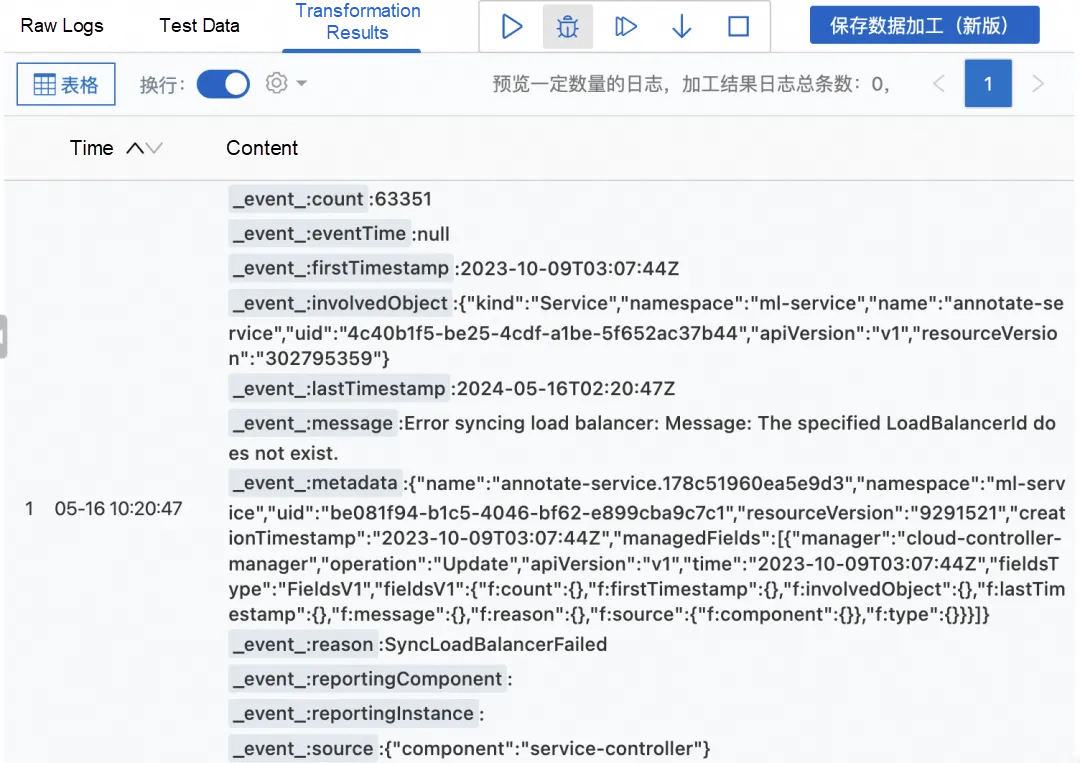

3. Click the debugging icon to start debugging. The following figure shows an example. The row highlighted in yellow indicates the current suspended position, where the statement has not been executed yet, and the row highlighted in blue indicates the executed SPL statements.

4. On the Transformation Results tab, check whether the results meet your business requirements.
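To make the Debug, Next checkpoint, and Next line actions more concrete, here is a small Python model of stepping through a pipeline. It is a conceptual sketch only: the `PipelineDebugger` class and the two example stages are hypothetical and say nothing about how the console implements debugging.

```python
class PipelineDebugger:
    """Runs pipeline stages one at a time, pausing before checkpointed stages."""

    def __init__(self, stages, checkpoints=()):
        self.stages = stages                 # list of (label, function) pairs
        self.checkpoints = set(checkpoints)  # stage indexes to pause before
        self.position = 0                    # index of the next stage to execute

    def debug(self, rows):
        """Start debugging: run until the first checkpoint without executing it."""
        self.position = 0
        while self.position < len(self.stages) and self.position not in self.checkpoints:
            rows = self.next_line(rows)
        return rows

    def next_line(self, rows):
        """Execute exactly one stage and return the intermediate rows."""
        if self.position < len(self.stages):
            _, stage = self.stages[self.position]
            rows = stage(rows)
            self.position += 1
        return rows

    def next_checkpoint(self, rows):
        """Execute the paused stage, then continue to the next checkpoint or the end."""
        while self.position < len(self.stages):
            rows = self.next_line(rows)
            if self.position in self.checkpoints:
                break
        return rows


# Example stages that loosely mirror an "extend" step followed by a "where" step.
EXAMPLE_STAGES = [
    ("extend", lambda rows: [{**r, "ms": float(r["sec"]) * 1000} for r in rows]),
    ("where", lambda rows: [r for r in rows if r["ms"] > 500]),
]
```

With a checkpoint on the second stage, `debug` pauses after the first stage has run, so the intermediate rows can be inspected before the filter is applied.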

The new version will continue to be iterated and upgraded. Two upgrades that are planned for the near future are discussed below.

Currently, the data transformation (new version) is computation-optimized and focuses on unstructured data processing scenarios. Data forwarding scenarios are not yet supported, such as data distribution to multiple or dynamic destinations, dimension table enrichment, IP address mapping to geographical locations, and data synchronization across regions over the Internet.

Therefore, in the subsequent iterations and upgrades, the new version will focus on supporting these scenarios and upgrade the architecture to provide more stable and user-friendly services, such as accelerated data synchronization across regions over the Internet and dataset-based data distribution.

For existing data transformation tasks running in production, if you need to upgrade the feature from the old version to the new version as described above, the data transformation service will support the in-place upgrade from two technical perspectives:

First, the data transformation service synchronizes consumer offsets to the new version after the upgrade to ensure data integrity. Therefore, the service consumes data from the synchronized offsets after the upgrade.

Second, based on Abstract Syntax Tree (AST) parsing, the service will automatically translate the DSL scripts of old data transformation tasks into equivalent SPL logic for data processing.
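Because DSL is a subset of Python syntax, the idea of AST-based translation can be sketched with Python's standard `ast` module. The following is a minimal illustration, not the service's translator: it handles only the constructs that appear in the earlier example (`e_set`, `e_keep`, `v`, `ct_float`, `*`, and `>`), and the mapping rules are assumptions inferred from that example.

```python
import ast


def expr_to_spl(node):
    """Translate a very small subset of DSL expressions into SPL text."""
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return str(node.value)
    if isinstance(node, ast.BinOp) and isinstance(node.op, ast.Mult):
        return f"{expr_to_spl(node.left)}*{expr_to_spl(node.right)}"
    if (isinstance(node, ast.Compare) and len(node.ops) == 1
            and isinstance(node.ops[0], ast.Gt)):
        return f"{expr_to_spl(node.left)}>{expr_to_spl(node.comparators[0])}"
    if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
        if node.func.id == "v":         # v("field") reads a field value
            return node.args[0].value
        if node.func.id == "ct_float":  # assumed mapping: ct_float(x) -> cast(x as double)
            return f"cast({expr_to_spl(node.args[0])} as double)"
    raise ValueError(f"unsupported DSL construct: {ast.dump(node)}")


def translate(dsl_source):
    """Translate top-level e_set/e_keep calls into SPL pipeline lines."""
    lines = []
    for stmt in ast.parse(dsl_source).body:
        if not (isinstance(stmt, ast.Expr) and isinstance(stmt.value, ast.Call)
                and isinstance(stmt.value.func, ast.Name)):
            raise ValueError("only top-level function calls are supported")
        call = stmt.value
        if call.func.id == "e_set":
            name, value = call.args
            lines.append(f"| extend {name.value}={expr_to_spl(value)}")
        elif call.func.id == "e_keep":
            lines.append(f"| where {expr_to_spl(call.args[0])}")
        else:
            raise ValueError(f"unsupported DSL function: {call.func.id}")
    return "\n".join(lines)
```

A literal translation like this keeps the second `ct_float` call as a redundant cast; a real translator could drop it because SPL retains the type of `ms`.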
