By Xieyang

RocketMQ 5.0 introduced a new solution for tiered storage. After several version iterations, the tiered storage feature of RocketMQ has matured and is now an important means of reducing storage costs. In fact, almost all products that involve storage attempt to reduce costs by implementing cold storage. It is fascinating to explore challenging technical optimizations for message queue scenarios.

This article aims to analyze and evaluate the selection of technical architectures in a data-intensive application model, specifically in the context of a message system. It also discusses the implementation and evolution of tiered storage, allowing users to leverage the benefits of cloud computing resources.

RocketMQ was born in 2012. Storage nodes of RocketMQ adopt a shared-nothing architecture to read and write data from and to local disks. Messages of different topics on a single node are sequentially appended to the CommitLog file, and multiple indexes are created synchronously. This architecture offers high scalability and ease of maintenance, making RocketMQ highly competitive.

However, with the advancement of storage technology and the widespread adoption of high-speed networks, the storage layer of RocketMQ faces bottlenecks. The growth of data volume surpasses that of single-server hardware. Additionally, there has always been a trade-off between the speed of storage media and the price per unit capacity. In the era of cloud-native and serverless technologies, evolving the technical architecture is the only way to overcome the limitations of storage space on single-server disks. This evolution will enable more flexible scaling and cost reduction, bringing benefits in various aspects.

Tiered storage technologies are expected to be optimized in the following respects.

• Real-time: RocketMQ provides one primary node and multiple read-only nodes in message scenarios, and hot data is cached in the memory. By storing data in quasi-real-time instead of using a time- or capacity-based elimination algorithm, the overhead of data replication can be reduced and the recovery time objective (RTO) can be shortened. Cold read requests during reading can be redirected, eliminating the need for restoration time, and traffic can be strictly controlled to avoid impacting the writing of hot data.

• Elasticity: Although the shared-nothing architecture is simple, the data of the node to be undeployed cannot be read by other nodes in scale-in or node-replacing scenarios. Scaling in requires the node to be read-only for an extended period until the consumer consumes all the data or a complex migration process is performed. This architecture scales out quickly but scales in slowly, which is not ideal for cloud-native environments. By allowing deployed nodes to read data from undeployed nodes through a shared disk/storage architecture, not only can costs be reduced but operations and maintenance can also be simplified.

• Differentiation: Low-cost media often has poor random read and write capabilities. Structures similar to log-structured merge trees (LSM) require frequent compaction operations to compress and reclaim space. RocketMQ addresses this by setting different lifecycle rules for different topics, such as message retention time (TTL). Considering that data in the message system is immutable and ordered, RocketMQ minimizes the need for format regularization to avoid write amplification due to repeated integration, thus saving computing resources.

• Competitiveness: Tiered storage should also embrace the evolution of advanced technologies such as archive compression, data export, column-oriented storage, and interactive query and analysis capabilities.

Let's look at the problem from a new perspective. The message system provides users with a series of APIs for sending and receiving messages, managing offsets, and gracefully handling dynamic data streams. From this standpoint, the message system expands the boundaries of the storage system. In reality, most server-side applications are built on lower-level SQL and POSIX APIs, which are encapsulated to reduce complexity and hide information.

The primary focus of the message system lies in high availability, high throughput, and low costs. It aims to minimize concerns around storage media selection, storage system upgrades, sharding strategies, migration backups, and tiered compression. This approach reduces the long-term maintenance costs of the storage layer.

An efficient and well-implemented storage layer should support a wide range of storage backends, including local disks, various databases, distributed file systems, and object storage. These backends should be easily expandable.

Fortunately, almost all distributed file systems and object storage solutions provide strong consistency semantics, ensuring that objects can be read immediately once they are uploaded or copied. This aligns with the definition of consistency in the CAP theorem, "Every read receives the most recent write or an error", which guarantees consistency among multiple replicas in a distributed storage system. For applications, it is sufficient that there are no Byzantine faults, in which original data disappears and data persistence is compromised. This simplifies achieving consistency between applications and distributed storage systems and significantly reduces application development and maintenance costs.

Common distributed file systems include Apsara Distributed File System, HDFS, GlusterFS, Ceph, and Lustre. Here is a simple comparison.

• API support: Unlike HDFS-like systems, object storage used as a backend typically lacks full POSIX capability support and performs poorly in non-KV operations. For example, listing a large number of objects can take tens of seconds, whereas distributed file systems can perform the same operation in milliseconds or even microseconds. If object storage is chosen as the backend, the message system needs to manage the metadata of these objects in an orderly manner due to the weakened API semantics.

• Capacity and horizontal scaling: For cloud products or storage foundations in large-scale enterprises, if the cluster size exceeds hundreds of nodes and the number of files reaches hundreds of millions, the NameNode in HDFS can become a performance bottleneck. If multiple sets of storage clusters appear in the underlying storage due to capacity availability, the simplicity of the shared disk architecture will be compromised, and the complexity will be passed to applications. This can impact the design of multi-tenancy, migration, and disaster recovery in messaging products. Large enterprises often deploy multiple sets of Kubernetes and customize upper-layer cluster federations.

• Ecological chain: Both object storage and HDFS-like systems have a wide range of production-proven tools. In terms of monitoring and alerting, object storage has more product-oriented support.

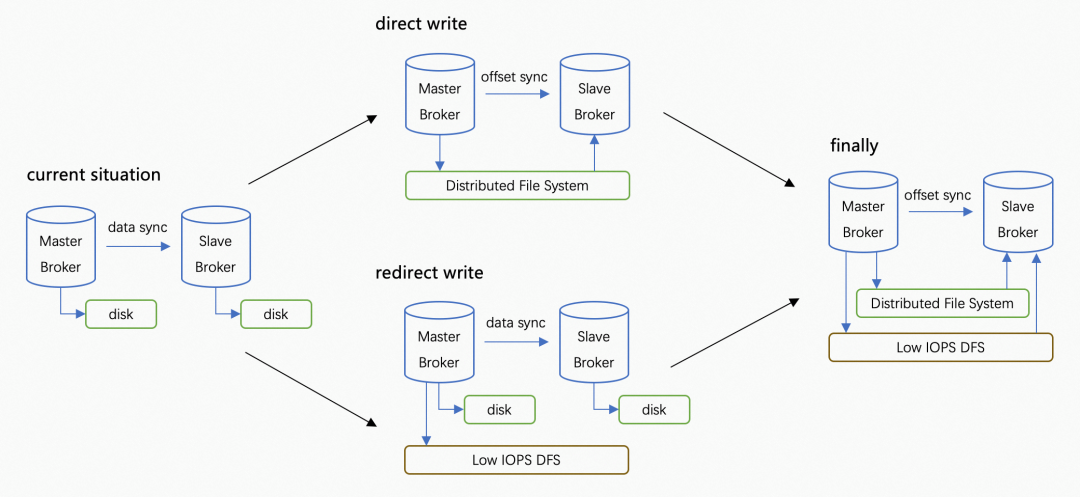

In the RocketMQ solution, the selection between direct and redirect writing modes has attracted much attention. I don't think they conflict. We can adopt the two separately and leverage their strengths.

For many years, RocketMQ has been running in systems that are based on local storage. Local disks usually have high IOPS and low costs, but their reliability is poor. Problems encountered in large-scale production practices include but are not limited to difficult vertical scaling, bad disks, and host failures.

The purpose of direct writing is to pool storage, and the purpose of redirect writing is to reduce the cost of long-term data storage, so I think an ideal ultimate state is a combination of the two. If RocketMQ does the work of transferring hot data to cold storage, you may ask: Wouldn't it be better to make DFS support transparent transferring?

My understanding is that RocketMQ hopes to make some internal format changes in the message system when moving hot data to cold storage, so as to accelerate reading cold data, reduce I/O operations, and configure different TTL values.

Compared with general algorithms, message systems have a better understanding of how to compress data and accelerate reading. Moreover, the active moving to cold storage can also be used to dump data from servers to different platforms such as the NoSQL system, ElasticSearch, ClickHouse, and object storage in some auditing and data ingestion scenarios. All this is so natural.

Then, is tiered storage a perfect ultimate solution? The ideal is beautiful, but let's look at a set of data in a typical production scenario.

When RocketMQ adopts block storage, the storage cost of storage nodes accounts for about 30%-50% of the total cost. When tiered storage is enabled, data dumping incurs certain computing overhead, including data replication, data encoding and decoding, and CRC verification. The computing cost is increased by 10%-40% in different scenarios. Through conversion, we can find that the total cost of ownership of storage nodes is reduced by about 30%.

Considering the consistency between commercial and open-source technical architectures, we chose to implement the redirect writing mode first. Shrinking the storage space needed for hot data is the most direct way to reduce storage cost. Once the current transfer logic is fully established, we will port the WAL mechanism and index building of hot data to implement direct writing based on distributed systems. This phased iteration is more concise and efficient. At this phase, we pay more attention to generality and usability.

• Portability: Direct writing distributed systems usually rely on specific SDKs and technologies such as RDMA to reduce latency. They are not completely transparent to applications, which leads to more O&M, labor, and higher technology complexity. In redirect writing systems, to retain mature local storage, you only need to implement storage plug-ins to switch to multiple storage backends. It does not use deep binding for IaaS, thus having advantages in portability.

• Latency and performance: In direct writing mode, storage is closely integrated. The simplified HA at the application layer also reduces latency. Messages are only visible to consumers when data is written to a majority of nodes. However, the latency of writing to a cloud disk or a local disk in the same zone is less than across zones. Therefore, the storage latency is not a bottleneck in sending and receiving hot data.

• Availability: Storage backends often have complex fault tolerance and failover policies. Both direct and redirect writing modes meet the availability requirements of the public cloud. Considering that the system in redirect writing mode is weakly dependent on secondary storage, it is more suitable for open-source and non-public cloud scenarios.

Why don't we further compress the capacity of block storage disks to minimize the cost?

Blindly pursuing a small capacity of local disks is of little value in tiered storage scenarios. The following are the main reasons.

• Fault redundancy. Message queues are an important part of the infrastructure, and their stability matters more than anything else. Object storage is highly available, but if it is used as the primary storage, network fluctuations easily cause backpressure that prevents hot data from being written. Hot data belongs to online production business, so the impact on availability is fatal.

• Local disks with too small capacities have no price advantage. Cloud computing pricing emphasizes inclusiveness and fairness: if you choose block storage of about 50 GB but require the IOPS that a 150 GB ESSD-level block storage provides, the unit cost is several times that of ordinary block storage.

• If the local disk capacity is sufficient, you can use batch uploading to reduce the object storage request fee. It can also read warm data with lower latency and at a lower cost.

• If you only use object storage, it is difficult to retain the existing rich features of RocketMQ, such as the random message index for troubleshooting and the scheduled message. It is not worth greatly weakening the infrastructure capabilities and requiring the business side to build a complex middleware system to save a small amount of costs.

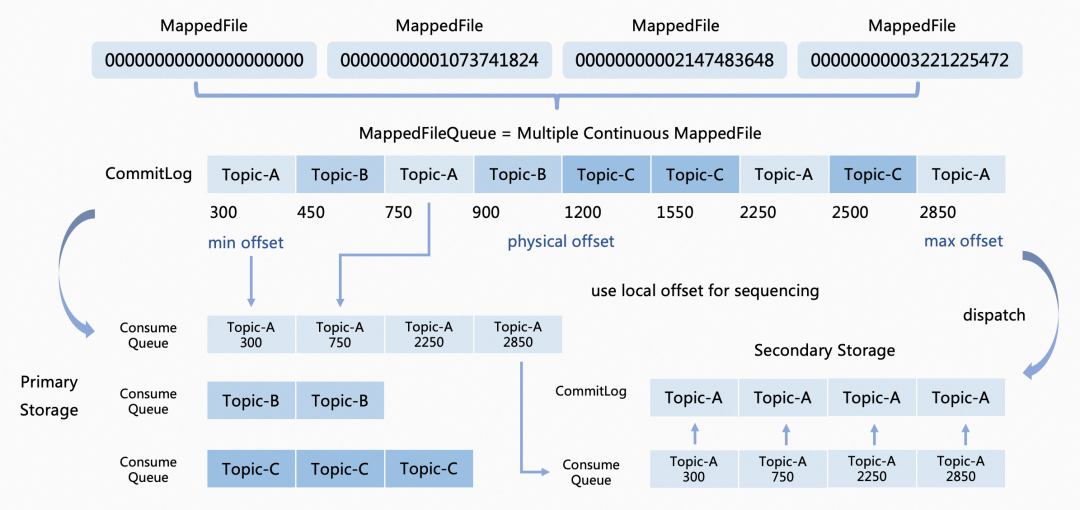

Data models for local storage in RocketMQ are as follows:

• MappedFile: It is a handle for a single real file, also known as an fd. Files are mapped into memory through mmap. It is an append-only fixed-length byte stream that supports appending and random reading at byte granularity. Each MappedFile has its own metadata, such as type, writing position, and creation and update time.

• MappedFileQueue: It can be viewed as a linked list of zero or more fixed-length MappedFiles, providing unbounded semantics for the stream. Only the last file in a queue can be in an unsealed state (writable), while all previous files must be in a sealed state (read-only). Once the Seal operation is completed, the MappedFile becomes immutable.

• CommitLog: It is the encapsulation of MappedFileQueue. Each "grid" stores a serialized message in an unbounded stream.

• ConsumeQueue: It is a sequential index that points to the offset of CommitLog in FileQueue.
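The relationship between a MappedFile and a MappedFileQueue can be sketched in a few lines. This is a minimal in-memory illustration, not RocketMQ's implementation: the class names `Segment` and `SegmentQueue` are hypothetical, a `bytearray` stands in for the mmap region, and unused space at the end of a sealed segment is simply skipped.

```python
class Segment:
    """One fixed-length, append-only file (stands in for a MappedFile)."""

    def __init__(self, base_offset, capacity):
        self.base_offset = base_offset   # global offset of the first byte
        self.capacity = capacity
        self.data = bytearray()          # a real implementation would mmap a file
        self.sealed = False              # sealed segments are immutable

    def remaining(self):
        return self.capacity - len(self.data)


class SegmentQueue:
    """Ordered list of segments that behaves like one unbounded byte stream."""

    def __init__(self, segment_size):
        self.segment_size = segment_size
        self.segments = []

    def append(self, payload):
        """Append payload and return the global offset where it starts."""
        if len(payload) > self.segment_size:
            raise ValueError("payload larger than one segment")
        last = self.segments[-1] if self.segments else None
        if last is None or last.remaining() < len(payload):
            if last is not None:
                last.sealed = True       # only the tail segment stays writable
            base = 0 if last is None else last.base_offset + self.segment_size
            last = Segment(base, self.segment_size)
            self.segments.append(last)
        offset = last.base_offset + len(last.data)
        last.data += payload
        return offset

    def read(self, offset, length):
        """Random read: find the segment by integer division, then slice."""
        seg = self.segments[offset // self.segment_size]
        start = offset - seg.base_offset
        return bytes(seg.data[start:start + length])
```

Because every segment has the same length, locating the file for a global offset is a single division, which is what makes the queue behave like one continuous stream.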

The data models provided by RocketMQ tiered storage are similar to the local models, with changes to the concepts of CommitLog and ConsumeQueue.

• TieredFileSegment: Similar to MappedFile, it describes a handle for a file in a tiered storage system.

• TieredFlatFile: Similar to MappedFileQueue.

• TieredCommitLog: Unlike the local CommitLog which writes in a mixed way, it splits multiple CommitLog files at the granularity of a single topic and a single queue.

• TieredConsumeQueue: An index that points to the offset of TieredCommitLog, and is strictly and continuously increasing. The actual position of the index changes from pointing to the position of the CommitLog to the offset of TieredCommitLog.

• CompositeFlatFile: It combines TieredCommitLog and TieredConsumeQueue objects and provides encapsulation of the concept.

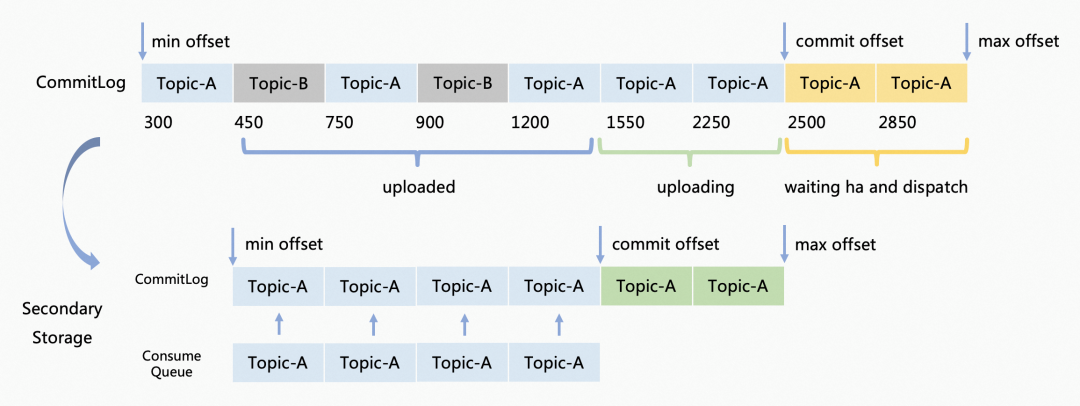

RocketMQ's storage implements a pipeline, similar to an interceptor chain or the handler chain of Netty, where read and write requests go through multiple processors in the pipeline. The concept of the dispatcher is to build an index for the written data. When the tiered storage module is initialized, a TieredDispatcher is created and registered as a processor in the dispatcher chain of CommitLog. Whenever a message is sent to the Broker, TieredDispatcher is called for message distribution. Let's trace the process of a single message entering the storage layer:

(1) The message is sequentially appended to the local CommitLog and the local max offset is updated (the yellow section in the figure). To prevent "read oscillation" caused by multiple replicas during a crash, the minimum position of the majority of replicas is confirmed as the lower limit, which is referred to as the commit offset (2500 in the figure). In other words, the data between the commit offset and the max offset is waiting for multi-replica synchronization.

(2) When the commit offset is equal to or greater than the message offset, the message is uploaded to the cache of the secondary storage CommitLog (the green section in the figure), and the max offset of this queue is updated.

(3) The index of the message is appended to the consumer queue of this queue, and the max offset of the consumer queue is updated.

(4) Once the cache size in the CommitLog file buffer exceeds the threshold or the cache of the message waits for a certain period, the cache will be uploaded to the CommitLog before the index information is submitted. There is an implicit data dependency here that causes the index to be updated later than the original data. This mechanism ensures that all data in the cq index can be found in the CommitLog. In a crash scenario, the CommitLog in the tiered storage may be built again, and there will be no cq pointing to this data. Since the file itself is still managed by the queue model, the entire data segment can be recycled when it reaches its TTL, and there is no "data leakage" in the data stream.

(5) When the index is also uploaded, the commit offset in the tiered storage is updated (the green section is committed).

(6) When the system restarts or crashes, the minimum offsets of multiple dispatchers are selected to redistribute to the max offset to ensure that data is not lost.
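The ordering constraint in steps (2) to (5) can be illustrated with a small sketch. It is a simplification under stated assumptions: `TieredQueueUploader` and its fields are hypothetical names, secondary storage is modeled with in-memory containers, and the `crash_before_index` flag only simulates a crash between the data upload and the index upload.

```python
class TieredQueueUploader:
    """Buffers one queue's messages and uploads data before its index."""

    def __init__(self):
        self.data_buffer = []               # message bodies waiting for upload
        self.index_buffer = []              # (position, size) entries waiting
        self.remote_commitlog = bytearray() # stands in for secondary storage
        self.remote_index = []              # uploaded consume queue entries
        self.commit_offset = 0              # queue offsets below this are durable

    def dispatch(self, message_offset, body, replica_commit_offset):
        """Accept a message only once the majority of replicas confirmed it."""
        if replica_commit_offset < message_offset:
            return False                    # still waiting for replication
        buffered = sum(len(b) for b in self.data_buffer)
        position = len(self.remote_commitlog) + buffered
        self.data_buffer.append(body)
        self.index_buffer.append((position, len(body)))
        return True

    def flush(self, crash_before_index=False):
        # 1. Upload message data first.
        for body in self.data_buffer:
            self.remote_commitlog += body
        self.data_buffer.clear()
        if crash_before_index:
            self.index_buffer.clear()       # in-memory state dies with the process
            return
        # 2. Only then upload the index that points into that data.
        self.remote_index.extend(self.index_buffer)
        self.index_buffer.clear()
        # 3. Finally advance the commit offset.
        self.commit_offset = len(self.remote_index)
```

If the process dies after step 1, the uploaded bytes are orphaned but harmless: no index entry refers to them, and the segment is reclaimed with the rest of the file when its TTL expires.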

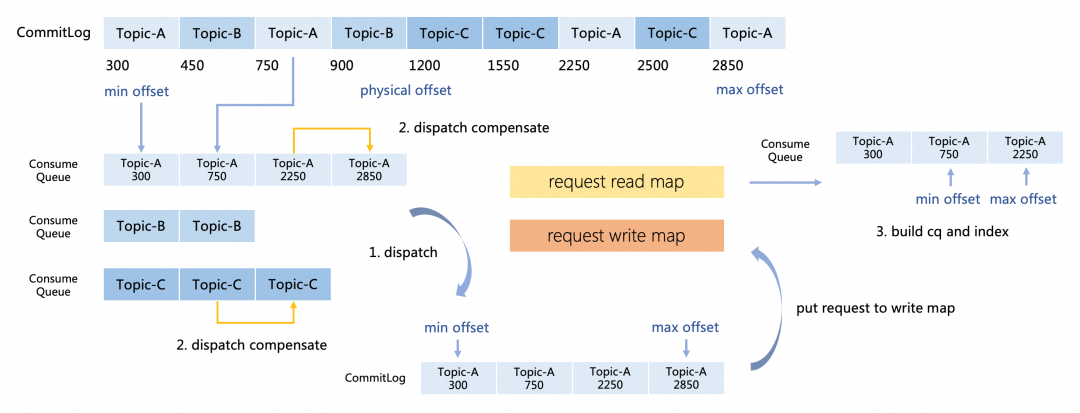

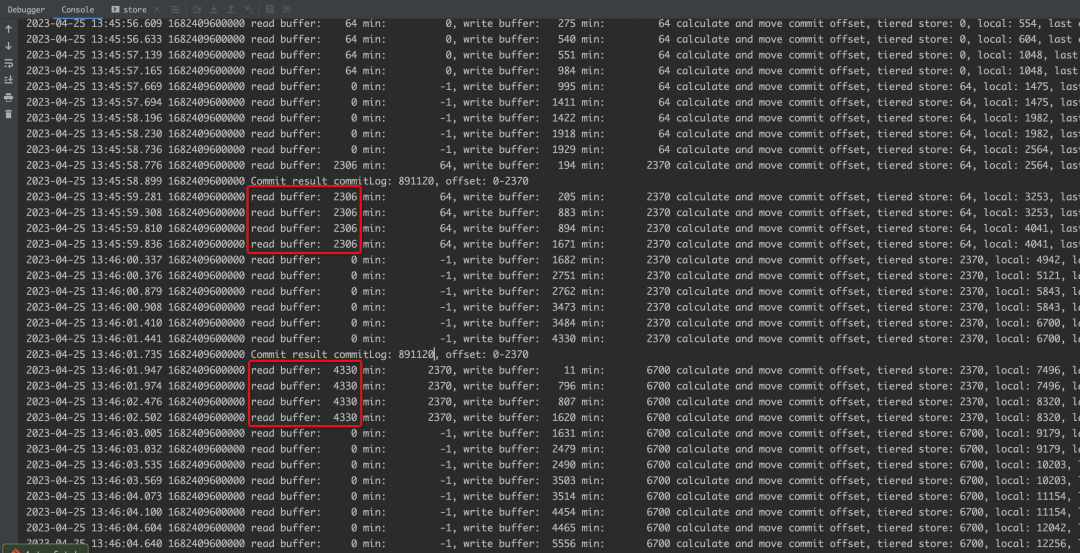

In practice, three groups of threads work together to upload.

(1) The store dispatch thread. Since this thread is responsible for the distribution of local cq, we cannot block it for a long time. Otherwise, it will affect the visibility delay of messages entering the local storage. Therefore, it only attempts to lock the split file for a short time. If the lock is acquired, the thread exits immediately after the message data is put into the buffer of the split CommitLog file, ensuring that the operation is not blocked. If the lock is not acquired, the thread returns immediately.

(2) The store compensation thread. It periodically scans the local cq. When the write load is high, the dispatch thread in step (1) may fail to obtain the lock; in this step, data that was not picked up within a specified period is put into the CommitLog in batches. When the original data is put into the file, the dispatch request is put into the write map.

(3) The cq and index building thread. The write map and the read map form a double-buffered queue. This thread builds cq and uploads the data in the read map. If the read map is empty, the two buffers are swapped. This double-buffered queue reduces mutual exclusion and contention when multiple threads share access.
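A double-buffered queue of the kind described in (3) might look like the following sketch. The class `DoubleBuffer` is a hypothetical name, lists are used instead of maps for brevity, and a single consumer thread is assumed.

```python
import threading


class DoubleBuffer:
    """Producers append to the write side; one consumer drains the read side."""

    def __init__(self):
        self._lock = threading.Lock()
        self._write = []    # filled by dispatch / compensation threads
        self._read = []     # owned by the single cq and index builder thread

    def put(self, request):
        # Producers hold the lock only for one append.
        with self._lock:
            self._write.append(request)

    def drain(self):
        """Return the next batch; swap the buffers if the read side is empty."""
        if not self._read:
            with self._lock:
                # The swap is the only moment the consumer needs the lock.
                self._write, self._read = self._read, self._write
        batch, self._read = self._read, []
        return batch
```

The consumer processes a whole batch without holding the lock, so producers are only ever blocked for the duration of a pointer swap.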

The buffering and batching policies of various storage systems are similar, but online topic write traffic often has hot spots, following the classic 80/20 rule. RocketMQ tiered storage therefore combines two triggers, "the number of data entries reaches the specified value" and "the time reaches the specified period", and flushes as soon as either one is met.

This method is simple and reliable. For topics with large traffic, it makes it easy to achieve the minimum data volume of a batch. For topics with low traffic, it does not occupy too much memory, thus reducing the number of object storage requests. The overhead of this method mainly includes the RESTful protocol request header, signature, and transmission. Admittedly, there is still a lot of room for optimization of the batch logic. For example, in scenarios where the traffic of each topic is relatively average, such as IoT and data shard synchronization, a weighted average algorithm like the sliding window algorithm, or a traffic control policy based on trust values can better balance the latency and the throughput.
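The "count or time, whichever comes first" trigger can be sketched as follows. The class `BatchBuffer` and its parameter names are illustrative assumptions, not RocketMQ configuration keys; the clock is injectable so the behavior can be checked without sleeping.

```python
import time


class BatchBuffer:
    """Flush when enough entries are buffered or the oldest one waited too long."""

    def __init__(self, max_entries, max_wait_seconds, clock=time.monotonic):
        self.max_entries = max_entries
        self.max_wait_seconds = max_wait_seconds
        self.clock = clock
        self.entries = []
        self.first_added_at = None      # arrival time of the oldest entry

    def add(self, entry):
        if not self.entries:
            self.first_added_at = self.clock()
        self.entries.append(entry)

    def should_flush(self):
        if not self.entries:
            return False
        if len(self.entries) >= self.max_entries:
            return True                 # hot topic: the size threshold fires first
        waited = self.clock() - self.first_added_at
        return waited >= self.max_wait_seconds  # quiet topic: the timer fires

    def take(self):
        batch, self.entries = self.entries, []
        return batch
```

A busy topic reaches the entry threshold quickly and uploads full batches, while a quiet topic is bounded by the timer and never holds data in memory for longer than the configured period.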

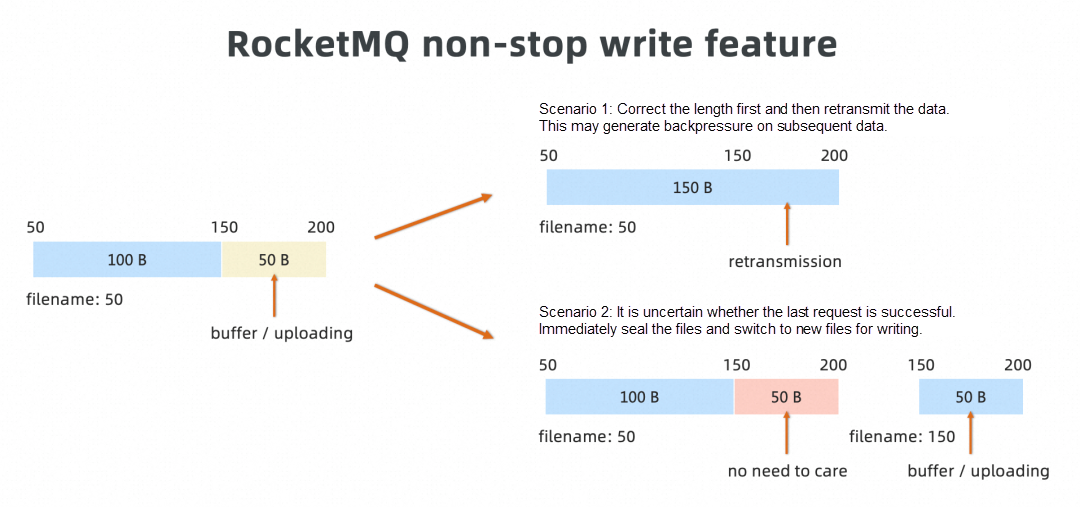

The Non-StopWrite model is part of the consistency model. In production, the disconnection and network issues of back-end distributed storage systems are sometimes inevitable. However, the Append model is a strong sequential model. Referring to the 2-3 asynchronous write of HDFS, we propose a hybrid model based on Append and Put.

For example, the following figure assumes that the commit/confirm offset value is 150, and the max offset value is 200. The output data in the buffer includes the uncommitted data in the range of 150-200 and new data that is continuously written to the range after 200.

Assume that the backend storage system supports atomic writing and that a single upload request contains data in the range of 150-200. If a single upload fails, we need to query the last write offset from the server and handle the error.

• If the returned length is 150, it indicates that the upload fails and the application needs to reupload the buffer.

• If the returned length is 200, it indicates that the previous upload is successful but no successful response is received. The application needs to update the commit offset to 200.

Another solution is to use the Non-StopWrite mechanism to immediately switch a new file, use 150 as the file name, and immediately reupload the data in the range of 150-200. If there is new data, it can also be uploaded with this data immediately. We find that the hybrid model has significant advantages.

• In most cases where no successful response is received, the upload has failed rather than timed out. Switching files immediately saves the extra RPC that would otherwise be needed to check the file size.

• Immediate reupload does not block subsequent new data uploads and is not prone to cause backend data write failure which results in backpressure and consequent front-end write failure.

• It doesn't matter whether the data in the range of 150-200 was written successfully to the first file, because this data will never be read from it. This matters especially for backends that do not support atomic writes at request granularity: if the last write offset returned after a failed request is 180, error handling becomes very complicated.
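The file-switching behavior can be sketched with an in-memory stand-in for the storage backend. Everything here is hypothetical and for illustration only: `FlakyBackend`, `NonStopWriter`, and the `lose_next_ack` flag, which simulates a write that lands on the backend but whose response is lost.

```python
class FlakyBackend:
    """In-memory stand-in for remote storage (hypothetical)."""

    def __init__(self):
        self.files = {}             # file name -> bytearray
        self.lose_next_ack = False  # simulate "written, but no response"

    def append(self, name, data):
        self.files.setdefault(name, bytearray()).extend(data)
        if self.lose_next_ack:
            self.lose_next_ack = False
            raise IOError("no response from backend")


class NonStopWriter:
    def __init__(self, backend, start_offset=0):
        self.backend = backend
        self.file_base = start_offset      # the current file is named by its base
        self.commit_offset = start_offset  # confirmed durable up to here
        self.pending = bytearray()         # data after commit_offset

    def write(self, data):
        self.pending += data

    def flush(self):
        """Upload pending data; on any error roll to a new file at once."""
        if not self.pending:
            return True
        try:
            self.backend.append(str(self.file_base), bytes(self.pending))
        except IOError:
            if self.file_base == self.commit_offset:
                # Nothing in this file was ever confirmed: discard it so the
                # retry does not append after a possibly written fragment.
                self.backend.files.pop(str(self.file_base), None)
            # Outcome unknown. Do not query the backend for the file length;
            # start a new file named after the commit offset instead.
            self.file_base = self.commit_offset
            return False                   # pending data is kept for the retry
        self.commit_offset += len(self.pending)
        self.pending.clear()
        return True
```

In the 150/200 example, a lost response leaves bytes 150-200 at the tail of the first file, but the retry goes to a new file named `150` together with any newly arrived data. A reader that stops each file at the base offset of the next one never sees the stale tail.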

Note: The community version will support this feature soon.

In 2021, I first encountered the terms read fan-out and write fan-out to describe a design solution, which succinctly capture the essence of application performance design. In various business scenarios, we often employ read/write fan-out and format changes to store additional data in a more efficient or cost-effective storage solution. Alternatively, we utilize read fan-out to reduce data redundancy (although reducing indexes can increase average query costs).

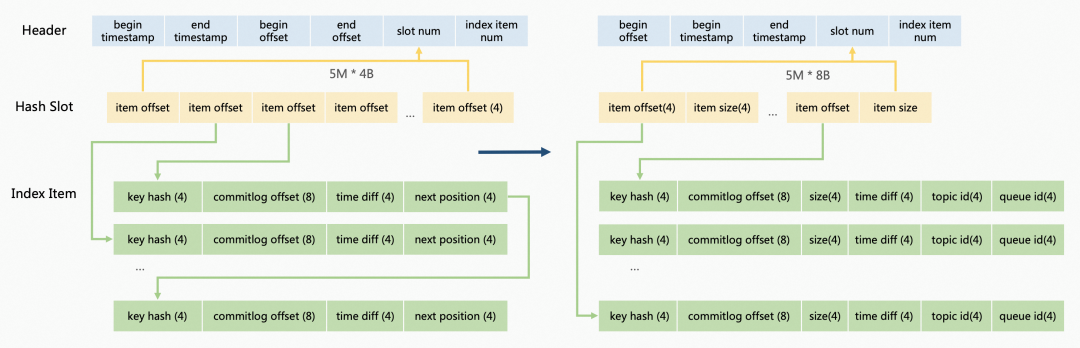

In RocketMQ, a hash-based persistence index file (not append-only) is first constructed in memory, and then the data is asynchronously persisted to disk using mmap. This file enables users to track messages based on information such as the key and message ID.

For each individual message, hash(topic#key) % slot_num is calculated first. The resulting hash slot (highlighted in yellow in the diagram) serves as the pointer into the random index. The object index is attached to the index item, and collisions in a hash slot are resolved by chaining (the "zipper" method). This creates a linked list per slot that is ordered from the newest item to the oldest. It is important to note that a query requires randomly reading multiple linked list nodes.
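A minimal in-memory model of this slot-plus-chain layout is sketched below. The class `ChainedIndex` is a hypothetical name, `zlib.crc32` is used only as a convenient deterministic hash (an assumption, not the hash RocketMQ uses), and list positions stand in for file positions.

```python
import zlib


class ChainedIndex:
    """Hash slots pointing at the newest item; items link back to older ones."""

    def __init__(self, slot_num):
        self.slots = [-1] * slot_num   # slot -> position of the newest item
        self.items = []                # (key_hash, commitlog_offset, prev_pos)

    @staticmethod
    def _hash(topic, key):
        return zlib.crc32(f"{topic}#{key}".encode())

    def put(self, topic, key, commitlog_offset):
        h = self._hash(topic, key)
        slot = h % len(self.slots)
        # The new item remembers the previous head, then becomes the head.
        self.items.append((h, commitlog_offset, self.slots[slot]))
        self.slots[slot] = len(self.items) - 1

    def query(self, topic, key):
        """Return (matching offsets, newest first; number of nodes visited)."""
        h = self._hash(topic, key)
        pos = self.slots[h % len(self.slots)]
        matches, hops = [], 0
        while pos != -1:
            item_hash, offset, prev = self.items[pos]
            hops += 1                  # each hop is a random read on disk
            if item_hash == h:
                matches.append(offset)
            pos = prev
        return matches, hops
```

The `hops` counter makes the cost visible: every node on the chain is a separate random read, which is cheap on a local SSD but expensive on cold storage.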

IOPS on cold storage are very expensive, so we want the design to be optimized for queries. The new file structure resembles an LSM tree that has no GC and undergoes only one compaction. The following adjustments are made to the data structure.

(1) Wait for a local index file to be full and avoid modifications. Asynchronously compact on the storage medium with high IOPS, and delete the original file after compaction.

(2) Query latency from cold storage is high, but as long as the amount of data returned by a single query is not too large, the latency is not significantly affected. The data structure is therefore optimized during compaction so that multiple random point queries are replaced with one read of a contiguous piece of data.

(3) The List<IndexItem> pointed to by the hash slot is continuous. During query, all records with the same hashcode can be retrieved at a time according to the item offset and item size in the hash slot and filtered in the memory.
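The effect of this compaction can be sketched as a regrouping step. The functions `compact` and `query` and the tuple layouts are illustrative assumptions; list positions stand in for byte offsets in the compacted file.

```python
def compact(slot_num, items):
    """Regroup index items so that each slot's items are contiguous.

    items: list of (key_hash, commitlog_offset) in arrival order.
    Returns (slots, packed), where slots[slot] = (item_offset, item_size).
    """
    buckets = {}
    for key_hash, offset in items:
        buckets.setdefault(key_hash % slot_num, []).append((key_hash, offset))
    slots = [(0, 0)] * slot_num        # empty slots point at a zero-length run
    packed = []
    for slot in sorted(buckets):
        slots[slot] = (len(packed), len(buckets[slot]))
        packed.extend(buckets[slot])
    return slots, packed


def query(slots, packed, key_hash):
    """One contiguous read per query, then filter in memory."""
    start, size = slots[key_hash % len(slots)]
    run = packed[start:start + size]
    return [offset for h, offset in run if h == key_hash]
```

After compaction a lookup costs one slot read plus one range read, regardless of how many items collided in the slot.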

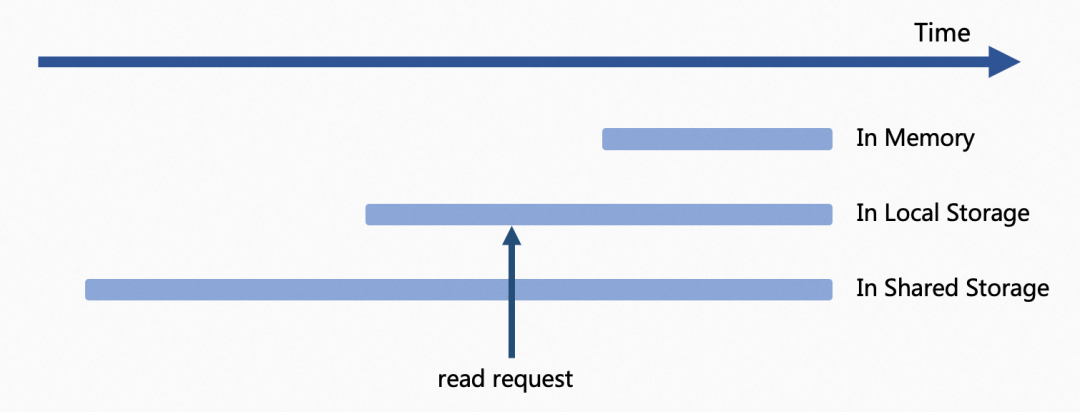

Reading is the reverse process of writing, and there is much to be considered and weighed in engineering about where to retrieve the desired data first. As shown in the figure, recent data is cached in memory, early data in memory and secondary storage, and earlier data only in secondary storage. As memory has a faster speed than storage medium, when the accessed data exists in memory, it is directly written to the client through the network. If the accessed data exists both in the local disk and secondary storage, as is shown in the figure, then which layer the request falls to should be weighed according to the characteristics of the primary and secondary storage.

There are two typical ideas:

(1) Data storage is regarded as a multi-level cache: The higher-level media reads and writes at a higher speed. Requests are preferentially queried to the upper-level storage. If the data does not exist in the memory, the local disk is queried. If it does not exist in the local disk, the secondary storage is queried.

(2) Secondary storage is read preferentially: The data read from the secondary storage is continuous because the data is compacted when it is moved to cold storage. In this case, the IOPS of the more valuable primary storage are reserved for online businesses.

The tiered storage of RocketMQ abstracts this selection into a read policy. The queue offset in the request is used to determine which interval the data is in, and the selection is based on the specific policy.

• DISABLE: Reading messages from multi-tiered storage is disabled. This may be because the data source does not support it.

• NOT_IN_DISK: Messages that are not stored in the primary storage are read from the secondary storage.

• NOT_IN_MEM: Messages that are not stored in memory (cold data) are read from multi-tiered storage.

• FORCE: All messages must be read from multi-tiered storage. Currently, this feature is only used for testing.
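The four policies can be summarized as a small decision function. This is a simplified sketch: the enum mirrors the policy names above, but the function `read_from_tiered` and its boolean inputs are hypothetical, and a real implementation would derive them from the queue offset in the request.

```python
from enum import Enum


class ReadPolicy(Enum):
    DISABLE = "DISABLE"
    NOT_IN_DISK = "NOT_IN_DISK"
    NOT_IN_MEM = "NOT_IN_MEM"
    FORCE = "FORCE"


def read_from_tiered(policy, in_memory, on_local_disk, in_tiered):
    """Decide whether a request should be served from secondary storage."""
    if policy is ReadPolicy.DISABLE or not in_tiered:
        return False                 # never read what is not uploaded yet
    if policy is ReadPolicy.FORCE:
        return True                  # testing only, per the description above
    if policy is ReadPolicy.NOT_IN_DISK:
        return not on_local_disk     # local storage first, tiered as fallback
    # NOT_IN_MEM: anything that is no longer hot goes to secondary storage,
    # which keeps local disk IOPS free for online traffic.
    return not in_memory
```

NOT_IN_DISK corresponds to the multi-level cache idea, while NOT_IN_MEM corresponds to reserving primary storage IOPS for hot data.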

TieredMessageFetcher is a specific implementation of RocketMQ tiered storage to retrieve data.

To accelerate the speed of reading from secondary storage and reduce the overall number of requests to secondary storage, RocketMQ adopts the design of the prereading cache.

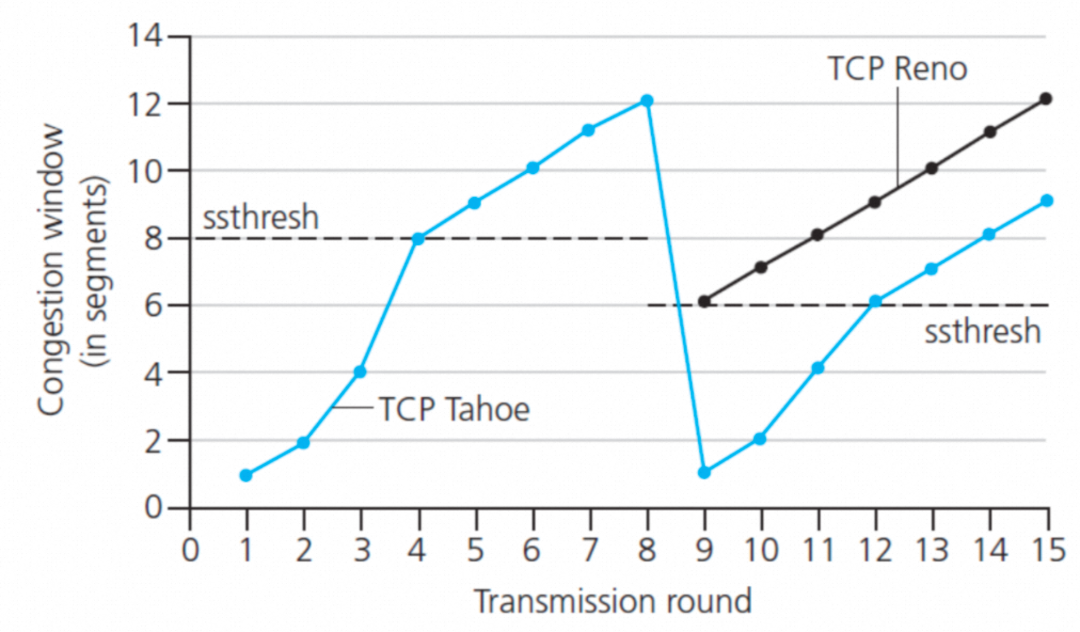

When TieredMessageFetcher reads messages, more messages are read ahead. The design of the prereading cache drew on the TCP Tahoe congestion control algorithm. The number of messages read ahead each time is controlled by the additive-increase and multiplicative-decrease mechanism, similar to the congestion window:

• Additive-increase: Starting from the smallest window, the number of messages increased each time is equal to the batchSize on the client.

• Multiplicative-decrease: If the cached messages are not all pulled within the cache expiration time, which is often caused by message accumulation on the server because the client cache is full, the number of messages preread next time is halved when the cache is cleared.

• In addition, when the consumption speed on the client is fast, the number of messages read from the secondary storage is large, and a segmentation policy is used to concurrently retrieve data.
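The window adjustment can be sketched as follows. The class `ReadAheadWindow`, the `max_size` cap, and the use of the client batch size as the floor are assumptions made for illustration; the source only states the increase and halving rules.

```python
class ReadAheadWindow:
    """Additive-increase, multiplicative-decrease sizing for prereads."""

    def __init__(self, batch_size, max_size):
        self.batch_size = batch_size   # client pull batch size
        self.max_size = max_size       # assumed upper bound on the window
        self.size = batch_size         # start from the smallest window

    def on_cache_consumed(self):
        """The client pulled everything that was cached: grow by one batch."""
        self.size = min(self.size + self.batch_size, self.max_size)
        return self.size

    def on_cache_expired(self):
        """Cached messages expired unread: halve the next preread."""
        self.size = max(self.size // 2, self.batch_size)
        return self.size
```

A consumer that keeps up sees the window grow linearly, so fewer and larger requests reach secondary storage, while a stalled consumer quickly stops wasting bandwidth and cache memory.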

In addition to normal messages, RocketMQ allows you to set long-term scheduled messages in the next tens of days, but this data occupies the storage space of hot data.

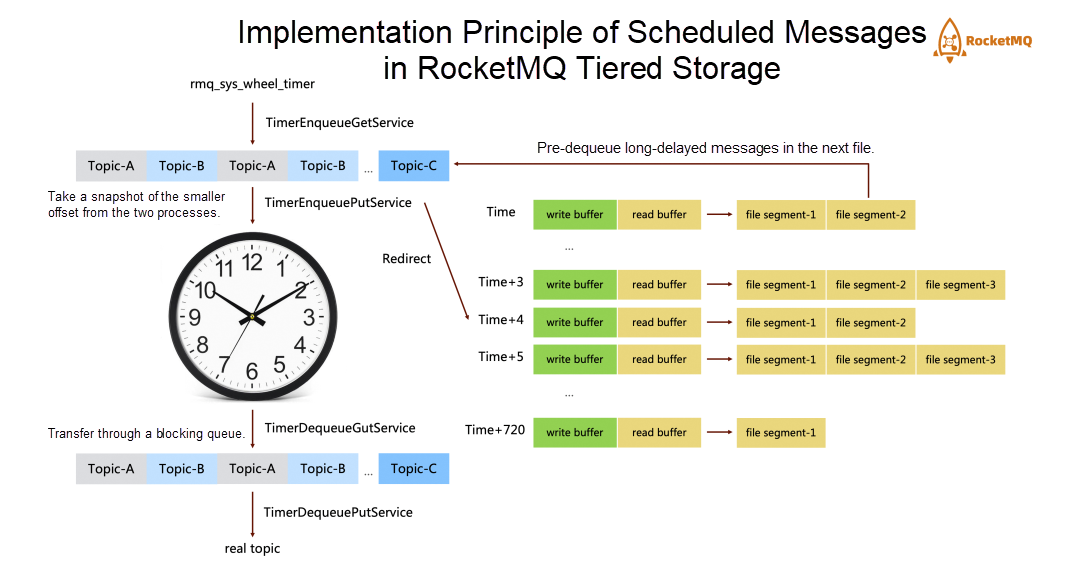

RocketMQ implements a time wheel based on the local file system. The overall design is shown in the left part of the following figure. All scheduled messages on a single node are first written to the system topic rmq_sys_wheel_timer, and then put to the time wheel. After the messages are dequeued, the topic of these messages is restored to the business topic. Reading data from the disk and putting the message index into the time wheel involve I/O and computing. To reduce lock contention in these two phases, Enqueue is introduced as a waiting queue for transit. EnqueueGet and EnqueuePut are responsible for writing and reading data respectively. It is a simple and reliable design.

We can find that all messages will enter the time wheel, which is also the root cause of the occupied storage space.

• Message writing: Scheduled messages in RocketMQ's tiered storage are distributed for EnqueuePut. Messages whose scheduled time is a few hours later than the current time are written to the TimerFlatFile based on tiered storage. We maintain a ConcurrentSkipListMap named timerFlatFileTable that maps a timestamp to a TimerFlatFile. A TimerFlatFile is set for each hour. Messages whose scheduled time is between T + n and T + n + 1 are first mixed and appended to the corresponding file of T + n.

• Message reading: Messages whose scheduled time is one hour later than the current time are dequeued ahead and re-enter the system topic of the local TimerStore. Their scheduled time is within a short period in the future, so they will not enter the time wheel.
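The hourly bucketing can be sketched as follows. This is an illustration under assumptions: `HourlyTimerFiles` is a hypothetical class, a plain dict with sorted keys replaces the skip list map, lists stand in for TimerFlatFile and the local TimerStore, and the three-hour value for "a few hours" is invented for the example.

```python
HOUR_MS = 3600 * 1000


class HourlyTimerFiles:
    def __init__(self, far_hours=3):
        self.far_ms = far_hours * HOUR_MS  # assumed meaning of "a few hours"
        self.files = {}     # bucket start (ms) -> messages, one file per hour
        self.local = []     # stands in for the local TimerStore

    def enqueue(self, now_ms, deliver_ms, message):
        """Route a scheduled message to local storage or to an hourly file."""
        if deliver_ms - now_ms < self.far_ms:
            self.local.append((deliver_ms, message))
            return "local"
        bucket = deliver_ms - deliver_ms % HOUR_MS   # T + n for [T+n, T+n+1)
        self.files.setdefault(bucket, []).append((deliver_ms, message))
        return "tiered"

    def dequeue_due(self, now_ms):
        """Move files whose hour starts within the next hour back to local."""
        moved = 0
        for bucket in sorted(self.files):    # sorted() copies the keys
            if bucket > now_ms + HOUR_MS:
                break
            batch = self.files.pop(bucket)
            self.local.extend(batch)
            moved += len(batch)
        return moved
```

Messages inside one file are mixed rather than sorted, which is acceptable because the whole file is loaded back ahead of time and the local timer handles exact delivery.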

There are some considerations in terms of engineering in this design:

• There are many keys in the timerFlatFileTable, so will data in tiered storage be fragmented? The underlying layer of a distributed file system generally uses an LSM-like structure, but RocketMQ only focuses on the LBA structure. It optimizes the buffer of Enqueue to write data in tiered storage in batches.

• A reliable offset is important. From the time wheel to timerFlatFileTable, Enqueue can share one commit offset. For a single message, as long as it enters the time wheel or is uploaded, we consider it persisted. Updating data to the secondary storage requires batch buffering, so the update of the commit offset is delayed. However, the buffering time is controllable.

• We find that dumping local storage to secondary storage is occasionally slow. A double-buffered queue is therefore used to implement read/write splitting (as shown in green in the figure): the message is put into the write cache, then transferred to the read cache queue, and finally enters the upload process.

Compression is a well-known method to optimize data exchange in terms of time and space. It aims to reduce disk usage or network I/O transmission with minimal CPU overhead. In RocketMQ's hot storage, compression is applied to store individual messages larger than 4 KB. For cold storage, compression and archiving can be implemented at two levels:

• At the message queue business level, where the body size is typically similar across most business topics, compression can reduce the size to several times or even tens of times smaller.

• At the underlying storage level, data can be divided into multiple data blocks using EC erasure codes. Redundant blocks are generated based on specific algorithms. In the event of data loss, the remaining data blocks and redundant blocks can be used to recover the lost data blocks, ensuring data integrity and reliability. By using a typical EC algorithm, the storage space usage can be reduced to 1.375 replicas.
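The 1.375 figure follows from simple arithmetic: with k data blocks and m redundant blocks, the space used is (k + m) / k times the original data. The source does not state which layout is used; the 8 + 3 layout below is only one combination that happens to yield this value.

```python
from fractions import Fraction


def storage_overhead(data_blocks, parity_blocks):
    """Space used relative to the raw data for a (k data + m parity) layout."""
    return Fraction(data_blocks + parity_blocks, data_blocks)


# Assumed layout: 8 data blocks + 3 redundant blocks -> 11/8 = 1.375
ec = storage_overhead(8, 3)
replication = Fraction(3, 1)     # classic three-replica storage
saving = 1 - ec / replication    # fraction of space saved versus 3 replicas
```

Under this assumption the layout tolerates the loss of any three blocks while using less than half the space of three full replicas.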

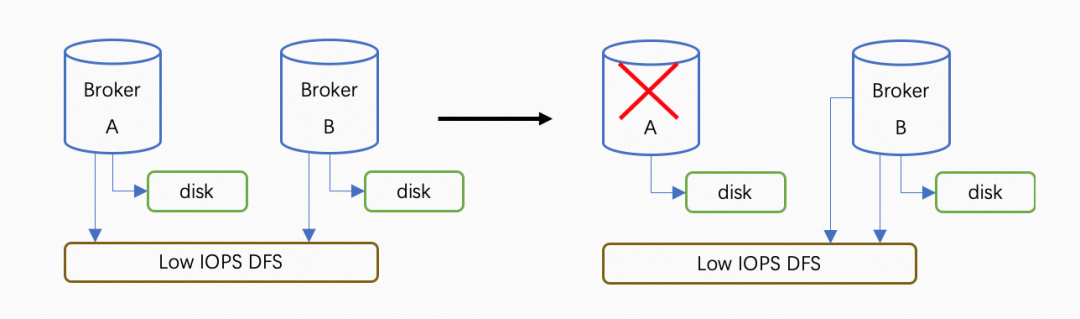

There are different interpretations of Serverless in the industry. In the past, RocketMQ nodes did not share storage, resulting in fast scale-out but slow scale-in. For example, if Machine A needs to go offline, you would have to wait for normal messages to be consumed and all scheduled messages to be sent out before performing any operations. However, with the tiered storage design, cross-node agents can read data from offline nodes through shared disks. As shown in the right diagram, Node B can read the data from Node A, effectively releasing the computing and primary storage resources of Node A.

The main process of scaling in is as follows:

RocketMQ storage has encountered interesting scenarios and challenges in the evolution of the cloud-native era. This complex project requires comprehensive optimization. To prioritize portability and generality, we have not effectively adopted novel technologies such as DPDK, SPDK, and RDMA. However, we have successfully addressed many engineering challenges and built the entire tiered storage framework. In future development, we will introduce more storage backend implementations to further optimize details such as latency and throughput.

