By Xiaojian Sun (Apache RocketMQ Committer)

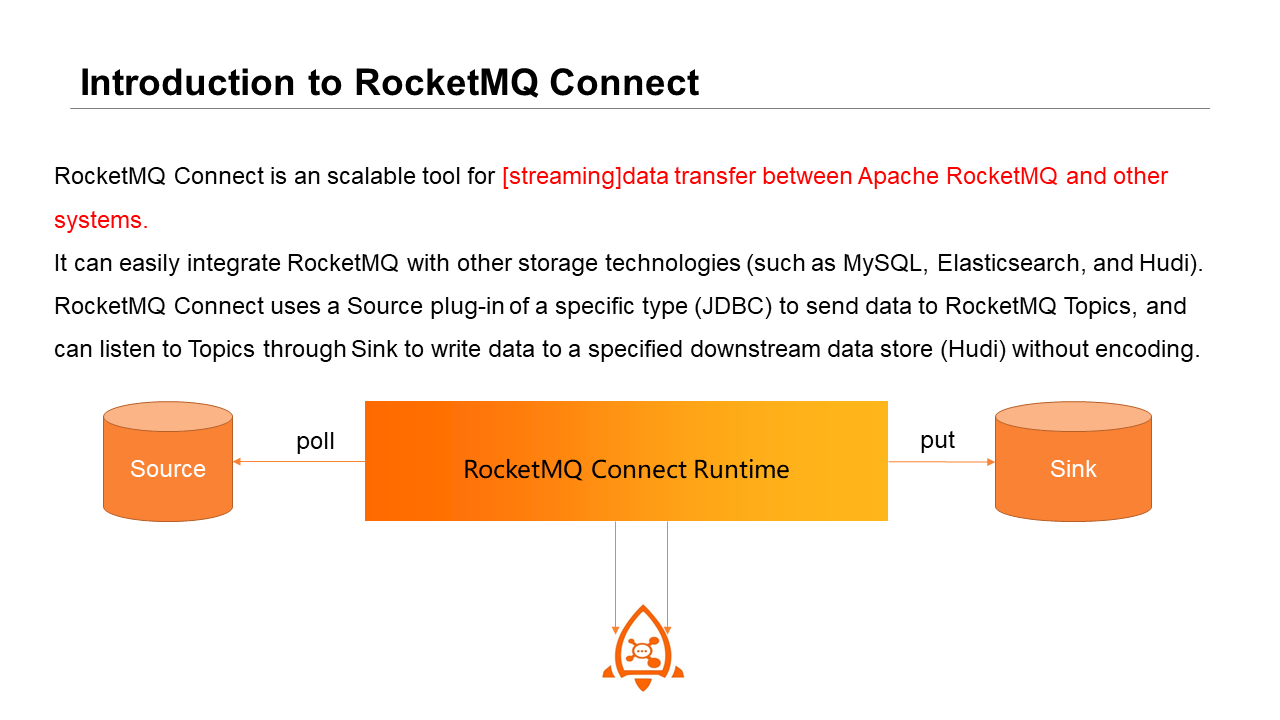

RocketMQ Connect is a scalable tool for streaming data between RocketMQ and other systems, making it easy to integrate RocketMQ with other storage technologies. It uses Source plug-ins to write data into RocketMQ Topics and Sink plug-ins that listen to Topics and write the data to a specified downstream data store. Connectors are configured in JSON, so no coding is required. Data streams from Source to Sink, with RocketMQ acting as the bridge.

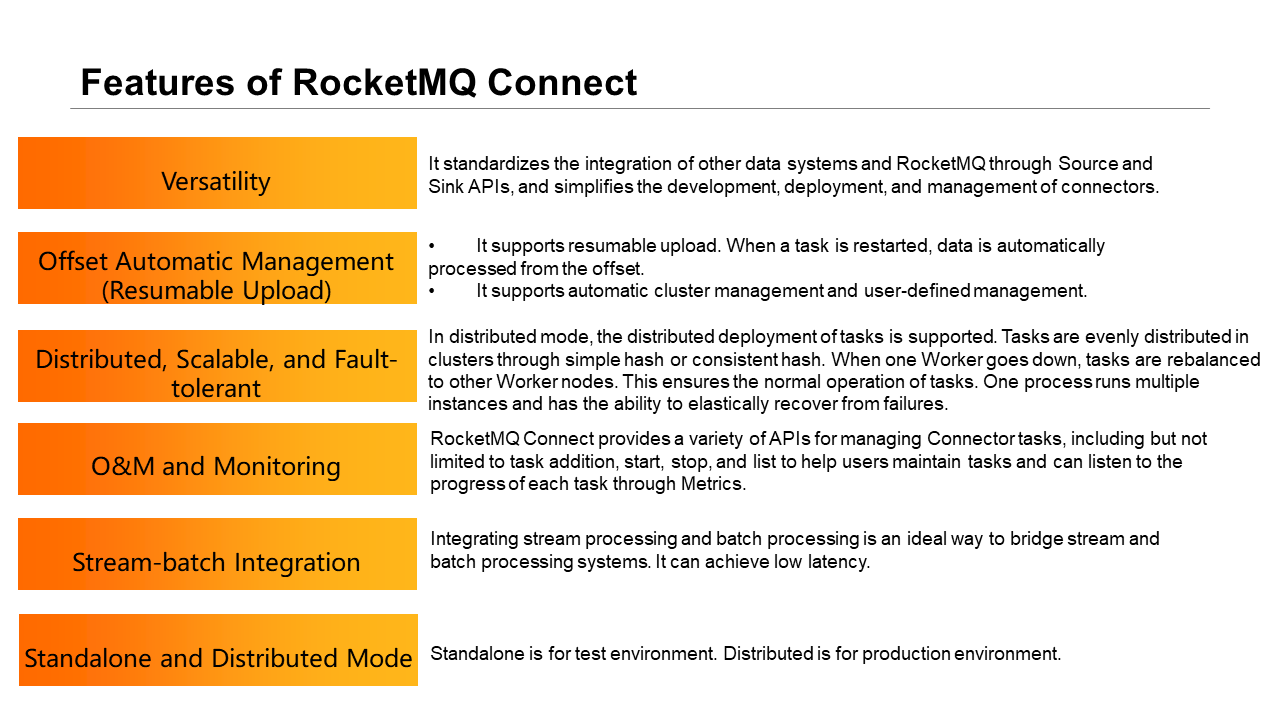

RocketMQ Connect has the following features:

① Generality: Connect defines standard APIs for Connectors, Tasks, Converters, and Transforms. Developers can build their own plug-ins against these APIs to meet their requirements.

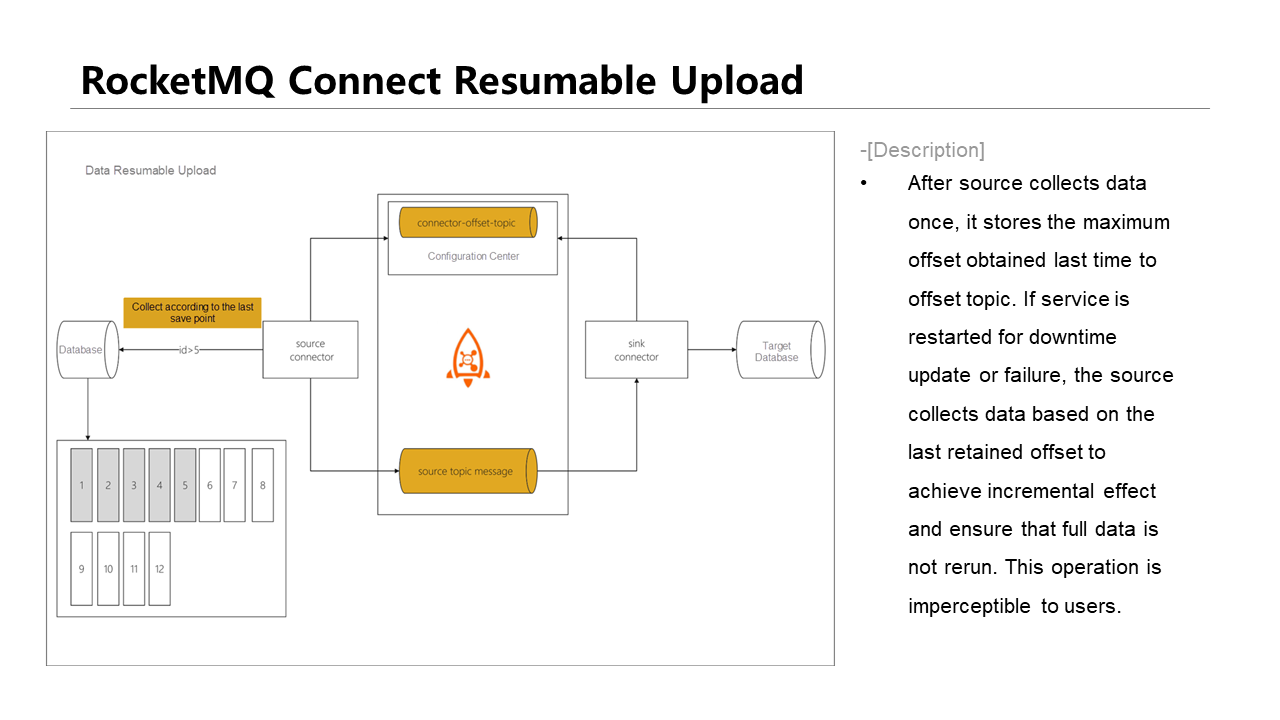

② Automatic Offset Management (Resumable Transfer): On the Source side, developers can use Offsets to pull incremental data. The system manages Offsets automatically and persists the Offset of the last pull, so when a task restarts it resumes pulling incremental data from the last committed Offset instead of synchronizing from the beginning. On the Sink side, Offsets are committed automatically while tasks run, based on RocketMQ's Offset commit strategy, and users can configure the commit interval. If the source system's own Offsets already meet your requirements, no additional Offset maintenance is needed. If they do not, the Task APIs let you maintain Offsets yourself and decide where they are persisted (for example, in MySQL or Redis). When the task restarts, it reads the next Offset from that storage and continues pulling incrementally.

③ Distributed, Scalable, and Fault-Tolerant: RocketMQ Connect can be deployed in a distributed manner and is fault-tolerant. When a Worker goes down or joins a cluster, tasks are automatically redistributed across the cluster's Workers to balance the load. Failed tasks are retried automatically and can be rebalanced to different Worker machines after the retry.

④ O&M and Monitoring: Connect provides standard cluster management functions, including connector management and plug-in management. You can call API operations to start or stop a task and to view a task's running status and exception status. In addition, as data is pulled and written, metrics such as total data volume and throughput are reported through Metrics. Metrics also provides standard reporting APIs that you can use to add custom metrics and reporting destinations (such as RocketMQ Topics and Prometheus).
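The idea of pluggable metric reporters can be sketched as one interface with interchangeable backends. This is a Python illustration of the concept only, not the RocketMQ Connect Metrics API: `MetricsReporter`, `TopicReporter`, `PrometheusTextReporter`, `record_batch`, and the metric names are hypothetical. The Prometheus backend emits lines in the Prometheus text exposition format (`name{label="value"} number`); the Topic backend simply appends to a list standing in for a RocketMQ Topic.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class MetricsReporter(ABC):
    """Hypothetical pluggable reporter interface (not the real Connect API)."""

    @abstractmethod
    def report(self, name: str, value: float, labels: Dict[str, str]) -> None: ...


class TopicReporter(MetricsReporter):
    def __init__(self) -> None:
        self.topic: List[dict] = []  # stand-in for a RocketMQ metrics Topic

    def report(self, name: str, value: float, labels: Dict[str, str]) -> None:
        self.topic.append({"name": name, "value": value, **labels})


class PrometheusTextReporter(MetricsReporter):
    def __init__(self) -> None:
        self.lines: List[str] = []

    def report(self, name: str, value: float, labels: Dict[str, str]) -> None:
        # Prometheus text exposition format: name{k="v",...} value
        lbl = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
        self.lines.append(f"{name}{{{lbl}}} {value}")


def record_batch(reporters: List[MetricsReporter], task: str,
                 count: int, seconds: float) -> None:
    # The same measurements fan out to every configured backend.
    for r in reporters:
        r.report("connect_records_total", count, {"task": task})
        r.report("connect_records_per_second", count / seconds, {"task": task})


topic_r, prom_r = TopicReporter(), PrometheusTextReporter()
record_batch([topic_r, prom_r], "mysql-source-0", 500, 2.0)
print("\n".join(prom_r.lines))
```

Adding a new destination means writing one more `MetricsReporter` subclass; the code that measures throughput does not change.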

⑤ Integration of Batch and Stream: Source can pull data in batches through JDBC or a plug-in's SDK and turn those batches into a stream. It can also use CDC mode to capture full and incremental change data, through incremental snapshots or MySQL Binlog listening, and push it to RocketMQ. Downstream, Flink or RocketMQ Streams can perform stateful stream computing on the data, or the data can be written directly to storage engines (such as Hudi, Elasticsearch, and MySQL).

⑥ Standalone and Distributed Modes: Standalone mode is mainly for testing, and Distributed mode is for production. For a trial, you can deploy in Standalone mode. Because Standalone mode does not persist Config, each start brings up its own independent tasks, which helps with debugging.

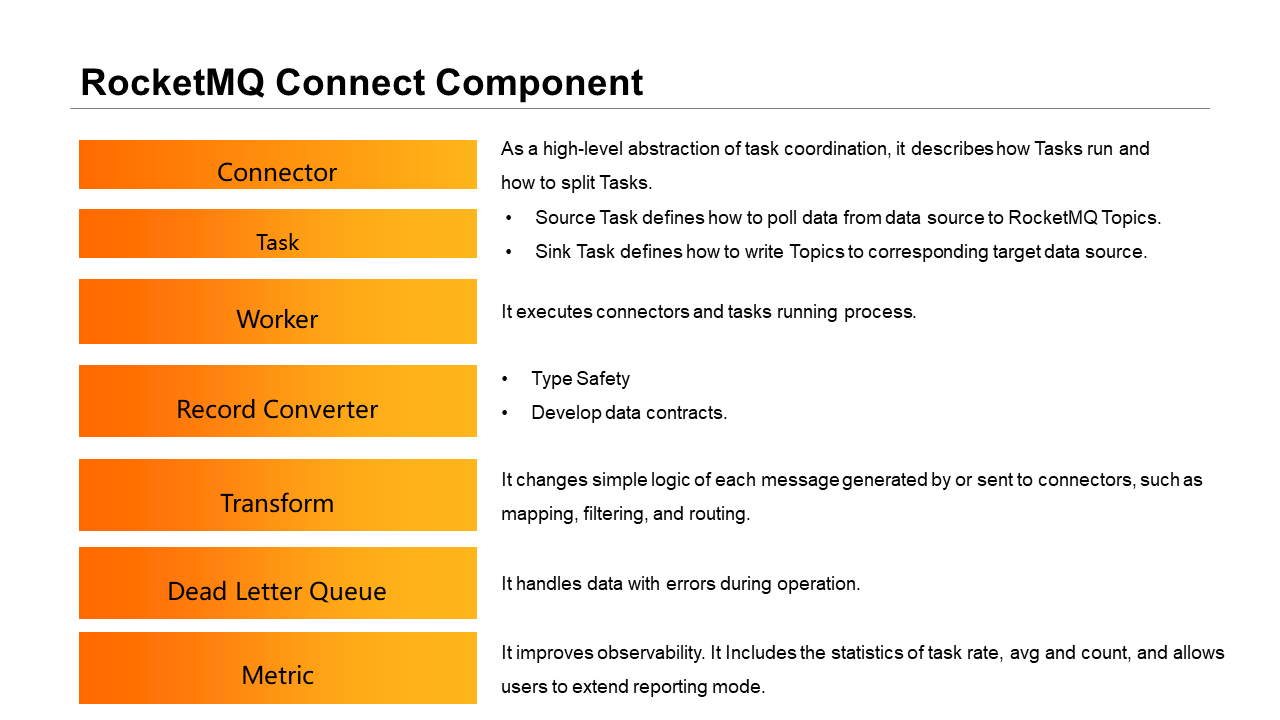

Connect contains the following components:

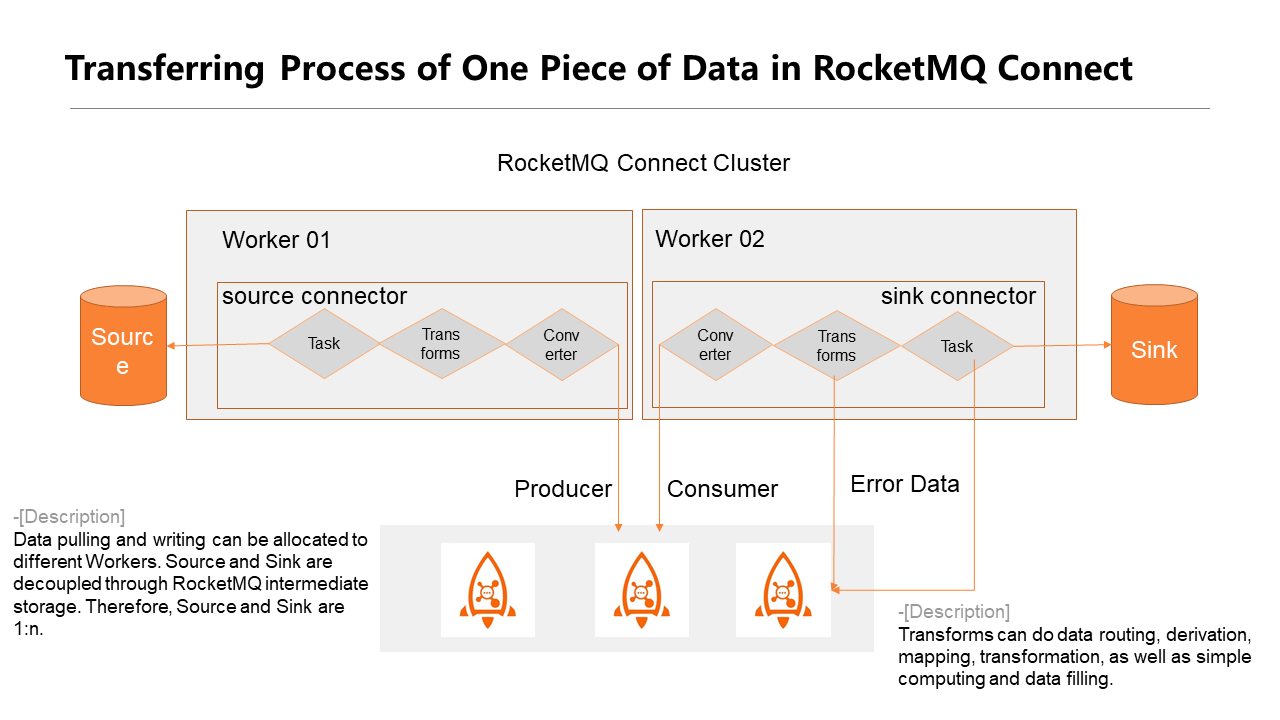

The preceding figure shows the data stream transfer process in Connect.

In a distributed deployment, Source and Sink can run on different Workers and do not depend on each other. A Connector consists of Tasks, Transforms, and Converters, which execute in sequence. A Task pulls data from the source, and Task concurrency is determined by how the custom plug-in shards the data. After data is pulled, if Transforms are configured, the data passes through them one by one in the configured order and is then handed to the Converter, which serializes it into a transferable format. If RocketMQ Schema Registry is used, the Schema is verified, registered, or upgraded at this step. The converted data is then written to intermediate Topics for downstream Sinks to consume. A Sink can listen to one or more Topics, and the data in those Topics can come from the same storage engine or from heterogeneous ones. After the Sink converts the data back, it is delivered to a stream computing engine or written directly to the destination storage.
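The Source-to-Sink flow above can be sketched as a chain of plain functions. This is a language-neutral Python illustration, not the RocketMQ Connect Java API: `pull`, `upper_name`, `add_source`, `to_bytes`, `from_bytes`, and the in-memory `topic` list are hypothetical stand-ins, and JSON is assumed as the Converter format.

```python
import json
from typing import Callable, Dict, List

Record = Dict[str, object]
Transform = Callable[[Record], Record]


def pull() -> List[Record]:
    # Hypothetical Source Task: returns one batch of rows from the source system.
    return [{"id": 1, "name": "alice"}, {"id": 2, "name": "bob"}]


def upper_name(rec: Record) -> Record:
    # Example Transform: field derivation.
    return {**rec, "name": str(rec["name"]).upper()}


def add_source(rec: Record) -> Record:
    # Example Transform: field completion.
    return {**rec, "source": "mysql"}


def to_bytes(rec: Record) -> bytes:
    # Source-side Converter: serialize to a transferable format (JSON assumed).
    return json.dumps(rec, sort_keys=True).encode("utf-8")


def from_bytes(payload: bytes) -> Record:
    # Sink-side Converter: must understand the format the Source side produced.
    return json.loads(payload.decode("utf-8"))


def run_source(transforms: List[Transform], topic: List[bytes]) -> None:
    for rec in pull():
        for t in transforms:  # Transforms run serially, in configured order
            rec = t(rec)
        topic.append(to_bytes(rec))  # write to the intermediate Topic


def run_sink(topic: List[bytes], store: List[Record]) -> None:
    for payload in topic:
        store.append(from_bytes(payload))  # convert back, then write downstream


topic: List[bytes] = []
store: List[Record] = []
run_source([upper_name, add_source], topic)
run_sink(topic, store)
print(store)
```

Because `run_source` and `run_sink` only share the `topic` list, either side could be scaled or replaced without touching the other, which mirrors the decoupling described in the text.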

During conversion, the Source Converter and the Sink Converter must match. Different Converters parse Schemas in different formats, so if mismatched Converters are used, the Sink cannot parse the data. Custom Transforms can be used to make components compatible with each other.

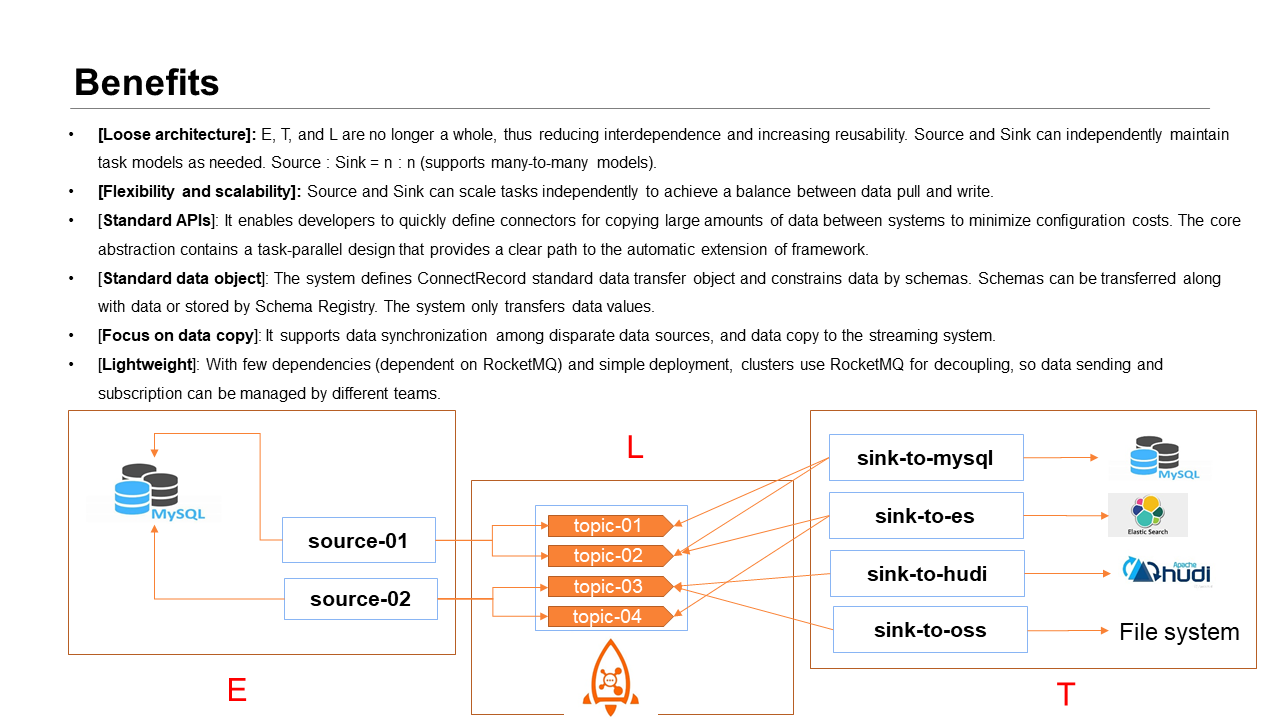

The preceding architecture has the following advantages:

① Loosely Coupled Architecture: Source and Sink are decoupled through Topics, so Extract, Transform, and Load are no longer bundled together. Read and write QPS usually differ, even on the same storage engine, so in an integrated ETL pipeline the read rate is limited by the write performance of the target database.

Because Source and Sink are decoupled in RocketMQ Connect, they can be scaled independently, balancing reads and writes dynamically without affecting each other.

② Standard APIs: They lower the barrier to use and are easy to extend. Concurrency is abstracted in the APIs, so plug-in developers can define how work is split into Tasks.

③ Standardized Data Abstraction: Once Topics decouple Source and Sink, a data contract between them is needed. Connect mainly uses Schemas to constrain data, with the aim of supporting data integration among heterogeneous data sources.

④ Focus on Data Copy: Connect focuses on data integration across heterogeneous data sources and does not perform stream computing itself. Instead, it copies data to stream processing systems (Flink and RocketMQ Streams) that do the computing.

⑤ Lightweight: Few dependencies. If you already run a RocketMQ cluster, you can deploy RocketMQ Connect directly to synchronize data. Deployment is simple and needs no additional scheduling components, because RocketMQ Connect comes with its own task allocation component.

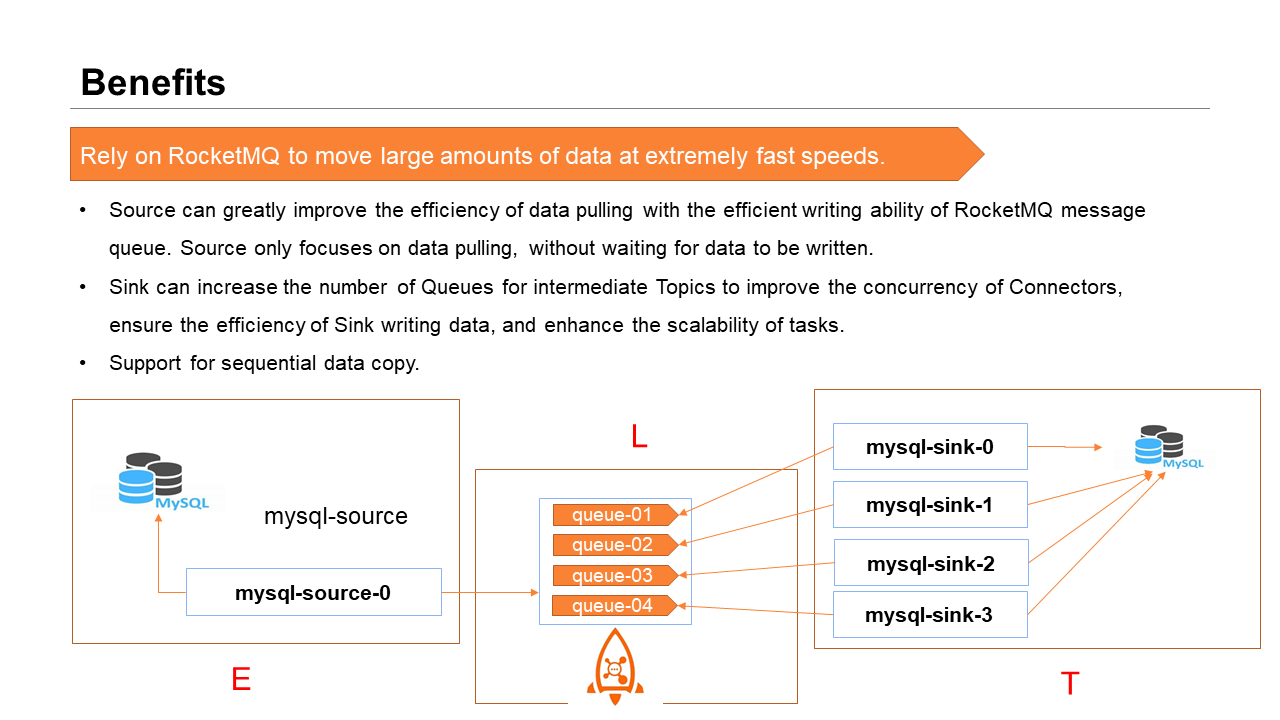

In addition, thanks to RocketMQ's performance, you can migrate large volumes of data between systems. Because the Source only depends on RocketMQ's write capability, it does not have to wait for the data to be written at the end of a transaction. Because Topics are scalable, the concurrency of downstream Sinks can follow the number of queues (partitions) in the intermediate Topics, and Sinks can be expanded automatically. After tasks are expanded, the system reallocates Connectors to keep the load balanced. Offsets are not lost, and tasks continue from their last running state without manual intervention. You can also rely on RocketMQ's ordering policy to synchronize data in order.
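A rough Python sketch of how Sink concurrency can follow the queue count of the intermediate Topic: since a queue is consumed by at most one task at a time, the useful task count is capped at the number of queues, and queues are spread round-robin. The function name `assign_queues` and the round-robin policy are assumptions for illustration, not RocketMQ Connect's actual allocator.

```python
from typing import Dict, List


def assign_queues(queue_count: int, max_tasks: int) -> Dict[int, List[int]]:
    """Map Sink task index -> queue ids it consumes (hypothetical policy)."""
    # More tasks than queues would leave some tasks idle, so cap the task count.
    task_count = max(1, min(max_tasks, queue_count))
    assignment: Dict[int, List[int]] = {t: [] for t in range(task_count)}
    for q in range(queue_count):
        assignment[q % task_count].append(q)  # round-robin spread
    return assignment


# 8 queues and up to 3 tasks: the queues are split 3/3/2.
print(assign_queues(8, 3))
# Expanding the Topic to 16 queues allows up to 16 Sink tasks in parallel.
print(len(assign_queues(16, 32)))
```

Adding queues to the intermediate Topic is what raises the ceiling; adding tasks beyond the queue count would not increase Sink throughput under this model.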

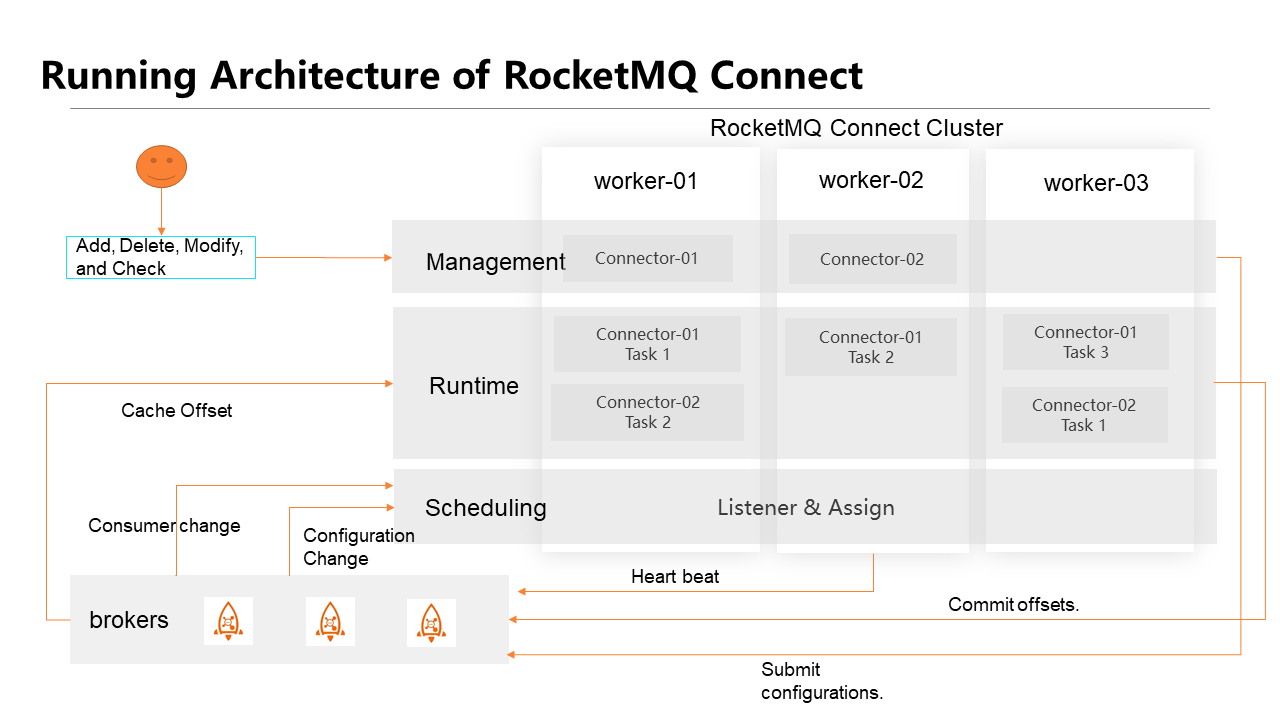

Management Zone: It handles configuration changes and queries. You can create, delete, update, start, stop, and view Connectors. When a task changes, the management side publishes the change to a shared RocketMQ Topic. Every Worker listens to the same Topic, so each Worker receives the config change and triggers a cluster rebalance that reassigns tasks to keep the load balanced across the cluster.

Runtime Zone: It provides the execution environment for the Tasks assigned to the current Worker, covering task initialization, data pulling, Offset maintenance, Task start/stop status reporting, and Metrics reporting.

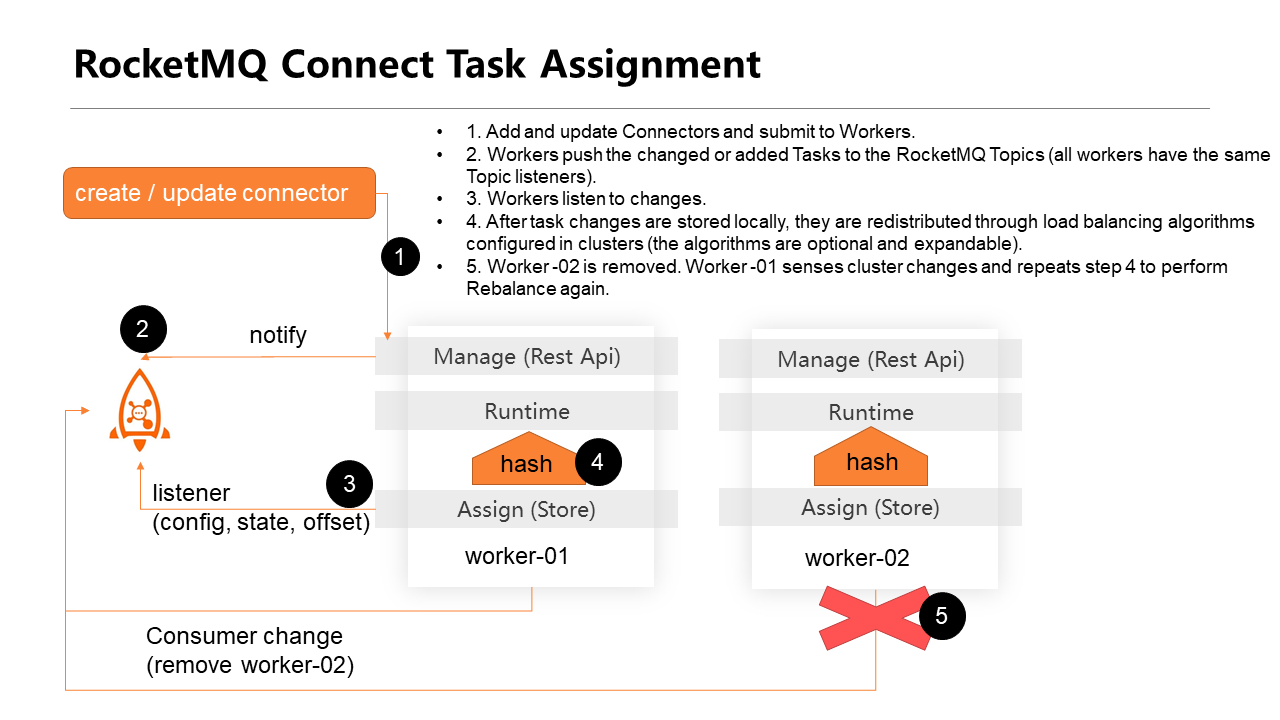

Scheduling Zone: Connect provides a task allocation and scheduling tool that uses hash or consistent hash to balance tasks among Workers. It listens for changes to Workers and Connectors, such as Workers joining or leaving, Connector configuration changes, and tasks starting or stopping. These status changes are used to update the status of local tasks and to decide whether another round of Rebalance is needed to balance load across the cluster.

The management zone, runtime zone, and scheduling zone exist in every Worker of a cluster. Workers in a cluster communicate mainly through shared Topics. There is no master/slave relationship between Workers, which makes cluster operation and maintenance convenient: you only need to create the corresponding shared Topics in the Brokers. However, because a Task status change happens on only one Worker, propagating it to the rest of the cluster takes a short time. As a result, querying Connector status through the REST APIs may temporarily return inconsistent results.

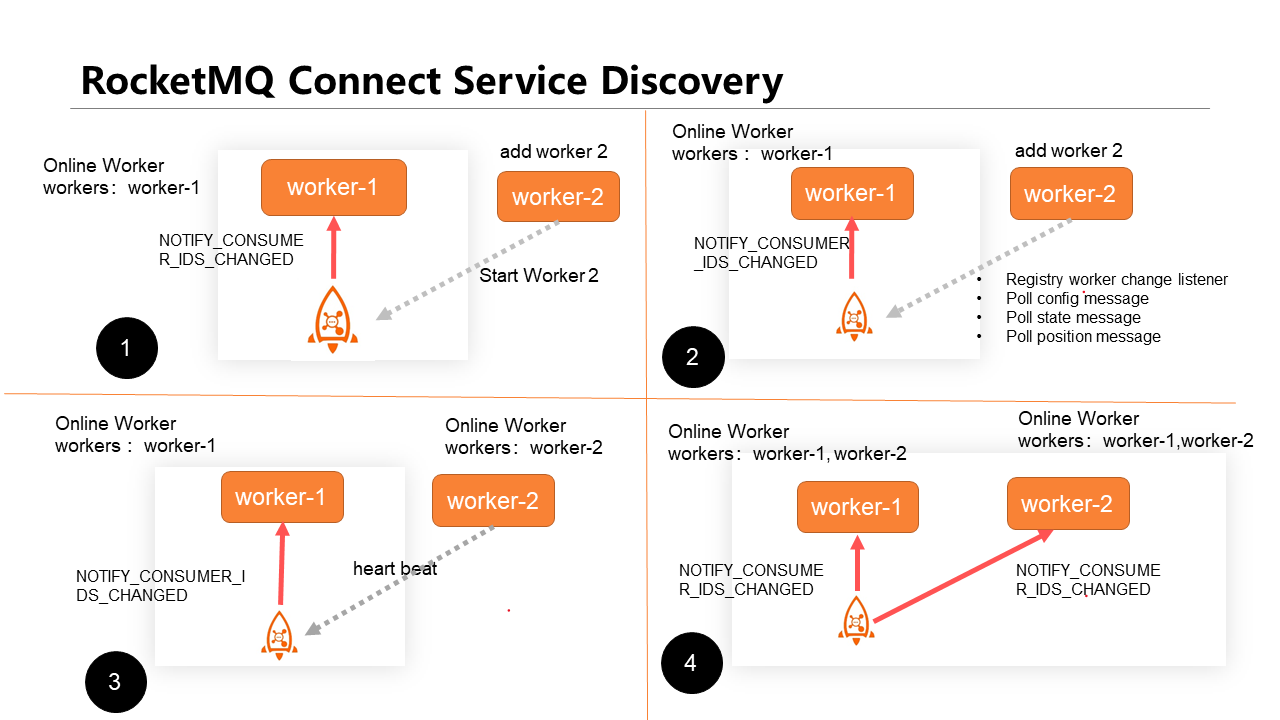

Service Discovery Process: When the cluster membership changes, every Worker detects the change, which provides automatic service discovery.

① When a new Worker starts, it registers a client with the RocketMQ Topics it depends on and listens for membership changes. Within the same Consumer Group, when a new client joins, every client that has registered for the event receives a change notification. On receiving it, each Worker updates its list of Workers in the current cluster.

② The same happens when a Worker goes down or the cluster is scaled in.
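The discovery flow above can be modeled as a shared membership list that notifies every registered Worker whenever a member joins or leaves. This is a simplified in-process Python model: `ConsumerGroup`, `join`, `leave`, and `on_change` are hypothetical names, and in RocketMQ Connect the notification comes from the RocketMQ client's consumer-group mechanism rather than from code like this.

```python
from typing import Callable, List, Set


class ConsumerGroup:
    """Hypothetical stand-in for a RocketMQ Consumer Group shared by Workers."""

    def __init__(self) -> None:
        self.members: Set[str] = set()
        self.listeners: List[Callable[[Set[str]], None]] = []

    def join(self, worker_id: str, on_change: Callable[[Set[str]], None]) -> None:
        self.members.add(worker_id)
        self.listeners.append(on_change)
        self._notify()

    def leave(self, worker_id: str, on_change: Callable[[Set[str]], None]) -> None:
        self.members.discard(worker_id)
        self.listeners.remove(on_change)
        self._notify()

    def _notify(self) -> None:
        # Every registered client receives a snapshot of the new membership.
        for listener in self.listeners:
            listener(set(self.members))


class Worker:
    def __init__(self, worker_id: str) -> None:
        self.worker_id = worker_id
        self.known_workers: Set[str] = set()

    def on_change(self, members: Set[str]) -> None:
        # Update the local view of the cluster (a real Worker would also rebalance).
        self.known_workers = members


group = ConsumerGroup()
a, b = Worker("w1"), Worker("w2")
group.join(a.worker_id, a.on_change)
group.join(b.worker_id, b.on_change)
print(sorted(a.known_workers))  # ['w1', 'w2']
group.leave(b.worker_id, b.on_change)  # w2 goes down or is scaled in
print(sorted(a.known_workers))  # ['w1']
```

No Worker acts as a coordinator here: each one learns about membership changes from the group itself, matching the masterless design described earlier.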

The task assignment process of RocketMQ Connect is as follows:

Create a Connector by calling the REST APIs. If the Connector does not exist, it is created; if it already exists, it is updated. After creation, a notification is sent to the Config Topic to tell Workers that the task has changed. When the Workers receive the change, tasks are reallocated to balance the load. Stopping a task has the same effect. Currently, each Worker stores all tasks and their status but runs only the Tasks assigned to it.
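A minimal Python sketch of this create-or-update flow, assuming an in-memory list stands in for the Config Topic and a simple CRC32 modulo hash decides which Worker runs which Connector. `ConfigTopic`, `put_connector`, and `WorkerState` are illustrative names, not the REST API or classes of RocketMQ Connect.

```python
import zlib
from typing import Dict, List, Tuple


class ConfigTopic:
    """In-memory stand-in for the shared Config Topic every Worker consumes."""

    def __init__(self) -> None:
        self.log: List[Tuple[str, Dict[str, str]]] = []


def put_connector(topic: ConfigTopic, name: str, config: Dict[str, str]) -> None:
    # Create-or-update: the same message covers both cases; the latest entry wins.
    topic.log.append((name, config))


class WorkerState:
    def __init__(self, worker_id: str, all_workers: List[str]) -> None:
        self.worker_id = worker_id
        self.all_workers = sorted(all_workers)
        self.connectors: Dict[str, Dict[str, str]] = {}  # full view of all connectors

    def replay(self, topic: ConfigTopic) -> None:
        # Every Worker applies every change, so all Workers hold the full state.
        for name, config in topic.log:
            self.connectors[name] = config

    def owned(self) -> List[str]:
        # ...but each Worker runs only the connectors that hash to it.
        idx = self.all_workers.index(self.worker_id)
        n = len(self.all_workers)
        return sorted(c for c in self.connectors if zlib.crc32(c.encode()) % n == idx)


topic = ConfigTopic()
put_connector(topic, "mysql-source", {"table": "orders"})
put_connector(topic, "es-sink", {"index": "orders"})
put_connector(topic, "mysql-source", {"table": "orders_v2"})  # an update
workers = [WorkerState(w, ["w1", "w2"]) for w in ("w1", "w2")]
for w in workers:
    w.replay(topic)
    print(w.worker_id, w.owned())
```

CRC32 is used instead of Python's built-in `hash()` so that every Worker process computes the same owner for the same name.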

Currently, the system provides two task allocation modes by default: simple hash and consistent hash. I recommend consistent hash, because a Rebalance under consistent hashing changes far fewer assignments than under simple hashing, so most tasks that are already assigned stay where they are and do not need to be reloaded.
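To see why consistent hashing moves fewer tasks, the Python sketch below assigns 1,000 hypothetical task IDs to Workers using simple modulo hashing and using a consistent-hash ring with virtual nodes, then counts how many tasks change owner when a fourth Worker joins. The ring implementation, the MD5-based hash, and the virtual-node count are assumptions for illustration; RocketMQ Connect's own allocator may differ in detail.

```python
import bisect
import hashlib
from typing import Dict, List


def h(key: str) -> int:
    # Stable 64-bit hash (Python's built-in hash() of str is randomized per process).
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")


def modulo_assign(tasks: List[str], workers: List[str]) -> Dict[str, str]:
    return {t: workers[h(t) % len(workers)] for t in tasks}


def ring_assign(tasks: List[str], workers: List[str], vnodes: int = 100) -> Dict[str, str]:
    # Place each Worker on the ring many times to smooth out the distribution.
    ring = sorted((h(f"{w}#{i}"), w) for w in workers for i in range(vnodes))
    points = [p for p, _ in ring]
    result = {}
    for t in tasks:
        # A task belongs to the first Worker point clockwise from its hash (wrapping).
        i = bisect.bisect(points, h(t)) % len(ring)
        result[t] = ring[i][1]
    return result


def moved(before: Dict[str, str], after: Dict[str, str]) -> int:
    return sum(1 for t in before if before[t] != after[t])


tasks = [f"task-{i}" for i in range(1000)]
old, new = ["w1", "w2", "w3"], ["w1", "w2", "w3", "w4"]
print("modulo moved:", moved(modulo_assign(tasks, old), modulo_assign(tasks, new)))
print("ring moved:  ", moved(ring_assign(tasks, old), ring_assign(tasks, new)))
```

Going from 3 to 4 Workers, modulo hashing keeps a task in place only when its hash gives the same remainder for both divisors, so roughly three quarters of the tasks move. On the ring, only the tasks that now fall on the new Worker's points move, roughly one quarter, and every moved task lands on `w4`.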

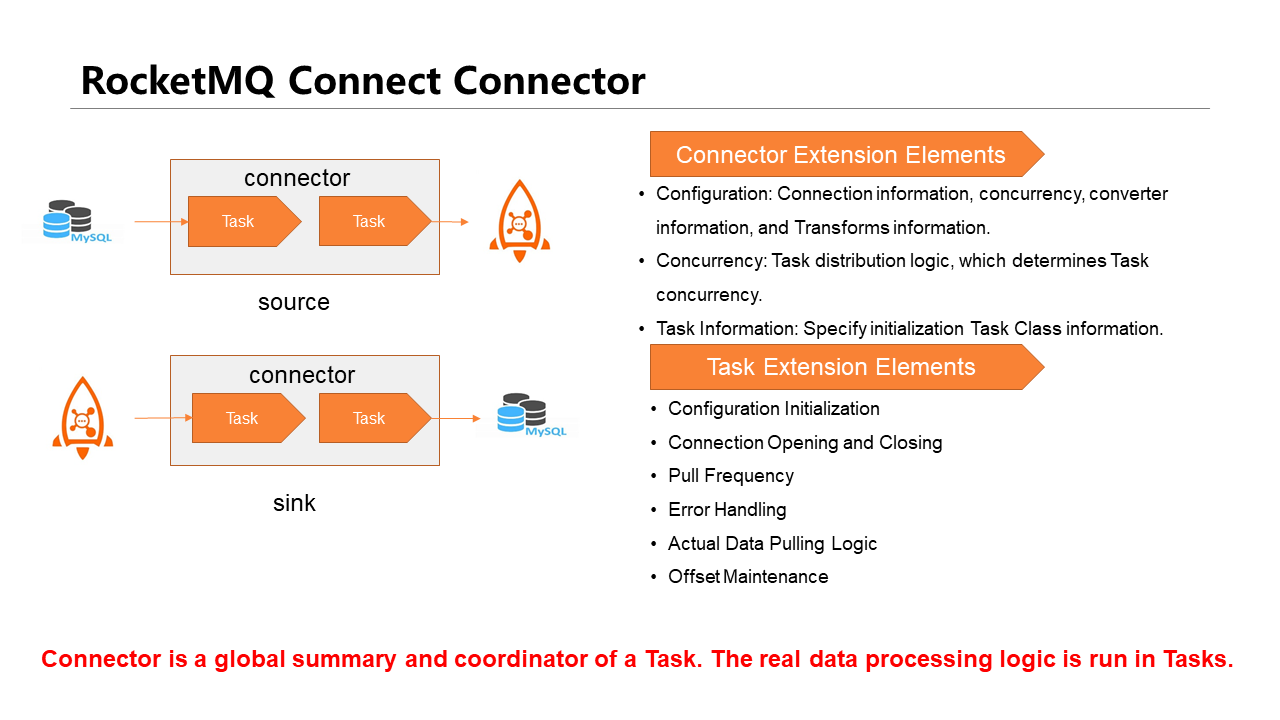

Connector extension elements include custom configuration, concurrency, and Task information.

Custom configurations include connection information (the core configuration items), Converter information, and Transform information. The Connector serves only as the global summary and coordinator of its tasks; the actual work is done by the assigned Tasks. For example, pulling 100 million records is spread across multiple Tasks. The Connector must therefore split the work into Tasks according to sensible logic, and this splitting is specified when the Connector is declared. After the Connector splits the configuration, it hands each Task its data-pulling configuration, and the Task decides how to actually pull the data.
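As an illustration of the splitting step, the Python function below divides an auto-increment ID range into at most `max_tasks` contiguous sub-ranges and produces one config per Task. The function name `task_configs`, the config keys (`task.id`, `id.from`, `id.to`), and range-based splitting are hypothetical; the real Connector API and splitting strategy depend on the plug-in.

```python
from typing import Dict, List


def task_configs(base: Dict[str, str], min_id: int, max_id: int,
                 max_tasks: int) -> List[Dict[str, str]]:
    """Split the inclusive ID range [min_id, max_id] into per-Task configs."""
    total = max_id - min_id + 1
    if total <= 0:
        return []
    n = max(1, min(max_tasks, total))  # never create empty Tasks
    size, extra = divmod(total, n)
    configs, start = [], min_id
    for i in range(n):
        end = start + size - 1 + (1 if i < extra else 0)  # spread the remainder
        cfg = dict(base)  # every Task inherits the connection info
        cfg.update({"task.id": str(i), "id.from": str(start), "id.to": str(end)})
        configs.append(cfg)
        start = end + 1
    return configs


# 100 million rows split across 4 Tasks of 25 million rows each.
for cfg in task_configs({"table": "orders"}, 1, 100_000_000, 4):
    print(cfg["task.id"], cfg["id.from"], cfg["id.to"])
```

The Connector only computes these ranges; each Task then runs its own query over its slice, which is the division of labor described above.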

Task extension elements include configuration initialization, connection opening and closing, pull frequency, error handling, actual data pull logic, and Offset maintenance.

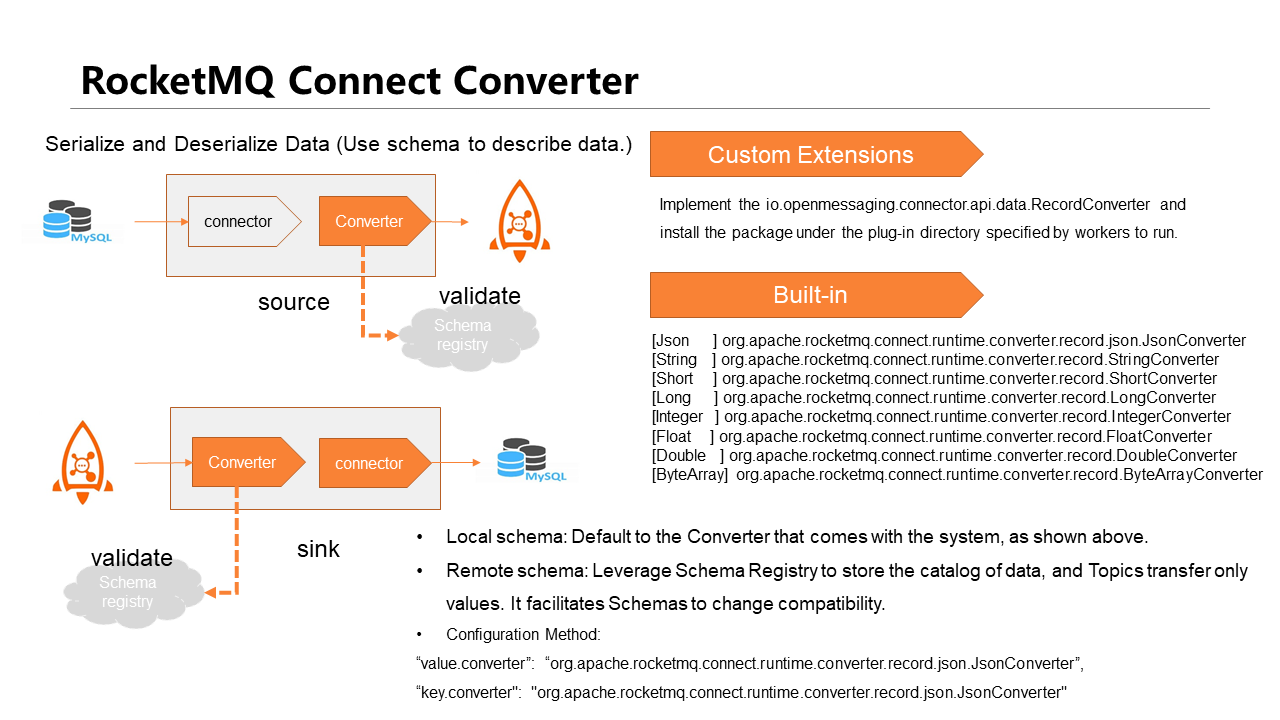

Converters throughout the system share the same set of APIs and work in two modes:

Local Schema: Data pulled by the Source Connector is converted by a Converter. During conversion, the Schema and Value are merged locally into a Connect Record and passed downstream. Downstream, the same Converter turns the data back into a Record and pushes it to the Sink Task for writing. The Converter Schema acts as the data contract in between, so data can be converted between Source and Sink. In Local Schema mode, the Schema and Value are transmitted together. The message body is bloated because every record carries its Schema, but the advantage is that there are no version compatibility problems.

Remote Schema: During conversion, Schemas are stored in the remote RocketMQ Schema Registry. Only Values are transferred, without Schema constraint information. When a Sink subscribes to a Topic, it looks up the Schema using the ID carried in the record headers, verifies the Schema, and converts the data.

Schemas are maintained in RocketMQ Schema Registry, so you can update a Schema manually in the registry and then convert using the specified Schema ID, provided the data remains compatible with the Converter.
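The trade-off between the two modes can be sketched by comparing message payloads in Python. In the Local Schema variant every message embeds the Schema; in the Remote Schema variant the Schema is registered once and each message carries only the Value plus a Schema ID in its headers. The dictionary `registry`, the header name `schema.id`, and the JSON encoding are assumptions for illustration, not the wire format of RocketMQ Schema Registry.

```python
import json
from typing import Dict, Tuple

user_schema = {"type": "struct", "fields": [{"name": "id", "type": "int64"},
                                            {"name": "name", "type": "string"}]}
user_value = {"id": 1, "name": "alice"}


def encode_local(schema: dict, value: dict) -> bytes:
    # Local Schema: Schema and Value travel together in every message.
    return json.dumps({"schema": schema, "payload": value}).encode()


registry: Dict[int, dict] = {}  # hypothetical stand-in for a remote schema registry


def encode_remote(schema: dict, value: dict) -> Tuple[Dict[str, str], bytes]:
    # Remote Schema: register once, then send only the Value plus a Schema ID header.
    schema_id = next((i for i, s in registry.items() if s == schema), None)
    if schema_id is None:
        schema_id = len(registry) + 1
        registry[schema_id] = schema
    return {"schema.id": str(schema_id)}, json.dumps(value).encode()


def decode_remote(headers: Dict[str, str], body: bytes) -> Tuple[dict, dict]:
    # Sink side: look up the Schema by ID, verify the Value against it, then decode.
    schema = registry[int(headers["schema.id"])]
    value = json.loads(body)
    if set(value) != {f["name"] for f in schema["fields"]}:
        raise ValueError(f"value does not match schema {headers['schema.id']}")
    return schema, value


local_msg = encode_local(user_schema, user_value)
headers, remote_body = encode_remote(user_schema, user_value)
print(len(local_msg), "bytes vs", len(remote_body), "bytes")
```

The Remote variant saves the Schema bytes on every message at the cost of a registry lookup on the Sink side, and its correctness now depends on the registry holding a compatible Schema version.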

Connect ships with built-in Converters (such as native JSON and common data Converters). If they do not meet your requirements, you can write your own with the Record Converter APIs. Place the packaged Converter in the Worker's plug-in directory, and the system loads it automatically.

Converters are configured separately for the Key and the Value. The Key identifies the record uniquely and can be Struct data; the Value is the actual data being transferred.

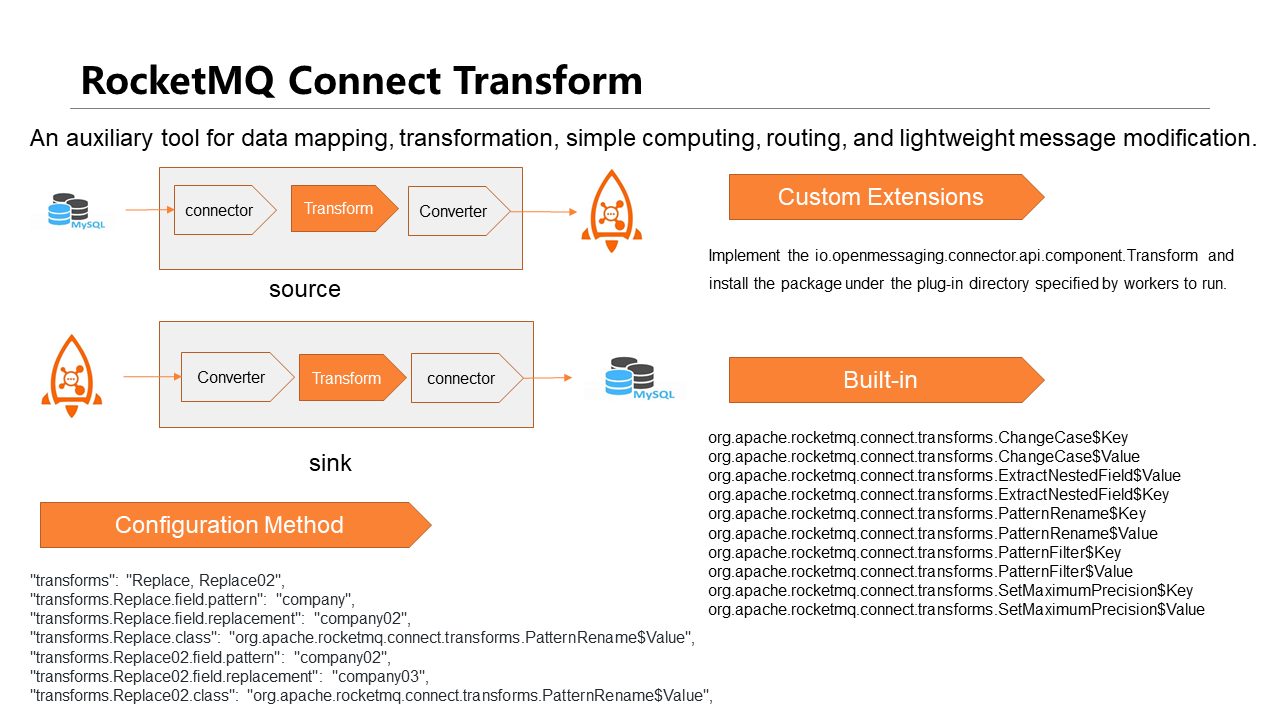

A Transform is an auxiliary tool for data mapping, conversion, and simple computation between Connectors and Converters. If the Source and Sink Connectors alone cannot meet business requirements, you can write Transforms to adapt the data, for example to convert data between different businesses and different data source plug-ins (field mapping, field derivation, type conversion, field completion, and complex function computation).

The system's built-in Transforms include field extension and replacement. If they do not meet your requirements, you can write your own Transforms through the APIs. To deploy one, package it and place it in the corresponding plug-in directory, and it is loaded automatically.

The configuration method is shown in the lower left of the preceding figure. Transforms run serially, so you can apply several transformations to a value by configuring multiple Transforms. To configure multiple Transforms, separate their names with commas. The names must be unique.
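The configuration rules above (comma-separated names, unique names, serial execution) can be sketched in Python as follows. The `transforms` key, the per-name `transforms.<name>.type` properties, and the two sample Transforms are hypothetical illustrations; check the actual property names in the RocketMQ Connect documentation before relying on them.

```python
from typing import Callable, Dict, List

Record = Dict[str, object]
Transform = Callable[[Record], Record]


def rename_field(old: str, new: str) -> Transform:
    # Field mapping: rename one field.
    def apply(rec: Record) -> Record:
        out = dict(rec)
        out[new] = out.pop(old)
        return out
    return apply


def add_field(name: str, value: object) -> Transform:
    # Field completion: add a constant field.
    return lambda rec: {**rec, name: value}


def build_chain(config: Dict[str, str]) -> List[Transform]:
    names = [n.strip() for n in config.get("transforms", "").split(",") if n.strip()]
    if len(names) != len(set(names)):
        raise ValueError(f"duplicate transform names: {names}")
    chain: List[Transform] = []
    for name in names:  # list order is execution order
        kind = config[f"transforms.{name}.type"]
        if kind == "rename":
            chain.append(rename_field(config[f"transforms.{name}.from"],
                                      config[f"transforms.{name}.to"]))
        elif kind == "add":
            chain.append(add_field(config[f"transforms.{name}.field"],
                                   config[f"transforms.{name}.value"]))
        else:
            raise ValueError(f"unknown transform type: {kind}")
    return chain


def apply_chain(chain: List[Transform], rec: Record) -> Record:
    for t in chain:  # serial execution
        rec = t(rec)
    return rec


config = {
    "transforms": "renameId,addRegion",
    "transforms.renameId.type": "rename",
    "transforms.renameId.from": "id",
    "transforms.renameId.to": "order_id",
    "transforms.addRegion.type": "add",
    "transforms.addRegion.field": "region",
    "transforms.addRegion.value": "cn-hangzhou",
}
print(apply_chain(build_chain(config), {"id": 7, "amount": 30}))
```

Names must be unique because each one is the prefix for that Transform's own properties; a duplicate would make the lookup ambiguous, so `build_chain` rejects it up front.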

When a Source Task pulls data or listens for changes (for example, pulling incremental data from MySQL over JDBC), you need to specify how Offsets drive the incremental pull, such as by auto-increment ID or modification time. Each time data is pulled and sent, the incremental marker (ID or modification time) is submitted to the Offset writer, and the system persists it asynchronously. When a task starts, its Offsets are loaded automatically and processing resumes from the last Offset, which provides resumable transfer.

There is no fixed format for Offsets: you can build Offset keys and values however you like. The system relies only on the Offset Topic in RocketMQ, which is mainly used to push Offset updates to other Workers so they can update their local copies. If you use the system's Offset maintenance, you only need to decide what to report; Offset commit and Offset rollback are handled by the system.
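A minimal Python sketch of auto-increment-ID Offsets and resumption after a restart, assuming an in-memory table and a dictionary as the Offset store. The key layout (`{"table": ...}`) and value layout (`{"last_id": ...}`) are one arbitrary choice, since the text notes there is no fixed format. `OffsetStore`, `IncrementalSource`, `poll`, and `commit` are hypothetical names, and the commit here is synchronous, whereas the system persists Offsets asynchronously.

```python
import json
from typing import Dict, List, Optional

TABLE = [{"id": i, "v": f"row{i}"} for i in range(1, 8)]  # stand-in for a MySQL table


class OffsetStore:
    """Hypothetical persisted Offset storage (the real system uses RocketMQ Topics)."""

    def __init__(self) -> None:
        self.data: Dict[str, str] = {}

    def save(self, key: dict, position: dict) -> None:
        # Keys and values are free-form; canonical JSON keeps them comparable.
        self.data[json.dumps(key, sort_keys=True)] = json.dumps(position)

    def load(self, key: dict) -> Optional[dict]:
        raw = self.data.get(json.dumps(key, sort_keys=True))
        return json.loads(raw) if raw else None


class IncrementalSource:
    def __init__(self, table: str, store: OffsetStore, batch: int = 3) -> None:
        self.key = {"table": table}
        self.store, self.batch = store, batch
        # On start, resume from the last committed Offset (0 means from the beginning).
        saved = store.load(self.key)
        self.last_id = saved["last_id"] if saved else 0

    def poll(self) -> List[dict]:
        # Equivalent of: SELECT * FROM t WHERE id > ? ORDER BY id LIMIT ?
        return [r for r in TABLE if r["id"] > self.last_id][: self.batch]

    def commit(self, rows: List[dict]) -> None:
        # Called after the batch has been sent to RocketMQ.
        if rows:
            self.last_id = rows[-1]["id"]
            self.store.save(self.key, {"last_id": self.last_id})


store = OffsetStore()
task = IncrementalSource("orders", store)
first = task.poll()
task.commit(first)  # the batch has been sent
restarted = IncrementalSource("orders", store)  # simulate a task restart
second = restarted.poll()
print([r["id"] for r in first], [r["id"] for r in second])  # [1, 2, 3] [4, 5, 6]
```

Committing only after a batch is sent means a crash between `poll` and `commit` re-sends that batch on restart rather than skipping it (at-least-once delivery).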

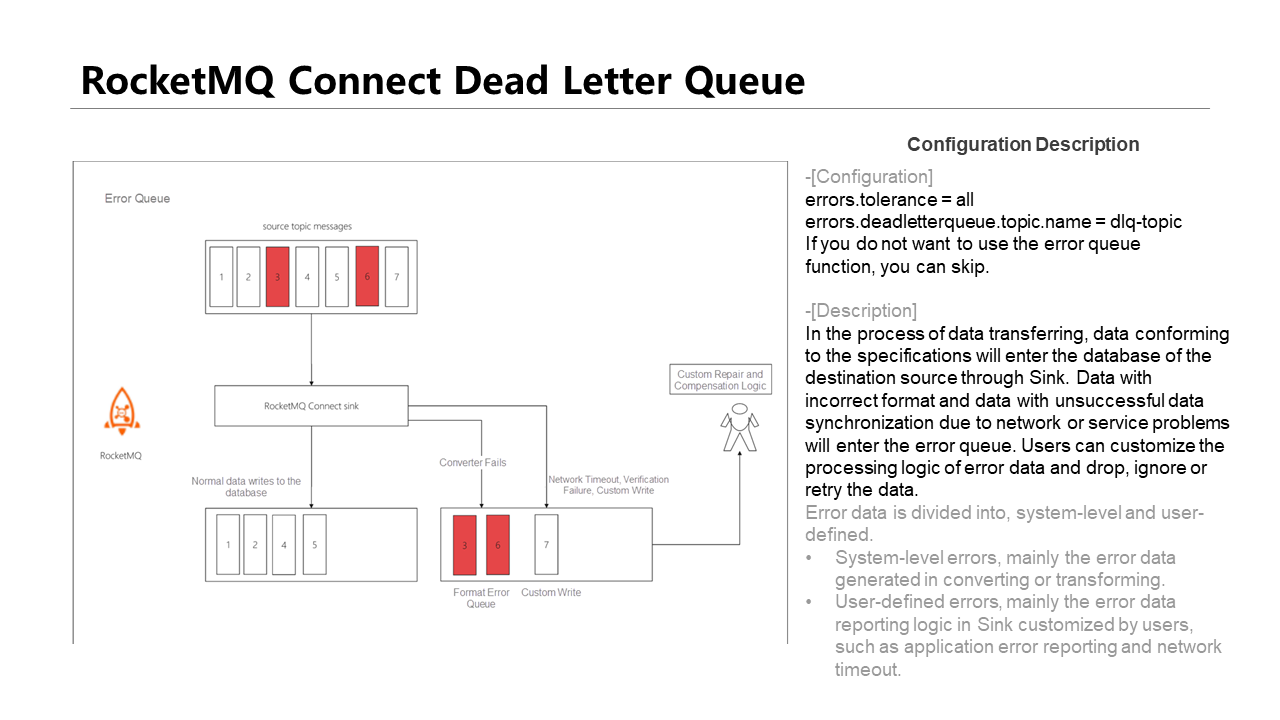

During operation, if the dead letter queue is enabled, valid data is delivered to the Sink and invalid data is delivered to the error queue. The business side can process the error data asynchronously, but ordering cannot then be guaranteed. To preserve order, you must stop the Task when an error occurs, fix the data, and then restart the Task.

If a single Task reports errors, you only need to stop that Task. The other Tasks are unaffected because each Task consumes different Queues. If Keys are specified, data is partitioned by key, and each Queue is ordered internally. Therefore, stopping a single Task does not break ordering in the other Queues.
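The routing rules above can be sketched in Python: each record goes to a queue chosen by hashing its key, so one key always stays in one queue; Tasks own disjoint sets of queues; and a record that fails to write goes to a dead-letter list instead of blocking the Task. `queue_for`, `SinkTask`, the CRC32-based routing, and the "integer means success" rule are assumptions for illustration, not RocketMQ's queue selector or DLQ API.

```python
import zlib
from typing import List, Tuple

QUEUES = 4


def queue_for(key: str) -> int:
    # Same key -> same queue, so records for one key stay ordered within that queue.
    return zlib.crc32(key.encode()) % QUEUES


class SinkTask:
    def __init__(self, queues: List[int]) -> None:
        self.queues = set(queues)  # Tasks consume disjoint sets of queues
        self.written: List[Tuple[str, object]] = []
        self.dead_letters: List[Tuple[str, object]] = []

    def owns(self, key: str) -> bool:
        return queue_for(key) in self.queues

    def process(self, key: str, value: object) -> None:
        if isinstance(value, int):  # stand-in for "the downstream write succeeded"
            self.written.append((key, value))
        else:
            # DLQ enabled: park the bad record and continue with the next one,
            # so later records for this key are written before the fixed one.
            self.dead_letters.append((key, value))


tasks = [SinkTask([0, 1]), SinkTask([2, 3])]
records = [("a", 1), ("b", 2), ("a", "bad"), ("a", 3), ("c", 4)]
for key, value in records:
    for task in tasks:
        if task.owns(key):
            task.process(key, value)
```

Because exactly one Task owns each queue, stopping that Task only pauses its own queues; the other Task keeps draining the rest, which is why an error in one Task need not halt the others.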

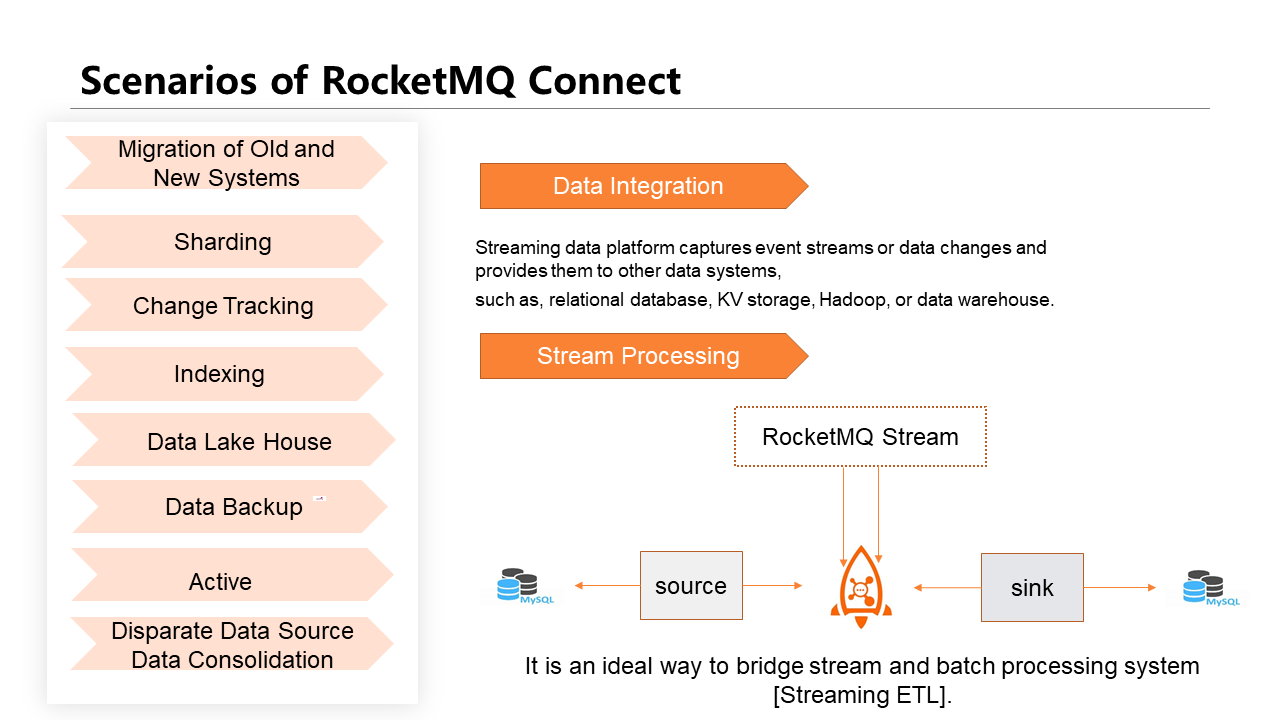

RocketMQ Connect applies to most scenarios where traditional ETL is used. It also supports real-time streaming, stream-batch integration, and snapshot functions that traditional ETL cannot provide.

Migration Scenario: When a business system is upgraded, changes such as altering a data type, splitting or scaling out a table, or adding an index can require long downtime. RocketMQ Connect can be used to re-migrate the data instead.

Sharding Scenario: There are many sharding plug-ins on the market, and Connect can be adapted to open-source sharding clients. Alternatively, you can implement the sharding logic in RocketMQ Connect itself while leaving the Source and Sink unchanged: after data is read from a single table, a Transform performs the routing and sends the data to different Topics. Downstream Sinks listen to those Topics and write the data into the corresponding sharded databases and tables.
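A routing Transform for sharding might look like the Python sketch below: it derives a target Topic from the record's shard key and leaves the value unchanged, so each downstream Sink can listen to one Topic and write to one physical table. The Topic naming pattern `orders_shard_{n}`, the `user_id` shard key, and modulo sharding are hypothetical choices.

```python
from typing import Dict, List, Tuple

SHARDS = 4


def route(record: Dict[str, object], base_topic: str = "orders") -> Tuple[str, Dict[str, object]]:
    # Pick the shard from the shard key; the same user always lands on the same Topic.
    shard = int(record["user_id"]) % SHARDS
    return f"{base_topic}_shard_{shard}", record


topics: Dict[str, List[Dict[str, object]]] = {}
for rec in [{"user_id": 10, "amount": 5}, {"user_id": 7, "amount": 9},
            {"user_id": 14, "amount": 1}]:
    topic, value = route(rec)
    topics.setdefault(topic, []).append(value)
print(sorted(topics))  # ['orders_shard_2', 'orders_shard_3']
```

Keeping the routing rule in a Transform means the sharding scheme can change without modifying either the Source or the Sink plug-ins.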

Multi-Active Scenario: RocketMQ Connect can replicate Topics and metadata across clusters, keeping Offsets consistent across multiple clusters.

Change Tracking Scenario: CDC mode can listen for data changes and send notifications downstream, so downstream systems can track changes and update data in real time. You can also push data directly to downstream business systems over HTTP, similar to a Webhook, but you then need to authenticate requests and apply rate limiting.

RocketMQ Connect can also serve as a data processing solution in other scenarios, such as loading data into a lakehouse, cold data backup, and integrating heterogeneous data sources.

Overall, the use cases fall roughly into two groups: data integration and stream processing. Data integration moves data from one system to another and synchronizes data among heterogeneous sources. Stream processing pulls data in batches, or synchronizes incremental data in CDC mode, into a stream processing system, which performs aggregation and window computations and writes the results to a storage engine through a Sink.

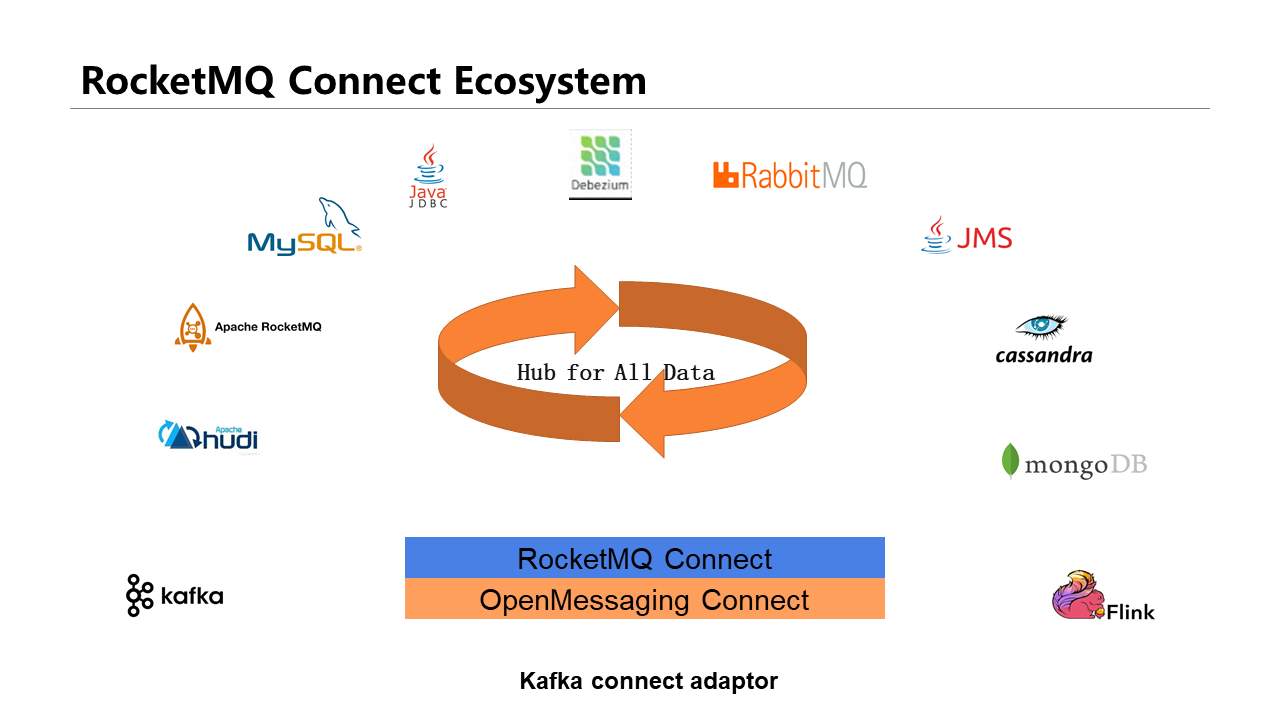

Currently, RocketMQ Connect supports all the products shown in the figure above. The platform also supports a Kafka Connect adapter.
