By Wu Yaodi (Wutong)

Amid the current wave of artificial intelligence, competition over large model performance keeps heating up. DeepSeek-R1 has swept the globe with its powerful reasoning capabilities, while the open-source QwQ from Tongyi has injected new vitality into the industry. However, a key issue has surfaced: neither DeepSeek-R1 (when self-deployed) nor Alibaba Cloud's latest QwQ (currently accessed through API calls) supports "networked search." As a result, the knowledge of these models is strictly confined to their training data or to closed knowledge bases, and they cannot access the vast, constantly changing information on the internet in real time.

Why is this a major limitation? Imagine a user asking, "How will the 2025 new energy subsidy policy affect consumers' car purchasing choices?" A traditional model can only respond from the fixed knowledge it acquired during training, whereas a large model equipped with networked search can also pull in the latest policies, industry reports, market data, and even user comments in real time, producing insights that are both timely and deep. This ability to hold a "real-time dialogue with the world" is a key step in the evolution of large models from "knowledge base Q&A" tools into "intelligent decision assistants."

But the reality is that most mainstream models (including the two heavyweights mentioned above) do not yet offer this capability in their open-source versions. This is not only a technical challenge but also a redefinition of the application scenarios for large models: should we allow large models to break out of their "information islands" and truly become the intelligent hub that connects users with the dynamic world?

This article will start from this core question, analyzing the disruptive value of networked search for large model applications and examining how it addresses the limitations of traditional models.

● Core Viewpoint:

Large model applications currently fall into two camps: those with networked search capabilities and those without. The latter have obvious shortcomings in output quality, timeliness, and user trust. Statistical data shows that integrating networked search can improve a model's output accuracy by over 50% and increase user satisfaction by over 30%.

● Trend Drivers:

Developers deeply engaged in enterprise AI scenarios have gradually reached a consensus: "An AI without a network is like a tree without roots." Alibaba Cloud's Cloud Native API Gateway (AI Gateway) is redefining the standards for intelligent services through deep integration of networked search capabilities.

1. Real-Time Information Direct Connection, Ending the "Knowledge Cutoff Date" Dilemma

● Dynamic Data Acquisition: Break free of the time limit imposed by the training data cutoff, and capture information from credible sources such as web pages, databases, and APIs in real time.

● Scenario Example: Real-time retrieval of financial news in the finance industry, dynamic querying of the latest clinical guidelines in the medical field.

● Technical Implementation: The Cloud Native API Gateway provides multi-engine networked search capabilities, completing cross-source integration within 1 second.
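To make the idea of multi-engine search under a one-second budget concrete, here is a minimal sketch. It is not the gateway's actual implementation: the three engine functions, their URLs, and the `budget_s` parameter are hypothetical stand-ins. It only illustrates one plausible approach, which is to query several engines concurrently, drop any that miss the deadline, and merge the rest with de-duplication by URL.

```python
import asyncio

# Hypothetical stand-ins for real search engines; each returns a list of hits.
async def search_engine_a(query: str) -> list[dict]:
    await asyncio.sleep(0.05)
    return [{"url": "https://example.com/a", "title": f"A: {query}"}]

async def search_engine_b(query: str) -> list[dict]:
    await asyncio.sleep(0.10)
    return [
        {"url": "https://example.com/a", "title": f"A again: {query}"},  # duplicate URL
        {"url": "https://example.com/b", "title": f"B: {query}"},
    ]

async def slow_engine(query: str) -> list[dict]:
    await asyncio.sleep(5)  # deliberately slower than the budget
    return [{"url": "https://example.com/slow", "title": "too late"}]

async def multi_engine_search(query: str, engines, budget_s: float = 1.0) -> list[dict]:
    """Query all engines concurrently and merge whatever finishes within budget_s."""
    tasks = [asyncio.create_task(engine(query)) for engine in engines]
    done, pending = await asyncio.wait(tasks, timeout=budget_s)
    for task in pending:
        task.cancel()  # give up on engines that missed the deadline

    merged, seen_urls = [], set()
    for task in tasks:  # iterate in engine order so the merge is deterministic
        if task in done and task.exception() is None:
            for hit in task.result():
                if hit["url"] not in seen_urls:
                    seen_urls.add(hit["url"])
                    merged.append(hit)
    return merged

if __name__ == "__main__":
    engines = [search_engine_a, search_engine_b, slow_engine]
    for hit in asyncio.run(multi_engine_search("chip export data", engines)):
        print(hit["url"], "-", hit["title"])
```

The design choice worth noting is that a slow engine degrades coverage rather than latency: the caller always gets an answer within the budget, built from the engines that responded in time.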

2. A Terminator for Complex Problems: From "Answering Questions" to "Solving Problems"

● Multi-Round Dialogue Enhancement: Fill in information missing from a conversation through search (e.g., order number, logistics status).

● Big Data Correlation Reasoning: Analyzing implicit relationships in search results to output structured solutions.

● Scenario Example: A customer service system automatically correlates the user's orders and logistics information from the last 3 months to resolve complaints.

3. Intelligent Cost Optimization: Combining Semantic Caching and Dynamic Routing

● Duplicate Request Interception: By configuring caching services, common questions can be answered directly from the cache, lowering API call costs by 25%.

● Multi-Model Intelligent Scheduling: Automatically select a basic model, a specialized large model, or search-enhanced mode based on query complexity.
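The scheduling idea can be illustrated with a small rule-based router. This is only a sketch under stated assumptions: the route names, the keyword lists, and the 30-word threshold are all hypothetical, and a production gateway would more likely rely on a classifier or on the model itself rather than on keyword matching.

```python
import re

# Hypothetical keyword hints; a real system would use a trained classifier.
FRESHNESS_HINTS = {"latest", "today", "current", "breaking", "recent"}
REASONING_HINTS = {"analyze", "compare", "why", "impact", "affect"}

def choose_route(query: str) -> str:
    """Pick a (hypothetical) backend route from simple signals in the query."""
    tokens = re.findall(r"[a-z0-9]+", query.lower())
    words = set(tokens)
    if words & FRESHNESS_HINTS:
        return "search-enhanced"      # needs up-to-date information
    if words & REASONING_HINTS or len(tokens) > 30:
        return "professional-model"   # long or analytical question
    return "basic-model"              # short factual question

if __name__ == "__main__":
    for q in (
        "What is an API gateway?",
        "Analyze the trade-offs between REST and gRPC",
        "What is the latest new energy subsidy policy?",
    ):
        print(f"{choose_route(q):20s} <- {q}")
```

Checking freshness before complexity reflects the cost argument of this section: a query that needs live data gains little from a larger model alone, so it is sent to the search-enhanced path first.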

● No reliance on local caching; directly connect to obtain the latest data (e.g., breaking news, industry news).

● Case Comparison: A traditional engine searching for "Korean chip export data for Q2 2024" may rely on outdated statistics, whereas AI networked search can capture the latest announcements from the Korean Ministry of Industry in real time.

● Handle multi-condition combinations and implicit logic queries, such as: "List provinces offering support policies for new energy battery research and development and analyze the effective time and subsidy amounts of these policies."

● Technical Support: The semantic understanding capabilities of large models combined with rule engines allow for precise parsing.

● Customize information priorities and presentation methods based on user roles (analysts, customer service, executives).

● Case: Provide structured data (e.g., hot issues + solutions) to customer service robots to enhance response speed and accuracy.

● Problem: The quality of internet data varies, and real-time capture faces performance bottlenecks.

● Solutions:

○ Intelligent Filtering and Validation: Use semantic analysis and credibility scoring (e.g., source authority) to filter valid information.

○ Incremental Update Mechanism: Focus on monitoring updates in key areas (e.g., finance, healthcare) to reduce the costs of comprehensive network scans.
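As a rough illustration of filtering by credibility score, the sketch below combines a per-source authority value with a relevance signal. Everything in it is an assumption made for the example: the host names, the authority table, the 0.6/0.4 weights, and the threshold are hypothetical, and plain word overlap stands in for real semantic analysis.

```python
from urllib.parse import urlparse

# Hypothetical authority scores per host; unknown hosts get a low default.
SOURCE_AUTHORITY = {"gov.example": 0.9, "news.example": 0.7}
DEFAULT_AUTHORITY = 0.3

def credibility(hit: dict, query_terms: set[str]) -> float:
    """Blend source authority with a crude relevance signal (word overlap)."""
    host = urlparse(hit["url"]).hostname or ""
    authority = SOURCE_AUTHORITY.get(host, DEFAULT_AUTHORITY)
    snippet_words = set(hit["snippet"].lower().split())
    overlap = len(snippet_words & query_terms) / len(query_terms) if query_terms else 0.0
    return 0.6 * authority + 0.4 * overlap

def filter_hits(hits: list[dict], query: str, threshold: float = 0.5) -> list[dict]:
    """Keep hits scoring at or above the threshold, best first."""
    terms = set(query.lower().split())
    scored = [(credibility(hit, terms), hit) for hit in hits]
    kept = [pair for pair in scored if pair[0] >= threshold]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [hit for _, hit in kept]

if __name__ == "__main__":
    hits = [
        {"url": "https://blog.example/post", "snippet": "unrelated cooking tips"},
        {"url": "https://news.example/story", "snippet": "battery news"},
        {"url": "https://gov.example/notice", "snippet": "battery subsidy policy 2025"},
    ]
    for hit in filter_hits(hits, "battery subsidy policy"):
        print(hit["url"])
```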

● Problem: The external data retrieved may involve sensitive political or violent information.

● Solutions:

○ Green Net Interception Mechanism: By configuring green net security services, user inputs and search results can be uniformly filtered for content safety.

○ Consumer Authorization System: Only authorized consumers are granted API access, allowing fine-grained control of access permissions.

● Problem: Real-time networked search may trigger high costs for large data downloads and large model inference.

● Solutions:

○ Summary Input: By default, use summarized information from search results to fill prompts, avoiding quick exhaustion of the context window.

○ Cache Optimization: Cache high-frequency query results to reduce repetitive inference and network requests.
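The two cost controls above can be sketched together in a few lines. This is a simplified illustration, not the gateway's implementation: `TTLCache`, `build_prompt`, `answer_context`, and the `summary` field are hypothetical names, the character budget stands in for a token budget, and the cache matches queries only after whitespace and case normalization, which is much weaker than true semantic caching.

```python
import time

class TTLCache:
    """Cache search results per normalized query for a limited time."""

    def __init__(self, ttl_s: float = 300.0, clock=time.monotonic):
        self._ttl = ttl_s
        self._clock = clock
        self._store = {}

    @staticmethod
    def _key(query: str) -> str:
        # Normalize case and whitespace so trivially different queries match.
        return " ".join(query.lower().split())

    def get(self, query: str):
        key = self._key(query)
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._store[key]  # stale entry: force a fresh search
            return None
        return value

    def put(self, query: str, value) -> None:
        self._store[self._key(query)] = (self._clock() + self._ttl, value)

def build_prompt(question: str, hits: list[dict], max_chars: int = 600) -> str:
    """Fill the prompt with result summaries only, stopping at the budget."""
    lines, used = [], 0
    for index, hit in enumerate(hits, start=1):
        line = f"[{index}] {hit['summary']}"
        if used + len(line) > max_chars:
            break
        lines.append(line)
        used += len(line)
    context = "\n".join(lines)
    return f"Search results:\n{context}\n\nQuestion: {question}"

def answer_context(question: str, search_fn, cache: TTLCache) -> str:
    """Return a prompt, searching only when the cache has no fresh entry."""
    hits = cache.get(question)
    if hits is None:
        hits = search_fn(question)
        cache.put(question, hits)
    return build_prompt(question, hits)
```

A short time-to-live matters here: caching saves cost only for repeated questions, while the whole point of networked search is freshness, so entries for fast-moving topics should expire quickly.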

Pre-configured Strategies and Plugins + Networked Search

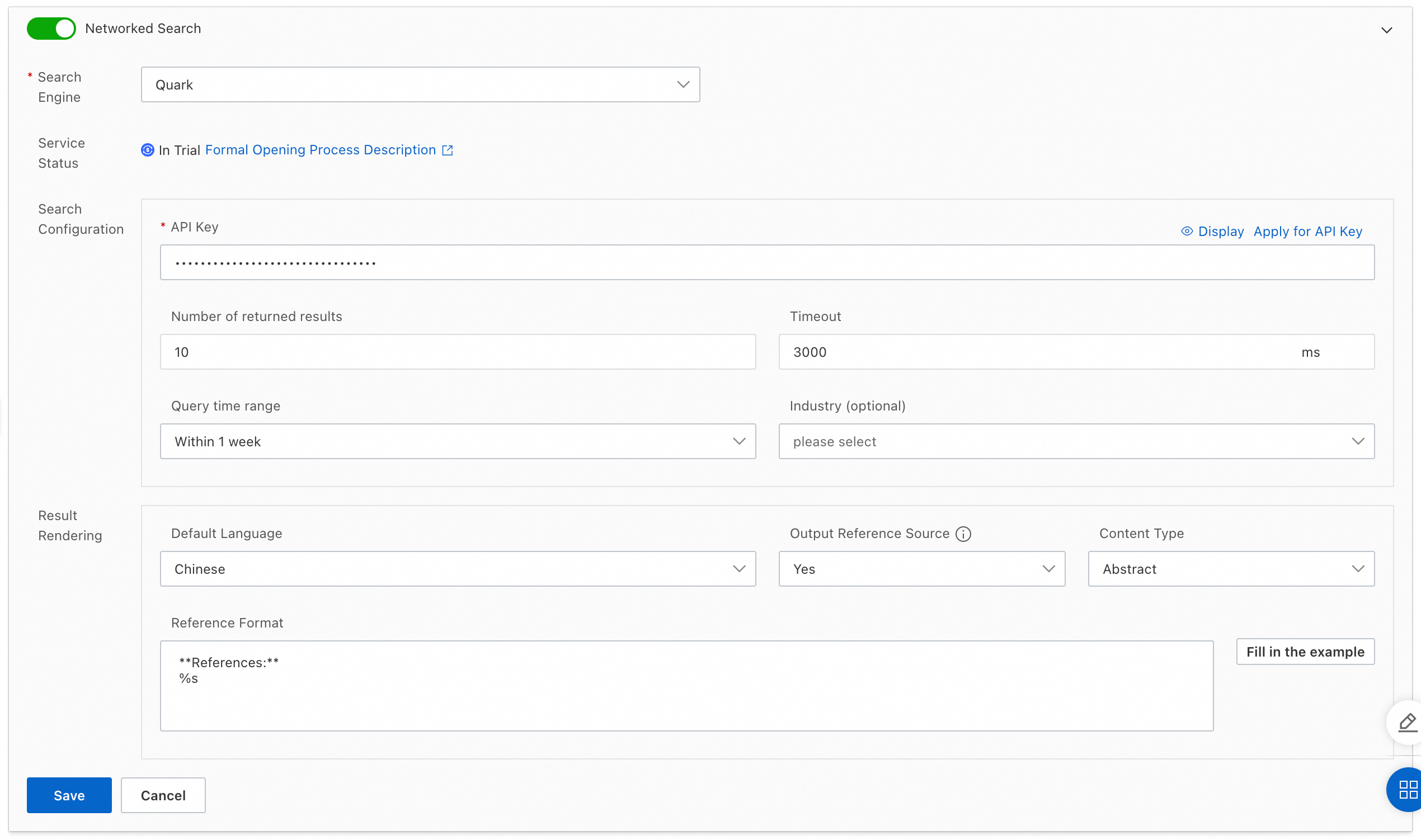

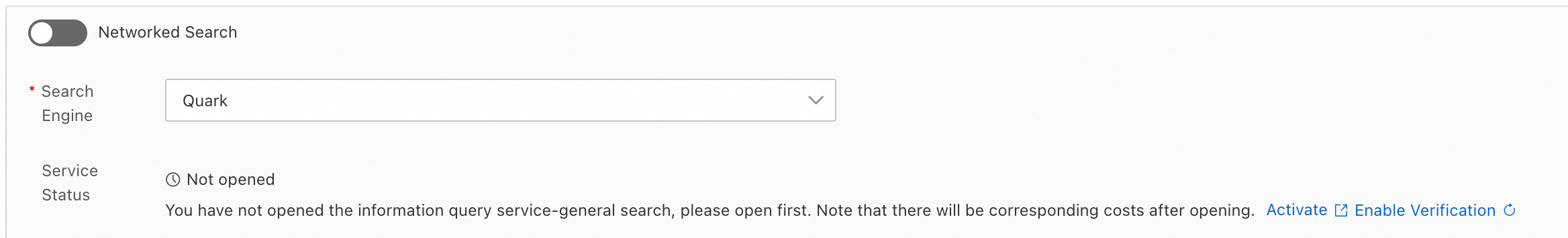

Use Quark search capabilities through Alibaba Cloud Information Query Services

When you select Quark as the search engine, the default service status is "not activated." Click to activate, and you will be redirected to the activation page for Information Query Services.

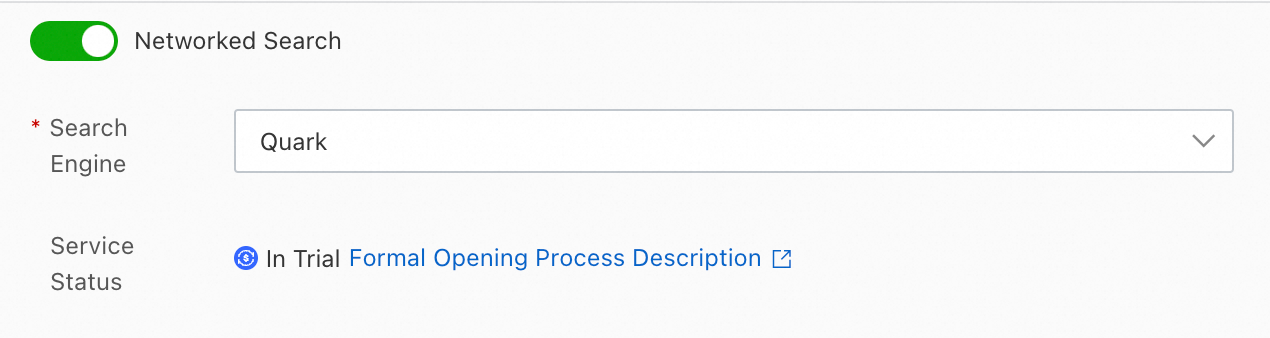

After activation, click on activation verification; the service status in the console will update to "in trial."

Alibaba Cloud Information Query Services provide a 15-day free trial, with a usage limit of 1000 times/day and a performance limit of 5 QPS.
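During the trial it can help to guard calls on the client side so that requests stay within these limits. The sketch below is one hypothetical way to do that and is not part of any Alibaba Cloud SDK: `TrialQuota` is an invented name, and it assumes the daily quota can be approximated by a rolling 24-hour window starting at first use, which may differ from how the service actually counts a day.

```python
import time

class TrialQuota:
    """Client-side guard for a per-second rate limit and a daily call quota."""

    def __init__(self, qps: int = 5, daily_limit: int = 1000, clock=time.monotonic):
        self._qps = qps
        self._daily_limit = daily_limit
        self._clock = clock
        self._recent = []              # timestamps of calls in the last second
        self._day_start = clock()      # assumption: rolling 24-hour window
        self._day_count = 0

    def try_acquire(self) -> bool:
        """Return True and record the call if it fits both limits."""
        now = self._clock()
        if now - self._day_start >= 86400:
            self._day_start, self._day_count = now, 0
        # Keep only calls made within the last second.
        self._recent = [t for t in self._recent if now - t < 1.0]
        if self._day_count >= self._daily_limit or len(self._recent) >= self._qps:
            return False
        self._recent.append(now)
        self._day_count += 1
        return True

if __name__ == "__main__":
    quota = TrialQuota()
    allowed = sum(quota.try_acquire() for _ in range(8))
    print(f"{allowed} of 8 back-to-back calls allowed")
```

A caller would check `try_acquire()` before each search request and either wait briefly or fall back to cached results when it returns `False`.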

You can apply for formal interfaces based on the steps provided in the activation instruction document.

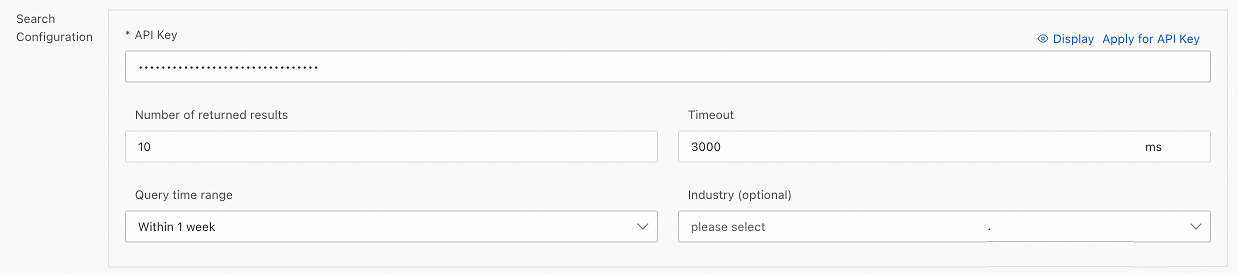

To apply for an API-KEY, follow the documentation and obtain the key from the Information Query Services Console.

Search Result Rendering is used to configure the format and richness of the rendered search results.

• Default Language: Chinese, English.

• Output Citation Source:

• Content Type:

As APIs become standardized and architectures go cloud native, Alibaba Cloud's Cloud Native API Gateway is turning networked search from a complex technical challenge into a basic capability that developers can use "out of the box." It does so through an integrated architecture that combines intelligent routing, security enhancement, and cost optimization.

We look forward to exploring with industry pioneers so that every intelligent interaction is based on trustworthy, real-time, and comprehensive information, and we welcome everyone to keep following us. If you need support, please join the networked search service support DingTalk group, group number: 88010006189.
