By Jia Yangqing, VP of Alibaba Group, President of Computing Platform BU, Alibaba Cloud Intelligence.

Today's presentation was prepared by Jia Yangqing for a special training session on Artificial Intelligence (AI) technology for Alibaba's CIO Academy. During the live broadcast, Jia Yangqing discussed the engineering and product practices involved in AI. First, he introduced AI and its applications. Then, the participants discussed important issues in AI systems, including the breakthroughs in computing power that drive algorithm innovation and the value that cloud platforms can provide. Last, Mr. Jia analyzed the relationship between big data and AI, discussed how enterprises should embrace AI, and presented the key points of an enterprise intelligence strategy.

AI has already become an important technology trend. Various industries are now embracing AI and becoming more tightly connected to it. Areas where AI plays a prominent role are listed in the figure below, which includes not only the areas that are strongly related to AI, but also those indirectly empowered by it.

Before we get too ahead of ourselves, we need to understand the idea behind AI, its applications and its systems.

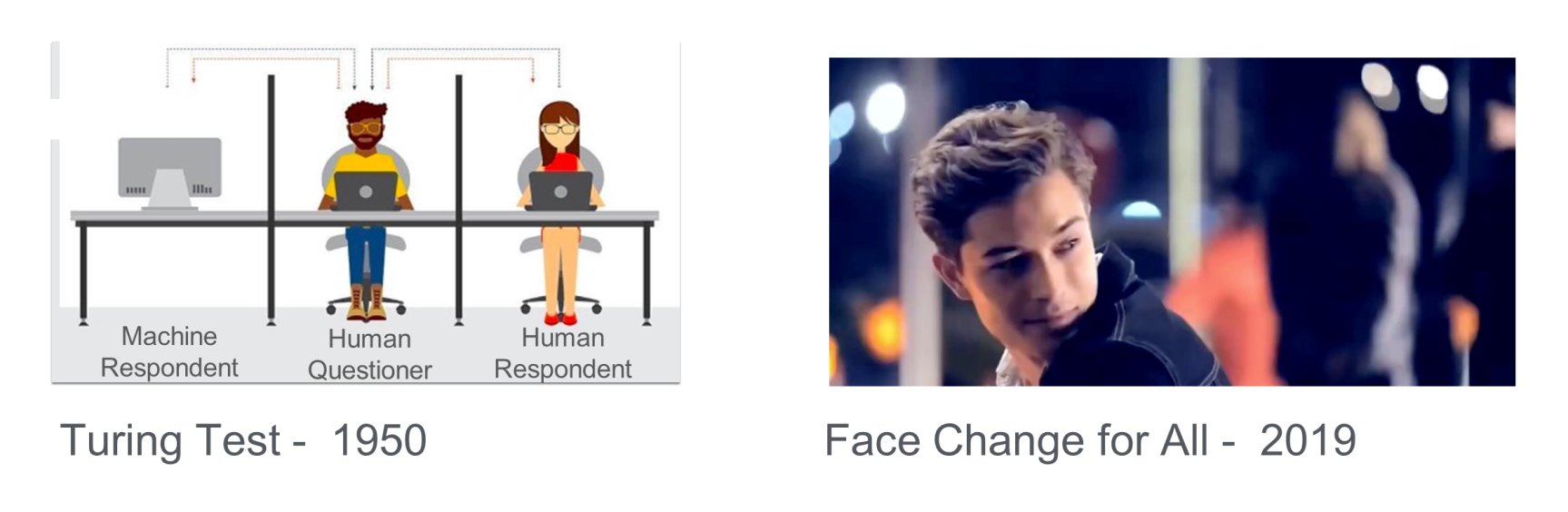

As AI has developed over the past 80 years, we have progressed from the Turing Test to Face Change for All. Machines use AI to answer questions and to create or perform computation and analysis tasks just like humans. In some fields, computers have already matched human capabilities. For example, the Face Change for All app released in 2019 is based on the extensive application of deep learning and neural networks.

Currently, many AI applications are used in our daily work and lives to replace human jobs. For example, Elon Musk's AI project was able to simulate the operations of the human brain. However, with the rapid development of AI, some examples of what I would like to call "fake AI" have also emerged.

During the development of AI, we have had to face several fake AI projects, including a serious case that defrauded investors of 200 million CNY (over 28 million USD). Therefore, we need to better understand what AI is and how it can be used.

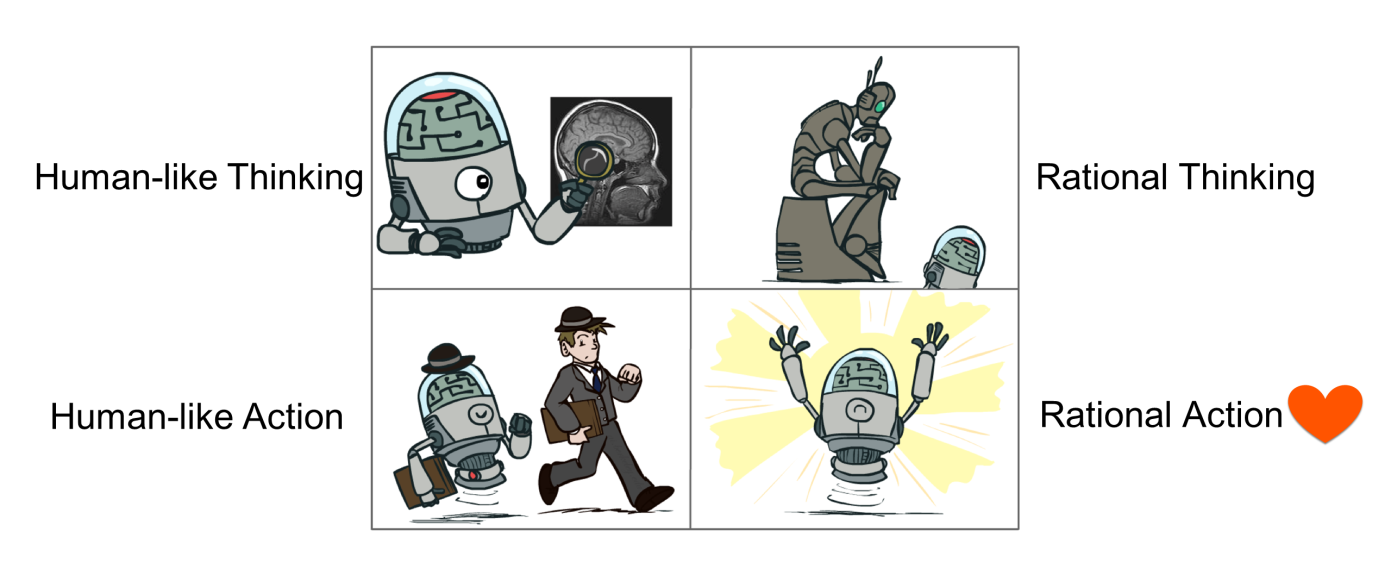

In academia, AI is defined somewhat differently. AI is the simulation of human intelligence: a system that can receive and categorize information, rationally perform a series of tasks, and make decisions based on that information.

One of its main features is the ability to take rational actions.

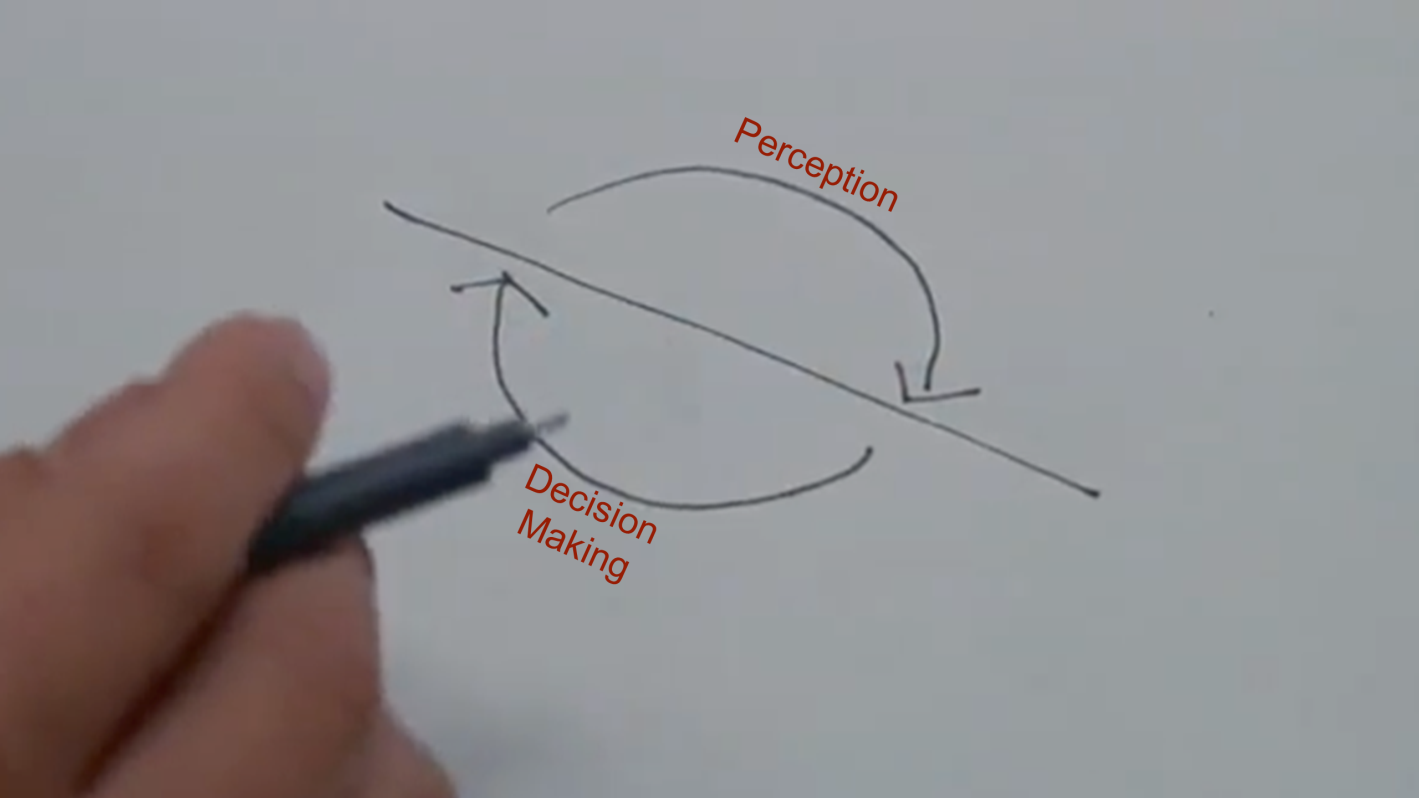

The AI process runs from perception to decision-making feedback. The key factor that determines whether or not AI systems can take the proper actions is how they perceive information about the external world. Since AI attempts to simulate the human brain, the process of perception is actually a process of understanding and learning. This is the problem that deep learning attempts to solve with AI.

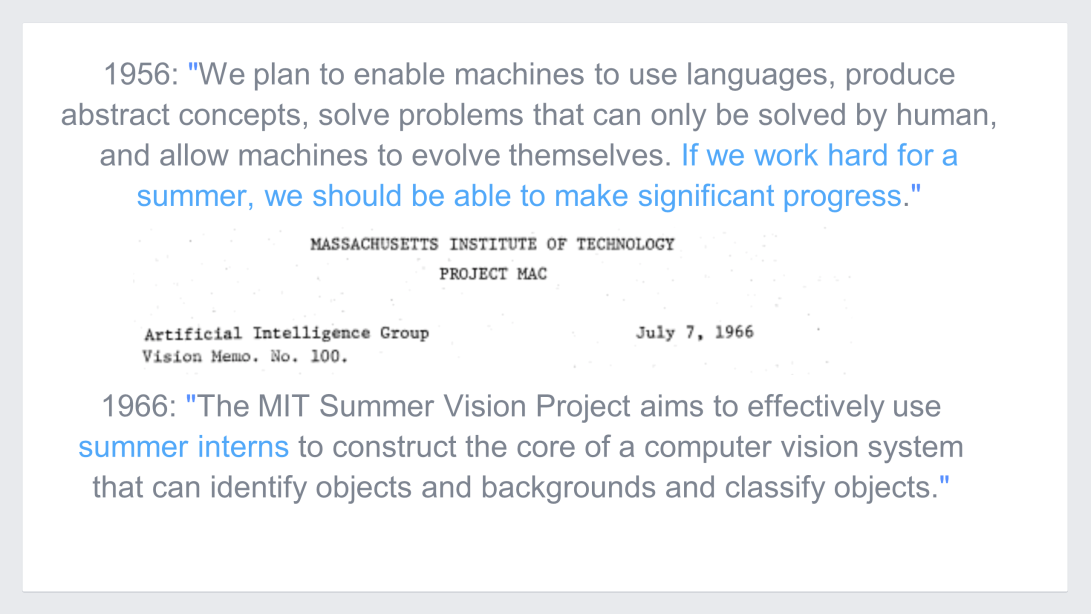

Only when external information, such as video, text, or voice commands, is converted into a machine-readable representation can AI accept and respond to it. Scientists have considered and studied this problem since the earliest days of AI.

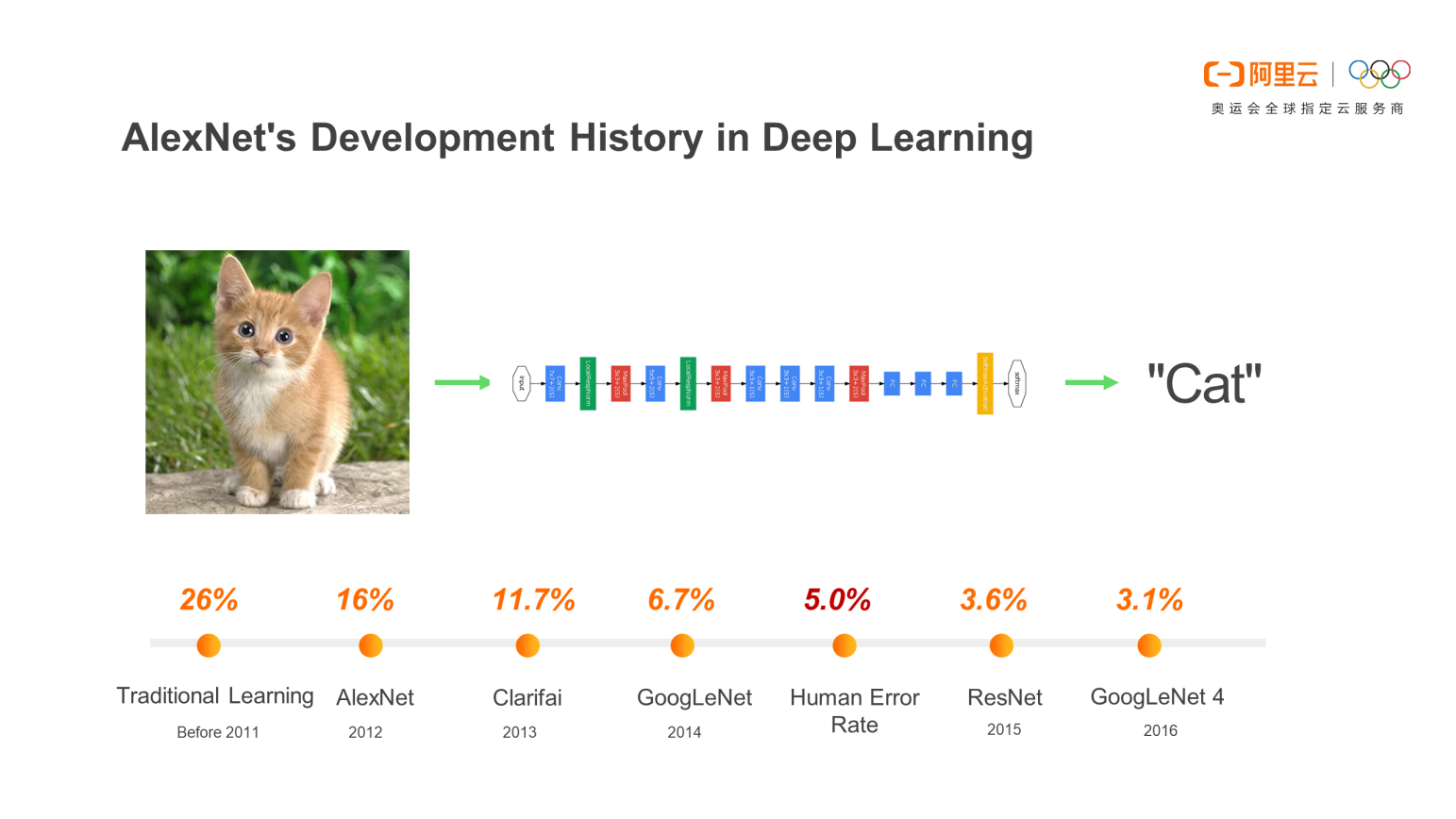

Subsequently, people began to discuss and research how to input information through visual perception. In 2012, Geoffrey Hinton of the University of Toronto in Canada and his student Alex Krizhevsky won the ImageNet competition with a deep neural network now known as AlexNet. In the following years, deeper neural networks were proposed, such as the well-known VGG and GoogLeNet. These neural networks clearly outperform traditional machine learning classification algorithms on image tasks.

Simply put, AlexNet aims to accurately identify the requested object among a large number of object categories. The application of this model has accelerated the development of the image recognition field, and it is now widely used.

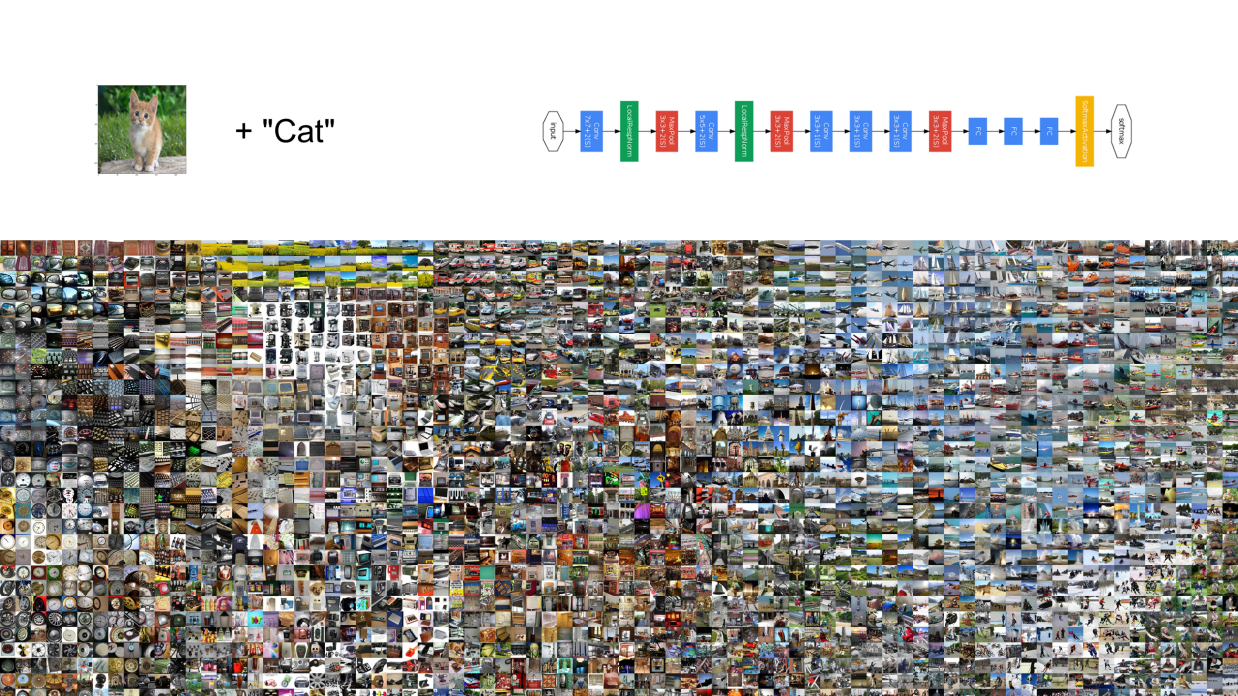

Like the human brain, neural networks use a multi-level learning model and become more and more complex as they continue to learn. Suppose you want to find the images tagged as "cat" among millions of images. You first train a visual network model on an extremely large dataset. Then, more complex training is implemented through model iteration.

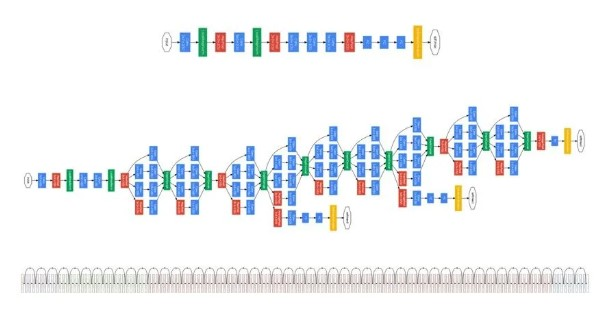

At present, the commonly used ResNet model has a depth of more than one hundred layers and incorporates some recent research findings, such as the shortcut (skip) connections shown at the bottom of the figure below. This allows users to quickly and effectively train a deep network. Ultimately, this solves the problem of visual perception.
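The bridge (shortcut) connections mentioned above can be illustrated with a minimal sketch. It is written in plain Python for readability and is not the actual ResNet implementation: the helper names `relu`, `linear`, and `residual_block`, the two tiny linear layers, and the 3-element input are all assumptions made for illustration. The point it shows is that the block outputs `x + F(x)`, so the input can pass straight through when the learned part `F` contributes nothing.

```python
def relu(v):
    """Element-wise ReLU activation."""
    return [max(0.0, a) for a in v]

def linear(v, weights):
    """Multiply vector v by a weight matrix given as a list of rows."""
    return [sum(w * a for w, a in zip(row, v)) for row in weights]

def residual_block(x, w1, w2):
    """Compute relu(x + F(x)), where F is two small linear layers (illustrative)."""
    h = relu(linear(x, w1))   # first layer of the residual branch
    f = linear(h, w2)         # second layer: the residual F(x)
    # Shortcut connection: add the unchanged input back before the activation.
    return relu([a + b for a, b in zip(x, f)])

x = [1.0, -2.0, 3.0]
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
zeros = [[0.0] * 3 for _ in range(3)]

# With zero weights in the last layer, F(x) = 0 and the block reduces to relu(x).
y = residual_block(x, identity, zeros)
print(y)  # [1.0, 0.0, 3.0]
```

Because the shortcut carries the input forward unchanged, stacking many such blocks does not force every layer to relearn the identity mapping, which is one common explanation for why very deep networks of this kind remain trainable.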

This solution uses AI to identify aircraft types, boarding doors, and airport vehicles and reflect them on actual maps. It also allows users to see an aircraft's trajectory during flight. This information can be used as input information for AI management, making the operation of airports more convenient and effective.

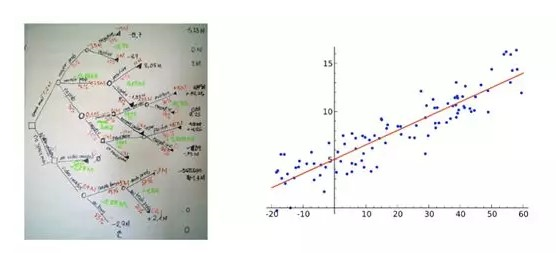

As mentioned earlier, deep learning is an important pattern and method of perception. Deep learning algorithms primarily involve:

After perception, the AI system needs to make decisions. Deep learning is a black-box operation that can learn and perceive external information, but it cannot explain the causes of what it perceives. Analysis and feedback therefore require decision-making capabilities.

Examples of traditional machine learning mainly include decision tree algorithms and logistic regression. For example, the process by which banks issue loans is a decision-making process that outputs a decision after balancing various factors. We can use a decision tree to output a "Yes" or "No" judgment on whether to grant a loan. Logistic regression models the relationship between input features and the probability of a binary outcome. It is a mathematical method that outputs a precise result.
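To make the loan example concrete, here is a small sketch of both techniques in plain Python. Everything specific in it is an assumption for illustration only: the feature names (`income`, `debt_ratio`, `has_default`), the thresholds, and the weights are invented, not taken from any real bank or from the talk. In practice both models would be learned from historical data rather than written by hand.

```python
import math

def loan_decision_tree(income, debt_ratio, has_default):
    """Hand-written decision tree: every branch is a rule a person can read."""
    if has_default:                      # hypothetical rule: prior default -> reject
        return "No"
    if income >= 50_000:                 # hypothetical income threshold
        return "Yes" if debt_ratio < 0.5 else "No"
    return "Yes" if debt_ratio < 0.2 else "No"

def logistic_probability(features, weights, bias):
    """Logistic regression: sigmoid of a weighted sum of the input features."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

decision = loan_decision_tree(income=60_000, debt_ratio=0.3, has_default=False)
print(decision)  # Yes

# With all features at zero and zero bias, the model is undecided.
p = logistic_probability([0.0, 0.0], weights=[1.5, -2.0], bias=0.0)
print(p)  # 0.5
```

Both models are small enough that each rule or weight can be inspected directly, which is the explainability property that matters in finance and risk control.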

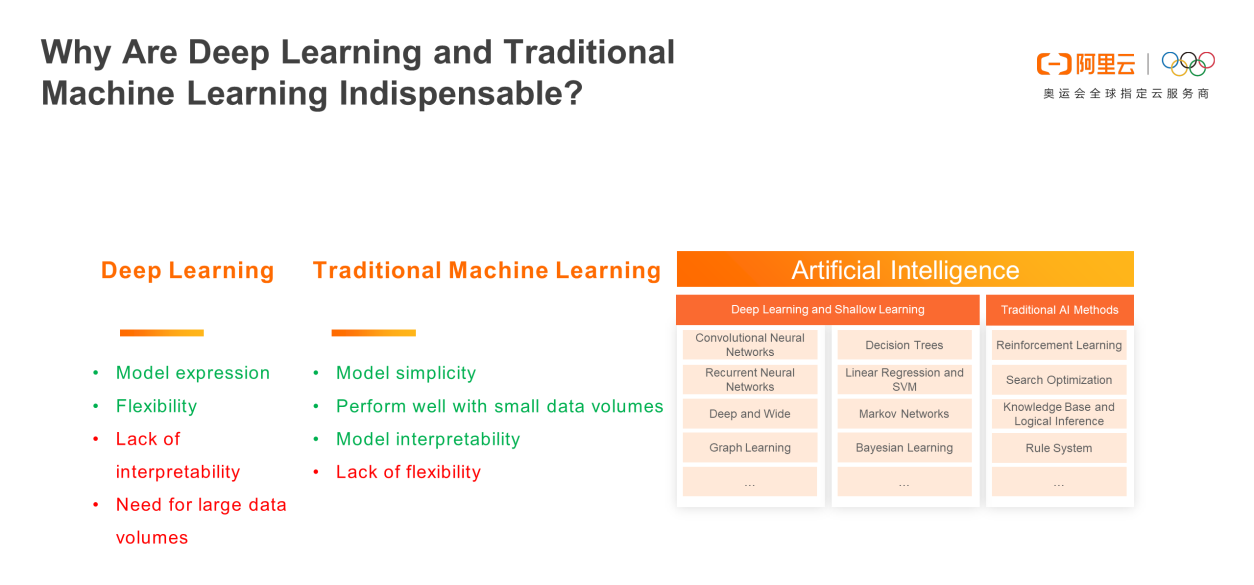

In fact, deep learning and traditional machine learning are complementary. Deep learning uses neural network architectures to solve many perception problems, such as computer vision and speech recognition, but it cannot explain its results. Traditional machine learning does not provide such convenient perception functions. However, its models are relatively small and can be directly explained, which is necessary in finance and risk control scenarios.

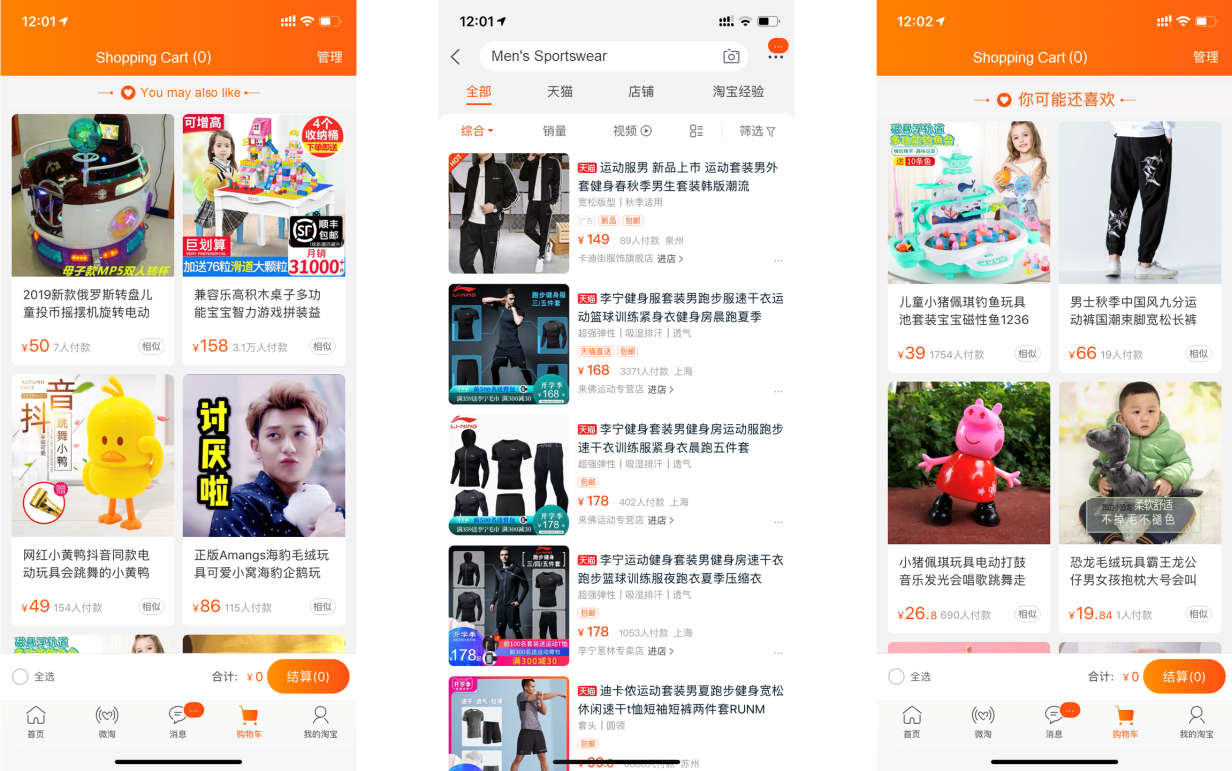

AI has long been applied in the advertising field. Taobao is one of the most common advertising scenarios. Sellers first investigate user preferences based on consumers' personal browsing information and then push products related to consumer searches through the intelligent recommendation system. The wide application of such intelligent algorithms makes user information mining more efficient and precise.

Both perception and decision-making are dependent on algorithms.

With the rapid development of algorithms, corresponding infrastructure support is becoming increasingly important. This requires the support of AI systems. Two essential factors in the construction of AI or machine learning systems are algorithms and computing power. Algorithm innovation is driven by breakthroughs in computing power.

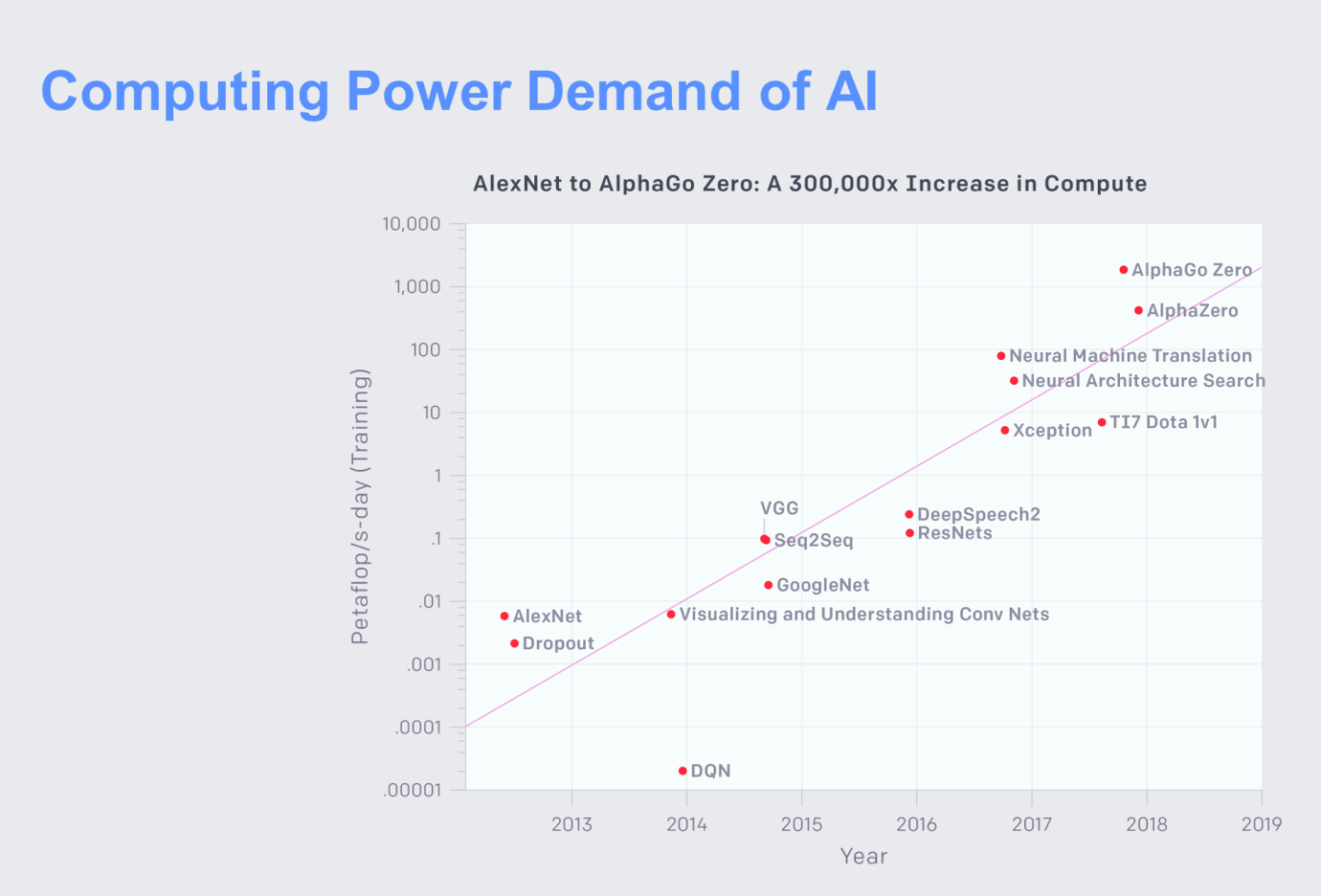

The following figure shows the computing power required by AI up to 2019. From AlexNet to AlphaGo Zero, the demand for computing power increased by about 300,000 times. Given this situation, algorithm iteration and algorithm implementation impose higher requirements on the system.

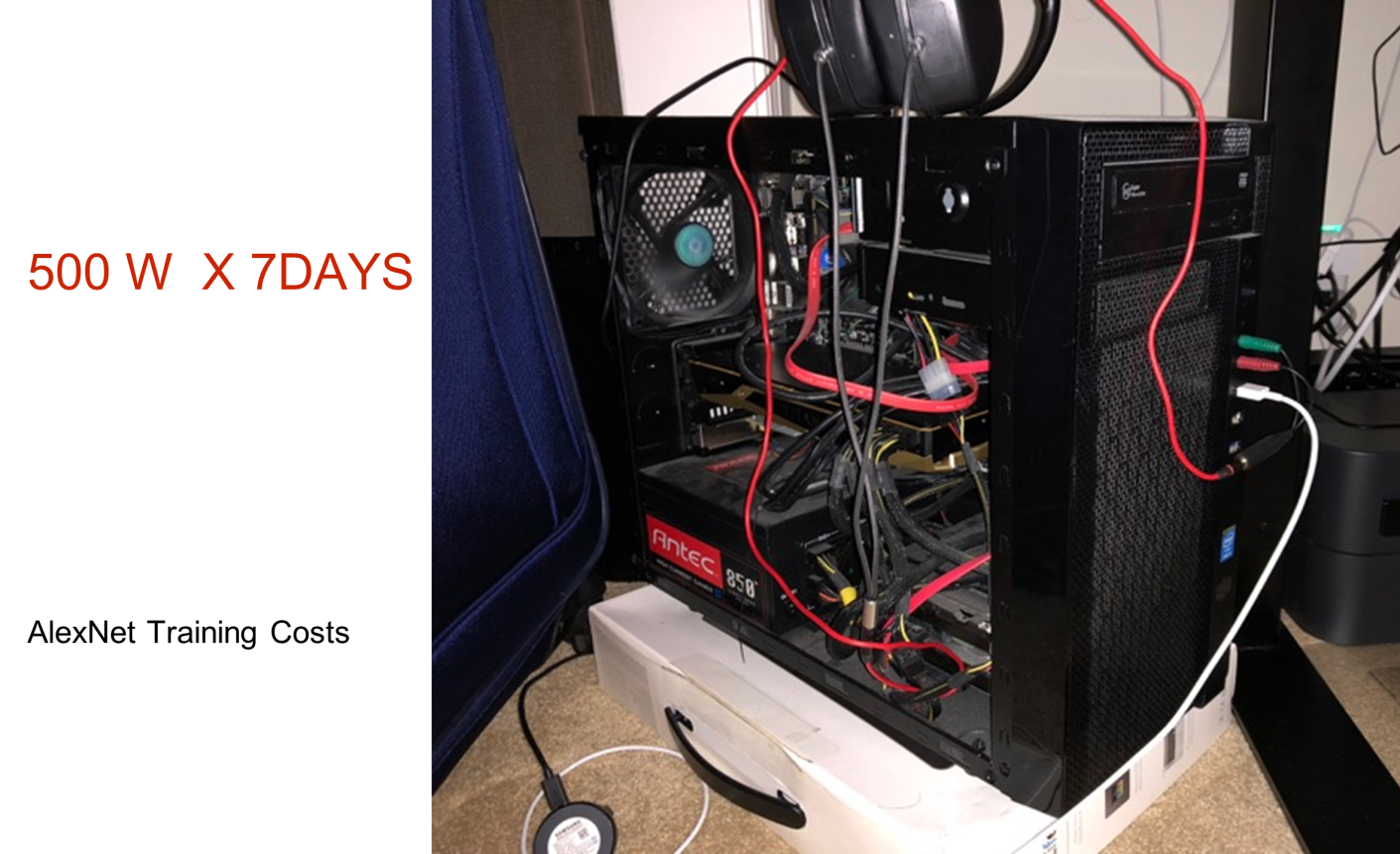

The following figure shows AlexNet's system in 2013. With just a GPU added to a single machine, training drew about 500 watts of power and took about 7 days. This means the iteration cycle of the business model was about one week.

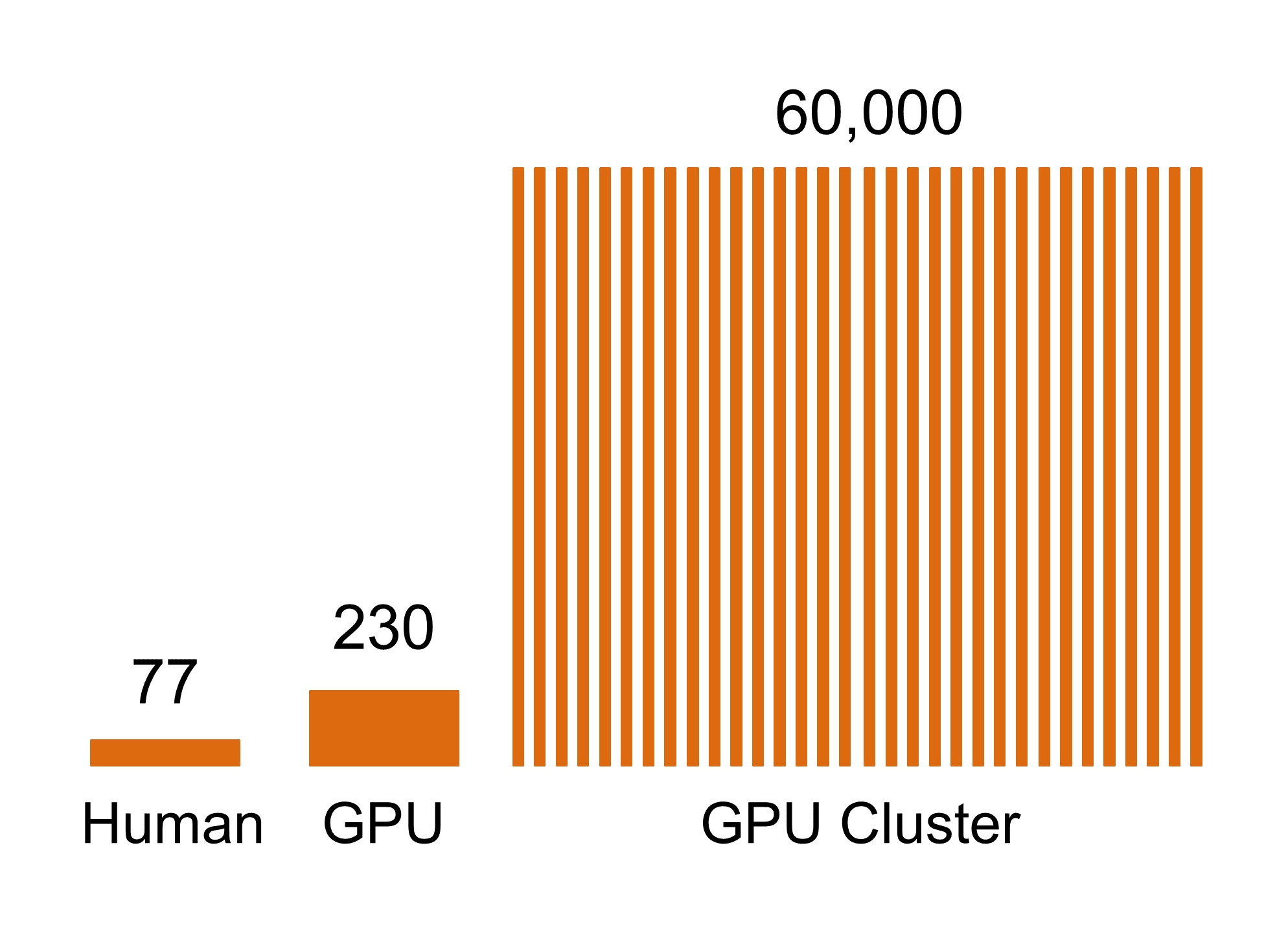

Today, businesses need to develop ad recommendation and other models rapidly, so a one-week model iteration cycle is far too long. Therefore, more and more people are interested in using large-scale clusters or chips to provide higher computing power for AI systems. According to a comparison made by MIT in 2014, one person can process about 77 images in one minute, whereas a single GPU can process 230 images in the same period. Although the processing speed of a single GPU is not much greater than that of a human, we can achieve larger-scale and faster computing through a GPU cluster. As shown in the following figure, a cluster of 512 GPUs can process 600,00 images in one minute.

When designing an AI system, you need to focus on how to implement high-performance storage, achieve fast communication between machines, and maintain the stability of distributed clusters. Currently, Alibaba Cloud has an internal Eflops platform that can perform 10^18 computations in three minutes while drawing 128 kW of power. Such a system was unimaginable before 2015. Our ability to achieve such capabilities is mainly due to large-scale clusters and the scalability of the system's underlying chips.

At present, many enterprises around the world, especially those in China, are researching and developing higher-performance chips, and Alibaba is no exception. In 2019, Alibaba released the world's highest-performance AI inference chip, Hanguang 800. It was tested in actual City Brain and Aviation Brain scenarios and reached a peak performance of nearly 80,000 images per second. This represents a performance increase of about 4000% over the previous generation.

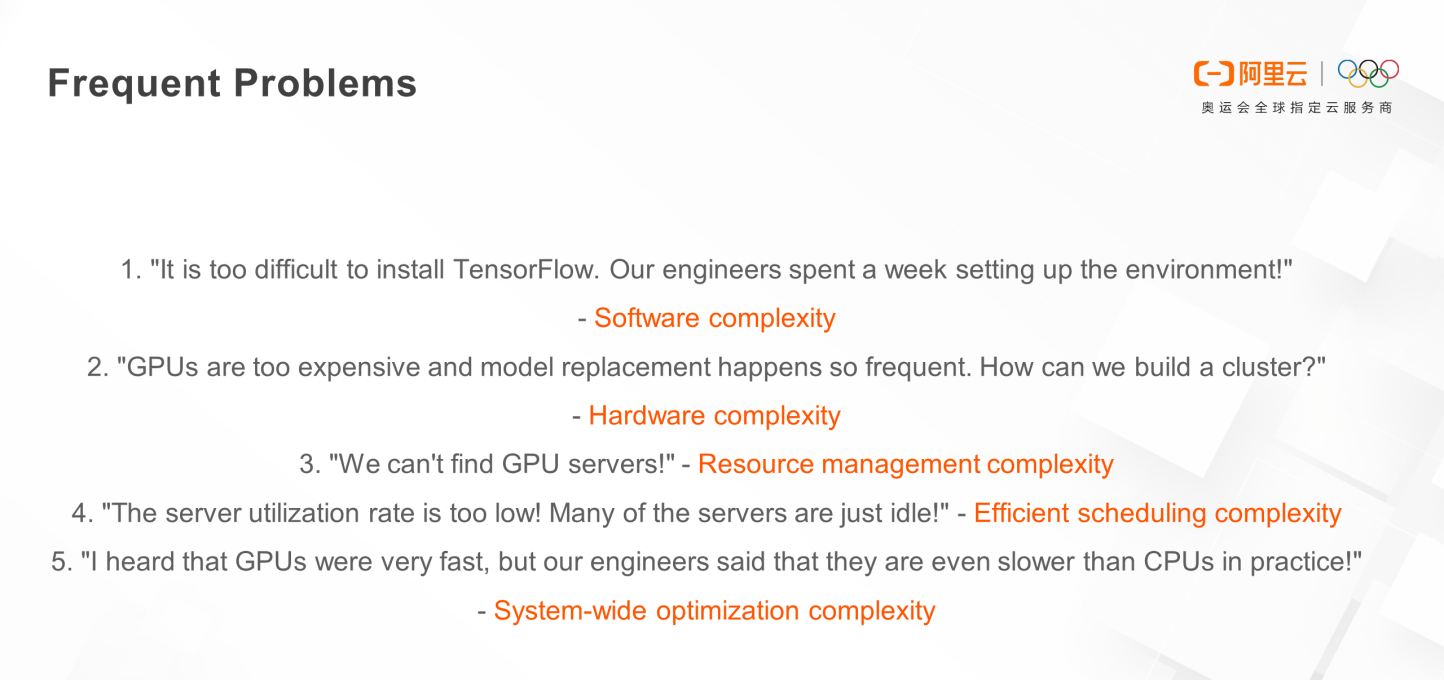

Increasing system complexity poses a series of problems in software and hardware design, resource management, efficient scheduling, and system-wide optimization. This is a challenge that all parties must face in the system development process.

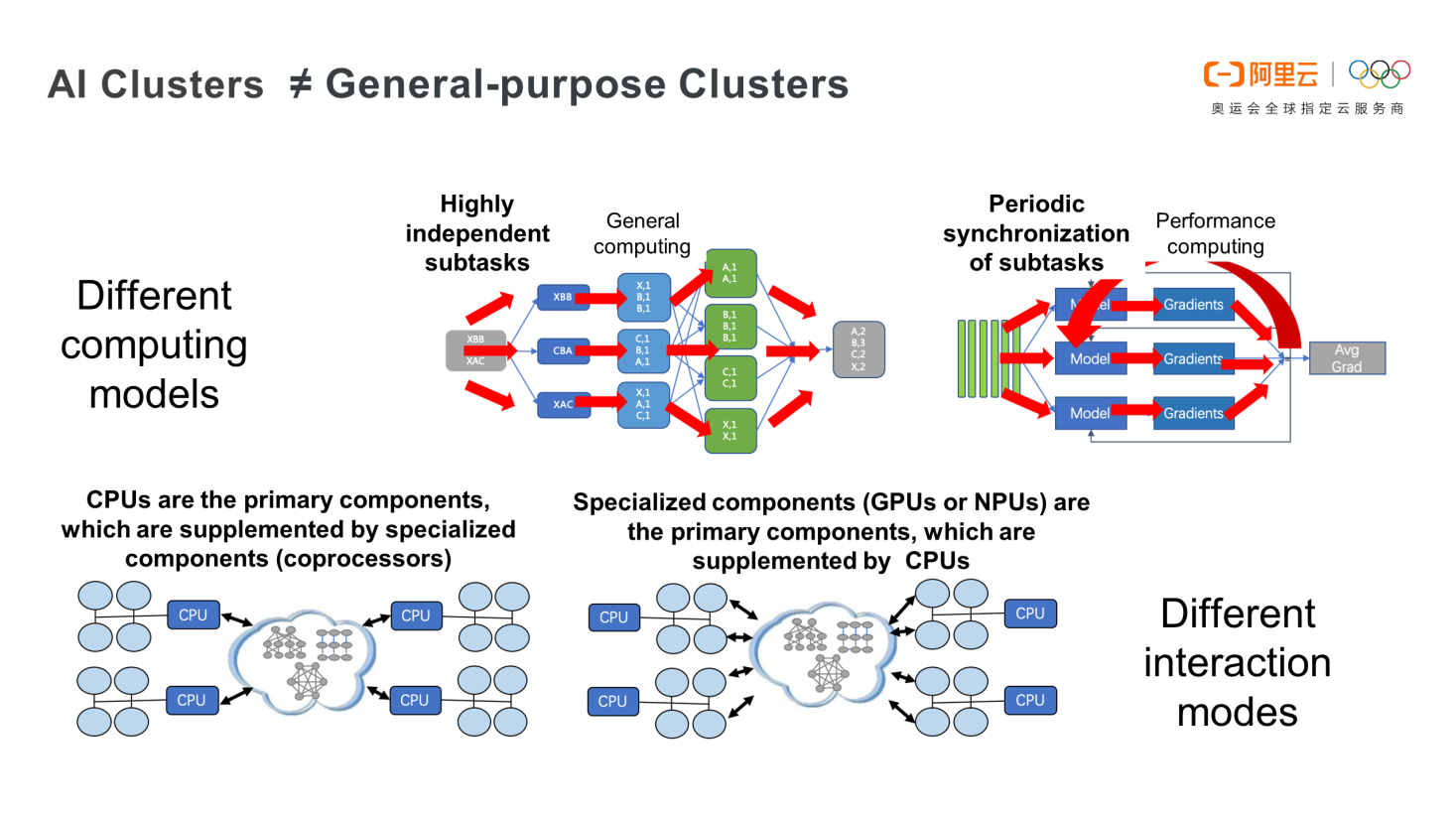

Note that an AI cluster is not a general-purpose cluster. During AI training, subtasks need to be periodically synchronized, and high-performance communication is required between different machines. In most cases, specialized GPU- or NPU-based components are used. These different computing models and interaction modes currently pose a major challenge to AI training.
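The periodic synchronization described above can be sketched as one step of synchronous data-parallel training. This is a simplified, single-process illustration in plain Python, not a description of Alibaba's platform: the function names `all_reduce_mean` and `sync_sgd_step`, the two workers, and the learning rate are assumptions. In a real cluster the averaging would be a collective communication operation across machines, which is why interconnect speed matters so much.

```python
def all_reduce_mean(worker_grads):
    """Average per-worker gradient vectors element-wise.

    This is the result an all-reduce (mean) would deliver to every worker.
    """
    n = len(worker_grads)
    return [sum(vals) / n for vals in zip(*worker_grads)]

def sync_sgd_step(weights, worker_grads, lr):
    """Apply the same averaged gradient everywhere so model replicas stay identical."""
    avg = all_reduce_mean(worker_grads)
    return [w - lr * g for w, g in zip(weights, avg)]

weights = [1.0, 2.0]
# Gradients computed by two hypothetical workers on different data shards.
grads = [[0.5, 1.0], [1.5, 3.0]]

new_weights = sync_sgd_step(weights, grads, lr=0.5)
print(new_weights)  # [0.5, 1.0]
```

Every worker must wait for the averaged gradient before starting the next step, so the slowest machine or network link sets the pace for the whole cluster.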

AI is used in various Alibaba business scenarios. Therefore, we can use practical AI applications to optimize the platform design. Some examples include Taobao Mobile's Pailitao (Snap-and-search) classification model with millions of categories, Taobao's Voice + NLP solution, and Alimama's advertising recommendation system.

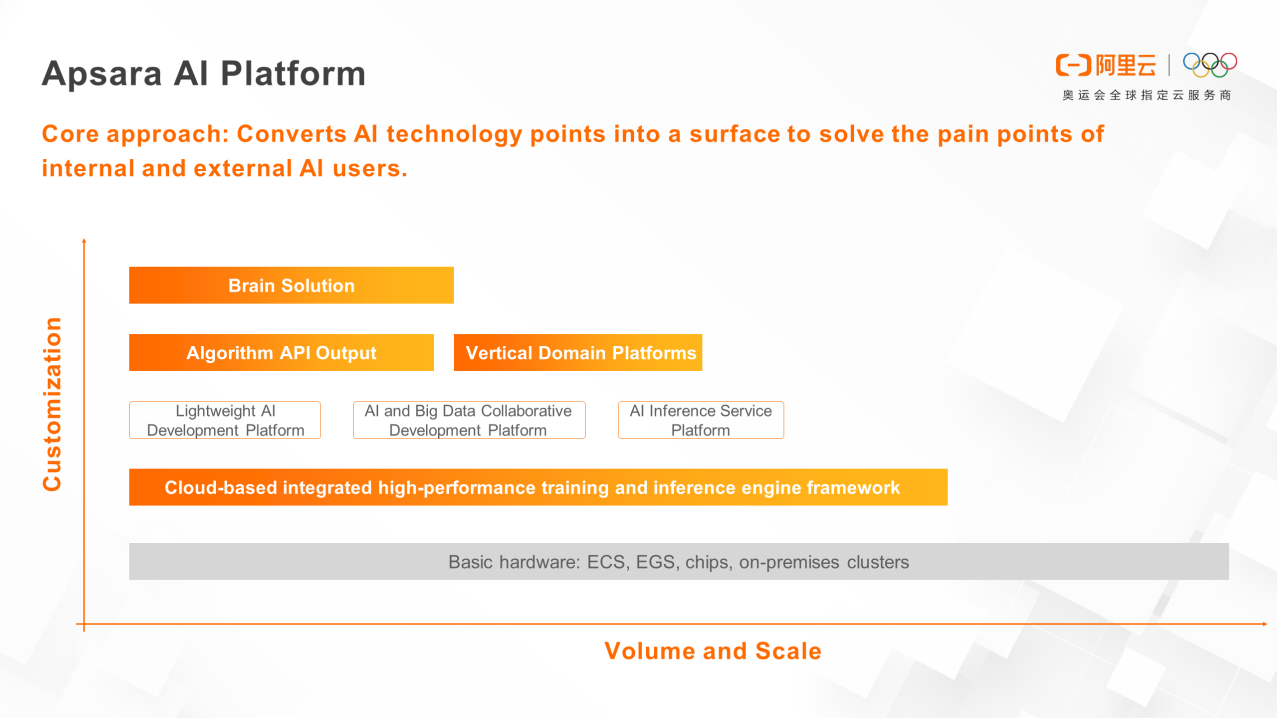

The optimized Apsara AI platform is divided into three layers, which are the underlying infrastructure at the bottom, the training and inference framework in the middle, and the development platform on the top. There are three important types of AI platforms:

These three types of platforms support the output of algorithm APIs and the development of vertical domain platforms and brain solutions.

In the deep learning field, Stanford University launched a benchmark called DAWNBench. Compared with the previous best performance, Alibaba Cloud's machine learning solution has improved the performance by about 10%.

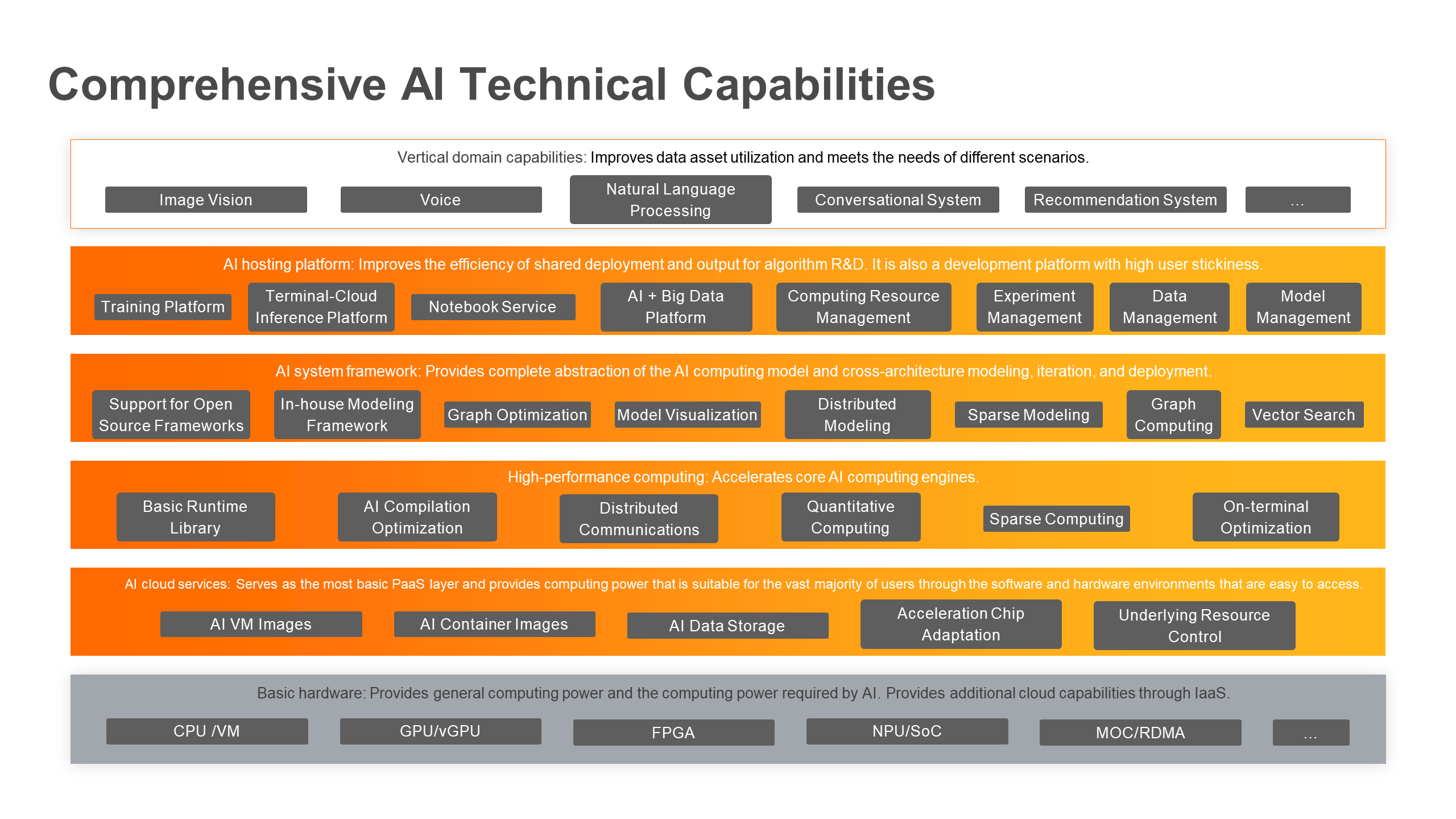

Today, AI technology capabilities play a significant role in improving asset utilization and meeting the needs of different scenarios. Comprehensive AI technical capabilities mainly rely on the following infrastructure and services:

AI is for intelligent computing, and big data is for data computing. The two capabilities complement each other.

The algorithms and computing power mentioned earlier require the support of large data volumes. Data is an important embodiment of the value of algorithms and computing power.

The following two images show papal audiences from 2005 and 2013, respectively. With the development of mobile Internet, data has increased exponentially, and the larger volumes of available data improve the performance of deep learning.

In 1998, the training data of the small MNIST system was only 10 MB. The training data of ImageNet in 2009 was 200 GB, WebVision had a 3 TB dataset in 2017, and the visual system of a typical product requires 1 PB of data. Massive data volumes have helped Alibaba improve its performance almost linearly.

Let's look at a common scenario we are all familiar with to illustrate how larger data volumes can improve performance. In the X-ray medical diagnosis field, studies show that a doctor's ability to diagnose diseases from X-ray images is directly correlated to the number of X-ray images they have viewed. The more images they examine, the higher their diagnostic accuracy. Similarly, current medical engine systems can be trained on more data through large-scale computer systems to achieve more accurate medical diagnoses.

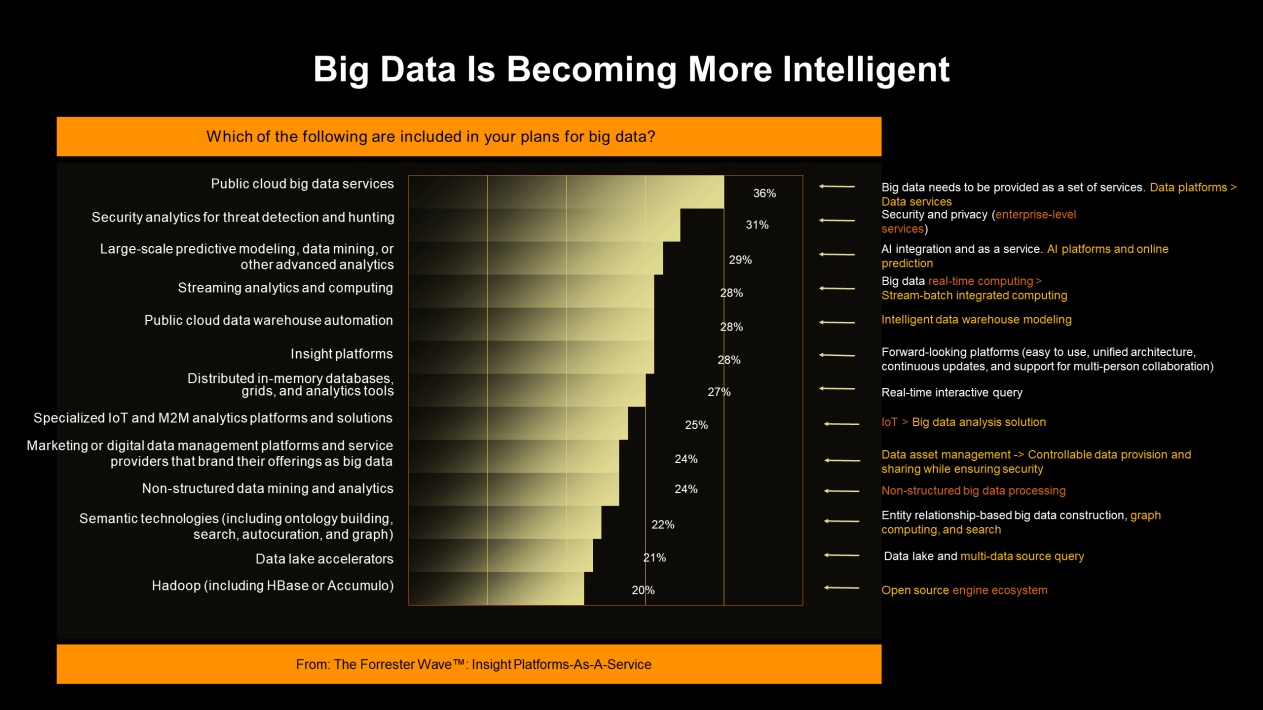

The following figure shows the trends in the big data field. At present, the big data field aims to extract more information, implement real-time computing, integrate with AI platforms, and perform online prediction. All these trends show the increasing intelligence of big data.

Currently, different types of data, including structured, semi-structured, and unstructured data, are obtained from multiple data sources and stored in data warehouses. To leverage the potential value of this data, intelligent computing is required. In an ad recommendation scenario, the data sources are user clicks, views, and purchases on Taobao. Data is written into data warehouses through offline or real-time synchronization and offline or real-time extract-transform-load (ETL). Then, various data models are generated and trained based on data warehouse or data lake solutions. Finally, the training results are output through data services. As you can see, the process of data understanding and usage is becoming more intelligent.

A few years ago, hybrid transactional/analytical processing (HTAP) combined OLTP and OLAP. OLAP can be further divided into big data, offline, and real-time analysis, and different engines suit different data volumes. Currently, data services have become increasingly important. In some intelligent customer service scenarios, data extraction models are required for real-time AI inference services and applications. Therefore, it is critical to find a way to combine analytics and services. This is why we are currently pursuing hybrid serving and analytical processing (HSAP). Combined with AI, we can distill insights from data through both offline and real-time data warehouses, and present these insights to users through online services.
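The ad recommendation data flow described above can be sketched as a tiny extract-transform-load pipeline in plain Python. It is only an illustration of the idea: the event records, the `ACTION_WEIGHT` scores, and the in-memory dictionary standing in for a data warehouse are all hypothetical and do not reflect how Taobao or MaxCompute actually process data.

```python
from collections import defaultdict

# Hypothetical raw behavior log: user views, clicks, and purchases.
raw_events = [
    {"user": "u1", "item": "shoes", "action": "view"},
    {"user": "u1", "item": "shoes", "action": "click"},
    {"user": "u1", "item": "hat", "action": "view"},
    {"user": "u2", "item": "shoes", "action": "purchase"},
    {"user": "u2", "item": None, "action": "view"},  # malformed record
]

# Assumed interest weights per action; real systems would learn these.
ACTION_WEIGHT = {"view": 1, "click": 2, "purchase": 5}

def extract(events):
    """Keep only well-formed events with a known action."""
    return (e for e in events
            if e["item"] is not None and e["action"] in ACTION_WEIGHT)

def transform(events):
    """Aggregate events into a per-(user, item) interest score."""
    scores = defaultdict(int)
    for e in events:
        scores[(e["user"], e["item"])] += ACTION_WEIGHT[e["action"]]
    return scores

def load(scores):
    """Write the features into a user-keyed table (stand-in for a warehouse)."""
    table = defaultdict(dict)
    for (user, item), score in scores.items():
        table[user][item] = score
    return {user: dict(items) for user, items in table.items()}

warehouse = load(transform(extract(raw_events)))
print(warehouse)  # {'u1': {'shoes': 3, 'hat': 1}, 'u2': {'shoes': 5}}
```

A recommendation model would then be trained on tables like `warehouse`, and the same three stages could run either as a nightly batch or continuously on a stream of events.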

Alibaba has developed AI-powered big data methodologies and solutions in our own applications. The offline computing (batch processing), real-time computing (stream computing), interactive analysis, and graph computing scenarios in the Double 11 Shopping Festival have been combined with the Apsara AI platform to provide a new generation of Apsara big data products empowered by AI.

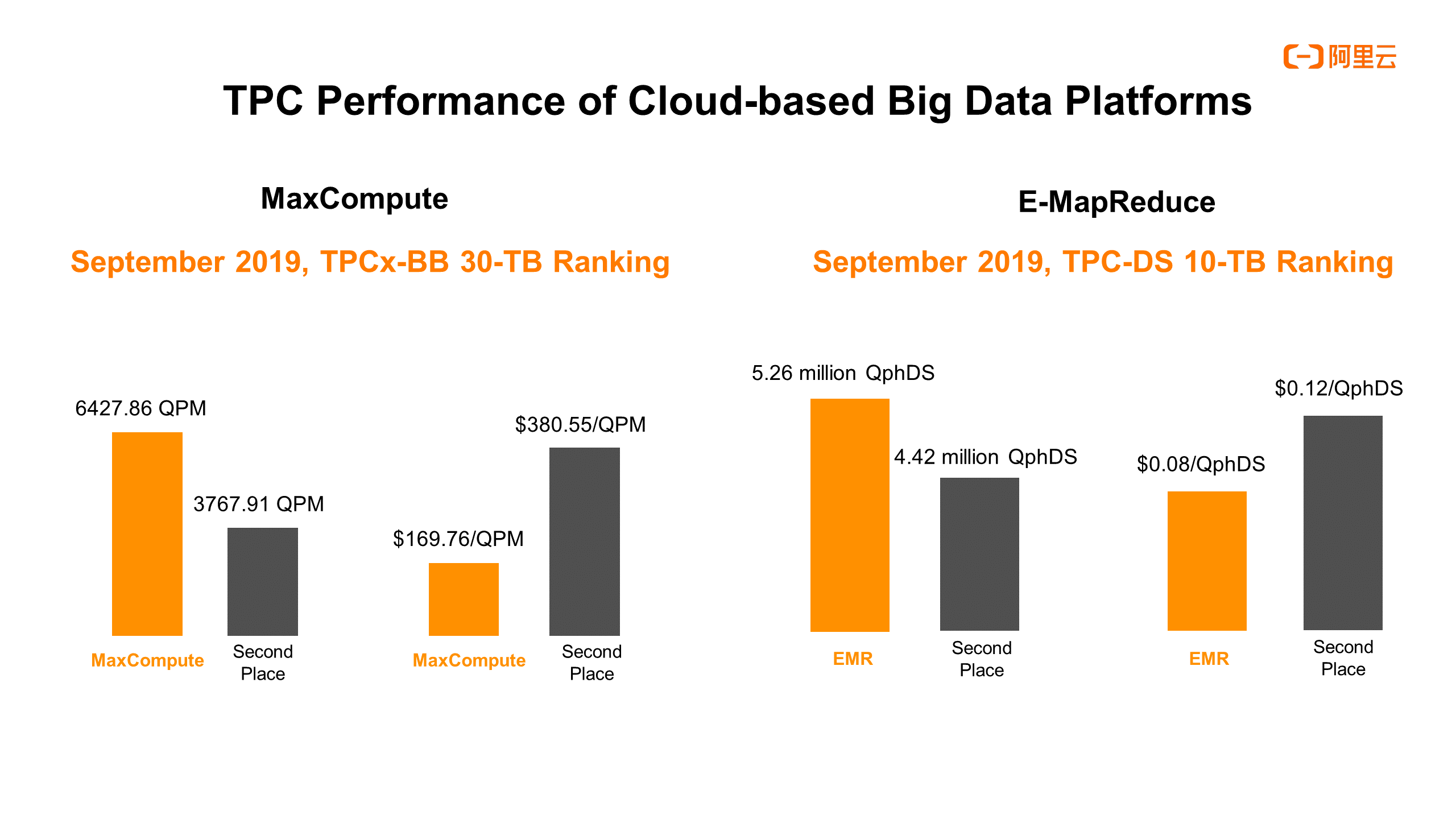

Similar to AI, big data focuses on performance. In 2019, Alibaba Cloud's big data platforms MaxCompute and E-MapReduce showed significant advantages in computing performance and cost-effectiveness as measured by TPC benchmarks. The following figure shows the benchmark results.

Alibaba's AlimeBot currently provides users with an intelligent voice interaction service in customer service scenarios by applying deep learning and intelligent perception technologies. To achieve intelligent performance, it must be closely linked with big data business systems, such as logistics or user data systems.

That brings us to our next question: How should an enterprise embrace AI? Put simply, to make AI a reality, we should start from application needs and gradually pursue technological innovation, just as Edison improved the electric light bulb. The cloud provides a low-cost, high-performance, and highly stable infrastructure, but for us, the key is to clearly define our needs.

Over the past few years, the AI field has been working on algorithm innovation and demonstrations, but this is far from enough.

AI algorithms are only a part of the system. When implementing AI, enterprises must also consider how to collect data, obtain useful features, and perform verification, process management, and resource management.

AI is not all-powerful, but it is also impossible to ignore. When enterprises embrace AI, they must start from their business considerations first. As the volume of data and the number of algorithms increase, it is essential to build teams of data engineers and algorithm engineers who know the business. This is the key to the success of intelligent enterprises. All the algorithm, computing power, and data solutions we have mentioned can be implemented by using the services and solutions currently available in the cloud, which can help enterprises implement AI more quickly.
