By Zi You & Wang Cheng

With the rapid development of large model technology, putting AI applications into production places many new demands on the underlying infrastructure, particularly in terms of security, efficiency, and performance. The AI gateway is one of the crucial components of this AI infrastructure.

The AI gateway is a deep evolution of the traditional API gateway tailored for large model scenarios. While ensuring the fundamental capabilities of a basic gateway, it has been specially enhanced to cater to the characteristics of AI services:

● Scenario Adaptation: Specifically optimized for long connections, high concurrency, and large bandwidth, adapting to the high-latency characteristics of large model services.

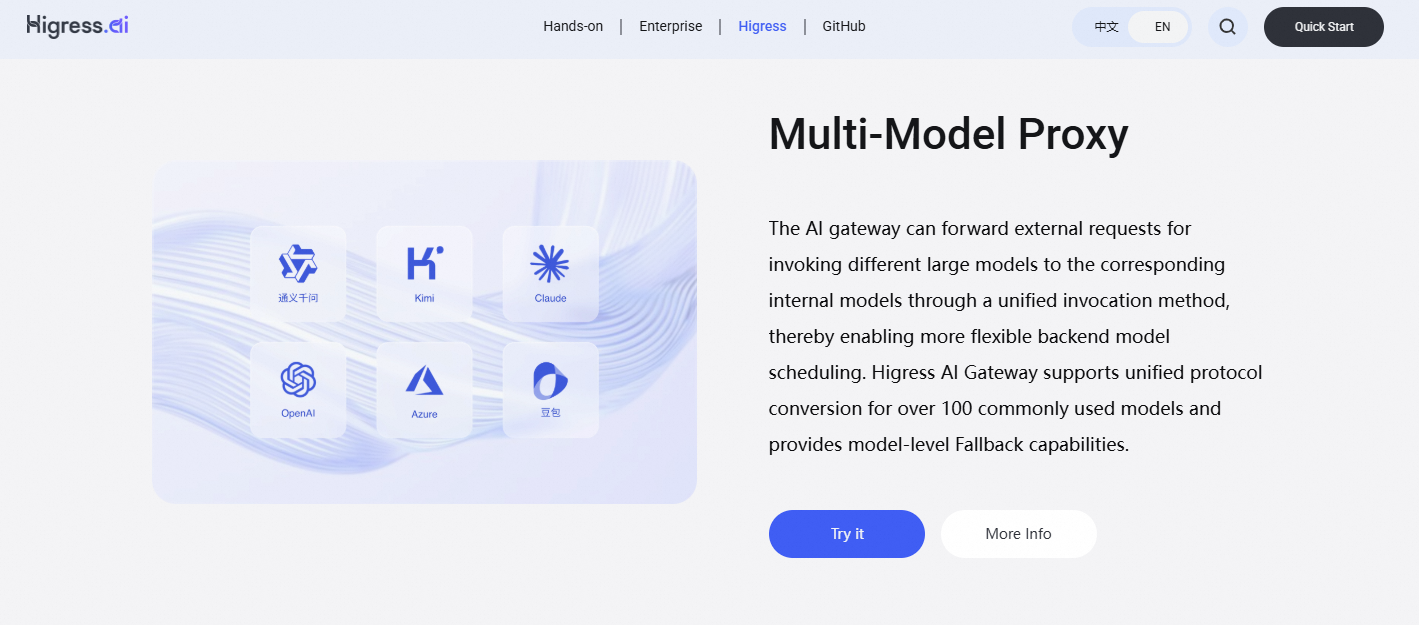

● Intelligent Traffic Management: Supports dynamic routing for multiple models, intelligent load balancing, API Key rotation scheduling, and semantic request caching.

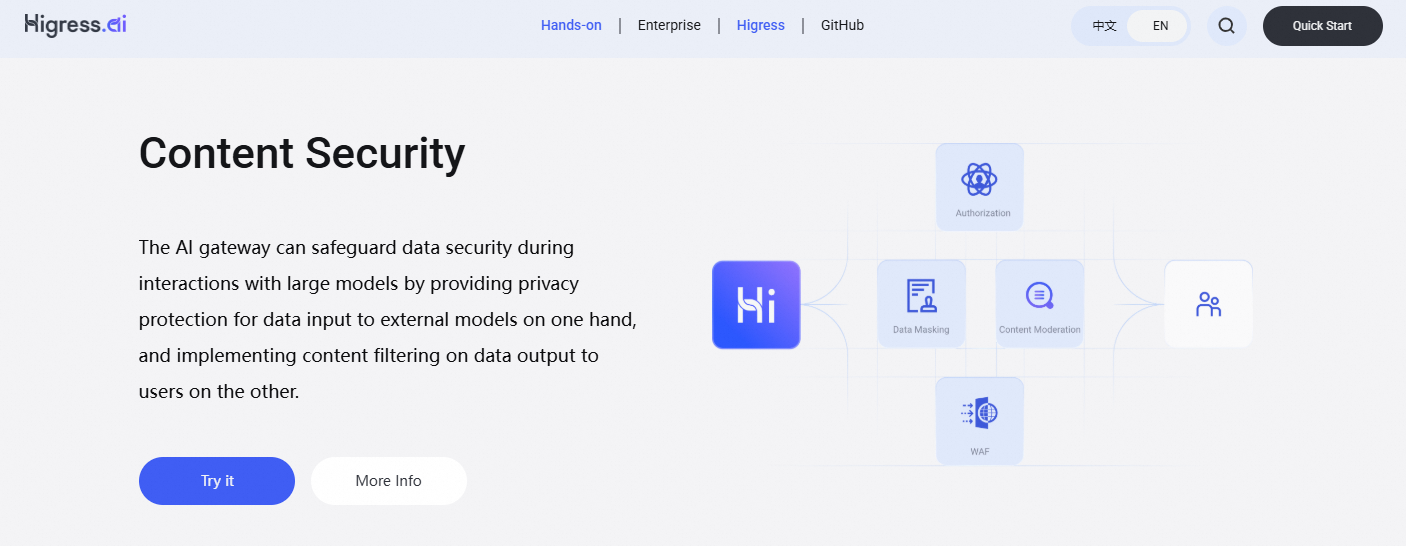

● Safety and Compliance Assurance: Built-in security capabilities including content safety filtering, token quota management, and multi-level rate limiting and circuit breaking.

● Precise Cost Control: Provides operational tools such as call auditing and analysis, grayscale (canary) traffic release, and automatic retry of failed requests.
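Semantic request caching, listed under Intelligent Traffic Management above, is the capability least familiar to readers coming from classic API gateways. The following is a minimal Python sketch of the general idea, not the Higress implementation. It assumes each prompt has already been turned into an embedding vector by some external embedding model (the vectors below are made up), and it returns a cached response when a new prompt's embedding is within a cosine-similarity threshold of one seen before. The `SemanticCache` class and the 0.95 threshold are hypothetical.

```python
import math
from typing import Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Hypothetical in-memory semantic cache keyed by prompt embeddings."""

    def __init__(self, threshold: float = 0.95) -> None:
        self.threshold = threshold
        self.entries: list[tuple[list[float], str]] = []

    def lookup(self, embedding: list[float]) -> Optional[str]:
        # Find the most similar cached prompt; use it only if it clears the threshold.
        best_score, best_response = -1.0, None
        for cached_embedding, response in self.entries:
            score = cosine_similarity(embedding, cached_embedding)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def store(self, embedding: list[float], response: str) -> None:
        self.entries.append((embedding, response))


if __name__ == "__main__":
    cache = SemanticCache(threshold=0.95)
    # Made-up embeddings: the first two stand for near-paraphrased prompts.
    cache.store([0.9, 0.1, 0.0], "Higress is a cloud-native API gateway.")
    print(cache.lookup([0.88, 0.12, 0.01]))  # similar enough: cached answer returned
    print(cache.lookup([0.0, 0.2, 0.9]))     # unrelated: None, forward to the model
```

A production cache would delegate the similarity search to a vector store instead of a linear scan, and the threshold trades hit rate against the risk of answering a subtly different question with a stale response.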

Through a unified access layer protocol, the AI gateway helps developers efficiently integrate and manage multi-source AI services, reducing access and operational costs in complex scenarios. For a more comprehensive description of the core capabilities and use cases of the AI gateway, please refer to the following two articles:

● Wang Cheng & Cheng Tan: HigressAI - The 10 Essential Capabilities of an AI Gateway. The core capabilities of the AI gateway are still in the early stages of definition, but they are closely tied to the rapid adoption of AI Agents and LLM APIs.

● Wang Cheng & Ji Yuan: Higress has organized for you the 8 Common Use Cases of the AI Gateway. These eight use cases are the most frequent scenarios we have seen while serving open-source and commercial users. As the capabilities of the AI gateway expand and mature, the use cases will gradually diversify.

Higress has launched a new sub-site specifically for AI scenarios, providing both a Chinese version and an international version (Beta) to serve global developers.

Original main site: https://higress.cn/

New sub-site: https://higress.ai/

Beyond the usual best-practice articles, community support, enterprise edition information, GitHub links, and documentation, Higress.ai includes a dedicated "Scenario Experience" feature for quickly trying out the AI gateway, available as both an open-source and a cloud experience. We will also debut the latest capabilities of the Higress AI gateway on this site. For example, we will soon launch the AI Guideline feature, which lets developers quickly convert Nginx/Kong Lua plugins into Higress Wasm plugins using AI programming tools such as Tongyi Lingma or Cursor.

After the launch of Higress.ai, you may have a few questions. We answer the most common ones below.

Higress is a cloud-native API gateway based on Istio and Envoy, integrating traffic gateway, microservice gateway, security gateway, and AI gateway into one. It allows for the creation of Wasm plugins using Go/Rust/JS and provides dozens of ready-to-use generic plugins along with an out-of-the-box console.

Higress.cn is the main site of Higress, serving as the official technical portal and one-stop resource platform, focusing on providing developers with core capability demonstrations related to the Higress technology stack, open-source ecosystem support, and best practices for enterprise users.

Among these capabilities, the AI gateway, as a key component of modern AI infrastructure, is closely tied to the evolution of the large language model ecosystem. New technologies in the LLM stack, such as Retrieval-Augmented Generation (RAG), Agents, and the MCP protocol, keep emerging and open up multiple directions for the AI gateway to evolve in protocol optimization, traffic governance, and model scheduling.

To better showcase the richness of AI gateway content to developers, Higress.ai was born, aiming to provide independent channels for experiencing the AI gateway and showcasing typical AI application scenarios like agent development framework integration and LLM API governance. Additionally, Higress.ai will highlight Higress's explorations in AI gateways, collaborating with AI developers to define the technical direction of the next generation of AI-native gateways. Furthermore, Higress.ai will serve as our starting point for serving global AI developers.

It is important to note that the AI gateway is not a new form independent of the API gateway. Essentially, it is still a type of API gateway but is specifically extended to meet new demands in AI scenarios. It is both an inheritance and an evolution of API gateways.

Does this mean Higress will now focus only on the AI gateway? Of course not.

In the AI era, Agents and large models place greater demands on the access layer, which must take on more of the work so that backend services are not overburdened. This creates a historic opportunity for AI gateways.

As early as June of last year, with the release of v1.4, we had already open-sourced many AI gateway capabilities. Our AI gateway work was therefore not a sudden idea prompted by the accelerated development of large models around the Spring Festival.

We believe that AI workloads and classic workloads will continue to converge, so that the capabilities of AI can be fully unleashed while both are managed uniformly at the access layer. Therefore, Higress will keep investing in the capabilities and experience of its traffic gateway, microservice gateway, and security gateway.

In terms of traffic gateways, Higress can serve as an Ingress gateway for K8s clusters and is compatible with numerous K8s Nginx Ingress annotations, enabling smooth and rapid migration from K8s Nginx Ingress to Higress.
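To illustrate what such a migration can look like, the sketch below builds a standard Kubernetes `networking.k8s.io/v1` Ingress as a Python dict (equivalent to the YAML you would normally apply with kubectl). It keeps the familiar ingress-nginx regex-rewrite annotations unchanged and only switches `ingressClassName` to `higress`. The host, service name, and port are hypothetical; the sketch assumes Higress was installed with its default ingress class `higress` and that these annotations are on the Higress compatibility list for your version, which is worth checking in the Higress documentation.

```python
import json


def build_ingress(host: str, service: str, port: int, ingress_class: str = "higress") -> dict:
    """Build an Ingress that reuses Nginx Ingress annotations.

    Only ingressClassName changes when the resource moves from Nginx Ingress to Higress;
    host, service, and port are placeholders.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": f"{service}-ingress",
            "annotations": {
                # Standard ingress-nginx annotations, kept as-is during migration.
                "nginx.ingress.kubernetes.io/use-regex": "true",
                "nginx.ingress.kubernetes.io/rewrite-target": "/$2",
            },
        },
        "spec": {
            "ingressClassName": ingress_class,
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                # /api/foo reaches the backend as /foo via capture group $2.
                                "path": "/api(/|$)(.*)",
                                "pathType": "ImplementationSpecific",
                                "backend": {
                                    "service": {"name": service, "port": {"number": port}}
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = build_ingress("demo.example.com", "demo-service", 8080)
    # kubectl accepts JSON too, e.g. (hypothetical file name):
    #   python build_ingress.py | kubectl apply -f -
    print(json.dumps(manifest, indent=2))
```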

In terms of microservice gateways, Higress can connect to various service discovery sources such as Nacos, ZooKeeper, Consul, and Eureka, and is deeply integrated with microservice technology stacks like Dubbo, Nacos, and Sentinel. Compared with traditional Java-based microservice gateways, Higress, built on the Envoy C++ core, can deliver better performance while significantly reducing resource usage and cost.

In terms of security gateways, Higress provides WAF capabilities and supports various authentication and authorization strategies, including key-auth, hmac-auth, jwt-auth, basic-auth, and OIDC.
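Of these strategies, HMAC authentication is the least self-explanatory: the client and the gateway share a secret, the client signs selected parts of the request, and the gateway recomputes the signature to verify it. The sketch below shows only that general principle using Python's standard `hmac` module. The string-to-sign layout and the function names are hypothetical; they do not reproduce the exact headers or canonical format expected by the Higress hmac-auth plugin, which are defined in its documentation.

```python
import base64
import hashlib
import hmac


def string_to_sign(method: str, path: str, date: str, body: bytes) -> str:
    # Hypothetical canonical form: method, path, date, and body digest, one per line.
    body_digest = base64.b64encode(hashlib.sha256(body).digest()).decode()
    return "\n".join([method.upper(), path, date, body_digest])


def sign(secret: bytes, method: str, path: str, date: str, body: bytes) -> str:
    """Client side: compute a Base64-encoded HMAC-SHA256 signature."""
    message = string_to_sign(method, path, date, body).encode()
    mac = hmac.new(secret, message, hashlib.sha256)
    return base64.b64encode(mac.digest()).decode()


def verify(secret: bytes, signature: str, method: str, path: str, date: str, body: bytes) -> bool:
    """Gateway side: recompute the signature and compare in constant time."""
    expected = sign(secret, method, path, date, body)
    return hmac.compare_digest(expected, signature)


if __name__ == "__main__":
    secret = b"shared-secret"  # placeholder; issued per consumer in practice
    date = "Mon, 17 Mar 2025 08:00:00 GMT"
    body = b'{"prompt": "hello"}'
    sig = sign(secret, "POST", "/v1/chat/completions", date, body)
    print(verify(secret, sig, "POST", "/v1/chat/completions", date, body))         # True
    print(verify(secret, sig, "POST", "/v1/chat/completions", date, b"tampered"))  # False
```

Including a date and a body digest in the signed string is what lets the gateway reject tampered bodies and, with a freshness check on the date, replayed requests.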

Higress's traffic gateway, microservice gateway, security gateway, and AI gateway capabilities are all available as a commercially enhanced cloud service; on Alibaba Cloud, this product is API Gateway.

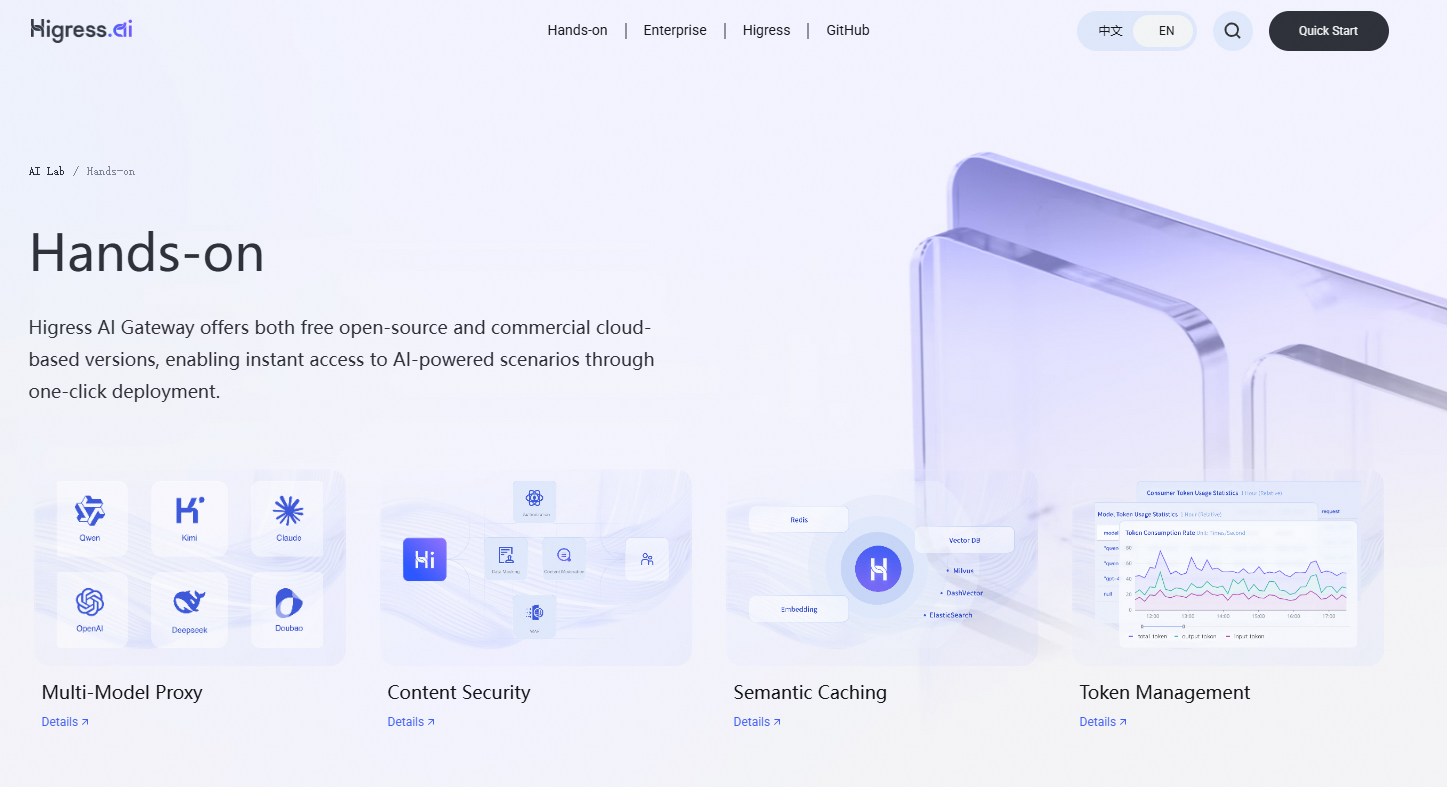

Higress.ai currently offers four experience scenarios: multi-model proxy, content safety, semantic caching, and token rate limiting. Each can be tried with one click from the top navigation bar of the homepage.

Alternatively, you can click on Scenario Experience to enter the experience topic page.
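Token rate limiting, one of the four scenarios listed above, differs from classic request rate limiting in that the budget is counted in LLM tokens, so one long completion can consume as much quota as many short ones. Below is a minimal fixed-window sketch in Python. It assumes the token count of each call is known once the response finishes (for example from a usage field returned by the model); the `TokenRateLimiter` class, the per-minute window, and the limits are hypothetical and are not the configuration format of the Higress plugin.

```python
import time
from collections import defaultdict
from typing import Callable


class TokenRateLimiter:
    """Hypothetical fixed-window limiter that budgets LLM tokens per consumer."""

    def __init__(self, tokens_per_window: int, window_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.tokens_per_window = tokens_per_window
        self.window_seconds = window_seconds
        self.clock = clock
        # consumer -> (window index, tokens used in that window)
        self.usage: dict[str, tuple[int, int]] = defaultdict(lambda: (-1, 0))

    def _current(self, consumer: str) -> tuple[int, int]:
        window = int(self.clock() // self.window_seconds)
        last_window, used = self.usage[consumer]
        # Entering a new window resets the consumer's counter.
        return (window, used) if window == last_window else (window, 0)

    def allow(self, consumer: str) -> bool:
        """Check before forwarding: reject once this window's token budget is spent."""
        _, used = self._current(consumer)
        return used < self.tokens_per_window

    def record(self, consumer: str, tokens: int) -> None:
        """Charge the tokens reported by the model after the response completes."""
        window, used = self._current(consumer)
        self.usage[consumer] = (window, used + tokens)


if __name__ == "__main__":
    now = [0.0]  # fake clock so the example is deterministic
    limiter = TokenRateLimiter(tokens_per_window=1000, clock=lambda: now[0])
    print(limiter.allow("team-a"))  # True
    limiter.record("team-a", 1200)  # one long completion exhausts the budget
    print(limiter.allow("team-a"))  # False
    now[0] = 61.0                   # the next minute starts a fresh window
    print(limiter.allow("team-a"))  # True
```

Because the token count is only known after the response, a request admitted under budget can overshoot it; this sketch accepts that and simply blocks the consumer until the next window.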

The scenario experience on Higress.ai provides two methods:

● Cloud Experience: Developers can log in to their Alibaba Cloud accounts and use the “Cloud Lab” option in API Gateway to try the AI gateway features with a free quota.

● Open Source Experience: Developers can deploy the AI gateway in a local Docker environment with one click and try it out in various scenarios.

The overall interface and functionality of Higress.ai are continuously being improved. Our next steps include:

● International Version: The entire site will be internationalized, and we will open official accounts on overseas social media and technical communities. We welcome everyone to follow us.

● Online Plugin Editor: Developers will be able to develop plugins without setting up a local build environment. The editor will use AI programming capabilities to generate plugin code from natural language, compile and debug it online, and troubleshoot problems with an AI assistant, providing one-stop plugin development for Higress.

● MCP Server: Higress.ai will soon be able to convert the APIs of backend services into MCP Servers, supporting a variety of MCP Client calling scenarios so that these APIs can be better used by AI tools. The platform will officially support several mainstream applications, and developers can also integrate other applications freely.

● Best Practice Demonstration: Covering the full lifecycle of agent development framework integration and LLM API governance, we will release a series of best practices, including architectural design reference schemes and performance tuning recommendations.

● More Experience Scenarios: Based on the 10+ plugin functions in the open-source version and user scenarios from the commercial version, we will launch more experience scenarios. If you have innovative practices built on the open-source version, we welcome you to contact us and build them together (WeChat: zjjxg2018, please note "Higress").
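To make the planned MCP Server capability more concrete: in the Model Context Protocol, a server advertises each capability as a tool with a `name`, a `description`, and a JSON Schema `inputSchema`, and clients invoke it with a JSON-RPC `tools/call` request. The Python sketch below maps a description of an existing REST endpoint to such a tool definition and answers a `tools/call` request by invoking it. The `RestEndpoint` type, the weather endpoint, and the dispatch logic are illustrative assumptions, not the design of the upcoming Higress.ai feature; a real server would make an HTTP call where the sketch calls a local function.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class RestEndpoint:
    """Hypothetical description of an existing backend HTTP API."""
    name: str
    description: str
    method: str
    path: str
    handler: Callable[[dict[str, Any]], Any]  # stands in for the real HTTP call
    params: dict[str, str] = field(default_factory=dict)  # param name -> JSON Schema type


def to_mcp_tool(ep: RestEndpoint) -> dict[str, Any]:
    """Describe the endpoint as an MCP tool (name, description, inputSchema)."""
    return {
        "name": ep.name,
        "description": f"{ep.description} ({ep.method} {ep.path})",
        "inputSchema": {
            "type": "object",
            "properties": {p: {"type": t} for p, t in ep.params.items()},
            "required": list(ep.params),
        },
    }


def handle_tools_call(endpoints: dict[str, RestEndpoint], request: dict[str, Any]) -> dict[str, Any]:
    """Answer a JSON-RPC `tools/call` request by invoking the matching endpoint."""
    params = request.get("params", {})
    ep = endpoints.get(params.get("name"))
    if ep is None:
        # MCP reports unknown tools as a JSON-RPC "Invalid params" error.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}}
    result = ep.handler(params.get("arguments", {}))
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": json.dumps(result)}], "isError": False},
    }


if __name__ == "__main__":
    weather = RestEndpoint(
        name="get_weather",
        description="Query the current weather for a city",
        method="GET",
        path="/api/weather",
        handler=lambda args: {"city": args["city"], "forecast": "sunny"},  # fake backend
        params={"city": "string"},
    )
    endpoints = {weather.name: weather}
    print(json.dumps(to_mcp_tool(weather), indent=2))
    call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
            "params": {"name": "get_weather", "arguments": {"city": "Hangzhou"}}}
    print(handle_tools_call(endpoints, call))
```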
