"Data Lake" is becoming more commonly known in the IT industry. Although defined in different ways, it has already been implemented by enterprises, including Alibaba Cloud. Data Lake is a new system that integrates storage and computing in the era of big data and AI.

Let's briefly discuss its development. Driven by the explosive growth in data volumes, digital transformation has become a major trend in the IT industry. To extract more value from data, we must retain the original information stored in it so that we can cope with changing future needs. Existing database middleware products such as Oracle fail to adapt to this trend. Thus, the industry has been developing new computing engines to cope with the needs of the big data era. Owing to this trend, enterprises have begun to establish their own open-source Hadoop data lake architectures, where the original data is stored in Hadoop Distributed File System (HDFS). The engines here are mainly based on the Hadoop and Spark open-source ecosystems, integrating storage and computing. However, enterprises need to maintain and manage the entire cluster by themselves, resulting in higher costs and poor cluster stability.

The cloud-hosted Hadoop data lake architecture, also known as the E-MapReduce (EMR) open-source data lake, came into existence to overcome these challenges. Cloud vendors manage the underlying physical servers and the open-source software of these traditional data lakes, while data storage and engines are the same as before. This architecture improves the elasticity and stability of the machine layer through the cloud IaaS layer, which reduces the overall O&M costs for enterprises. However, enterprises still need to manage HDFS and monitor how services are running. In other words, the O&M of the application layer remains their responsibility.

EMR open-source data lakes are less stable because storage and computing are coupled. The usage cost is also high because the two resources cannot be scaled independently. Moreover, limited by the open-source software itself, traditional data lake technologies do not meet enterprises' needs for data scale, storage cost, query performance, and elastic computing architecture upgrades. The ideal goals of a data lake architecture are therefore difficult to reach with this approach. Because of this, enterprises expect lower costs for data storage, more refined data asset management, shared data lake metadata, higher data update frequency, and more powerful data access tools.
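The cost effect of coupling can be illustrated with a small sizing calculation. The sketch below is only an illustration: the workload size, the node shape, and the function names are hypothetical and do not come from any Alibaba Cloud product. It shows that a coupled cluster must be sized for whichever resource needs more nodes, which can leave the other resource largely idle.

```python
import math


def coupled_nodes(storage_tb, cores, tb_per_node, cores_per_node):
    """Nodes needed when each node supplies both disk and CPU.

    The cluster must be sized for whichever resource needs more nodes.
    """
    nodes_for_storage = math.ceil(storage_tb / tb_per_node)
    nodes_for_compute = math.ceil(cores / cores_per_node)
    return max(nodes_for_storage, nodes_for_compute)


def compute_only_nodes(cores, cores_per_node):
    """Compute nodes needed when storage lives in a separate service."""
    return math.ceil(cores / cores_per_node)


# Hypothetical workload and node shape (illustrative numbers only).
STORAGE_TB = 2000
CORES = 320
TB_PER_NODE = 20
CORES_PER_NODE = 32

coupled = coupled_nodes(STORAGE_TB, CORES, TB_PER_NODE, CORES_PER_NODE)
separated = compute_only_nodes(CORES, CORES_PER_NODE)
idle_cores = coupled * CORES_PER_NODE - CORES

print(f"coupled cluster: {coupled} nodes, {idle_cores} idle cores")
print(f"separated: {separated} compute nodes plus {STORAGE_TB} TB of object storage")
```

With these assumed numbers, storage alone dictates the size of the coupled cluster, so most of its CPU capacity is paid for but unused. When the two resources are sized separately, the compute fleet only needs to match the compute demand.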

In the cloud-native era, we can make effective use of public cloud infrastructure, and data lake platforms have more technology support. For example, cloud-hosted storage systems are gradually replacing HDFS as the data lake storage infrastructure, and they work with a wider variety of engines.

In addition to Hadoop and Spark ecosystem engines, cloud vendors provide engine products that support data lakes. For example, Amazon Athena is available for analytics, and Amazon SageMaker is available for AI. The cloud-hosted Hadoop data lake architecture still maintains the structure of one storage system with multiple engines, which makes unified metadata services especially important.

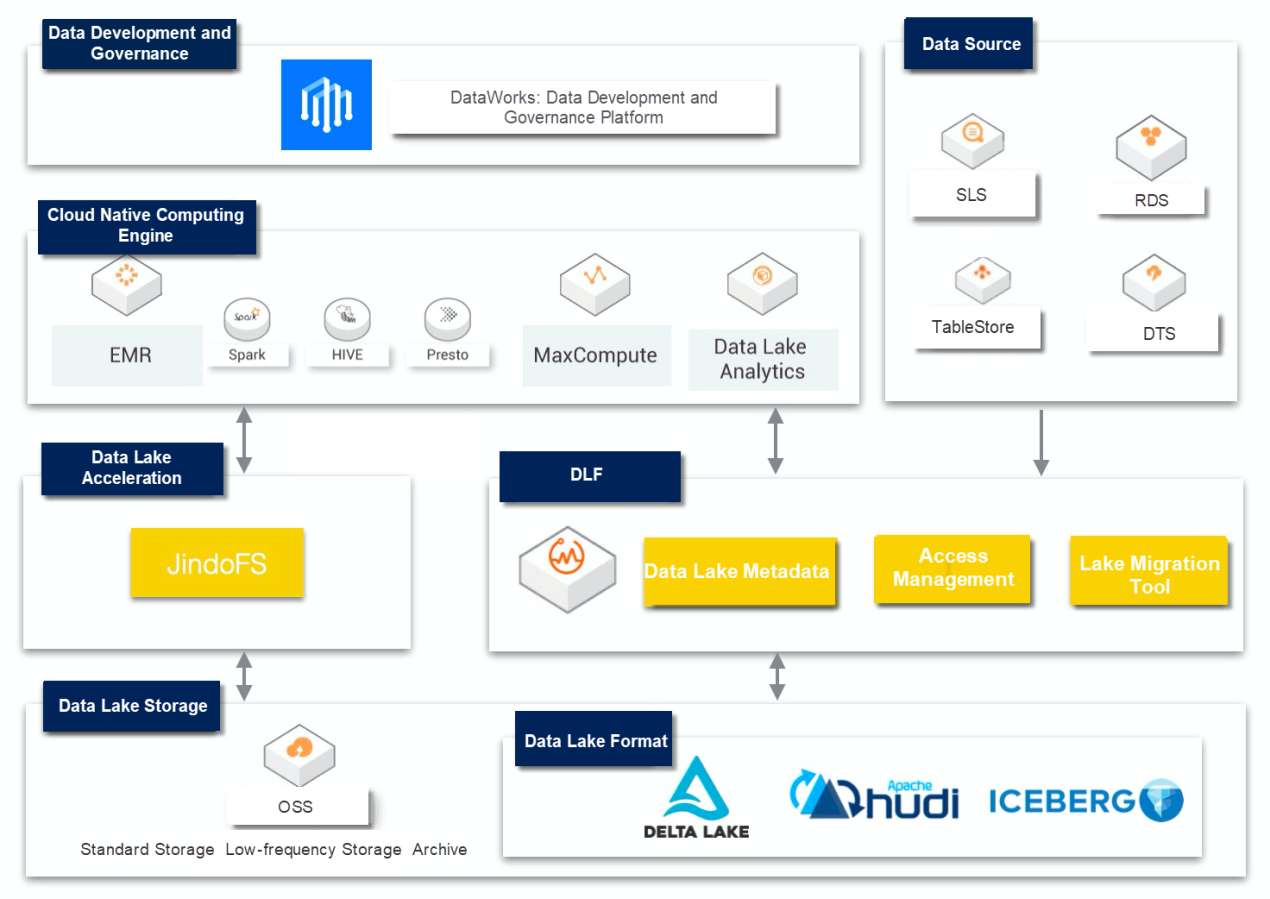

Based on the above architecture, Alibaba Cloud has officially launched its cloud-native data lake system, consisting of Object Storage Service (OSS), Data Lake Formation (DLF), and E-MapReduce (EMR). Alibaba Cloud offers enterprise-level cloud-native data lake solutions, combining lake storage, lake acceleration, lake management, and lake computing, based on the storage-compute separation architecture.

Alibaba Cloud's cloud-native data lake system supports EB-level data lakes and can store more than 100,000 databases, 100 million tables, and 1 billion partitions. It handles more than 3 billion metadata service requests per day and supports more than 10 open-source computing engines, as well as cloud-native data warehouse engines such as MaxCompute and Hologres.

Additionally, the storage cost of Alibaba Cloud Data Lake is one-tenth that of high-efficiency cloud disks. The query performance is also 3 times higher than that of traditional object storage. Besides, with extremely high elasticity, the query engine can start more than 1,000 Spark executors in 30 seconds. Based on its powerful storage and computing capabilities, Alibaba Cloud offers a leading data lake system. All of this indicates that developing a system that can retain the original data while connecting to different computing platforms is vital to staying ahead in the big data era.

With this new product, we are launching a series of articles about cloud-native data lake technologies. These articles will introduce how to build a brand-new cloud-native data lake system based on Alibaba Cloud OSS, JindoFS, DLF, and various computing engines in Alibaba Cloud.

Alibaba Cloud OSS is the unified storage layer of Data Lake. With 99.99999999% reliability, OSS can store data of any size and interface with business applications and various computing and analysis platforms, which makes it well suited for building enterprise data lakes. Compared with HDFS, OSS can store a large number of small files and reduce unit storage cost through technologies such as the separation of cold and hot data, high-density storage, and high-compression algorithms. OSS is also friendly to the Hadoop ecosystem and supports seamless integration with various Alibaba Cloud computing platforms. Besides, it provides OSS Select, Shallow Copy, and versioning for data analysis to accelerate data processing and enhance data consistency.
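To see why separating cold and hot data lowers the unit storage cost, consider the following back-of-the-envelope sketch. The prices and the hot-data fraction are made-up placeholders, not OSS list prices, and `blended_price_per_gb` is a hypothetical helper written for this illustration.

```python
def blended_price_per_gb(hot_fraction, hot_price, cold_price):
    """Average price per GB when only `hot_fraction` of data stays in the hot tier."""
    if not 0.0 <= hot_fraction <= 1.0:
        raise ValueError("hot_fraction must be between 0 and 1")
    return hot_fraction * hot_price + (1.0 - hot_fraction) * cold_price


# Hypothetical monthly prices per GB (placeholders, not real OSS pricing).
HOT_PRICE = 0.020
COLD_PRICE = 0.005

all_hot = blended_price_per_gb(1.0, HOT_PRICE, COLD_PRICE)
tiered = blended_price_per_gb(0.2, HOT_PRICE, COLD_PRICE)  # assume 20% of data is hot
saving = 1.0 - tiered / all_hot

print(f"all hot: {all_hot:.4f} per GB, tiered: {tiered:.4f} per GB")
print(f"relative saving from tiering: {saving:.0%}")
```

The less frequently data is accessed, the larger the share that can move to the cheaper tier, and the lower the blended price becomes.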

For more information, see: EB-level Data Lake Based on OSS

The architecture of OSS differs from that of distributed file systems such as HDFS. In addition, as a remote storage service in the compute-storage separated architecture, OSS lacks support for data locality in big data computing. Therefore, Alibaba Cloud has built a dedicated big data storage service, JindoFS, which greatly improves engine analysis performance in a data lake. In common benchmark tests, such as TPC-DS and TeraSort, JindoFS with the compute-storage separated architecture offers better performance than locally deployed HDFS. At the same time, JindoFS is fully compatible with the Hadoop file system interfaces, providing more flexible and efficient computing and storage solutions for users. So far, JindoFS supports mainstream computing services and engines in the Hadoop open-source ecosystem, including Spark, Flink, Hive, MapReduce, Presto, and Impala. Currently, JindoFS is available for Alibaba Cloud EMR. In the future, more JindoFS services will be available for data lake acceleration.

A traditional data lake architecture emphasizes unified data storage but lacks the necessary means and tools for data schema management. It requires upper-layer analysis and computing engines to maintain metadata. Moreover, it provides no unified permission management for data access, which falls short of what enterprise users require. Launched in September 2020, DLF is a core service from Alibaba Cloud that satisfies users' needs for data asset management while creating data lakes. DLF provides unified metadata views and permission management for data stored in OSS. It also provides templates for real-time data ingestion into the lake and for data cleansing, as well as production-level metadata services for upper-layer data analysis engines.
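The idea of a unified metadata view with central permission management can be sketched with a toy in-memory catalog. This is not the DLF API: the `Table` and `Catalog` classes, the user names, and the `oss://example-bucket/...` path are all hypothetical and exist only to illustrate the concept of several engines resolving tables through one shared service.

```python
from dataclasses import dataclass, field


@dataclass
class Table:
    name: str
    location: str   # where the data files live, e.g. an object storage path
    columns: dict   # column name -> type name


@dataclass
class Catalog:
    """Toy shared metadata service: one schema store, one permission store."""
    tables: dict = field(default_factory=dict)
    grants: set = field(default_factory=set)  # (user, table_name) pairs

    def register(self, table):
        self.tables[table.name] = table

    def grant_select(self, user, table_name):
        self.grants.add((user, table_name))

    def get_table(self, user, table_name):
        if table_name not in self.tables:
            raise KeyError(f"unknown table: {table_name}")
        if (user, table_name) not in self.grants:
            raise PermissionError(f"{user} may not read {table_name}")
        return self.tables[table_name]


catalog = Catalog()
catalog.register(
    Table(
        name="orders",
        location="oss://example-bucket/warehouse/orders/",  # hypothetical path
        columns={"order_id": "bigint", "amount": "double"},
    )
)
catalog.grant_select("alice", "orders")

# Two different engines acting for the same user resolve the same definition.
spark_view = catalog.get_table("alice", "orders")
hive_view = catalog.get_table("alice", "orders")
print(spark_view is hive_view)

# A user without a grant is rejected in one place, regardless of the engine.
try:
    catalog.get_table("bob", "orders")
except PermissionError as exc:
    print(exc)
```

Because schemas and grants live in one service rather than inside each engine, a schema change or a revoked permission takes effect for every engine at once.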

Many cloud-native computing engines on Alibaba Cloud are integrated or ready to be integrated with DLF. These include the open-source computing engines in EMR, such as Spark, Hive, Presto, and Flink, as well as MaxCompute, Databricks, and Data Lake Analytics (DLA). For example, Spark, the most commonly used open-source engine, can connect directly to the metadata service in DLF, so all Spark tasks running on multiple clusters or platforms share the same metadata view. Besides, EMR provides Shuffle Service for Spark to support elastic scaling on cloud-native platforms. The combination of cloud-native computing engines and the data lake architecture offers higher flexibility and lower data analysis costs. Moreover, the cloud-native data warehouse, MaxCompute, and the real-time HSAP analysis engine, Hologres, can access DLF.

For more information, see: EMR Shuffle Service: A Powerful Elastic Tool of Serverless Spark

DataWorks provides unified data views for Alibaba Cloud users. With these views, users can identify the status of data assets, improve data quality, obtain data more efficiently, ensure data security and compliance, and speed up data queries and analysis. DataWorks supports the establishment of offline big data warehouses and federated data query, analysis, and processing. It also supports low-frequency interactive queries of massive data, the establishment of intelligent reports, and the implementation of data lake solutions.

For more information, see: DataWorks: A Platform for Developing and Governing a Data Lake

To sum up, users can establish a data lake platform to complete the transformation of enterprise big data architecture with Alibaba Cloud's basic components and overall solutions.
