By Chi Wai Chan, Product Development Solution Architect at Alibaba Cloud

The first part of the article series (Part A) is available here.

There are three parts to this series:

After reading Part A, you should have all of the required components (VPC, Kafka, HBase, and Spark virtual cluster) on Alibaba Cloud. Part B illustrates how to build the stream processing solution with Spark Streaming on the serverless Data Lake Analytics (DLA) service for the "Feed Streams Processor" part. The source code for this demo is available here.

Alibaba Cloud's Data Lake Analytics (DLA) is an end-to-end, on-demand, serverless data lake analytics and computing service. It offers a cost-effective platform to run Extract-Transform-Load (ETL), machine learning, streaming, and interactive analytics workloads. DLA can be used with various data sources, such as Kafka, HBase, MongoDB, Object Storage Service (OSS), and many other relational and non-relational databases. For more information, please visit the Alibaba Cloud DLA product introduction page.

DLA's Serverless Spark is a data analytics and computing service that is developed based on the cloud-native, serverless architecture. To submit a Spark job, you can perform simple configurations after you activate DLA. When a Spark job is running, computing resources are dynamically assigned based on task loads. After the Spark job is completed, you are charged based on the resources consumed by the job. Serverless Spark helps you eliminate the workload for resource planning and cluster configurations. It is more cost-effective than the traditional mode. Serverless Spark uses the multi-tenant mode. The Spark process runs in an isolated and secure environment.

A virtual cluster is a secure unit for resource isolation. A virtual cluster does not have fixed computing resources. Therefore, you only need to allocate a resource quota based on your business requirements and configure the network environment of the destination data that you want to access. You do not need to configure or maintain computing nodes. You can also configure parameters for the Spark jobs of a virtual cluster, which allows unified management of those jobs. A Compute Unit (CU) is the measurement unit of Serverless Spark. One CU equals one vCPU and 4 GB of memory. After a Spark job is completed, you are charged based on the CUs consumed by the job and the duration of the job. The pay-as-you-go billing method is used, and billing is measured in computing hours: using one CU for one hour consumes one computing hour. The resources consumed by a job are the sum of the resources consumed by each of its compute units.
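The CU arithmetic above can be sketched in a few lines of Scala. This is only an illustration, not the official billing formula: it assumes the driver and all executors are held for the whole job duration, and it maps the resource specifications used by the toolkit configuration later in this article (small 1c4g, medium 2c8g, large 4c16g) to 1, 2, and 4 CUs using the "one CU equals one vCPU and 4 GB" rule. The `CuEstimate` object and its method name are hypothetical.

```scala
object CuEstimate {
  // CUs per resource specification, derived from "one CU = 1 vCPU and 4 GB of memory":
  // small = 1c4g, medium = 2c8g, large = 4c16g.
  val cuPerSpec: Map[String, Int] = Map("small" -> 1, "medium" -> 2, "large" -> 4)

  /** CU-hours consumed by one driver plus `executors` executors that all run for `hours`. */
  def cuHours(driverSpec: String, executorSpec: String, executors: Int, hours: Double): Double = {
    require(executors >= 0 && hours >= 0, "executors and hours must be non-negative")
    val totalCus = cuPerSpec(driverSpec) + executors * cuPerSpec(executorSpec)
    totalCus * hours
  }
}
```

For a small driver with two small executors running for an hour and a half, `CuEstimate.cuHours("small", "small", 2, 1.5)` gives 4.5 CU-hours. The actual charge depends on the unit price published by Alibaba Cloud, which is not covered here.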

To access resources in your VPC, you must configure the AccessKey ID and AccessKey secret. The resources include ApsaraDB RDS, AnalyticDB for MySQL, PolarDB, ApsaraDB for MongoDB, Elasticsearch, ApsaraDB for HBase, E-MapReduce Hadoop, and self-managed data services hosted on ECS instances. The Driver and Executor processes on the serverless Spark engine are running in a security container.

You can attach an Elastic Network Interface (ENI) of your VPC to the security container. This way, the security container can run in the VPC as an ECS instance. The lifecycle of an ENI is the same as that of a process on the serverless Spark engine. After a job succeeds, all ENIs are released. To attach an ENI of your VPC to the serverless Spark engine, you must configure the security group and VSwitch of your VPC in the job configuration of the serverless Spark engine. In addition, you must make sure that the network between the ENI and service that stores the destination data is interconnected. If your ECS instance can access the destination data, you only need to configure the security group and VSwitch of the ECS instance on the serverless Spark engine.

spark.dla.eni.enable is set to true, which indicates that VPC access is enabled.

spark.dla.eni.vswitch.id is the ID of the VSwitch used by your ECS instance.

spark.dla.eni.security.group.id is the ID of the security group used by your ECS instance.

You can click this link to download the dla-spark-toolkit.tar.gz package or use the following wget command to download the package in a Linux environment. For more details, you can refer to the DLA serverless Spark document.

wget https://dla003.oss-cn-hangzhou.aliyuncs.com/dla_spark_toolkit_1/dla-spark-toolkit.tar.gz

After you download the package, decompress it.

tar zxvf dla-spark-toolkit.tar.gz

The spark-defaults.conf file allows you to configure the following parameters:

You should replace each parameter in square brackets [xxxxx] with the value for your environment.

For mappings between regions and regionIds, please see the Regions and Zones document here.
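Because every bracketed value has to be replaced by hand, a quick check for leftovers can save a failed submission. The sketch below is not part of the DLA toolkit; `ConfCheck` and `unreplacedKeys` are hypothetical names, and the parser only assumes the simple `key = value` layout with `#` comments that the configuration file uses.

```scala
object ConfCheck {
  // A value that is still a bracketed placeholder, such as [AccessKeyId].
  private val Placeholder = """^\[.*\]$""".r

  /** Returns the keys whose values still look like unreplaced [placeholders]. */
  def unreplacedKeys(conf: String): Seq[String] =
    conf.split("\\r?\\n").toSeq
      .map(_.trim)
      .filter(line => line.nonEmpty && !line.startsWith("#")) // skip blanks and comments
      .map(_.split("=", 2))                                   // split on the first '=' only
      .collect {
        case Array(key, value) if Placeholder.pattern.matcher(value.trim).matches() => key.trim
      }
}
```

Reading the file with `scala.io.Source.fromFile("conf/spark-defaults.conf").mkString` (the path is an assumption about where the toolkit keeps the file) and passing the text to `ConfCheck.unreplacedKeys` returns an empty sequence once every placeholder has been filled in.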

# cluster information

# AccessKeyId

keyId = [AccessKeyId]

# AccessKeySecret

secretId = [AccessKeySecret]

# RegionId

regionId = [RegionId]

# set spark virtual cluster vcName

vcName = [vcName]

# set OssUploadPath, if you need upload local resource

ossUploadPath = [ossUploadPath]

##spark conf

# driver specifications : small 1c4g | medium 2c8g | large 4c16g

spark.driver.resourceSpec = small

# executor instance number

spark.executor.instances = 2

# executor specifications : small 1c4g | medium 2c8g | large 4c16g

spark.executor.resourceSpec = small

# when use ram, role arn

#spark.dla.roleArn =

# when use option -f or -e, set catalog implementation

#spark.sql.catalogImplementation =

# config dla oss connectors

# spark.dla.connectors = oss

# config eni, if you want to use eni

spark.dla.eni.enable = true

spark.dla.eni.vswitch.id = [vswitch ID from your ecs]

spark.dla.eni.security.group.id = [security group ID from your ecs]

# config spark read dla table

#spark.sql.hive.metastore.version = dla

After configuring all of the required parameters, you can submit your Spark job to the DLA virtual cluster by running the following command:

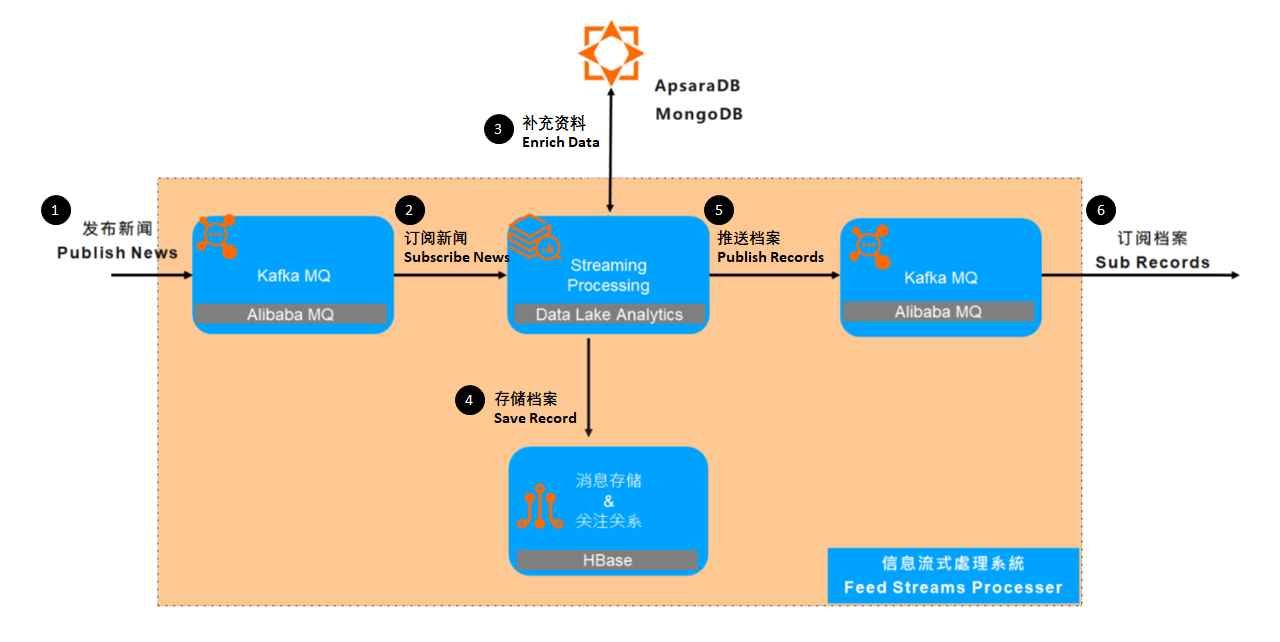

./bin/spark-submit --verbose --class [class.name] --name [task.name] --files [oss path log4j.property file] [spark jar] [arg0] [arg1] ...

This demo provides a step-by-step guide to building a simplified feed streams processing system with the following tasks, as shown in Figure 1.

Figure 1. Feed Streams Processor Solution Overview

The simple Feed Streams Processor program with spark structured streaming on:

You can use any SQL client or database IDE that works with a JDBC driver to access HBase via Phoenix. I will use DBeaver for this demo. You can download the DBeaver Community Edition for free.

You can download the phoenix jar file from this link or any open-source compatible version. Unzip the gz and tar files. You have to extract the ali-phoenix-5.2.4.1-HBase-2.x-shaded-thin-client.jar for DBeaver.

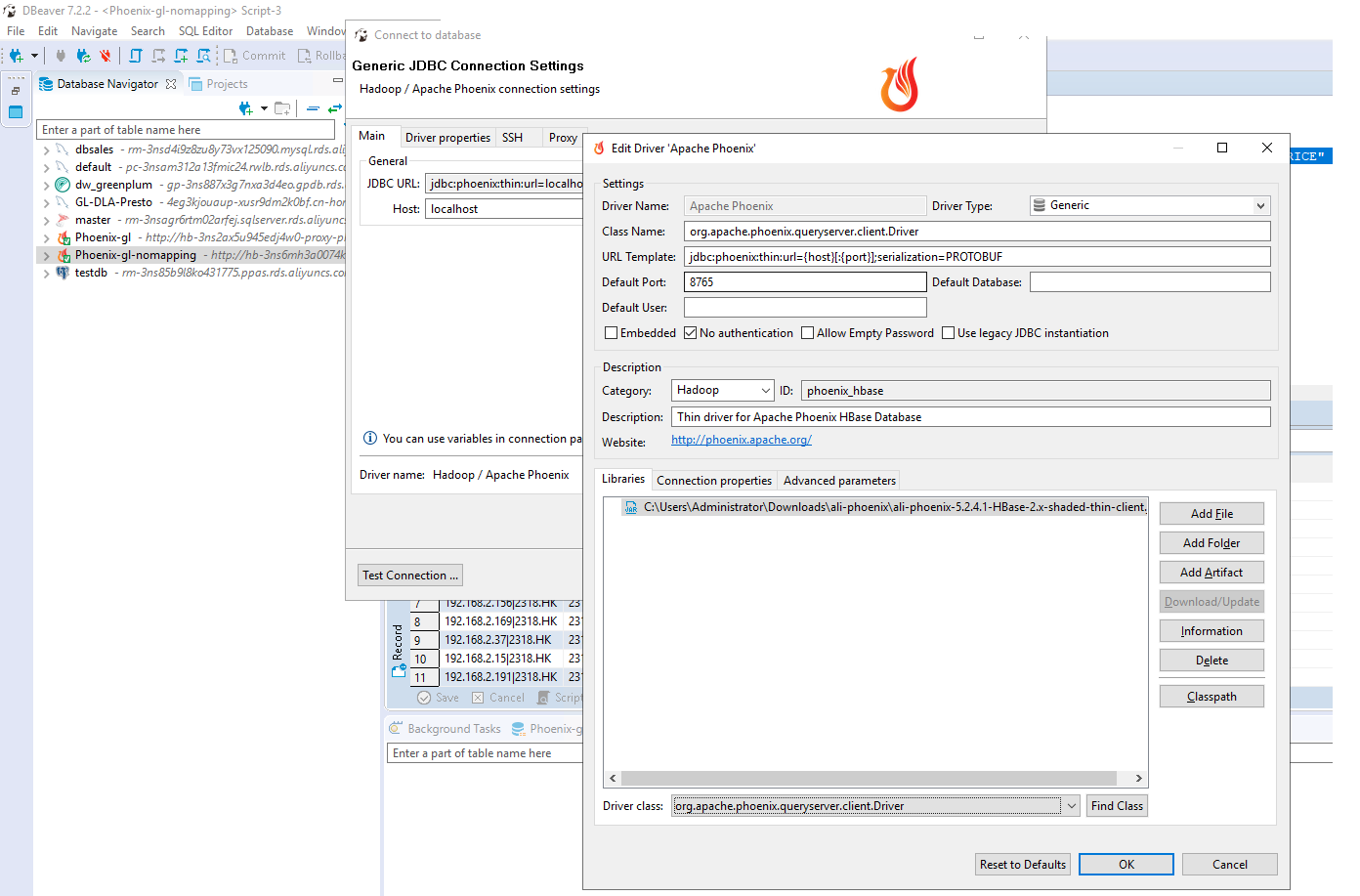

After installing DBeaver, you can create a new connection to the database, and search for the "Apache Phoenix" database type. Then, click "Next." Go to "Edit Driver Settings," then "Add File," and configure the phoenix jdbc driver to the previously extracted ali-phoenix-5.2.4.1-HBase-2.x-shaded-thin-client.jar file, as shown in Figure 2.

Figure 2. DBeaver Setup for Apache Phoenix Database Connection

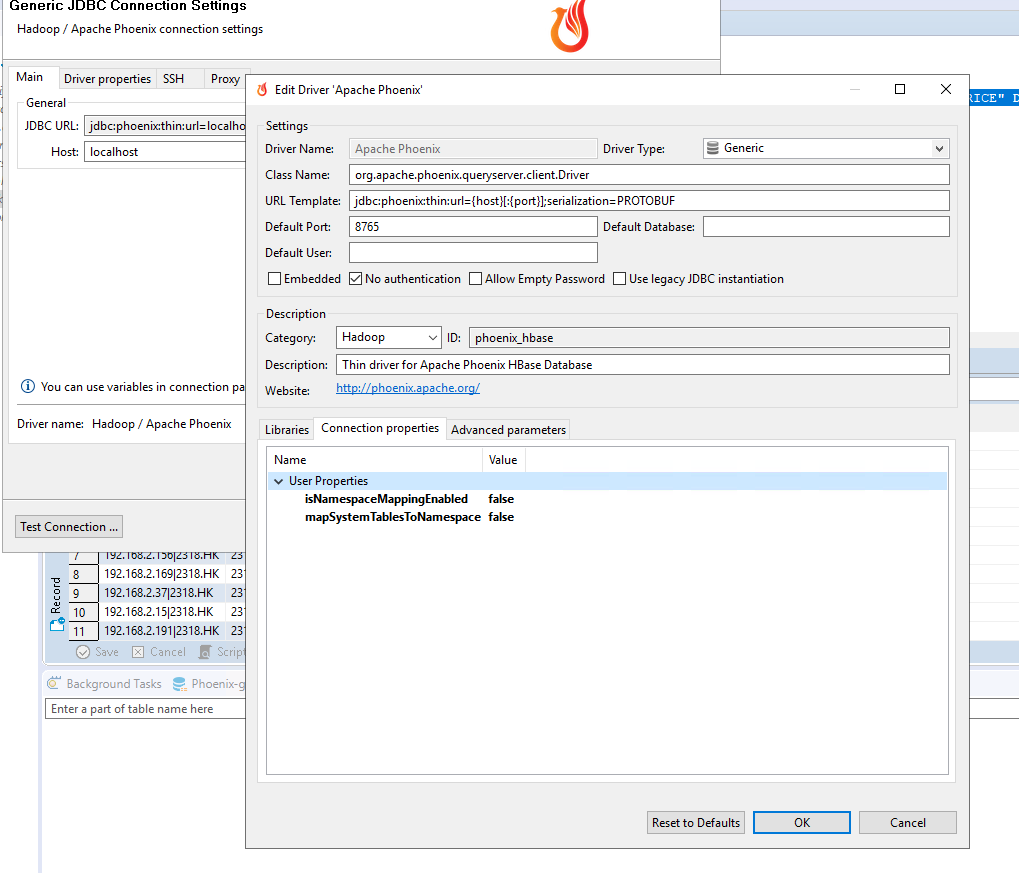

Click the "Connection Properties" tab in Figure 2, and set phoenix.schema.isNamespaceMappingEnabled and phoenix.schema.mapSystemTablesToNamespace to false, as shown in Figure 3. Lastly, click "OK" to complete the driver setup, and enter the connection URL shown under "Server Load Balancer Connection" in the SQL Service of the HBase instance provisioned in the previous section.

Figure 3. DBeaver Setup for Apache Phoenix Database Connection – Properties

Now, you are connected to the HBase instance via the Phoenix Query Server. Using the supported SQL syntax, you can create a Phoenix table, which is mapped to an HBase table, and a corresponding global secondary index for simple searches. You can use the Phoenix syntax link for reference.

To create a table with mapping to HBase:

CREATE TABLE USER_TEST.STOCK (ROWKEY VARCHAR PRIMARY KEY,"STOCK_CODE" VARCHAR, "STOCK_NAME" VARCHAR, "LAST_PRICE" DECIMAL(12,4),"TIMESTAMP" TIMESTAMP ) SALT_BUCKETS = 16;

To create a global secondary index to support filter queries:

create index STOCK_code_index ON USER_TEST.STOCK ("STOCK_CODE") SALT_BUCKETS = 16;

You can run the following SELECT statement to verify the table creation. If it returns zero rows without an error, you are finished with the Phoenix and HBase table creation.

SELECT * FROM USER_TEST.STOCK

WHERE "STOCK_CODE" = '2318.HK' -- Filtering on secondary index

ORDER BY "TIMESTAMP" desc

;

Go to this link and copy the price and volume information for the Top 30 Components of Hang Seng Index. Convert them into .csv files, and then upload them to MongoDB using this tutorial.

There are many dependencies. It is recommended to set up a maven project with a maven-shade-plugin to compile an uber jar for simple deployment. You can refer to this POM file to set up your maven project.

The following sample code simulates publishing a very simple message to a particular Kafka Topic with a key:value pair. The key is the "ip" address of the sender, and the value is "stock_code" with the timestamp information.

val props = new Properties()

props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);

props.put(ProducerConfig.MAX_BLOCK_MS_CONFIG, "30000");

props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");

props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");

val kafkaProducer = new KafkaProducer[String, String](props)

for (nEvents <- Range(0, events)) {

val runtime = new Date().getTime()

val ip = "192.168.2." + rnd.nextInt(255)

// nextInt(n) excludes n, so passing the full length lets every stock code be picked
val msg = SKUs.HSIStocks(rnd.nextInt(SKUs.HSIStocks.length)) + "," + runtime;

val data = new ProducerRecord[String, String](topicName, ip, msg)

kafkaProducer.send(data)

Thread.sleep(1000)

}
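The message layout used by the publisher above (the sender's IP as the key, and "stock_code,timestamp" as the value) can be isolated into two small pure functions, which makes the format easy to test without a Kafka cluster. This is a sketch under that reading of the format; `FeedMessage`, `Event`, `encode`, and `decode` are hypothetical names that do not appear in the demo source.

```scala
import scala.util.Try

object FeedMessage {
  // One published event: the stock code plus the send time in epoch milliseconds.
  final case class Event(stockCode: String, epochMillis: Long)

  /** Builds the Kafka record value, for example "2318.HK,1600000000000". */
  def encode(event: Event): String = s"${event.stockCode},${event.epochMillis}"

  /** Parses a record value; returns None when the value is malformed. */
  def decode(value: String): Option[Event] =
    value.split(",", 2) match {
      case Array(code, ts) if code.nonEmpty =>
        Try(ts.trim.toLong).toOption.map(millis => Event(code, millis))
      case _ => None
    }
}
```

Returning an `Option` from `decode` keeps malformed messages from throwing inside a consumer; whether to drop or log them is left to the caller.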

The following sample code simulates:

MongoSpark.load(sparkSession, loadMongo())...

SparkSession.readStream.format("kafka")...

val sparkSession = loadConfig();

val dfStatic = MongoSpark.load(sparkSession, loadMongo())

.selectExpr("symbol as Symbol", "CompanyName", "LastPrice", "Volume")

val dfStream = sparkSession

.readStream

.format("kafka")

.option("kafka.bootstrap.servers", bootstrapServers)

.option("subscribe", topicName)

.option("group.id", groupId)

.load()

.selectExpr("CAST(key AS STRING) as SourceIP", "split(value, ',')[0] as StockCode", "CAST(split(value, ',')[1] AS long)/1000 as TS")

val dfJoin = dfStream

.join(dfStatic, dfStream("StockCode") === dfStatic("Symbol"), "leftouter")

.selectExpr("concat_ws('|', nvl(SourceIP, ''), nvl(StockCode, '')) as ROWKEY", "StockCode as stock_code", "CompanyName as stock_name", "LastPrice as last_price", "from_unixtime(TS) as timestamp")

SparkHBaseWriter.open();

val query = dfJoin

.writeStream

.foreachBatch { (output: DataFrame, batchId: Long) =>

SparkHBaseWriter.process(output);

}

.start

.awaitTermination();

SparkHBaseWriter.close();
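The enrichment step in the streaming query above can be easier to follow as plain Scala on a single record. The sketch below mirrors the left outer join and the `concat_ws('|', nvl(...), nvl(...))` row key with an in-memory `Map` standing in for the MongoDB data; it does not use Spark, and `Enrich`, `Quote`, and `StockRow` are hypothetical names. Truncating the timestamp to whole seconds is an assumption of this sketch.

```scala
object Enrich {
  // Static reference data, standing in for the MongoDB collection.
  final case class Quote(companyName: String, lastPrice: BigDecimal)

  // One enriched record, shaped like the columns written to USER_TEST.STOCK.
  final case class StockRow(
      rowKey: String,
      stockCode: String,
      stockName: Option[String],
      lastPrice: Option[BigDecimal],
      epochSeconds: Long)

  def enrich(sourceIp: String, stockCode: String, epochMillis: Long,
             quotes: Map[String, Quote]): StockRow = {
    // Like nvl(x, ''): a null component becomes an empty string.
    def orEmpty(s: String): String = Option(s).getOrElse("")
    // None plays the role of the nulls produced when a left outer join finds no match.
    val quote = if (stockCode == null) None else quotes.get(stockCode)
    StockRow(
      rowKey = orEmpty(sourceIp) + "|" + orEmpty(stockCode),
      stockCode = stockCode,
      stockName = quote.map(_.companyName),
      lastPrice = quote.map(_.lastPrice),
      epochSeconds = epochMillis / 1000 // whole seconds; an assumption of this sketch
    )
  }
}
```

Because the row key is built only from the source IP and the stock code, two events from the same IP for the same stock produce the same key, so in a table keyed on ROWKEY the later record would replace the earlier one.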

We recommend using Phoenix's Apache Spark plugin (phoenix-spark) to save the Spark DataFrame into HBase. The phoenix-spark plugin extends Phoenix's MapReduce support to allow Spark to load Phoenix tables as DataFrames, and enables persisting them back to Phoenix. The phoenix-spark integration can leverage the underlying splits provided by Phoenix to retrieve and save data across multiple workers. Only a database URL and a table name are required. Optional SELECT columns can be given, as well as pushdown predicates for efficient filtering. You can refer to this document for more details.

object SparkHBaseWriter {

var address: String = null;

var zkport: String = null;

var driver: String = null;

def open(): Unit = {

address = ConfigFactory.load().getConfig("com.alibaba-inc.cwchan").getConfig("HBase").getString("zookeeper")

zkport = ConfigFactory.load().getConfig("com.alibaba-inc.cwchan").getConfig("HBase").getString("zkport")

driver = "org.apache.phoenix.spark"

}

def close(): Unit = {

}

def process(record: DataFrame): Unit = {

record.write

.format(driver)

.mode(SaveMode.Overwrite)

.option("zkUrl", address.split(",")(0) + ":" + zkport)

.option("table", "USER_TEST.STOCK")

.save()

}

}
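The writer above builds its `zkUrl` option by taking the first host from a comma-separated ZooKeeper address list and appending the port. A slightly more defensive version of that one expression is sketched below; `ZkUrl` and `firstHostUrl` are hypothetical names, and the host names in the usage note are placeholders.

```scala
object ZkUrl {
  /** Returns "host:port" for the first non-empty host in a comma-separated quorum string. */
  def firstHostUrl(quorum: String, port: String): String = {
    val host = quorum
      .split(",")
      .map(_.trim)
      .find(_.nonEmpty) // tolerate stray spaces and empty entries
      .getOrElse(throw new IllegalArgumentException("empty ZooKeeper quorum"))
    s"$host:$port"
  }
}
```

For example, `ZkUrl.firstHostUrl("zk1.example, zk2.example", "2181")` returns `zk1.example:2181`, while a blank address list fails fast with a clear error instead of producing a URL such as `:2181`.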

In local mode, run the publisher program to send messages to Kafka, and run the subscriber program to receive and enrich the messages from Kafka and save the transformed records into HBase.

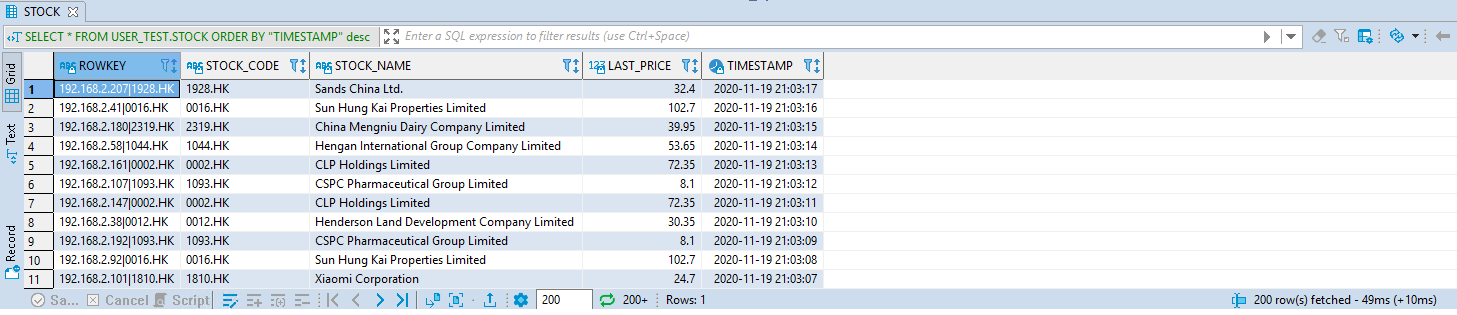

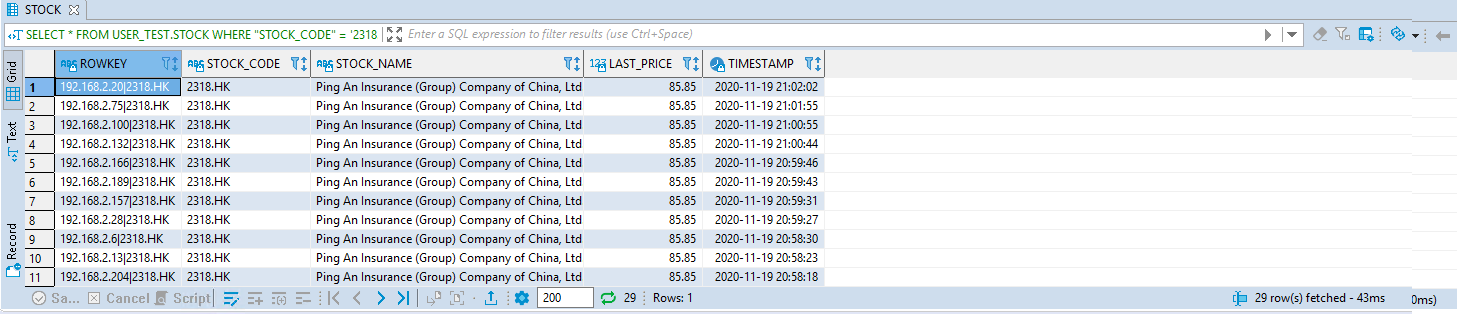

After a few seconds (e.g., 10 seconds), you can use DBeaver to run the following query:

SELECT * FROM USER_TEST.STOCK

ORDER BY "TIMESTAMP" desc

;

You can retrieve the enriched records from HBase.

You can also use DBeaver to run the following query with a filter:

SELECT * FROM USER_TEST.STOCK

WHERE "STOCK_CODE" = '2318.HK'

ORDER BY "TIMESTAMP" desc

;

You can retrieve the enriched records from HBase with filtering.

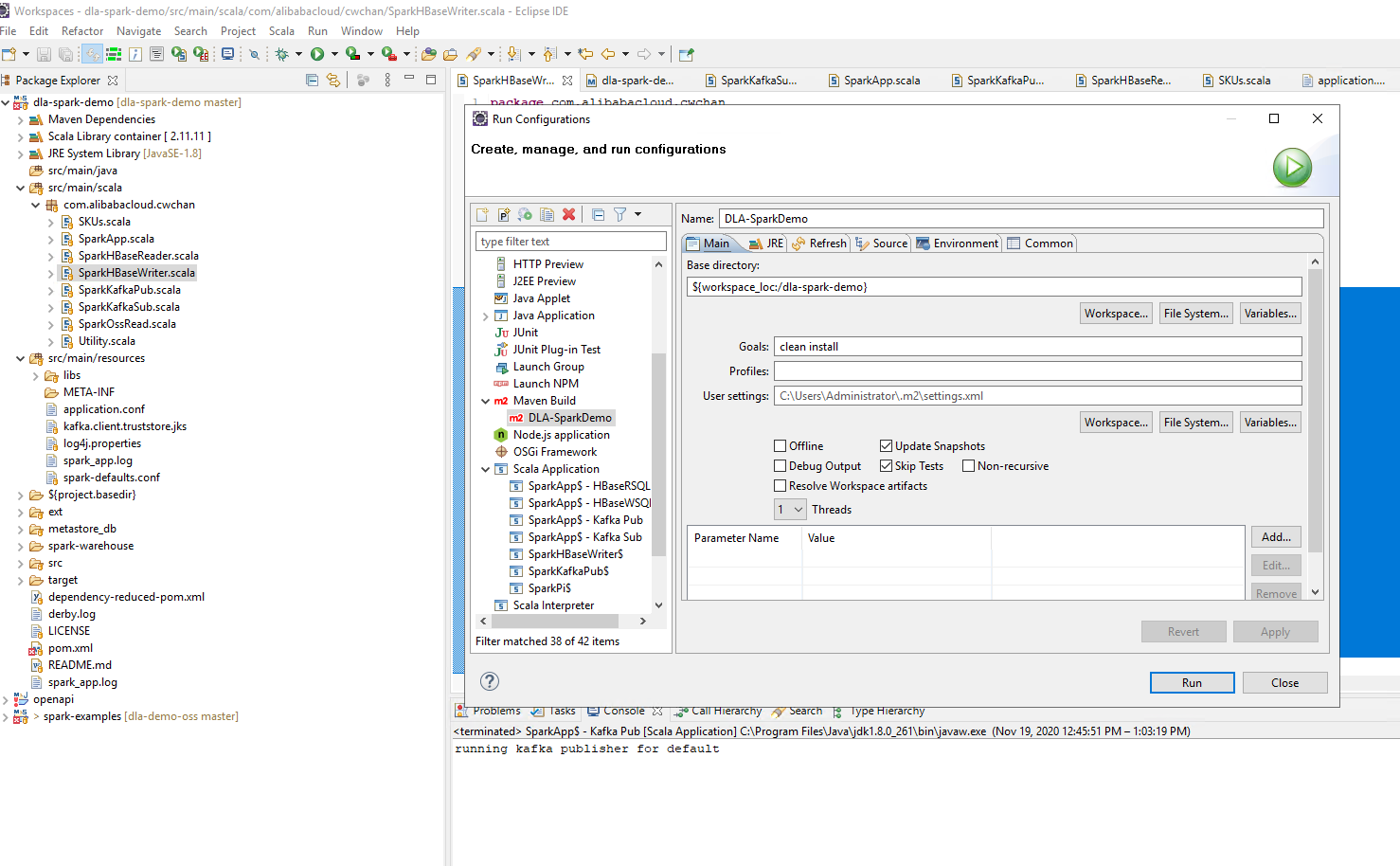

You should compile and package the Scala project into an uber jar by running "mvn clean install," as shown in the figure below. The uber jar is then available under "[workspace_directory]\target\".

Figure 4. Compile the Scala Project Into an Uber Jar for Deployment

Send the uber jar to a Linux environment. Afterward, you can use the previously configured "Spark-SQL Command Line Tool" to send the task to DLA for large-scale production deployment with the following command:

[root@centos7 dla-spark-toolkit]# pwd

/root/dla-spark-toolkit

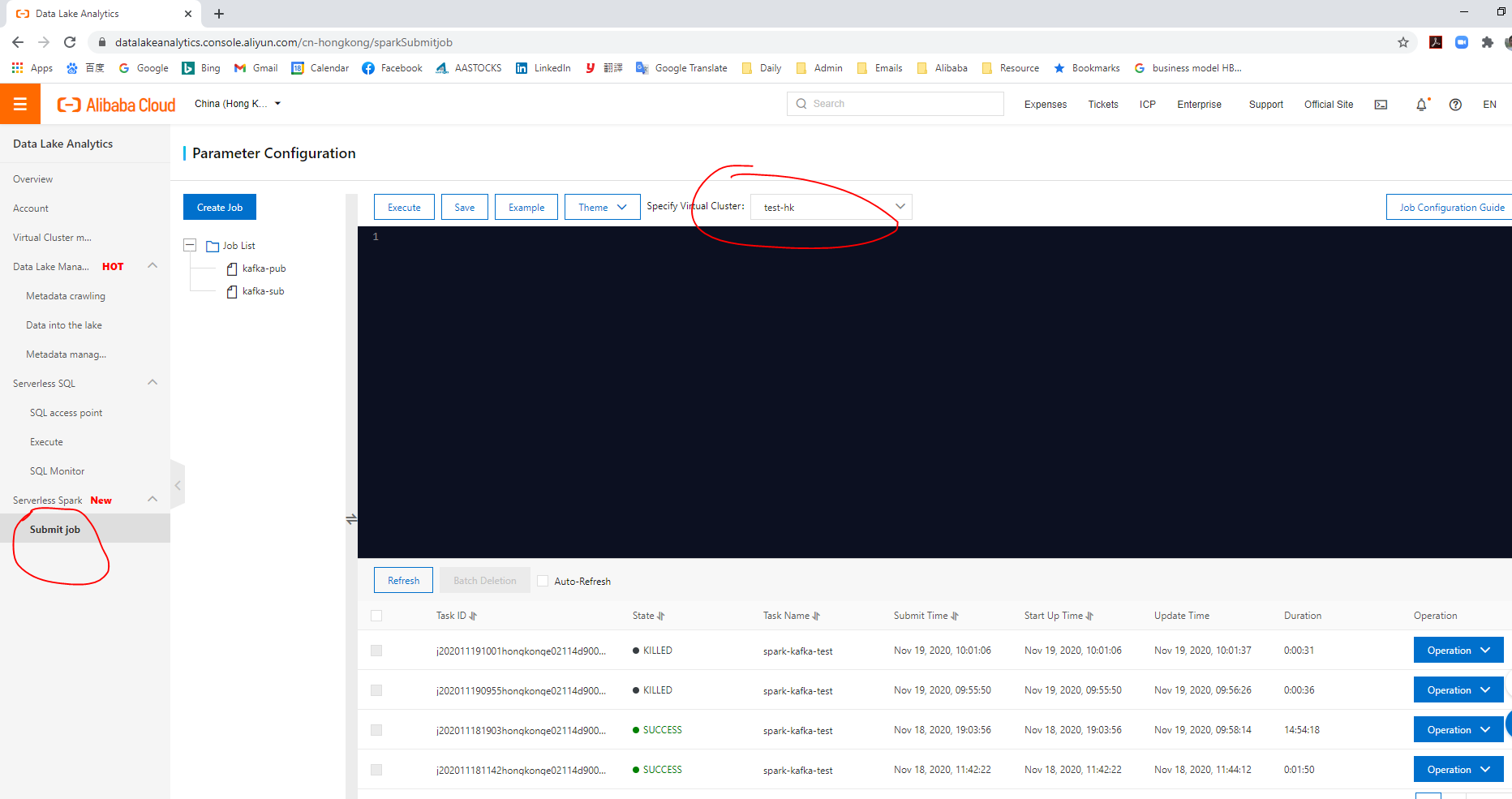

[root@centos7 dla-spark-toolkit]# ./bin/spark-submit --verbose --class com.alibabacloud.cwchan.SparkApp --name spark-kafka-test --files oss://dla-spark-hk/dla-jars/log4j.properties /root/dla-spark-demo-0.0.1-SNAPSHOT-shaded.jar run sub

Go to the search bar under the "Products and Services" dropdown menu by clicking on the top left menu icon. Type "data lake analytics" in the search bar, as shown below, and click on "data lake analytics" under the filtered results. After that, you will be redirected to the DLA product landing page.

Figure 5. Data Lake Analytics Management Page

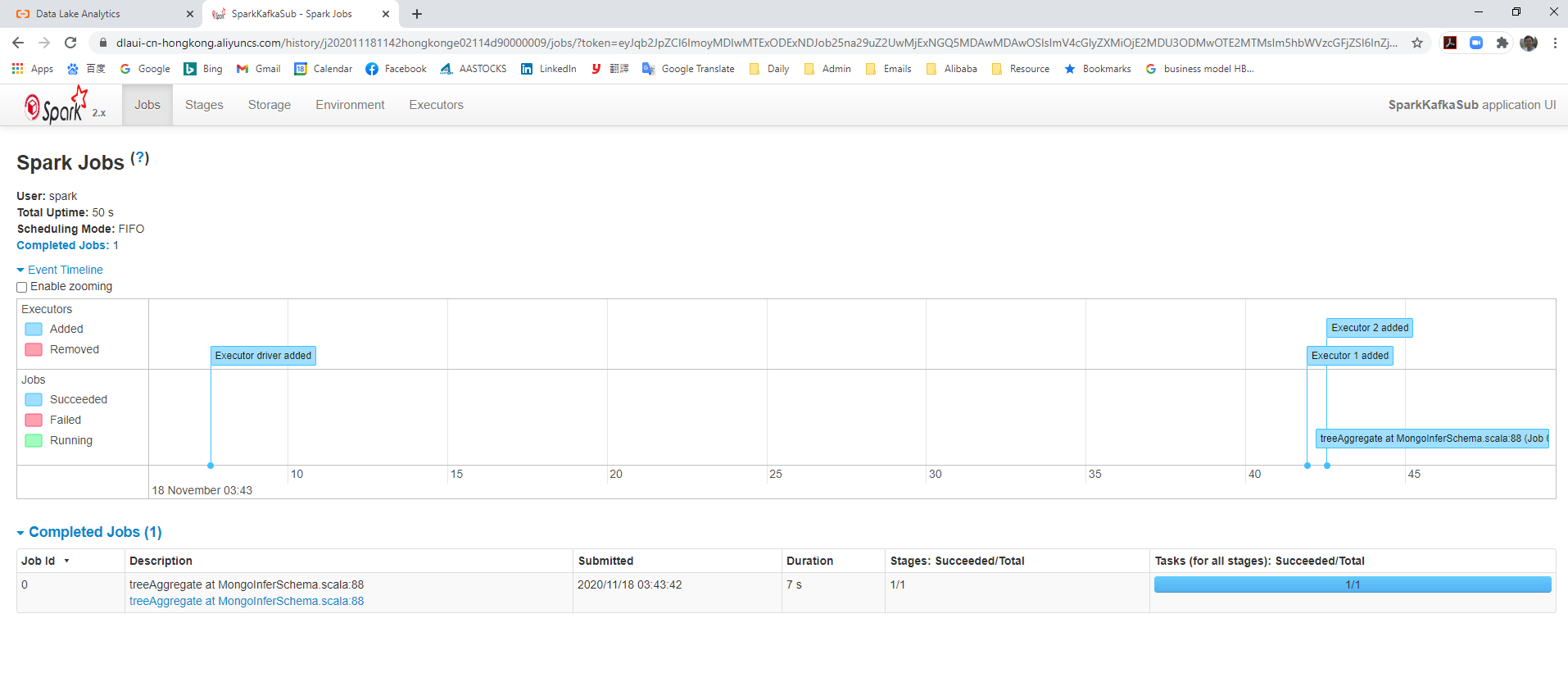

Click "Submit Job" on the menu bar on the left-hand side. Select your virtual cluster ("test-hk"), and the submitted Spark task will be listed in the data grid at the bottom of the window. For this particular task, you can click on the "Operation" dropdown button, and click "SparkUI." You will be redirected to the corresponding Spark history server for detailed task information and executor logs.

Figure 6. SparkUI of DLA

Congratulations, you have successfully deployed your first spark program to the Data Lake Analytics Serverless Virtual Cluster on Alibaba Cloud. Enjoy yourself! In the next section (Part C), I will show you how to deploy a simple machine learning model on Spark MLlib for building an intelligent distribution list and personalized content distribution.
