By GarvinLi

In this article, Alibaba technical expert Aohai introduces the basic concepts and architecture of a recommender system: what a recommender system is and what an enterprise-level recommender system architecture looks like.

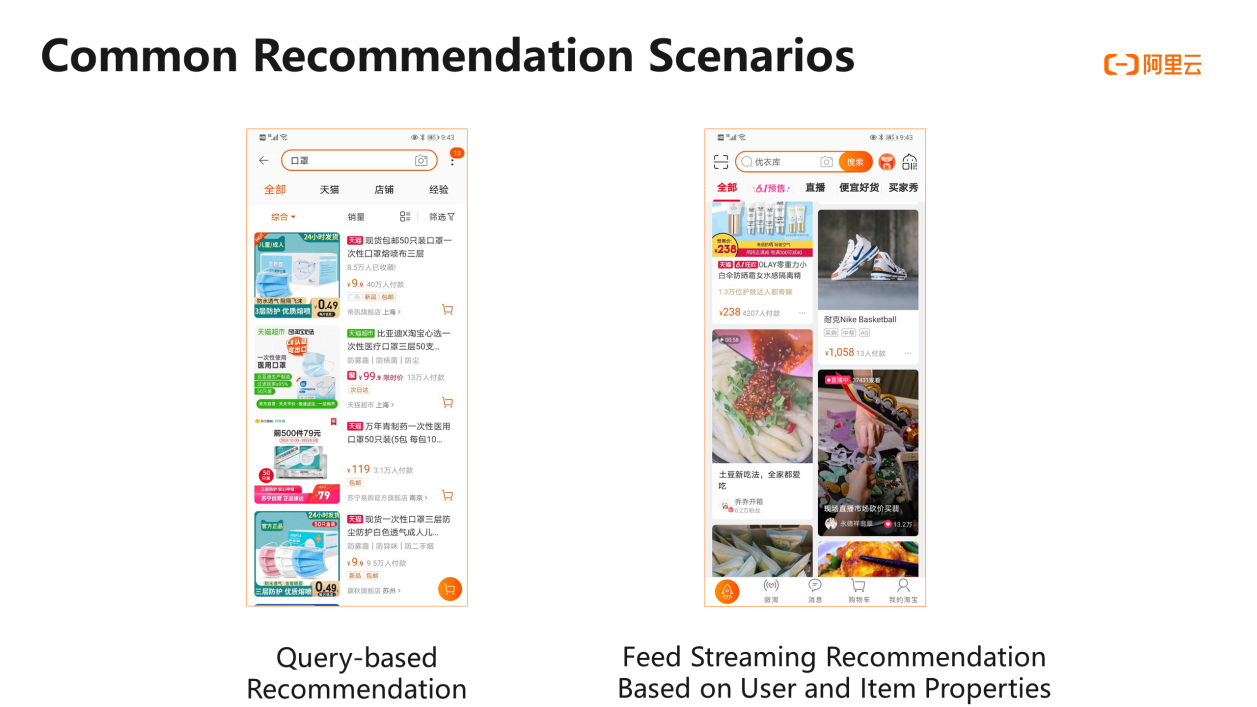

First, let's look at what a recommender system is and why it is needed. As Internet applications evolve, people are exposed to more and more information. For example, the Taobao platform showcases a huge number of products, and connecting users with the products that suit them is a challenge Taobao needs to tackle. A recommender system essentially solves an information matching problem: matching user information with item information. If you use mobile apps, you have probably already encountered many recommendation scenarios. Two are especially common. One is query-based recommendation, and the other is feed streaming recommendation based on user and item properties.

The left part of the following figure shows a query-based recommendation. For example, if I search for a mask, the displayed items must be related to masks. There may be more than 110,000 mask-related items, so a recommender system is needed to decide which items are ranked at the top and which are ranked lower. The system ranks the items according to the user's properties, such as favorite colors and price preferences. If a user prefers luxury goods, the system will likely rank expensive, high-quality masks at the top for this user. If a user is price-sensitive, the system may rank cheaper masks that offer better value for money at the top. To sum up, a query-based recommendation matches users' purchase preferences against item properties.
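The query-based scenario above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the catalog, the `quality` score, and the `price_sensitivity` user property are all hypothetical, and the scoring formula is a simple blend chosen for readability, not the method used by any real platform.

```python
from typing import Dict, List

# Hypothetical catalog entries; titles, prices, and quality scores are made up.
CATALOG: List[Dict] = [
    {"id": 1, "title": "Basic cotton mask", "price": 2.0, "quality": 0.5},
    {"id": 2, "title": "Premium filter mask", "price": 30.0, "quality": 0.95},
    {"id": 3, "title": "Value mask 10-pack", "price": 5.0, "quality": 0.7},
    {"id": 4, "title": "Running shoes", "price": 60.0, "quality": 0.9},
]


def search_and_rank(query: str, price_sensitivity: float, catalog: List[Dict]) -> List[int]:
    """Keep items whose title contains the query, then order them for one user.

    price_sensitivity is an assumed user property in [0, 1]:
    0 means only quality matters, 1 means only a low price matters.
    """
    hits = [item for item in catalog if query.lower() in item["title"].lower()]
    # Normalize prices against the most expensive hit; fall back to 1.0 to avoid dividing by zero.
    max_price = max((item["price"] for item in hits), default=0.0) or 1.0

    def score(item: Dict) -> float:
        cheapness = 1.0 - item["price"] / max_price
        return (1.0 - price_sensitivity) * item["quality"] + price_sensitivity * cheapness

    return [item["id"] for item in sorted(hits, key=score, reverse=True)]


luxury_order = search_and_rank("mask", 0.0, CATALOG)
budget_order = search_and_rank("mask", 1.0, CATALOG)
```

With this toy data, the same query returns the same three masks for both users, but the premium mask comes first for the user who ignores price and last for the fully price-sensitive user.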

The right part of the following figure shows feed streaming recommendation, which has become a major interaction mode between many apps and their users. If you open apps such as Hupu and Toutiao, you will find that the news feeds on their home pages are recommended according to your daily preferences. For example, if you love basketball-related news, more sports-related content may be recommended to you. For feed streaming recommendation, we use machine learning to build a recommendation model over users and items, and the model is expected to learn both user preferences and item properties. The recommender system architecture introduced in this article focuses on this scenario: how to implement feed streaming recommendation based on the matching between user and item properties.

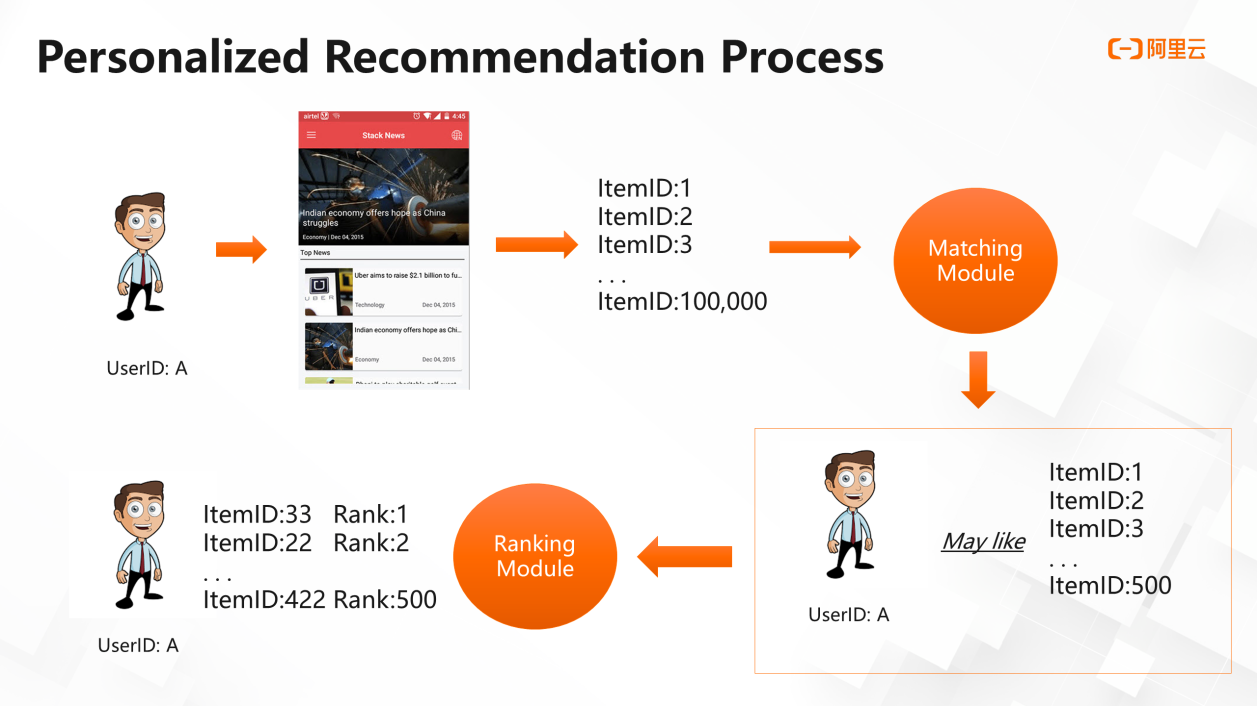

Let me illustrate the whole recommendation service with the following schematic diagram. Suppose we have a news platform, and user A, identified by a user ID, visits the platform. The platform has about 100,000 news pieces, and we call each piece of news an item. Each item has an ID, such as 1, 2, or 3. We need to pick user A's favorite items out of these 100,000 items. Which modules does the underlying architecture need to implement the recommendation? A typical recommender system based on matching and ranking has two modules: the matching module and the ranking module. The matching module performs a preliminary filtering of the 100,000 items to select those that user A may like. For example, if 500 items are shortlisted, we only know that user A may like these 500 items, but not which ones user A likes most or second most. The ranking module then orders the 500 items by user A's preferences to produce the final item list delivered to user A. In other words, the matching module narrows down the scope so that the ranking module only has to score a small candidate set, which makes the property-based ranking faster and the recommendation response more efficient. A production-grade recommender system must return recommendations within tens of milliseconds of receiving a user request: when a user refreshes the feed, the newly recommended items must appear almost immediately. This is the logic behind the recommendation service.
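The two-stage flow described above can be sketched as follows. This is a minimal illustration, not a production design: the item data, the user profile, and the scoring rule (interest weight times popularity) are all hypothetical stand-ins for what would normally be learned models.

```python
from typing import Dict, List

# Hypothetical toy data: each item has a category tag and a popularity score.
ITEMS: Dict[int, Dict] = {
    1: {"category": "basketball", "popularity": 0.9},
    2: {"category": "finance", "popularity": 0.8},
    3: {"category": "basketball", "popularity": 0.4},
    4: {"category": "football", "popularity": 0.7},
    5: {"category": "cooking", "popularity": 0.95},
}

# Hypothetical user profile: interest weight per category.
USER_A: Dict[str, float] = {"basketball": 1.0, "football": 0.5}


def match(user: Dict[str, float], items: Dict[int, Dict], limit: int) -> List[int]:
    """Matching: a cheap filter that keeps items in categories the user cares about."""
    candidates = [item_id for item_id, item in items.items() if item["category"] in user]
    return candidates[:limit]


def rank(user: Dict[str, float], items: Dict[int, Dict], candidates: List[int]) -> List[int]:
    """Ranking: score only the shortlisted candidates and sort best first."""
    def score(item_id: int) -> float:
        item = items[item_id]
        return user[item["category"]] * item["popularity"]

    return sorted(candidates, key=score, reverse=True)


def recommend(user: Dict[str, float], items: Dict[int, Dict],
              match_limit: int = 500, top_k: int = 10) -> List[int]:
    """Run matching first, then rank the shortlist and return the top results."""
    candidates = match(user, items, match_limit)
    return rank(user, items, candidates)[:top_k]


recommended = recommend(USER_A, ITEMS)
```

The point of the split is cost: `match` touches every item but does almost no work per item, while `rank` can afford a more expensive score because it only sees the shortlist.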

A recommender system can be understood as the sum of recommendation algorithms and system engineering: Recommender system = Recommendation algorithms + System engineering. Many books and online documents about recommender systems focus on how algorithms are implemented, and many papers cover the latest recommendation algorithms. However, when you actually build a recommender system, especially when you deploy it on the cloud, you will find that it is a systems engineering project. Even if you know which algorithms a recommendation service requires, you will still face many problems, such as performance and data storage. Therefore, this article covers both algorithms and system engineering, which together form a complete recommender system.

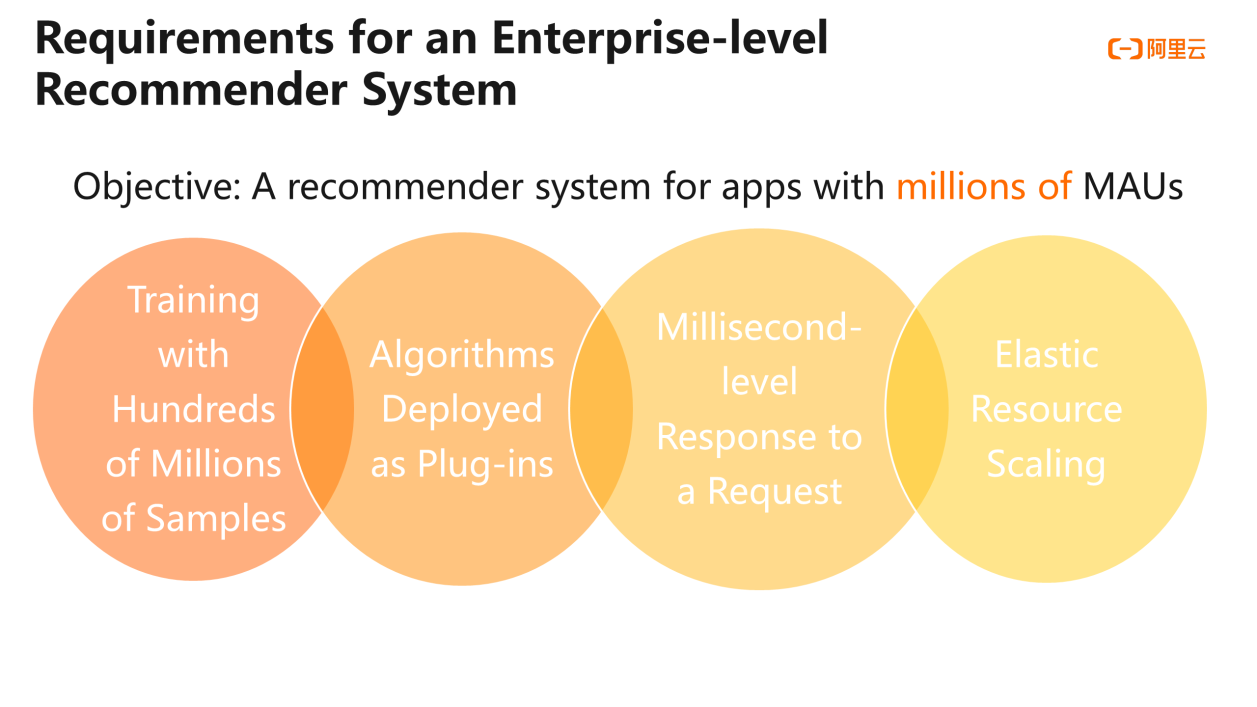

The following section describes the architecture of an enterprise-level recommender system. Such an architecture must meet four basic requirements. First, it must handle data at scale. The target customer typically has an app with millions of monthly active users (MAUs) and needs to recommend items to them. Each model training run may use hundreds of millions of samples, because the model is built on the data of the entire platform over the past month or even half a year. In machine learning, more data generally leads to a more accurate model. The data falls into three types: user behavior data, item behavior data, and user-item interaction data. Second, the architecture must be able to deploy algorithms as plug-ins. The machine learning field, including recommendation, is developing fast, with new algorithms emerging every year. The question is whether these algorithms can be plugged into and unplugged from the whole system flexibly. For example, if I want to use algorithm A today and algorithm B tomorrow, is there a convenient way to swap out algorithm A? This reflects the robustness of a system, including its support for componentized algorithms. Alibaba Cloud Machine Learning Platform for AI (PAI) has such a capability. Third, the service performance must be high enough to respond to each request within milliseconds. Fourth, the architecture must support elastic scaling of resources. For example, some apps are used heavily during the evening rush hour, when users are on the metro home from work, but much less in the early hours of the morning. The recommendation model then needs more underlying resources during peak hours and fewer during off-peak hours. Therefore, the architecture must support flexible scaling of underlying resources to keep costs under control. Cloud services can meet this requirement because they provide elastic resources.

To build an enterprise-level recommender system, it is essential to meet the preceding four basic requirements.

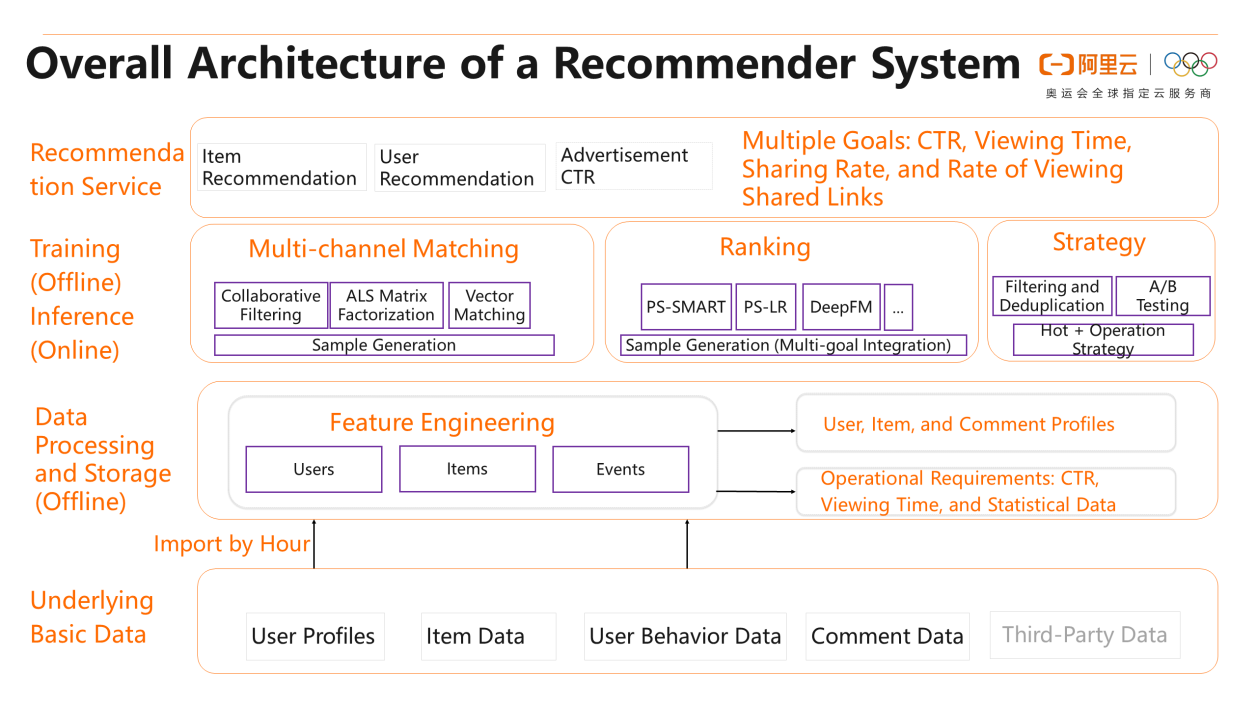

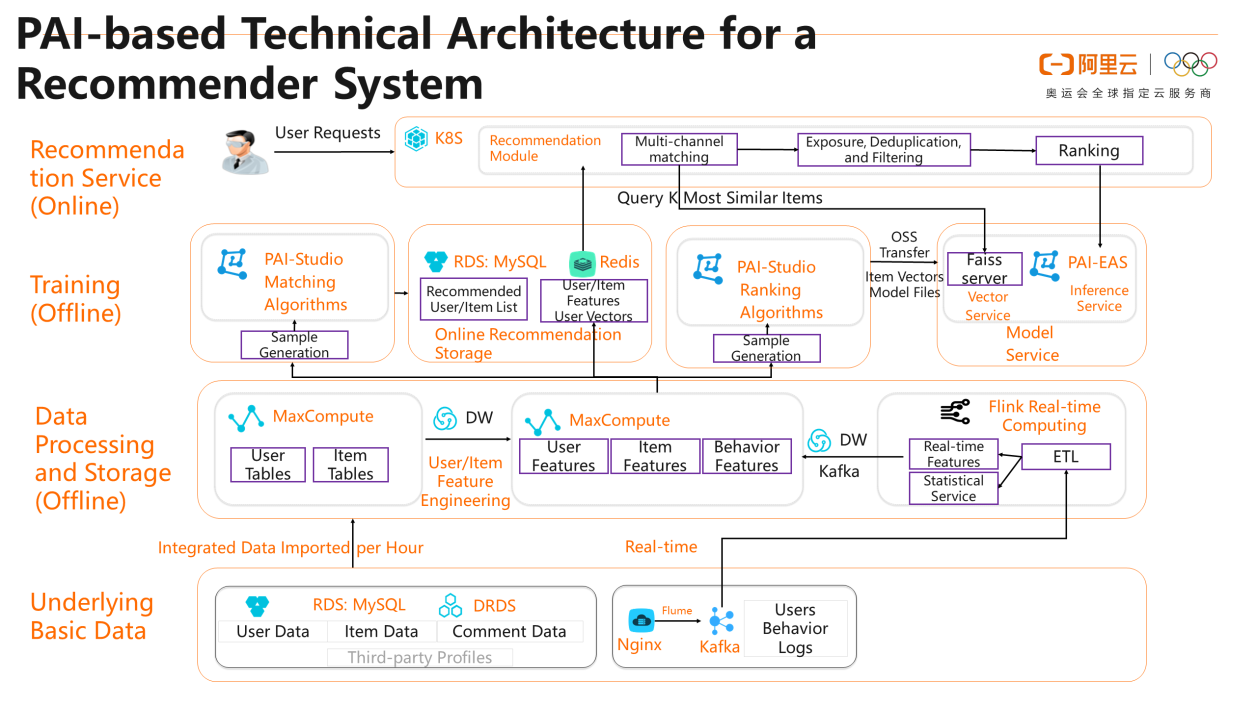

This section describes the overall architecture of a recommender system. As the figure shows, the bottom layer is the basic data layer. This layer contains user profile data, item data, behavior data, and comment data. User profile data may include users' heights and weights, the items they purchased, their purchase preferences, or their education background. Item data covers properties such as price, color, and origin. If the item is a video, the item data is information about the video, such as its content and tags. Behavior data describes the interactions between users and items. For example, when a user watches a video, the user may like the video, add it to favorites, or pay for it. These actions are all behavior data. Comment data may involve third-party data and may not be available for every item on every platform. However, user data, item data, and behavior data are essential. With these three types of data ready, we can move on to the data processing and storage layer. In this layer, we process the data to extract user features, item features, and event features. The next step is modeling based on these features. As mentioned in the preceding section, the entire recommendation process contains two important modules: matching and ranking. Multiple algorithms can run in parallel in the matching module.
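Running several matching algorithms in parallel can be sketched with the Python standard library. The three matchers below are hypothetical placeholders that return fixed item IDs; in a real system each would query its own model or index.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


# Hypothetical matchers; each returns candidate item IDs for a user.
def match_by_popularity(user_id: str) -> List[int]:
    return [5, 1, 4]


def match_by_similar_items(user_id: str) -> List[int]:
    return [1, 3]


def match_by_followed_topics(user_id: str) -> List[int]:
    return [4, 2]


MATCHERS: List[Callable[[str], List[int]]] = [
    match_by_popularity,
    match_by_similar_items,
    match_by_followed_topics,
]


def parallel_match(user_id: str) -> List[int]:
    """Run all matchers concurrently and merge their candidates into one list."""
    with ThreadPoolExecutor(max_workers=len(MATCHERS)) as pool:
        # pool.map returns results in the same order as MATCHERS.
        results = list(pool.map(lambda matcher: matcher(user_id), MATCHERS))

    merged: List[int] = []
    seen = set()
    for candidates in results:
        for item_id in candidates:
            if item_id not in seen:  # keep the first occurrence only
                seen.add(item_id)
                merged.append(item_id)
    return merged


candidates = parallel_match("user_a")
```

Running the matchers concurrently means the matching stage takes roughly as long as the slowest matcher rather than the sum of all of them, which helps with the millisecond-level latency requirement.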

Matching is followed by ranking, and many ranking algorithms are available. We will go into detail about which ranking algorithm to use in the third article of the series. Above ranking sits the policy layer. Before you push recommendations online, you must filter and deduplicate the recommendation results, run A/B tests on them, and apply operational strategies. The top layer is the recommendation service, which can recommend an advertisement, a product, or a user. For example, a social networking app can recommend users to each other so that they can follow one another. With such a recommendation architecture in place, you need cloud services to make it meet the four basic requirements of an enterprise-level recommender system. The most common practice is to build these modules on cloud services and the cloud ecosystem.
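The policy steps mentioned above, filtering, deduplication, and A/B testing, can be sketched as follows. Both functions are illustrative assumptions: `apply_policy` uses simple set lookups, and `ab_bucket` uses a common hash-based assignment scheme. Neither is described in the original architecture.

```python
import hashlib
from typing import List, Set


def apply_policy(ranked: List[int], already_shown: Set[int], blocked: Set[int]) -> List[int]:
    """Drop duplicates, items the user has already seen, and blocked items, keeping order."""
    result: List[int] = []
    seen: Set[int] = set()
    for item_id in ranked:
        if item_id in seen or item_id in already_shown or item_id in blocked:
            continue
        seen.add(item_id)
        result.append(item_id)
    return result


def ab_bucket(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Assign a user to a stable bucket by hashing the experiment name and user ID."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash to a fraction in [0, 1).
    fraction = int(digest[:8], 16) / 0x100000000
    return "treatment" if fraction < treatment_share else "control"


final_list = apply_policy([3, 1, 3, 2, 4], already_shown={1}, blocked={4})
bucket = ab_bucket("user_a", "new_ranking_model")
```

Hashing keeps the assignment deterministic, so the same user always lands in the same bucket for a given experiment without any stored state.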

This section describes Alibaba Cloud's technical implementation of a recommender system. At the basic data layer of the PAI-based recommender system, some demo offline data is provided to help you get started. The data can be stored in a database such as ApsaraDB RDS for MySQL. You may also want to process online behavior data, such as clicks or follows, in real time. Apache Kafka can help you collect this data. At the data processing layer, you can use Flink to process the data and generate real-time behavior data. After samples are generated, you can send them to the model training layer, which uses algorithms provided by PAI. We recommend that you adopt a cloud-native solution for the top-layer application to ensure resource and service elasticity. In the final service orchestration phase, you perform matching and then deduplicate the matching results to generate the final candidates for ranking. The ranking result is then returned to the user. This is Alibaba Cloud's overall technical architecture.

Learn more about Alibaba Cloud Machine Learning Platform for AI (PAI) at https://www.alibabacloud.com/product/machine-learning

The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
