In this article, Alibaba technical expert Aohai introduces how to establish a simple recommender system based on Machine Learning Platform for AI (PAI) within 10 minutes. This article focuses on four parts: the personalized recommendation process, collaborative filtering algorithm, architecture of the recommender system, and practices.

We will introduce how to build a simple recommender system based on PAI. This recommender system has two characteristics. First, it is convenient to build with the help of tools that we have developed. Second, it is scalable. Many apps today offer feed streaming recommendations, such as advertising and content recommendations, and each such app is essentially backed by a recommender system. A recommender system can be divided into two main modules. The first is the matching module, which is responsible for preliminary filtering. For example, the module can narrow down 100,000 candidate news feeds to only 500. The second is the ranking module, which ranks the 500 news feeds based on the user's preferences and then generates the final recommendation list. A simple recommender system with only the matching module can also make recommendations. If the matching module narrows the candidates down to a very small set, such as 10 news feeds, we can push all 10 feeds to the user without ranking. Therefore, we want to use this video to illustrate how to build a simple recommender system with the matching module only.

Many algorithms are available for both the matching and ranking modules. For example, multiple matrix factorization algorithms and the collaborative filtering algorithm are available for the matching module. The most classic one is collaborative filtering, which is easy to understand, so we will use it as our example. The following figure shows the preferences of users A, B, and C. Users A and C have similar tastes: both like rice and milk. In addition, user A likes lamb, which user C has not yet expressed a preference for. Because the two users have similar tastes, we assume that user C will also like lamb and treat lamb as a matching result for user C. This is standard collaborative filtering based on data statistics. In other words, we first find similar items or users, and then derive recommendations from the correlation between the similar users or items. The recommender system discussed today is based on the collaborative filtering algorithm.

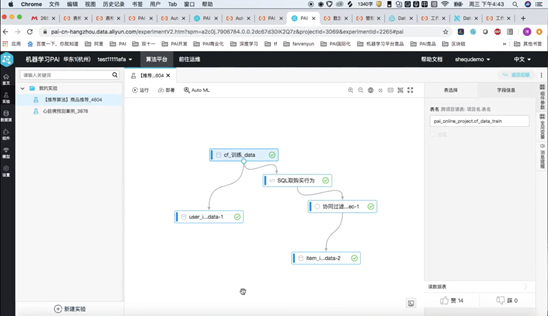
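The rice, milk, and lamb example can be sketched in a few lines of Python. This is a minimal illustration of user-based collaborative filtering, not the PAI implementation. The names `LIKES`, `similarity`, and `recommend` are made up for this sketch, the similarity measure (cosine similarity over sets of liked items) is an assumption, and user B's preferences are invented because the text does not list them.

```python
from math import sqrt

# Preferences from the example. User B's items are hypothetical,
# since the article only describes users A and C.
LIKES = {
    "A": {"rice", "milk", "lamb"},
    "B": {"noodles", "beef"},
    "C": {"rice", "milk"},
}


def similarity(a, b):
    """Cosine similarity between two sets of liked items."""
    if not a or not b:
        return 0.0
    return len(a & b) / sqrt(len(a) * len(b))


def recommend(user, likes, top_n=3):
    """Score items liked by similar users that `user` has not chosen yet."""
    scores = {}
    for other, items in likes.items():
        if other == user:
            continue
        sim = similarity(likes[user], items)
        if sim == 0.0:
            continue  # users with no overlap contribute nothing
        for item in items - likes[user]:
            scores[item] = scores.get(item, 0.0) + sim
    # Highest score first; ties are broken alphabetically.
    return sorted(scores, key=lambda item: (-scores[item], item))[:top_n]


print(recommend("C", LIKES))
```

User C overlaps only with user A, so the one candidate that surfaces is the item that A likes and C has not chosen.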

The following figure shows the overall architecture of the recommender system, which includes DataWorks, PAI-Studio, Tablestore, PAI-AutoLearning, and PAI-EAS.

First, we use PAI-Studio to generate collaborative filtering result data from your raw data, which produces two tables.

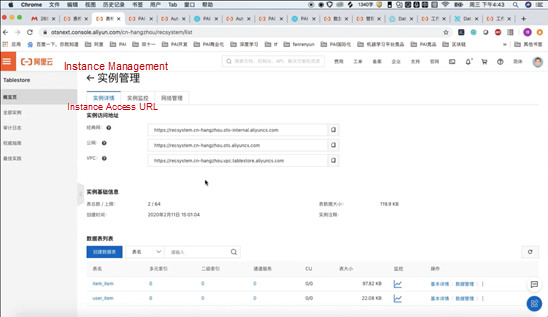

Next, we create the two tables in Tablestore in the required format.

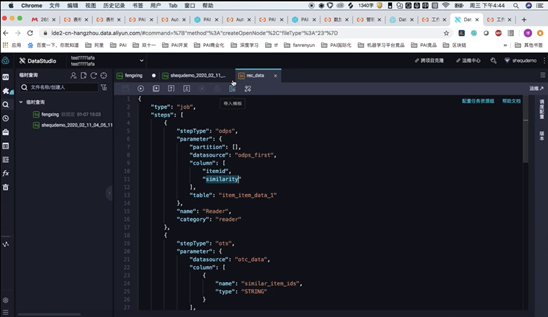

Then, we use DataWorks to migrate the data of the two tables from PAI-Studio to Tablestore.

You can find the next steps in Building a PAI-based Recommender System within 10 Minutes.

In this article, Alibaba technical expert Aohai introduces the basic concepts and architecture of a recommender system, specifically, what a recommender system is and what an enterprise-level recommender system architecture looks like.

First, let's take a look at what a recommender system is and why such a system is required. As Internet applications evolve, people are exposed to more and more information. For example, the Taobao platform showcases a huge number of products, and connecting users with the products that suit them is a challenge that Taobao needs to tackle. A recommender system essentially addresses this information matching problem: it matches user information with item information. As a mobile app user, you have probably come across many recommendation scenarios. Two of them are common. One is query-based recommendation, and the other is feed streaming recommendation based on user and item properties.

The left part of the following figure shows what a query-based recommendation is. For example, if I search for a mask, the displayed items must be related to masks. There may be more than 110,000 mask-related items, so a recommender system is required to determine which items are ranked at the top and which are ranked lower. The system ranks the items according to the user's properties, such as favorite colors and price preferences. If a user prefers luxury goods, the system is likely to rank expensive, high-performance masks at the top for this user. If a user is price-sensitive, the system may rank cheaper masks that offer better value for money at the top. To sum up, a query-based recommendation matches users' purchase preferences with item properties.

The right part of the following figure shows feed streaming recommendation, which has increasingly become a major mode of interaction between apps and their users. If you open apps such as Hupu and Toutiao, you will find that the news feeds on their home pages are recommended according to your everyday preferences. For example, if you love basketball-related news, more sports-related content may be recommended to you. For feed streaming recommendation, we use machine learning to build a recommendation model over users and items. The model is expected to learn both user preferences and item properties. The recommender system architecture that we introduce today addresses this scenario: its underlying implementation matches user properties with item properties to produce feed streaming recommendations.

First, I would like to illustrate the whole recommendation service by using the following schematic diagram. Suppose we have a news platform, and user A, identified by a user ID, visits the platform. The platform hosts 100,000 news pieces, and we call each piece of news an item. Each item has an ID, such as 1, 2, or 3. Now we need to pick out user A's favorite items from these 100,000 items. Which modules are needed in the underlying architecture to implement the recommendation? A typical recommender system based on matching and ranking has two modules. One is the matching module, and the other is the ranking module. The matching module performs a preliminary filtering of the 100,000 items to select the items that user A may like. For example, if 500 items have been shortlisted, we only know that user A may like these 500 items, but we do not know which ones are user A's favorite and second favorite. The ranking module then ranks the 500 items based on user A's preferences to create the final item list to be delivered to user A. Therefore, in the recommendation service, the matching module provides preliminary filtering to determine the general scope. This reduces the number of items that the ranking module must score and makes recommendation feedback faster for users. A professional recommender system must be able to return recommendations within tens of milliseconds after it receives a user request. When a user refreshes the feed, the system has only tens of milliseconds before it must show the newly recommended items. This is the logic behind the recommendation service.
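The match-then-rank flow described above can be sketched as follows. This is a toy illustration under stated assumptions: the catalog `ITEMS`, the profile `USER_A`, the topic-based filter in `match`, and the `score` function are all hypothetical stand-ins. A real system would use a matching algorithm and a trained ranking model instead.

```python
# Hypothetical catalog: 100,000 news items with a topic and a freshness score.
TOPICS = ["basketball", "tech", "finance", "travel"]
ITEMS = [
    {"id": i, "topic": TOPICS[i % 4], "freshness": (i % 97) / 97}
    for i in range(100_000)
]

# Hypothetical user profile: an interest weight per followed topic.
USER_A = {"id": "A", "interests": {"basketball": 0.9, "tech": 0.4}}


def match(items, user, limit=500):
    """Matching stage: a cheap filter that shortlists up to `limit` items."""
    shortlist = []
    for item in items:
        if item["topic"] in user["interests"]:
            shortlist.append(item)
            if len(shortlist) == limit:
                break
    return shortlist


def score(item, user):
    """Ranking score: interest weight times freshness (stand-in for a model)."""
    return user["interests"][item["topic"]] * item["freshness"]


def rank(candidates, user):
    """Ranking stage: order the shortlist from most to least preferred."""
    return sorted(candidates, key=lambda item: score(item, user), reverse=True)


def recommend(items, user, limit=500, top_n=10):
    candidates = match(items, user, limit)
    return rank(candidates, user)[:top_n]


top = recommend(ITEMS, USER_A)
print([item["id"] for item in top])
```

The point of the split is cost: `score` runs on at most 500 candidates rather than on all 100,000 items.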

The recommender system can be understood as the sum of recommendation algorithms and system engineering, specifically, Recommender system = Recommendation algorithms + System engineering. When a recommender system is discussed, many books and online documents focus more on how algorithms are implemented, and many papers are about the latest recommendation algorithms. However, if you get down to building such a recommender system, especially when you try to deploy it on the cloud, you will find it is actually a systematic project. Even if you know which algorithms a recommender service requires, you will face many problems such as performance problems and data storage problems. Therefore, this article focuses on both algorithms and system engineering, which together form a complete recommender system.

The following section describes the architecture of an enterprise-level recommender system. Such an architecture must meet four basic requirements. First, it must serve a target customer whose app has millions of monthly active users (MAUs) and needs to recommend items to them. Each model training run may use hundreds of millions of samples, because we need to build an overall model based on the data of the entire platform in the past month or even half a year. In machine learning, a larger data volume generally yields a more accurate model. Data can be categorized into three types, namely user behavior data, item behavior data, and user-item interaction data. Second, the architecture must be able to deploy algorithms as plug-ins. The machine learning field, including the recommendation field, is developing fast, with new algorithms emerging every year. The question is whether these algorithms can be flexibly plugged into and unplugged from the whole system. For example, if I want to use algorithm A today and algorithm B tomorrow, is there a convenient way for me to remove algorithm A? This reflects the robustness of a system, including its support for componentized algorithms. Alibaba Cloud Machine Learning Platform for AI (PAI) has such a capability. Third, the service performance must be high enough to provide feedback within milliseconds for each request. Fourth, the architecture must support elastic scaling of resources. For example, some apps may be used more often during evening rush hours when users are on the metro back home from work, but less during the small hours. The recommendation model then requires more underlying resources during peak hours and fewer resources in the early morning. Therefore, the architecture must support flexible scaling of underlying resources to balance the cost. Cloud services can meet this requirement because they can use elastic resources. To build an enterprise-level recommender system, it is essential to meet the preceding four basic requirements.
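The second requirement, swapping algorithm A for algorithm B without touching the rest of the system, is essentially a plug-in interface. The sketch below shows one common way to express it in Python. It is an illustration only and says nothing about how PAI implements components. The names `REGISTRY`, `register`, `algorithm_a`, and `algorithm_b` are hypothetical.

```python
from typing import Callable, Dict, List

# A matching algorithm takes a user ID and a count and returns item IDs.
Matcher = Callable[[str, int], List[str]]

# Hypothetical registry that maps an algorithm name to its implementation.
REGISTRY: Dict[str, Matcher] = {}


def register(name: str) -> Callable[[Matcher], Matcher]:
    """Decorator that plugs an algorithm into the registry under `name`."""
    def decorator(fn: Matcher) -> Matcher:
        REGISTRY[name] = fn
        return fn
    return decorator


@register("algorithm_a")
def algorithm_a(user_id: str, k: int) -> List[str]:
    # Placeholder logic: return the first k item IDs.
    return [f"item_{i}" for i in range(k)]


@register("algorithm_b")
def algorithm_b(user_id: str, k: int) -> List[str]:
    # Placeholder logic: return k item IDs from a different range.
    return [f"item_{100 + i}" for i in range(k)]


def recommend(user_id: str, k: int, algorithm: str) -> List[str]:
    """The serving code depends only on the registry, not on any algorithm."""
    return REGISTRY[algorithm](user_id, k)


print(recommend("user_a", 3, "algorithm_a"))
print(recommend("user_a", 3, "algorithm_b"))
```

Switching algorithms is then a configuration change (the `algorithm` argument), and removing algorithm A means deleting one registered function.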

In this article, Alibaba technical expert Aohai introduces matching algorithms and architecture, specifically, the matching module in a recommender system, matching algorithms, collaborative filtering, and the vector matching architecture.

In the first article of the series on building an enterprise-level recommender system, we introduced the recommender system architecture, its modules, and the application of cloud services in each module. In this article, we will focus on the matching algorithms in a recommender system and how you can build a matching architecture. First, let's review the matching module in a recommender system. The matching module is used for preliminary filtering. When user A visits a platform, the matching module selects the items that user A may like from a huge number of items. For example, the platform has 100,000 items, and the matching module shortlists 500 items that user A may like. Then, the ranking module ranks these items based on user A's preferences.

This section describes the algorithms that the matching module may use. The following figure shows four popular algorithms. The rightmost is the collaborative filtering algorithm, and the three on the left are related to vector matching. Collaborative filtering is essentially a statistics-based algorithm. It finds users with the same interests or items that are purchased together. For example, statistics over a large amount of data may show that beer and diapers in supermarkets are often purchased together. Vector matching algorithms are deeper models based on machine learning. For example, Alternating Least Squares (ALS) is a typical matrix factorization method. It can generate a user embedding table and an item embedding table from behavior data tables. This is a basic method for vector matching. Factorization Machine (FM) follows similar logic and uses the inner product method to enhance feature representation. I would also like to introduce the GraphSAGE algorithm, which is a matching algorithm based on graph neural networks (GNNs). Currently, the algorithm is not widely used across the Internet industry. However, some large Internet companies, such as Taobao, use it frequently in recommendation scenarios. GraphSAGE is a graph algorithm built on a deep learning framework. It can generate user embeddings and item embeddings based on the features and behavior of users and items. The GraphSAGE algorithm is often used in e-commerce matching scenarios.
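To make the ALS description concrete, here is a minimal NumPy sketch of alternating least squares on a tiny rating matrix. It is a textbook-style illustration, not the PAI component. The function name `als`, the ratings in `R`, the observation mask `MASK`, and the hyperparameters (`rank`, `reg`, `iters`) are all assumptions chosen for the example.

```python
import numpy as np


def als(R, mask, rank=2, reg=0.1, iters=30, seed=0):
    """Factorize R ~ U @ V.T using only the entries where mask is True."""
    rng = np.random.default_rng(seed)
    n_users, n_items = R.shape
    U = rng.normal(scale=0.1, size=(n_users, rank))  # user embedding table
    V = rng.normal(scale=0.1, size=(n_items, rank))  # item embedding table
    ridge = reg * np.eye(rank)
    for _ in range(iters):
        # Fix V and solve a small ridge regression for each user.
        for u in range(n_users):
            Vu = V[mask[u]]
            U[u] = np.linalg.solve(Vu.T @ Vu + ridge, Vu.T @ R[u, mask[u]])
        # Fix U and solve a small ridge regression for each item.
        for i in range(n_items):
            Ui = U[mask[:, i]]
            V[i] = np.linalg.solve(Ui.T @ Ui + ridge, Ui.T @ R[mask[:, i], i])
    return U, V


# Hypothetical behavior data: rows are users, columns are items.
R = np.array([
    [5.0, 5.0, 1.0, 1.0],
    [5.0, 5.0, 1.0, 1.0],
    [1.0, 1.0, 5.0, 5.0],
    [1.0, 1.0, 5.0, 5.0],
])
MASK = np.ones_like(R, dtype=bool)
MASK[0, 1] = False  # treat these two interactions as unobserved
MASK[3, 2] = False

U, V = als(R, MASK)
print(np.round(U @ V.T, 1))  # predicted scores, including the unobserved cells
```

The two returned matrices play the role of the user embedding table and the item embedding table mentioned above: the predicted score for a user-item pair is the inner product of their vectors.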

This section describes the collaborative filtering algorithm, which is easy to understand. For example, the following figure shows the preferences of users A, B, and C. Users A and C have similar tastes: both like rice and milk. In addition, user A likes lamb, which user C has not yet expressed a preference for. Because the two users have similar tastes, we assume that user C will also like lamb and treat lamb as a matching result for user C. This is standard collaborative filtering based on data statistics.

This section describes how you can use the preceding three vector matching algorithms. The input data of these algorithms is user IDs, item IDs, and behavior data. The following figure shows a user behavior data table. From this table, a vector matching algorithm produces two vector tables, one for users and one for items. These vector tables contain key-value pairs: each user ID or item ID corresponds to a vector, and the key-value pairs can be cached in Redis. In actual use, you also need to load the vectors into a Faiss-based retrieval service. Faiss is an open-source library developed by the Facebook AI team for vector retrieval. It provides multiple vector retrieval modes and can search millions of vectors within milliseconds. Because of this performance, it is widely used for matching in recommender systems. For example, when we want to recommend items to a user, we look up the user's vector by user ID and then query the Faiss engine for the item vectors with the smallest Euclidean distance to the user's vector. We can then use, for example, the top 10 item vectors as the matching result for the user. That is the overall vector matching architecture, in which both Redis and Faiss are used.
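The retrieval step can be illustrated without any external service. The sketch below uses a plain dictionary in place of the Redis cache and a brute-force NumPy search in place of Faiss. It performs the same exact Euclidean nearest-neighbor lookup that a flat L2 index such as Faiss's `IndexFlatL2` provides, only without the optimizations. The vectors and the names `USER_VECTORS`, `ITEM_IDS`, `ITEM_VECTORS`, and `top_k_items` are hypothetical.

```python
import numpy as np

# Stand-in for the Redis cache: user ID -> user vector (values are made up).
USER_VECTORS = {"user_a": np.array([0.9, 0.1])}

# Stand-in for the item vectors loaded into the retrieval engine.
ITEM_IDS = ["item_0", "item_1", "item_2", "item_3"]
ITEM_VECTORS = np.array([
    [0.0, 0.0],
    [1.0, 0.0],
    [0.0, 2.0],
    [5.0, 5.0],
])


def top_k_items(user_id, k=10):
    """Return the k items whose vectors are closest to the user's vector."""
    user_vec = USER_VECTORS[user_id]
    # Squared Euclidean distance from the user vector to every item vector.
    dists = np.sum((ITEM_VECTORS - user_vec) ** 2, axis=1)
    order = np.argsort(dists, kind="stable")[:k]
    return [(ITEM_IDS[i], float(dists[i])) for i in order]


for item_id, dist in top_k_items("user_a", k=2):
    print(item_id, round(dist, 2))
```

A brute-force scan like this is linear in the number of items, which is why a dedicated vector retrieval engine is used once the catalog grows large.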

In this article, Alibaba technical expert Aohai introduces the ranking algorithms and training architectures of a recommender system, specifically, the introduction of the ranking module in a recommender system, ranking algorithms, and online and offline training architectures for a ranking model.

In this series of articles on building an enterprise-level recommender system, the first article introduced how to design the overall architecture, and the second article introduced the matching algorithms and how they work. In this article, we will introduce the ranking algorithms of a recommender system and the features of the online and offline training architectures for a ranking model. First, let's review the following figure to learn about the ranking module in a recommender system. When a user visits a platform, the platform holds a large number of items, so we need to select the items that the user may like. The matching module provides a preliminary filtering to narrow down the item list delivered to the ranking module.

Specifically, the matching module first selects a small proportion of items that the user may like from a huge number of items. For example, the platform has 100,000 items, and the matching module shortlists 500 items that the user may like. Then, the ranking module ranks these items based on the user's preferences. To rank the 500 items from the user's favorite to the least favorite, we need a ranking algorithm, which orders the items based on user and item properties.

This section describes the types of ranking algorithms, the training process of a ranking model, and training architectures. With the development of deep learning, ranking algorithms have been gradually integrated with deep learning. The following figure shows the typical ranking algorithms. The first is logistic regression (LR), which is the most classic linear binary classification algorithm in the industry and is widely used. It is easy to use, requires little computing power, and has good model interpretability. The second is Factorization Machine (FM), which has been applied to a large number of customer scenarios over the past one or two years and has performed well. It uses the inner product method to enhance feature representation. The third is the combination of gradient boosted decision trees (GBDTs) and LR, which uses GBDT-based feature encoding to enhance the interpretability of data features. The fourth is the DeepFM algorithm, a widely used algorithm that combines deep learning with classic machine learning algorithms. If you are building a recommender system for the first time, we recommend that you try simple algorithms first and then move on to complex ones. The preceding algorithms have been integrated into Machine Learning Platform for AI (PAI) and can be used as plug-ins.
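As an illustration of the simplest option, the sketch below trains a logistic regression model with plain gradient descent and uses the predicted click probability to rank candidates. The features (`topic_match`, `is_cheap`), the click log, the candidate names, and the hyperparameters are invented for this example. A production model would use far more features and samples.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_lr(X, y, lr=0.5, epochs=500):
    """Fit logistic regression weights by batch gradient descent on log loss."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = sigmoid(X @ w + b)
        w -= lr * (X.T @ (p - y)) / len(y)
        b -= lr * float(np.mean(p - y))
    return w, b


# Hypothetical click log. Columns: [topic_match, is_cheap]; label: clicked.
X = np.array([[1, 1], [1, 0], [0, 1], [0, 0], [1, 1], [0, 1]], dtype=float)
y = np.array([1, 1, 0, 0, 1, 0], dtype=float)
w, b = train_lr(X, y)

# Candidates from the matching stage, described with the same two features.
CANDIDATES = {
    "news_1": np.array([1.0, 0.0]),
    "news_2": np.array([0.0, 1.0]),
    "news_3": np.array([1.0, 1.0]),
}


def rank(candidates, w, b):
    """Order candidate IDs by predicted click probability, highest first."""
    scores = {cid: float(sigmoid(x @ w + b)) for cid, x in candidates.items()}
    return sorted(scores, key=scores.get, reverse=True)


print(rank(CANDIDATES, w, b))
```

Because the model is linear, each learned weight can be read directly as the influence of one feature, which is the interpretability advantage mentioned above.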

This section describes how you can train a ranking model. A ranking model can be trained online or offline, and we will first introduce offline training.

Offline training uses T – 1 data. Specifically, the models that serve the business today are trained on data from before today. Offline training allows you to consolidate a large amount of historical data into one data warehouse, train a model based on the features and data of all samples, and verify the trained model offline. You can then deploy the verified model online the next day, which ensures security and good performance. The following figure shows an offline model training architecture. Currently, almost all customers train ranking models offline. If you want to perform real-time training, you will face great challenges in the architecture.

Next, we will focus on online model training. What are its benefits and features when compared with offline model training? Offline training uses T – 1 or T – 2 data and does not make use of real-time online behavior data. For example, suppose our platform launches an activity that targets only girls under 14 years old. The platform has a large number of customers of other ages, so the activity changes the interactive behavior of the whole platform. If you still train the model on T – 1 data, the model cannot perceive such changes in the online business in a timely manner. Online training can solve this problem. The following figure shows the online model training process. Online model training uses real-time online data to train a model on a real-time machine learning training framework. Even though real-time data is used for online training, model training does not start from scratch. The model is first trained offline and then optimized based on real-time data. An online training architecture for a ranking model must have the following three features: First, it performs streaming model training based on the Flink framework. Second, it evaluates the performance of the model generated in real time. Third, it provides online model rollback and version management capabilities. The online model training architecture you build is complete only when it has all of the preceding features.
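The three features above (streaming updates on top of an offline model, real-time evaluation, and version management with rollback) can be sketched in a single small class. This is a conceptual illustration in plain Python and does not use Flink. A simple loop stands in for the event stream, and the class `OnlineModel`, its methods, the offline weights, and the sample events are all hypothetical.

```python
import numpy as np


class OnlineModel:
    """Logistic regression that starts from offline weights and learns per event."""

    def __init__(self, offline_weights, lr=0.1):
        self.w = np.asarray(offline_weights, dtype=float).copy()
        self.lr = lr
        self.versions = [self.w.copy()]  # version 0 is the offline model

    def predict(self, x):
        return 1.0 / (1.0 + np.exp(-float(self.w @ np.asarray(x, dtype=float))))

    def update(self, x, y):
        """Score the event first (real-time evaluation), then take one SGD step."""
        x = np.asarray(x, dtype=float)
        p = self.predict(x)
        loss = -(y * np.log(p) + (1 - y) * np.log(1 - p))
        self.w -= self.lr * (p - y) * x
        return float(loss)

    def snapshot(self):
        """Store the current weights as a new version and return its number."""
        self.versions.append(self.w.copy())
        return len(self.versions) - 1

    def rollback(self, version):
        """Restore the weights of an earlier version."""
        self.w = self.versions[version].copy()


# Hypothetical offline weights and a tiny stream of (features, clicked) events.
model = OnlineModel([0.5, -0.2])
stream = [([1.0, 0.0], 1), ([0.0, 1.0], 0), ([1.0, 1.0], 1)]
losses = [model.update(x, y) for x, y in stream]
version = model.snapshot()
print(version, [round(loss, 3) for loss in losses])

model.rollback(0)  # fall back to the offline model if the online one degrades
print(model.w)
```

Scoring each event before learning from it gives a running estimate of how the live model performs on unseen data, which is one simple way to decide when a rollback is needed.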

This course is the 4th class of the Alibaba Cloud Machine Learning Algorithm QuickStart series. It mainly introduces the main decision tree algorithms and the pruning principle, applies feature engineering, and implements a random forest model with PAI to explain model comparison and selection, preparing you for the subsequent machine learning courses.

Support Vector Machine (SVM) is among the best "off-the-shelf" supervised learning algorithms and can be used for both regression and classification tasks, although it is most widely used for classification. An SVM is a discriminative classifier formally defined by a separating hyperplane. In other words, given labeled training data (supervised learning), the algorithm outputs an optimal hyperplane that categorizes new examples. In two-dimensional space, this hyperplane is a line that divides the plane into two parts, with each class lying on one side. This course introduces the basic concepts of SVM as well as how to use Alibaba Cloud Machine Learning Platform for AI to apply SVM.

This topic describes how to use the NGC-based Real-time Acceleration Platform for Integrated Data Science (RAPIDS) libraries that are installed on a GPU-accelerated instance to accelerate tasks for data science and machine learning as well as improve the efficiency of computing resources.

RAPIDS is an open source suite of data processing and machine learning libraries developed by NVIDIA to enable GPU-acceleration for data science and machine learning. For more information about RAPIDS, visit the RAPIDS website.

NVIDIA GPU Cloud (NGC) is a deep learning ecosystem developed by NVIDIA to provide developers with free access to deep learning and machine learning software stacks to build corresponding development environments. The NGC website provides RAPIDS Docker images, which come pre-installed with development environments.

JupyterLab is an interactive development environment that makes it easy to browse, edit, and run code files on your servers.

Dask is a lightweight big data framework that can improve the efficiency of parallel computing.

This topic provides sample code that is modified based on the NVIDIA RAPIDS Demo code and dataset and demonstrates how to use RAPIDS to accelerate an end-to-end task from the Extract-Transform-Load (ETL) phase to the Machine Learning (ML) Training phase on a GPU-accelerated instance. The RAPIDS cuDF library is used in the ETL phase and the XGBoost model is used in the ML Training phase. The sample code is based on the Dask framework and runs on a single machine.

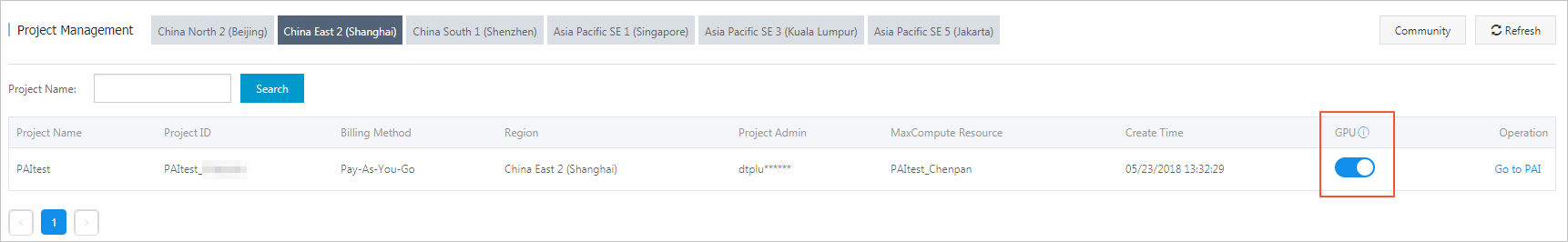

The deep learning feature is currently in beta testing. Three deep learning frameworks are supported: TensorFlow, Caffe, and MXNet. To enable deep learning, log on to your machine learning platform console and enable GPU resources, as shown in the following figure.

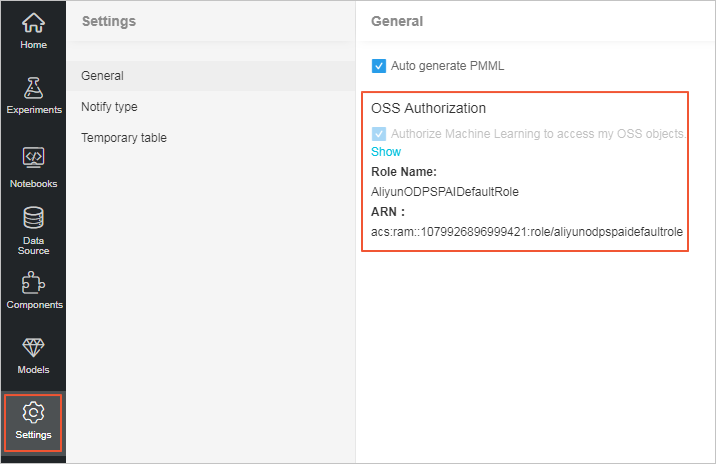

After GPU resources have been enabled, the corresponding projects are allowed to access the public resource pool and dynamically use the underlying GPU resources. You also need to grant OSS permissions to the machine learning platform as follows.

How to use Alibaba Cloud advanced machine learning platform for AI (PAI) to quickly apply the linear regression model in machine learning to properly solve business-related prediction problems.
