
By GarvinLi

In this article, Alibaba technical expert Aohai introduces online service orchestration and architecture, specifically the architecture of the online inference service and the online implementation of multi-goal recommendation.

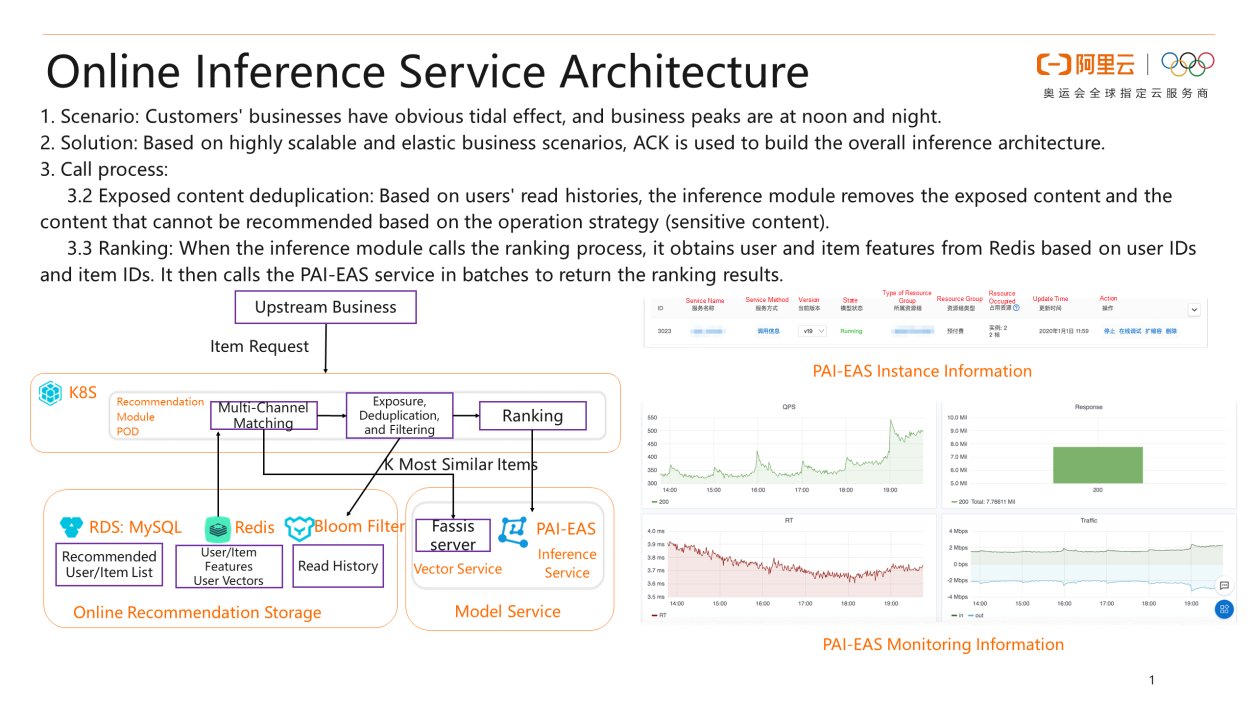

In the first two articles of this series on building an enterprise-level recommender system, we introduced the algorithms and architectures of the matching and ranking modules. In this article, we describe how to orchestrate the results of the matching and ranking algorithms and apply the trained models to online business scenarios. First, let's look at the overall framework. Business scenarios, especially Internet recommendation services, typically see peak traffic at noon and in the evening. Therefore, you need an elastic mechanism to reduce resource consumption. For example, you might need 10 servers at peak hours but only one server at off-peak hours. Without an elastic mechanism, you have to purchase 10 servers up front to handle peak traffic, even though most of them sit idle outside peak hours. With cloud services, you can scale elastically instead, and because you no longer need to keep 10 servers running around the clock, costs can drop substantially. For this reason, the service orchestration phase of a recommendation service typically relies on a cloud architecture to cope with this tidal pattern of traffic.
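As a rough illustration of the elasticity argument above, the following sketch computes how many replicas a service needs from its observed load. It follows the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current × currentMetric / targetMetric)) but omits the HPA's tolerance band and stabilization windows. The function name, the QPS-per-replica metric, and the 1 to 10 replica bounds are illustrative assumptions, not an ACK or PAI API.

```python
import math


def desired_replicas(current_replicas: int,
                     current_qps_per_replica: float,
                     target_qps_per_replica: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Proportional scaling rule (HPA-style, without tolerance or
    stabilization): scale so that each replica serves roughly the
    target QPS, then clamp the result to [min_replicas, max_replicas]."""
    if target_qps_per_replica <= 0:
        raise ValueError("target_qps_per_replica must be positive")
    raw = math.ceil(current_replicas * current_qps_per_replica
                    / target_qps_per_replica)
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical target of 500 QPS per replica.
print(desired_replicas(2, 2500, 500))  # peak: scale out to 10
print(desired_replicas(10, 40, 500))   # off-peak: scale in to 1
```

With these hypothetical numbers, two replicas each seeing 2,500 QPS at peak scale out to 10, while 10 replicas each seeing 40 QPS off-peak scale in to 1, which matches the 10-servers-versus-1-server example in the text.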

To build the complete matching and ranking process for highly scalable and elastic business scenarios, we use Alibaba Cloud Container Service for Kubernetes (ACK) to host the entire inference architecture. The process includes the following steps: (1) Multi-channel matching: The inference module uses item-based collaborative filtering, semantic matching, hot-item matching, and operation-strategy matching to retrieve thousands of candidates. (2) Exposed content deduplication: Based on each user's read history, the inference module removes content that the user has already been shown, as well as content that the operation strategy marks as not recommendable. (3) Ranking: The inference module retrieves user and item features by user ID and item ID, and then calls the ranking model deployed on Elastic Algorithm Service (EAS) of Machine Learning Platform for AI (PAI) in batches to obtain the ranking results. The right part of the following figure shows the online monitoring capability of the PAI-EAS online inference service. Based on these metrics, you dynamically scale the ranking model service out or in so that the response time (RT) stays low and the service sustains the queries per second (QPS) that your business requires. This is the complete online service orchestration framework.

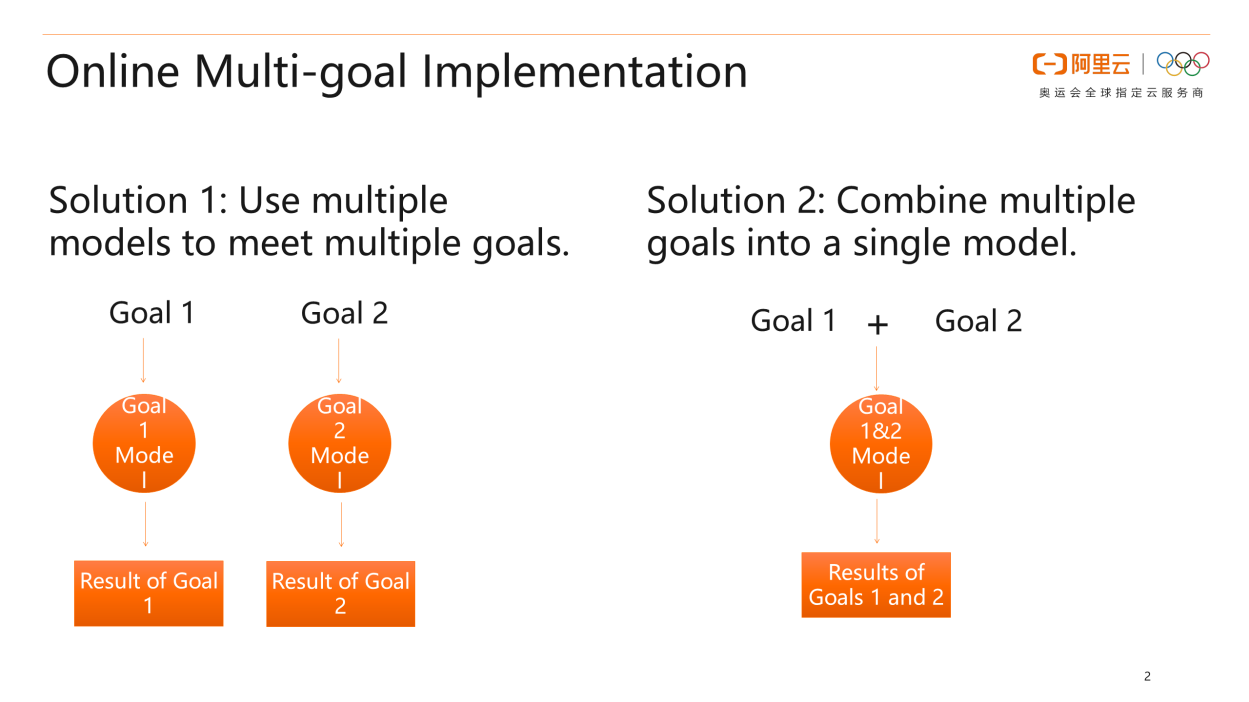
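The three steps of matching, exposed content deduplication, and ranking described above can be sketched as a single request handler. This is a minimal, hypothetical sketch: the channel functions, the `exposed` and `blocked` sets, and `rank_batch` are stand-ins for the real matching services, the read-history store, the operation-strategy rules, and the feature lookup plus batched call to the ranking model on PAI-EAS. It does not use any real EAS client API.

```python
from typing import Callable, Dict, List, Sequence, Set

# Hypothetical interfaces, not a real PAI-EAS API:
# a matching channel maps a user ID to candidate item IDs, and a ranker
# scores a batch of item IDs for a user (standing in for the feature
# lookup plus the batched call to the deployed ranking model).
Channel = Callable[[str], List[str]]
Ranker = Callable[[str, Sequence[str]], List[float]]


def recommend(user_id: str,
              channels: Dict[str, Channel],
              exposed: Set[str],
              blocked: Set[str],
              rank_batch: Ranker,
              batch_size: int = 100,
              top_k: int = 10) -> List[str]:
    # (1) Multi-channel matching: merge candidates, dropping items returned
    #     by several channels, while keeping first-seen order.
    candidates: List[str] = []
    seen: Set[str] = set()
    for channel in channels.values():
        for item in channel(user_id):
            if item not in seen:
                seen.add(item)
                candidates.append(item)

    # (2) Exposed content deduplication: remove items the user has already
    #     been shown and items the operation strategy forbids.
    candidates = [i for i in candidates if i not in exposed and i not in blocked]

    # (3) Ranking: score candidates in fixed-size batches, then keep top_k.
    scores: Dict[str, float] = {}
    for start in range(0, len(candidates), batch_size):
        batch = candidates[start:start + batch_size]
        for item, score in zip(batch, rank_batch(user_id, batch)):
            scores[item] = score
    return sorted(candidates, key=lambda i: scores[i], reverse=True)[:top_k]


# Usage with toy channels and a fake ranker:
channels = {
    "item_cf": lambda u: ["a", "b", "c"],
    "hot": lambda u: ["c", "d", "e"],
}
fake_scores = {"a": 0.1, "b": 0.9, "c": 0.5, "d": 0.7, "e": 0.3}
ranker = lambda u, items: [fake_scores[i] for i in items]
print(recommend("u1", channels, exposed={"b"}, blocked={"e"},
                rank_batch=ranker, batch_size=2))  # ['d', 'c', 'a']
```

Scoring in fixed-size batches keeps each request to the ranking service bounded in size; the default batch size of 100 is an arbitrary choice for this sketch.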

After the model is released, another issue arises: the architecture often has to serve multiple goals rather than a single one. Take article or video recommendation as an example. Most customers want users not only to click the recommended videos but also to watch them. Some videos have attractive titles but poor-quality content; users click them but do not keep watching, which makes for a poor user experience. The architecture may therefore need to meet multiple goals, such as click and viewing time in this example. To achieve multiple goals, we need to redesign the solution and re-orchestrate the matching logic for recommendation. We provide two solutions. The first solution uses one model per goal. For the two goals of click and viewing time, you can use one matching module for click and another for viewing time, and then merge the results of the two modules to obtain the final recommendation results. This solution is costly because you have to maintain two systems, and the weights for merging the two results are difficult to quantify. The second solution combines multiple goals into a single model. This solution is widely used and performs well. You merge the two goals into a single target: the click part is 0 if the user does not click and 1 if the user clicks, and the viewing time is normalized to a range of 0 to 1. Adding the two parts gives a single target whose value ranges from 0 to 2. You then train your matching and ranking models on this target to obtain the final results. With this solution, you only need to maintain one recommendation modeling process, and you obtain better performance than with the first solution.
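The fused target of the second solution can be written as a small labeling function. The article does not specify how viewing time is normalized, so this sketch assumes the watched fraction of the video (watch time divided by video length), clipped to [0, 1]. The function and parameter names are hypothetical.

```python
def fused_label(clicked: bool, watch_seconds: float, video_seconds: float) -> float:
    """Combine click and viewing time into one training target in [0, 2].

    Click contributes 0 or 1. Assumption: viewing time is normalized as the
    watched fraction of the video, clipped to [0, 1], and only counts when
    the user clicked.
    """
    if not clicked:
        return 0.0
    if video_seconds <= 0:
        raise ValueError("video_seconds must be positive")
    watch_ratio = min(max(watch_seconds / video_seconds, 0.0), 1.0)
    return 1.0 + watch_ratio


print(fused_label(False, 50, 100))  # 0.0: no click
print(fused_label(True, 30, 120))   # 1.25: clicked, watched a quarter
print(fused_label(True, 200, 120))  # 2.0: clicked, ratio clipped to 1
```

A clicked-but-abandoned video (such as one with an attractive title and poor content) lands near 1, while a clicked and fully watched video lands at 2, so a single model trained on this target learns to prefer the latter.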

Learn more about Alibaba Cloud Machine Learning Platform for AI (PAI) at https://www.alibabacloud.com/product/machine-learning

The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
