Change Data Capture (CDC) tracks data changes in the data sources. It synchronizes these changes to the target storage systems (such as a data lake or data warehouse) for data backup or subsequent analysis. We can perform synchronization by the minute/hour/day or even in real-time. CDC solutions are of two types: an intrusive manner and a non-intrusive manner.

In an intrusive manner, we can request the data source system directly. For example, reading data through Java Database Connectivity (JDBC). However, it puts performance pressure on the data source system. Common solutions are as follows.

Last Modified: The source table must contain a time modification column, and users must specify a last modified time parameter to synchronize the modified data after the specified time point. Last Modified method cannot synchronize the deletion record changes. Users can only record the last change when there are more than two record changes.

Auto-increment ID Column: The source table must have an auto-increment ID column. Users must specify the maximum ID value for the last synchronization to synchronize the new record row after the last synchronization. This method cannot synchronize the deletion record changes, and perceiving former records changes is also impossible.

In a non-intrusive manner, we can record data changes of the data source in logs, such as the database binlog, which must activate in the source database beforehand. binlog will record every operation (e.g., insertion, update, and deletion) in the data source to track data insertions, data deletions, multiple data updates, and Data Definition Language (DDL) operations in real-time.

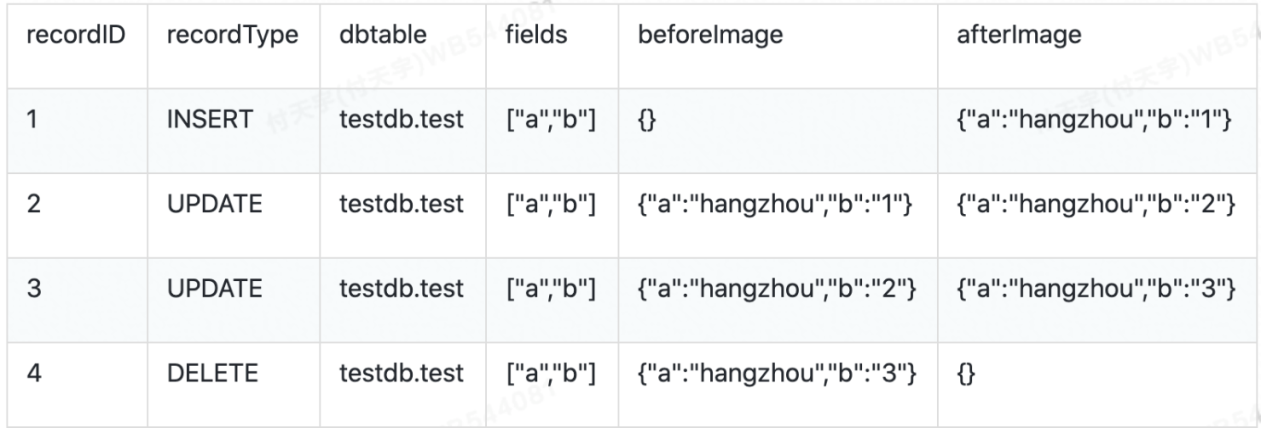

Example:

insert into table testdb.test values("hangzhou",1);

update testdb.test set b=2 where a="hangzhou";

update testdb.test set b=3 where a="hangzhou";

delete from testdb.test where a="hangzhou";

The system orderly puts logs of binlog back to the target storage to synchronize data export from the data source.

We can implement common open-source CDC solutions in two ways.

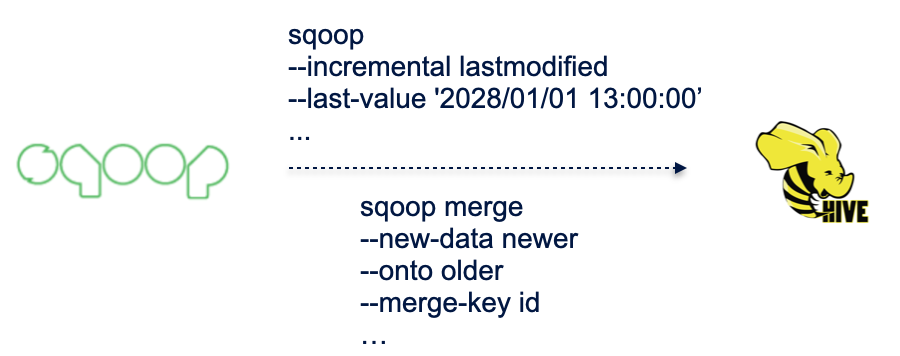

Sqoop is an open-source data synchronization tool that synchronizes database data to HDFS or Hive, supporting full synchronization and incremental synchronization. Users can configure a scheduling task to synchronize data by the hour/day.

Sqoop incremental synchronization is an intrusive manner CDC solution that supports Last Modified mode and Append mode.

Disadvantages:

Users can use some tools to synchronize binlog logs in real-time with Message-oriented Middleware (MOM), such as Kafka. Then, streaming engines, such as Spark and Flink, put the binlog logs in real-time back to target storage systems such as Kudu and HBase.

Disadvantages:

The two common CDC solutions above have their shortcomings. Alibaba Cloud E-MapReduce team offers a new CDC solution, using proprietary Streaming SQL and Delta Lake to realize the real-time CDC synchronization in a data lake. Implementing this solution through the latest Data Lake Formation (DLF) service from Alibaba Cloud can be done for an all-in-one experience during data synchronization in a data lake.

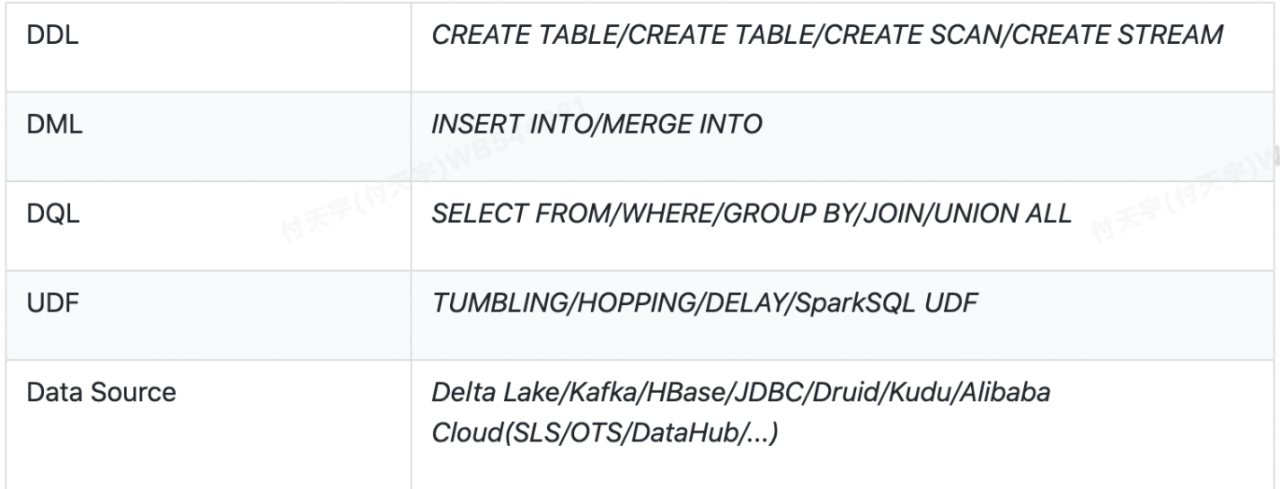

Spark Streaming SQL provides SQL capability through Spark Structured Streaming to reduce the threshold for real-time business development, simplifying the offline real-time business.

Spark Streaming SQL supports the following syntax:

Take SLS real-time consumption as an example.

# Create a loghub source table.

spark-sql> CREATE TABLE loghub_intput_tbl(content string)

> USING loghub

> OPTIONS

> (...)

# Create a delta target table.

spark-sql> CREATE TABLE delta_output_tbl(content string)

> USING delta

> OPTIONS

> (...);

# Create a streaming SCAN task.

spark-sql> CREATE SCAN loghub_table_intput_test_stream

> ON loghub_intput_tbl

> USING STREAM;

# Insert data from the loghub source table into the delta target table.

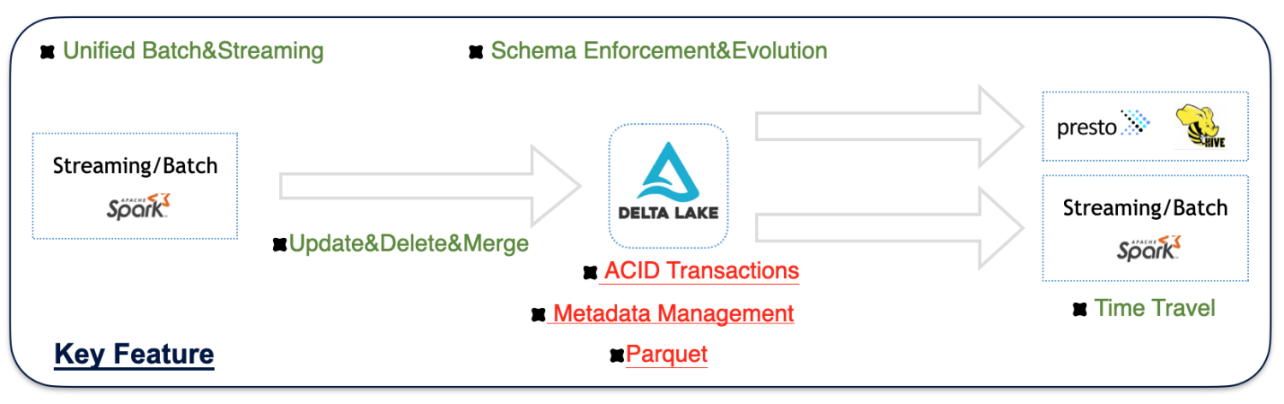

spark-sql> INSERT INTO delta_output_tbl SELECT content FROM loghub_table_intput_test_stream;Delta Lake is an open-source data lake format of Databricks. Based on the Parquet format, it provides capabilities in ACID transactions, metadata management, etc. With better performance than Parquet, it also supports diversified data application scenarios, such as data update and schema evolution.

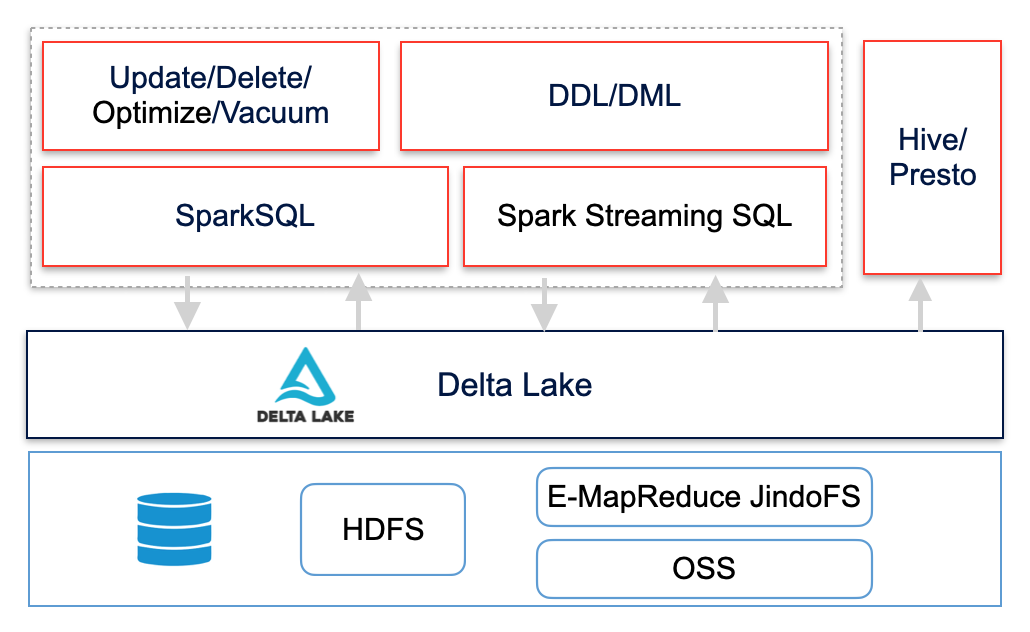

E-MapReduce team has made significant improvements in the function and performance based on open-source Delta Lake. For example, it supports the integration of small files in Optimize, Dataskiping, Zorder, and deep Delta integration in Spark SQL, Streaming SQL, Hive, and Presto.

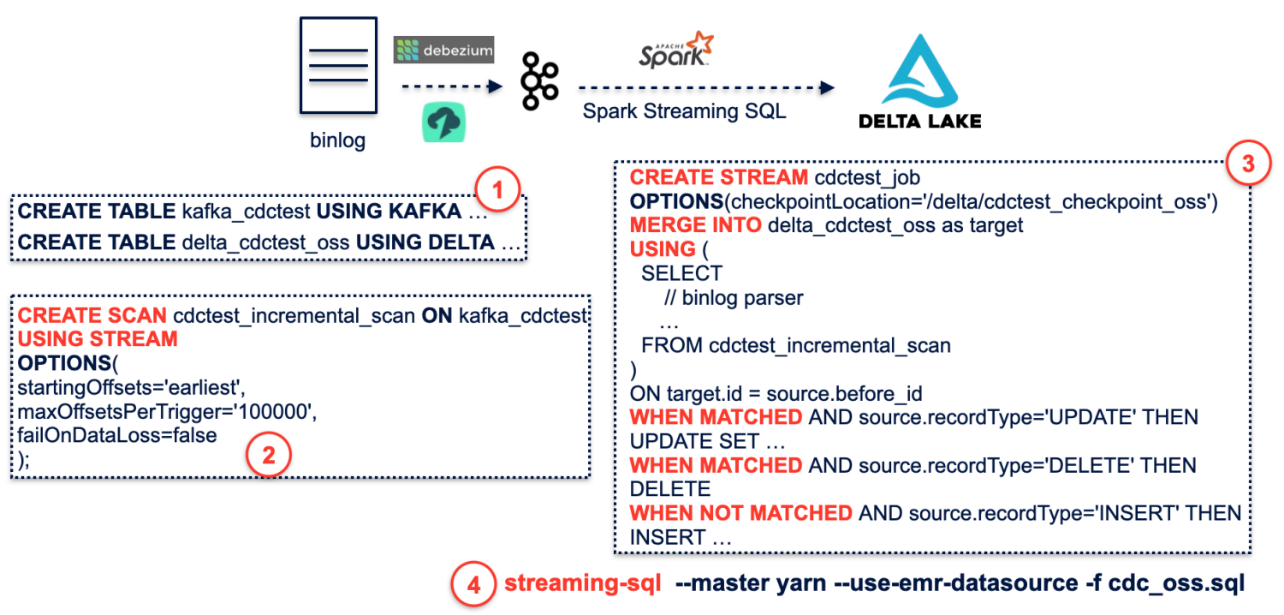

Spark Streaming SQL provides Merge Into syntax, which, together with the Delta Lake real-time writing capability, can realize the solution for real-time CDC synchronization in a data lake.

As the preceding figure shows, real-time CDC synchronization is possible through SQL. For more information, see E-MapReduce documentation.

The latest Data Lake Formation (DLF) service from Alibaba Cloud provides a complete solution for real-time CDC synchronization in a data lake.

How to Use JindoDistCp for Offline Data Migration to a Data Lake

An Overview of Alibaba Cloud's Comprehensive Cloud-Native Data Lake System

62 posts | 7 followers

FollowAlibaba EMR - July 20, 2022

Alibaba EMR - October 12, 2021

Alibaba EMR - June 8, 2021

Apache Flink Community - August 21, 2025

Alibaba EMR - May 10, 2021

Hologres - December 2, 2022

62 posts | 7 followers

Follow Data Lake Formation

Data Lake Formation

An end-to-end solution to efficiently build a secure data lake

Learn More Data Lake Storage Solution

Data Lake Storage Solution

Build a Data Lake with Alibaba Cloud Object Storage Service (OSS) with 99.9999999999% (12 9s) availability, 99.995% SLA, and high scalability

Learn More Data Lake Analytics

Data Lake Analytics

A premium, serverless, and interactive analytics service

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn MoreMore Posts by Alibaba EMR