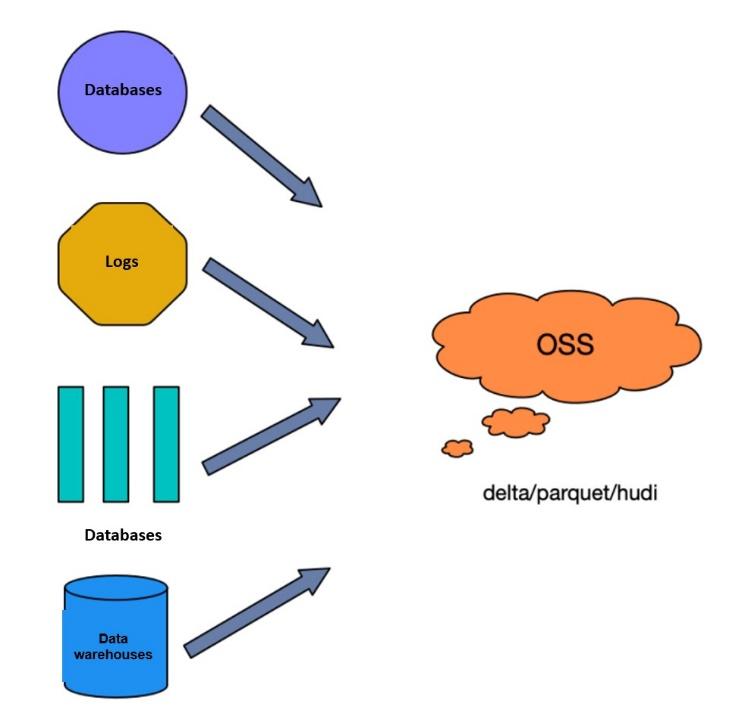

A data lake is a centralized storage repository that supports structured, semi-structured, and unstructured data. Data sources include database data, binlog incremental data, log data, and historical data in existing data warehouses. A data lake stores and manages all of this data centrally in cost-effective object storage such as Object Storage Service (OSS), and it provides a unified data analysis method for external systems. This effectively addresses the problems of "data islands" and high data storage costs for enterprises.

When building a data lake, enterprises find it difficult to migrate data from heterogeneous data sources into centralized data lake storage, because the data sources are so varied. A complete, all-in-one lake migration solution needs to address the following aspects:

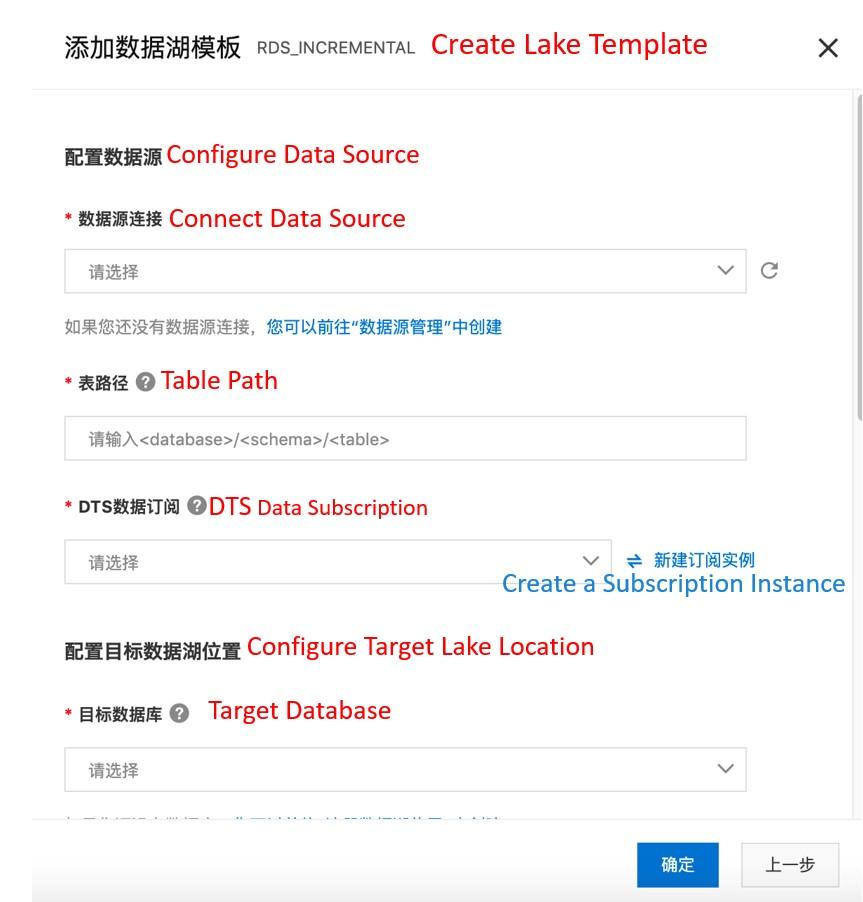

A simple and unified solution enables easy data migration to the lake. You can implement lake migration of data from heterogeneous data sources through simple page configurations.

For data from sources such as logs and binlogs, lake migration must keep latency at the minute level to meet the timeliness requirements of real-time interactive analysis.

For data sources such as databases and Tablestore tunnels, the source data may change frequently because of update and delete operations and changes to the field structure of the schema. File formats that handle these changes well are therefore needed.

Alibaba Cloud has launched the Data Lake Formation (DLF) service, which provides a complete lake migration solution.

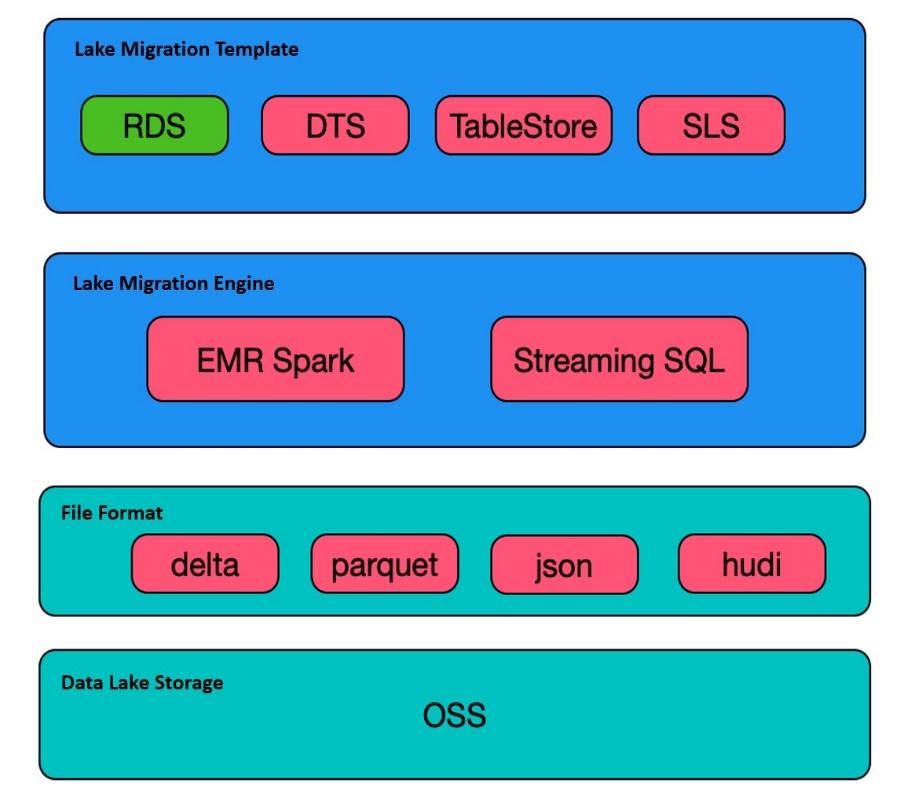

The following figure shows the technical architecture of the DLF lake migration solution:

The lake migration solution consists of four parts: lake migration templates, the lake migration engine, file formats, and data lake storage.

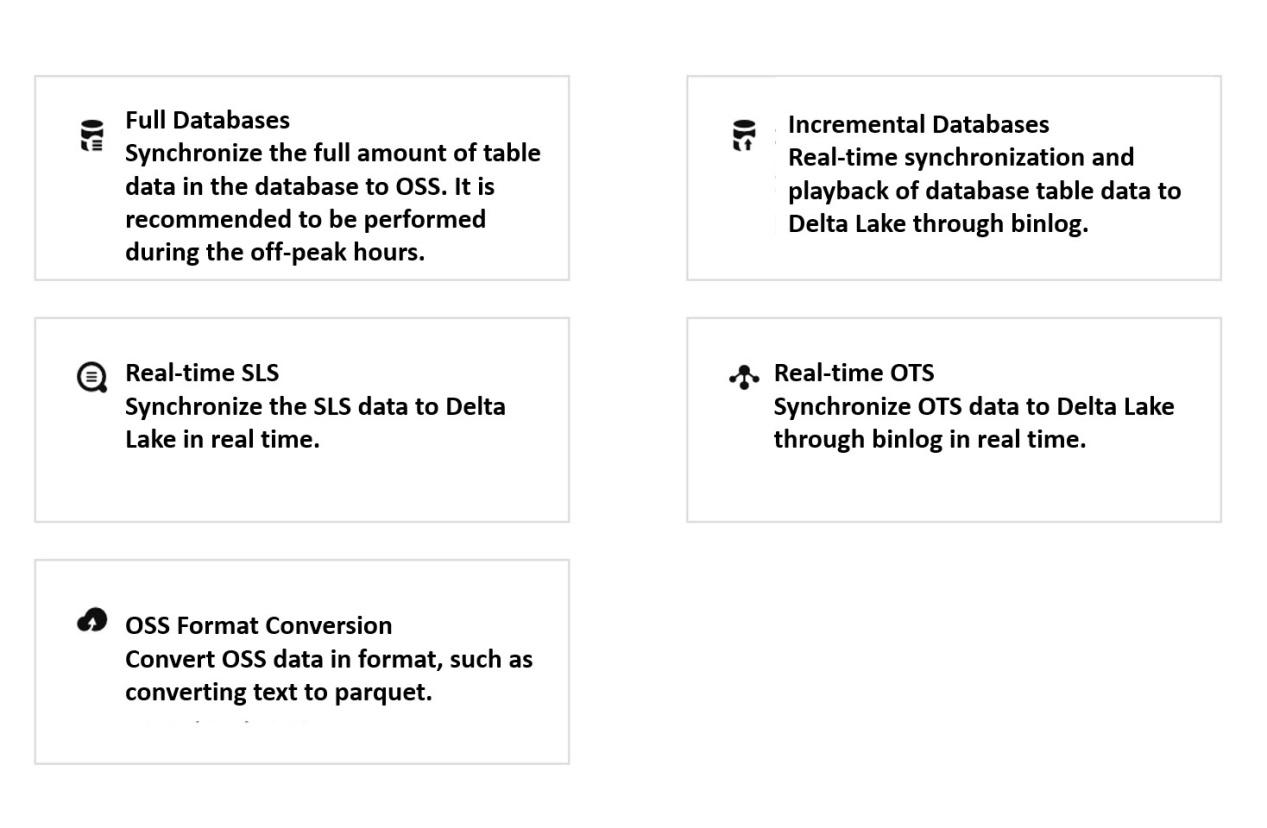

The lake migration template defines common migration methods. Currently, there are five templates: the RDS full template, the DTS incremental template, the Tablestore template, the SLS template, and the file format conversion template.

You can select the corresponding template according to different data sources, then fill in the source-related parameters to complete the template creation, and finally, submit it to the lake migration engine for running.

The lake migration engine uses Spark Streaming SQL and the EMR Spark engine, both developed by the Alibaba Cloud E-MapReduce (EMR) team. Streaming SQL is based on Spark Structured Streaming and provides a complete set of Streaming SQL syntax, which reduces the development cost of real-time computing. For real-time incremental templates, the upper-layer template is translated into Streaming SQL and submitted to the Spark cluster for running. The Merge Into syntax is also extended in Streaming SQL to support update and delete operations. Relational Database Service (RDS) and other full templates are translated directly into Spark SQL for running.
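As a rough illustration of the routing described above, the sketch below maps each template to the SQL dialect it would be translated into. The template names come from this article. The `TEMPLATE_IS_REALTIME` table, the `choose_engine` function, and the classification of the Tablestore, SLS, and file format conversion templates are assumptions made for illustration, not DLF APIs.

```python
# Hypothetical lookup table: True means the template is treated as a
# real-time incremental template. Only "RDS full" (full) and
# "DTS incremental" (incremental) are classified explicitly by the article;
# the other three entries are assumptions.
TEMPLATE_IS_REALTIME = {
    "RDS full": False,
    "DTS incremental": True,
    "Tablestore": True,
    "SLS": True,
    "file format conversion": False,
}


def choose_engine(template: str) -> str:
    """Return the SQL dialect a template would be translated into."""
    if template not in TEMPLATE_IS_REALTIME:
        raise ValueError(f"unknown template: {template}")
    # Real-time incremental templates go to Streaming SQL,
    # full templates go to plain Spark SQL.
    return "Streaming SQL" if TEMPLATE_IS_REALTIME[template] else "Spark SQL"
```

For example, `choose_engine("DTS incremental")` selects Streaming SQL, while `choose_engine("RDS full")` selects Spark SQL.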

DLF supports several file formats, including Delta Lake, Parquet, and JSON, as well as formats such as Hudi. Delta Lake and Hudi provide good support for update and delete operations and for schema merge, which makes them effective for handling changes in real-time data sources.

OSS stores the data in the data lake. OSS can store large amounts of data and offers advantages in reliability and price.
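To make the idea of schema merge concrete, here is a minimal sketch in plain Python. It is not how Delta Lake or Hudi implement schema evolution, which follow their own, richer rules. The functions `merge_schema` and `conform`, and the representation of a schema as a dict of column name to type name, are hypothetical and chosen only for illustration.

```python
def merge_schema(current: dict, incoming: dict) -> dict:
    """Return a schema with every column from both inputs.

    Columns keep their first-seen order. A column that appears in both
    schemas with different types is rejected in this simplified sketch.
    """
    merged = dict(current)
    for name, dtype in incoming.items():
        if name in merged and merged[name] != dtype:
            raise TypeError(
                f"column {name!r}: {merged[name]} conflicts with {dtype}"
            )
        merged[name] = dtype
    return merged


def conform(row: dict, schema: dict) -> dict:
    """Project a row onto a schema, filling missing columns with None."""
    return {name: row.get(name) for name in schema}
```

With this sketch, a source that starts emitting a new `email` column would widen the table schema, and rows written before the change would read back with `email` set to `None`.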

The all-in-one lake migration solution solves the problems above.

Through template configuration, you can realize a unified and simple way of lake migration.

You can complete lake migration with only minute-level latency by using the self-developed Streaming SQL, which meets the timeliness requirement.

File formats such as Delta Lake let you handle real-time data changes caused by update and delete operations more effectively.

With the continuous development of big data, users' demand for data timeliness is increasing. Thus, real-time lake migration of data is critical. Currently, Alibaba Cloud supports real-time lake migration of data from Data Transmission Service (DTS), Tablestore, and Log Service (SLS).

Alibaba Cloud offers a highly reliable data transmission service, DTS. It supports the subscription and consumption of incremental data from different types of databases. Alibaba Cloud supports lake migration of DTS data that is subscribed to in real time. You can perform lake migration through existing subscription channels or through automatically created subscription channels. This greatly reduces the configuration costs for your business.

Incremental data is applied to historical data as updates and deletes, so data changes become visible within minutes. In terms of implementation, the "merge into" syntax in Streaming SQL is extended to connect to the corresponding APIs of Delta Lake.

```sql
MERGE INTO delta_tbl AS target
USING (
  SELECT recordType, pk, ...
  FROM {{binlog_parser_subquery}}
) AS source
ON target.pk = source.pk
WHEN MATCHED AND source.recordType = 'UPDATE' THEN
  UPDATE SET *
WHEN MATCHED AND source.recordType = 'DELETE' THEN
  DELETE
WHEN NOT MATCHED THEN
  INSERT *
```

The data lake-based solution offers more advantages than the traditional approach of migrating binlogs to a data warehouse. In a traditional data warehouse, two tables are usually maintained to store changing data such as database data: an incremental table that stores the daily log of database changes, and a full table that stores all merged historical data. The incremental table and the full table are then merged by primary key. The data lake-based solution is simpler and more timely.
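The row-level effect of the MERGE INTO statement above can be sketched in plain Python. This only illustrates the three clauses and is not how Delta Lake executes a merge. The `apply_changes` function, the dict-based table, and the `row` field are hypothetical. The sketch also applies records one at a time, whereas a real merge evaluates a whole batch against the target table at once.

```python
def apply_changes(table: dict, changes: list) -> dict:
    """Apply change records to a table keyed by primary key.

    Each change is a dict with "pk", "recordType", and "row" (the new
    column values). The input table is not modified.
    """
    result = dict(table)
    for rec in changes:
        pk, kind = rec["pk"], rec["recordType"]
        if pk in result:
            if kind == "UPDATE":
                # WHEN MATCHED AND recordType = 'UPDATE' THEN UPDATE SET *
                result[pk] = rec["row"]
            elif kind == "DELETE":
                # WHEN MATCHED AND recordType = 'DELETE' THEN DELETE
                del result[pk]
            # Matched records of any other type leave the row unchanged.
        else:
            # WHEN NOT MATCHED THEN INSERT * (no condition on recordType,
            # exactly as the statement is written)
            result[pk] = rec["row"]
    return result
```

Because each batch is merged directly into the single lake table, there is no separate full table and incremental table to reconcile later.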

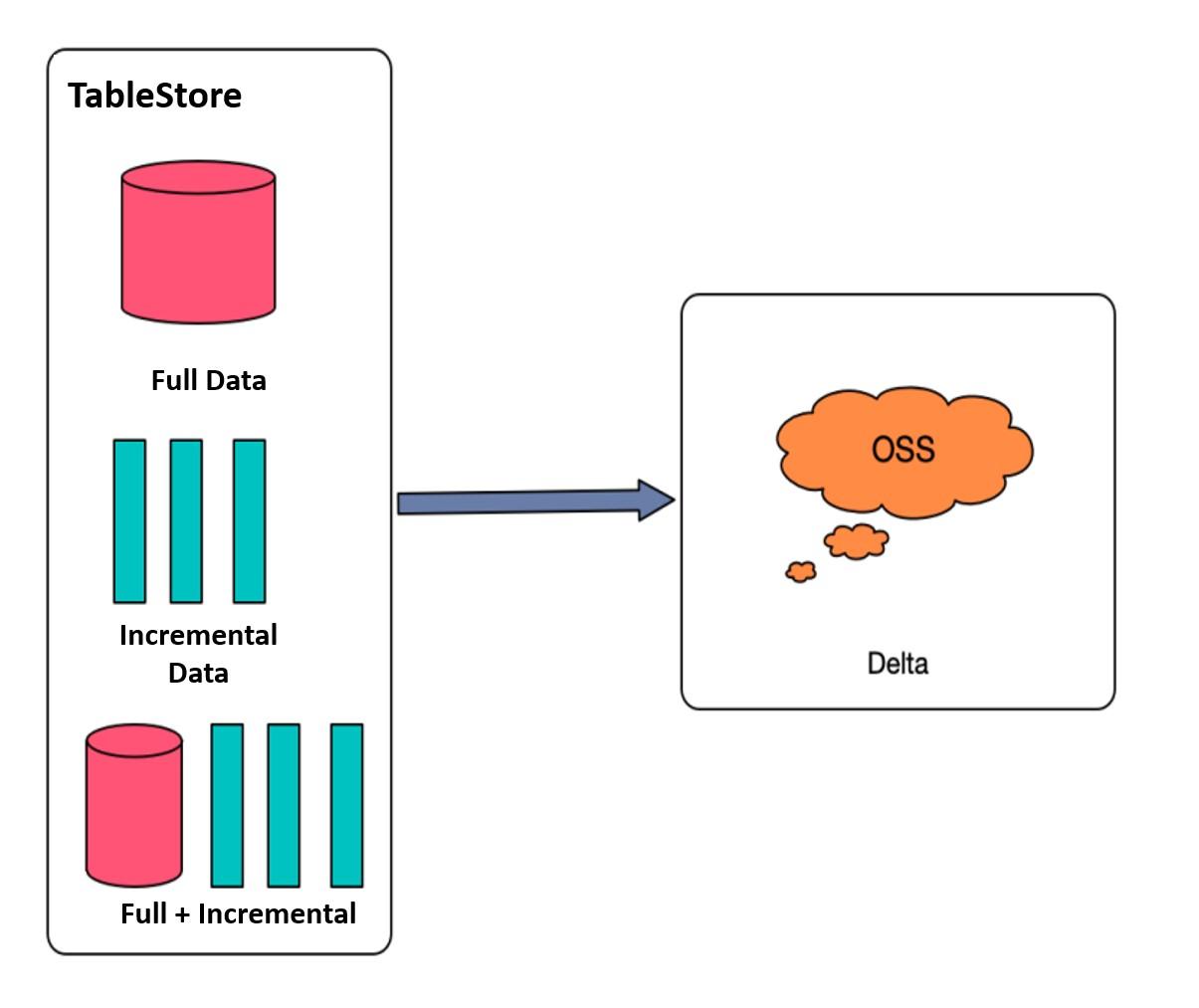

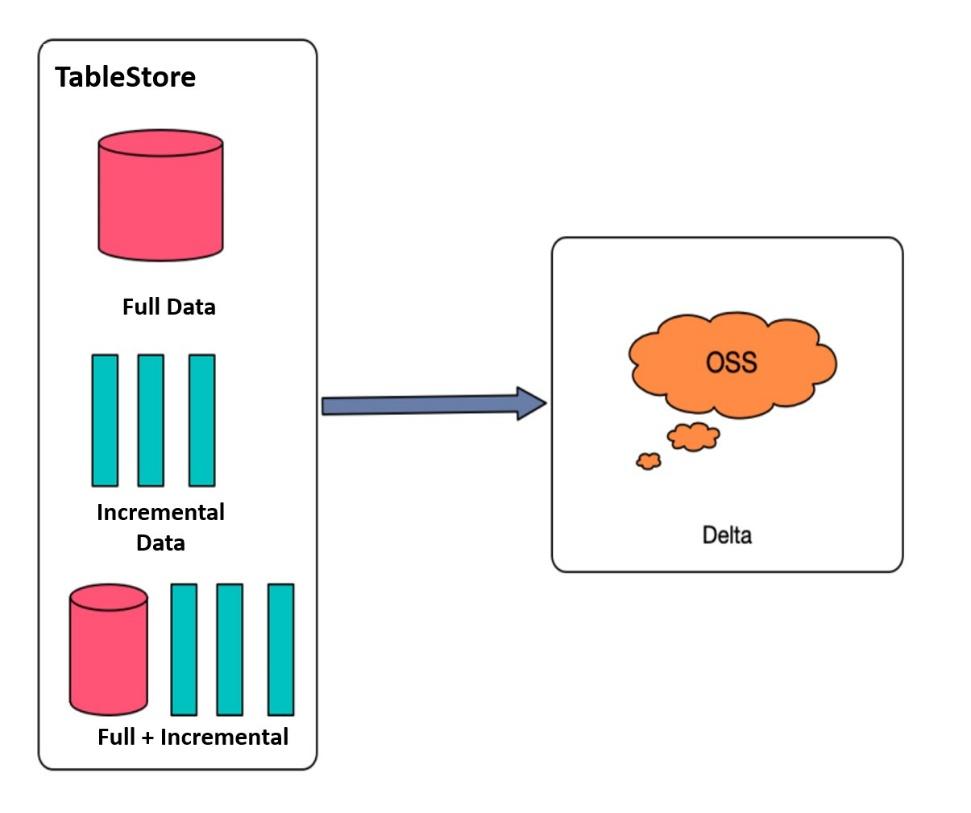

Alibaba Cloud provides a multi-model NoSQL database service, Tablestore. It can store a large amount of structured data and supports fast query and analysis. Tablestore also supports tunnels for consuming data changes in real time. DLF offers lake migration for Tablestore full tunnels, incremental tunnels, and full-incremental tunnels. A full tunnel contains all historical data, an incremental tunnel contains incremental changes, and a full-incremental tunnel contains both the full historical data and the incremental changes.
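The difference between the three types can be sketched as a choice of which records a consumer receives. This is a conceptual illustration only. The `records_for` function and the type labels are hypothetical and do not correspond to the Tablestore SDK.

```python
def records_for(tunnel_type: str, history: list, changes: list) -> list:
    """Return the records a consumer would see for a given type.

    history: rows that existed before consumption started.
    changes: change records that arrive afterwards.
    """
    if tunnel_type == "full":
        return list(history)
    if tunnel_type == "incremental":
        return list(changes)
    if tunnel_type == "full-incremental":
        # Historical data first, then the incremental changes.
        return list(history) + list(changes)
    raise ValueError(f"unknown tunnel type: {tunnel_type}")
```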

Alibaba Cloud provides an all-in-one service, SLS, for storing user log data. You can archive SLS log data to a data lake in real time for further analysis and processing to explore the full value of the data. To do so, use the lake migration template and fill in a small amount of information, such as the project and logstore.

The lake migration solution reduces the migration cost of data from heterogeneous data sources. It also satisfies the timeliness requirements of data sources such as SLS and DTS and supports real-time data source changes. You can uniformly store data from different data sources in the OSS-based centralized data lake storage. Thus, you can avoid the data island problem and build a solid foundation for unified data analysis.

In the future, the all-in-one lake migration solution from Alibaba Cloud will continue to provide improved functions and support more types of data sources. The migration templates will offer more functions, including customized data extract-transform-load (ETL) to improve flexibility. Moreover, continuous performance optimization will provide better timeliness and stability.
