
By Chen Xu, Senior Algorithm Architect of Mobvista and Product Leader of EnginePlus 2.0

This article introduces the exploration and practice of Mobvista in the field of cloud-native data lakes, as well as the architecture of StarLake.

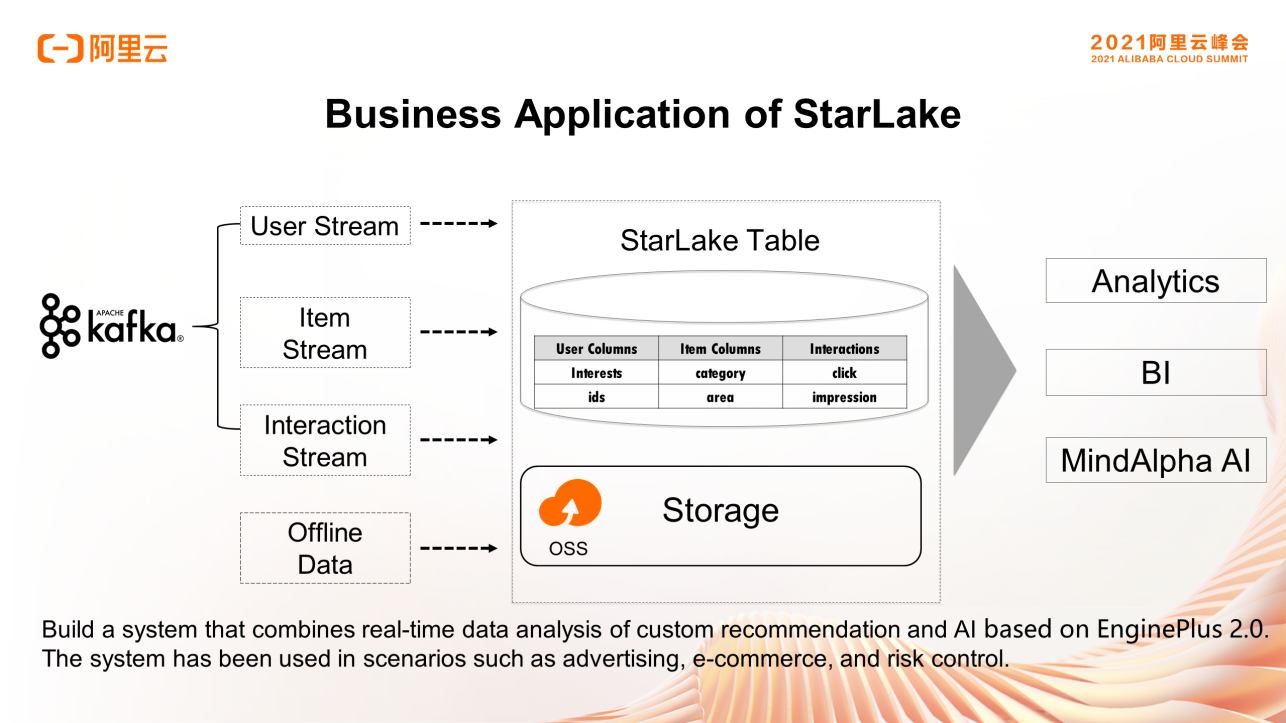

We have applied lake-warehouse integration in several business scenarios, such as the recommendation system, device management, and DMP. Along the way, we ran into problems with some open-source data lake components, which drove us to design a new data lake product called StarLake.

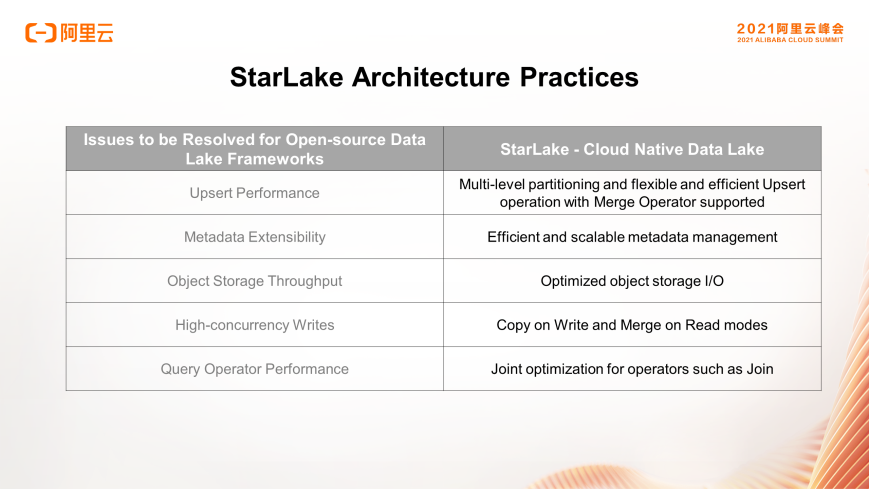

Specifically, the first problem to be solved is Upsert performance. Upsert needs to write data into tables in real time. Different columns and rows may be written by different streams, possibly concurrently, which is quite different from the general ETL process. Traditional frameworks are not well optimized for this workload, whereas StarLake was designed specifically for Upsert performance.

The second problem is the scalability of metadata. When the number of small files reaches a threshold, for example, hundreds of millions to billions, metadata scalability problems may occur. StarLake uses distributed databases to solve this problem.

The third problem is the throughput of object storage. Data lake frameworks such as Hive generally do not optimize for throughput and were not designed with cloud object storage in mind. StarLake, by contrast, was designed to improve object storage throughput.

The fourth problem is high-concurrency writing. High-frequency writes need to be supported to update a table in multiple concurrent streams in real-time. In addition, modes like Copy on Write and Merge on Read also need to be supported. In each mode, different data optimizations are provided to improve the real-time data ingestion performance.

The last problem is about partition modes. Some of our partition modes are designed so that operators can be optimized jointly with the query engine.

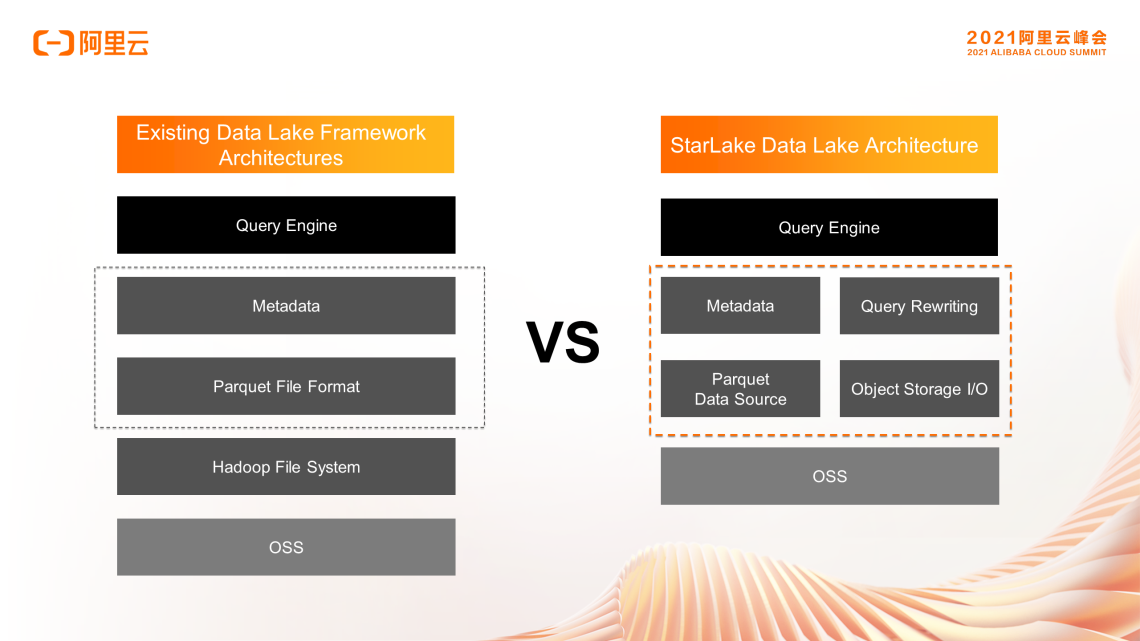

To achieve the optimization goal mentioned above, the architecture of the data lake framework must be different from the existing architecture.

Previously, data lakes focused on multi-version control and concurrency control in metadata management. Below that layer, each computing engine reads and writes data on its own. For example, reading a columnar file in Parquet format goes through the Hadoop File Format abstraction, which in turn reads and writes data in OSS. This is the design of conventional data lakes. When designing StarLake, we found that managing metadata alone is not enough. Metadata, the query plan of the query engine, file parsing, and object storage access need to be connected. We can push down some information from metadata to the query plan and then to the file reads and writes. Finally, the file I/O layer prefetches data directly from the object storage. StarLake combines these four layers.

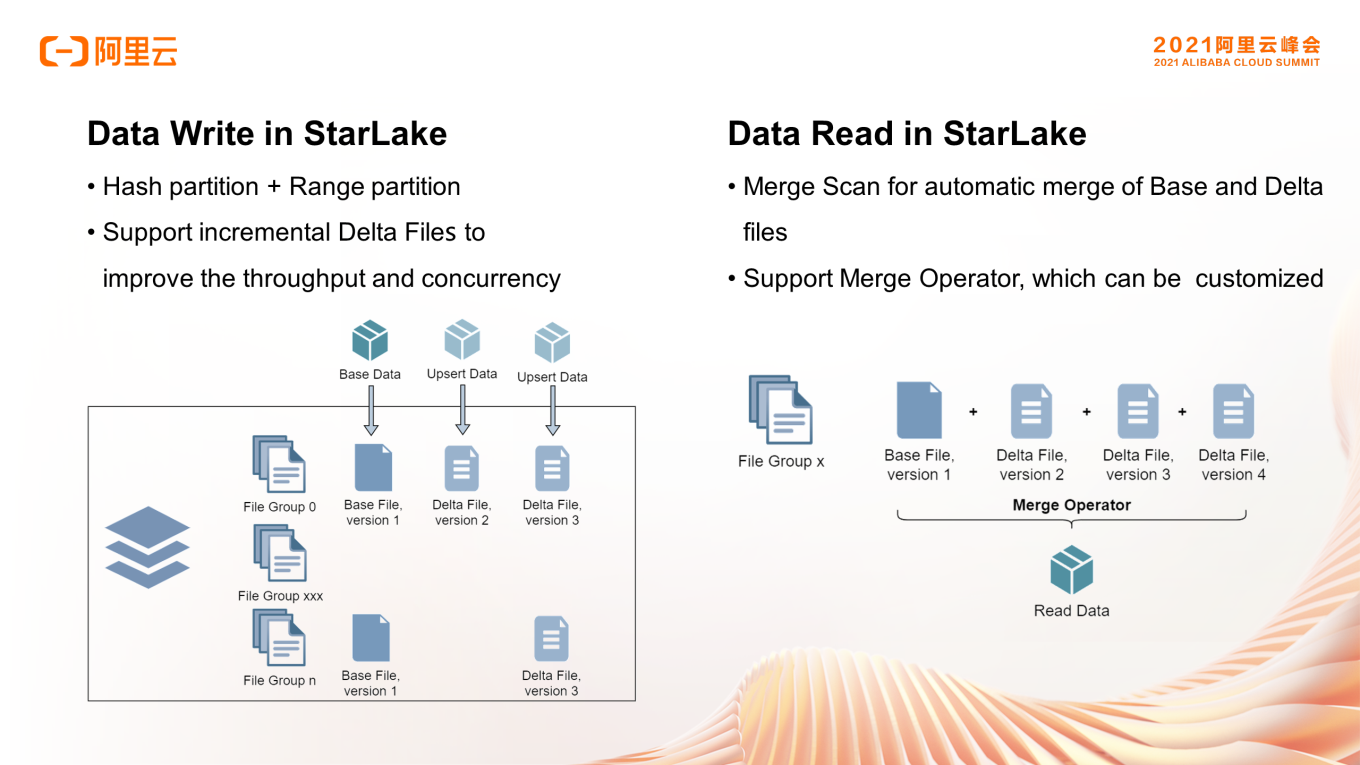

The first is the basic data storage model. One characteristic of StarLake is that it supports two partition modes, similar to HBase: Hash partitions and Range partitions, which can coexist in the same table. Data is distributed differently in each mode. For example, with Hash partitioning, data in each partition is already well distributed and is sorted within each file, which improves performance substantially.

The second is incremental writes, where StarLake is similar to other data lakes. The file of the first version is the baseline file, called the Base File, and the incremental files are Delta Files. During data writing, the metadata manages these files as a file group. The advantage is relatively high throughput and concurrency for incremental writes.
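The storage model described above can be illustrated with a minimal sketch. It is not StarLake's implementation: the bucket count, the `crc32` hash, and the names `FileGroup`, `bucket_of`, and `write_batch` are all assumptions made for illustration, and in-memory lists stand in for Parquet files.

```python
import zlib
from dataclasses import dataclass, field

NUM_BUCKETS = 4  # hypothetical bucket count, chosen only for the example


def bucket_of(key: str, num_buckets: int = NUM_BUCKETS) -> int:
    """Stable hash so the same key always lands in the same bucket."""
    return zlib.crc32(key.encode("utf-8")) % num_buckets


@dataclass
class FileGroup:
    """One hash bucket: a base file plus delta files, each sorted by key."""
    base: list = field(default_factory=list)
    deltas: list = field(default_factory=list)


def write_batch(groups: dict, rows: list) -> None:
    """Add one sorted file per touched bucket; existing files are never rewritten."""
    per_bucket = {}
    for key, value in rows:
        per_bucket.setdefault(bucket_of(key), []).append((key, value))
    for bucket, bucket_rows in per_bucket.items():
        sorted_file = sorted(bucket_rows, key=lambda kv: kv[0])
        group = groups.setdefault(bucket, FileGroup())
        if not group.base:
            group.base = sorted_file           # first version becomes the Base File
        else:
            group.deltas.append(sorted_file)   # later versions become Delta Files
```

Because a write only sorts the new batch and adds files, its cost depends on the size of the increment rather than on the amount of historical data.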

However, data lakes have two modes for handling updates: Copy on Write and Merge on Read. Copy on Write mainly suits low-frequency updates. Merge on Read makes writes fast, but the overhead of merging data may be incurred at read time.

To solve this problem, we rewrote the physical plan layer for data reads as a Merge Scan, which merges the Base File and Delta Files automatically. This differs from other data lake frameworks, where the computing engine merges the files. StarLake does this directly at the file reading layer. For merging files in Hash partitions, we designed the Merge Operator. An Upsert scenario often requires that data be updated rather than simply overwritten. For example, an accumulated value may need to keep accumulating instead of being replaced by the new value. The Merge Operator lets users customize how values are combined at read time: it overwrites by default, and users can implement custom logic, such as value summation or string concatenation, which can save a large amount of computing resources. The implementation of the Merge Operator draws on traditional database analysis engines. The difference is that we implement it in a data lake framework.
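A rough sketch of merge-on-read with per-column merge operators is shown below. It assumes each file is a list of `(key, {column: value})` rows sorted by key and that files are ordered oldest first. The function names `merge_scan`, `overwrite`, and `add` are hypothetical and are not StarLake's API.

```python
import heapq
from itertools import groupby
from operator import itemgetter


def overwrite(old, new):
    """Default merge operator: the newest value wins."""
    return new


def add(old, new):
    """Custom merge operator: accumulate instead of overwriting."""
    return old + new


def merge_scan(base, deltas, merge_ops, default_op=overwrite):
    """Merge sorted base and delta files into one row per key.

    Each file is a list of (key, {column: value}) sorted by key.
    Files are given oldest first, so later files are applied last.
    """
    files = [base, *deltas]
    # Tag each row with its file index so equal keys come out oldest first.
    tagged = (
        [(key, idx, row) for key, row in f] for idx, f in enumerate(files)
    )
    merged = heapq.merge(*tagged, key=itemgetter(0, 1))
    for key, versions in groupby(merged, key=itemgetter(0)):
        result = {}
        for _, _, row in versions:
            for col, value in row.items():
                if col in result:
                    op = merge_ops.get(col, default_op)
                    result[col] = op(result[col], value)
                else:
                    result[col] = value
        yield key, result
```

For example, calling `merge_scan(base, deltas, {"clicks": add})` sums the `clicks` column across versions while every other column keeps its most recent value. Since all inputs are already sorted, the merge is a single streaming pass with no extra sort.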

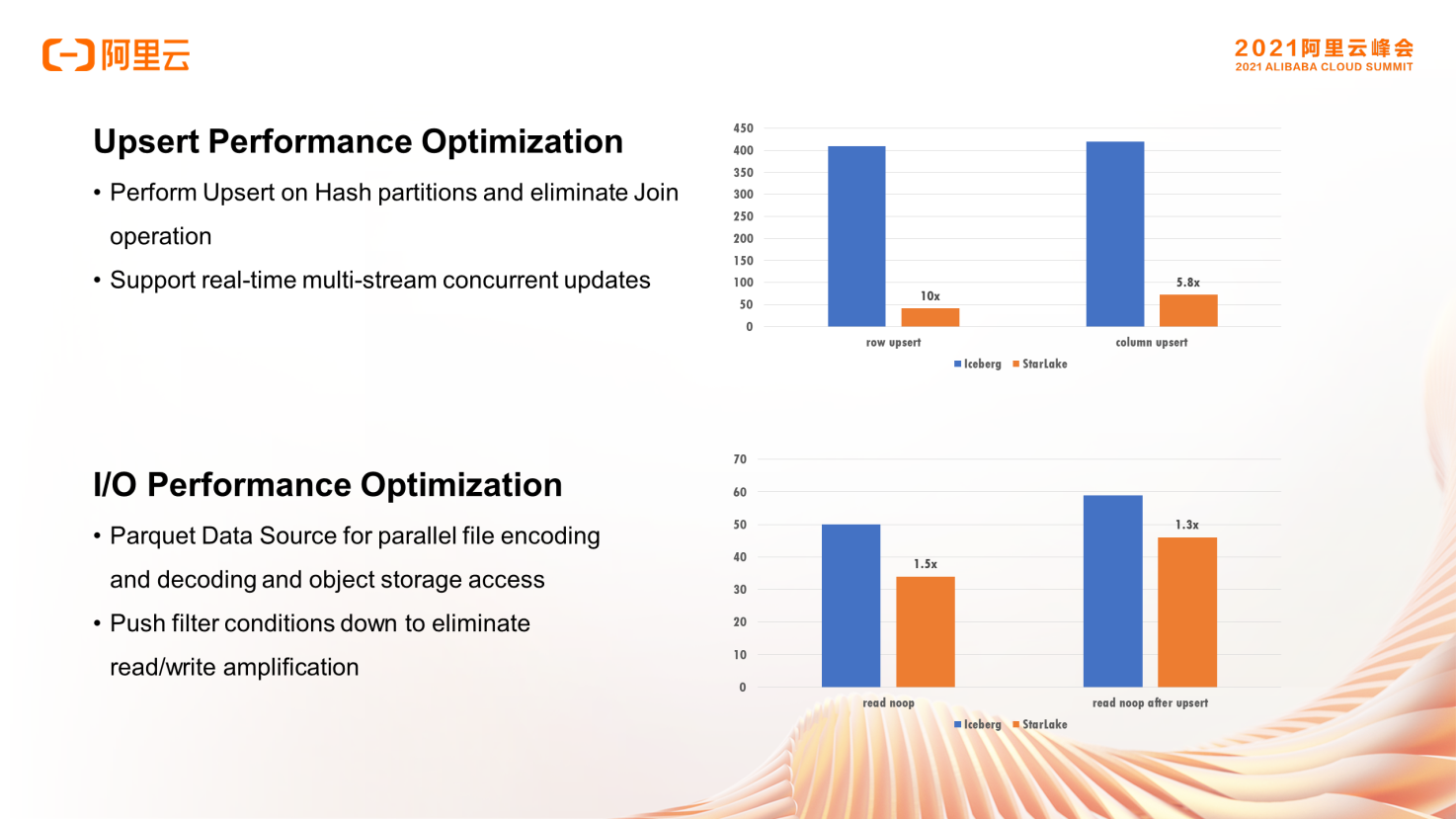

With multi-level partitioning, Upsert performance in Hash partitions is very good because the write overhead is very low. A write only needs to determine the Hash partition for each row and sort the data within the new file. Upsert touches only incremental data, not historical data, so there is no overhead for reading and rewriting historical data.

In our tests comparing StarLake with Iceberg, row-level updates like this were ten times faster in StarLake. This significant performance improvement allows StarLake to support multiple-stream concurrent updates easily.

The second part is the joint optimization of the file format layer and OSS access. The major difference between OSS and self-managed HDFS is that the access latency of OSS is relatively high. As a result, reading from OSS through the existing Hadoop File System abstraction usually incurs significant latency, and CPU usage stays low while data is being read. What we want is to overlap data reads with computation. However, prefetching is not feasible at the file system layer because Parquet is a columnar storage format: an analysis scenario may read only some of the columns, and a business query may read only one or two. The file system layer cannot know which data to prefetch. We implement prefetching in the Parquet Data Source instead, where all the pushdown conditions are available, so the required data can be prefetched in parallel. This improves performance without adding bandwidth overhead for OSS access. In our end-to-end test, read performance improved by two to three times.
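The idea of prefetching only the projected columns while earlier data is being processed can be sketched as follows. This is an illustration under stated assumptions, not StarLake's Parquet Data Source: `FAKE_STORE` is a hypothetical in-memory stand-in for OSS, `fetch_chunk` simulates one high-latency read, and `prefetch_depth` is an invented parameter.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in for an object store: (row_group, column) -> values.
FAKE_STORE = {
    (rg, col): [rg * 10 + i for i in range(3)]
    for rg in range(4)
    for col in ("user_id", "clicks", "payload")
}


def fetch_chunk(row_group, column, latency=0.05):
    """Simulate one high-latency ranged read of a single column chunk."""
    time.sleep(latency)
    return FAKE_STORE[(row_group, column)]


def scan(columns, row_groups, prefetch_depth=2):
    """Yield (row_group, {column: values}) while later row groups download.

    Only the projected columns are requested, so prefetching adds no
    bandwidth for columns the query never reads.
    """
    with ThreadPoolExecutor(max_workers=prefetch_depth * len(columns)) as pool:
        def submit(rg):
            return {c: pool.submit(fetch_chunk, rg, c) for c in columns}

        pending = [(rg, submit(rg)) for rg in row_groups[:prefetch_depth]]
        next_idx = prefetch_depth
        while pending:
            rg, futures = pending.pop(0)
            # Keep the pipeline full before blocking on the current row group.
            if next_idx < len(row_groups):
                nxt = row_groups[next_idx]
                pending.append((nxt, submit(nxt)))
                next_idx += 1
            yield rg, {c: f.result() for c, f in futures.items()}
```

While the caller processes one row group, the reads for the next ones are already in flight, which is how I/O and computation overlap. The column list is known only at the data source layer, which is why this cannot be done generically at the file system layer.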

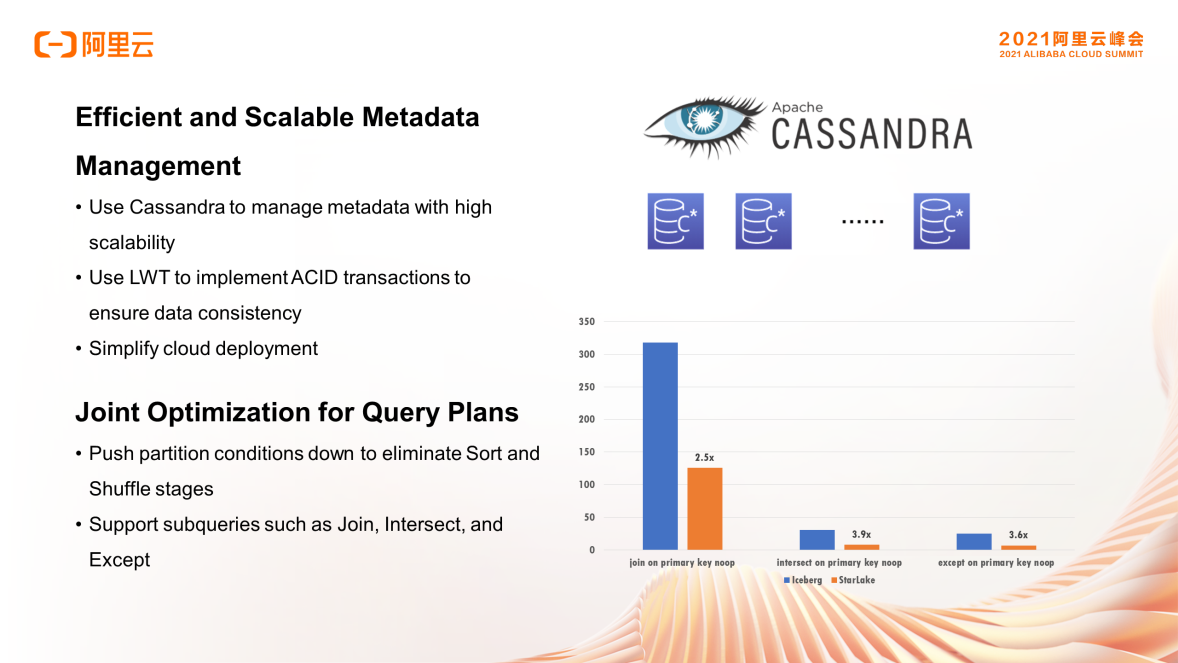

The following part describes how we scale metadata. Delta Lake and Iceberg mainly write metadata as files in the file system, which is equivalent to recording multi-version metadata. When a conflict occurs, the system rolls back and retries, so the efficiency is relatively low. Users also often run into another problem with data lakes: when there are many small files, performance may drop sharply, because the data lake needs to scan and merge a large number of small files in OSS through this metadata, which is very inefficient. Therefore, we use Cassandra to improve scalability. Cassandra is a distributed database with strong scalability. It provides lightweight transactions (LWT), which we use to implement ACID transactions under high concurrency and ensure data consistency. Cassandra is also relatively easy to maintain and manage because it is a decentralized database, and scaling is more convenient on the cloud.
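To make the role of a conditional write concrete, here is a small simulation of committing table versions with compare-and-set semantics. It is only a sketch: `MetaStore` is a hypothetical in-memory class whose `put_if_absent` mimics the behavior of a conditional insert (in CQL, `INSERT ... IF NOT EXISTS`), and the version scheme is an assumption rather than StarLake's actual schema or commit protocol.

```python
import threading


class MetaStore:
    """In-memory stand-in for a metadata table keyed by (table, version).

    put_if_absent mimics a conditional insert: for any given version,
    exactly one concurrent writer succeeds.
    """

    def __init__(self):
        self._lock = threading.Lock()
        self._commits = {}

    def latest_version(self, table):
        with self._lock:
            versions = [v for (t, v) in self._commits if t == table]
            return max(versions, default=0)

    def put_if_absent(self, table, version, files):
        with self._lock:
            if (table, version) in self._commits:
                return False
            self._commits[(table, version)] = list(files)
            return True


def commit(store, table, new_files, max_retries=100):
    """Claim the next version; on conflict, re-read the latest version and retry."""
    for _ in range(max_retries):
        version = store.latest_version(table) + 1
        if store.put_if_absent(table, version, new_files):
            return version
    raise RuntimeError("too many concurrent commit conflicts")
```

In this sketch a conflict costs only one extra metadata round trip, because the data files have already been written and only the version claim is retried.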

We also jointly optimize the metadata with the query plan. Range partitions and Hash partitions organize the data distribution in advance, and this organization information is pushed down to the query engine layer. For example, when Spark executes an SQL query, StarLake tells it that the query operates on the same Hash partition, so the Sort and Shuffle stages can be eliminated. Performance in scenarios such as Join and Intersect improves significantly.
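The reason Sort and Shuffle can be skipped is easiest to see in a toy join. The sketch below assumes both tables use the same hash function and bucket count, that each bucket is already sorted by key, and that keys are unique within a table. The names `merge_join` and `bucketed_join` are hypothetical, and the code illustrates the principle rather than Spark's or StarLake's planner.

```python
def merge_join(left, right):
    """Join two lists of (key, value), each sorted by unique key, in one linear pass."""
    i = j = 0
    out = []
    while i < len(left) and j < len(right):
        lk, lv = left[i]
        rk, rv = right[j]
        if lk == rk:
            out.append((lk, lv, rv))
            i += 1
            j += 1
        elif lk < rk:
            i += 1
        else:
            j += 1
    return out


def bucketed_join(left_buckets, right_buckets):
    """Join bucket i of one table only with bucket i of the other.

    Valid only when both tables share the same hash function and bucket
    count, so matching keys are guaranteed to share a bucket id.
    """
    out = []
    for bucket_id, left_rows in left_buckets.items():
        out.extend(merge_join(left_rows, right_buckets.get(bucket_id, [])))
    return out
```

No rows move between buckets and nothing is re-sorted, so each pair of buckets can be joined independently and in parallel.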

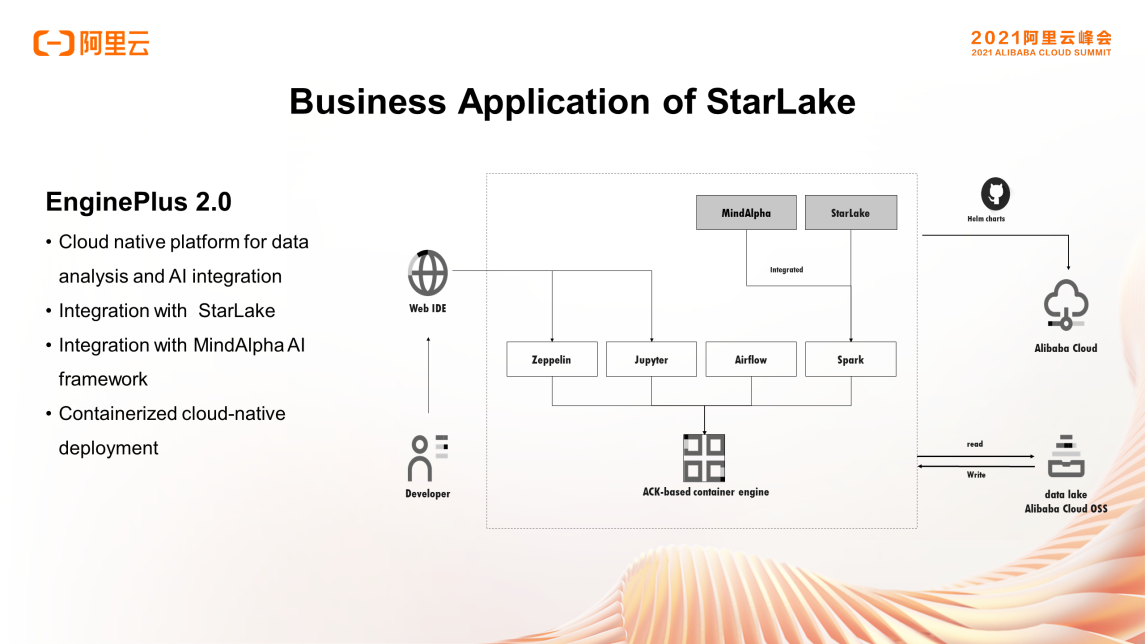

The following part describes some real application examples of StarLake. First, we built a cloud-native platform for data analysis and AI integration called EnginePlus. Its architecture is fully cloud-native, where computing components, including computing nodes and computing engines, are deployed in a containerized manner. Its storage is totally separated from computing and uses OSS. StarLake is used to obtain the capabilities of lake-warehouse integration. We have also integrated some AI components. Based on MindAlpha for cloud-native deployment and EnginePlus 2.0, StarLake can be deployed quickly with good elasticity.

⭐For open-source StarLake, please see this GitHub link
