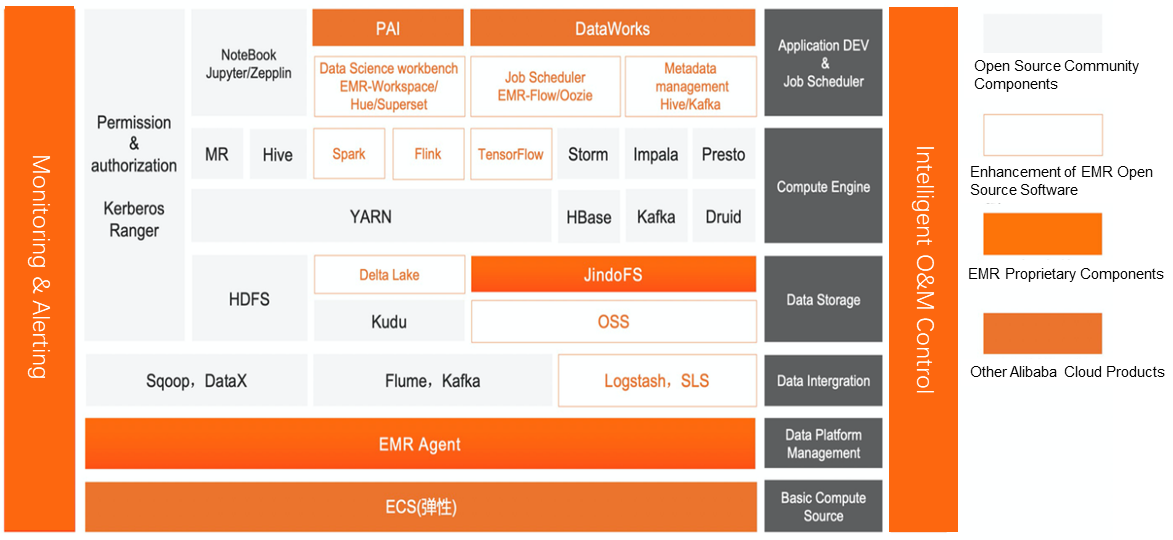

Figure 1 shows the E-MapReduce (EMR) architecture based on Elastic Compute Service (ECS). Built on the open-source big data ecosystem, the EMR architecture has been an indispensable big data solution for digital enterprises over the last decade. It has the following layers:

Each layer contains many open-source components, which together make up the classic big data solution that is the EMR architecture. Here are our further considerations about it:

Figure 1: Open-source big data solution based on ECS

Based on the considerations above, let's focus on the concept of cloud-native. Kubernetes is a promising implementation of cloud-native, so when this article mentions cloud-native, it effectively means Kubernetes. As Kubernetes becomes increasingly popular, many customers are showing interest in this technology. Most of them have already moved their online business to Kubernetes and, as part of that migration, want to build a unified and complete big data architecture on a similar operating system. We therefore summarize the following features:

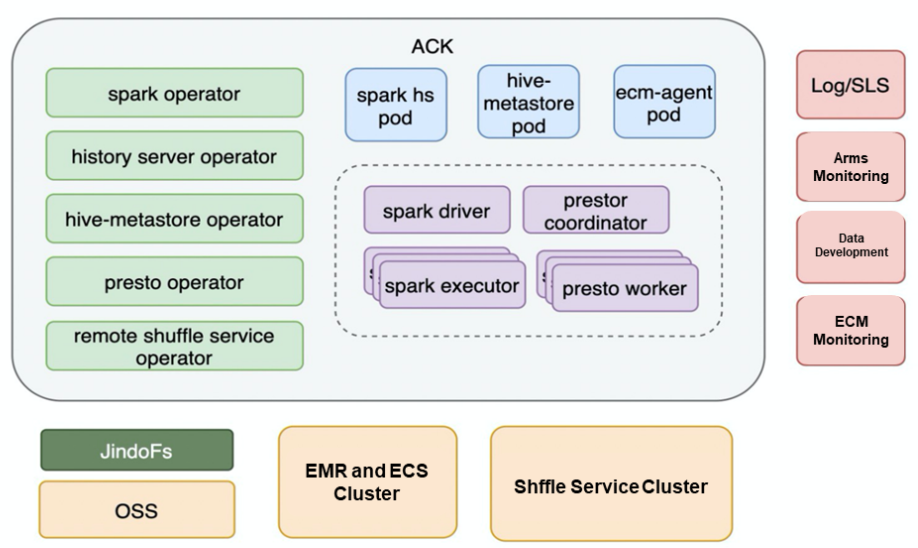

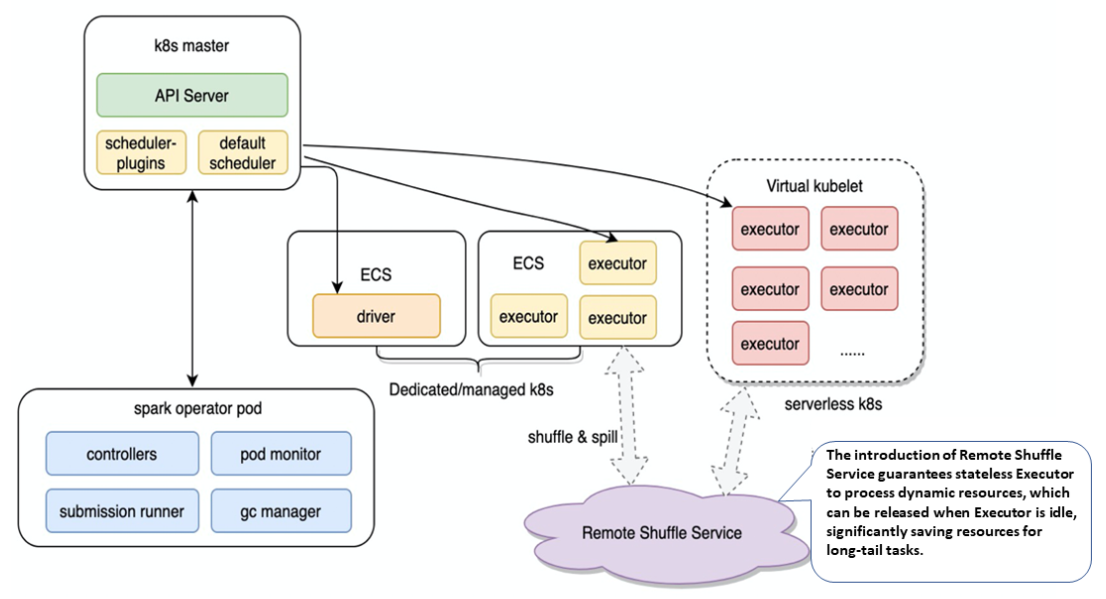

Figure 2 shows the architecture of the EMR compute engine on Container Service for Kubernetes (ACK). ACK is the Kubernetes solution of Alibaba Cloud and is compatible with the APIs of the Kubernetes community version. This article does not distinguish between ACK and Kubernetes; the two terms refer to the same concept.

Based on the initial discussion, we believe that the more promising batch processing engines for big data are Spark and Presto. We will gradually add other promising engines as we iterate on the ACK version.

The EMR compute engine provides products based on Kubernetes. Each product is essentially a combination of a Custom Resource Definition (CRD) and an Operator, which is also the basic cloud-native philosophy. We classify components into service components and batch components. Under this classification, Hive metastore is a service component and Spark is a batch component.
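The CRD-plus-Operator pattern can be illustrated with a minimal sketch. It is not the EMR operator: `SparkJobSpec`, `ClusterState`, and `reconcile` are hypothetical names, and the "cluster" is an in-memory dictionary rather than the Kubernetes API. The point is only that a custom resource declares the desired state and the operator repeatedly drives the observed state toward it.

```python
from dataclasses import dataclass, field


@dataclass
class SparkJobSpec:
    """Desired state, as a user might declare it in a custom resource (hypothetical fields)."""
    name: str
    executors: int


@dataclass
class ClusterState:
    """Observed state: executor pod names currently running, keyed by job name."""
    pods: dict = field(default_factory=dict)


def reconcile(spec: SparkJobSpec, cluster: ClusterState) -> list:
    """Drive the observed state toward the desired state and return the actions taken."""
    running = cluster.pods.setdefault(spec.name, [])
    actions = []
    # Too few executors: create pods until the desired count is reached.
    while len(running) < spec.executors:
        pod = f"{spec.name}-exec-{len(running)}"
        running.append(pod)
        actions.append(("create", pod))
    # Too many executors: delete the most recently created pods first.
    while len(running) > spec.executors:
        actions.append(("delete", running.pop()))
    return actions


if __name__ == "__main__":
    cluster = ClusterState()
    print(reconcile(SparkJobSpec("etl", 3), cluster))  # creates three executor pods
    print(reconcile(SparkJobSpec("etl", 3), cluster))  # nothing to do: already converged
    print(reconcile(SparkJobSpec("etl", 1), cluster))  # scales down to one executor
```

Calling `reconcile` again with an unchanged spec returns no actions, which is the idempotence that lets an operator run the same loop on every change event.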

The green parts in Figure 2 are operators, which improve on the open-source architecture in many aspects. They are also compatible with the basic modules of ACK at the product layer, which makes control operations in the cluster convenient. The right section of the figure contains the log, monitoring, data analytics, and ECM control components, which are infrastructure components of Alibaba Cloud. Let's discuss the features of the bottom part:

Presto is relatively easy to deploy in ACK because it has a stateless MPP architecture. This article therefore mainly discusses the Spark on ACK solution.

Figure 2: Kubernetes-based compute engine

Generally, Spark on Kubernetes faces the following challenges:

Let's look at the solutions that address these problems:

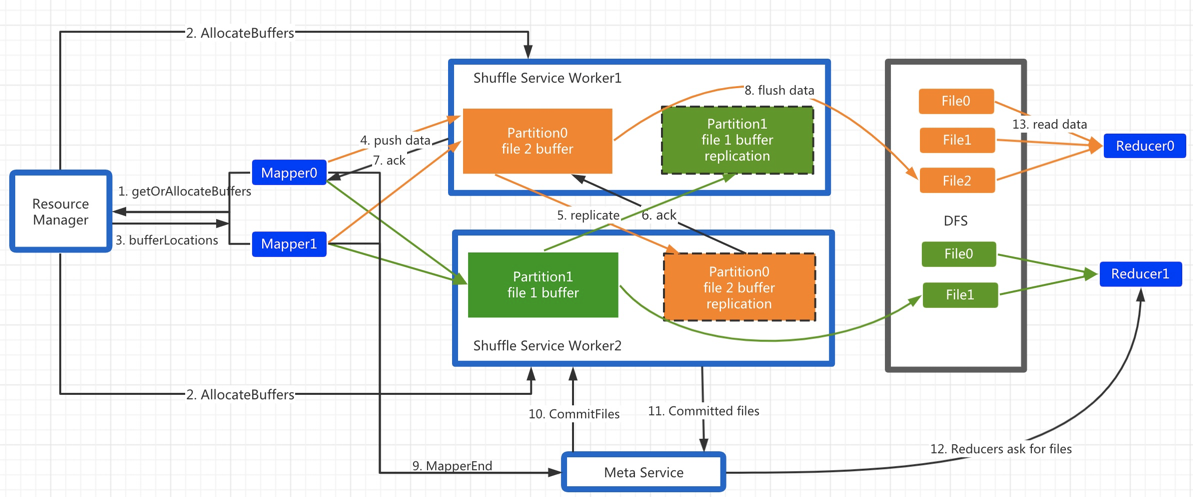

To solve the Spark Shuffle problem, we designed a Shuffle architecture that separates reads from writes, called Remote Shuffle Service. First, let's explore the possible reasons for not using cloud disks in Kubernetes:

Therefore, the remote Shuffle architecture can significantly optimize the existing Shuffle mechanism to solve this problem. Figure 3 shows many control flows, which we will not discuss in detail here. For more information about the architecture, see the article EMR Shuffle Service - a Powerful Elastic Tool of Serverless Spark. The focus here is the data flow. All Mappers and Reducers of the executors, marked in blue, run in Kubernetes containers. The middle of the figure is the Remote Shuffle Service. The Mappers write Shuffle data remotely into the service, which removes the dependency of executor tasks on the local disk. The Shuffle service merges the data that different Mappers write to the same partition and then writes the merged data into the distributed file system. In the Reduce stage, the Reducer reads these files sequentially, which improves performance. The major implementation difficulties of this system are the control flow design, fault tolerance in all aspects, data deduplication, and metadata management.
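The data flow described above can be sketched in a few lines. This is a toy, in-memory model and not the EMR implementation: `RemoteShuffleService`, `run_mapper`, and `read_partition` are hypothetical names, integer keys with a modulo partitioner are an assumption made for determinism, and a dictionary stands in for the distributed file system. Deduplication is reduced to ignoring a repeated push from the same Mapper to the same partition.

```python
from collections import defaultdict


class RemoteShuffleService:
    """Toy stand-in for the service: merges data per partition across all Mappers."""

    def __init__(self, num_partitions):
        self.num_partitions = num_partitions
        self._buffers = defaultdict(list)  # partition id -> merged records
        self._seen = set()                 # (mapper id, partition id) already accepted

    def push(self, mapper_id, partition, records):
        """Accept one Mapper's data for one partition; drop duplicates from retries."""
        if (mapper_id, partition) in self._seen:
            return False
        self._seen.add((mapper_id, partition))
        self._buffers[partition].extend(records)
        return True

    def commit(self):
        """Simulate the flush to a distributed file system: one merged file per partition."""
        return {p: list(self._buffers[p]) for p in range(self.num_partitions)}


def run_mapper(mapper_id, records, service):
    """Partition the Mapper's output and write it remotely instead of to local disk."""
    by_partition = defaultdict(list)
    for key, value in records:
        by_partition[key % service.num_partitions].append((key, value))
    for partition, chunk in by_partition.items():
        service.push(mapper_id, partition, chunk)


def read_partition(files, partition):
    """A Reducer reads its single merged file in one sequential pass."""
    return files[partition]


if __name__ == "__main__":
    service = RemoteShuffleService(num_partitions=2)
    run_mapper(0, [(1, "a"), (2, "b")], service)
    run_mapper(1, [(3, "c"), (4, "d")], service)
    run_mapper(0, [(1, "a"), (2, "b")], service)  # retried Mapper, ignored
    files = service.commit()
    print(read_partition(files, 0))
    print(read_partition(files, 1))
```

In this model each Reducer touches exactly one file, regardless of how many Mappers produced data, which is the property the sequential-read argument relies on.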

In short, we have summarized the difficulties in the following three points:

Figure 3: Architecture of Remote Shuffle Service

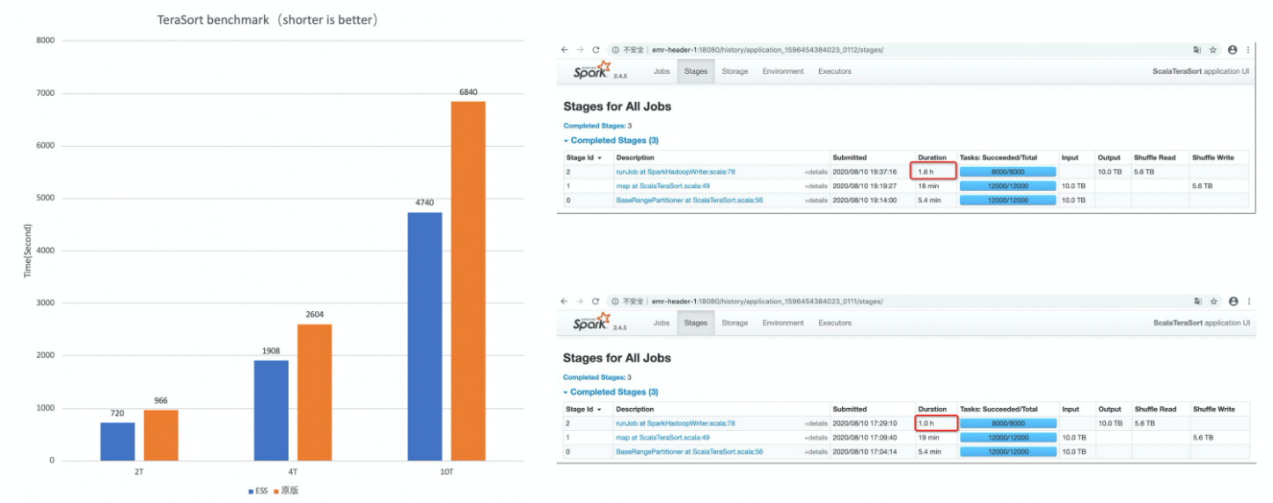

Regarding performance, Figure 4 shows the TeraSort benchmark results. We chose the TeraSort workload because it is a large Shuffle task with only three stages, so changes in Shuffle performance are easy to observe. In the left part of the figure, the blue bars show the runtime with the Shuffle service, and the orange bars show the runtime with the original Shuffle. Across data volumes of 2 TB, 4 TB, and 10 TB, the larger the data volume, the more obvious the advantage of the Shuffle service.

In the right part of the figure, the performance improvement shows up in the Reduce stage. With 10 TB of data, the duration of Reduce drops from 1.6 hours to 1 hour. The reason was explained earlier. Those familiar with the Spark Shuffle mechanism know that the original sort Shuffle performs M*N random I/O operations, where M is the number of Mappers and N is the number of Reducers. In this example, M is 12000 and N is 8000. Remote Shuffle performs only N sequential I/O operations. Replacing M*N random reads with 8000 sequential reads is therefore the fundamental reason for the performance improvement.
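The I/O counts quoted above can be checked with simple arithmetic. The helper name `shuffle_read_ops` is made up for this illustration, and the model counts only read operations, ignoring their size and any caching.

```python
def shuffle_read_ops(num_mappers, num_reducers):
    """Return (random reads for sort Shuffle, sequential reads for remote Shuffle)."""
    # Sort Shuffle: every Reducer fetches one segment from every Mapper's output file.
    random_reads = num_mappers * num_reducers
    # Remote Shuffle: every Reducer reads one merged file for its partition.
    sequential_reads = num_reducers
    return random_reads, sequential_reads


if __name__ == "__main__":
    random_reads, sequential_reads = shuffle_read_ops(12000, 8000)
    print(random_reads)      # 96000000
    print(sequential_reads)  # 8000
```

With M = 12000 and N = 8000, the original Shuffle issues 96 million random reads, against 8000 sequential reads for the remote Shuffle.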

Figure 4: Benchmark of Remote Shuffle Service Performance

Other aspects of EMR optimization are as follows:

In general, the EMR version of Spark on ACK has greatly improved in architecture, performance, stability, and usability.

From our perspective, the direction for cloud-native containerization of Spark is to achieve unified O&M in a cost-effective way. Our summary is as follows:

Figure 5: Hybrid Architecture of Spark on Kubernetes

Implementing cloud-native big data involves many challenges. To address them, the EMR team collaborates with communities and partners to develop better technologies and ecosystems.

Here are our visions:
