By Qingshan Lin (Longji)

The development of message middleware has spanned over 30 years, from the emergence of the first generation of open-source message queues to the explosive growth of PC Internet, mobile Internet, and now IoT, cloud computing, and cloud-native technologies.

As digital transformation deepens, customers often encounter cross-scenario applications when using message technology, such as processing IoT messages and microservice messages simultaneously, and performing application integration, data integration, and real-time analysis. This requires enterprises to maintain multiple message systems, resulting in higher resource costs and learning costs.

In 2022, RocketMQ 5.0 was officially released, featuring a more cloud-native architecture that covers more business scenarios compared to RocketMQ 4.0. To master the latest version of RocketMQ, you need a more systematic and in-depth understanding.

Today, Qingshan Lin, who is in charge of Alibaba Cloud's messaging product line and an Apache RocketMQ PMC Member, will provide an in-depth analysis of RocketMQ 5.0's core principles and share best practices in different scenarios.

Today, we're going to learn about stream storage in RocketMQ 5.0. What is stream storage? As we mentioned earlier, RocketMQ 5.0 has the capabilities of "message, event, and stream" integration. Stream here refers to stream processing, and stream storage is the cornerstone of stream processing. Stream storage is also the basis for RocketMQ to move from application architecture integration to data architecture integration, providing asynchronous decoupling capabilities for data components in the big data architecture.

In the first part, we'll explore the scenario of stream storage in more detail from the perspective of usage, highlighting how it differs from business message scenarios. In the second part, we'll discuss the features provided by RocketMQ 5.0 for stream storage scenarios. In the third part, we'll use two data integration cases to help you understand the usage of stream storage.

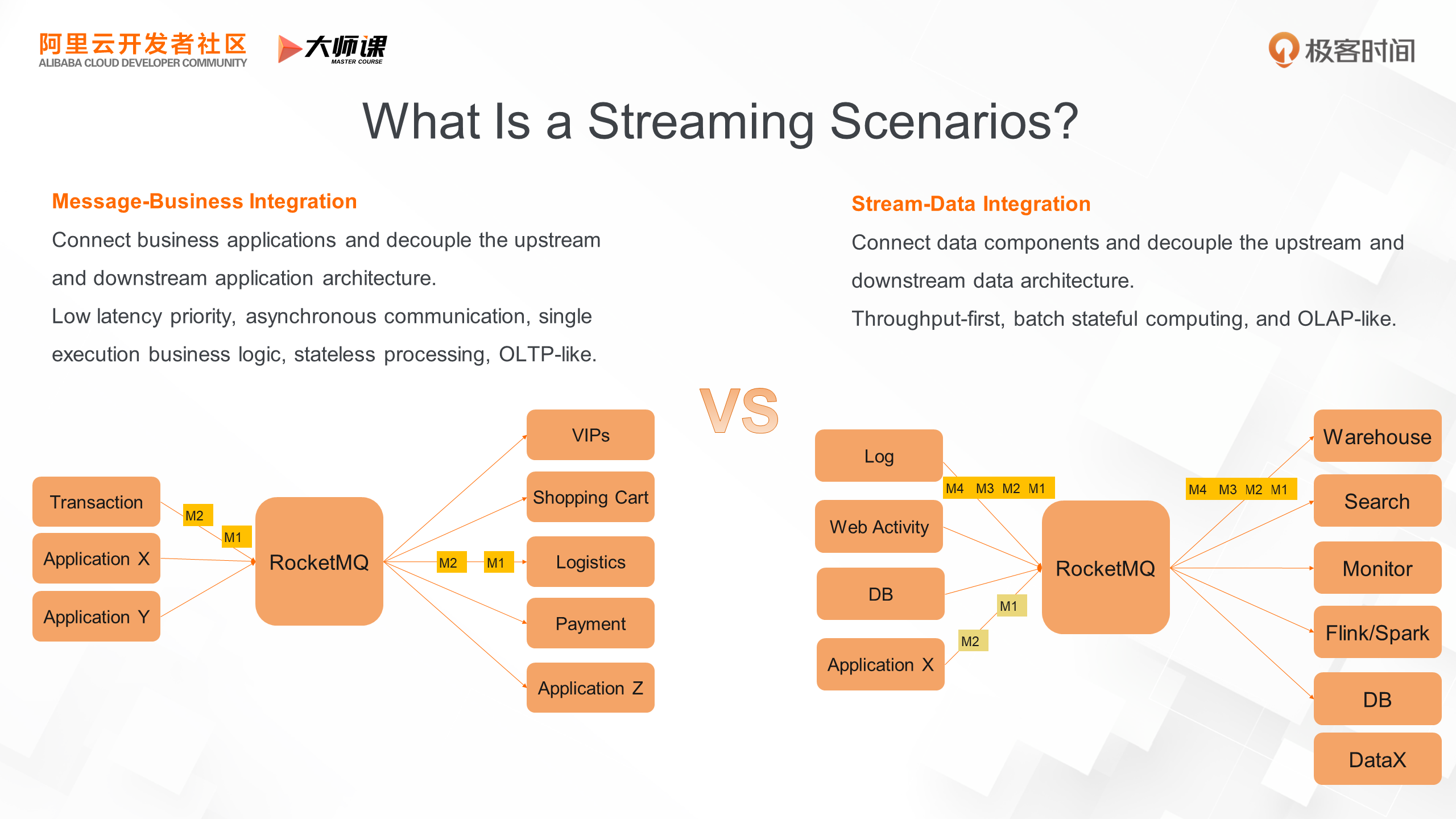

Let's start with the first part: what is a stream scenario? Comparing message scenarios with stream scenarios makes the difference clear. Earlier, in the courses on basic and advanced message features, we focused on the business integration role of messages. There, RocketMQ's main role is to connect business applications and decouple the upstream and downstream systems of the business architecture, such as transaction systems. This scenario is mostly about online business, where a workflow is triggered by a user action such as a purchase. To protect user experience, the messaging system must first ensure low latency. Here the message system provides asynchronous communication, the counterpart of synchronous RPC communication. On the consumption side, business logic is executed based on the message data to trigger the next workflow. Each message is processed independently and statelessly. This scenario focuses on business digitalization and can be compared to OLTP for databases: each operation touches a small amount of data and serves online transactions.

The stream scenario focuses on data integration: connecting various data components, distributing data, and decoupling the upstream and downstream systems of the data architecture. For example, a log solution collects log data and performs ETL to distribute it to search engines, stream computing, and data warehouses. Besides logs, database binlog distribution and page click streams are also common data sources. In these offline analysis scenarios, low latency matters less than large-scale throughput. Moreover, in the consumption phase, messages are rarely processed one at a time; batch dumping and batch stream computing are more common. This scenario focuses on digital business and is similar to OLAP for databases: each operation touches a large amount of data and serves offline analysis.

In the second section, we learn about how to use RocketMQ in streaming scenarios.

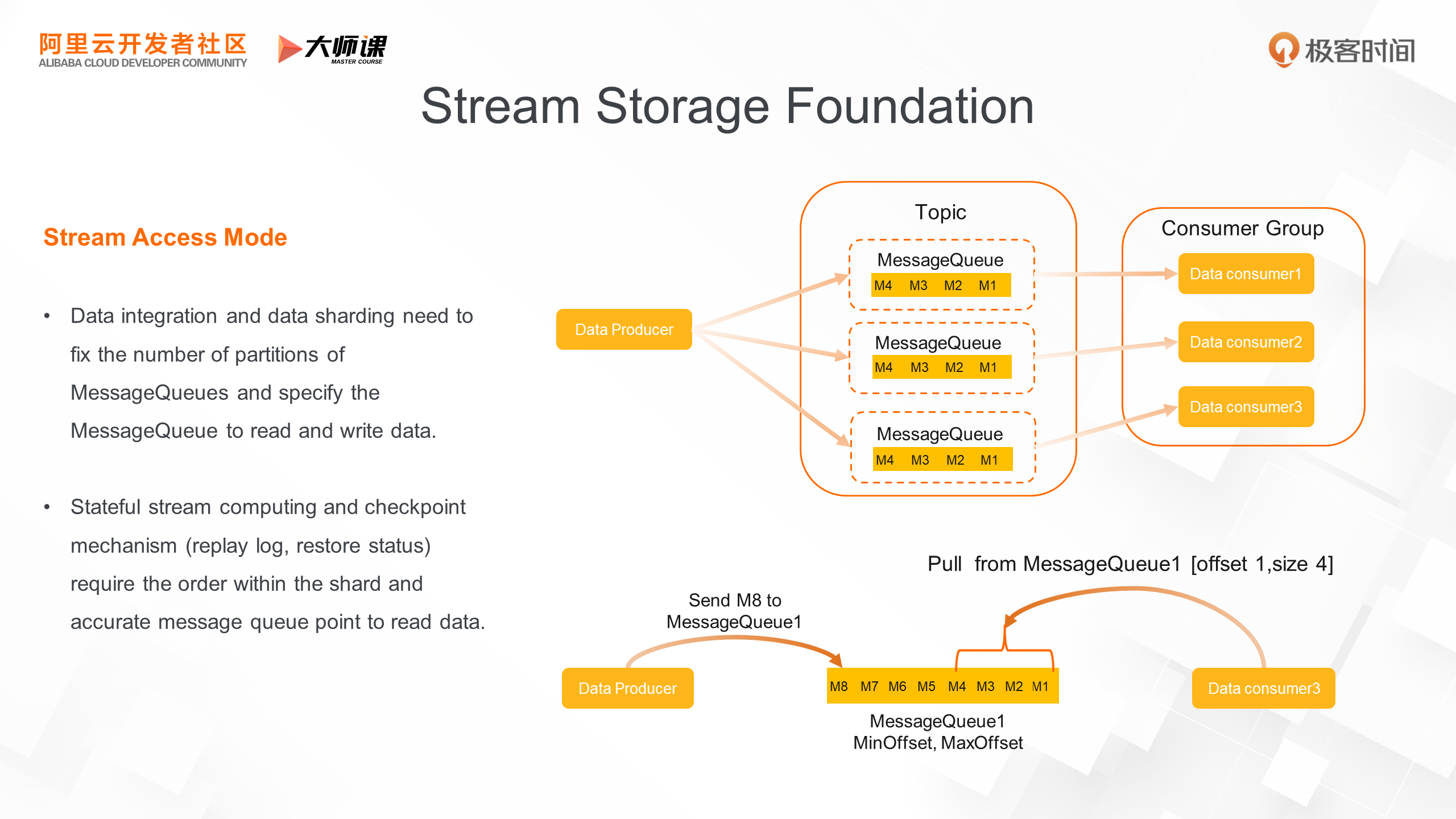

The biggest difference from message scenarios is the access mode of message data.

• When used in data integration scenarios, large-scale data integration inevitably involves data sharding as the basis for connecting upstream and downstream data systems. To ensure data integration quality, the number of partitions in a topic must remain unchanged, ensuring that data within the same partition remains ordered. In terms of message reading and writing methods, instead of reading and writing topics, you can specify topic shards, i.e., queues, to perform read and write operations.

• As a stream storage system, RocketMQ is typically consumed downstream by stream computing engines that perform stateful computing. To support fault tolerance in the stream computing engine, stream storage must support a checkpoint mechanism, similar to providing a redo log for the engine: messages can be replayed from a given queue offset to restore the state of stream computing. It also requires ordering within each shard, and data with the same key must be hashed to the same shard to implement key-by operations.

This is the difference between the stream storage access mode and the message access mode. In message scenarios, users only need to focus on topic resources, without worrying about concepts like queues and offsets.
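The two access requirements above can be sketched with a small in-memory model. This is only an illustration under stated assumptions, not the RocketMQ client API: `Topic`, `select_queue`, `replay_from_checkpoint`, and the fixed `QUEUE_COUNT` are hypothetical names, and CRC32 stands in for whatever stable hash a real producer would use.

```python
import zlib

QUEUE_COUNT = 4  # assumed value; must stay fixed for the lifetime of the topic


def select_queue(key, queue_count=QUEUE_COUNT):
    """Map a key to a queue with a stable hash so equal keys share a queue."""
    return zlib.crc32(key.encode("utf-8")) % queue_count


class Topic:
    """Hypothetical in-memory topic: a fixed list of ordered queues."""

    def __init__(self, queue_count=QUEUE_COUNT):
        self.queues = [[] for _ in range(queue_count)]

    def send(self, key, value):
        queue_id = select_queue(key, len(self.queues))
        self.queues[queue_id].append((key, value))
        # Return the queue and the offset the message was written at.
        return queue_id, len(self.queues[queue_id]) - 1

    def pull(self, queue_id, offset, max_count=32):
        """Read from an explicit queue and offset, as a stream consumer would."""
        return self.queues[queue_id][offset:offset + max_count]


def replay_from_checkpoint(topic, queue_id, checkpoint_offset, state):
    """Re-apply messages after the checkpoint to restore a running sum per key.

    Returns the offset to store in the next checkpoint.
    """
    offset = checkpoint_offset
    while True:
        batch = topic.pull(queue_id, offset)
        if not batch:
            return offset
        for key, value in batch:
            state[key] = state.get(key, 0) + value
        offset += len(batch)
```

Because the queue count never changes, a key always lands in the same queue, so a consumer that restarts from a saved offset sees that key's updates in their original order.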

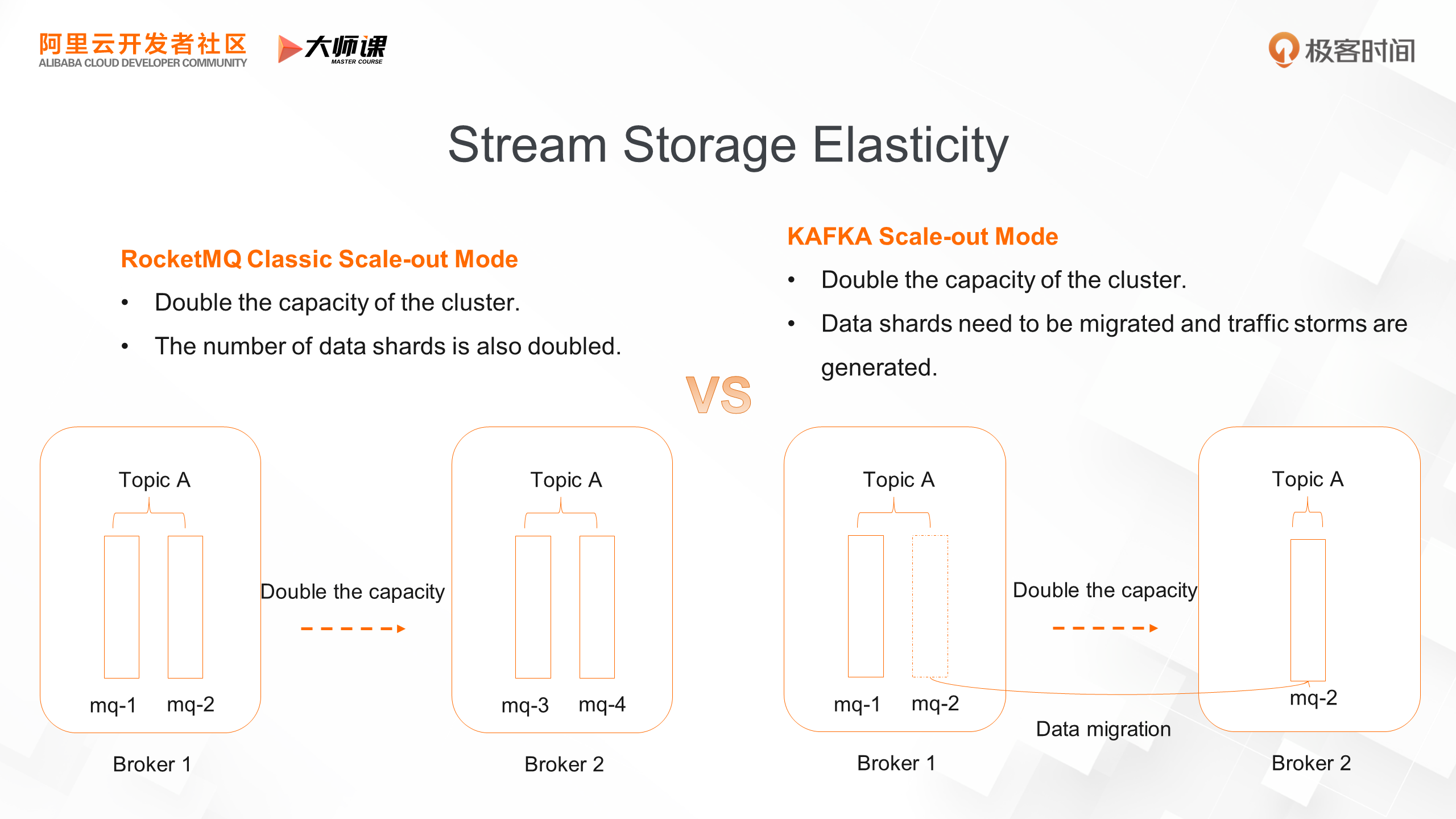

We've learned how to use stream storage from the user's perspective. Now, let's look at the elasticity of stream storage from an O&M perspective, starting with a review of the industry's current approaches. The following figure shows the classic scale-out mode of RocketMQ. For example, to double the capacity of Topic A, you add a new machine, create Topic A on it, and add the same number of queues. This doubles the number of shards, which cannot meet the fixed-partition requirement of stream storage.

The following figure shows Kafka's scale-out mode. To double the capacity of Topic A, you'd need to add a new node and migrate the partition mq-2 from the old node to the new node. Although this ensures the number of partitions remains the same, it requires partition data migration. When there are a large number of partitions and a massive amount of data, it can cause traffic storms on the cluster, seriously affecting stability, and the entire scale-out time becomes uncontrollable. This is the deficiency of the existing stream storage elasticity mechanism.

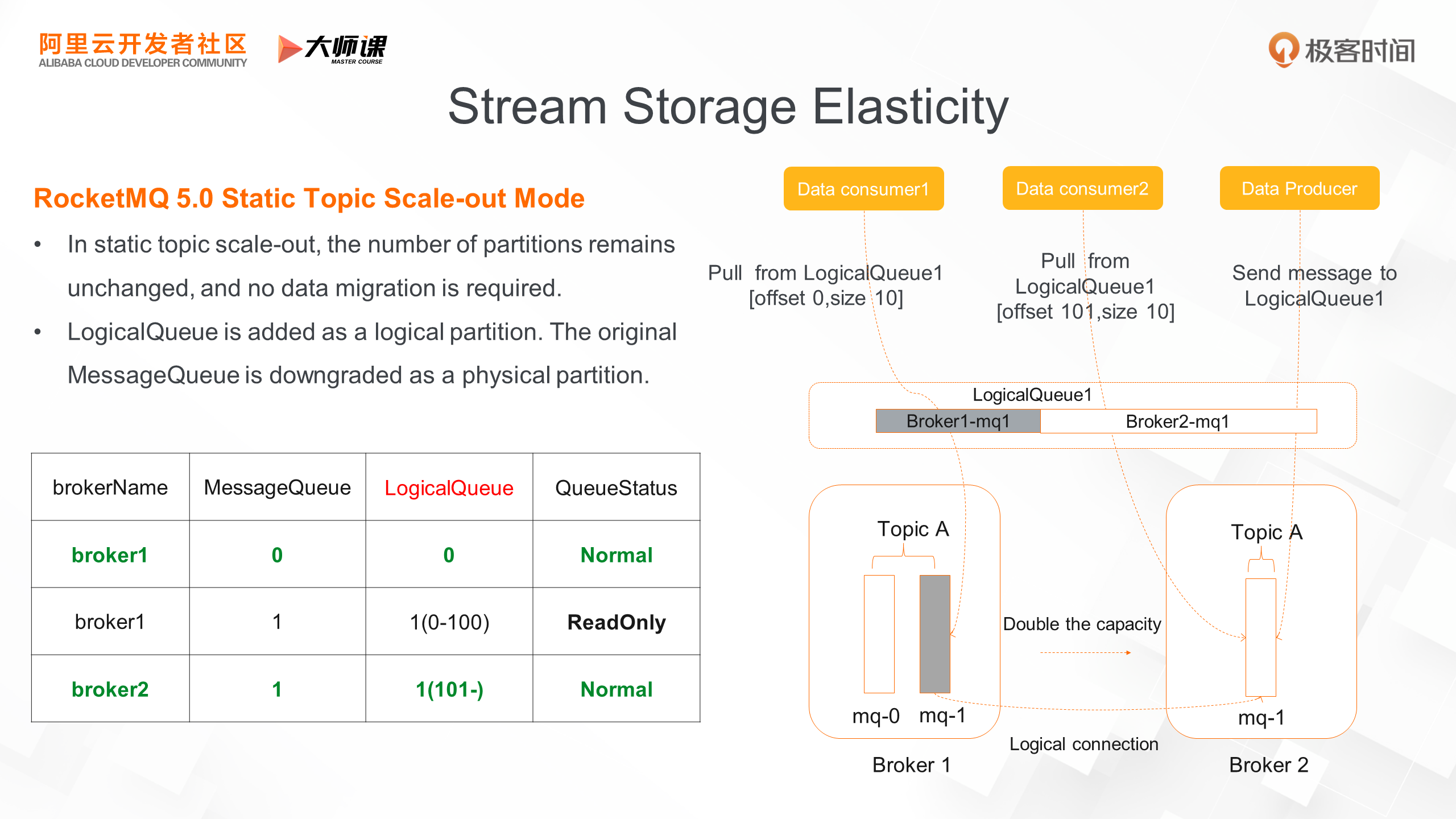

To solve the scale-out problem of classic stream storage, RocketMQ 5.0 provides a new mode that introduces static topics. In the scale-out mode of a static topic, the number of partitions remains the same, and no data migration is required during the scale-out process.

The key to the implementation is the concept of logical queues. Users no longer access a physical queue bound to a broker, but a logical queue of the global topic, and each logical queue corresponds to one or more physical queues. An actual case makes this concrete. The figure shows Topic A doubling its traffic capacity. Initially, the physical queue bound to logical queue 1 is mq1 on broker 1. After the scale-out, broker1-mq1 becomes read-only, and the latest reads and writes on logical queue 1 go to broker2-mq1. New messages from producers are sent to broker2-mq1. A consumer that reads the latest data reads directly from the physical queue broker2-mq1. If it reads old data, the read request is forwarded to the old physical queue broker1-mq1. This completes the scale-out of the static topic: the number of partitions remains unchanged and no data is migrated, which reduces data replication and improves system stability.
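The mapping from a logical queue to its physical queues can be modeled in a few lines. The sketch below is a simplified illustration, not RocketMQ's internal data structure: `PhysicalQueue`, `LogicalQueue`, and the segment list of `(start offset, physical queue)` pairs are assumptions made for the example.

```python
class PhysicalQueue:
    """Hypothetical physical queue that lives on one broker."""

    def __init__(self, broker, name):
        self.broker = broker
        self.name = name
        self.messages = []
        self.writable = True


class LogicalQueue:
    """One logical queue backed by an ordered list of physical queue segments."""

    def __init__(self, first_queue):
        # Each entry is (first logical offset served by the segment, physical queue).
        self.segments = [(0, first_queue)]

    def next_offset(self):
        start, queue = self.segments[-1]
        return start + len(queue.messages)

    def append(self, message):
        """Write to the newest segment and return the logical offset."""
        start, queue = self.segments[-1]
        queue.messages.append(message)
        return start + len(queue.messages) - 1

    def scale_out(self, new_queue):
        """Seal the current segment and send new writes to new_queue.

        Nothing is copied: old messages stay on the old broker.
        """
        boundary = self.next_offset()
        self.segments[-1][1].writable = False
        self.segments.append((boundary, new_queue))

    def read(self, logical_offset):
        """Route a read to whichever segment owns the logical offset."""
        for start, queue in reversed(self.segments):
            if logical_offset >= start:
                return queue.broker, queue.messages[logical_offset - start]
        raise IndexError(logical_offset)
```

In this model, scaling out only appends one mapping entry, so the cost does not depend on how much data the old queue holds, and the consumer keeps using the same logical queue and offsets throughout.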

Streaming scenarios also bring another very important change: the type of data.

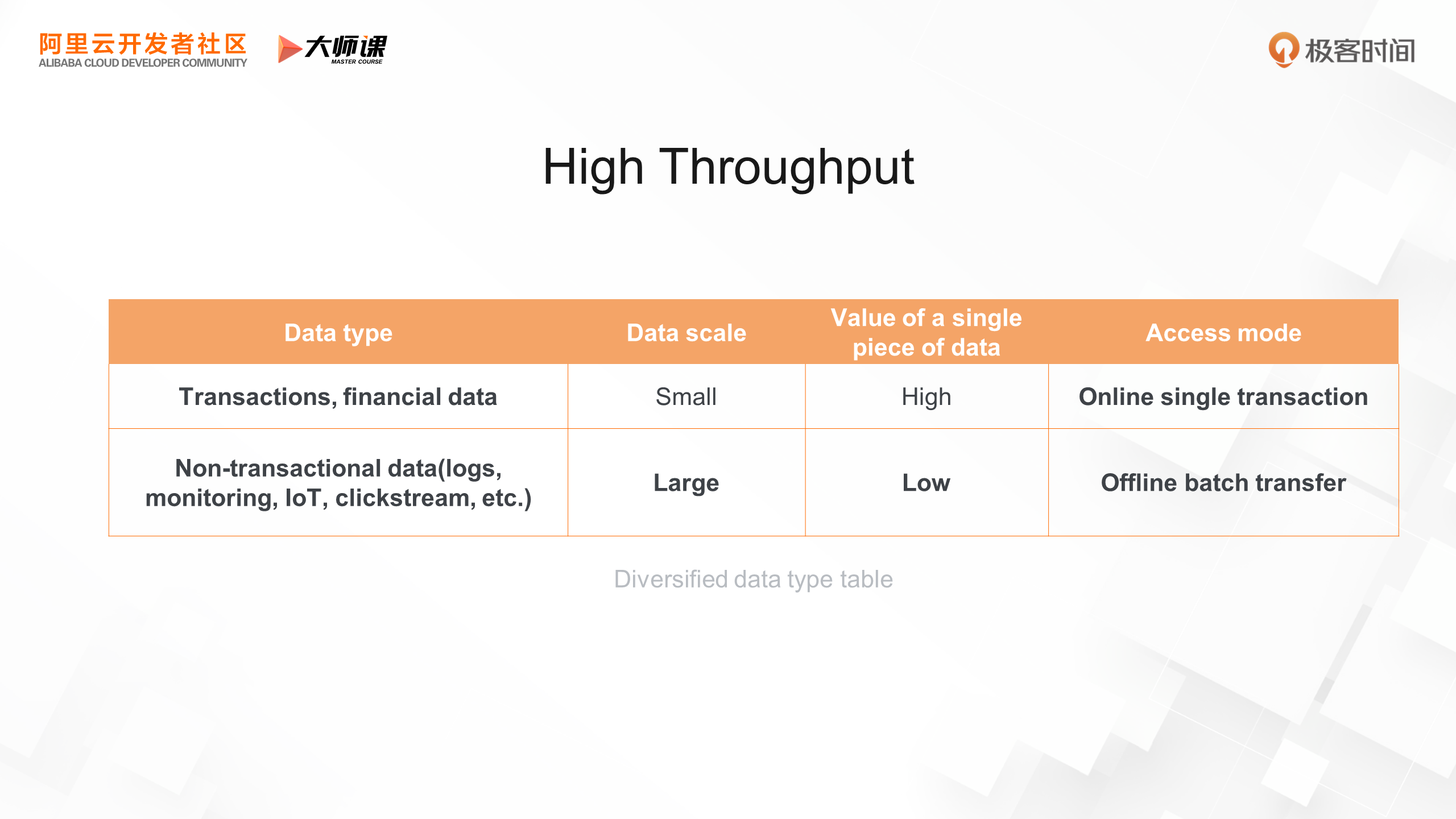

To make a simple comparison: in business integration scenarios, message data carries business events, such as order operations and logistics operations. The data scale is small, but the value of each piece of data is particularly high, and the access mode is short, fast online access to a single transaction.

In streaming scenarios, there is more non-transactional data, such as user logs, system monitoring data, IoT sensor data, and website click streams. The data size is an order of magnitude larger, but the value of a single piece of data is relatively low, and the access mode leans towards offline batch transmission. Therefore, in streaming scenarios, RocketMQ storage needs to be optimized for high throughput.

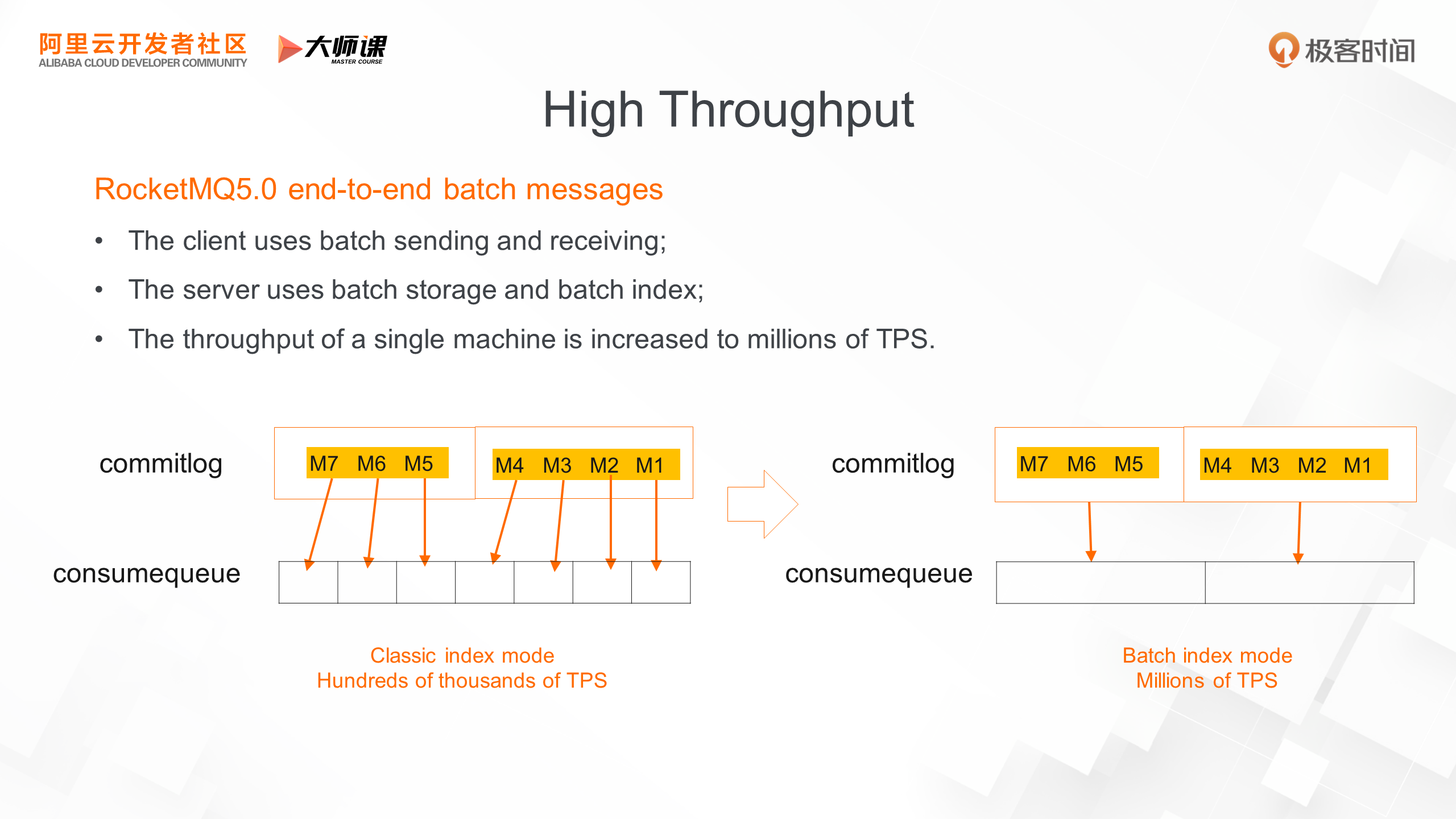

In RocketMQ 5.0, we introduced end-to-end batch messages. What does end-to-end mean here? In the sending phase, the client accumulates messages up to a certain amount and sends them to the broker in a single RPC request. In the storage phase, the broker stores the entire batch directly. With the batch index technology, only one index entry is built for a batch of messages, which greatly improves index construction speed. In the consumption phase, the entire batch is read by the consumer, unpacked, and then passed to the consumption logic. In this way, the message TPS of the entire broker can be increased from hundreds of thousands to millions.

Stream storage is usually connected to stream computing engines such as Flink and Spark. These engines involve stateful computations such as data aggregation, and operators such as average, sum, keyby, and time window require computing state to be maintained.
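The three phases described above can be illustrated with a toy model. This is a sketch under assumptions, not the RocketMQ implementation: `Broker`, `BatchingProducer`, `consume`, and the `(base_offset, count, position)` index entry are hypothetical, and a method call stands in for the RPC.

```python
class Broker:
    """Hypothetical broker that stores whole batches with one index entry each."""

    def __init__(self):
        self.log = []        # stored batches, in arrival order
        self.index = []      # one (base_offset, count, log_position) per batch
        self.next_offset = 0

    def put_batch(self, batch):
        self.log.append(list(batch))
        self.index.append((self.next_offset, len(batch), len(self.log) - 1))
        self.next_offset += len(batch)

    def get_batch(self, offset):
        """Return (base_offset, batch) for the batch that contains offset."""
        for base, count, position in self.index:
            if base <= offset < base + count:
                return base, self.log[position]
        return None


class BatchingProducer:
    """Buffers messages on the client and sends them in one call per batch."""

    def __init__(self, broker, batch_size):
        self.broker = broker
        self.batch_size = batch_size
        self.buffer = []
        self.rpc_count = 0   # number of simulated RPC requests

    def send(self, message):
        self.buffer.append(message)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self):
        if self.buffer:
            self.broker.put_batch(self.buffer)
            self.rpc_count += 1
            self.buffer = []


def consume(broker, offset):
    """Fetch the whole batch once, then unpack it on the consumer side."""
    found = broker.get_batch(offset)
    if found is None:
        return []
    base, batch = found
    return batch[offset - base:]
```

With a batch size of 3, seven messages cost three simulated requests and three index entries instead of seven of each, which is the source of the throughput gain the text describes.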

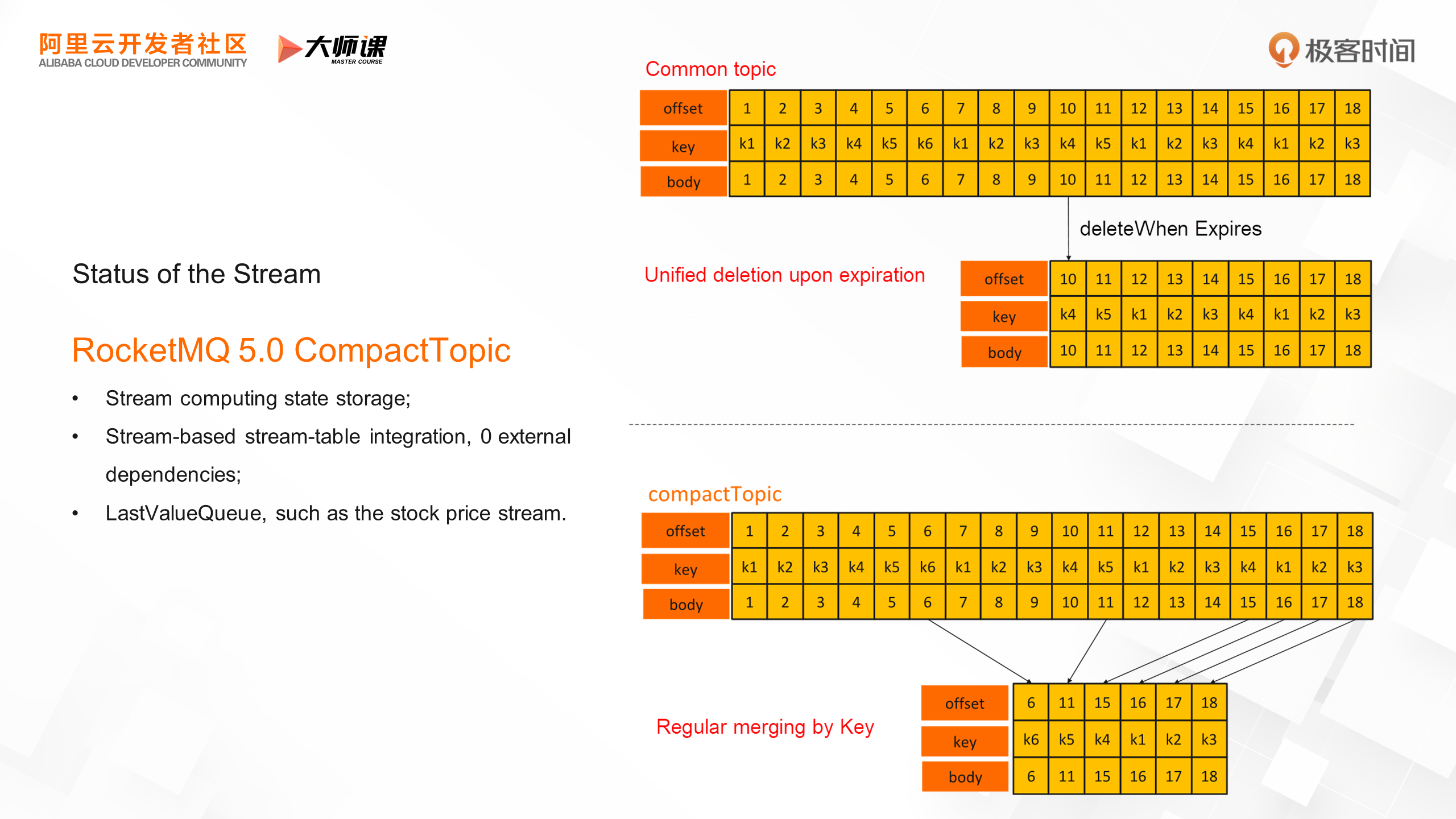

Therefore, in RocketMQ 5.0, we added the CompactTopic type, a KV-like service with the stream at its core that maintains stream state without introducing an external KV system. It also suits some special scenarios where it serves as a latest-value queue. For example, in stock price streams and stock trading, users only care about the latest price of each stock.

The following figure shows the implementation of CompactTopic. In a CompactTopic, each message is a key-value (KV) pair. With a regular topic, continuous updates to the same key occupy a large amount of space and hurt read efficiency, and in terms of lifecycle management, old data is deleted in batches in FIFO order when disk usage becomes too high. With CompactTopic, the broker regularly merges messages with the same key to save storage space. Users read only the latest value of each key during streaming access to the topic.
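The merge step can be sketched as a single pass over a queue. This is a minimal illustration of the idea, not the broker's actual compaction algorithm; the function name `compact` and the list-of-tuples log are assumptions for the example.

```python
def compact(log):
    """Keep only the last record for each key, preserving offset order.

    log is a list of (key, value) pairs whose list index is the offset.
    Returns a list of (offset, key, value) for the surviving records.
    """
    latest_offset = {}
    for offset, (key, _value) in enumerate(log):
        latest_offset[key] = offset  # later records overwrite earlier ones
    surviving = sorted(latest_offset.values())
    return [(offset, log[offset][0], log[offset][1]) for offset in surviving]
```

For a price stream with five updates to two hypothetical stocks, only the two most recent records survive, and they keep their original offsets so that consumer positions remain meaningful.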

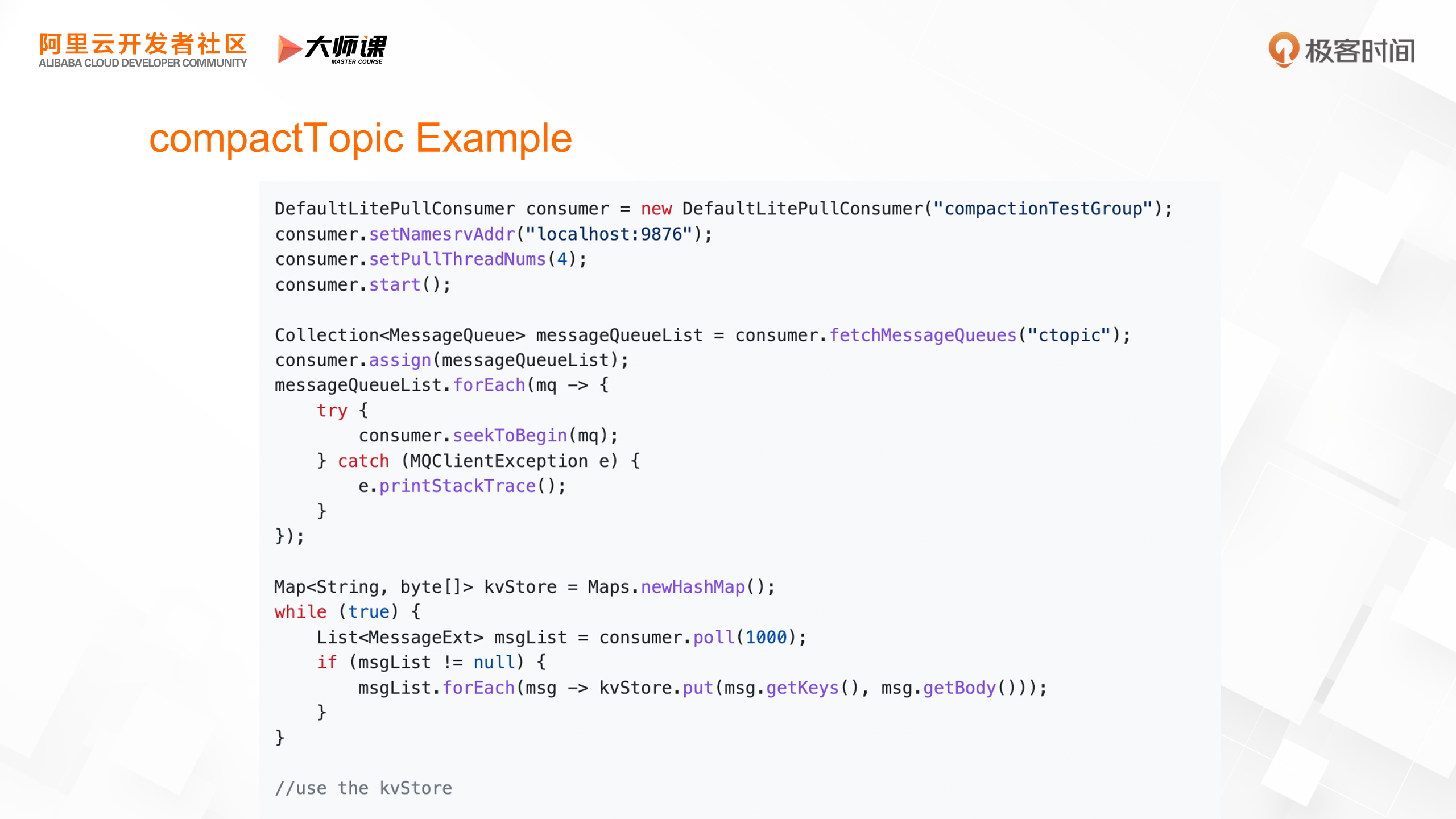

This example gives a more concrete picture of CompactTopic. Message production is unchanged except that you add a key to each message. The main difference is in the consumption mode. First, we use PullConsumer, a consumer SDK for streaming scenarios. Then, we obtain the queues of the CompactTopic and shard them across consumers. Each consumer instance is assigned fixed queues and is responsible for recovering the stream state of those partitions. In this example, a HashMap simulates the state store: the consumer replays the entire queue and rebuilds the KV state.
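The consumption flow can be approximated without the client SDK. The sketch below is not the PullConsumer API: `assign_queues` and `rebuild_state` are hypothetical helpers, the round-robin assignment is an assumption, and a Python dict plays the role of the HashMap.

```python
def assign_queues(queue_ids, consumer_count, consumer_index):
    """Give each consumer instance a fixed subset of queues (round-robin)."""
    ordered = sorted(queue_ids)
    return [q for i, q in enumerate(ordered) if i % consumer_count == consumer_index]


def rebuild_state(queues, assigned):
    """Replay every assigned queue from the start and keep the last value per key."""
    state = {}
    for queue_id in assigned:
        for key, value in queues[queue_id]:
            state[key] = value  # a later message overwrites an earlier one
    return state
```

Because the assignment is deterministic, an instance that restarts is given the same queues again and can rebuild exactly the state it held before.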

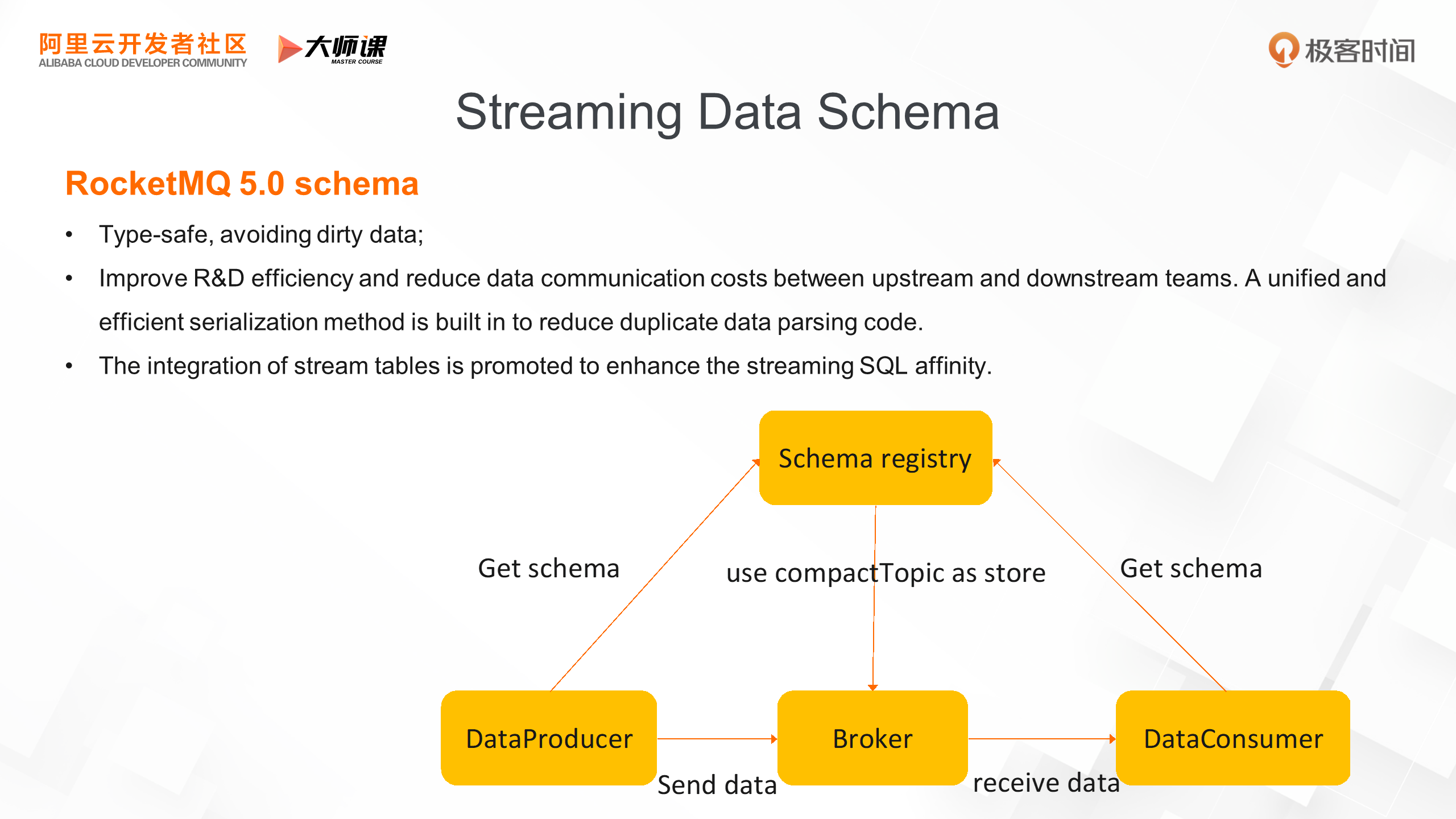

As the data ecosystem of RocketMQ grows, data integration involves an increasing number of upstream and downstream components, and improving data governance becomes urgent. Therefore, we introduced the concept of schema in RocketMQ 5.0 to add a structured description to messages. This brings several benefits. First, it improves type safety and avoids integration failures caused by incompatible upstream and downstream data when the message structure changes. Second, it improves development efficiency for data integration: upstream and downstream can obtain the message structure from the schema registry, which saves communication costs, and the built-in efficient serialization mechanism removes the need to write repetitive serialization code. In addition, in the context of stream-table integration, the message schema can be mapped to the table structure of a database, which makes messages a better fit for streaming SQL.

The following figure shows the structure of the message schema. A schema registry component maintains the schema data, and its storage is based on CompactTopic. When sending and receiving messages, the client first obtains the schema, validates the message format against it, and serializes or deserializes the message with the built-in serialization tool.

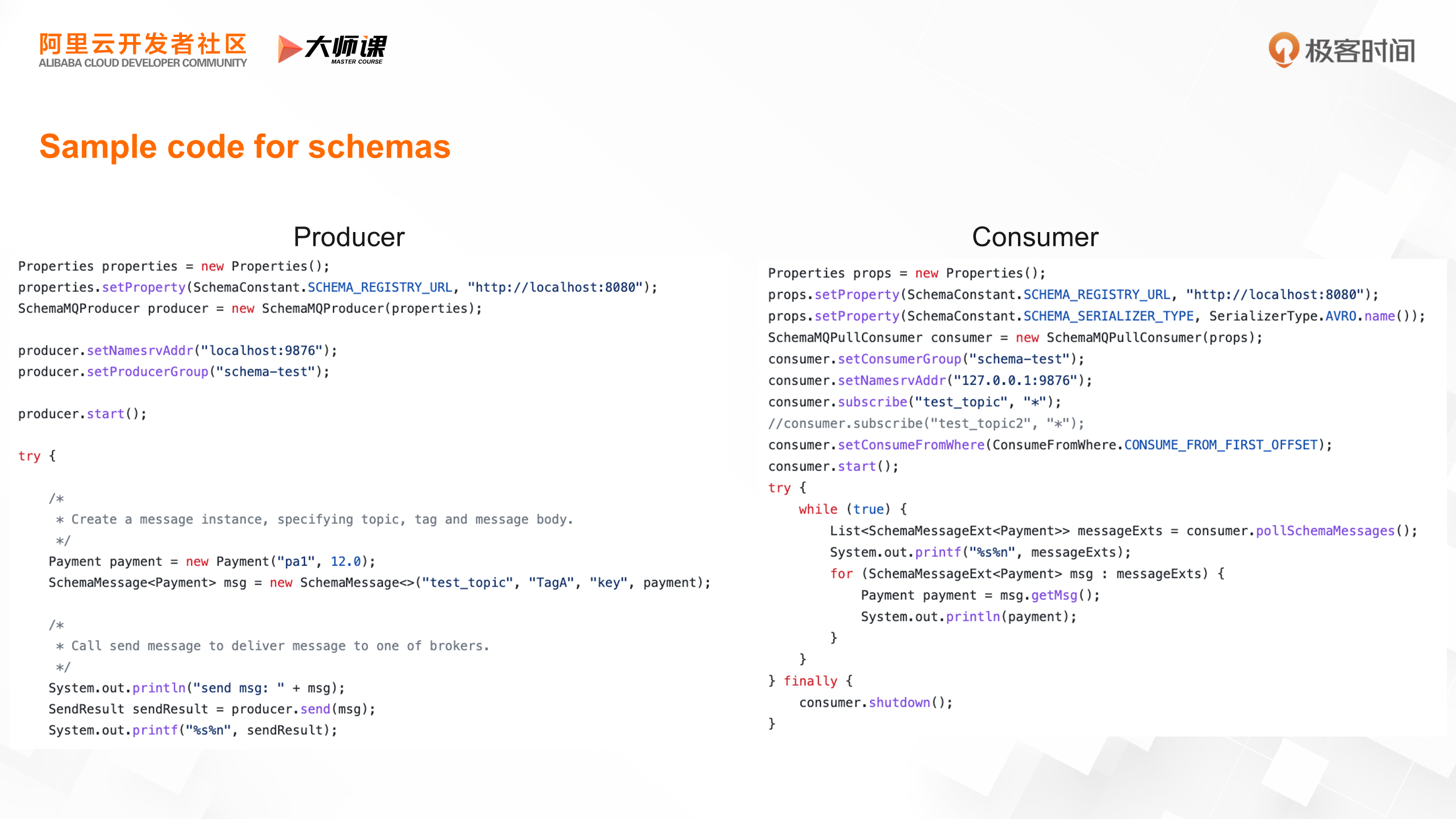

Let's look at the sample code for schema. The producer is on the left and the consumer is on the right. The code is close to that of regular producers and consumers. The only difference is that the object sent is a business domain object, which you do not need to convert into a byte array yourself. The same is true for consumers, which directly obtain business objects to execute business logic. This removes the tedious work of serialization and deserialization and improves development efficiency.
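The obtain, validate, and serialize flow can be mimicked with the standard library. This sketch does not use the RocketMQ schema registry or its client API: `SchemaRegistry`, `validate`, `serialize`, `deserialize`, and the `Order` type are all hypothetical, the schema is a plain field-to-type mapping, and JSON stands in for the built-in serializer.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Order:
    """Hypothetical business domain object."""
    order_id: str
    amount: int


class SchemaRegistry:
    """In-memory stand-in for a schema registry, keyed by topic."""

    def __init__(self):
        self._schemas = {}

    def register(self, topic, fields):
        self._schemas[topic] = dict(fields)

    def get(self, topic):
        return self._schemas[topic]


def validate(record, schema):
    """Reject records whose fields or field types differ from the schema."""
    if set(record) != set(schema):
        raise ValueError("fields do not match schema: %s" % sorted(record))
    for name, expected_type in schema.items():
        if not isinstance(record[name], expected_type):
            raise TypeError("field %r is not %s" % (name, expected_type.__name__))


def serialize(registry, topic, obj):
    """Producer side: domain object in, bytes out."""
    record = asdict(obj)
    validate(record, registry.get(topic))
    return json.dumps(record, sort_keys=True).encode("utf-8")


def deserialize(registry, topic, payload, cls):
    """Consumer side: bytes in, domain object out."""
    record = json.loads(payload.decode("utf-8"))
    validate(record, registry.get(topic))
    return cls(**record)
```

Both sides work only with `Order` objects. A producer that sends a string in the `amount` field fails validation before anything is written, which is the type-safety benefit described above.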

Finally, let's look at some cases of RocketMQ stream storage.

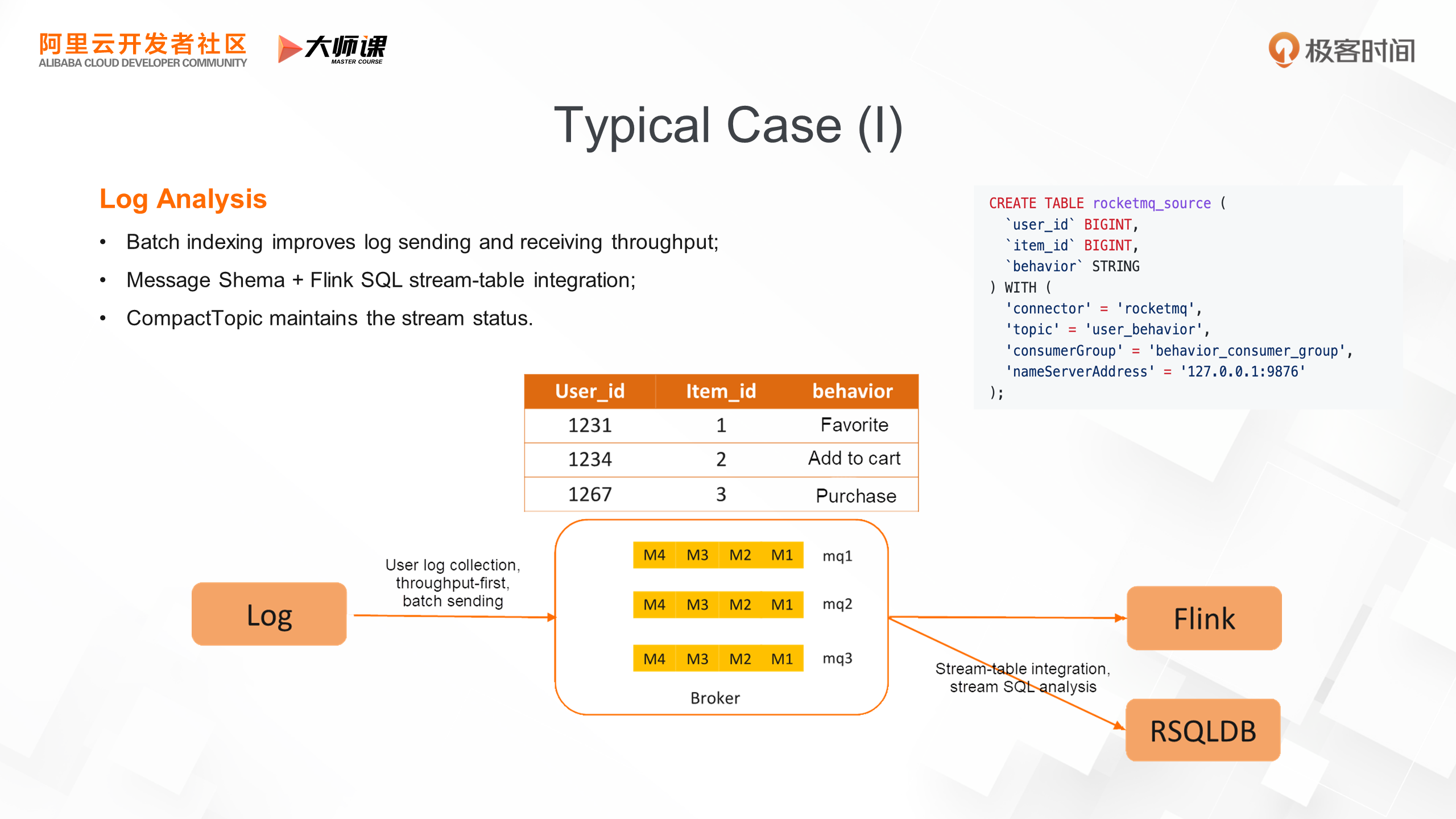

• The first case is log collection and streaming SQL analysis. First, we use batch indexes to improve the throughput of log collection and reduce machine costs. Second, we introduce a schema for log messages, for example user behavior on an e-commerce platform such as adding to favorites, adding to the cart, and purchasing, so that the message data looks like a flowing table. Finally, we connect FlinkSQL or RSQLDB downstream of the stream storage to run streaming SQL analysis.

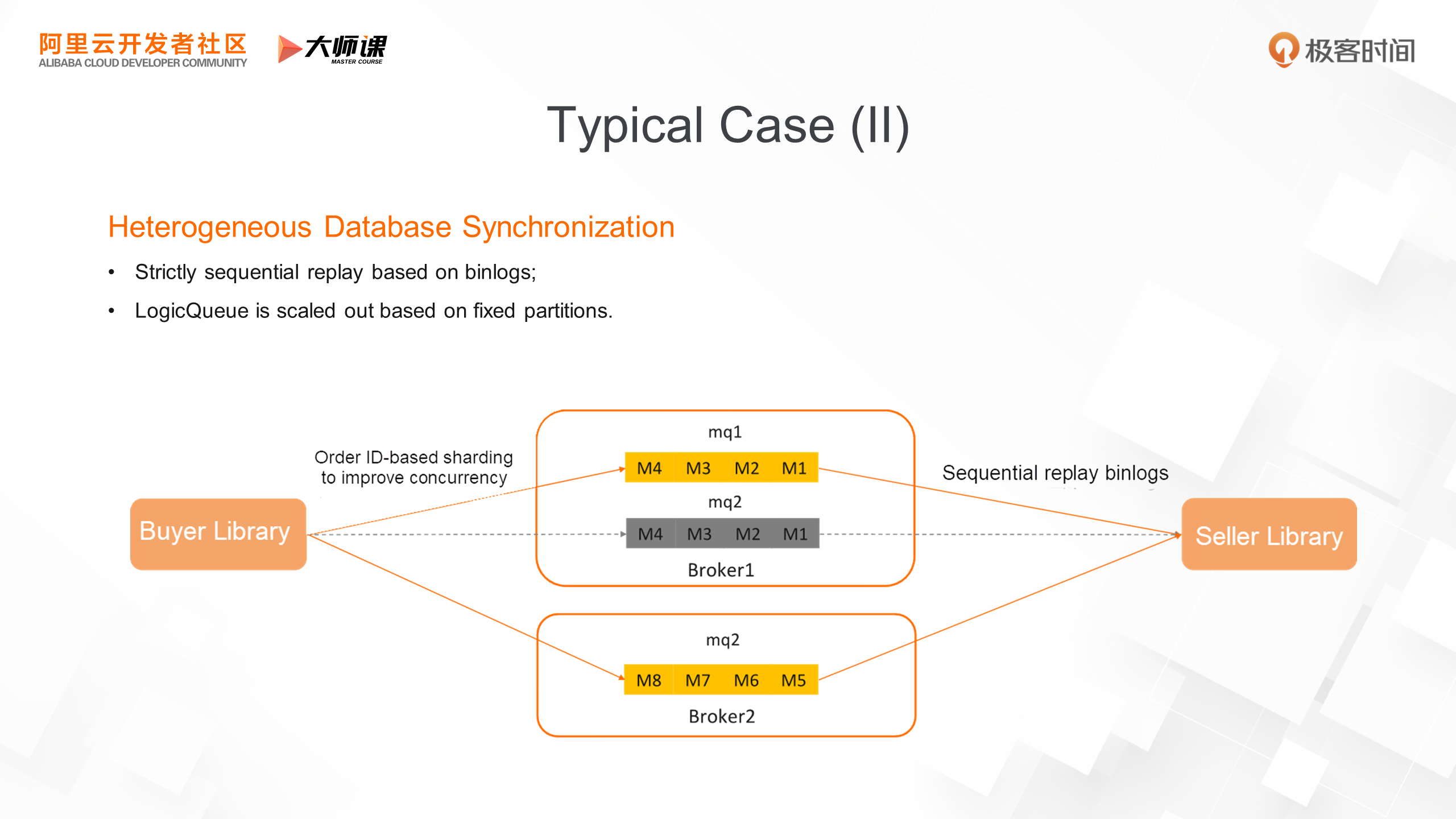

• The second case is the synchronization of heterogeneous databases. As shown in the following figure, we have two databases: one is sharded by buyer ID and the other by seller ID. We need to synchronize the order status between the two databases in real time. Based on the stream storage capability of RocketMQ, the upstream shards binlogs by order ID so that binlogs of the same record are distributed to the same queue. In the consumption phase, the binlog data in each queue is replayed in order and synchronized to the seller database. When capacity is insufficient, RocketMQ scales out the static topic with the number of partitions unchanged, which keeps the data synchronization correct.

In this course, we’ve learned how stream storage is used in data integration scenarios, serving as a data hub for big data architectures and connecting upstream and downstream components of data. The features of stream storage in RocketMQ include not only stream access, state storage, and data governance capabilities but also elasticity and high throughput of streams. Additionally, we've introduced two data integration cases, including log analysis and synchronization of heterogeneous databases.

In the next course, we'll further explore stream processing and learn about RocketMQ 5.0's stream database together.
