By Chengli, EMR technology expert from the Alibaba Computing Platform Division

JindoFS is a new cloud-native data lake solution.

Before JindoFS was introduced, off-premises big data was primarily stored in the Hadoop Distributed File System (HDFS), Object Storage Service (OSS), and S3. HDFS is the native storage system of Hadoop, and over the past decade it has become the standard storage system in the big data ecosystem. However, the bottleneck created by the Java Virtual Machine (JVM) persisted despite continuous HDFS optimizations. To solve this problem, the community developed Ozone as a redesigned storage system. OSS and S3 are typically used to store off-premises objects and have been adapted to the big data ecosystem. However, because of its design, object storage cannot support metadata operations as efficiently as HDFS does. Big data workloads also demand ever more bandwidth from object storage, and that bandwidth is limited. In some cases, object storage cannot effectively meet the needs of big data workloads.

EMR Jindo is a cloud-based distributed computing and storage engine customized by Alibaba Cloud based on Apache Spark and Hadoop. Jindo is proprietary code developed by Alibaba Cloud. EMR Jindo includes many open-source optimizations and extensions. It is deeply integrated with a range of basic Alibaba Cloud services. The TPC-DS results submitted by Alibaba Cloud Elastic MapReduce (EMR) were obtained by using EMR Jindo.

http://www.tpc.org/tpcds/results/tpcds_results5.asp

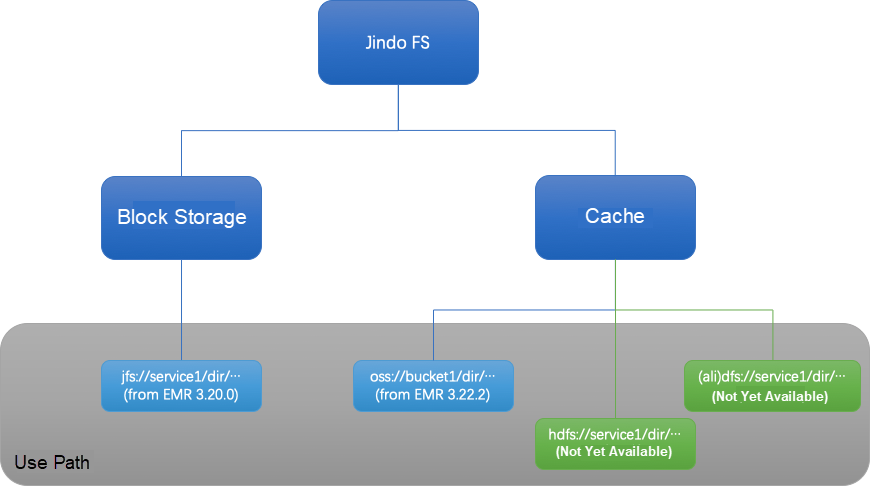

EMR Jindo provides computing and storage features. The storage feature is implemented by JindoFS, a file system designed by Alibaba Cloud for big data storage on the cloud. It is fully compatible with the HDFS APIs and provides a more flexible and efficient storage solution to support cloud computing and storage. JindoFS currently supports all computing services and engines in EMR, including Spark, Flink, Hive, MapReduce, Presto, and Impala. JindoFS supports two storage modes: block storage and cache. The following sections explain how JindoFS solves the problems of big data storage.

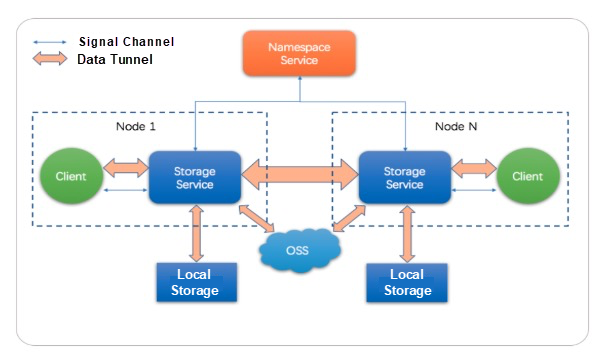

The separation of computing and storage is an industry trend. OSS provides unlimited and cost-efficient cloud storage capabilities. Therefore, we want to use the unlimited storage capabilities of OSS while still operating on file system metadata efficiently. The block storage mode of JindoFS provides a complete cloud-native solution for this.

In block storage mode, Jindo provides the name service to manage the metadata stored in Jindo file systems. The performance and user experience of metadata operations are comparable to those provided by the name node of HDFS. Jindo provides the storage service, which stores a replica of each data item in OSS. Even when a data node is released, data can be pulled from OSS as needed. This makes costs more controllable.

JindoFS supports multiple block storage policies. For example, you can store two replicas of each data item locally and one replica in OSS, store two replicas locally but no replicas in OSS, or store one replica in OSS but no replicas locally. You can select different storage policies to suit your business needs or based on hot and cold data.
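The three example policies above can be pictured as a small data model. The following is a minimal sketch, not the JindoFS configuration format: the class name, field names, and policy labels (`HOT`, `LOCAL_ONLY`, `COLD`) are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StoragePolicy:
    """Hypothetical model of a block storage policy (names are illustrative)."""
    name: str
    local_replicas: int  # replicas kept on disks in the cluster
    oss_replicas: int    # replicas kept in OSS

    def survives_node_release(self) -> bool:
        # Data stays readable after local nodes are released only if OSS holds a copy.
        return self.oss_replicas > 0

    def read_source(self, local_available: bool = True) -> str:
        # Prefer a local replica; otherwise fall back to OSS when a copy exists there.
        if local_available and self.local_replicas > 0:
            return "local"
        if self.oss_replicas > 0:
            return "oss"
        return "unavailable"


# The three example policies described in the text (labels are assumptions).
HOT = StoragePolicy("hot", local_replicas=2, oss_replicas=1)
LOCAL_ONLY = StoragePolicy("local_only", local_replicas=2, oss_replicas=0)
COLD = StoragePolicy("cold", local_replicas=0, oss_replicas=1)
```

In this model, a policy with an OSS replica keeps data readable after a data node is released, which is the property the block storage mode relies on to keep costs controllable.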

Block storage mode uses the new jfs:// path format. Raw data in HDFS and OSS can be imported to JindoFS through distributed copy (DistCp). JindoFS also provides an SDK so that you can read and write JindoFS data from outside the EMR cluster.
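As a rough illustration of the import step, the sketch below assembles a standard `hadoop distcp <source> <target>` command line. The helper name, the namespace `example-namespace`, and both paths are hypothetical; the actual jfs:// namespace depends on how JindoFS is configured in your cluster.

```python
import shlex
from typing import List


def build_distcp_command(source: str, target: str) -> List[str]:
    """Build the argument list for a DistCp copy into a jfs:// path."""
    if not target.startswith("jfs://"):
        raise ValueError("target must be a jfs:// path")
    return ["hadoop", "distcp", source, target]


# Hypothetical source and target paths, for illustration only.
cmd = build_distcp_command(
    "hdfs://namenode/warehouse/logs",
    "jfs://example-namespace/warehouse/logs",
)
print(shlex.join(cmd))
```

The list form can be passed directly to `subprocess.run` on a machine where the `hadoop` client is installed.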

In cache mode, a distributed cache service can be built in the local cluster based on the storage capabilities of JindoFS. This allows you to store remote data in the local cluster. The cache mode of JindoFS solves the following problems:

When a large volume of data is stored in remote OSS or HDFS and each read operation fully occupies network bandwidth, the cache mode of JindoFS keeps the data in the local cluster so that repeated reads no longer hit the bandwidth limit.

If your original file path is oss://bucket1/file1 or hdfs://namenode/file2, you can enable the cache mode through configuration without modifying the job path. This currently works for OSS paths because EMR is adapted to OSS. In the future, EMR will also support reading and writing remote HDFS data. Caching is completely transparent to upper-layer jobs.
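The idea of a transparent cache can be sketched as a read-through layer: the job keeps using the original path, and only the first read goes to the remote store. This is a simplified model under stated assumptions, not the JindoFS implementation; the class name and the dictionary standing in for remote OSS are hypothetical.

```python
from typing import Dict


class ReadThroughCache:
    """Toy read-through cache keyed by the original, unmodified path."""

    def __init__(self, remote: Dict[str, bytes]) -> None:
        self.remote = remote               # stands in for remote OSS or HDFS
        self.local: Dict[str, bytes] = {}  # stands in for cluster-local storage
        self.remote_reads = 0              # counts reads that used remote bandwidth

    def read(self, path: str) -> bytes:
        if path not in self.local:
            # Cache miss: fetch once from the remote store and keep a local copy.
            self.remote_reads += 1
            self.local[path] = self.remote[path]
        return self.local[path]


remote_store = {"oss://bucket1/file1": b"payload"}
cache = ReadThroughCache(remote_store)
first = cache.read("oss://bucket1/file1")   # served from the remote store
second = cache.read("oss://bucket1/file1")  # served from the local copy
```

After both reads, `cache.remote_reads` is 1, which is the bandwidth saving the cache mode aims for.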

However, the cache mode does not work well for the rename operation. To ensure data consistency at multiple ends, you need to synchronize data updates to OSS or HDFS at the remote end when you perform the rename operation. In addition, the rename operation is time-consuming in OSS. Currently, the cache mode cannot be optimized for the rename operation on file metadata.
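One way to see why rename is expensive on object storage: object stores are generally flat key-value namespaces, so a directory rename is commonly emulated as a copy plus a delete for every object under the prefix. The sketch below models that general pattern with a dictionary; it is an assumption about the common emulation, not a description of OSS internals, and the function name is hypothetical.

```python
from typing import Dict


def rename_prefix(store: Dict[str, bytes], src: str, dst: str) -> int:
    """Rename every object under the src prefix; return the number of operations."""
    keys = [key for key in store if key.startswith(src)]
    operations = 0
    for key in keys:
        store[dst + key[len(src):]] = store[key]  # copy to the new key
        del store[key]                            # delete the old key
        operations += 2
    return operations


objects = {
    "tmp/job/part-0": b"a",
    "tmp/job/part-1": b"b",
    "other/file": b"c",
}
ops = rename_prefix(objects, "tmp/job/", "out/job/")
```

The cost grows with the number of objects under the prefix, whereas a file system with a metadata service can rename a directory by updating its metadata.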

At the 2019 Apsara Conference, the computing and storage separation solution represented by EMR Jindo received a lot of attention.

By Chengli, an EMR technology expert from the Alibaba Computing Platform Division, Apache Sentry Project Management Committee (PMC) member, and Apache Commons committer, currently engaged in open-source big data storage and optimization.
