By Li Zhipeng, object storage development engineer

The E-MapReduce team of the computing platform division has explored and developed the JindoFS framework to accelerate data read/write performance in computing-storage separation scenarios. Mr. Yao Shunyang from the Alibaba Cloud Intelligence team gave a detailed introduction to JindoFS storage policies and read/write optimization. Building on his presentation, this article further discusses the following topics:

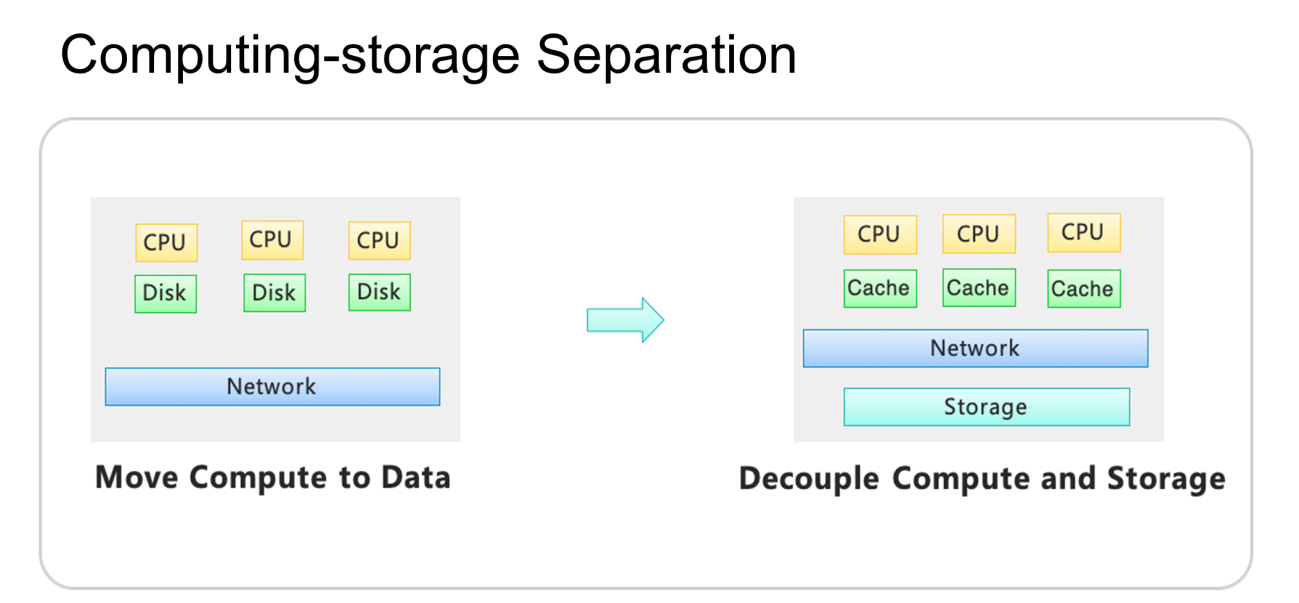

In traditional big data analysis scenarios, HDFS is the de facto storage standard. Computing resources and storage resources are typically deployed in the same cluster, which is known as the computing-storage integration architecture, as shown in the following figure. Because the two are coupled, the computing capacity and the storage capacity of a cluster cannot be scaled independently of each other. As data has migrated to the cloud in recent years, the computing-storage separation architecture has gradually emerged in big data analysis, and more and more customers choose it to deploy their clusters. Unlike the traditional HDFS-based architecture, this architecture physically isolates computing resources from storage resources: the computing cluster connects to the backend storage cluster over the network, as shown in the following figure. Most data read/write operations in the computing cluster therefore become network requests to the storage cluster, and network throughput often becomes the performance bottleneck during job execution.

Therefore, it is necessary to build a cache layer for the backend storage on the computing side, that is, inside the computing cluster. By caching data in this layer, access traffic from the computing cluster to the storage backend is reduced, which significantly mitigates the bottleneck caused by network throughput.
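To make the traffic argument concrete, here is a minimal Python sketch of a read-through cache on the computing side. RemoteStore and ComputeSideCache are hypothetical names, not JindoFS classes; the backend is an in-memory dictionary that only counts requests, and each request stands in for one network round trip to the storage cluster.

```python
class RemoteStore:
    """Hypothetical stand-in for the backend storage cluster; counts network reads."""

    def __init__(self, blocks):
        self.blocks = blocks
        self.requests = 0

    def read(self, block_id):
        self.requests += 1  # each call models one network round trip
        return self.blocks[block_id]


class ComputeSideCache:
    """Hypothetical read-through cache living inside the computing cluster."""

    def __init__(self, backend):
        self.backend = backend
        self.local = {}

    def read(self, block_id):
        if block_id not in self.local:
            # Miss: fetch the block over the network once and keep it locally.
            self.local[block_id] = self.backend.read(block_id)
        # Hit (or freshly filled): served from local storage.
        return self.local[block_id]


backend = RemoteStore({"b1": b"...", "b2": b"..."})
cache = ComputeSideCache(backend)
for _ in range(10):
    cache.read("b1")
    cache.read("b2")
print(backend.requests)  # 2
```

Twenty reads of two blocks reach the backend only twice; without the cache, every one of them would cross the network.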

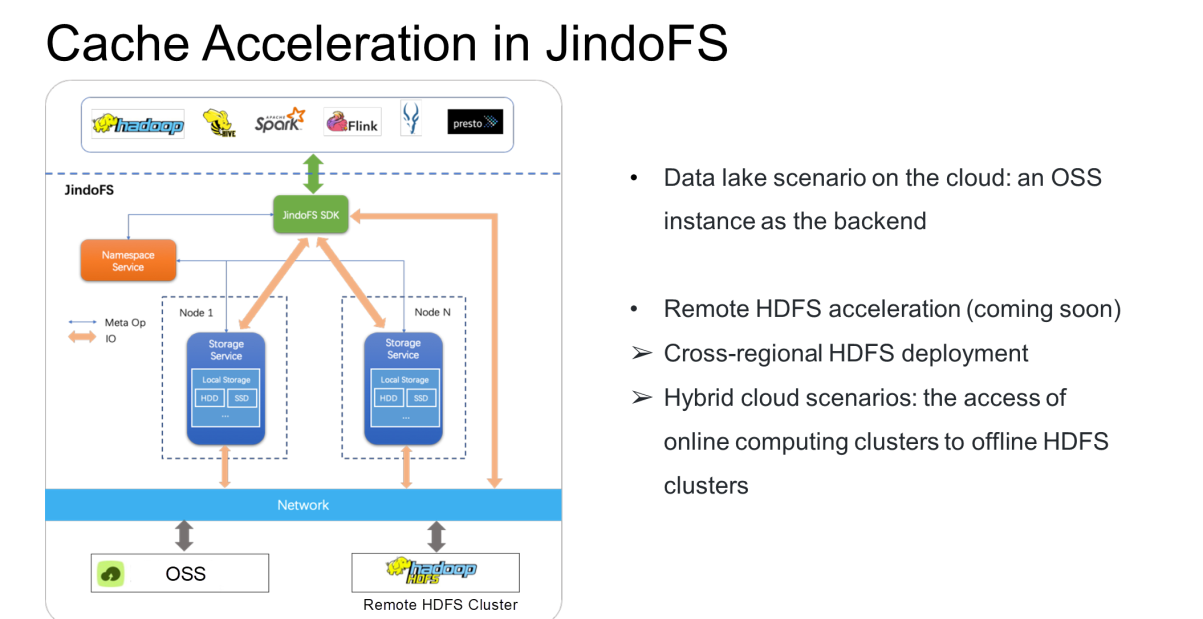

In the computing-storage separation scenario, JindoFS caches backend storage data on the computing side to accelerate access. The following figure shows its architecture and its position in the system.

JindoFS consists of the following components:

JindoFS is a cloud-native file system that combines the performance of local storage with the ultra-large capacity of OSS. It supports the following types of backend storage:

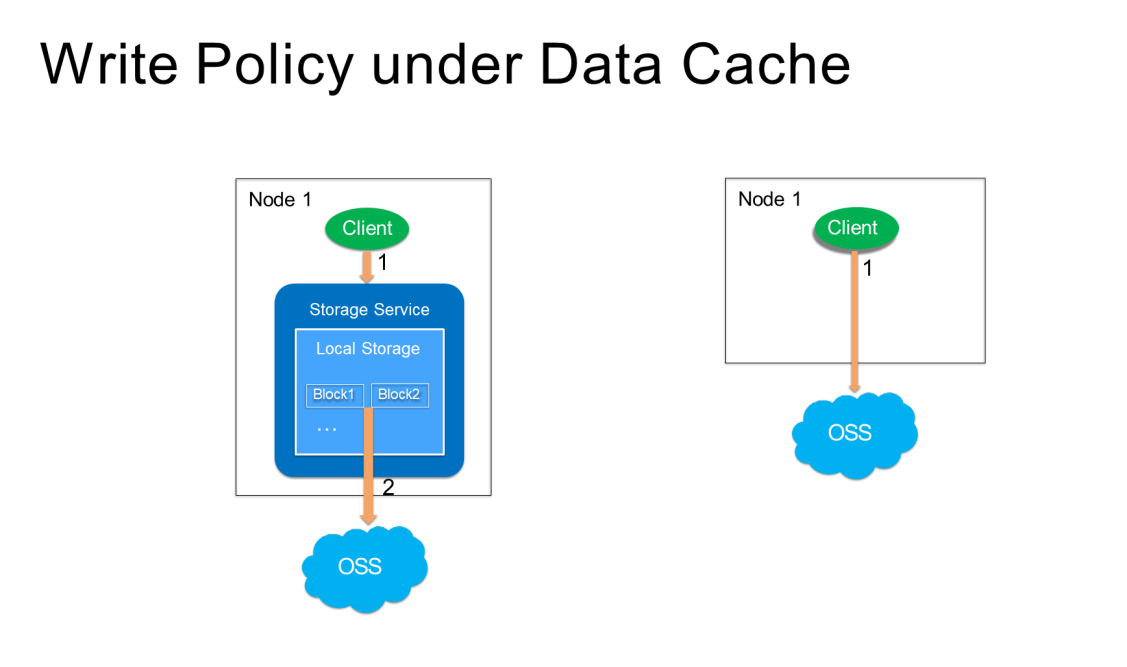

There are two types of data write policies in JindoFS, as shown in the following figure.

Write Policy 1: During the write process, the client writes data to the cache blocks of the corresponding Storage Service, and the Storage Service uploads the data in the cache blocks to the backend storage in parallel using multiple threads.
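Write Policy 1 can be illustrated with a small Python sketch, assuming the written stream is split into fixed-size cache blocks that a thread pool then uploads. split_into_blocks, FakeBackend, and flush_cache_blocks are hypothetical names, and the 4-byte block size only keeps the example readable; this is not JindoFS's implementation or API.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

BLOCK_SIZE = 4  # tiny, for illustration only; real cache blocks are far larger


def split_into_blocks(data: bytes, block_size: int = BLOCK_SIZE):
    """Client side (hypothetical): chunk the written stream into (index, payload) cache blocks."""
    return [(i // block_size, data[i:i + block_size])
            for i in range(0, len(data), block_size)]


class FakeBackend:
    """Hypothetical stand-in for the backend store; keeps uploaded parts by index."""

    def __init__(self):
        self.parts = {}
        self.lock = threading.Lock()

    def upload_part(self, index, payload):
        with self.lock:
            self.parts[index] = payload

    def assemble(self):
        # Parts may finish in any order; reassemble them by block index.
        return b"".join(self.parts[i] for i in sorted(self.parts))


def flush_cache_blocks(blocks, backend, workers=4):
    """Storage Service side (hypothetical): upload cache blocks in parallel."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(backend.upload_part, idx, payload)
                   for idx, payload in blocks]
        for f in futures:
            f.result()  # re-raises any exception from an upload thread


backend = FakeBackend()
data = b"hello jindofs cache"
flush_cache_blocks(split_into_blocks(data), backend)
assert backend.assemble() == data
```

Because the blocks carry their index, the uploads can complete in any order and the object can still be reassembled correctly.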

Write Policy 2: Pass-through. The client uses the JindoFS SDK to upload data directly to the backend storage system, and the SDK includes many related performance optimizations. This policy suits data production scenarios, in which the job is only responsible for generating data and has no requirements for subsequent computing or read operations.

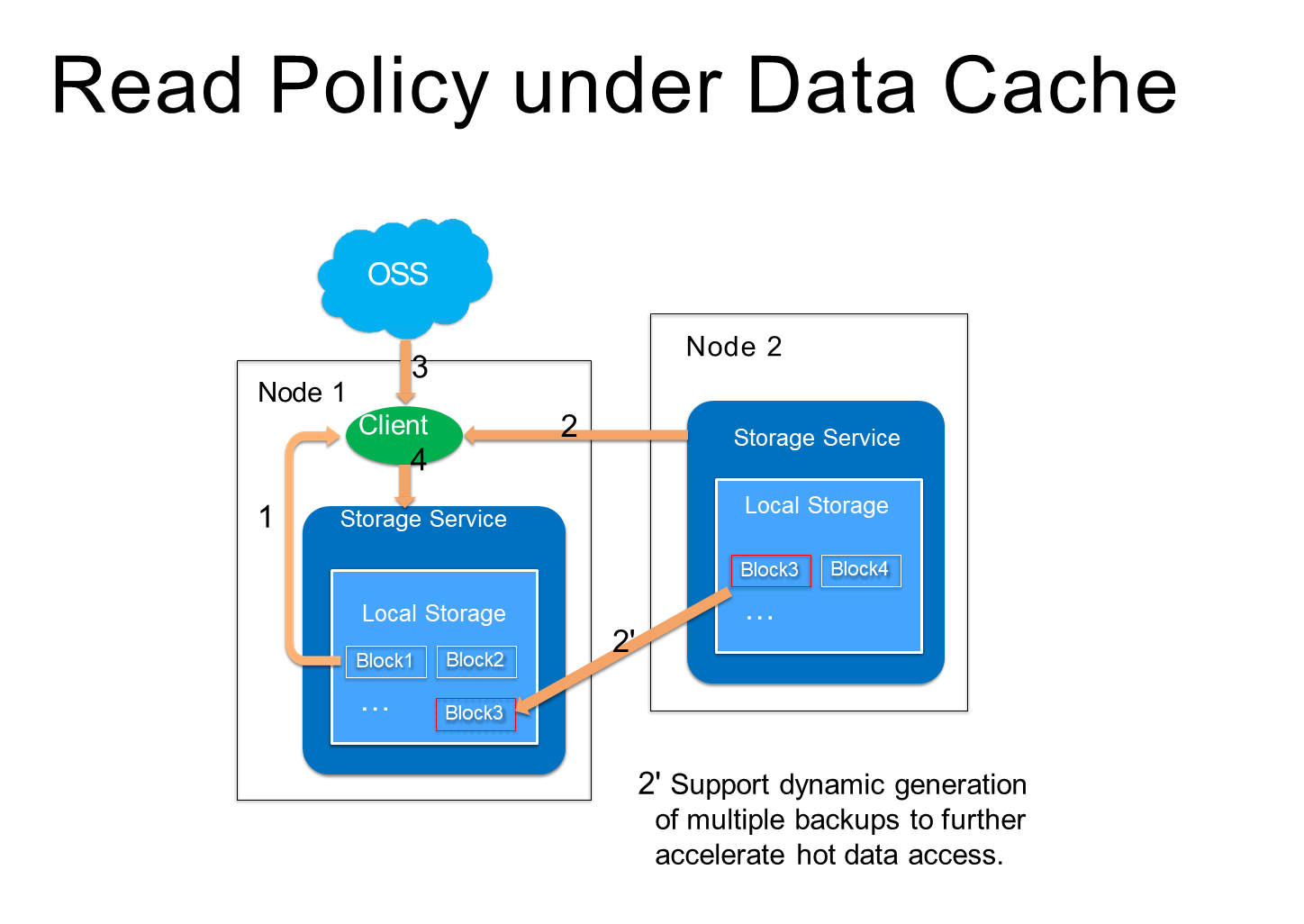

The read policy is one of the most important parts of JindoFS. JindoFS builds a distributed cache service on top of the storage capacity of the local cluster, so remote data can be saved in the local cluster and localized. Subsequent read requests for the same data are then served much faster, because reading from the local cache delivers the best read performance. Based on this principle, the read policy works as follows:

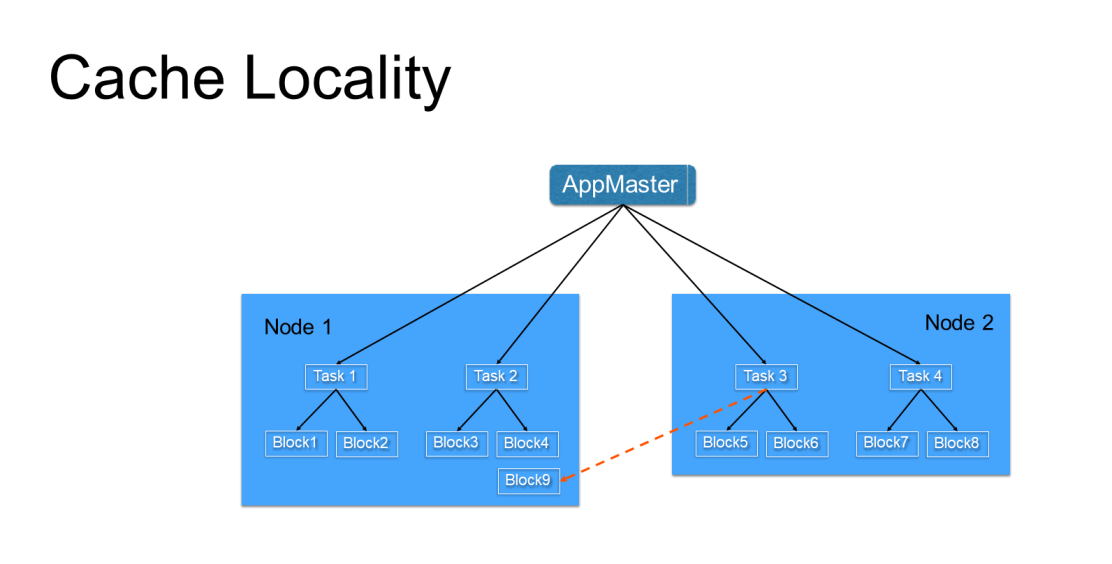

Similar to Data Locality in HDFS, Cache Locality is a scheduling policy that pushes a task in the computing layer to the node where its data block is cached. Under this policy, tasks read data from the local cache first, which is the most efficient way to read data.

JindoFS Namespace maintains the location information of all cached data blocks. Through the related APIs provided by the Namespace, this location information can be delivered to the computing layer, which then pushes tasks to the nodes where the cached data blocks are located. As a result, most data is read from the local cache and only the rest is read over the network, ensuring optimal read performance for computing jobs, as shown in the following figure.
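The scheduling idea can be sketched in Python as follows. The block_locations dictionary is an assumption standing in for the location information the Namespace APIs would report, and schedule is a hypothetical helper; in practice, the computing framework's own scheduler consumes such location hints.

```python
def schedule(tasks, block_locations, nodes):
    """Assign each task (mapped to the block it reads) to a node.

    Hypothetical helper: prefer a node that caches the block (cache locality),
    choosing the least-loaded candidate. Tasks whose block is not cached
    anywhere go to the least-loaded node overall and read over the network.
    Returns {task: (node, reads_locally)}.
    """
    load = {n: 0 for n in nodes}
    plan = {}
    for task, block in tasks.items():
        candidates = [n for n in block_locations.get(block, []) if n in load]
        pool = candidates or nodes
        # Tie-break on node name so the result is deterministic.
        node = min(pool, key=lambda n: (load[n], n))
        load[node] += 1
        plan[task] = (node, bool(candidates))
    return plan


block_locations = {"blk-1": ["node-a"], "blk-2": ["node-b", "node-c"], "blk-3": []}
tasks = {"t1": "blk-1", "t2": "blk-2", "t3": "blk-3"}
plan = schedule(tasks, block_locations, ["node-a", "node-b", "node-c"])
print(plan)
# {'t1': ('node-a', True), 't2': ('node-b', True), 't3': ('node-c', False)}
```

Two of the three tasks land on a node that already caches their block; only t3, whose block is not cached, falls back to a network read.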

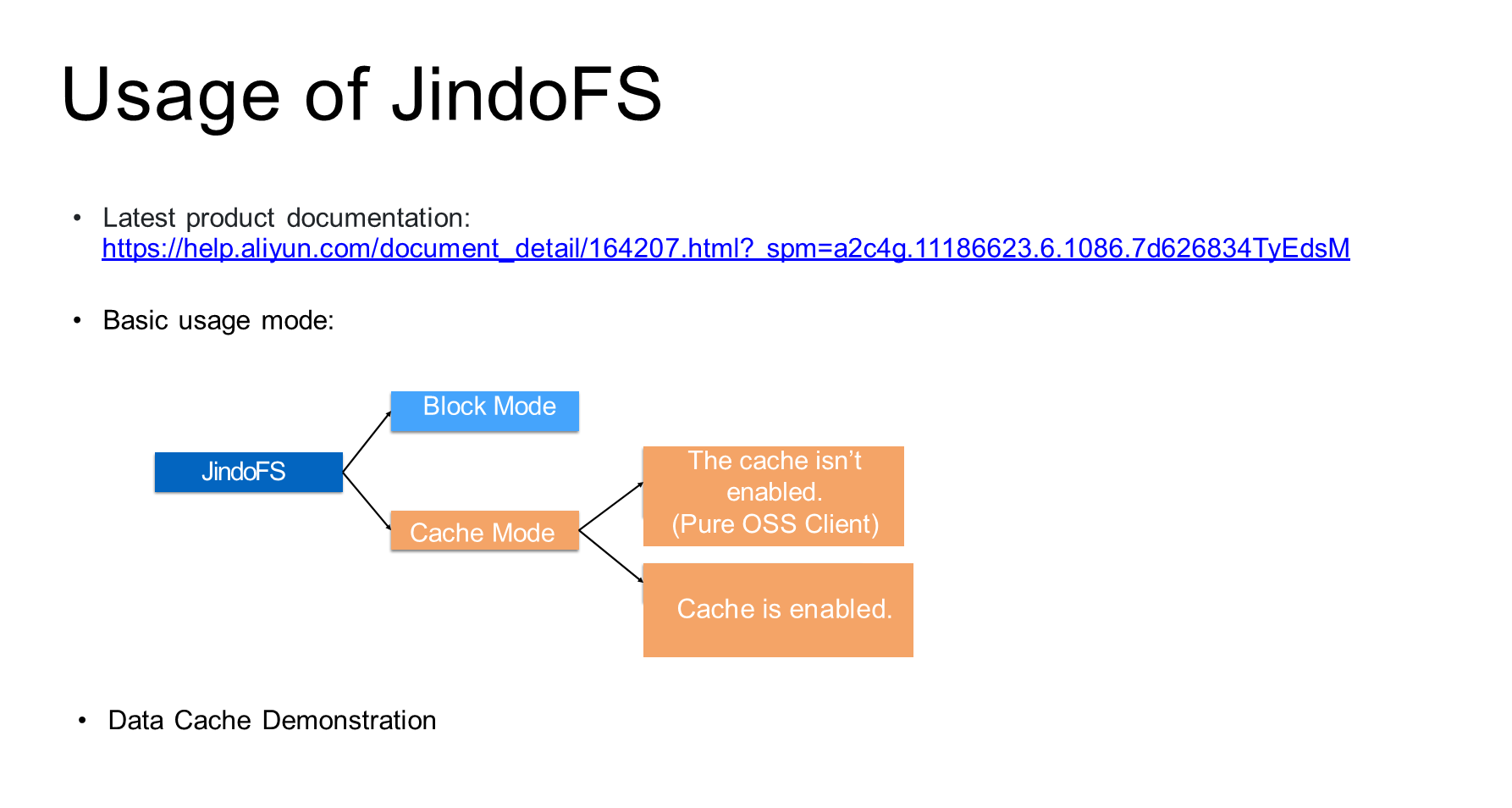

JindoFS is usually used in the following basic usage modes:

Cache mode: This mode is transparent to users. It applies to the following scenarios:

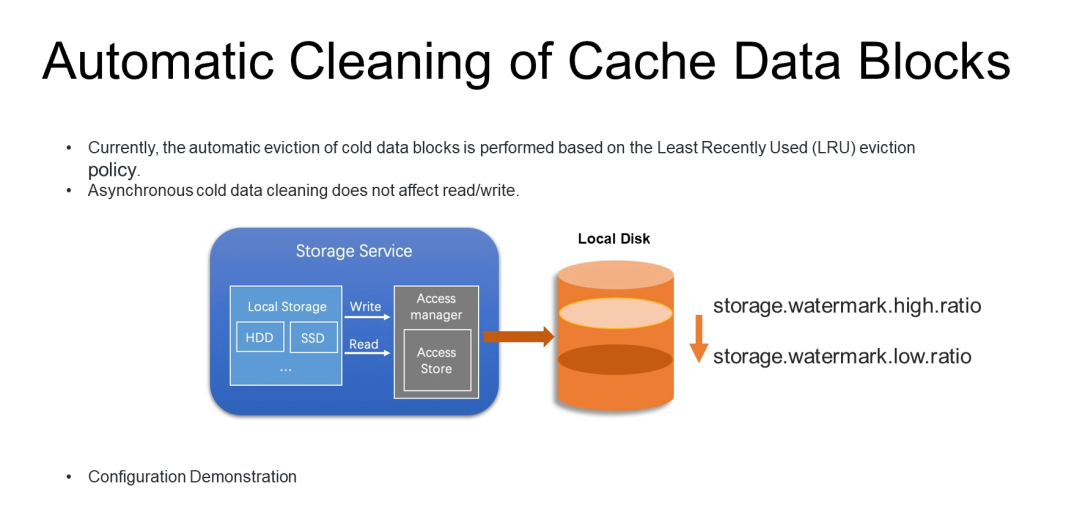

As a cache system, JindoFS uses limited local cache resources to cache the OSS backend, which has almost unlimited space. Therefore, cache data management has the following main features:

Storage Service manages data access information: all read/write operations are registered with AccessManager, and the storage.watermark.high.ratio and storage.watermark.low.ratio parameters are provided to manage cache data. When the used cache capacity on the local disks reaches the storage.watermark.high.ratio threshold, AccessManager automatically triggers the cleanup mechanism. It deletes cold data from the local disks until usage drops to the storage.watermark.low.ratio threshold, freeing disk space for hot data.

Currently, cold data blocks are evicted automatically based on the Least Recently Used (LRU) policy, and cold data is cleaned up asynchronously, so the cleanup does not affect read/write operations.
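A minimal Python sketch of watermark-triggered LRU eviction follows. WatermarkLRUCache is a hypothetical class, not JindoFS code; high_ratio and low_ratio play the roles of storage.watermark.high.ratio and storage.watermark.low.ratio. For simplicity, the cleanup runs inline when the high watermark is reached, whereas JindoFS performs it asynchronously.

```python
from collections import OrderedDict


class WatermarkLRUCache:
    """Hypothetical sketch of watermark-triggered LRU eviction.

    capacity:   total local cache space in bytes
    high_ratio: usage at or above this fraction triggers cleanup
    low_ratio:  cleanup stops once usage is at or below this fraction
    """

    def __init__(self, capacity, high_ratio, low_ratio):
        assert 0 < low_ratio < high_ratio <= 1
        self.high = high_ratio * capacity
        self.low = low_ratio * capacity
        self.blocks = OrderedDict()  # block_id -> size; first entry is least recently used
        self.used = 0

    def access(self, block_id):
        """Register a read: mark the block as most recently used."""
        if block_id in self.blocks:
            self.blocks.move_to_end(block_id)

    def put(self, block_id, size):
        """Register a write, then clean up if the high watermark is reached.

        Returns the list of evicted block IDs (empty if no cleanup ran).
        """
        if block_id in self.blocks:
            self.used -= self.blocks.pop(block_id)
        self.blocks[block_id] = size
        self.used += size
        if self.used >= self.high:
            return self._evict_to_low_watermark()
        return []

    def _evict_to_low_watermark(self):
        evicted = []
        while self.used > self.low and self.blocks:
            block_id, size = self.blocks.popitem(last=False)  # coldest block first
            self.used -= size
            evicted.append(block_id)
        return evicted


cache = WatermarkLRUCache(capacity=100, high_ratio=0.8, low_ratio=0.5)
for block_id in ("a", "b", "c"):
    cache.put(block_id, 20)   # 60 bytes used, below the high watermark
cache.access("a")             # "a" becomes the most recently used block
evicted = cache.put("d", 25)  # 85 >= 80 triggers cleanup down to <= 50
print(evicted, list(cache.blocks), cache.used)  # ['b', 'c'] ['a', 'd'] 45
```

Evicting down to the low watermark rather than just below the high one leaves headroom, so cleanup is not retriggered on every subsequent write.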

The following figure shows the cleanup process of Cache Data Blocks.

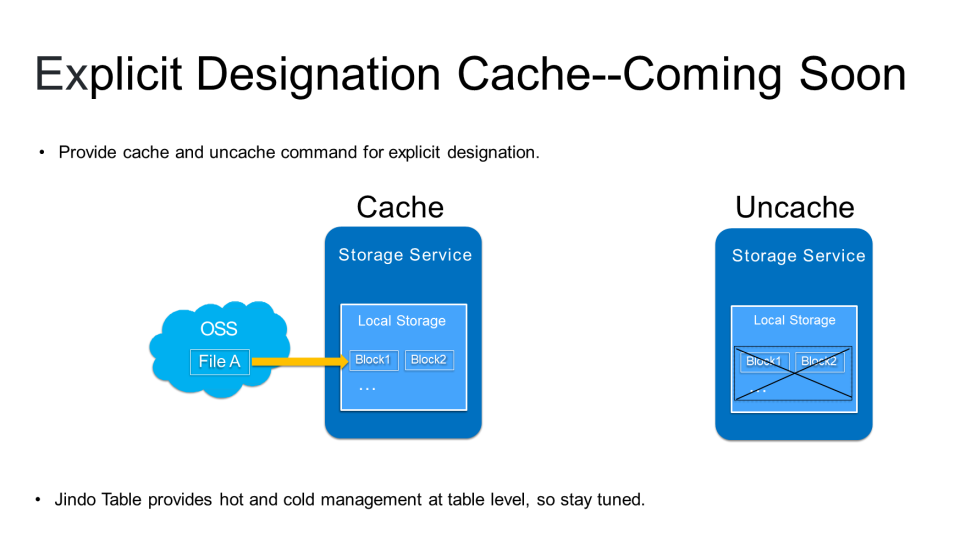

JindoFS also provides a designated cache feature, which is coming soon.

Use the cache command to explicitly cache the backend directories or files, and use the uncache command to release cold data.

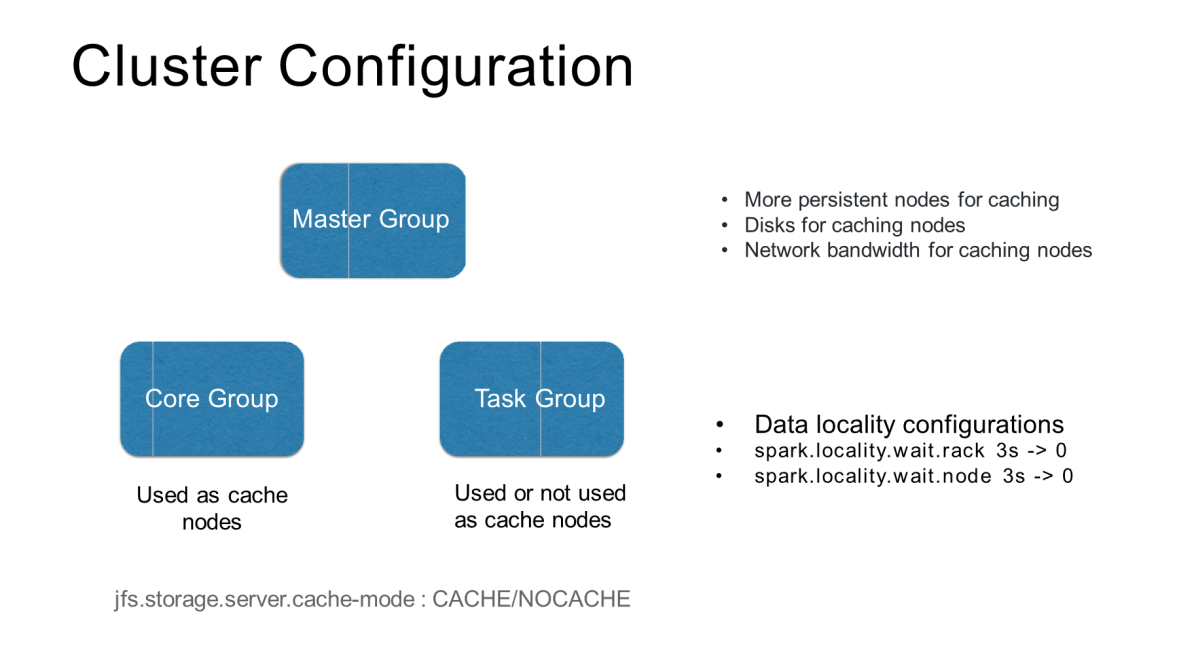

Follow the principles below to configure a cluster:

Set the following configuration item according to the network bandwidth:

jfs.storage.server.cache-mode: CACHE/NOCACHE

Configure the following items for data locality:
