This article describes how to use Databricks and MLflow to build a machine learning lifecycle management platform that covers the entire process: data preparation, model training, tracking of parameters and performance metrics, and model deployment.

By Li Jingui (Development Engineer of Alibaba Cloud Open-Source Big Data Platform)

There are three pain points in the machine learning workflow:

MLflow was created to solve these pain points in the machine learning workflow. Through a simple API, it covers the whole process: experiment parameter tracking, environment packaging, model management, and model deployment.

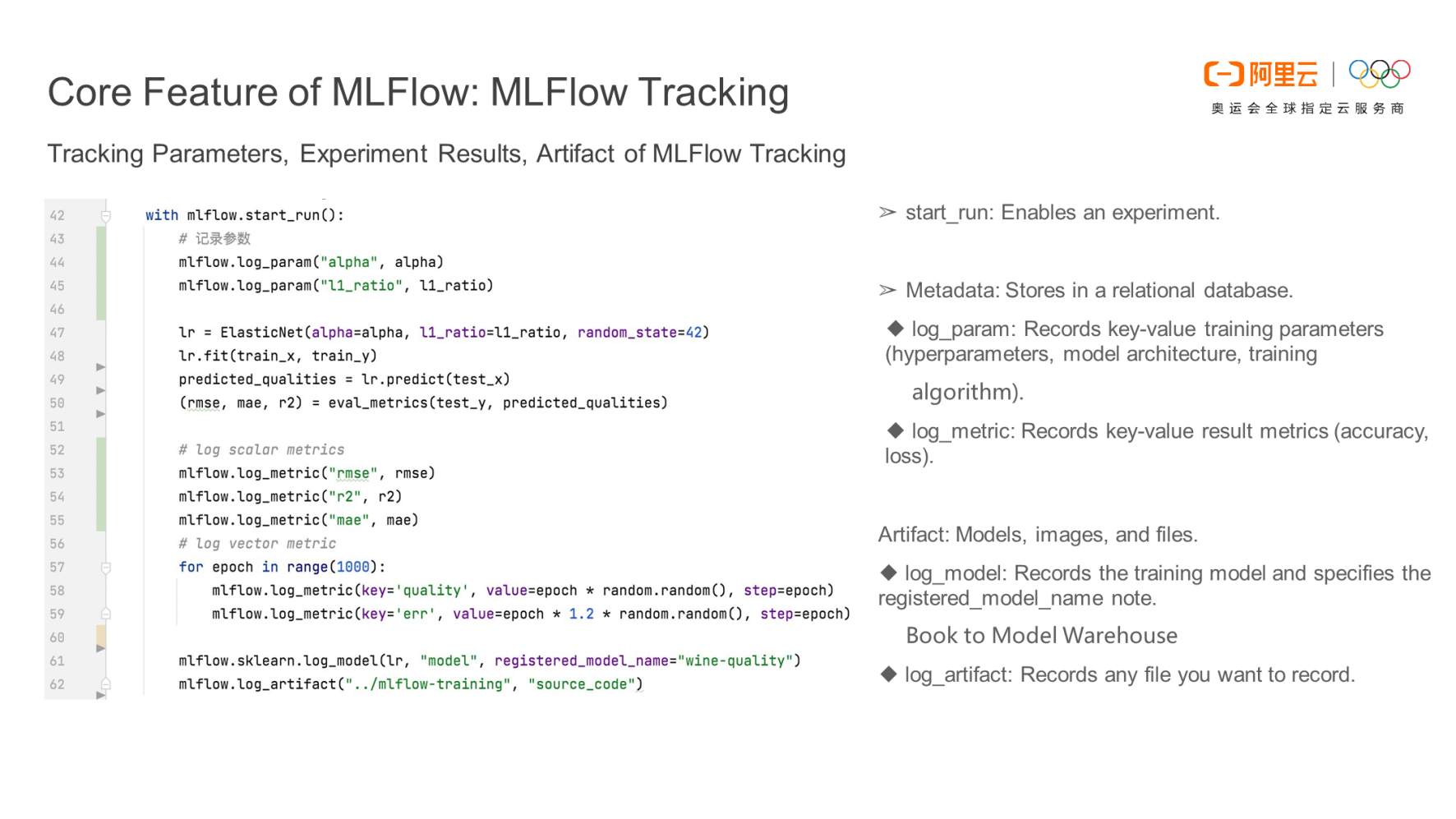

MLflow Tracking records experiment parameters, model performance metrics, and the various files associated with a model. Machine learning experiments usually require recording parameter configurations and model performance metrics, and MLflow saves users from doing this by hand. It can record parameters, metrics, and any file, including models, images, and source code.

As shown in the code on the left side of the preceding figure, an experiment run is started with MLflow's start_run. log_param records the model's parameter configuration. log_metric records the model's performance metrics, both scalar metrics and vector metrics (the same metric logged at multiple steps). log_model records the trained model, and log_artifact records any file you want to keep, such as the source code recorded in the figure.

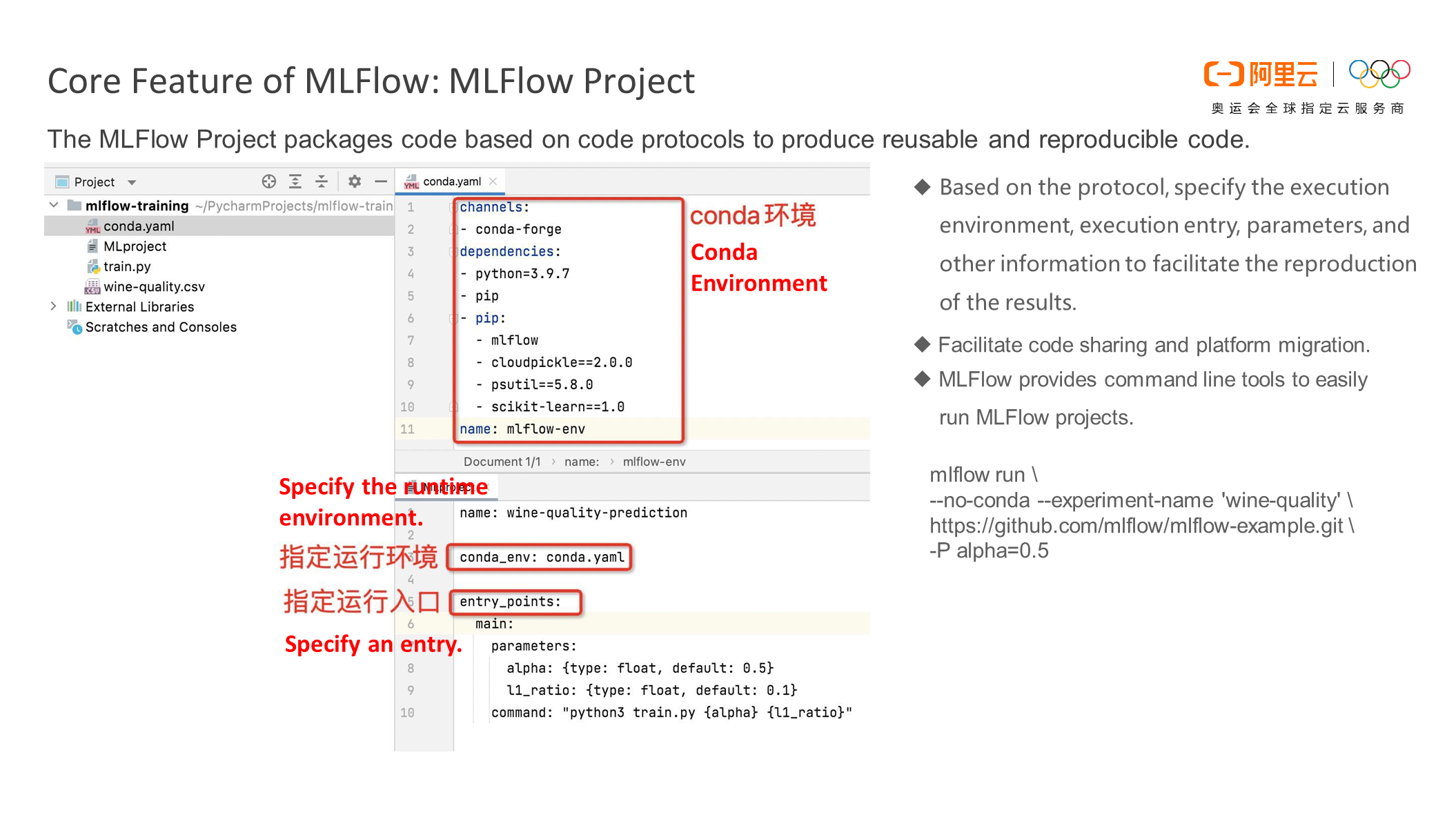

MLflow Projects packages training code according to a standard convention that specifies the execution environment, the entry point, and the parameters, so that experimental results can be reproduced. This standard packaging format also makes it easier to share code and migrate between platforms.

As shown in the preceding figure, the MLflow training project contains two important files: conda.yaml and MLproject. The conda.yaml file specifies the project's runtime environment, listing all the libraries the project depends on and their versions. The MLproject file points to conda.yaml as the runtime environment and defines the entry point, that is, how to run the project. The entry point also declares its runtime parameters, alpha and l1_ratio.
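A sketch of the two project files, written out by a small Python helper so the layout is easy to reproduce. The keys in MLproject (name, conda_env, entry_points, parameters, command) follow MLflow's MLproject format; the project name, parameter defaults, the train.py command, and the packages and versions in conda.yaml are hypothetical, not taken from the figure.

```python
import tempfile
from pathlib import Path
from typing import List

# MLproject: points to conda.yaml and declares the "main" entry point with
# its two parameters. Values and the train.py script are hypothetical.
MLPROJECT = """\
name: mlflow-training

conda_env: conda.yaml

entry_points:
  main:
    parameters:
      alpha: {type: float, default: 0.5}
      l1_ratio: {type: float, default: 0.1}
    command: "python train.py {alpha} {l1_ratio}"
"""

# conda.yaml: the libraries the project depends on (illustrative pins only).
CONDA_YAML = """\
name: mlflow-training
channels:
  - conda-forge
dependencies:
  - python=3.10
  - pip
  - pip:
      - mlflow
      - scikit-learn
"""


def write_project(project_dir: str) -> List[str]:
    """Create the two project files in project_dir and return the file names."""
    root = Path(project_dir)
    root.mkdir(parents=True, exist_ok=True)
    (root / "MLproject").write_text(MLPROJECT)
    (root / "conda.yaml").write_text(CONDA_YAML)
    return sorted(p.name for p in root.iterdir())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        print(write_project(d))
```

The {alpha} and {l1_ratio} placeholders in command are filled in from the parameter values supplied when the project is run.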

MLflow also provides a command-line tool for running MLflow projects. For example, if the project has been pushed to a Git repository, the user only needs to run the mlflow run command with the repository URL to execute the project, passing the alpha parameter with -P (for example, -P alpha=0.5).
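The command-line usage above can be wrapped in Python, for example to launch runs from a scheduler. This is a sketch that only assembles the mlflow run arguments (the project URI, -e for the entry point, and one -P name=value pair per parameter); the repository URL is hypothetical, and actually executing the command assumes the mlflow CLI and its environment manager are available.

```python
import shlex
import subprocess
from typing import Dict, List, Optional


def build_run_command(
    project_uri: str, params: Dict[str, object], entry_point: Optional[str] = None
) -> List[str]:
    """Build the argv for `mlflow run`, passing each parameter as -P name=value."""
    cmd = ["mlflow", "run", project_uri]
    if entry_point is not None:
        cmd += ["-e", entry_point]  # MLflow uses "main" when -e is omitted
    for name, value in params.items():
        cmd += ["-P", f"{name}={value}"]
    return cmd


def run_project(project_uri: str, params: Dict[str, object]) -> None:
    """Execute the project; needs the mlflow CLI on PATH."""
    subprocess.run(build_run_command(project_uri, params), check=True)


if __name__ == "__main__":
    # Hypothetical repository URL; prints the shell command without running it.
    print(shlex.join(build_run_command(
        "https://github.com/example/mlflow-training.git", {"alpha": 0.5})))
```

Building an argument list instead of a shell string avoids quoting problems when parameter values contain spaces.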

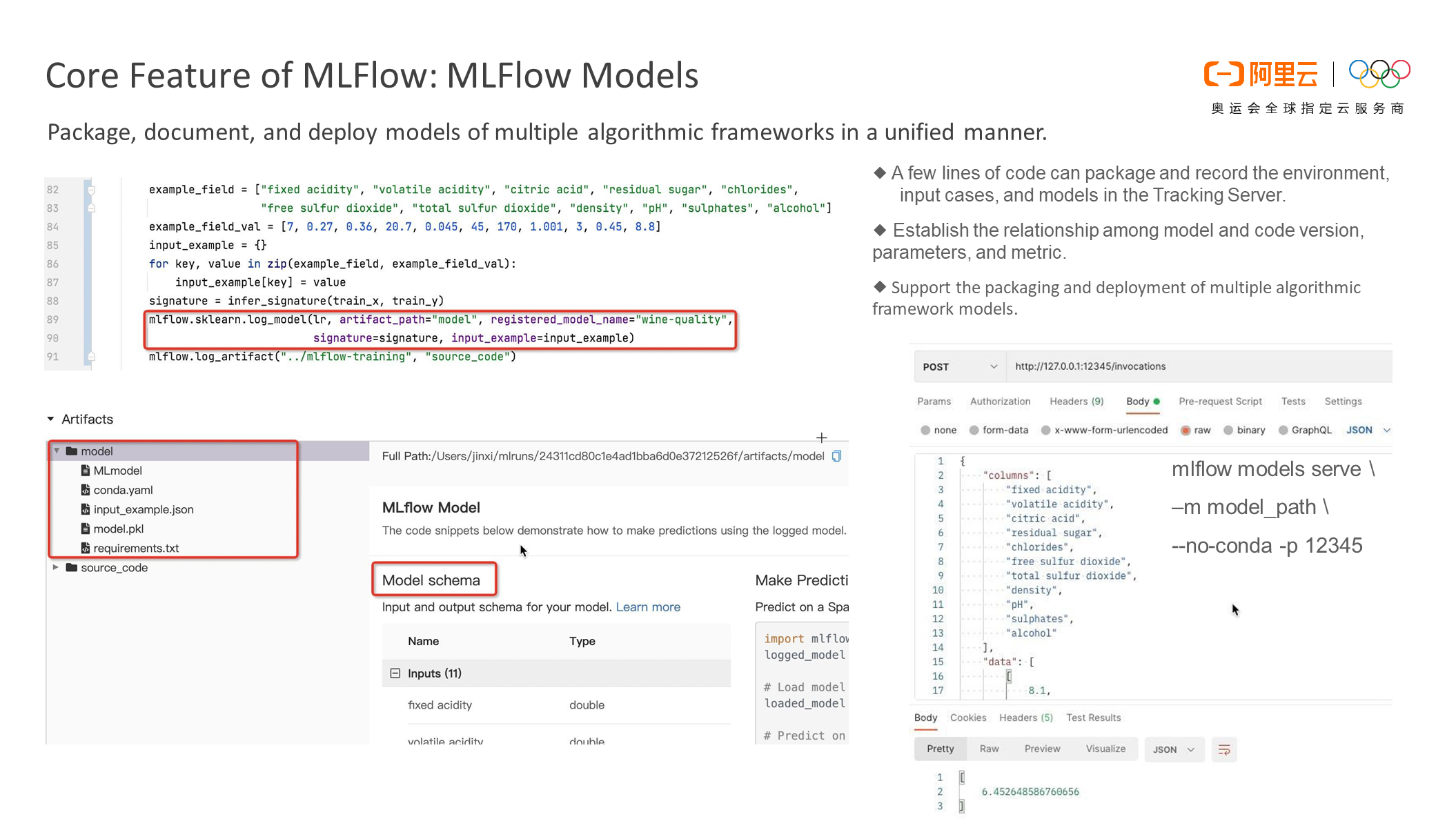

MLflow Models provides a unified way to package, record, and deploy models built with many different machine learning frameworks. After a model is trained, you can record it with MLflow's log_model. MLflow automatically stores the model in the configured storage location, such as the local file system or OSS. You can then use the MLflow web UI to see how the model relates to the code version, parameters, and metrics, as well as where the model is stored.

MLflow also provides tools to deploy models. After you deploy a model with the mlflow models serve command, you can call the model through its REST API to obtain predictions.
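A sketch of calling a served model over REST using only the Python standard library. It assumes a model was started locally with something like mlflow models serve -m runs:/&lt;run_id&gt;/model -p 5000 and an MLflow 2.x or later scoring server, which accepts JSON at /invocations in the "dataframe_split" format and replies with a "predictions" field. The column names and the URL are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict, Sequence


def build_payload(columns: Sequence[str], rows: Sequence[Sequence[float]]) -> Dict[str, Any]:
    """Wrap rows in the "dataframe_split" JSON format accepted by /invocations."""
    return {"dataframe_split": {"columns": list(columns), "data": [list(r) for r in rows]}}


def predict(payload: Dict[str, Any], url: str = "http://127.0.0.1:5000/invocations") -> Any:
    """POST the payload to a running scoring server and return the decoded JSON reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))
```

With a server running, predict(build_payload(["x1", "x2", "x3"], [[0.1, 0.2, 0.3]]))["predictions"] would return one prediction per row.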

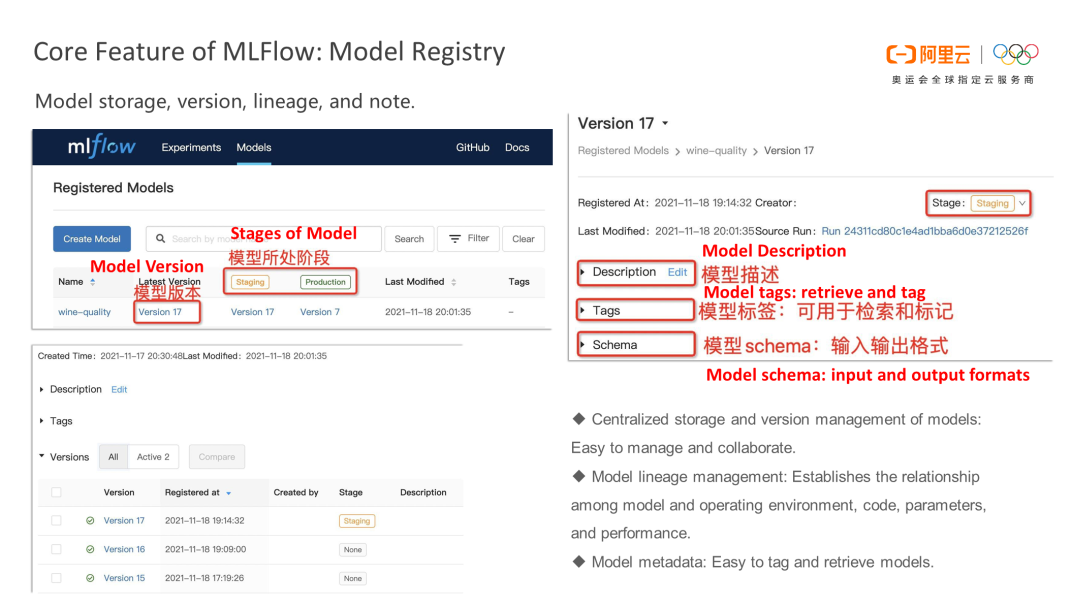

The MLflow Model Registry stores models and provides a web UI for managing them. The registry page shows each model's versions and stages. A model's detail page shows its description, tags, and schema. Tags make it possible to label models and search for them. The schema describes the format of the model's inputs and outputs. MLflow also links each model to its runtime environment, code, and parameters, which gives the model's lineage.

The four core features of MLflow address the pain points of the machine learning workflow. They can be grouped into three aspects:

Next, I will describe how to use MLflow and Databricks DataInsight (DDI) to build a platform that manages the machine learning lifecycle.

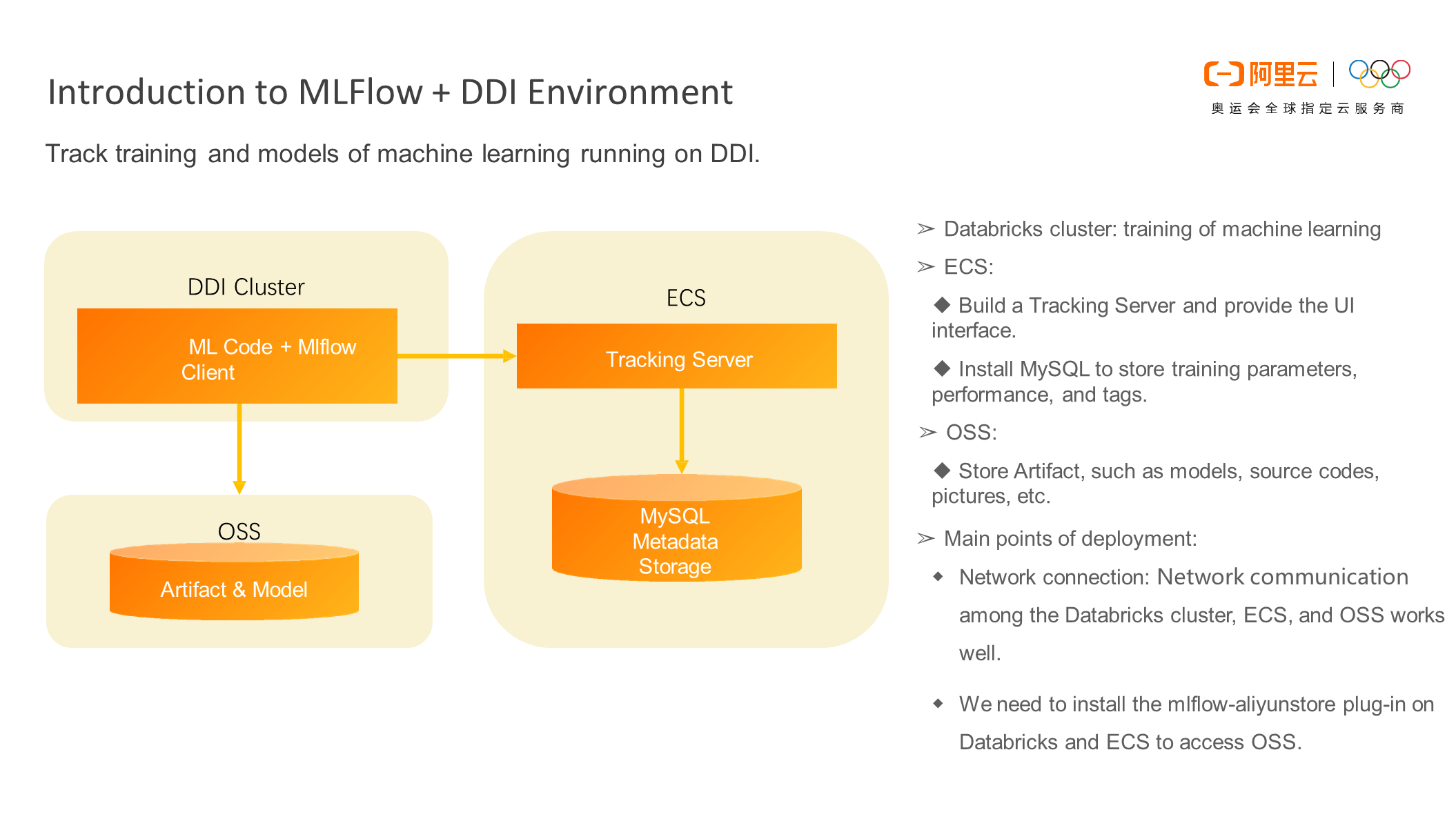

As the architecture diagram shows, the main components are DDI clusters, OSS, and ECS. The DDI cluster runs the machine learning training jobs. An ECS instance hosts the MLflow tracking server, which provides the web UI. MySQL is also installed on the ECS instance to store metadata such as training parameters, performance metrics, and tags. OSS stores the training data and the model source code.
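A sketch of how the tracking server on the ECS instance could be launched against MySQL, and how training code on the DDI cluster would point at it. The mlflow server options used (--backend-store-uri, --default-artifact-root, --host, --port) exist in MLflow; the user, password, host, and database names are hypothetical, the mysql+pymysql URI assumes the PyMySQL driver is installed on the server, and the oss:// artifact root is a placeholder that assumes an OSS artifact-store plugin is installed, since OSS is not one of MLflow's built-in artifact stores.

```python
import shlex
from typing import List
from urllib.parse import quote_plus


def build_server_command(
    mysql_user: str,
    mysql_password: str,
    mysql_host: str,
    artifact_root: str,
    database: str = "mlflow",
    mysql_port: int = 3306,
    host: str = "0.0.0.0",
    port: int = 5000,
) -> List[str]:
    """Return the argv for an MLflow tracking server that stores metadata in MySQL."""
    # SQLAlchemy-style URI; credentials are URL-escaped in case they contain @, : or /.
    backend_uri = (
        f"mysql+pymysql://{quote_plus(mysql_user)}:{quote_plus(mysql_password)}"
        f"@{mysql_host}:{mysql_port}/{database}"
    )
    return [
        "mlflow", "server",
        "--backend-store-uri", backend_uri,
        "--default-artifact-root", artifact_root,
        "--host", host,  # listen on all interfaces so DDI nodes can reach the server
        "--port", str(port),
    ]


def tracking_uri(ecs_address: str, port: int = 5000) -> str:
    """URI that training code on the DDI cluster passes to mlflow.set_tracking_uri."""
    return f"http://{ecs_address}:{port}"


if __name__ == "__main__":
    # Hypothetical values; prints the command to run on the ECS instance.
    print(shlex.join(build_server_command(
        "mlflow_user", "secret", "127.0.0.1", "oss://my-bucket/mlflow")))
```

On the DDI side, calling mlflow.set_tracking_uri(tracking_uri("&lt;ECS address&gt;")) before start_run sends runs to this server instead of a local directory.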

Please watch the demonstration video (only available in Chinese):

https://developer.aliyun.com/live/248988
