By Wang Xiaolong (Xiaolong), Alibaba Cloud Open-source Big Data Platform Technical Expert

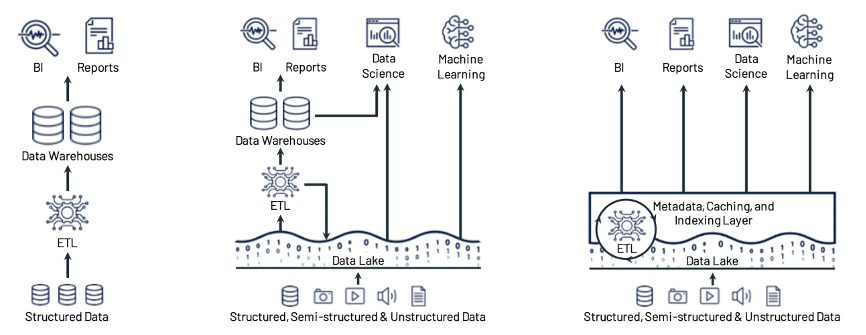

The big data platform has undergone three stages:

A. First Generation: Data Warehouse Architecture

B. Second Generation: Data Lake-Warehouse Architecture

C. Third Generation: Lakehouse Architecture

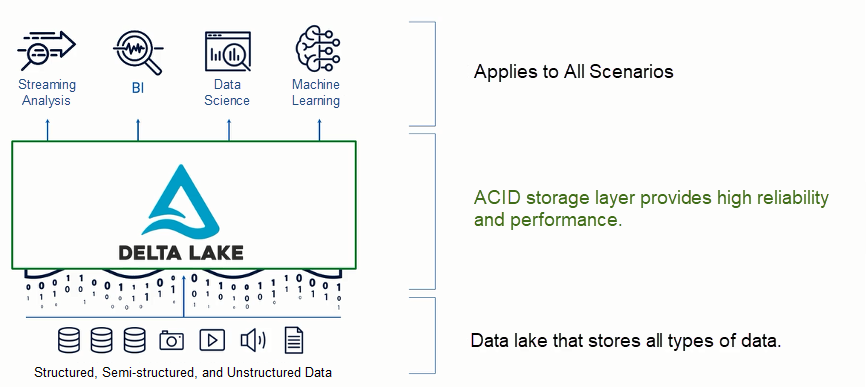

As a reliable data storage middle layer, Delta Lake provides the core support for building Lakehouse.

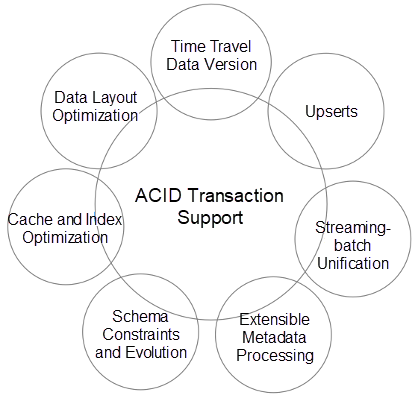

The core feature of Delta Lake is to support ACID. Based on the transaction log mechanism, it implements a serializable isolation level, provides ACID, and ensures the consistency of data read and write.

Delta Lake provides the following capabilities around ACID transaction logs:

This is an example to understand the basic syntax of Delta Lake.

Use PySpark to create a Delta Lake table and perform read and write operations.

Example version:

PySpark 3.2.1

Delta Lake 1.1.0

A. Delta Lake Starter-Start PySpark

# Using Spark Packages

./bin/pyspark --packages io.delta:delta-core_2.12:1.1.0

--conf "spark.databricks.delta.retentionDurationCheck.enabled=false"

- -conf "spark.sql.extensions=io.delta.sql.DeltaSparkSessionExtension"B. Delta Lake Starter-DML Preparation

Create a table and perform several Update/Delete/Merge operations.

Create a Delta table by PySpark Datafram API. The table name is random_num and only contains one column of numbers:

>>> data = spark.range(0, 5)

>>> data.write.format("delta").save("/tmp/delta_course/delta_table")

>>> spark.sql("CREATE TABLE random_num USING DELTA location \"{}\"”.format('/tmp/delta_course/delta_table')Next, execute several simple modification statements in the table:

>>> spark.sql("insert into random_num values(5)").show()

>>> spark.sql("update random_num set id = '10' where id = 1").show()

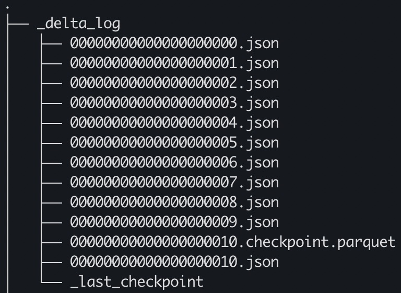

>>> spark.sql("delete from random_num where id = 3 ").show()C. Delta Lake File System Directory Structure

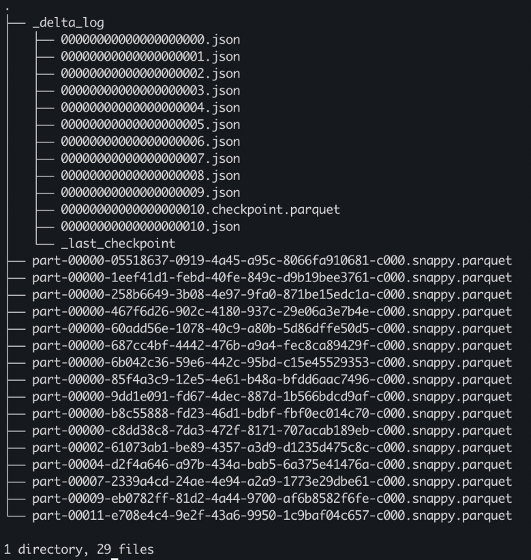

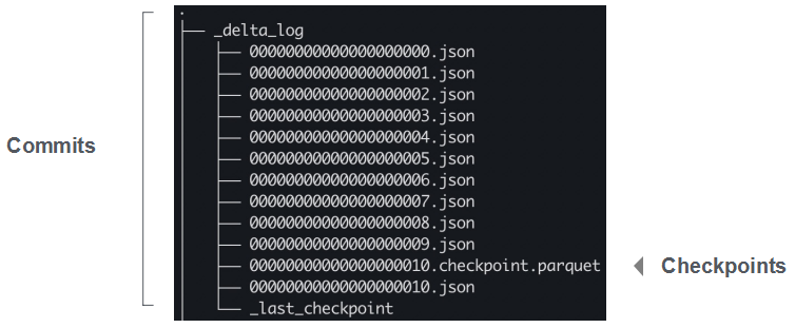

The physical storage directory of the Delta Lake table includes the table data files and Delta Logs that record table schemas and table changes.

Transaction Log (also known as Delta Log) is an ordered record set. It sequentially records all transaction operations of the Delta Lake table since its initial creation.

A. The overall design goal of Transaction Log is to realize a single information source (Single Source of Truth). It provides users with accurate data views at any time by tracking and recording all table operations of users.

B. Delta Lake is built based on Apache Spark and relies on Spark to manage and maintain transaction logs. Compared with a single database to manage metadata through Metastore, Delta Lake has highly scalable metadata processing capabilities, so it can support hundreds of petabytes of read and write access to Delta tables.

C. In addition, Delta Lake's transaction log is the basis for the implementation of other important Data Management features, such as data version backtracking (Time Travel).

A. Commit

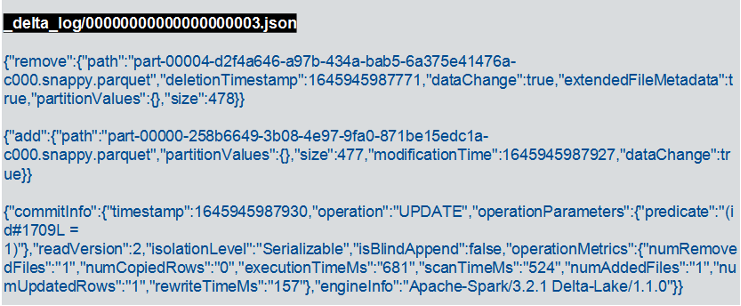

Transaction is called Commit in Delta Lake. Each Commit represents a transaction operation and a data version, which corresponds to a JSON file in the_delta_log directory.

>>> spark.sql("update random_num set id = '10' where id = 1").show()

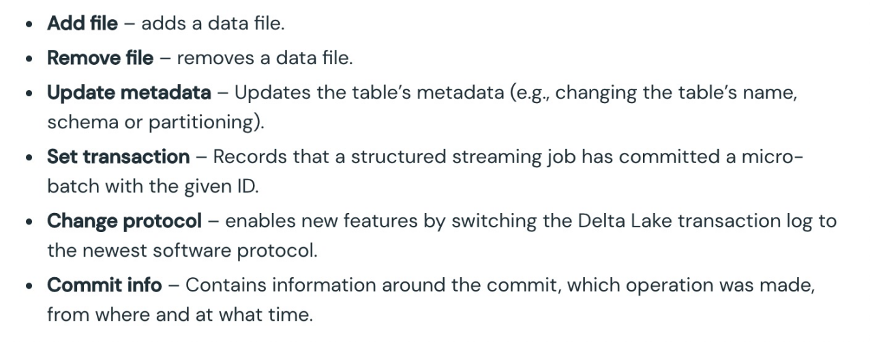

The actions defined by Delta Lake include adding and removing files, updating metadata, setting transactions, and changing protocols.

The Commit defined by Delta Lake maintains the process records of change operations. When a query statement is executed against a Delta table, you can use Spark to obtain the latest status of the table. Spark aggregates transaction logs, checks which transaction operations the logs have gone through, and builds reliable and accurate Delta table states based on the transaction logs.

Many JSON files are generated in scenarios with many change operations (such as CDC and delta log), which will affect the processing performance.

B. Checkpoint

Delta Log introduces the Checkpoint mechanism to solve the problem of small files.

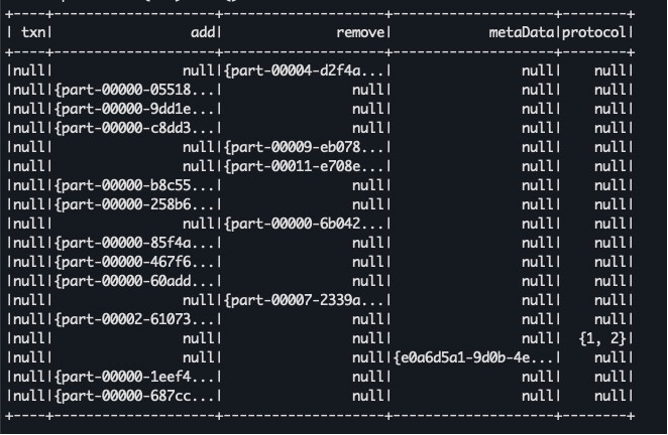

Example: View the content of a checkpoint file

>>> chkpt0 = spark.read.parquet(

"/tmp/delta_course/delta_table/_delta_log/00000000000000000010.checkpoint.parquet")

>>> chkpt0.select("*").show()

The picture includes the history of all changes from the first version to the present version.

Spark can quickly build the state of a table with checkpoints. Spark performs the ListFrom operation to view all transaction log files and go to the latest checkpoint file. Therefore, you only need to process the commits after the checkpoint.

Example: Implementation of ListForm

In this example, Spark is assumed to maintain the state in the following table in version 7. After version 7, the Delta table has been submitted several times. When you want to view the latest state of a table, Spark first obtains all change files after version 7 through the ListFrom interface. It finds that the checkpoint file associated with version 10 is the latest checkpoint. Spark only needs to build the state of the table based on version 10 and the subsequent two commits of 11 and 12, thus improving the performance of metadata operations.

Therefore, Delta Lake supports scalable metadata processing with the help of transaction logs and Spark.

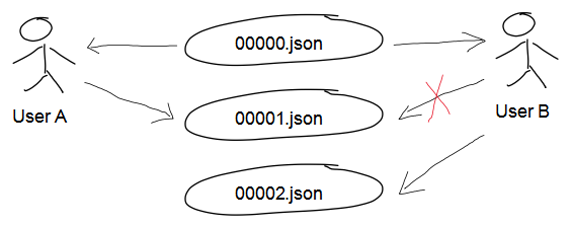

C. Optimistic Concurrency Control

Concurrency control mainly solves the isolation problem between multiple concurrent transactions in the ACID, which is how the system should determine the order among transactions if multiple transactions are triggered at the same time.

In the field of traditional databases, there are two typical implementation mechanisms: Optimistic Concurrency Control and Pessimistic Concurrency Control.

The designers of Delta Lake chose OCC and adopted mutual exclusion in the event of conflicts.

Delta Lake uses mutual exclusion when handling conflicts in concurrent transaction scenarios, including the following five steps:

Example: Concurrent write transactions-Demonstrates how the OCC protocol is implemented.

ACID transactions have four characteristics: atomicity, consistency, isolation, and durability.

A. Atomicity

As mentioned before, Transaction Log abstracts transactions into Commits. Commits contain different types of actions, but each Commit is atomic.

Martin Kleppman's definition of atomicity in the DDIA:

“ACID atomicity describes what happens if a client wants to make several writes, but a fault occurs after some of the writes have been processed. If the writes are grouped together into an atomic transaction, and the transaction cannot be completed (committed) due to a fault, then the transaction is aborted and the database must discard or undo any writes it has made so far in that transaction."

-- Martin Kleppmann - Designing Data-Intensive Applications

B. Isolation

Isolation is the way concurrent transactions are handled. Concurrent transactions should not interfere with each other. In Delta Lake, isolation is realized through OCC + mutual exclusion and serialization of reading and writing is realized.

Martin Kleppman's definition of isolation in the DDIA:

“Isolation in the sense of ACID means that concurrently executing transactions are isolated from each other: they cannot step on each other's toes."

C. Durability

Transaction Log is written to a distributed disk. After the transaction is completed, the modification of data is permanent and will not be lost even if the system fails.

Martin Kleppman's definition of durability in the DDIA:

"Durability is the promise that once a transaction has committed successfully, any data it has written will not be forgotten, even if there is a hardware fault or the database crashes."

D. Consistency

Martin Kleppman's definition of consistency in the DDIA shows that atomicity, isolation, and durability are the properties of the database. Applications may rely on the atomicity and isolation properties of the database to achieve consistency. It does not depend on the database itself, and the consistency is determined by the application.

Martin Kleppman's definition of consistency in the DDIA:

“Atomicity, isolation, and durability are properties of the database, whereas consistency (in the ACID sense) is a property of the application. The application may rely on the database's atomicity and isolation properties in order to achieve consistency, but it's not up to the database alone. Thus, the letter C doesn't really belong in ACID.”

As mentioned before, based on transaction logs, Delta Lake can implement Time Travel/Upserts and supports scalable metadata processing.

In addition, features (such as Schema constraints and evolution) are supported in the community version of Delta Lake. We will share the construction of a batch-stream unified Lakehouse architecture based on Delta Lake in the following public courses.

In addition to the community version of Delta Lake, Databricks commercial edition provides commercial versions of Spark and Delta Lake engines. It has some proprietary enterprise-level performance optimization features. We will introduce the features of the commercial version of Delta Lake in the next course.

Databricks Data Insight Open Course - An Introduction to Delta Lake (Commercial Edition)

62 posts | 7 followers

FollowAlibaba EMR - September 13, 2022

Alibaba EMR - August 22, 2022

Alibaba Cloud Community - September 30, 2022

Alibaba EMR - September 2, 2022

Alibaba EMR - May 16, 2022

Alibaba EMR - October 12, 2021

62 posts | 7 followers

Follow Data Lake Formation

Data Lake Formation

An end-to-end solution to efficiently build a secure data lake

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Data Lake Storage Solution

Data Lake Storage Solution

Build a Data Lake with Alibaba Cloud Object Storage Service (OSS) with 99.9999999999% (12 9s) availability, 99.995% SLA, and high scalability

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn MoreMore Posts by Alibaba EMR