Tablestore is a multi-model data storage service that is developed by Alibaba Cloud. Tablestore can store large volumes of structured data by using multiple models, and supports fast data query and analytics. This topic describes how to synchronize data from an ApsaraDB RDS for MySQL instance to a Tablestore instance by using Data Transmission Service (DTS). You can also follow the procedure to synchronize data from a self-managed MySQL database to a Tablestore instance. The data synchronization feature allows you to transfer and analyze data with ease.

Prerequisites

A Tablestore instance is created. For more information, see Create instances.

Precautions

During initial full data synchronization, DTS consumes the read and write resources of the source and destination databases. This may increase the loads of the database servers. Before you synchronize data, evaluate the impact of data synchronization on the performance of the source and destination databases. We recommend that you synchronize data during off-peak hours.

DTS does not synchronize data definition language (DDL) operations. If a DDL operation is performed on a table in the source database during data synchronization, perform the following steps: remove the table from the selected objects, delete the table from the Tablestore instance, and then add the table to the selected objects again. For more information, see Remove an object from a data synchronization task and Add an object to a data synchronization task.

You can synchronize up to 64 tables to the Tablestore instance. If you want to synchronize more than 64 tables to the Tablestore instance, you can disable the limit for the destination Tablestore instance.

The names of the tables and columns to be synchronized must comply with the naming conventions of the Tablestore instance.

The name of a table or an index can contain letters, digits, and underscores (_). The name must start with a letter or underscore (_).

The name of a table or an index must be 1 to 255 characters in length.
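Before you select tables, you may want to check names and the table count locally. The following Python sketch encodes the rules stated above (1 to 255 characters; letters, digits, and underscores only; first character a letter or underscore) together with the 64-table limit. The helpers `is_valid_tablestore_name` and `check_selected_tables` are hypothetical and not part of any Alibaba Cloud SDK, and "letters" is assumed to mean ASCII letters.

```python
from __future__ import annotations

import re

# Assumption: these rules mirror the table/index naming conventions listed above.
# "Letters" is interpreted as ASCII letters only.
_NAME_PATTERN = re.compile(r"[A-Za-z_][A-Za-z0-9_]{0,254}")
MAX_TABLES_PER_TASK = 64  # default limit on tables synchronized to Tablestore


def is_valid_tablestore_name(name: str) -> bool:
    """Return True if name is 1-255 chars of letters, digits, or underscores
    and starts with a letter or an underscore."""
    return _NAME_PATTERN.fullmatch(name) is not None


def check_selected_tables(table_names: list[str]) -> list[str]:
    """Return a list of problems found in a planned table selection."""
    problems = []
    if len(table_names) > MAX_TABLES_PER_TASK:
        problems.append(
            f"{len(table_names)} tables selected; the limit is {MAX_TABLES_PER_TASK}"
        )
    for name in table_names:
        if not is_valid_tablestore_name(name):
            problems.append(f"invalid name: {name!r}")
    return problems


if __name__ == "__main__":
    # Flags '2024_sales' (starts with a digit) and 'user-profile' (hyphen).
    print(check_selected_tables(["customer1", "order_items", "2024_sales", "user-profile"]))
```

Tables that fail the check can be renamed in the destination with the object name mapping feature instead of being renamed in the source database.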

Billing

| Synchronization type | Task configuration fee |
| --- | --- |
| Schema synchronization and full data synchronization | Free of charge. |
| Incremental data synchronization | Charged. For more information, see Billing overview. |

Initial synchronization types

| Initial synchronization type | Description |
| --- | --- |
| Initial schema synchronization | DTS synchronizes the schemas of tables from the source database to the destination database. Warning: MySQL and Tablestore are heterogeneous databases. DTS does not ensure that the schemas of the source and destination databases are consistent after initial schema synchronization. We recommend that you evaluate the impact of data type conversion on your business. For more information, see Data type mappings for schema synchronization. |
| Initial full data synchronization | DTS synchronizes historical data of tables from the source database to the destination database. Historical data is the basis for subsequent incremental data synchronization. |
| Initial incremental data synchronization | DTS synchronizes incremental data from the source database to the destination database in real time. The following SQL operations can be synchronized during initial incremental data synchronization: INSERT, UPDATE, and DELETE. Warning: We recommend that you do not execute DDL statements in the source database. Otherwise, data synchronization may fail. |

Before you begin

When you configure the destination database, you must specify the AccessKey pair. To protect the AccessKey pair of your Alibaba Cloud account, we recommend that you grant permissions to a RAM user and create an AccessKey pair for the RAM user.

Create a RAM user and grant the AliyunOTSFullAccess permission on Tablestore to the RAM user.

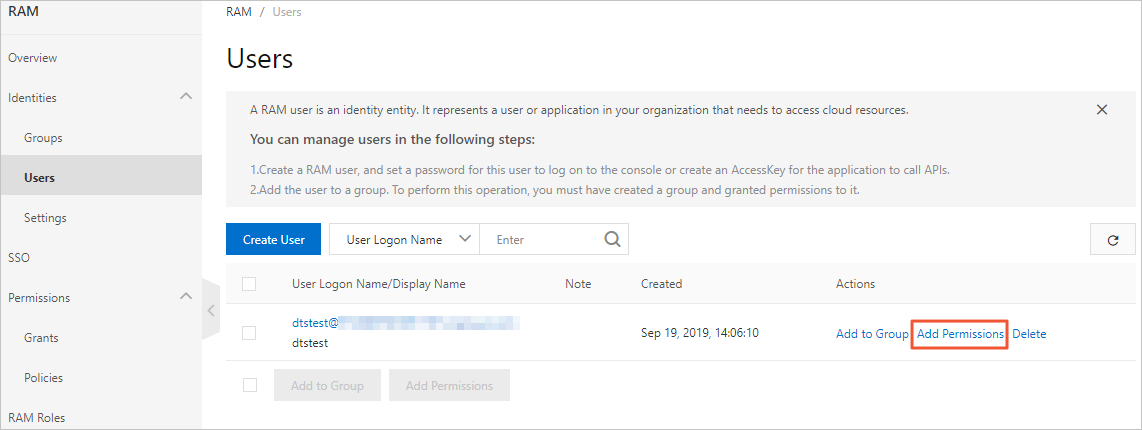

Log on to the RAM console.

In the left-side navigation pane, choose Identities > Users.

On the Users page, find the RAM user and click Add Permissions in the Actions column.

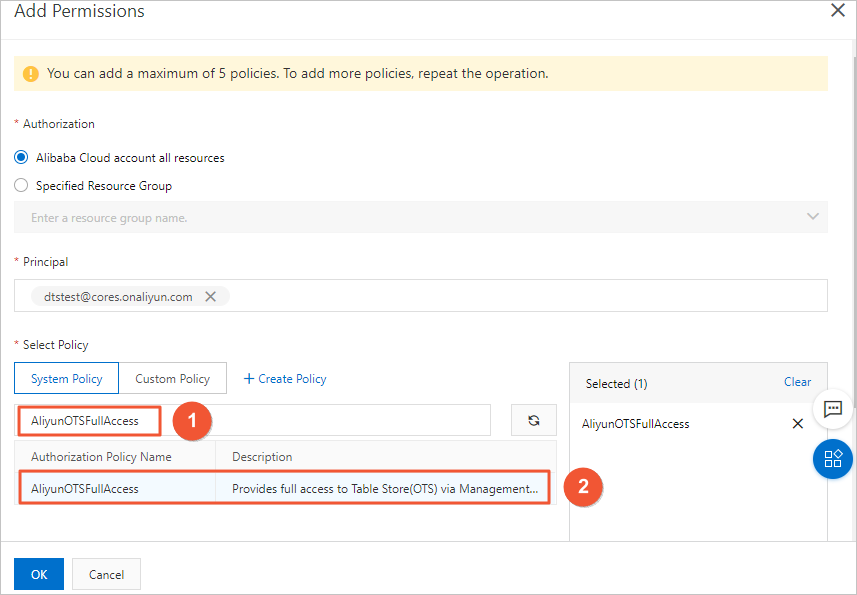

In the dialog box that appears, enter AliyunOTSFullAccess in the search box, and click the policy name to add the policy to the Selected section.

Click OK.

Click Complete.

Create an AccessKey pair for the RAM user. For more information, see Create an AccessKey pair.

Optional: If you need to configure a data synchronization task as a RAM user, you must grant the AliyunDTSDefaultRole permission to the RAM user. For more information, see Authorize DTS to access Alibaba Cloud resources.

Note: If you use an Alibaba Cloud account to configure a data synchronization task, skip this step.

Procedure

Purchase a data synchronization instance. For more information, see Purchase a DTS instance.

Note: On the buy page, set Source Instance to MySQL, set Destination Instance to Tablestore, and set Synchronization Topology to One-way Synchronization.

Log on to the Data Transmission Service (DTS) console.

In the left-side navigation pane, click Data Synchronization.

In the upper part of the Data Synchronization Tasks page, select the region in which the destination instance resides.

Find the data synchronization task and click Configure Task in the Actions column.

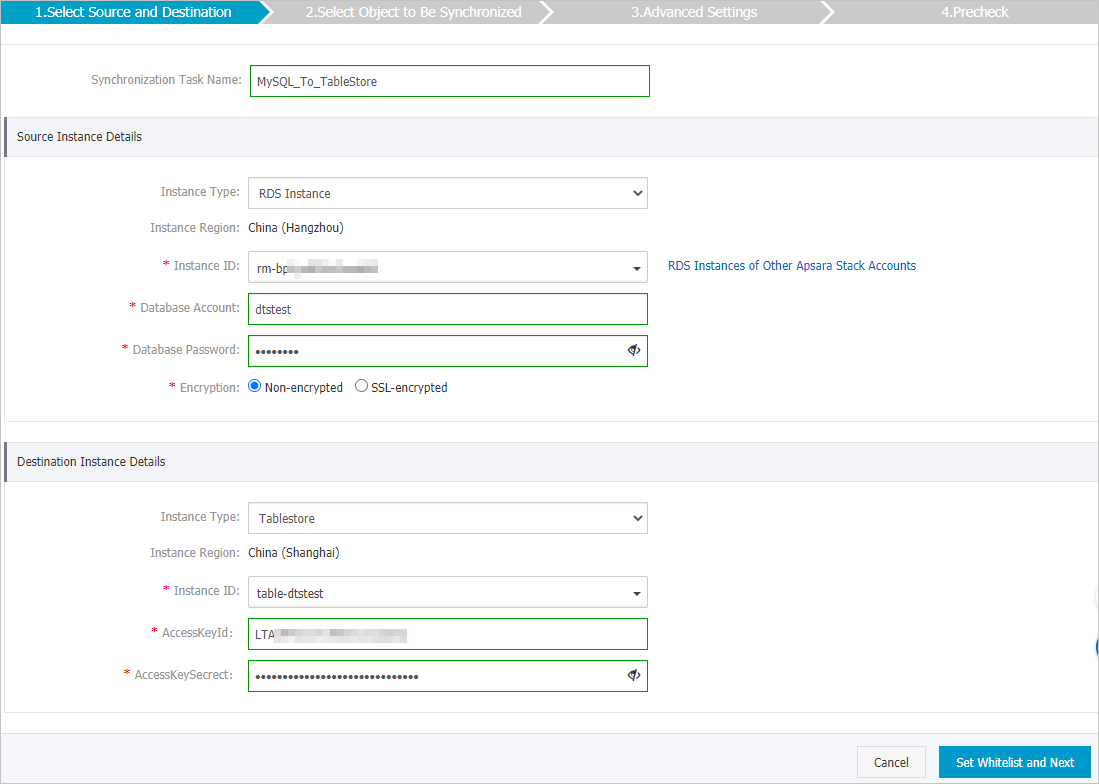

Configure the source and destination databases.

| Section | Parameter | Description |
| --- | --- | --- |
| N/A | Synchronization Task Name | The task name that DTS automatically generates. We recommend that you specify a descriptive name that makes it easy to identify the task. The task name does not need to be unique. |
| Source Instance Details | Instance Type | Select RDS Instance. |
| | Instance Region | The source region that you selected on the buy page. The value of this parameter cannot be changed. |
| | Instance ID | The ID of the source ApsaraDB RDS for MySQL instance. |
| | Database Account | The database account of the source ApsaraDB RDS instance. The account must have the SELECT permission on the objects to be synchronized and the REPLICATION CLIENT, REPLICATION SLAVE, and SHOW VIEW permissions. Note: If the database engine of the source ApsaraDB RDS instance is MySQL 5.5 or MySQL 5.6, you do not need to configure the Database Account or Database Password parameter. |
| | Database Password | The password of the database account. |
| | Encryption | Select Non-encrypted or SSL-encrypted. If you want to select SSL-encrypted, you must enable SSL encryption for the ApsaraDB RDS instance before you configure the data synchronization task. For more information, see Use a cloud certificate to enable SSL encryption. Important: The Encryption parameter is available only in regions in the Chinese mainland and the China (Hong Kong) region. |
| Destination Instance Details | Instance Type | Select Tablestore. |
| | Instance Region | The destination region that you selected on the buy page. The value of this parameter cannot be changed. |
| | Instance ID | The ID of the destination Tablestore instance. |
| | AccessKeyId | The AccessKey ID. For more information, see Create an AccessKey pair. |
| | AccessKeySecret | The AccessKey secret. |
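If you synchronize data from a self-managed MySQL database, you create the source account and grant its permissions yourself. The following Python sketch generates MySQL GRANT statements that cover the permissions listed for the Database Account parameter. The helper `build_grant_statements`, the account name `dts_sync`, and the host `%` are hypothetical. The sketch assumes that the account already exists and does not escape identifiers, so pass only trusted names.

```python
from __future__ import annotations


def build_grant_statements(account: str, host: str, databases: list[str]) -> list[str]:
    """Build MySQL GRANT statements for a DTS source account (hypothetical helper).

    Inputs are assumed to be trusted identifiers; no quoting or escaping is done.
    The account must already exist (for example, created with CREATE USER).
    """
    grantee = f"'{account}'@'{host}'"
    statements = []
    for db in databases:
        # SELECT and SHOW VIEW can be scoped to each database being synchronized.
        statements.append(f"GRANT SELECT, SHOW VIEW ON `{db}`.* TO {grantee};")
    # REPLICATION CLIENT and REPLICATION SLAVE are global privileges in MySQL,
    # so they must be granted ON *.*.
    statements.append(
        f"GRANT REPLICATION CLIENT, REPLICATION SLAVE ON *.* TO {grantee};"
    )
    return statements


if __name__ == "__main__":
    for stmt in build_grant_statements("dts_sync", "%", ["dtstestdata"]):
        print(stmt)
```

Run the printed statements with an administrative account. Restricting `host` to the DTS CIDR blocks instead of `%` narrows exposure further.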

In the lower-right corner of the page, click Set Whitelist and Next.

If the source or destination database is an Alibaba Cloud database instance, such as an ApsaraDB RDS for MySQL or ApsaraDB for MongoDB instance, DTS automatically adds the CIDR blocks of DTS servers to the IP address whitelist of the instance. If the source or destination database is a self-managed database hosted on an Elastic Compute Service (ECS) instance, DTS automatically adds the CIDR blocks of DTS servers to the security group rules of the ECS instance, and you must make sure that the ECS instance can access the database. If the self-managed database is hosted on multiple ECS instances, you must manually add the CIDR blocks of DTS servers to the security group rules of each ECS instance. If the source or destination database is a self-managed database that is deployed in a data center or provided by a third-party cloud service provider, you must manually add the CIDR blocks of DTS servers to the IP address whitelist of the database to allow DTS to access the database. For more information, see Add the CIDR blocks of DTS servers.

Warning: If the CIDR blocks of DTS servers are automatically or manually added to the whitelist of the database or instance, or to the ECS security group rules, security risks may arise. Therefore, before you use DTS to synchronize data, you must understand and acknowledge the potential risks and take preventive measures, including but not limited to the following measures: enhancing the security of your username and password, limiting the ports that are exposed, authenticating API calls, regularly checking the whitelist or ECS security group rules and forbidding unauthorized CIDR blocks, or connecting the database to DTS by using Express Connect, VPN Gateway, or Smart Access Gateway.
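One of the measures above is to check the whitelist regularly for unauthorized CIDR blocks. The following Python sketch, using only the standard `ipaddress` module, reports whitelist entries that are not contained in an approved set of blocks. The helper `find_unexpected_entries` is hypothetical, the sample ranges are placeholders (documentation and private ranges), and exporting the whitelist from the console is out of scope; substitute the DTS CIDR blocks listed in Add the CIDR blocks of DTS servers for your region.

```python
from __future__ import annotations

import ipaddress


def find_unexpected_entries(whitelist: list[str], allowed: list[str]) -> list[str]:
    """Return whitelist entries that are not inside any allowed CIDR block."""
    allowed_nets = [ipaddress.ip_network(cidr) for cidr in allowed]
    unexpected = []
    for entry in whitelist:
        # strict=False accepts entries with host bits set; a bare IP address
        # becomes a /32 (IPv4) or /128 (IPv6) network.
        net = ipaddress.ip_network(entry, strict=False)
        # subnet_of() raises TypeError across IP versions, so compare versions first.
        if not any(net.version == a.version and net.subnet_of(a) for a in allowed_nets):
            unexpected.append(entry)
    return unexpected


if __name__ == "__main__":
    # Placeholder ranges only; these are not real DTS CIDR blocks.
    allowed = ["198.51.100.0/24", "10.0.0.0/8"]
    whitelist = ["198.51.100.16/28", "10.1.2.3", "203.0.113.0/24", "0.0.0.0/0"]
    print(find_unexpected_entries(whitelist, allowed))  # ['203.0.113.0/24', '0.0.0.0/0']
```

Note that an overly broad entry such as `0.0.0.0/0` is flagged because it is a supernet, not a subnet, of the approved blocks.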

Configure the synchronization policy and the objects to be synchronized.

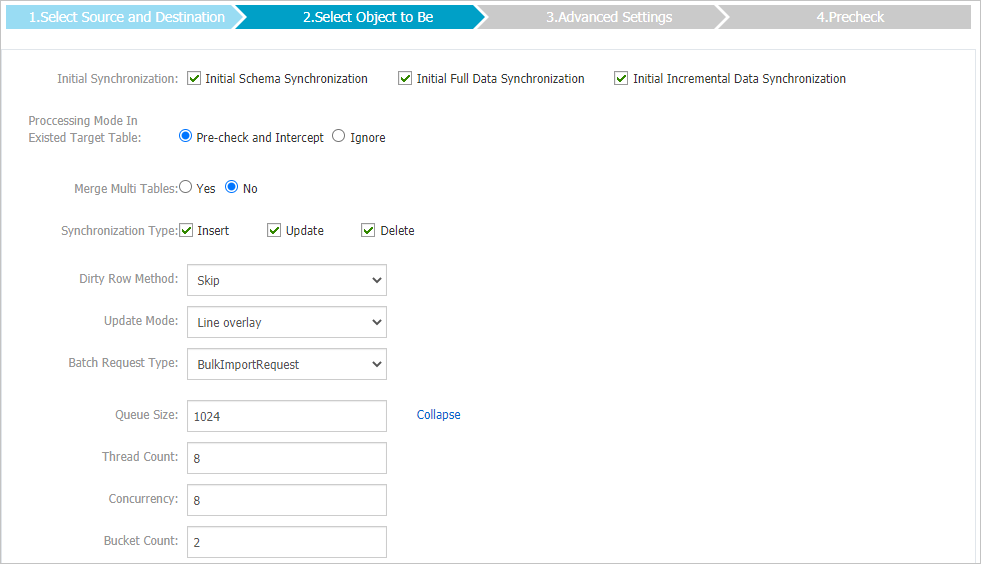

Configure the synchronization policy.

Parameter

Description

Initial Synchronization

Select Initial Schema Synchronization, Initial Full Data Synchronization, and Initial Incremental Data Synchronization. For more information, see Initial synchronization types.

Processing Mode of Conflicting Tables

Precheck and Report Errors: checks whether the destination database contains tables that have the same names as tables in the source database. If the destination database does not contain tables that have the same names as those in the source database, the precheck is passed. Otherwise, an error is returned during precheck and the data synchronization task cannot be started.

Note: You can use the object name mapping feature to rename the tables that are synchronized to the destination database. You can use this feature if the source and destination databases contain identical table names and the tables in the destination database cannot be deleted or renamed. For more information, see Rename an object to be synchronized.

Ignore Errors and Proceed: skips the precheck for identical table names in the source and destination databases.

Warning: If you select Ignore Errors and Proceed, data inconsistency may occur and your business may be exposed to potential risks.

If the source and destination databases have the same schema, DTS does not synchronize data records whose primary keys already exist in the destination database during initial full data synchronization. However, DTS synchronizes these data records during incremental data synchronization.

If the source and destination databases have different schemas, initial data synchronization may fail. In this case, only some columns are synchronized, or the data synchronization task fails.

Merge Tables

Yes: In online transaction processing (OLTP) scenarios, business tables are often sharded to improve response times. You can merge multiple source tables that have the same schema into a single destination table. This feature allows you to synchronize data from multiple tables in the source database to a single table in the Tablestore instance.

Note: DTS adds a column named `__dts_data_source` to the destination table in the Tablestore instance. This column is used to record the data source. The data type of this column is VARCHAR. DTS specifies the column values based on the following format: `<Data synchronization instance ID>:<Source database name>.<Source table name>`. Such column values allow DTS to identify each source table. For example, `dts********:dtstestdata.customer1` indicates that the source table is customer1. If you set this parameter to Yes, all selected source tables in the task are merged into the destination table. If you do not need to merge specific source tables, you can create a separate data synchronization task for these tables.

No: the default value.

Operation Types

The types of operations that you want to synchronize based on your business requirements. All operation types are selected by default.

Processing Policy of Dirty Data

The processing policy for handling data write errors:

Skip

Block

Data Write Mode

Update Row: The UpdateRowChange operation is performed to update rows in the Tablestore instance.

Overwrite Row: The PutRowChange operation is performed to overwrite rows in the Tablestore instance.

Batch Write Operation

The operation used to write multiple rows of data to the Tablestore instance.

BulkImportRequest: Data is written offline.

BatchWriteRowRequest: Data is written in batches.

To achieve higher read and write efficiency and reduce your costs of using the Tablestore instance, we recommend that you select BulkImportRequest.

More

└ Queue Size

The length of the queue for writing data to the Tablestore instance.

└ Thread Quantity

The number of callback threads for writing data to the Tablestore instance.

└ Concurrency

The maximum number of concurrent threads for the Tablestore instance.

└ Buckets

The number of concurrent buckets for incremental and sequential writes. To improve concurrent write performance, you can set this parameter to a larger value.

Note: The value must be less than or equal to the value of Concurrency.
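The choice between Update Row and Overwrite Row matters when a destination row carries columns that an incoming change does not include. The following Python sketch is a simplified in-memory model of the two semantics, assuming that Overwrite Row behaves like Tablestore PutRow (the written columns replace the whole row) and Update Row behaves like Tablestore UpdateRow (only the written columns change). It is an illustration, not how DTS or Tablestore is implemented, and the function names are hypothetical.

```python
from __future__ import annotations

# Model: a table maps a primary key tuple to a dict of attribute columns.


def overwrite_row(table: dict[tuple, dict], pk: tuple, columns: dict) -> None:
    """Overwrite Row (PutRow-style): the new columns replace the entire row."""
    table[pk] = dict(columns)


def update_row(table: dict[tuple, dict], pk: tuple, columns: dict) -> None:
    """Update Row (UpdateRow-style): only the given columns change.

    Columns that the change does not mention keep their current values,
    and in this model a missing row is created.
    """
    table.setdefault(pk, {}).update(columns)


# A destination row that carries an extra "note" column not present in the change.
dest_a = {("c1",): {"name": "Alice", "note": "vip"}}
dest_b = {("c1",): {"name": "Alice", "note": "vip"}}

change = {"name": "Alice Wang"}
overwrite_row(dest_a, ("c1",), change)
update_row(dest_b, ("c1",), change)

print(dest_a[("c1",)])  # {'name': 'Alice Wang'}
print(dest_b[("c1",)])  # {'name': 'Alice Wang', 'note': 'vip'}
```

If other applications also write columns to the destination table, Update Row preserves those columns, while Overwrite Row discards them.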

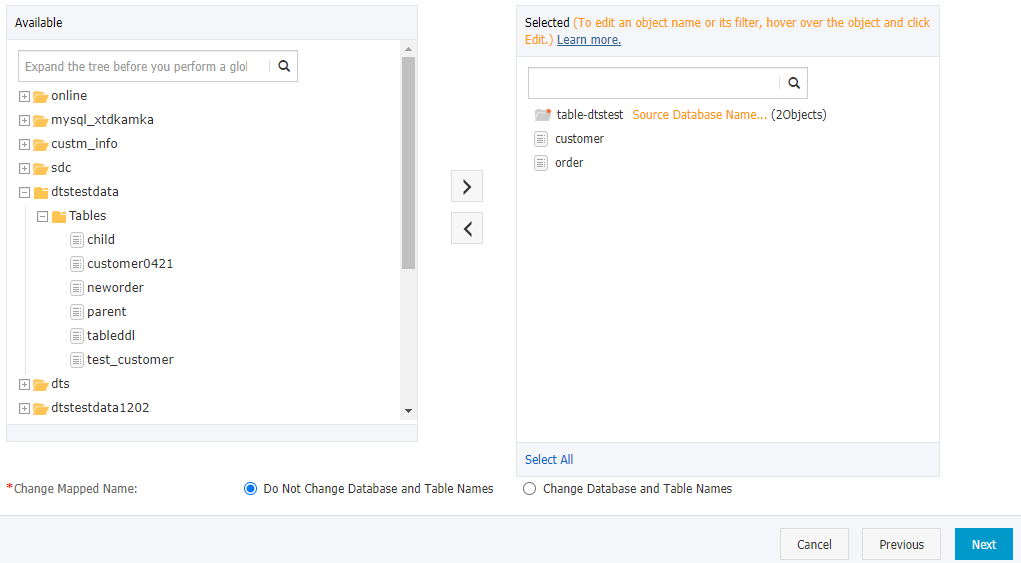

Select the objects to be synchronized.

Setting

Description

Select the objects to be synchronized

Select one or more tables from the Available section and add them to the Selected section. Up to 64 tables can be selected. By default, after an object is synchronized to the destination instance, the name of the object remains unchanged. You can use the object name mapping feature to rename the objects that are synchronized to the destination instance. For more information, see Rename an object to be synchronized.

Warning: If you set Merge Tables to Yes when you configure the synchronization policy and select multiple source tables, you must use the object name mapping feature to change their names to the same table name in the Tablestore instance. Otherwise, data inconsistency may occur.
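When tables are merged, the `__dts_data_source` column lets you tell which source table each row came from. The following Python sketch builds and parses values in the `<Data synchronization instance ID>:<Source database name>.<Source table name>` format described for the Merge Tables parameter, then filters rows by source table. The helper names and the instance ID `dtsexample01` are hypothetical, and the parser assumes that the instance ID contains no colon and the database name contains no period.

```python
from __future__ import annotations

from typing import NamedTuple


class DataSource(NamedTuple):
    instance_id: str
    database: str
    table: str


def format_data_source(instance_id: str, database: str, table: str) -> str:
    """Build a value in the <instance ID>:<database>.<table> format."""
    return f"{instance_id}:{database}.{table}"


def parse_data_source(value: str) -> DataSource:
    """Split a __dts_data_source value into its three parts."""
    instance_id, sep1, qualified = value.partition(":")
    database, sep2, table = qualified.partition(".")
    if not (sep1 and sep2 and instance_id and database and table):
        raise ValueError(f"unexpected __dts_data_source value: {value!r}")
    return DataSource(instance_id, database, table)


# Hypothetical rows read from a merged destination table.
rows = [
    {"id": 1, "__dts_data_source": "dtsexample01:dtstestdata.customer1"},
    {"id": 2, "__dts_data_source": "dtsexample01:dtstestdata.customer2"},
]
customer1_rows = [
    r for r in rows if parse_data_source(r["__dts_data_source"]).table == "customer1"
]
print(customer1_rows)  # [{'id': 1, '__dts_data_source': 'dtsexample01:dtstestdata.customer1'}]
```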

Click Next.

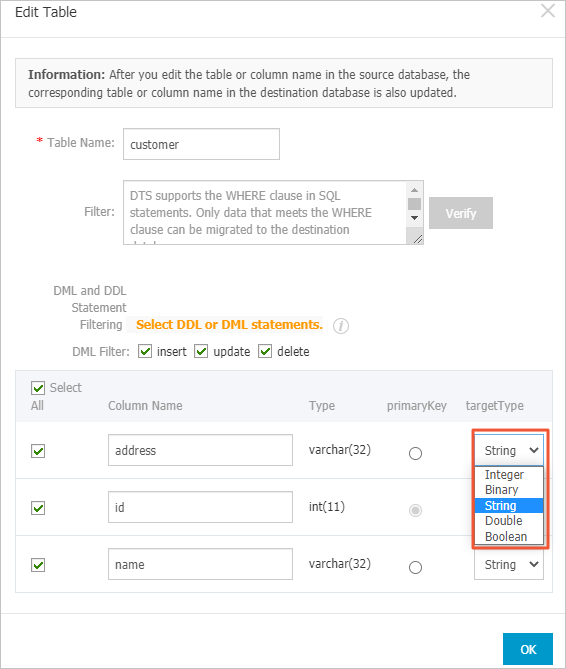

Optional: In the Selected section, move the pointer over a table, and then click Edit. In the Edit Table dialog box, set the data type of each column in the Tablestore instance.

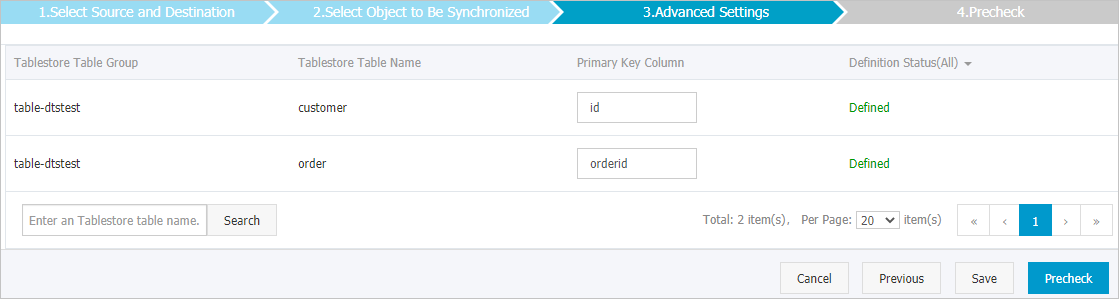

Configure the primary key columns of the tables that you want to synchronize to the Tablestore instance.

Note: For more information about primary keys of Tablestore instances, see Primary keys.
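A Tablestore table has one to four primary key columns, the first of which is the partition key, and primary key columns use the String, Integer, or Binary type. The following Python sketch checks a planned primary key configuration against those constraints before you enter it in the console. The helper `check_primary_key` and the sample column names are hypothetical; confirm the current limits in the Primary keys topic.

```python
from __future__ import annotations

# Constraints assumed from the Tablestore primary key documentation.
MAX_PK_COLUMNS = 4
ALLOWED_PK_TYPES = {"STRING", "INTEGER", "BINARY"}


def check_primary_key(pk_columns: list[tuple[str, str]],
                      source_columns: set[str]) -> list[str]:
    """Return problems with an ordered list of (column name, Tablestore type)."""
    problems = []
    if not 1 <= len(pk_columns) <= MAX_PK_COLUMNS:
        problems.append(
            f"{len(pk_columns)} primary key columns; expected 1 to {MAX_PK_COLUMNS}"
        )
    names = [name for name, _ in pk_columns]
    if len(set(names)) != len(names):
        problems.append("duplicate primary key column")
    for name, col_type in pk_columns:
        if name not in source_columns:
            problems.append(f"{name!r} is not a column of the source table")
        if col_type.upper() not in ALLOWED_PK_TYPES:
            problems.append(f"{name!r} has unsupported primary key type {col_type!r}")
    return problems


if __name__ == "__main__":
    # The first entry acts as the partition key, so a high-cardinality column
    # such as a customer ID is generally a better first choice.
    print(check_primary_key([("customer_id", "INTEGER"), ("order_id", "STRING")],
                            {"customer_id", "order_id", "amount"}))
```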

In the lower-right corner of the page, click Next: Precheck and Start Task.

Note: Before you can start the data synchronization task, DTS performs a precheck. You can start the data synchronization task only after the task passes the precheck.

If the task fails to pass the precheck, click the icon next to each failed item to view details. After you troubleshoot the issues based on the causes, run a precheck again.

Close the Precheck dialog box after the following message is displayed: Precheck Passed. Then, the data synchronization task starts.

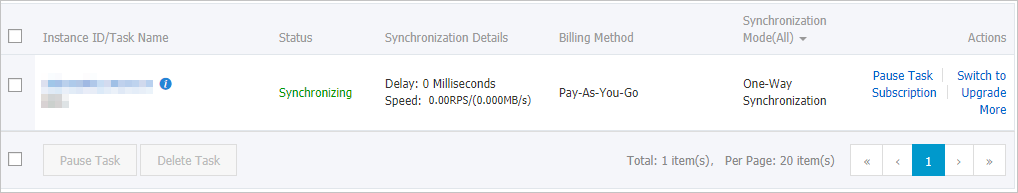

Wait until the initial synchronization is complete and the data synchronization task enters the Synchronizing state.

You can view the status of the data synchronization task on the Data Synchronization Tasks page.