This topic describes how to synchronize data from a self-managed MySQL database to a Message Queue for Apache Kafka instance by using Data Transmission Service (DTS). The data synchronization feature allows you to extend message processing capabilities.

Prerequisites

The version of the self-managed MySQL database is 5.1, 5.5, 5.6, 5.7, or 8.0.

The version of the destination Message Queue for Apache Kafka instance is 0.10.1.0 to 2.x.

In the destination Message Queue for Apache Kafka instance, a topic is created to receive the synchronized data. For more information, see Create a topic.
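You can run the version requirements above as a quick pre-flight check. The following Python code is a minimal sketch. It assumes that you already have the version strings, for example the result of `SELECT VERSION();` on the MySQL side and the version shown for the Kafka instance in the console. The helper names are hypothetical.

```python
import re

# MySQL major.minor versions listed in the prerequisites.
SUPPORTED_MYSQL = {(5, 1), (5, 5), (5, 6), (5, 7), (8, 0)}


def parse_version(text: str) -> tuple:
    """Return the leading dotted numeric part, e.g. '5.7.44-log' -> (5, 7, 44)."""
    match = re.match(r"\d+(?:\.\d+)*", text.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def mysql_supported(version: str) -> bool:
    """True if the major.minor version is one of the supported MySQL releases."""
    return parse_version(version)[:2] in SUPPORTED_MYSQL


def kafka_supported(version: str) -> bool:
    """True for versions from 0.10.1.0 up to and including any 2.x release."""
    # Pad to four components so that '0.10.1' compares equal to '0.10.1.0'.
    v = (parse_version(version) + (0, 0, 0, 0))[:4]
    return v >= (0, 10, 1, 0) and v[0] < 3
```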

Background information

ApsaraMQ for Kafka (formerly Message Queue for Apache Kafka) is a distributed, high-throughput, and scalable message queue service provided by Alibaba Cloud. It is a fully managed service based on open source Apache Kafka that resolves long-standing shortcomings of open source products. You can focus on business development instead of spending time on deployment and O&M. Message Queue for Apache Kafka is used in big data scenarios such as log collection, monitoring data aggregation, streaming data processing, and online and offline analysis, and it plays an important role in the big data ecosystem.

Usage notes

During initial full data synchronization, DTS uses read and write resources of the source and destination databases, which may increase their loads. If the database performance is poor, the specifications are low, or the data volume is large, the database services may become unavailable. For example, DTS occupies a large amount of read and write resources if a large number of slow SQL queries are performed on the source database, the tables have no primary keys, or a deadlock occurs in the destination database. Before you synchronize data, evaluate the impact on the performance of the source and destination databases. We recommend that you synchronize data during off-peak hours, for example, when the CPU utilization of the source and destination databases is less than 30%.
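To find an off-peak window that matches the 30% example, you can compare hourly CPU utilization for both sides. The following Python code is a minimal sketch. It assumes hourly averages exported from your own monitoring system. The function name and input shape are hypothetical.

```python
CPU_THRESHOLD = 30.0  # percent; the example threshold from the usage notes


def off_peak_hours(source_cpu, dest_cpu, threshold=CPU_THRESHOLD):
    """Return the hours (0-23) in which both sides stay below the threshold.

    source_cpu and dest_cpu map an hour of the day to an average CPU
    utilization percentage for the source and destination, respectively.
    """
    common = source_cpu.keys() & dest_cpu.keys()
    return sorted(
        hour for hour in common
        if source_cpu[hour] < threshold and dest_cpu[hour] < threshold
    )
```

An hour qualifies only if both the source and the destination stay below the threshold, because the full data synchronization loads both sides at the same time.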

- The tables to be synchronized must have PRIMARY KEY or UNIQUE constraints, and all fields must be unique. Otherwise, the destination may contain duplicate data records.
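Before you select objects, you can list the tables that have neither constraint type. The following Python code is a minimal sketch. It assumes that you already fetched the table names and the rows of `information_schema.TABLE_CONSTRAINTS` for the source database. The function name is hypothetical. The sketch only checks whether a constraint exists. It does not verify that the existing data is unique.

```python
# Rows of (TABLE_NAME, CONSTRAINT_TYPE) as returned by, for example:
#   SELECT TABLE_NAME, CONSTRAINT_TYPE
#   FROM information_schema.TABLE_CONSTRAINTS
#   WHERE TABLE_SCHEMA = 'your_database';
KEY_CONSTRAINTS = {"PRIMARY KEY", "UNIQUE"}


def tables_without_keys(tables, constraint_rows):
    """Return the tables that have neither a PRIMARY KEY nor a UNIQUE constraint."""
    keyed = {name for name, ctype in constraint_rows if ctype in KEY_CONSTRAINTS}
    return sorted(set(tables) - keyed)
```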

Limits

- Only tables can be selected as the objects to synchronize.

- If you rename a table during synchronization and the new table name is not included in the objects to be synchronized, DTS does not synchronize the data of the renamed table to the destination Kafka cluster. To synchronize the data of the renamed table, reselect the objects to be synchronized. For more information, see Add an object to a data synchronization task.

Before you begin

Create an account for a self-managed MySQL database and configure binary logging
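The Database Account that you specify for the source instance must have the SELECT, REPLICATION CLIENT, REPLICATION SLAVE, and SHOW VIEW permissions. The following Python code is a minimal sketch that builds the matching MySQL `GRANT` statements. The account name, host, and database are hypothetical placeholders. The code assumes that the account already exists (created with `CREATE USER`) and that the input is trusted, because it does not escape identifiers. It does not cover binary logging, which is described in the topic above.

```python
def build_dts_grants(user: str, host: str, database: str) -> list:
    """Return GRANT statements for the permissions that DTS requires."""
    account = f"'{user}'@'{host}'"
    return [
        # Object-level permissions on the database that holds the tables to synchronize.
        f"GRANT SELECT, SHOW VIEW ON `{database}`.* TO {account};",
        # Replication privileges are global in MySQL, so they are granted ON *.*.
        f"GRANT REPLICATION CLIENT, REPLICATION SLAVE ON *.* TO {account};",
    ]


for statement in build_dts_grants("dts_sync", "%", "shop"):
    print(statement)
```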

Procedure

Purchase a data synchronization instance. For more information, see Purchase a DTS instance.

Note: On the buy page, set Source Instance to MySQL, set Destination Instance to Kafka, and set Synchronization Topology to One-way Synchronization.

Log on to the DTS console.

Note: If you are redirected to the Data Management (DMS) console, click the icon that takes you to the previous version of the DTS console.

In the left-side navigation pane, click Data Synchronization.

In the upper part of the Synchronization Tasks page, select the region in which the destination instance resides.

Find the data synchronization instance and click Configure Task in the Actions column.

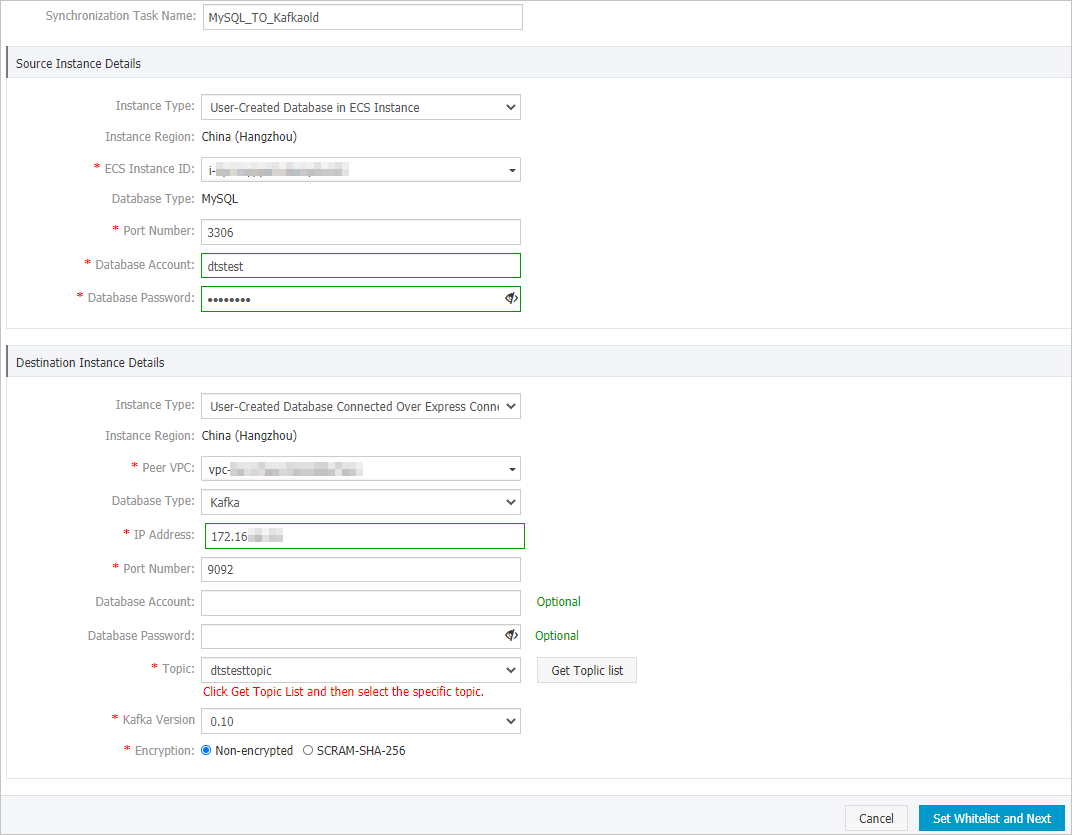

Configure the source and destination instances.

Section

Parameter

Description

None

Synchronization Task Name

DTS automatically generates a task name. We recommend that you specify an informative name to identify the task. You do not need to use a unique task name.

Source Instance Details

Instance Type

Select an instance type based on the deployment of the source database. In this example, select User-Created Database in ECS Instance. You can also follow the procedure to configure data synchronization tasks for other types of self-managed MySQL databases.

Instance Region

The source region that you selected on the buy page. You cannot change the value of this parameter.

ECS Instance ID

Select the ID of the Elastic Compute Service (ECS) instance that hosts the self-managed MySQL database.

Database Type

This parameter is set to MySQL and cannot be changed.

Port Number

Enter the service port number of the self-managed MySQL database.

Database Account

Enter the account of the self-managed MySQL database. The account must have the SELECT permission on the required objects, the REPLICATION CLIENT permission, the REPLICATION SLAVE permission, and the SHOW VIEW permission.

Database Password

Enter the password of the database account.

Destination Instance Details

Instance Type

Select User-Created Database Connected over Express Connect, VPN Gateway, or Smart Access Gateway.

NoteYou cannot select Message Queue for Apache Kafka as the instance type. You can use Message Queue for Apache Kafka as a self-managed Kafka database to configure data synchronization.

Instance Region

The destination region that you selected on the buy page. You cannot change the value of this parameter.

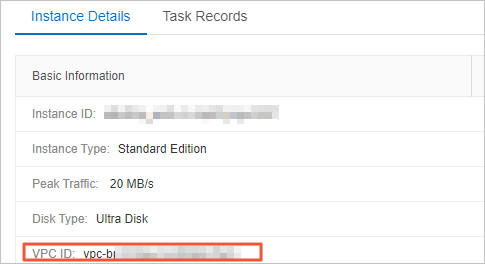

Peer VPC

Select the ID of the virtual private cloud (VPC) to which the destination Message Queue for Apache Kafka instance belongs. To obtain the VPC ID, you can log on to the Message Queue for Apache Kafka console and go to the Instance Details page of the Message Queue for Apache Kafka instance. In the Basic Information section, you can view the VPC ID.

Database Type

Select Kafka.

IP Address

Enter an IP address that is included in the Default Endpoint parameter of the Message Queue for Apache Kafka instance.

NoteTo obtain an IP address, you can log on to the Message Queue for Apache Kafka console and go to the Instance Details page of the Message Queue for Apache Kafka instance. In the Basic Information section, you can obtain an IP address from the Default Endpoint parameter.

Port Number

Enter the service port number of the Message Queue for Apache Kafka instance. The default port number is 9092.

Database Account

Enter the username that is used to log on to the Message Queue for Apache Kafka instance.

NoteIf the instance type of the Message Queue for Apache Kafka instance is VPC Instance, you do not need to specify the database account or database password.

Database Password

Enter the password of the username.

Topic

Click Get Topic List and select a topic name from the drop-down list.

Kafka Version

Select the version of the Message Queue for Apache Kafka instance.

Encryption

Select Non-encrypted or SCRAM-SHA-256 based on your business and security requirements.
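The IP Address and Port Number values for the destination both come from the Default Endpoint parameter. The following Python code is a minimal sketch that splits that value into broker addresses. It assumes that Default Endpoint is a comma-separated list of `ip:port` entries. The function name and the sample addresses are hypothetical.

```python
import ipaddress


def parse_default_endpoint(endpoint: str) -> list:
    """Split a comma-separated 'ip:port' list into (ip, port) tuples."""
    brokers = []
    for item in endpoint.split(","):
        item = item.strip()
        if not item:
            continue  # tolerate trailing commas
        host, sep, port = item.rpartition(":")
        if not sep:
            raise ValueError(f"missing port in endpoint entry: {item!r}")
        ipaddress.ip_address(host)  # raises ValueError if host is not an IP address
        brokers.append((host, int(port)))
    return brokers


brokers = parse_default_endpoint("192.168.0.10:9092,192.168.0.11:9092")
ip, port = brokers[0]  # candidates for the IP Address and Port Number fields
```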

In the lower-right corner of the page, click Set Whitelist and Next.

If the source or destination database is an Alibaba Cloud database instance, such as an ApsaraDB RDS for MySQL or ApsaraDB for MongoDB instance, DTS automatically adds the CIDR blocks of DTS servers to the IP address whitelist of the instance. If the source or destination database is a self-managed database hosted on an Elastic Compute Service (ECS) instance, DTS automatically adds the CIDR blocks of DTS servers to the security group rules of the ECS instance, and you must make sure that the ECS instance can access the database. If the self-managed database is hosted on multiple ECS instances, you must manually add the CIDR blocks of DTS servers to the security group rules of each ECS instance. If the source or destination database is a self-managed database that is deployed in a data center or provided by a third-party cloud service provider, you must manually add the CIDR blocks of DTS servers to the IP address whitelist of the database to allow DTS to access the database. For more information, see Add the CIDR blocks of DTS servers.

Warning: If the CIDR blocks of DTS servers are automatically or manually added to the whitelist of the database or instance, or to the ECS security group rules, security risks may arise. Therefore, before you use DTS to synchronize data, you must understand and acknowledge the potential risks and take preventive measures, including but not limited to the following measures: enhancing the security of your username and password, limiting the ports that are exposed, authenticating API calls, regularly checking the whitelist or ECS security group rules and forbidding unauthorized CIDR blocks, or connecting the database to DTS by using Express Connect, VPN Gateway, or Smart Access Gateway.
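One of the preventive measures is to check the whitelist regularly for unauthorized CIDR blocks. The following Python code is a minimal sketch that uses the standard `ipaddress` module to flag whitelist entries that are not covered by an approved list. The approved blocks here are documentation placeholders, not the real DTS CIDR blocks, which are listed in Add the CIDR blocks of DTS servers.

```python
import ipaddress


def unauthorized_entries(whitelist, approved):
    """Return whitelist entries that are not contained in any approved CIDR block."""
    approved_nets = [ipaddress.ip_network(cidr) for cidr in approved]
    flagged = []
    for entry in whitelist:
        # strict=False accepts host bits, and a single IP becomes a /32 (or /128).
        net = ipaddress.ip_network(entry, strict=False)
        # Compare only networks of the same IP version; subnet_of raises otherwise.
        if not any(net.version == a.version and net.subnet_of(a) for a in approved_nets):
            flagged.append(entry)
    return flagged


# Placeholder ranges from RFC 5737, used here for illustration only.
approved = ["203.0.113.0/24", "198.51.100.0/24"]
whitelist = ["203.0.113.0/25", "198.51.100.7", "0.0.0.0/0", "192.0.2.0/24"]
print(unauthorized_entries(whitelist, approved))
```

An overly broad entry such as `0.0.0.0/0` is always flagged, because it is not a subnet of any narrower approved block.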

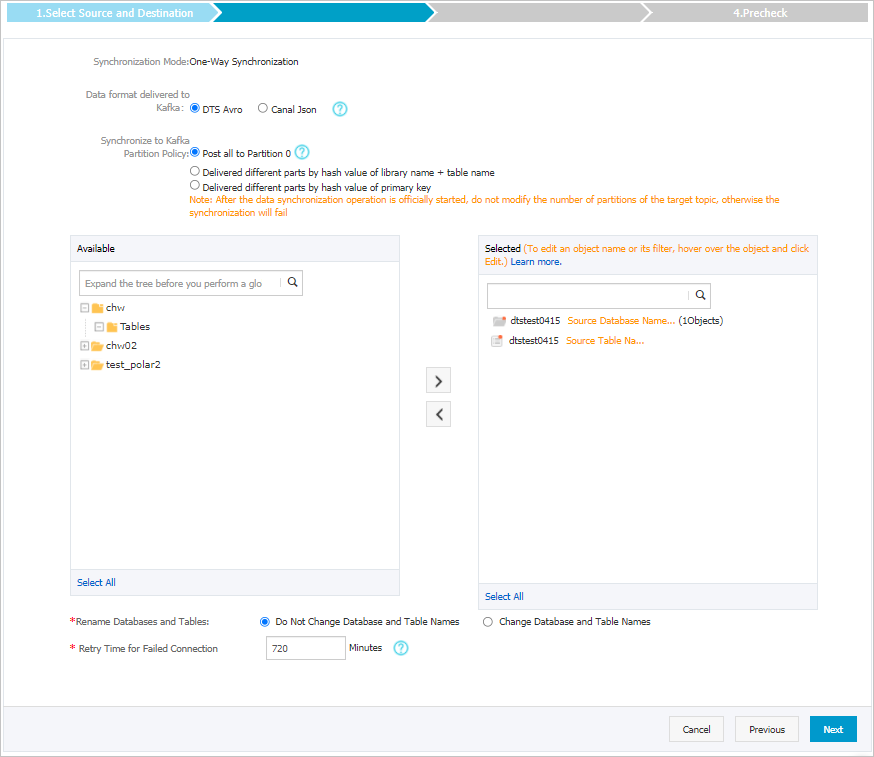

- Select the objects to be synchronized.

| Parameter | Description |
| --- | --- |
| Data Format in Kafka | The data that is synchronized to the Kafka cluster is stored in the Avro or Canal JSON format. For more information, see Data formats of a Kafka cluster. |
| Policy for Shipping Data to Kafka Partitions | The policy used to synchronize data to Kafka partitions. Select a policy based on your business requirements. For more information, see Specify the policy for synchronizing data to Kafka partitions. |
| Objects to be synchronized | Select one or more tables from the Available section and click the icon to add the tables to the Selected section. Note: DTS maps the table names to the topic that you selected when you configured the destination instance. You can use the table name mapping feature to change the destination topic to which the data is synchronized. For more information, see Rename an object to be synchronized. |
| Rename Databases and Tables | You can use the object name mapping feature to rename the objects that are synchronized to the destination instance. For more information, see Object name mapping. |
| Retry Time for Failed Connections | By default, if DTS fails to connect to the source or destination database, DTS retries within the next 720 minutes (12 hours). You can specify the retry time based on your needs. If DTS reconnects to the source and destination databases within the specified time, DTS resumes the data synchronization task. Otherwise, the data synchronization task fails. Note: You are charged for the DTS instance while DTS retries a connection. We recommend that you specify the retry time based on your business needs, and release the DTS instance at your earliest opportunity after the source and destination instances are released. |
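The partition policy determines which Kafka partition receives each change record, and Kafka preserves ordering only within a partition. The following Python code is a minimal sketch of hash-based partition routing in general. The policy names are hypothetical, and CRC-32 is a stand-in. The hash function and policy options that DTS actually uses are described in Specify the policy for synchronizing data to Kafka partitions.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a routing key to a partition index with a stable hash (CRC-32 here)."""
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(database, table, pk_values, num_partitions, policy):
    """Pick a partition for one change record under a hypothetical policy name."""
    if policy == "single":  # every record goes to partition 0
        return 0
    if policy == "by_table":  # all changes to one table share a partition
        return partition_for(f"{database}.{table}", num_partitions)
    if policy == "by_primary_key":  # all changes to one row share a partition
        pk = "|".join(str(v) for v in pk_values)
        return partition_for(f"{database}.{table}:{pk}", num_partitions)
    raise ValueError(f"unknown policy: {policy!r}")
```

Each policy trades parallelism for ordering. Routing by table keeps every change to a table in order but concentrates a busy table on one partition. Routing by primary key spreads the load but guarantees order only for changes to the same row.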

- In the lower-right corner of the page, click Next.

- Configure initial synchronization.

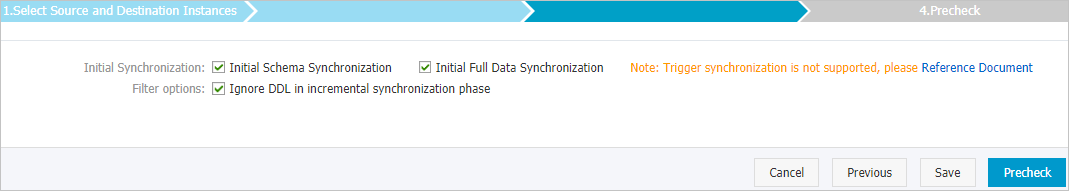

| Parameter | Description |
| --- | --- |
| Initial Synchronization | Select both Initial Schema Synchronization and Initial Full Data Synchronization. DTS synchronizes the schemas and historical data of the required objects and then synchronizes incremental data. |
| Filter options | Ignore DDL in incremental synchronization phase is selected by default. In this case, DTS does not synchronize DDL operations that are performed on the source database during incremental data synchronization. |

- In the lower-right corner of the page, click Precheck.

Note: Before you can start the data synchronization task, DTS performs a precheck. You can start the data synchronization task only after the task passes the precheck.

If the task fails to pass the precheck, click the icon next to each failed item to view details. After you troubleshoot the issues based on the details, initiate a new precheck.

If you do not need to troubleshoot the issues, ignore the failed items and initiate a new precheck.

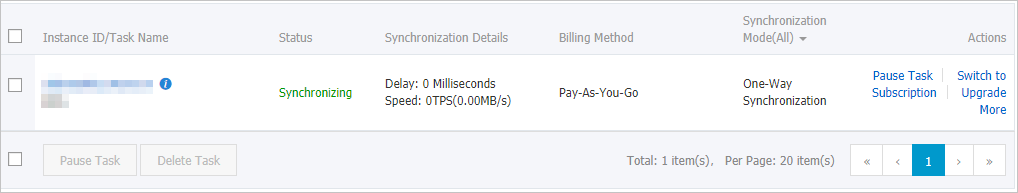

- Close the Precheck dialog box after the following message is displayed: Precheck Passed. Then, the data synchronization task starts. You can view the status of the data synchronization task on the Data Synchronization page.
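After the task starts, you can read the topic with any Kafka consumer to confirm that records arrive. If you selected the Canal JSON format, the following Python code is a minimal sketch that decodes one message value. The field names (`database`, `table`, `type`, `isDdl`, `data`) follow the open source Canal JSON layout and are an assumption here. For the exact fields that DTS writes, see Data formats of a Kafka cluster. Because Ignore DDL in incremental synchronization phase is selected by default, DDL records may never appear, so the `isDdl` check is only defensive.

```python
import json

# Illustrative Canal JSON record; the real DTS output may contain more fields.
SAMPLE = (
    '{"database": "shop", "table": "users", "type": "INSERT", "isDdl": false,'
    ' "pkNames": ["id"], "data": [{"id": "1", "name": "alice"}], "old": null,'
    ' "ts": 1700000000000}'
)


def decode_canal_json(raw: str):
    """Return (database, table, type, row) tuples, or None for a DDL record."""
    msg = json.loads(raw)
    if msg.get("isDdl"):
        return None  # schema change; handle separately if needed
    rows = msg.get("data") or []
    return [(msg["database"], msg["table"], msg["type"], row) for row in rows]


print(decode_canal_json(SAMPLE))
```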