This topic describes how to use the data transmission service to migrate data from a cloud-native multi-model Lindorm database to OBKV.

A data migration task remaining in an inactive state for a long time may fail to be resumed depending on the retention period of incremental logs. Inactive states are Failed, Stopped, and Completed. The data transmission service releases data migration tasks remaining in an inactive state for more than 3 days to reclaim related resources. We recommend that you configure alerts for data migration tasks and handle task exceptions in a timely manner.

Background

You can create a task in the ApsaraDB for OceanBase console to seamlessly migrate existing and incremental business data from a Lindorm database to OBKV through schema migration, full migration, and incremental synchronization.

Lindorm is a cloud-native multi-model integrated database designed and optimized for the Internet of Things (IoT), Internet, and Internet of Vehicles (IoV). The data transmission service supports LindormTable, a wide table engine service. For more information, see Overview.

OBKV belongs to the Standard Edition (Key-Value) series supported by ApsaraDB for OceanBase Standard Edition. It is developed based on OceanBase Database, a relational SQL database system, and fully reuses the Shared-Nothing architecture of OceanBase Database. For more information, see Product series.

Prerequisites

The data transmission service has the privilege to access cloud resources. For more information, see Grant privileges to roles for data transmission.

You have created a dedicated user in the source Lindorm database for data migration, and granted required permissions to the user. For more information, see Lindorm data source.

You have created a dedicated user in the target OBKV instance for data migration, and granted required permissions to the user. For more information, see Create a database account for an OBKV instance and grant privileges to the account.

Limitations

At present, the data transmission service supports LindormTable V2.x, and OBKV V4.2.1 and V4.2.4.

The data transmission service can migrate only the data whose `tablename` and `columnfamily` values consist of digits (0 to 9), lowercase letters (a to z), uppercase letters (A to Z), and underscores (_) from a Lindorm database to OBKV.

When you migrate data from a Lindorm database to OBKV, only HBase table objects are supported. Other types of table objects may cause data quality issues.
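Before you create a task, you can check the naming restriction above on your own side. The following Python sketch is only an illustration: the function names and the input shape (a dictionary that maps each table name to its column family names) are assumptions and are not part of the data transmission service.

```python
import re

# Allowed characters per the limitation above: digits, ASCII letters, underscores.
_ALLOWED_NAME = re.compile(r"[0-9A-Za-z_]+")


def is_migratable_name(name: str) -> bool:
    """Return True if the name uses only digits, ASCII letters, and underscores."""
    return _ALLOWED_NAME.fullmatch(name) is not None


def find_unsupported(tables: dict[str, list[str]]) -> list[str]:
    """List table names and table$columnfamily pairs that violate the rule.

    `tables` maps a table name to its column family names (hypothetical shape).
    """
    problems = []
    for table, families in tables.items():
        if not is_migratable_name(table):
            problems.append(table)
        for family in families:
            if not is_migratable_name(family):
                problems.append(f"{table}${family}")
    return problems
```

For example, `find_unsupported({"t1": ["cf", "c.f"]})` reports `t1$c.f` because the column family name contains a period.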

We recommend that you set the number of Kafka partitions to 1 when you configure incremental synchronization settings.

Considerations

We recommend that you create a data migration task between a Lindorm database and OBKV only for data migration, but not for long-term data synchronization. In the following cases, data quality issues may occur in the incremental synchronization phase and will be detected in the full verification phase.

If you update values by specifying the version, which is a timestamp, the data transmission service cannot identify the updates.

If you perform a Put operation that sets the field value of a rowkey to an empty string (""), the value may be deleted during the incremental synchronization phase.

When you migrate data from a Lindorm database to OBKV, data is pulled only in IN mode during full verification. In this mode, data that exists in the target but not in the source cannot be verified, and the verification performance is lower.

If the source or target database contains table objects that differ only in letter cases, the data migration results may not be as expected due to case insensitivity in the source or target database.
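To find table objects that differ only in letter case before migration, you can compare names case-insensitively. The following Python sketch assumes that you have already exported the table names into a list. The function name is hypothetical.

```python
from collections import defaultdict


def case_insensitive_collisions(names):
    """Group names that are identical when letter case is ignored."""
    groups = defaultdict(list)
    for name in names:
        groups[name.casefold()].append(name)
    # Keep only groups that contain more than one distinct spelling.
    return [sorted(set(group)) for group in groups.values() if len(set(group)) > 1]
```

Each returned group lists the names that would collide, so you can rename or exclude them before you select migration objects.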

If the clocks between nodes or between the client and the server are out of synchronization, the latency may be inaccurate during incremental synchronization or reverse incremental synchronization.

For example, if the clock is earlier than the standard time, the latency can be negative. If the clock is later than the standard time, the latency can be positive.
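The effect of clock skew can be illustrated with a simplified model. The sketch below assumes that latency is computed as the local time of the observing node minus the timestamp of the change. This is an assumption for illustration, not a description of how the data transmission service measures latency.

```python
def observed_latency(event_time_s: float, true_now_s: float, clock_offset_s: float) -> float:
    """Latency as seen by a node whose clock is off by clock_offset_s seconds.

    A negative clock_offset_s means the local clock is behind standard time.
    """
    local_now_s = true_now_s + clock_offset_s
    return local_now_s - event_time_s
```

With a change at second 100 and a true current time of 102, a clock that is 5 seconds behind yields `-3.0`, while a clock that is 5 seconds ahead yields `7.0` instead of the actual 2 seconds.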

If the Time To Live (TTL) values configured for the Lindorm database and OBKV are inconsistent, the data in the source and target may be inconsistent.

If you selected only Incremental Synchronization when you created a data migration task, the data transmission service requires that data in the source database be retained in Kafka for more than 48 hours.

If you selected Full Migration and Incremental Synchronization when you created the data migration task, the data transmission service requires that data in the source database be retained in Kafka for at least 7 days. Otherwise, the data transmission service cannot obtain the retained data from Kafka. As a result, the data migration task may fail, or the data in the source and target may even be inconsistent after migration.
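The two Kafka retention requirements above can be expressed as a small check. The following Python sketch uses hypothetical function names. How you read the configured retention from your Kafka topic is outside its scope.

```python
from datetime import timedelta


def required_kafka_retention(full_migration: bool, incremental_sync: bool):
    """Return the minimum retention described above, or None if it does not apply."""
    if not incremental_sync:
        # The requirement applies only when Incremental Synchronization is selected.
        return None
    if full_migration:
        return timedelta(days=7)
    return timedelta(hours=48)


def retention_is_sufficient(configured: timedelta, full_migration: bool, incremental_sync: bool) -> bool:
    """Compare a configured retention period with the documented requirement."""
    required = required_kafka_retention(full_migration, incremental_sync)
    if required is None:
        return True
    if full_migration:
        return configured >= required  # "at least 7 days"
    return configured > required  # "more than 48 hours"
```

For example, `retention_is_sufficient(timedelta(days=3), True, True)` returns `False`, which indicates that the retention period must be extended before you start the task.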

Procedure

Log on to the ApsaraDB for OceanBase console and purchase a data migration task. For more information, see Purchase a data migration task.

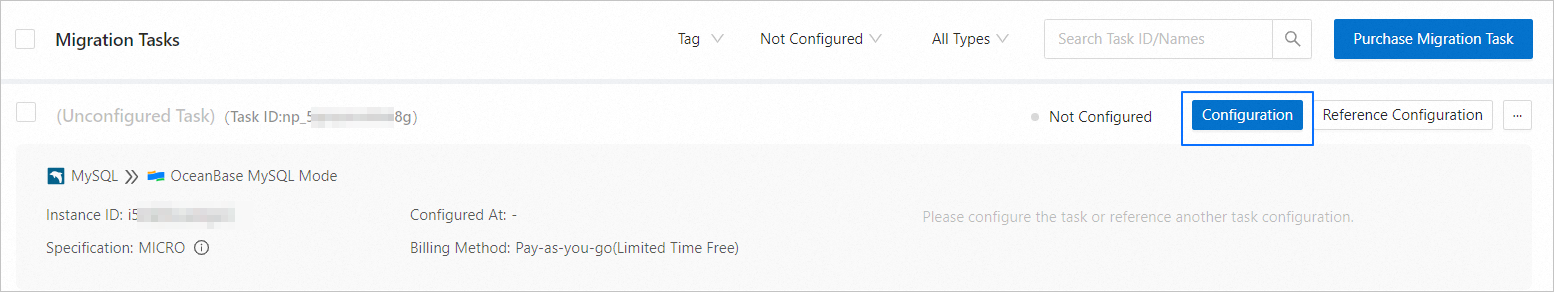

Choose Data Transmission > Data Migration. On the page that appears, click Configuration for the data migration task.

If you want to reference the configurations of an existing task, click Reference Configuration. For more information, see Reference the configuration of a data migration task.

On the Select Source and Target page, configure the parameters.

| Parameter | Description |
| --- | --- |
| Migration Task Name | We recommend that you set it to a combination of digits and letters. It must not contain any spaces and cannot exceed 64 characters in length. |
| Source | If you have created a Lindorm data source, select it from the drop-down list. Otherwise, click New Data Source in the drop-down list and create one in the dialog box that appears on the right. For more information about the parameters, see Create a Lindorm data source. |
| Target | If you have created an OBKV data source, select it from the drop-down list. Otherwise, click New Data Source in the drop-down list and create one in the dialog box that appears on the right. For more information about the parameters, see Create an OceanBase data source. |
| Tags | Select a target tag from the drop-down list. You can also click Manage Tags to create, modify, and delete tags. For more information, see Use tags to manage data migration tasks. |
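If you generate task names in scripts, the rules for Migration Task Name above can be checked in advance. The following Python sketch uses a hypothetical function name. It treats the space and length rules as requirements and the digits-and-letters rule as a recommendation.

```python
def check_task_name(name: str) -> list[str]:
    """Return the issues found in a migration task name (empty list if none)."""
    issues = []
    if " " in name:
        issues.append("must not contain spaces")
    if len(name) > 64:
        issues.append("must not exceed 64 characters")
    # Recommendation only: a combination of digits and letters.
    if not (name.isascii() and name.isalnum()):
        issues.append("recommended: use only digits and letters")
    return issues
```

For example, `check_task_name("lindorm2obkv01")` returns an empty list.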

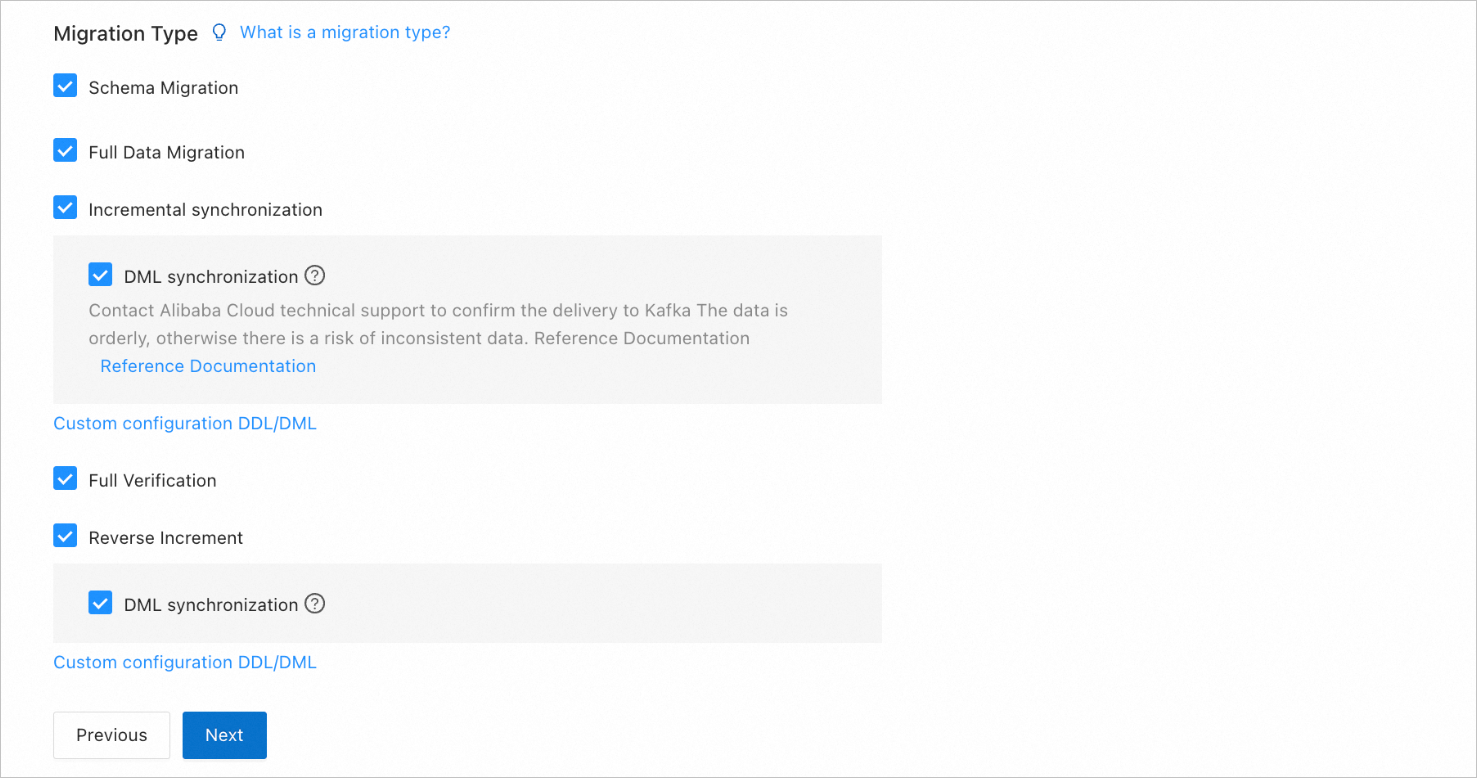

Click Next. On the Select Migration Type page, specify migration types for the current data migration task.

Supported migration types are schema migration, full migration, incremental synchronization, full verification, and reverse incremental synchronization.

| Migration type | Description |
| --- | --- |
| Schema migration | After a schema migration task is started, the data transmission service migrates the definitions of data objects, such as tables and column families, from the source database to the target database, and automatically filters out temporary tables. |
| Full migration | After a full migration task is started, the data transmission service migrates existing data from tables in the source database to corresponding tables in the target database. |
| Incremental synchronization | After an incremental synchronization task is started, the data transmission service synchronizes changed data (data that is added, modified, or removed) from the source database to corresponding tables in the target database.<br>DML Synchronization is supported for Incremental Synchronization. You can select operations as needed. For more information, see Configure DDL/DML synchronization.<br>Note: If you create a Lindorm data source without binding it to a Kafka data source, you cannot select Incremental Synchronization.<br>If you want to select DML Synchronization in Incremental Synchronization, contact Alibaba Cloud Technical Support to confirm that the data delivered to Kafka is ordered. Otherwise, the data in the source and target may be inconsistent. For more information about the configuration for delivering data from Lindorm to Kafka, see Overview. |
| Full verification | After the full migration and incremental synchronization tasks are completed, the data transmission service automatically initiates a full verification task to verify the tables in the source and target databases. |
| Reverse incremental synchronization | Data changes made in the target database after the business database switchover are synchronized to the source database in real time through reverse incremental synchronization.<br>Generally, incremental synchronization configurations are reused for reverse incremental synchronization. You can also customize the configurations for reverse incremental synchronization as needed. |

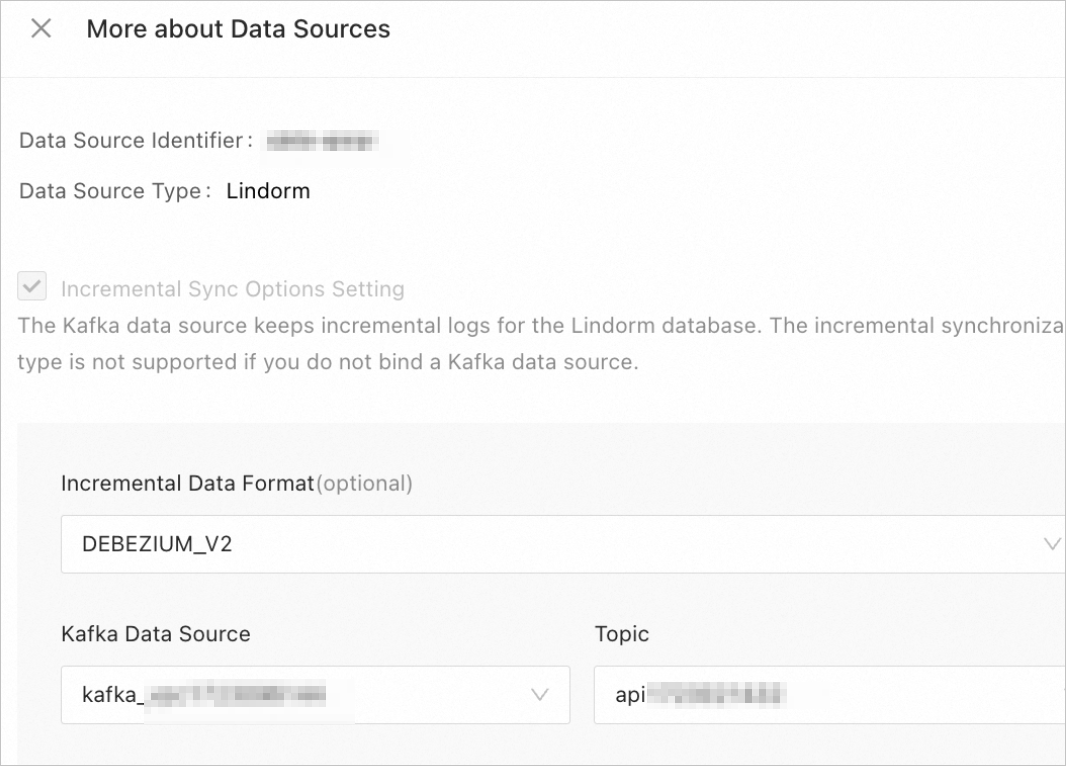

(Optional) Click Next. In the More about Data Sources dialog box, select the Kafka data source and the corresponding topic. Then, click Test Connectivity. After the test succeeds, click Save.

Note: The More about Data Sources dialog box appears during task creation only if you do not select Incremental Synchronization Settings when you create the Lindorm data source, but select Incremental Synchronization in the Select Migration Type step. For more information about the parameters, see Create a Lindorm data source.

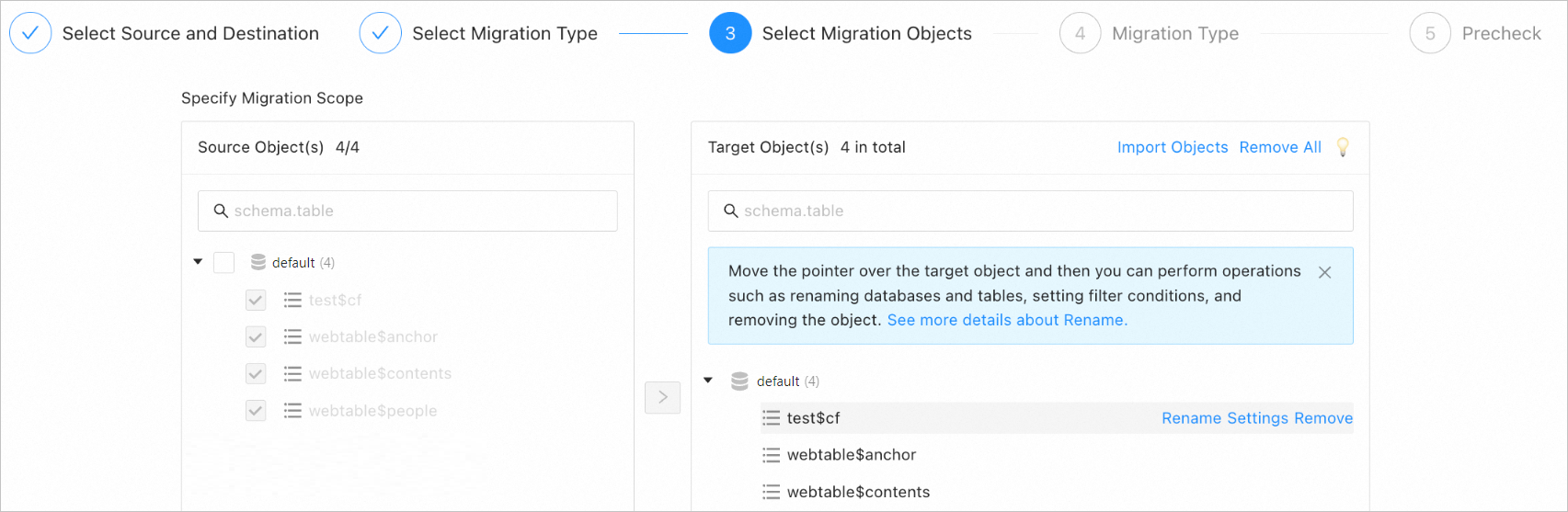

Click Next. On the Select Migration Objects page, select the migration objects of the current data migration task.

Select the object to be migrated on the left, and click > to add it to the list on the right.

The data transmission service allows you to import objects from text files, rename target objects, set partitions, and remove a single migration object or all migration objects.

Operation

Description

Import objects

In the list on the right, click Import Objects in the upper-right corner.

In the dialog box that appears, click OK.

Important: This operation will overwrite previous selections. Proceed with caution.

In the Import Objects dialog box, import the objects to be migrated.

You can import CSV files to rename databases or tables and set row filtering conditions. For more information, see Download and import the settings of migration objects.

Click Validate.

After you import the migration objects, check their validity.

After the validation succeeds, click OK.

Rename objects

The data transmission service allows you to rename migration objects. The name of a migration object is in the `tablename$columnfamily` format. For more information, see Rename a database table.

Important: When you migrate data from a Lindorm database to OBKV, you can migrate only objects in the default namespace to the default database of OBKV, and you cannot rename the default database.

Configure settings

The data transmission service allows you to set partitions only if Schema Migration is selected.

In the Partition Settings dialog box, Partition Method supports only Key Partition. You can configure the following options.

Partition Key

The default value is K and cannot be modified.

Number of partitions

You can specify a value ranging from 1 to 1024.

Use combined virtual column partition

By default, this option is not selected, which means that KEY partitioning is used without a virtual column.

If you select this option, you need to specify Virtual Column Definition. Only substring virtual columns are supported, for example, `substring(K,1,4)`.

Remove one or all objects

The data transmission service allows you to remove a single object or all migration objects that are added to the right-side list during data mapping.

Remove a single migration object

In the list on the right, move the pointer over the object that you want to remove, and click Remove to remove the migration object.

Remove all migration objects

In the list on the right, click Remove All in the upper-right corner. In the dialog box that appears, click OK to remove all migration objects.
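The object name format and the partition constraints described in the preceding operations can be sketched in Python as follows. The names `MigrationObject`, `parse_object_name`, and `validate_partition_settings` are hypothetical. The pattern for the virtual column assumes that the substring is always taken from the partition key K, as in the documented example.

```python
import re
from typing import NamedTuple, Optional


class MigrationObject(NamedTuple):
    table: str
    column_family: str


def parse_object_name(name: str) -> MigrationObject:
    """Split a name in the tablename$columnfamily format into its two parts."""
    table, sep, family = name.partition("$")
    if not sep or not table or not family or "$" in family:
        raise ValueError(f"expected tablename$columnfamily, got {name!r}")
    return MigrationObject(table, family)


# Matches substring virtual columns on K, such as substring(K,1,4) (assumed form).
_SUBSTRING_ON_K = re.compile(r"(?i:substring)\(\s*K\s*,\s*\d+\s*,\s*\d+\s*\)")


def validate_partition_settings(partitions: int, virtual_column: Optional[str] = None) -> None:
    """Raise ValueError if the settings fall outside the documented constraints."""
    if not 1 <= partitions <= 1024:
        raise ValueError("number of partitions must be between 1 and 1024")
    if virtual_column is not None and _SUBSTRING_ON_K.fullmatch(virtual_column) is None:
        raise ValueError("only substring virtual columns are supported")
```

For example, `parse_object_name("orders$cf1")` yields the table `orders` and the column family `cf1`, and `validate_partition_settings(16, "substring(K,1,4)")` passes without an error.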

Click Next. On the Migration Options page, configure the parameters.

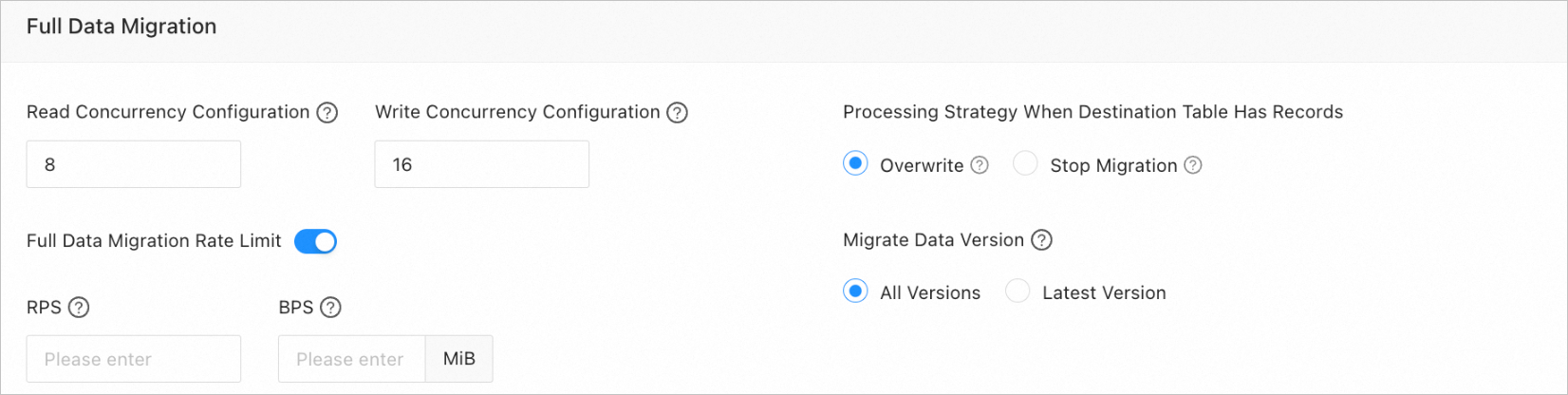

Full migration

The following table describes the parameters for full migration, which are displayed only if you have selected Full Migration on the Select Migration Type page.

| Parameter | Description |
| --- | --- |
| Read Concurrency | The concurrency for reading data from the source during full migration. The maximum value is 512. A high read concurrency may incur excessive stress on the source, affecting the business. |
| Write Concurrency | The concurrency for writing data to the target during full migration. The maximum value is 512. A high write concurrency may incur excessive stress on the target, affecting the business. |
| Full Migration Rate Limit | You can choose whether to limit the full migration rate as needed. If you choose to limit the full migration rate, you must specify the records per second (RPS) and bytes per second (BPS). The RPS specifies the maximum number of data rows migrated to the target per second during full migration, and the BPS specifies the maximum amount of data in bytes migrated to the target per second during full migration.<br>Note: The RPS and BPS values specified here are only for throttling. The actual full migration performance is subject to factors such as the settings of the source and target and the instance specifications. |
| Handle Non-empty Tables in Target Database | The processing strategy adopted when a target table contains records. This parameter is displayed only if Full Migration is selected on the Select Migration Type page. Valid values: Overwrite and Stop Migration.<br>If you select Overwrite, existing data in the target may be overwritten by data in the source. For example, if the target contains a data record with the same key and timestamp as a data record in the source, other values of this data record in the target will be replaced with those in the source.<br>If you select Stop Migration and a target table contains records, an error indicating that the migration is not supported is reported during full migration. In this case, you must process the data in the target table before continuing with the migration.<br>Important: If you click Restore in the error dialog box, the data transmission service ignores this error and continues to migrate data. Proceed with caution. |
| Migrate Data Version | Lindorm supports multi-version storage and marks data row versions by timestamps. If you select All Versions, all versions of rows are migrated during full migration. If you select Latest Version, only rows of the latest timestamps are migrated during full migration. |
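The difference between All Versions and Latest Version can be illustrated with a small Python sketch. The cell shape `(rowkey, column, timestamp, value)` and the function name are assumptions for illustration. The sketch also assumes that the latest version is determined per rowkey and column.

```python
def select_versions(cells, mode):
    """Pick the cells to migrate for mode "all" or "latest".

    cells: iterable of (rowkey, column, timestamp, value) tuples (hypothetical shape).
    """
    if mode == "all":
        return sorted(cells)
    if mode != "latest":
        raise ValueError("mode must be 'all' or 'latest'")
    latest = {}
    for rowkey, column, ts, value in cells:
        key = (rowkey, column)
        # Keep the cell with the highest timestamp for each rowkey and column.
        if key not in latest or ts > latest[key][2]:
            latest[key] = (rowkey, column, ts, value)
    return sorted(latest.values())
```

If a cell has versions at timestamps 1 and 2, the `"latest"` mode keeps only the version at timestamp 2, while the `"all"` mode keeps both.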

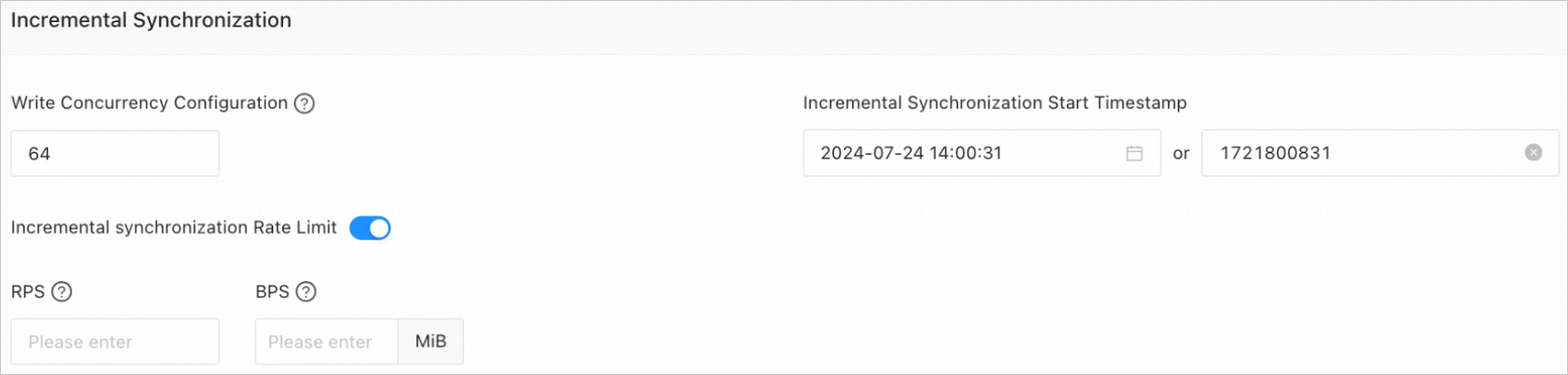

Incremental synchronization

The following table describes the parameters for incremental synchronization, which are displayed only if you have selected Incremental Synchronization on the Select Migration Type page.

| Parameter | Description |
| --- | --- |
| Write Concurrency | The concurrency for writing data to the target during incremental synchronization. The maximum value is 512. A high write concurrency may incur excessive stress on the target, affecting the business. |
| Incremental Synchronization Rate Limit | You can choose whether to limit the incremental synchronization rate as needed. If you choose to limit the incremental synchronization rate, you must specify the records per second (RPS) and bytes per second (BPS). The RPS specifies the maximum number of data rows synchronized to the target per second during incremental synchronization, and the BPS specifies the maximum amount of data in bytes synchronized to the target per second during incremental synchronization.<br>Note: The RPS and BPS values specified here are only for throttling. The actual incremental synchronization performance is subject to factors such as the settings of the source and target and the instance specifications. |
| Incremental Synchronization Start Timestamp | This parameter is not displayed if you have selected Full Migration on the Select Migration Type page.<br>If you have selected Incremental Synchronization but not Full Migration, specify a point in time after which the data is to be synchronized. The default value is the current system time. For more information, see Set an incremental synchronization timestamp. |
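To show how an RPS limit and a BPS limit act together, the following Python sketch implements a simple fixed one-second window limiter. It is a conceptual illustration only and does not describe how the data transmission service throttles traffic. The class and method names are hypothetical.

```python
class RateLimiter:
    """Fixed one-second window limiter on both records and bytes (illustrative)."""

    def __init__(self, rps: int, bps: int):
        self.rps = rps
        self.bps = bps
        self._window = None
        self._records = 0
        self._bytes = 0

    def try_send(self, now_s: float, size_bytes: int) -> bool:
        """Return True if one record of size_bytes fits in the current window."""
        window = int(now_s)
        if window != self._window:
            # A new one-second window starts: reset both counters.
            self._window, self._records, self._bytes = window, 0, 0
        if self._records + 1 > self.rps or self._bytes + size_bytes > self.bps:
            return False
        self._records += 1
        self._bytes += size_bytes
        return True
```

A record is accepted only if it stays within both limits, so whichever limit is reached first caps the throughput for that second.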

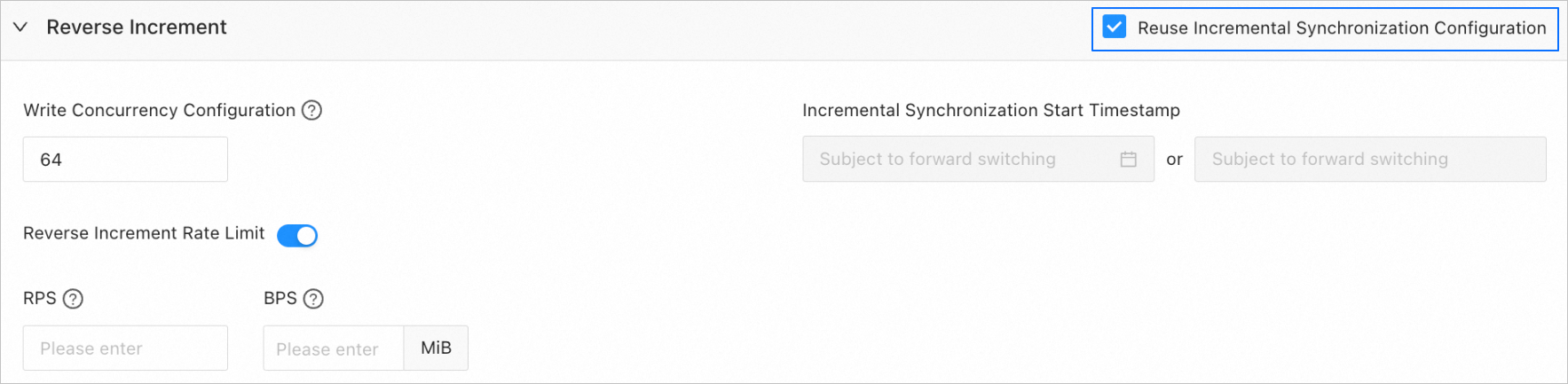

Reverse increment

The following table describes the parameters for reverse increment, which are displayed only if you have selected Reverse Increment on the Select Migration Type page. By default, incremental synchronization configurations are reused for reverse increment.

You can choose not to reuse the incremental synchronization configurations and configure reverse increment as needed.

| Parameter | Description |
| --- | --- |
| Write Concurrency | The concurrency for writing data to the source during reverse increment. The maximum value is 512. A high concurrency may incur excessive stress on the source, affecting the business. |
| Reverse Increment Rate Limit | You can choose whether to limit the reverse increment rate as needed. If you choose to limit the reverse increment rate, you must specify the records per second (RPS) and bytes per second (BPS). The RPS specifies the maximum number of data rows synchronized to the source per second during reverse increment, and the BPS specifies the maximum amount of data in bytes synchronized to the source per second during reverse increment.<br>Note: The RPS and BPS values specified here are only for throttling. The actual reverse increment performance is subject to factors such as the settings of the source and target and the instance specifications. |
| Incremental Synchronization Start Timestamp | This parameter is not displayed if you have selected Full Migration on the Select Migration Type page.<br>If you have selected Incremental Synchronization but not Full Migration, the forward switchover start timestamp (if any) is used by default. This parameter cannot be modified. |

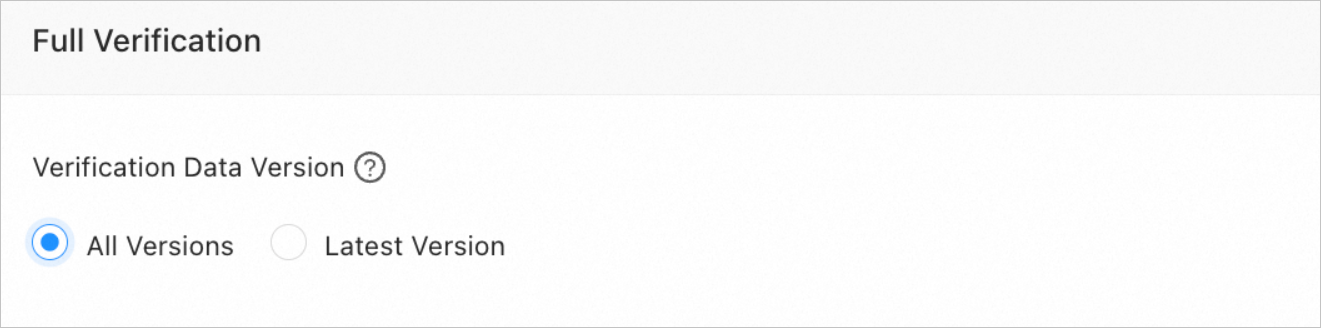

Full verification

This section is displayed only if you have selected Full Verification on the Select Migration Type page.

Lindorm supports multi-version storage and marks data row versions by timestamps. If you select All Versions for Verification Data Version, all data row versions are verified during full verification. If you select Latest Version for Verification Data Version, only data rows of the latest timestamp are verified during full verification.

Click Precheck to start a precheck on the data migration task.

During the precheck, the data transmission service checks the read and write privileges of the database users and the network connections of the databases. A data migration task can be started only after it passes all check items. If an error is returned during the precheck, you can perform the following operations:

Identify and troubleshoot the problem and then perform the precheck again.

Click Skip in the Actions column of the failed precheck item. In the dialog box that describes the consequences of the operation, click OK.

After the precheck is passed, click Start Task.

If you do not need to start the task now, click Save. You can start the task later on the Migration Tasks page or by performing batch operations. For more information about batch operations, see Perform batch operations on data migration tasks. After the data migration task is started, it is executed based on the selected migration types. For more information, see View migration details.

The data transmission service allows you to remove migration objects during data migration. For more information, see Remove migration objects.

Important: You cannot add migration objects while a task that migrates data from a Lindorm database to OBKV is running.