By Shuangkun Tian

Argo Workflows is an open source workflow engine for automating complex workflow orchestration on Kubernetes. You can use Argo Workflows to create a collection of tasks and configure the execution sequence and dependencies for the tasks. This helps you efficiently create and manage custom automated workflows.

Argo Workflows is widely used in scenarios such as scheduled tasks, machine learning, simulation, scientific computing, extract, transform, load (ETL) tasks, model training, and continuous integration/continuous delivery (CI/CD) pipelines.

Argo Workflows uses YAML files to configure workflows for clarity and simplicity. However, YAML relies on strict indentation to express hierarchy. For users who are new to or unfamiliar with the syntax, this can mean a steep learning curve and complicated, error-prone configuration steps.

Hera is an Argo Workflows SDK for Python intended for workflow creation and submission based on Argo Workflows. Hera aims to simplify the procedures of creating and submitting workflows and is suitable for data scientists who are familiar with Python rather than YAML. Hera provides the following advantages:

1) Simplicity: Hera provides intuitive and easy-to-use code to greatly improve development efficiency.

2) Support for complex workflows: Hera helps eliminate YAML syntax errors in complex workflow orchestration.

3) Integration with the Python ecosystem: Each template can be defined as a Python function, so Hera works with existing Python libraries and frameworks.

4) Testability: Hera supports Python testing frameworks to help improve code quality and maintainability.

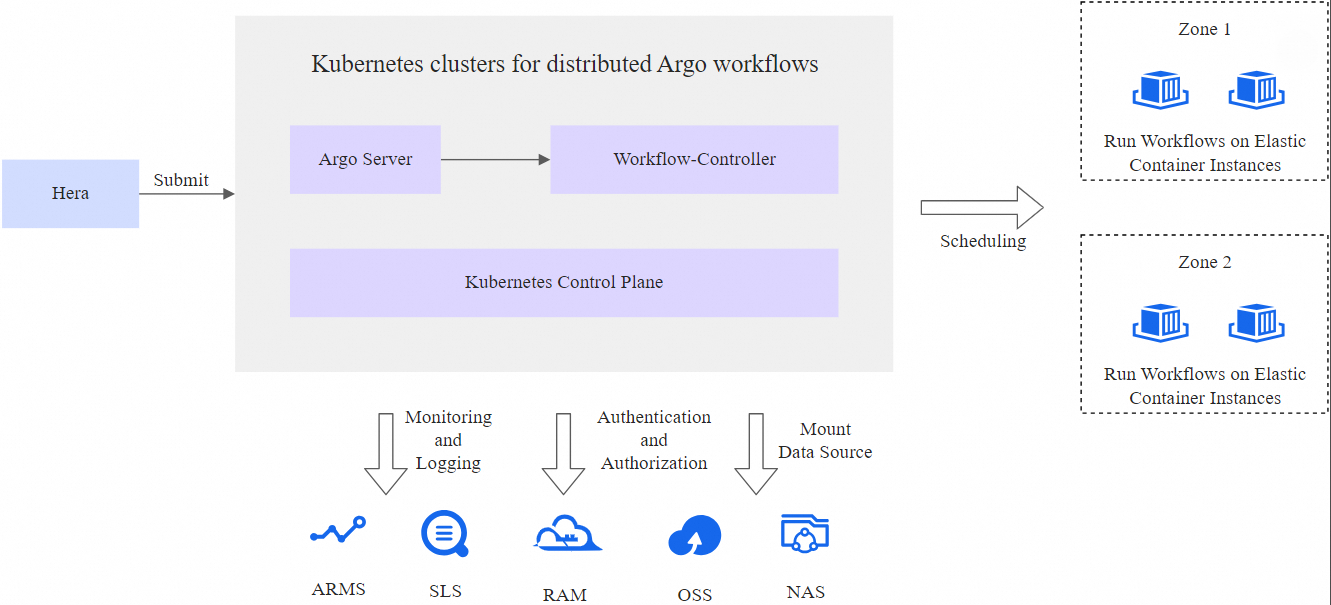

Workflow clusters of Distributed Cloud Container Platform for Kubernetes (ACK One) run in a serverless mode. Argo Workflows is a managed component of workflow clusters. The following figure shows the architecture of Argo Workflows in workflow clusters.

1. Create a Kubernetes cluster for distributed Argo workflows.

2. Use one of the following methods to enable Argo Server for the workflow cluster:

3. Run the following command to generate and obtain an access token of the cluster:

```shell
kubectl create token default -n default
```

1) Install Hera

Run the following command to install Hera:

```shell
pip install hera-workflows
```

2) Orchestrate and submit a workflow.

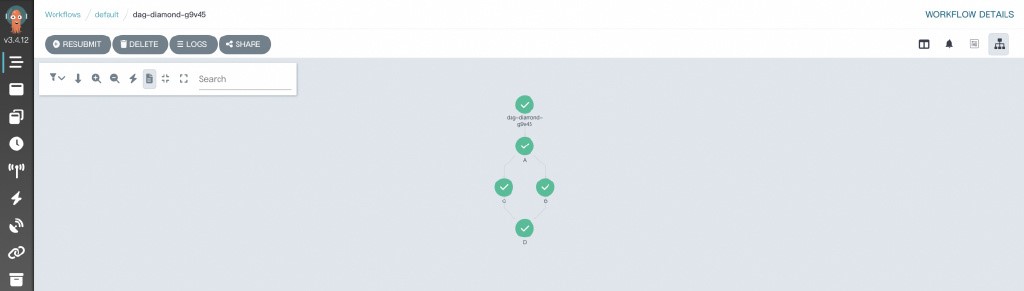
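The samples in this article hardcode the endpoint and token. As a minimal sketch of one alternative, the helper below builds the endpoint in the same format and reads the token from an environment variable. The function name `argo_settings`, the variable name `ARGO_TOKEN`, and the cluster and region values are illustrative assumptions, not part of Hera or ACK One.

```python
import os


def argo_settings(cluster_id: str, region_id: str, env=None) -> dict:
    """Build the Argo Server endpoint and read the token from the environment.

    ARGO_TOKEN is a hypothetical variable name chosen for this sketch.
    """
    env = os.environ if env is None else env
    token = env.get("ARGO_TOKEN", "")
    if not token:
        raise RuntimeError("Set ARGO_TOKEN to the token returned by kubectl create token.")
    # Same endpoint pattern as the samples in this article.
    host = f"https://argo.{cluster_id}.{region_id}.alicontainer.com:2746"
    return {"host": host, "token": token}


# Placeholder cluster ID, region ID, and token, passed in explicitly for the demo.
settings = argo_settings("c123", "cn-hangzhou", env={"ARGO_TOKEN": "example-token"})
print(settings["host"])
```

The returned values could then be assigned to `global_config.host` and `global_config.token`, which keeps the token out of source control.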

Argo Workflows uses directed acyclic graphs (DAGs) to define complex dependencies for tasks in a workflow. The Diamond structure is commonly adopted by workflows. In a Diamond workflow, the execution results of multiple parallel tasks are aggregated into the input of a subsequent task. The Diamond structure can efficiently aggregate data flows and execution results.
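To make the execution order of a Diamond structure concrete, the following sketch uses only the Python standard library (`graphlib`, Python 3.9 or later) to group four tasks into waves that can run in parallel. It is a simplified model of DAG scheduling for illustration, not how Argo Workflows is implemented, and the helper name `execution_waves` is an assumption.

```python
from graphlib import TopologicalSorter

# Map each task to the set of tasks it depends on (Diamond shape).
dependencies = {"A": set(), "B": {"A"}, "C": {"A"}, "D": {"B", "C"}}


def execution_waves(deps):
    """Return batches of tasks; tasks in the same batch have no mutual dependencies."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # Tasks whose dependencies are all done.
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = execution_waves(dependencies)
print(waves)  # [['A'], ['B', 'C'], ['D']]
```

The middle wave contains two tasks that can run at the same time, and the last task starts only after both of them finish.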

The following sample code shows how to use Hera to orchestrate a Diamond workflow in which Task A runs first, Task B and Task C then run in parallel, and Task D runs after both Task B and Task C are complete.

```python
# Import the required packages.
from hera.workflows import DAG, Workflow, script
from hera.shared import global_config
import urllib3

urllib3.disable_warnings()

# Specify the endpoint and token of the workflow cluster.
global_config.host = "https://argo.{{clusterid}}.{{region-id}}.alicontainer.com:2746"
global_config.token = "abcdefgxxxxxx"  # Enter the token you obtained.
global_config.verify_ssl = False  # Skip TLS certificate verification.


# The script decorator is the key to enabling Python-like function orchestration by using Hera.
# You can call the decorated function inside a Hera context such as a Workflow or Steps context.
# The function still runs as normal outside Hera contexts, so you can write unit tests for it.
# The following function takes a sample input.
@script()
def echo(message: str):
    print(message)


# Orchestrate a workflow. The Workflow is the main resource in Argo and a key class of Hera.
# The Workflow is responsible for storing templates, setting entry points, and running templates.
with Workflow(
    generate_name="dag-diamond-",
    entrypoint="diamond",
) as w:
    with DAG(name="diamond"):
        A = echo(name="A", arguments={"message": "A"})  # Create a task from the echo template.
        B = echo(name="B", arguments={"message": "B"})
        C = echo(name="C", arguments={"message": "C"})
        D = echo(name="D", arguments={"message": "D"})
        # Define dependencies. Task A is the dependency of Task B and Task C.
        # Task B and Task C are the dependencies of Task D.
        A >> [B, C] >> D

# Create the workflow.
w.create()
```

Submit the workflow:

```shell
python simpleDAG.py
```

After the workflow starts running, you can go to the Workflow Console (Argo) to view the DAG process and the result.

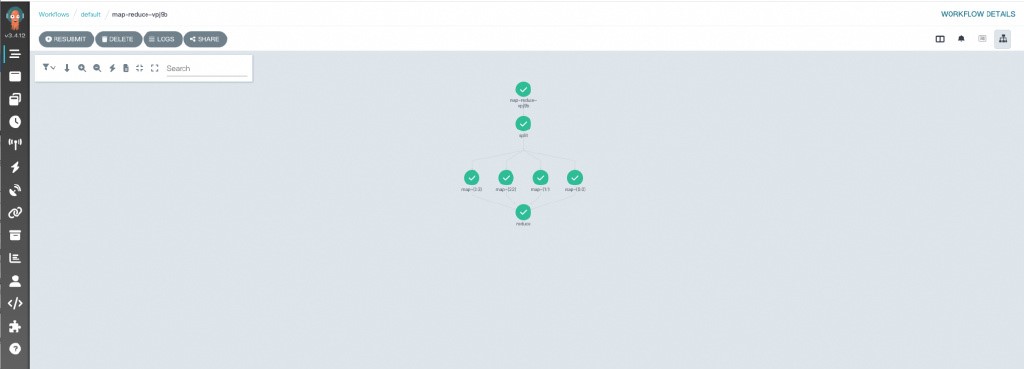
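The comments in the Diamond sample note that the function still runs as a normal Python function outside Hera contexts, which is what makes unit testing possible. The following is a minimal sketch of such a test. To stay runnable without Hera or a cluster, it uses a plain, undecorated copy of `echo`. The test function name is illustrative.

```python
import contextlib
import io


def echo(message: str):
    # Plain copy of the task body from the Diamond sample, without @script().
    print(message)


def test_echo_prints_message():
    # Capture standard output so the printed value can be checked.
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        echo("A")
    assert buffer.getvalue() == "A\n"


test_echo_prints_message()
```

A test runner such as pytest would discover `test_echo_prints_message` automatically. The direct call at the end only keeps the sketch self-contained.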

In Argo Workflows, the key to processing data in the MapReduce style is to use DAG templates to organize and coordinate multiple tasks in order to simulate the Map and Reduce phases.
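Before mapping the phases to templates, it can help to see them as plain Python. The sketch below mirrors the data used in the Hera sample in this section (a `foo` value per part, doubled into `bar`, then summed) but runs in a single process with in-memory values instead of files and artifacts. The function names `map_part` and `reduce_parts` are illustrative.

```python
def split(num_parts):
    # Split phase: produce one small record per part, numbered from 1.
    return [{"foo": i} for i in range(1, num_parts + 1)]


def map_part(part):
    # Map phase: transform one part independently of the others.
    return {"bar": part["foo"] * 2}


def reduce_parts(results):
    # Reduce phase: combine all mapped results into a single value.
    return {"total": sum(result["bar"] for result in results)}


parts = split(4)
results = [map_part(part) for part in parts]  # In Argo, these run as parallel tasks.
total = reduce_parts(results)
print(total)  # {'total': 20}
```

In the workflow version, each call to the map function becomes its own task, and the intermediate values travel between tasks as artifacts rather than as in-memory lists.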

The following sample code provides a detailed example on how to use Hera to orchestrate a sample MapReduce workflow. The workflow splits the input into several JSON part files, processes each part in parallel, and sums the results. Each step is defined in a Python function to integrate with the Python ecosystem.

```python
from hera.workflows import DAG, Artifact, NoneArchiveStrategy, Parameter, OSSArtifact, Workflow, script
from hera.shared import global_config
import urllib3

urllib3.disable_warnings()

# Specify the endpoint and token of the workflow cluster.
global_config.host = "https://argo.{{clusterid}}.{{region-id}}.alicontainer.com:2746"
global_config.token = "abcdefgxxxxxx"  # Enter the token you obtained.
global_config.verify_ssl = False  # Skip TLS certificate verification.


# Pass the script parameters, such as image, inputs, outputs, and resources, to the script decorator.
# split creates multiple files based on the num_parts input parameter.
# Each file contains the foo key and a part number as the value.
@script(
    image="python:alpine3.6",
    inputs=Parameter(name="num_parts"),
    outputs=OSSArtifact(name="parts", path="/mnt/out", archive=NoneArchiveStrategy(), key="{{workflow.name}}/parts"),
)
def split(num_parts: int) -> None:
    import json
    import os
    import sys

    os.mkdir("/mnt/out")

    part_ids = list(map(lambda x: str(x), range(num_parts)))
    for i, part_id in enumerate(part_ids, start=1):
        with open("/mnt/out/" + part_id + ".json", "w") as f:
            json.dump({"foo": i}, f)
    # Print the part IDs as a JSON list so that the next task can fan out over them.
    json.dump(part_ids, sys.stdout)


# Define the image, inputs, and outputs parameters in the script decorator.
# map_ reads one part file that contains foo and writes a new file that contains the bar key.
# The value of bar equals the corresponding part number multiplied by 2.
@script(
    image="python:alpine3.6",
    inputs=[
        Parameter(name="part_id", value="0"),
        Artifact(name="part", path="/mnt/in/part.json"),
    ],
    outputs=OSSArtifact(
        name="part",
        path="/mnt/out/part.json",
        archive=NoneArchiveStrategy(),
        key="{{workflow.name}}/results/{{inputs.parameters.part_id}}.json",
    ),
)
def map_() -> None:
    import json
    import os

    os.mkdir("/mnt/out")

    with open("/mnt/in/part.json") as f:
        part = json.load(f)
    with open("/mnt/out/part.json", "w") as f:
        json.dump({"bar": part["foo"] * 2}, f)


# Define the image, inputs, and outputs parameters in the script decorator.
# reduce sums the values of the bar key across all parts.
@script(
    image="python:alpine3.6",
    inputs=OSSArtifact(name="results", path="/mnt/in", key="{{workflow.name}}/results"),
    outputs=OSSArtifact(
        name="total", path="/mnt/out/total.json", archive=NoneArchiveStrategy(), key="{{workflow.name}}/total.json"
    ),
)
def reduce() -> None:
    import json
    import os

    os.mkdir("/mnt/out")

    total = 0
    for f in list(map(lambda x: open("/mnt/in/" + x), os.listdir("/mnt/in"))):
        result = json.load(f)
        total = total + result["bar"]
    with open("/mnt/out/total.json", "w") as f:
        json.dump({"total": total}, f)


# Orchestrate a workflow. Specify the workflow name, entry point, namespace, and global parameters.
with Workflow(
    generate_name="map-reduce-",
    entrypoint="main",
    namespace="default",
    arguments=Parameter(name="num_parts", value="4"),
) as w:
    with DAG(name="main"):
        # Orchestrate templates.
        s = split(arguments=Parameter(name="num_parts", value="{{workflow.parameters.num_parts}}"))
        # Specify input parameters and orchestrate templates.
        m = map_(
            with_param=s.result,
            arguments=[
                Parameter(name="part_id", value="{{item}}"),
                OSSArtifact(name="part", key="{{workflow.name}}/parts/{{item}}.json"),
            ],
        )
        # Define the dependencies of tasks.
        s >> m >> reduce()

# Create the workflow.
w.create()
```

Submit the workflow:

```shell
python map-reduce.py
```

After the workflow starts running, you can go to the Workflow Console (Argo) to view the DAG process and the result.
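The fan-out in the MapReduce sample relies on `with_param`: the split task prints a JSON list to standard output, and one map task is created per list item with `{{item}}` replaced by that item. The following sketch is a simplified, standard-library model of that substitution for illustration only. It is not Argo's implementation, and the helper name `expand_with_param` and the `part_key` argument name are assumptions.

```python
import json


def expand_with_param(stdout_json, argument_templates):
    """Render one argument dict per item by replacing the {{item}} placeholder."""
    items = json.loads(stdout_json)
    return [
        {name: value.replace("{{item}}", str(item)) for name, value in argument_templates.items()}
        for item in items
    ]


# What the split task prints to standard output when num_parts is 4.
split_stdout = json.dumps(["0", "1", "2", "3"])
templates = {
    "part_id": "{{item}}",
    "part_key": "{{workflow.name}}/parts/{{item}}.json",
}

expanded = expand_with_param(split_stdout, templates)
for arguments in expanded:
    print(arguments)
```

Other placeholders such as `{{workflow.name}}` are left untouched in this sketch. In a real run, Argo resolves them separately.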

| Feature | YAML | Hera Framework |
|---|---|---|
| Simplicity | Relatively high | High. This method is low-code. |
| Workflow orchestration complexity | High | Low |
| Integration with the Python ecosystem | Low | High. This method is integrated with rich Python libraries. |
| Testability | Low. This method is prone to syntax errors. | High. This method supports testing frameworks. |

Hera Framework gracefully integrates the Python ecosystem with Argo Workflows to reduce the complexity of workflow orchestration.

Compared with YAML, Hera Framework provides a simplified alternative to large-scale workflow orchestration. In addition, Hera Framework allows data engineers to use Python, which is familiar to them.

Hera Framework also enables seamless and efficient workflow orchestration and optimization for machine learning scenarios. This allows you to transform creative ideas into actual deployments through iterations, promoting efficient implementation and sustainable development of intelligent applications.

If you have any questions about ACK One, join the DingTalk group 35688562.
