By Ye Cao and Yang Che

This article explains how to use Fluid and Vineyard to optimize intermediate data management in Kubernetes and address development efficiency, cost, and performance challenges. Fluid offers dataset orchestration, allowing data scientists to build cloud-native workflows with Python. Vineyard uses memory mapping to enable zero-copy data sharing, which improves efficiency. Together, they reduce network overhead and improve end-to-end performance through data affinity scheduling. A real-world example illustrates the steps to install Fluid, configure data and task scheduling, and run a linear regression model with Vineyard, demonstrating effective data management in Kubernetes. In the future, the project will extend to AIGC model acceleration and serverless scenarios.

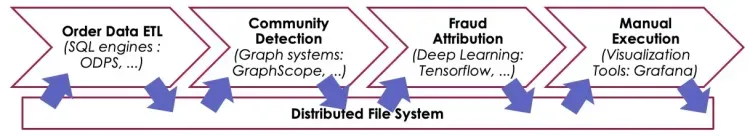

As Kubernetes gains popularity in AI and big data fields, and business scenarios become increasingly complex, data scientists are facing new challenges in R&D and operational efficiency. Modern applications often require seamless end-to-end pipelines. For instance, consider a risk control data workflow: First, order-related data is exported from the database. Then, a graph computing engine processes the raw data to build a "user-product" relationship graph. A graph algorithm identifies potential fraud groups hidden within. Next, a machine learning algorithm analyzes these groups to attribute fraud more accurately. Finally, the results undergo manual review, and final business decisions are made.

In such scenarios, we often face the following issues:

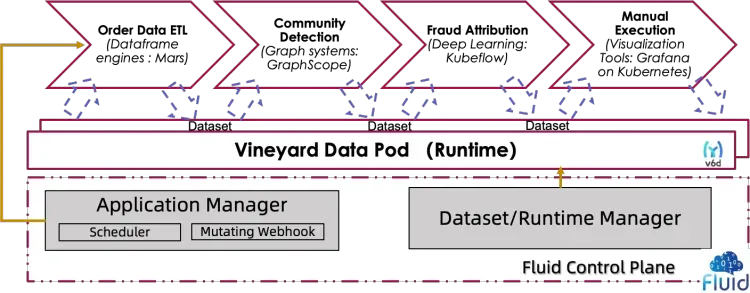

To resolve the preceding issues, we propose a solution that combines the data sharing capabilities of Vineyard with the data orchestration feature of Fluid:

• The Fluid SDK for Python makes it easy to orchestrate data flows, providing Python-savvy data scientists with a straightforward way to create and submit workflows focused on dataset operations. In addition, Fluid allows for data flow management with a single codebase in both the development and cloud production environments.

• Vineyard improves data sharing between tasks in end-to-end workflows. It enables zero-copy data sharing with memory mapping, eliminating additional I/O overhead.

• With the data affinity scheduling feature of Fluid, the pod scheduling strategy takes into account the nodes where data was written. This reduces the network overhead of migrating data and improves end-to-end performance.

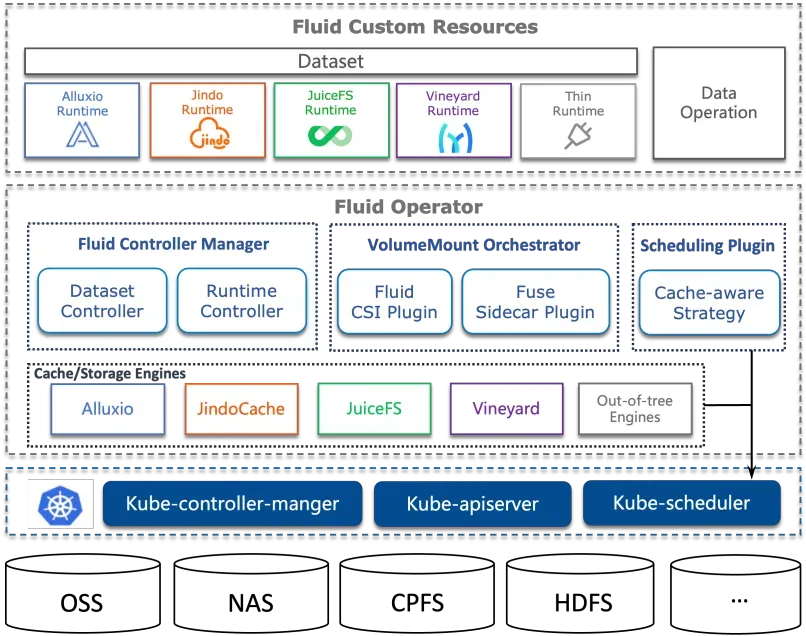

Fluid is an open source, Kubernetes-native engine for orchestrating and accelerating distributed datasets. It is designed for data-intensive applications in cloud-native environments. Fluid uses Kubernetes services to abstract the data layer. You can efficiently move, replicate, evict, transform, and manage data between storage sources (such as HDFS, OSS, and Ceph) and cloud-native applications on Kubernetes. These data operations are transparent to users. You do not need to worry about the efficiency of accessing remote data, the convenience of managing data sources, or how to help Kubernetes make O&M and scheduling decisions. You can directly access abstracted data through native Kubernetes volumes, and Fluid handles all remaining tasks and underlying details.

The architecture of the Fluid project is shown in the diagram below. It focuses on two key scenarios: dataset orchestration and application orchestration. For dataset orchestration, the specified datasets are cached in Kubernetes nodes with specific characteristics. For application orchestration, applications are scheduled to nodes that can store or have already stored the specified datasets. These scenarios can also be combined into a collaborative orchestration, considering both dataset and application requirements for node resource scheduling.
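To make collaborative orchestration concrete, here is a toy two-step scheduler: a hard filter on resources, followed by a soft score that favors nodes already caching the requested dataset. This is not Fluid's scheduler. The names `Node`, `pick_node`, and `affinity_weight` are hypothetical, and the default weight of 100 only mirrors the preferred-affinity weight used in the webhook configuration later in this tutorial.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu: float                                      # free CPU cores on the node
    cached_datasets: set = field(default_factory=set)    # datasets cached on this node


def pick_node(nodes, cpu_request, dataset, affinity_weight=100):
    """Return the feasible node with the highest score, or None (hypothetical sketch).

    Resources are a hard constraint; data affinity is a soft preference that adds
    `affinity_weight` to nodes that already cache `dataset`.
    """
    best, best_score = None, float("-inf")
    for node in nodes:
        if node.free_cpu < cpu_request:
            continue  # filter step: the node cannot fit the pod at all
        # score step: leftover capacity plus a bonus for data locality
        bonus = affinity_weight if dataset in node.cached_datasets else 0
        score = node.free_cpu + bonus
        if score > best_score:
            best, best_score = node, score
    return best
```

Suppose node-a has 8 free cores and no cache, node-b has 4 free cores and caches `vineyard`, and node-c has 1 free core and caches `vineyard`. A 2-core request for `vineyard` lands on node-b, while a request for an uncached dataset falls back to node-a. Making locality a bonus rather than a filter keeps pods schedulable when no cached node has room. For the same reason, a preferred rule cannot strictly guarantee co-location.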

Next, let's introduce the concept of a Dataset in Fluid. A dataset is a collection of logically related data used by computing engines, such as Spark for big data or TensorFlow for AI scenarios. Dataset management involves multiple aspects, such as security, version control, and data acceleration.

Fluid provides data acceleration to support dataset management. You can define a Runtime execution engine on a dataset to implement capabilities for data security, version control, and data acceleration. The Runtime engine defines a series of lifecycle interfaces that can be implemented to manage and accelerate datasets. Fluid aims to offer efficient and convenient data abstraction for cloud-native AI and big data applications. Data is abstracted from storage to achieve the following functions:

• Accelerate access to data by using data affinity scheduling and distributed cache engine acceleration to integrate data with computing.

• Manage data independently of storage and use Kubernetes namespaces to isolate resources, thus ensuring data security.

• Break down data silos caused by different storage sources by combining data from different storage sources in computing.
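One way to picture the Runtime abstraction is as an interface that every cache engine implements and that a controller drives, without knowing which engine sits behind it. The Python sketch below is only an analogy: Fluid's real runtimes are Go controllers. The method names `setup`, `load`, and `shutdown`, as well as `InMemoryRuntime` and `reconcile`, are hypothetical.

```python
from abc import ABC, abstractmethod


class Runtime(ABC):
    """Hypothetical lifecycle interface for a dataset runtime (illustration only)."""

    @abstractmethod
    def setup(self, dataset: str) -> bool:
        """Provision cache workers for the dataset; return True when ready."""

    @abstractmethod
    def load(self, dataset: str) -> None:
        """Warm the cache, for example by preloading data from the storage source."""

    @abstractmethod
    def shutdown(self, dataset: str) -> None:
        """Release cache resources when the dataset is deleted."""


class InMemoryRuntime(Runtime):
    """Toy runtime that only records lifecycle state."""

    def __init__(self):
        self.state = {}

    def setup(self, dataset):
        self.state[dataset] = "ready"
        return True

    def load(self, dataset):
        self.state[dataset] = "loaded"

    def shutdown(self, dataset):
        self.state.pop(dataset, None)


def reconcile(runtime: Runtime, dataset: str) -> None:
    # The controller calls the same interface regardless of the engine behind it.
    if runtime.setup(dataset):
        runtime.load(dataset)
```

Because the controller depends only on the interface, adding a new cache engine means implementing these hooks rather than changing the orchestration logic.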

Vineyard is a data management engine designed for cloud-native environments to efficiently share intermediate results between different tasks in big data analytics workflows. By using shared memory, Vineyard enables zero-copy sharing of intermediate data between different computing engines, avoiding the overhead of storing data in external storage (such as local disks, S3, and OSS). This improves data processing efficiency and speed.
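The zero-copy idea can be illustrated with Python's standard `multiprocessing.shared_memory` module. A producer writes data into a named shared segment once. A consumer attaches by name and reads through a view of the same mapped pages instead of copying them. This is only an analogy: Vineyard manages its own shared memory through vineyardd and adds object metadata on top. The helper names `share_floats` and `demo_zero_copy` are hypothetical.

```python
import struct
from multiprocessing import shared_memory


def share_floats(values):
    """Producer: write float64 values once into a new named shared-memory segment."""
    shm = shared_memory.SharedMemory(create=True, size=8 * len(values))
    struct.pack_into(f"{len(values)}d", shm.buf, 0, *values)
    return shm


def demo_zero_copy():
    writer = share_floats([1.0, 2.0, 3.0])
    # Consumer: attach by name (in practice, another process on the same node).
    reader = shared_memory.SharedMemory(name=writer.name)
    view = reader.buf[:24].cast("d")  # typed view over the mapped pages, no copy
    try:
        # The producer updates the first element in place...
        struct.pack_into("d", writer.buf, 0, 42.0)
        # ...and the consumer sees it at once, because both map the same memory.
        return list(view)
    finally:
        view.release()   # drop the view before closing the mapping
        reader.close()
        writer.close()
        writer.unlink()  # free the segment
```

`demo_zero_copy()` returns `[42.0, 2.0, 3.0]`. The consumer never received a copy of the data, yet it observes the producer's update.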

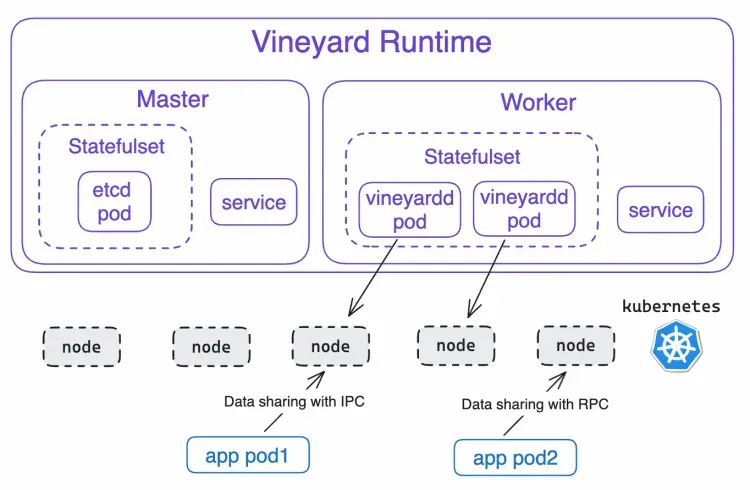

Vineyard Runtime abstracts the Vineyard-related components within Fluid. Its architecture is shown in the diagram below.

Vineyard Runtime has two core components: Master and Worker. Together, they manage the metadata and payload of each object in Vineyard:

• Master uses etcd as the metadata management system to store metadata.

• Worker uses vineyardd (the Vineyard daemon) to manage shared memory and store payload data.

For performance, when application tasks and vineyardd are on the same node, they use inter-process communication (IPC) for high-speed data transfer. When they are on different nodes, they transfer data through remote procedure calls (RPC), which are relatively slower.
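The split between metadata and payload, and the choice between IPC and RPC, can be modeled with a few dictionaries. In the hypothetical `ToyCluster` below, one shared dictionary stands in for etcd on the Master, and per-node dictionaries stand in for vineyardd on each Worker. A reader on the owning node receives a `memoryview` of the existing buffer, which plays the role of the IPC path. A reader elsewhere receives a copy, which plays the role of the RPC path. This sketches the idea only and is not Vineyard's API.

```python
import itertools


class ToyCluster:
    """Hypothetical model of Vineyard Runtime's Master/Worker split."""

    def __init__(self, nodes):
        self.metadata = {}                        # object_id -> {"node", "size"} (etcd stand-in)
        self.names = {}                           # object name -> object_id
        self.payloads = {n: {} for n in nodes}    # node -> {object_id: bytearray} (vineyardd stand-in)
        self._ids = itertools.count(1)

    def put(self, node, name, data):
        object_id = next(self._ids)
        self.payloads[node][object_id] = bytearray(data)  # payload stays on the writer's node
        self.metadata[object_id] = {"node": node, "size": len(data)}
        self.names[name] = object_id
        return object_id

    def get(self, client_node, name):
        object_id = self.names[name]                  # metadata lookup on the Master
        owner = self.metadata[object_id]["node"]
        payload = self.payloads[owner][object_id]
        if client_node == owner:
            return "ipc", memoryview(payload)         # same node: view the buffer, no copy
        return "rpc", bytes(payload)                  # other node: bytes are shipped, a copy
```

For example, after `put("node-a", "x_train", b"abc")`, a reader on node-a gets `("ipc", <memoryview>)` and a reader on node-b gets `("rpc", b"abc")`.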

• High performance: Vineyard avoids unnecessary memory copying, serialization and deserialization, file I/O, and network transmission. Within a single node, applications can read data almost instantly (around 0.1 seconds) and write data in just a few seconds. When data is transmitted across nodes, the speed can approach the maximum network bandwidth.

• Ease of use: Vineyard abstracts data using distributed objects. You can store and retrieve data with simple put and get operations. It supports SDKs for multiple languages, including C++, Python, Java, Rust, and Golang.

• Python ecosystem integration: Vineyard integrates with popular Python objects such as numpy.ndarray, pandas.DataFrame, pyarrow.{Array, RecordBatch, Table}, pytorch.Dataset, and pytorch.Module.

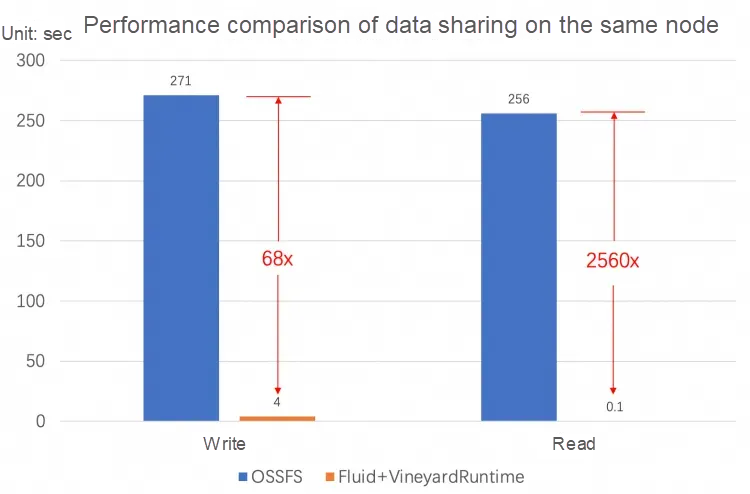

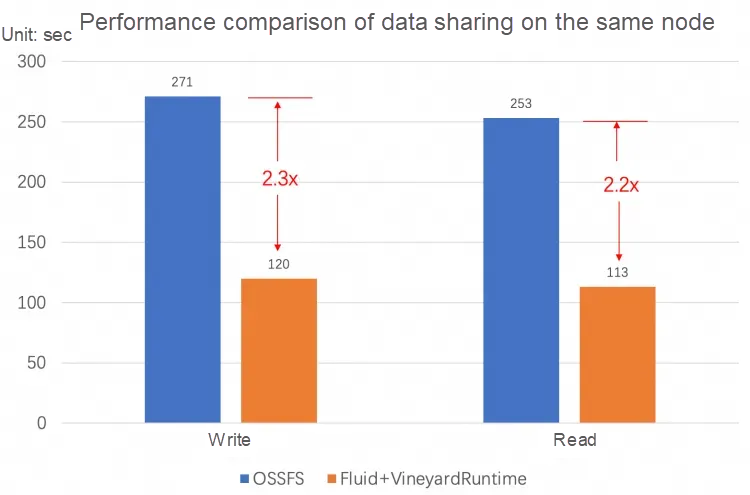

The diagram below shows the performance of Vineyard Runtime on the same node and across different nodes for a data size of 22 GB.

When a user task runs on the node where vineyardd is located, Vineyard achieves its best performance by using IPC for data transfer. Vineyard pre-allocates memory, making data writes about 68 times faster than using object storage as an intermediary. In addition, because the user task shares the same memory space with Vineyard, data is transmitted without being copied, allowing for instant data reads.

When a user task runs on a different node from vineyardd, data must be transferred over RPC, so Vineyard performance is at its lowest. Even so, Vineyard can fully utilize the available network bandwidth during RPC transfers. Writing data to Vineyard is about 2.3 times faster than writing to OSS, and reading data from Vineyard is about 2.2 times faster than reading from OSS.

In this tutorial, we show you how to train a linear regression model in Alibaba Cloud Container Service for Kubernetes (ACK) by using Vineyard Runtime. Follow these steps:

Step 1: Install Fluid. You can use either of the following options:

Option 1: Install ack-fluid. For the installation steps, see Deploy the cloud-native AI suite.

Option 2: Use the open source version. Use kubectl to create a namespace named fluid-system, and then use Helm to install Fluid. Run the following commands:

```shell
# Create the fluid-system namespace
$ kubectl create ns fluid-system

# Add the Fluid repository to Helm
$ helm repo add fluid https://fluid-cloudnative.github.io/charts

# Update the local index of the Fluid repository
$ helm repo update

# Find the development versions in the Fluid repository
$ helm search repo fluid --devel

# Deploy the appropriate version of the Fluid chart in ACK
$ helm install fluid fluid/fluid --devel
```

After the Fluid platform is deployed in ACK, run the following command to install the Fluid SDK for Python:

```shell
$ pip install git+https://github.com/fluid-cloudnative/fluid-client-python.git
```

Step 2: Enable FUSE affinity scheduling.

In a cloud environment, end-to-end data operations often consist of multiple subtasks. When Kubernetes schedules these subtasks, it considers only resource constraints and cannot ensure that two consecutive tasks run on the same node. If you use Vineyard to share intermediate results, migrating data between nodes incurs additional network overhead. For optimal performance, modify the following configmap to enable FUSE affinity scheduling, which makes the scheduler prefer to place tasks on the same nodes as Vineyard. Related tasks then access memory on the same node, which reduces network overhead.

```
# Modify the webhook-plugins configuration to enable FUSE affinity scheduling
$ kubectl edit configmap webhook-plugins -n fluid-system

data:
  pluginsProfile: |
    pluginConfig:
    - args: |
        preferred:
          # Enable FUSE affinity scheduling
          - name: fluid.io/fuse
            weight: 100
    ...

# Restart the fluid-webhook pod
$ kubectl delete pod -lcontrol-plane=fluid-webhook -n fluid-system
```

Step 3: Run the linear regression workflow.

This step includes data preprocessing, model training, and model testing. For a complete implementation, refer to the sample code. The main steps are as follows:

1. Create a Fluid client: Connect to the Fluid control plane using the default kubeconfig file and create a Fluid client instance.

```python
import fluid

# Connect to the Fluid control plane using the default kubeconfig file and create a Fluid client instance.
fluid_client = fluid.FluidClient(fluid.ClientConfig())
```

2. Create and configure the Vineyard dataset and runtime environment: Create a dataset named vineyard and initialize its configuration, including the number of replicas and memory size. Bind the dataset to the runtime environment.

```python
# The constants module of the Fluid SDK provides VINEYARD_RUNTIME_KIND
from fluid import constants

# Create a dataset named vineyard in the default namespace
fluid_client.create_dataset(dataset_name="vineyard")

# Get the vineyard dataset instance
dataset = fluid_client.get_dataset(dataset_name="vineyard")

# Initialize the configuration for the vineyard runtime and bind the dataset instance to this runtime.
# Set the number of replicas to 2 and memory size to 30 GiB.
dataset.bind_runtime(
    runtime_type=constants.VINEYARD_RUNTIME_KIND,
    replicas=2,
    cache_capacity_GiB=30,
    cache_medium="MEM",
    wait=True,
)
```

3. Define data preprocessing, model training, and model evaluation functions: Implement functions for cleaning and splitting data (preprocessing), training the linear regression model (training), and evaluating model performance (testing).

```python
# Define the data preprocessing function
def preprocess():
    ...  # Load, clean, and split the raw data into X_train, X_test, y_train, y_test

    # Store training and testing data into Vineyard
    import vineyard

    vineyard.put(X_train, name="x_train", persist=True)
    vineyard.put(X_test, name="x_test", persist=True)
    vineyard.put(y_train, name="y_train", persist=True)
    vineyard.put(y_test, name="y_test", persist=True)
    ...


# Define the model training function
def train():
    ...
    # Retrieve training data from Vineyard
    import vineyard

    x_train_data = vineyard.get(name="x_train", fetch=True)
    y_train_data = vineyard.get(name="y_train", fetch=True)
    ...  # Fit the linear regression model on the training data


# Define the model evaluation function
def test():
    ...
    # Retrieve testing data from Vineyard
    import vineyard

    x_test_data = vineyard.get(name="x_test", fetch=True)
    y_test_data = vineyard.get(name="y_test", fetch=True)
    ...  # Evaluate the trained model on the testing data
```

4. Create task templates and define workflows: Use the previously defined functions to create task templates for data preprocessing, model training, and model testing. Execute these tasks sequentially to form a complete workflow.

```python
# create_processor is a helper from the complete sample code (not shown here)
# that turns each function into a task template (processor).
preprocess_processor = create_processor(preprocess)
train_processor = create_processor(train)
test_processor = create_processor(test)

# Create the task workflow for the linear regression model: data preprocessing > model training > model testing
# The mount path /var/run/vineyard is the default path for Vineyard configuration files.
flow = dataset.process(processor=preprocess_processor, dataset_mountpath="/var/run/vineyard") \
    .process(processor=train_processor, dataset_mountpath="/var/run/vineyard") \
    .process(processor=test_processor, dataset_mountpath="/var/run/vineyard")
```

5. Submit and execute the workflow: Submit the workflow to the Fluid platform, execute the workflow, and wait for the tasks to complete.

```python
# Submit the task workflow for the linear regression model to the Fluid platform and execute the workflow
run = flow.run(run_id="linear-regression-with-vineyard")

# Wait for all tasks in the workflow to complete
run.wait()
```

6. Clean resources: After execution, clean up all resources created on the Fluid platform.

```python
# Clean up all resources
dataset.clean_up(wait=True)
```

With this Python code, data scientists can debug in a local kind (Kubernetes in Docker) cluster during development and use ACK as the production environment. This approach improves development efficiency while delivering strong runtime performance.
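Before moving to any cluster, the data hand-off between the three steps can also be exercised in plain Python. In the sketch below, everything is hypothetical: a dictionary-backed `put`/`get` stands in for `vineyard.put` and `vineyard.get`, and a closed-form one-variable least-squares fit stands in for the linear regression model. No Vineyard deployment or third-party package is needed. The sketch also makes the data flow explicit: training reads the training split, and evaluation reads the testing split.

```python
# Hypothetical in-process stand-in for Vineyard: objects are shared by name.
_store = {}


def put(obj, name):
    _store[name] = obj


def get(name):
    return _store[name]


def preprocess():
    # Toy data that follows y = 2x + 1 exactly; 4 points for training, 2 for testing.
    xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
    ys = [2 * x + 1 for x in xs]
    put(xs[:4], "x_train")
    put(ys[:4], "y_train")
    put(xs[4:], "x_test")
    put(ys[4:], "y_test")


def train():
    # Fit y = slope * x + intercept by ordinary least squares on the training split.
    x, y = get("x_train"), get("y_train")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var = sum((a - mx) ** 2 for a in x)
    slope = cov / var
    put((slope, my - slope * mx), "model")


def test():
    # Return the mean squared error of the model on the testing split.
    slope, intercept = get("model")
    x, y = get("x_test"), get("y_test")
    return sum((slope * a + intercept - b) ** 2 for a, b in zip(x, y)) / len(x)


preprocess()
train()
mse = test()
```

On this noise-free toy data, the fitted model is `(2.0, 1.0)` and the test error is `0.0`. Swapping in real data and a real model changes only the bodies of the three functions, not the put/get hand-off between them.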

By combining the data orchestration feature of Fluid with the efficient data sharing mechanisms of Vineyard, we can resolve issues in Kubernetes data workflows such as low development efficiency, high intermediate data storage costs, and poor runtime efficiency.

Looking ahead, we plan to support two key scenarios. The first is AIGC model acceleration, where we will improve model loading performance by eliminating FUSE overhead and storage object format conversion. The second is serverless support, where we will provide efficient native data management for serverless containers.
