By Shuangkun Tian

As technical requirements deepen in fields such as autonomous driving and scientific computing, and as the Kubernetes ecosystem matures, containerization has become the mainstream way to run batch tasks. The market offers two broad categories of solutions: closed platforms developed in-house by cloud service providers, represented by Batch Compute, and open, compatible platforms built on the open-source project Argo Workflows.

For enterprise R&D teams, choosing a batch task platform that fits their business needs is crucial, because the choice directly affects development efficiency, cost control, and future technical scalability. This article uses a typical data processing scenario to compare the core features and applicable scenarios of Batch Compute and Argo Workflows, to help technical decision-makers make a better-informed choice.

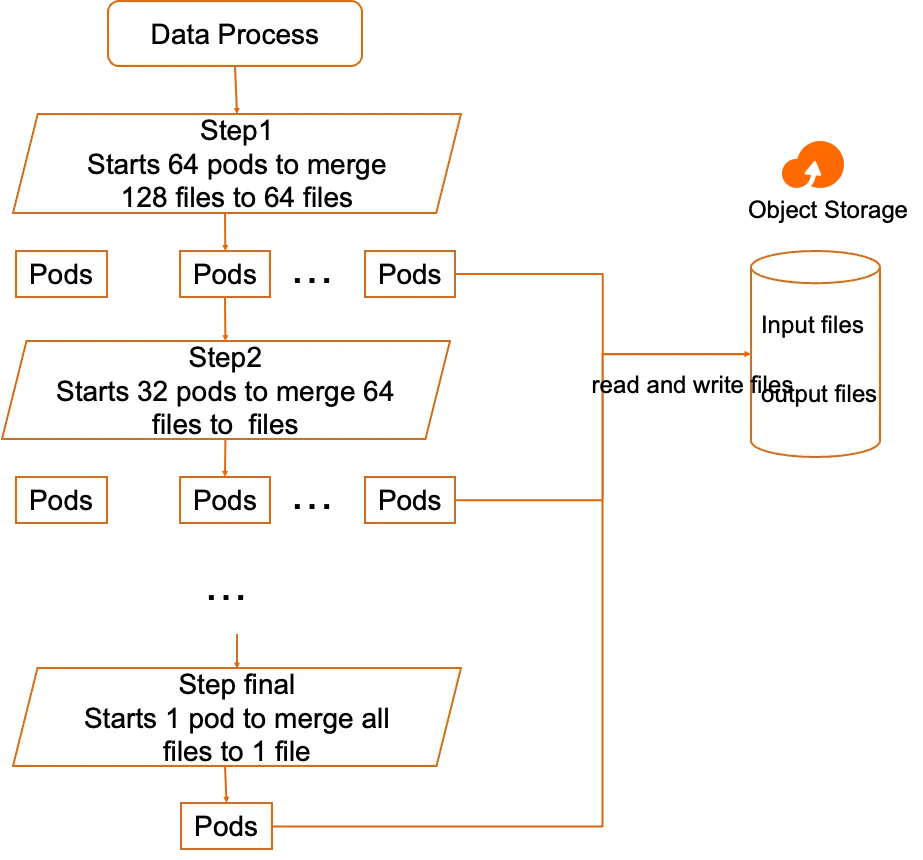

The following figure shows a typical data processing task. In the first step, 64 pods process the data, merging 128 files into 64 files. In the second step, 32 pods merge those 64 files into 32 files. In the last step, a single pod calculates the final result and writes it to OSS.

Architecture diagram:

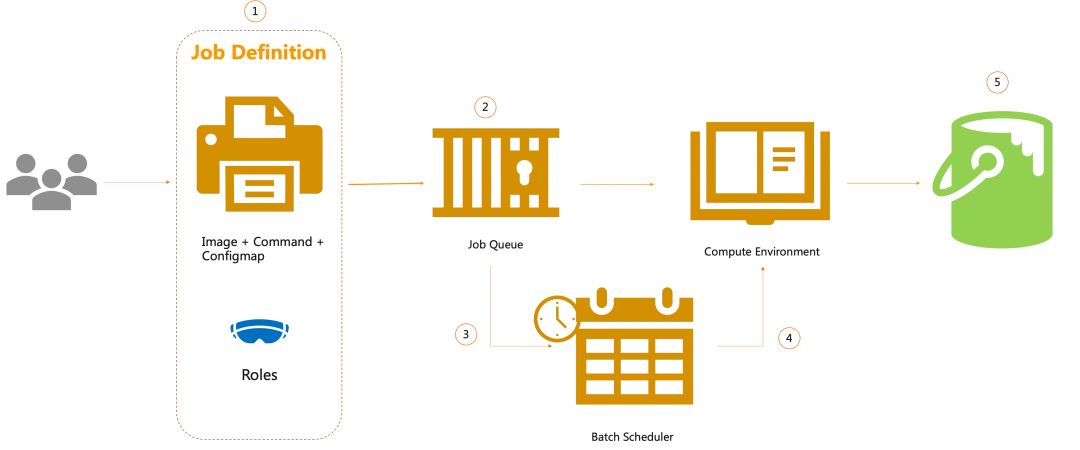
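The fan-in can be made concrete with a small sketch. It assumes, hypothetically, that pod `i` at each pairwise level merges files `2*i` and `2*i + 1` from the previous level's output; the article does not show how `process.py` actually assigns files, so `inputs_for` and `plan` are illustrative names only. The final step is not pairwise: one pod reads all 32 remaining files.

```python
def inputs_for(pod_index: int) -> tuple[int, int]:
    """Hypothetical assignment: pod i merges the two adjacent files 2i and 2i+1."""
    return (2 * pod_index, 2 * pod_index + 1)


def plan(num_files: int, pod_counts: list[int]) -> list[int]:
    """Return the number of files left after each pairwise level.

    Raises ValueError if a level's pod count does not halve the file count.
    """
    remaining = []
    for pods in pod_counts:
        if pods * 2 != num_files:
            raise ValueError(f"{pods} pods cannot pairwise-merge {num_files} files")
        # Check that every input file of this level is consumed exactly once.
        consumed = sorted(f for i in range(pods) for f in inputs_for(i))
        if consumed != list(range(num_files)):
            raise ValueError("file assignment does not cover every input exactly once")
        num_files = pods
        remaining.append(num_files)
    return remaining


print(plan(128, [64, 32]))  # [64, 32]
print(inputs_for(63))       # (126, 127)
```

Under this assumption, the last `merge-data` pod then combines the 32 files left after level 2.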

Batch Compute is a fully managed service that allows you to run batch workloads at any scale. The following procedure describes how Batch Compute runs each job.

The first step is to create the job definitions, process-data and merge-data, by preparing the image, startup parameters, and required resources for each. Batch Compute usually provides a user-friendly console for this; to keep the example scriptable and avoid a long series of console screenshots, we define the jobs directly in JSON.
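Because the two definitions below differ only in the script they run and in the public IP setting, a driver script could generate them instead of maintaining two JSON files by hand. This is a sketch for illustration: `job_definition` is a hypothetical helper, the field names are copied from the JSON in this article, the role value is the same placeholder, and the empty `repositoryCredentials` field is omitted.

```python
import json


def job_definition(name: str, script: str, public_ip: bool = False,
                   vcpu: float = 1.0, memory_mib: int = 2048) -> dict:
    """Hypothetical helper returning a job definition shaped like the article's JSON."""
    return {
        "type": "container",
        "containerProperties": {
            "command": ["python", script],        # startup command
            "image": "python:3.11-amd",           # image used in the article
            "resourceRequirements": [             # values are strings in the JSON
                {"type": "VCPU", "value": str(vcpu)},
                {"type": "MEMORY", "value": str(memory_mib)},
            ],
            "runtimePlatform": {"cpuArchitecture": "X86_64", "operatingSystemFamily": "LINUX"},
            "networkConfiguration": {"assignPublicIp": "ENABLED" if public_ip else "DISABLED"},
            "executionRoleArn": "role::xxxx",     # placeholder, as in the article
        },
        "platformCapabilities": ["Serverless Container"],
        "jobDefinitionName": name,
    }


process_data = job_definition("process-data", "process.py")
merge_data = job_definition("merge-data", "merge.py", public_ip=True)
print(json.dumps(process_data, indent=2))
```

Generating the JSON this way also guarantees it is valid JSON, since the annotated listings in this article contain comments that a real API would reject.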

a. process-data

```jsonc
{
  "type": "container",
  "containerProperties": {
    // Execution command
    "command": ["python", "process.py"],
    "image": "python:3.11-amd",             // Image address
    "resourceRequirements": [               // Resource requirements
      { "type": "VCPU", "value": "1.0" },
      { "type": "MEMORY", "value": "2048" }
    ],
    "runtimePlatform": {
      "cpuArchitecture": "X86_64",          // CPU architecture
      "operatingSystemFamily": "LINUX"
    },
    "networkConfiguration": {
      "assignPublicIp": "DISABLED"
    },
    "executionRoleArn": "role::xxxxxxx"     // Permission
  },
  "platformCapabilities": [                 // Backend resources: server or serverless container
    "Serverless Container"
  ],
  "jobDefinitionName": "process-data"       // Job definition name
}
```

b. merge-data

```jsonc
{
  "type": "container",
  "containerProperties": {
    // Execution command: merge.py
    "command": ["python", "merge.py"],
    "image": "python:3.11-amd",             // Image
    "resourceRequirements": [               // Resource requirements
      { "type": "VCPU", "value": "1.0" },
      { "type": "MEMORY", "value": "2048" }
    ],
    "runtimePlatform": {
      "cpuArchitecture": "X86_64",
      "operatingSystemFamily": "LINUX"
    },
    "networkConfiguration": {
      "assignPublicIp": "ENABLED"
    },
    "executionRoleArn": "role::xxxx",       // Permission
    "repositoryCredentials": {}
  },
  "platformCapabilities": [                 // Backend resources: server or serverless container
    "Serverless Container"
  ],
  "jobDefinitionName": "merge-data"         // Job definition name
}
```

The second step is to submit the jobs in order. A job that depends on another references that job's ID in its `dependsOn` field.

a. Define and submit process-data-l1 Job

Job definition:

```jsonc
{
  "jobName": "process-data-l1",
  "jobDefinition": "arn::xxxx:job-definition/process-data:1",  // Definition used by the job
  "jobQueue": "arn::xxxx:job-queue/process-data-queue",        // Queue used by the job
  "dependsOn": [],
  "arrayProperties": {                                          // Number of tasks to start
    "size": 64
  },
  "retryStrategy": {},
  "timeout": {},
  "parameters": {},
  "containerOverrides": {
    "resourceRequirements": [],
    "environment": []
  }
}
```

Submit the job to get its job ID:

```
# batch submit process-data-l1 | get job-id
job-id: b617f1a3-6eeb-4118-8142-1f855053b347
```

b. Submit process-data-l2 Job

This job depends on process-data-l1 Job.

```jsonc
{
  "jobName": "process-data-l2",
  "jobDefinition": "arn::xxxx:job-definition/process-data:2",  // Definition used by the job
  "jobQueue": "arn::xxxx:job-queue/process-data-queue",        // Queue used by the job
  "dependsOn": [
    {
      "jobId": "b617f1a3-6eeb-4118-8142-1f855053b347"           // Job ID of process-data-l1
    }
  ],
  "arrayProperties": {                                          // Number of tasks to start
    "size": 32
  },
  "retryStrategy": {},
  "timeout": {},
  "parameters": {},
  "containerOverrides": {
    "resourceRequirements": [],
    "environment": []
  }
}
```

Submit the job to get its job ID:

```
# batch submit process-data-l2 | get job-id
job-id: 6df68b3e-4962-4e4f-a71a-189be25b189c
```

c. Submit merge-data Job

This job depends on process-data-l2 Job.

```jsonc
{
  "jobName": "merge-data",
  "jobDefinition": "arn::xxxx:job-definition/merge-data:1",    // Definition used by the job
  "jobQueue": "arn::xxxx:job-queue/process-data-queue",        // Queue used by the job
  "dependsOn": [
    {
      "jobId": "6df68b3e-4962-4e4f-a71a-189be25b189c"           // Job ID of process-data-l2
    }
  ],
  "arrayProperties": {},
  "retryStrategy": {},
  "timeout": {},
  "parameters": {},
  "containerOverrides": {
    "resourceRequirements": [],
    "environment": []
  }
}
```

Submit the job:

```
batch submit merge-data
```

All tasks run normally in order.
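Submitting the jobs one by one like this is the script-level orchestration that Batch Compute requires: each job ID returned by one submission must be copied into the `dependsOn` field of the next request. A minimal sketch of such a driver follows. `submit_job` is a hypothetical stand-in that only fabricates an ID locally; in practice it would call the batch service's submit API or CLI, which the article abbreviates as `batch submit`.

```python
import uuid
from typing import Optional


def submit_job(request: dict) -> str:
    """Hypothetical stand-in for the real submit call: returns a fake job ID."""
    return str(uuid.uuid4())


def submit_chain(stages: list[tuple[str, str, Optional[int]]]) -> list[dict]:
    """Submit stages in order; each stage depends on the job ID of the previous one."""
    submitted = []
    previous_id = None
    for job_name, definition, array_size in stages:
        request = {
            "jobName": job_name,
            "jobDefinition": definition,
            "jobQueue": "arn::xxxx:job-queue/process-data-queue",
            "dependsOn": [{"jobId": previous_id}] if previous_id else [],
            "arrayProperties": {"size": array_size} if array_size else {},
        }
        previous_id = submit_job(request)
        submitted.append({**request, "jobId": previous_id})
    return submitted


jobs = submit_chain([
    ("process-data-l1", "arn::xxxx:job-definition/process-data:1", 64),
    ("process-data-l2", "arn::xxxx:job-definition/process-data:2", 32),
    ("merge-data", "arn::xxxx:job-definition/merge-data:1", None),
])
for job in jobs:
    print(job["jobName"], job["dependsOn"])
```

The dependency chain lives in the driver script rather than in one declarative file, so changing the pipeline means changing this script.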

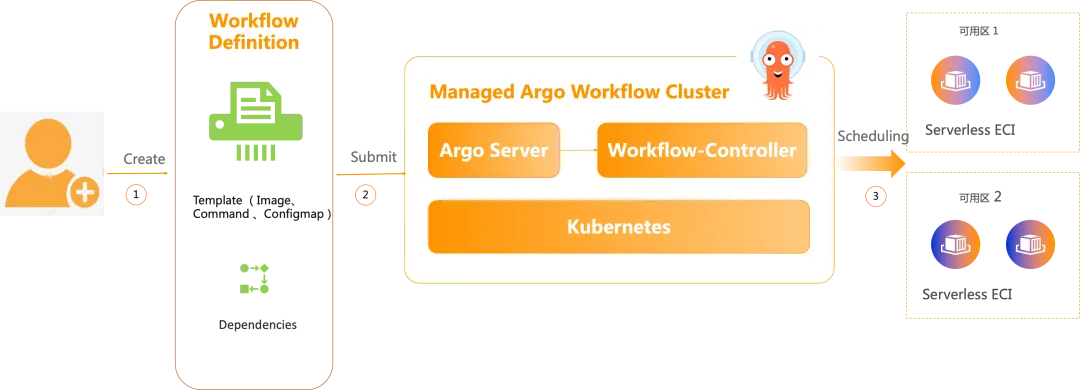

Serverless Argo Workflows is a fully managed Alibaba Cloud service built on the open-source Argo Workflows project and fully compliant with open-source workflow standards. It lets you run batch workloads of any scale on Kubernetes. It runs in serverless mode, using Alibaba Cloud Elastic Container Instance (ECI) to execute workflows. By tuning Kubernetes cluster parameters, it schedules large-scale workflows efficiently and elastically, and it can use preemptible ECI instances to reduce costs. The following procedure describes how Serverless Argo runs each job:

1) Define the jobs, specifying how each one runs (CPU, memory, image, and execution commands), and define their dependencies, such as serial execution, parallel loops, and retries.

2) Submit the workflow to the Serverless Argo cluster.

3) Serverless Argo evaluates the resources of each job and schedules elastic instances to run the job.
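Because elastic instances are created per pod, the capacity a run needs changes from step to step. As a rough worked example, assume each pod requests 1 vCPU and 2048 MiB, the values used in the Batch Compute job definitions earlier; this is an assumption, since the Argo templates in this article set no resource requests.

```python
# Assumption: every pod requests 1 vCPU and 2048 MiB of memory, the values used in
# the Batch Compute job definitions; the Argo templates here set no requests.
VCPU_PER_POD = 1.0
MEMORY_MIB_PER_POD = 2048

# Pods launched by each step of the example pipeline.
STEPS = {"process-data-l1": 64, "process-data-l2": 32, "merge-data": 1}


def step_capacity(pods: int) -> tuple[float, int]:
    """Return (vCPU, GiB of memory) needed to run `pods` pods at the same time."""
    return pods * VCPU_PER_POD, pods * MEMORY_MIB_PER_POD // 1024


for name, pods in STEPS.items():
    vcpu, gib = step_capacity(pods)
    print(f"{name}: {pods} pods -> {vcpu:g} vCPU, {gib} GiB")
# process-data-l1: 64 pods -> 64 vCPU, 128 GiB
# process-data-l2: 32 pods -> 32 vCPU, 64 GiB
# merge-data: 1 pods -> 1 vCPU, 2 GiB

peak_vcpu, peak_gib = step_capacity(max(STEPS.values()))
```

Reserved servers would have to be sized for the 64-pod peak and would sit partly idle during the later steps, whereas per-pod elastic instances follow the demand of each step.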

When building a workflow, define the job and its dependencies in the same file.

In the first step, define the process-data and merge-data task templates, and describe the image and startup parameters of each task.

In the second step, define the Step/DAG template to describe the parallel and serial execution relationships of tasks.

In the third step, integrate the template dependencies, storage, and input parameters into a workflow.
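The execution semantics of the `steps` template used in the YAML example can be sketched in plain Python: the outer list of step groups runs in order, and each `withSequence` item fans out into one parallel pod. This is a simplified model for illustration, not Argo's controller logic; `expand` and `Group` are hypothetical names, and only the `count` form of `withSequence` is modeled, which generates the items `0` to `count - 1`.

```python
from typing import Callable, Optional

# Hypothetical model of a steps template: each entry is one sequential step group.
# The callable mirrors withSequence.count; None means the step runs a single pod.
Group = tuple[str, Optional[Callable[[dict], int]]]


def expand(groups: list[Group], parameters: dict) -> list[list[str]]:
    """Return, for each sequential group, the pods it launches in parallel."""
    plan = []
    for name, count in groups:
        if count is None:
            plan.append([name])
        else:
            # withSequence with only `count` set yields the items 0 .. count-1.
            plan.append([f"{name}(item={i})" for i in range(count(parameters))])
    return plan


groups: list[Group] = [
    ("process-data-l1", lambda p: int(p["numbers"])),       # count: "{{workflow.parameters.numbers}}"
    ("process-data-l2", lambda p: int(p["numbers"]) // 2),  # count: "{{=asInt(workflow.parameters.numbers)/2}}"
    ("merge-data", None),
]

for index, pods in enumerate(expand(groups, {"numbers": "64"})):
    print(f"group {index}: {len(pods)} parallel pod(s), first is {pods[0]}")
```

Deriving the second count from the single `numbers` parameter means one argument rescales both fan-out levels, instead of hard-coding 32.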

You can build workflows with either YAML or the Python SDK; both approaches are shown below.

a. Use YAML to build workflows

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: process-data-  # Data processing workflow
spec:
  entrypoint: main
  volumes:  # OSS mounting
    - name: workdir
      persistentVolumeClaim:
        claimName: pvc-oss
  arguments:
    parameters:
      - name: numbers
        value: "64"
  templates:
    - name: main
      steps:
        - - name: process-data-l1  # First-level processing: 64 pods are started and 128 files are merged.
            template: process-data
            arguments:
              parameters:
                - name: file_number
                  value: "{{item}}"
                - name: level
                  value: "1"
            withSequence:
              count: "{{workflow.parameters.numbers}}"
        - - name: process-data-l2  # Second-level processing: after the previous step completes, 32 pods are started and 64 files are merged.
            template: process-data
            arguments:
              parameters:
                - name: file_number
                  value: "{{item}}"
                - name: level
                  value: "2"
            withSequence:
              count: "{{=asInt(workflow.parameters.numbers)/2}}"
        - - name: merge-data  # Last-level processing: after the previous step completes, one pod is started and 32 files are merged.
            template: merge-data
            arguments:
              parameters:
                - name: number
                  value: "{{=asInt(workflow.parameters.numbers)/2}}"
    - name: process-data  # process-data task definition
      inputs:
        parameters:
          - name: file_number
          - name: level
      container:
        image: argo-workflows-registry.cn-hangzhou.cr.aliyuncs.com/argo-workflows-demo/python:3.11-amd
        imagePullPolicy: Always
        command: [python3]
        args: ["process.py", "{{inputs.parameters.file_number}}", "{{inputs.parameters.level}}"]  # Receive input parameters and start pods for processing.
        volumeMounts:
          - name: workdir
            mountPath: /mnt/vol
    - name: merge-data  # merge-data task definition
      inputs:
        parameters:
          - name: number
      container:
        image: argo-workflows-registry.cn-hangzhou.cr.aliyuncs.com/argo-workflows-demo/python:3.11-amd
        imagePullPolicy: Always
        command: [python3]
        args: ["merge.py", "0", "{{inputs.parameters.number}}"]  # Receive input parameters and process files.
        volumeMounts:
          - name: workdir
            mountPath: /mnt/vol
```

Submit the workflow:

```shell
argo submit process-data.yaml
```

b. Use SDK for Python to build workflows

```python
import urllib3
from hera.shared import global_config
from hera.workflows import Container, Parameter, Steps, Workflow
from hera.workflows import models as m

urllib3.disable_warnings()

# Configure the Argo Server access address and token
global_config.host = "https://argo.{{clusterid}}.{{region-id}}.alicontainer.com:2746"
global_config.token = "abcdefgxxxxxx"  # Enter the token of the cluster
global_config.verify_ssl = False

with Workflow(
    generate_name="process-data-",  # Data processing workflow
    entrypoint="main",
    volumes=[  # OSS mounting
        m.Volume(
            name="workdir",
            persistent_volume_claim=m.PersistentVolumeClaimVolumeSource(claim_name="pvc-oss"),
        )
    ],
    arguments=[Parameter(name="numbers", value="64")],
) as w:
    process_data = Container(  # process-data task definition
        name="process-data",
        inputs=[Parameter(name="file_number"), Parameter(name="level")],
        image="argo-workflows-registry.cn-hangzhou.cr.aliyuncs.com/argo-workflows-demo/python:3.11-amd",
        command=["python3"],
        args=["process.py", "{{inputs.parameters.file_number}}", "{{inputs.parameters.level}}"],
        volume_mounts=[m.VolumeMount(name="workdir", mount_path="/mnt/vol")],
    )

    merge_data = Container(  # merge-data task definition
        name="merge-data",
        inputs=[Parameter(name="number")],
        image="argo-workflows-registry.cn-hangzhou.cr.aliyuncs.com/argo-workflows-demo/python:3.11-amd",
        command=["python3"],
        args=["merge.py", "0", "{{inputs.parameters.number}}"],
        volume_mounts=[m.VolumeMount(name="workdir", mount_path="/mnt/vol")],
    )

    with Steps(name="main"):
        # First-level processing: 64 pods are started and 128 files are merged.
        process_data(
            name="process-data-l1",
            arguments=[Parameter(name="file_number", value="{{item}}"), Parameter(name="level", value="1")],
            with_sequence=m.Sequence(count="{{workflow.parameters.numbers}}"),
        )
        # Second-level processing: after the previous step completes, 32 pods are started and 64 files are merged.
        process_data(
            name="process-data-l2",
            arguments=[Parameter(name="file_number", value="{{item}}"), Parameter(name="level", value="2")],
            with_sequence=m.Sequence(count="{{=asInt(workflow.parameters.numbers)/2}}"),
        )
        # Last-level processing: after the previous step completes, one pod is started and 32 files are merged.
        merge_data(
            name="merge-data",
            arguments=[Parameter(name="number", value="{{=asInt(workflow.parameters.numbers)/2}}")],
        )

# Create the workflow on the Argo Server
w.create()
```

Run the script to submit the workflow:

```shell
python process.py
```

After you submit the workflow built with YAML or the Python SDK, you can view its running status in the Argo Server console.

The workflow is executed in order.
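Besides the console, the Argo Server exposes the same information over its REST API. The sketch below assumes the standard Argo Server endpoint `GET /api/v1/workflows/{namespace}/{name}` and a bearer token like the one configured for the Python SDK; the host, namespace, and workflow name are placeholders, and unlike the SDK example it does not disable certificate verification. `summarize` is a hypothetical helper that counts pod nodes by phase using the `status.nodes` field of the returned workflow object.

```python
import json
import urllib.request
from collections import Counter


def fetch_workflow(host: str, namespace: str, name: str, token: str) -> dict:
    """Fetch one workflow object from the Argo Server REST API."""
    request = urllib.request.Request(
        f"{host}/api/v1/workflows/{namespace}/{name}",
        headers={"Authorization": f"Bearer {token}"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def summarize(workflow: dict) -> dict:
    """Return the workflow phase and a count of pod nodes per phase."""
    status = workflow.get("status", {})
    pods = Counter(
        node.get("phase", "Unknown")
        for node in status.get("nodes", {}).values()
        if node.get("type") == "Pod"
    )
    return {"phase": status.get("phase"), "pods": dict(pods)}


# Offline example with a hand-written status fragment (not real server output).
sample = {"status": {"phase": "Running", "nodes": {
    "a": {"type": "Steps", "phase": "Running"},
    "b": {"type": "Pod", "phase": "Succeeded"},
    "c": {"type": "Pod", "phase": "Running"},
}}}
print(summarize(sample))  # {'phase': 'Running', 'pods': {'Succeeded': 1, 'Running': 1}}
```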

As the example shows, both Serverless Argo Workflows and Batch Compute provide comprehensive support for container batch processing. Although their core goals are similar, they differ in task definition, usage scenarios, flexibility, and resource management. The following table summarizes the differences.

| | Batch Compute | Serverless Argo Workflows |
|---|---|---|
| Job definition | Defined in the console or in JSON, with multiple steps and interactions. | Templates defined in YAML, which is general and concise; definitions in Python are also supported. |
| Dependency definition | Dependencies are specified by job ID, so jobs must be submitted in sequence and orchestrated with a script. | Tasks and their dependencies are defined in one workflow YAML file and submitted once. |
| Scale and concurrency | High: tens of thousands of tasks and thousands of concurrent tasks | High: tens of thousands of tasks and thousands of concurrent tasks |
| Advanced orchestration | Relies on the cloud vendor's flow orchestration services | Natively supports complex orchestration logic; can also be combined with cloud vendors' flow orchestration services through the Kubernetes API. |
| Portability | Weak; limited to a specific cloud vendor's ecosystem | Strong; compatible with the open-source ecosystem and supports running across clouds |
| Supported resource types | Mostly servers; resources usually need to be reserved. | Serverless containers; resources are used on demand and scale automatically. |
| Console | Vendor console, easy to use | Argo Server console, consistent with open source |
| Ecosystem integration | Compatible with the cloud vendor ecosystem | Compatible with the open-source Kubernetes ecosystem, with good observability integration |

The choice between Argo Workflows and Batch Compute depends mainly on your technology stack, your reliance on a cloud vendor, the complexity of your workflows, and how much control you need. If your team is familiar with Kubernetes and needs highly customized workflows, Argo Workflows may be the better choice. If you work within a single cloud vendor's ecosystem and want an easy-to-use solution tightly integrated with that vendor's other services, Batch Compute may be more suitable.
