A replay of this Apsara Conference 2024 talk is available online.

At KubeCon North America 2018 in Seattle, the Alibaba Cloud Container Service team shared the industry's first open-source GPU container sharing and scheduling solution with the Kubernetes community. Since then, container services have become increasingly intertwined with fast-moving technologies such as GPU cluster scheduling, deep learning, AI training and inference, and large models.

In 2021, the Container Service team began promoting the concept and reference architecture of cloud-native AI in the CNCF community. The team also contributed to the Kubeflow cloud-native machine learning community, extended the Kubernetes scheduler framework to support batch job scheduling, created the Kube-queue job queue, and drove open-source projects such as Fluid for dataset orchestration and acceleration.

In 2023, before the CNCF community published its Cloud Native AI white paper, the cloud-native AI suite of Alibaba Cloud Container Service for Kubernetes (ACK) became commercially available. At that year's Apsara Conference container session, we shared the key technologies of container products in the field of cloud-native AI. By supporting PAI Lingjun products and customers' AIGC and LLM applications, Container Service moved into the fields of AI intelligent computing and large models.

At Apsara Conference 2024, we summarized our practical experience, findings, and thinking from building the foundation for AI intelligent computing, and presented them in a talk titled Innovation and Practice of ACK in AI Intelligent Computing Scenarios. We hope to share this work with customers and the community, and we look forward to more exchange and co-construction in the field of cloud-native AI.

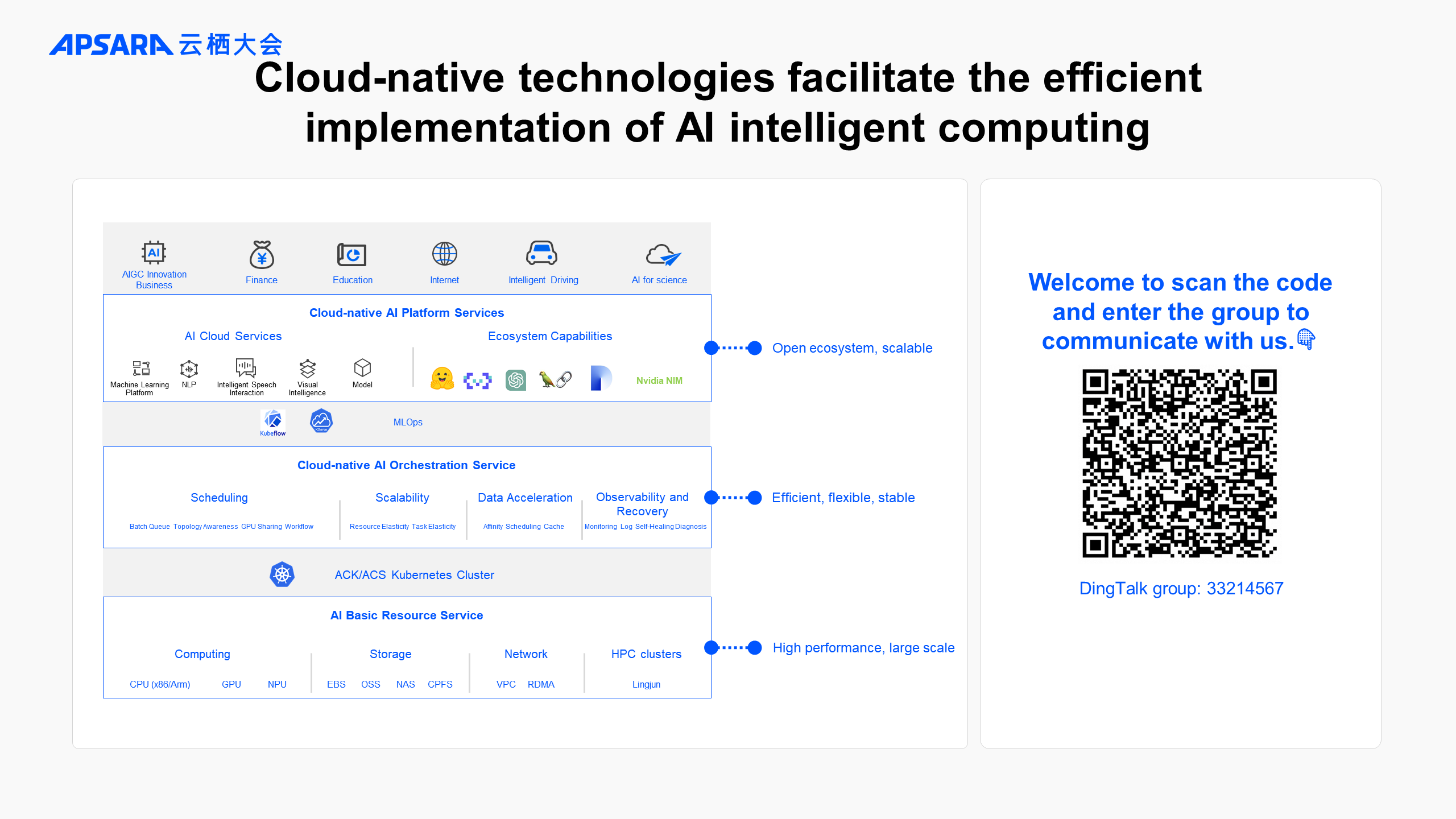

ACK focuses on the requirements of intelligent computing and large model scenarios and optimizes each layer of the cloud-native AI architecture to enhance the stability, elasticity, efficiency, and openness of Kubernetes for AI workloads. The main work includes:

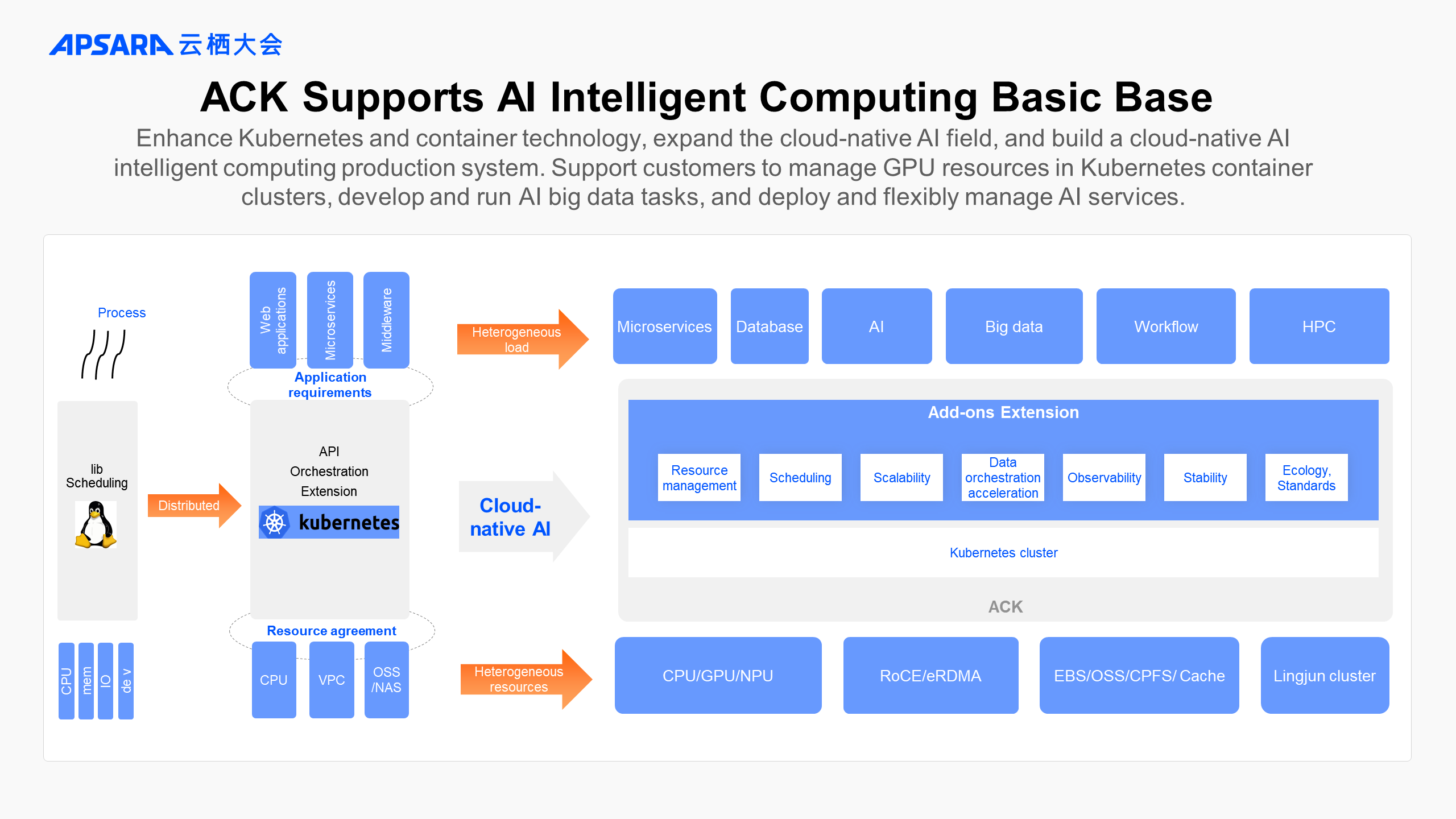

After 10 years of development, Kubernetes has become the foundational technology for managing business applications and microservices, and it is often described as the distributed operating system of the cloud era.

In the AI era, Kubernetes continues to evolve rapidly, giving rise to the new domain of cloud-native AI. Users can manage and schedule high-performance heterogeneous resources such as GPUs, NPUs, and RDMA devices in Kubernetes clusters, develop and run AI and big data tasks in containers, and deploy AI inference services.
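As a minimal illustration of requesting such resources, the sketch below builds a Pod manifest in Python that asks for whole GPUs. It assumes the NVIDIA device plugin, which exposes GPUs as the extended resource `nvidia.com/gpu`; the pod name and image are hypothetical, and ACK's shared-GPU scheduling uses its own resource names that are not shown here.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Minimal Pod manifest that requests whole GPUs via the NVIDIA device plugin."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "worker",
                    "image": image,
                    # Extended resources go in limits; requests then default to the same value.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Hypothetical pod name and image.
manifest = gpu_pod_manifest("train-demo", "registry.example.com/train:latest", 2)
print(json.dumps(manifest, indent=2))
```

The printed JSON can be applied with `kubectl apply -f`, which accepts JSON as well as YAML.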

By providing managed Kubernetes clusters and extending them with a series of innovative cloud-native AI capabilities, ACK builds a cloud-native AI foundation for users' AI intelligent computing production systems.

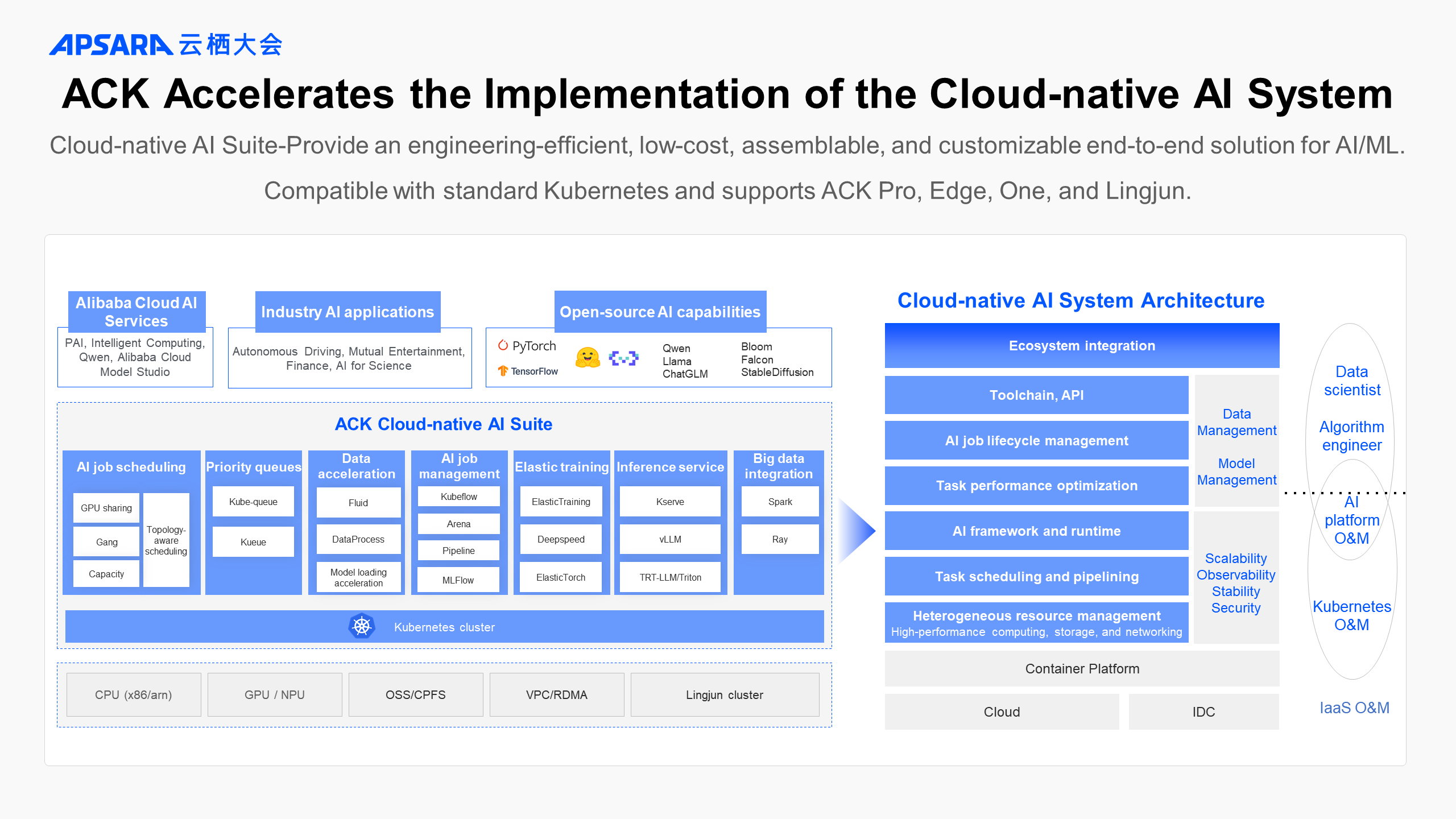

Over the years, ACK has supported a large number of customers running AI and big data workloads and scheduling GPU clusters. We distilled these production practices into the ACK cloud-native AI suite, which became commercially available in 2023.

The cloud-native AI suite is compatible with standard Kubernetes and supports cluster types such as ACK Pro, ACK Edge, ACK One, and ACK Lingjun, as well as the new ACS. It is used in production by customers in fields including autonomous driving, AI for science, the Internet, finance, and large model services.

ACK was also the first in the industry to propose a layered architecture for cloud-native AI systems. Serving different roles, this architecture provides unified management of underlying heterogeneous resources, task orchestration and scheduling, AI framework support, AI task lifecycle management, and performance optimization, and it integrates with a wide range of open-source AI communities through standard APIs.

Through the cloud-native AI suite, we aim to provide AI/ML customers and the community with a complete end-to-end solution that offers high engineering efficiency, low cost, and customizable assembly. The goal is to help users build the foundation of a cloud-native AI platform in their Kubernetes clusters within 30 minutes.


Over the past year, rapid progress in LLMs and AIGC has greatly accelerated the evolution of distributed AI training and inference technologies, as well as of scheduling and management technologies for large-scale GPU intelligent computing clusters.

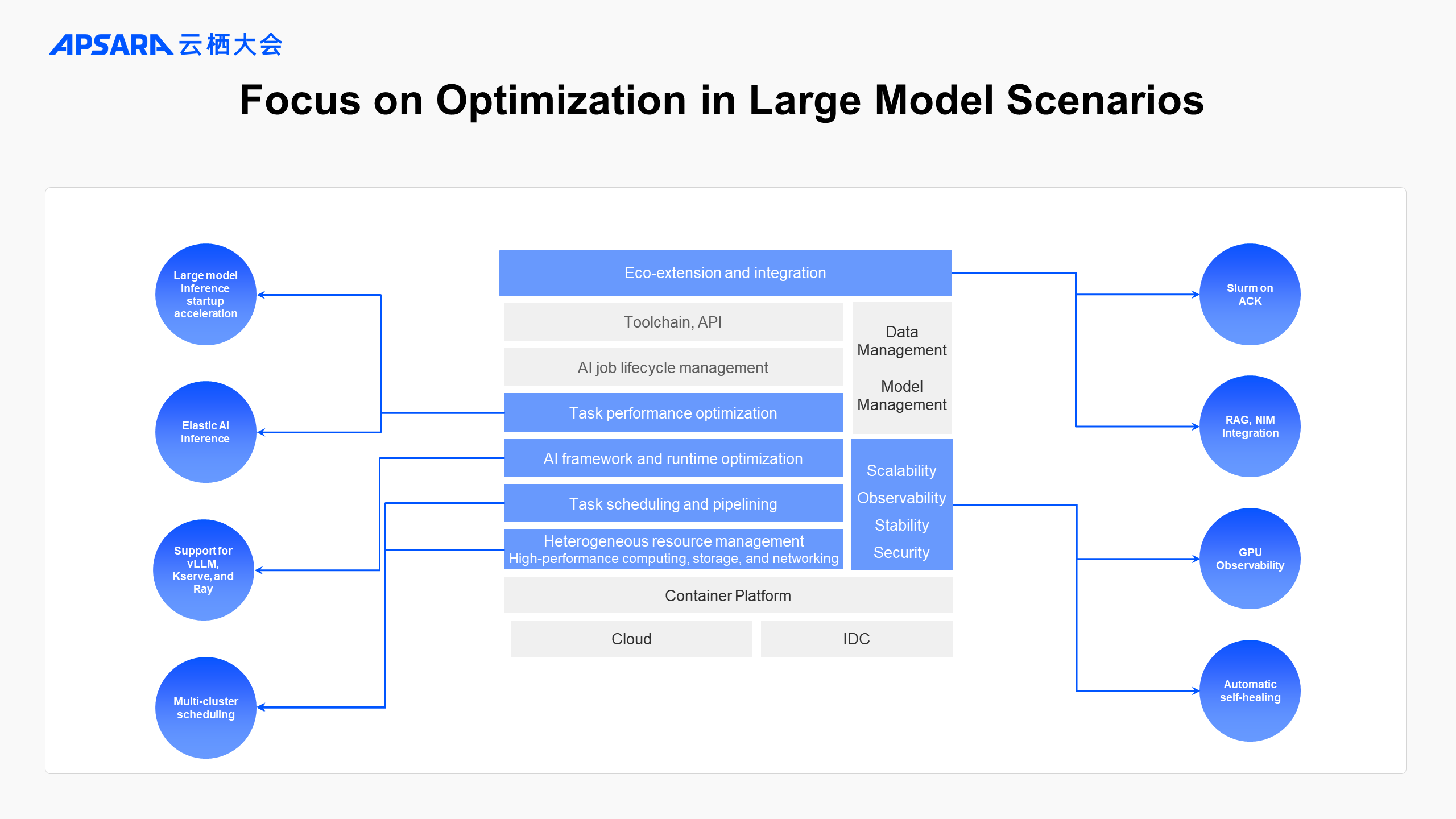

In this context, the ACK cloud-native AI suite focuses on large model scenarios and provides a series of foundational capability optimizations and innovative practices.

Large models bring many challenges and demands to the underlying infrastructure: large-scale GPU resource requirements, cluster stability, elastic task policies and performance optimization, and integration with fast-growing model and computing framework ecosystems.

The cloud-native AI suite addresses these requirements by optimizing each layer of its architecture:

It prioritizes enhancing GPU observability and fault self-healing capabilities.

It implements unified scheduling of AI tasks across multi-cluster and cross-region GPU resource pools to address GPU resource shortages.

It provides built-in best practices for vLLM, KServe, and other open-source large model inference engines.

It optimizes the performance and cost of AI inference services through more flexible auto scaling policies.

It integrates more distributed computing and task scheduling systems, such as Ray and Slurm, as well as many excellent LLM application architectures, such as RAG platforms like Dify and Flowise and the NVIDIA NIM large model inference acceleration solution. This gives customers' cloud-native AI systems wider and deeper connections with the community.

The following section describes these new capabilities of the ACK cloud-native AI suite in more detail.

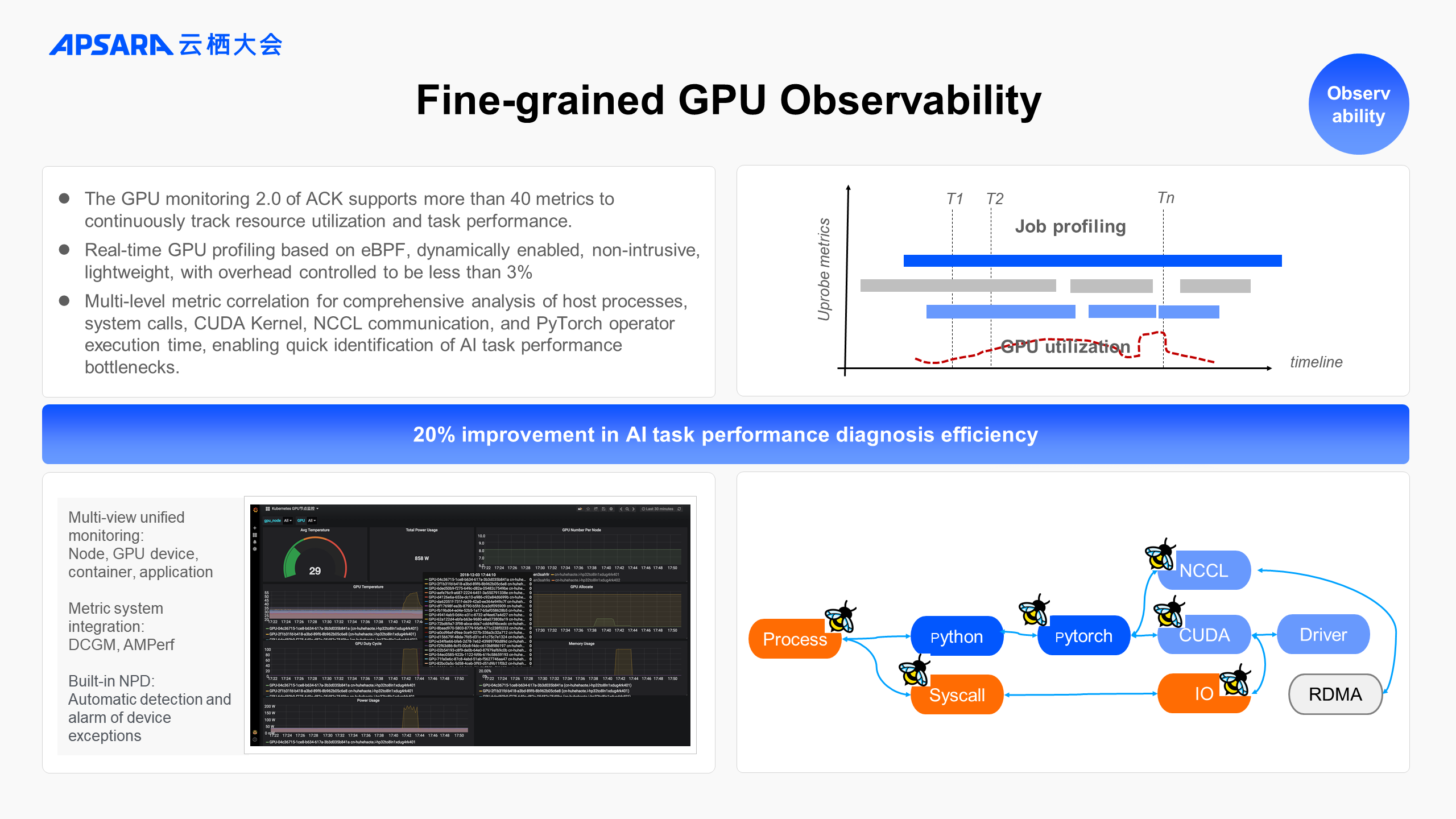

First, real-time observation and analysis of GPU resource utilization and GPU task performance are essential for understanding and optimizing the efficiency of AI intelligent computing systems.

For monitoring, the ACK GPU monitoring dashboard provides more than 40 metrics by default, covering resource utilization and task performance from different perspectives.
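Dashboards like this are typically backed by Prometheus queries. The sketch below builds two PromQL expressions, one grouped per node and one scoped to a single pod, to show how the same metric can be viewed from the resource and task perspectives. It assumes the NVIDIA DCGM exporter metric `DCGM_FI_DEV_GPU_UTIL` and `Hostname`, `namespace`, and `pod` labels; the metric and label names that ACK actually exposes may differ.

```python
# Metric and label names below assume the NVIDIA DCGM exporter; ACK's may differ.
DEFAULT_METRIC = "DCGM_FI_DEV_GPU_UTIL"


def gpu_util_by_label(label: str, metric: str = DEFAULT_METRIC) -> str:
    """Average GPU utilization grouped by one label, e.g. per node (resource view)."""
    return f"avg by ({label}) ({metric})"


def gpu_util_for_pod(namespace: str, pod: str, metric: str = DEFAULT_METRIC) -> str:
    """Average utilization across all GPUs attached to one pod (task view)."""
    return f'avg({metric}{{namespace="{namespace}", pod="{pod}"}})'


print(gpu_util_by_label("Hostname"))
print(gpu_util_for_pod("default", "train-demo"))  # hypothetical pod
```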

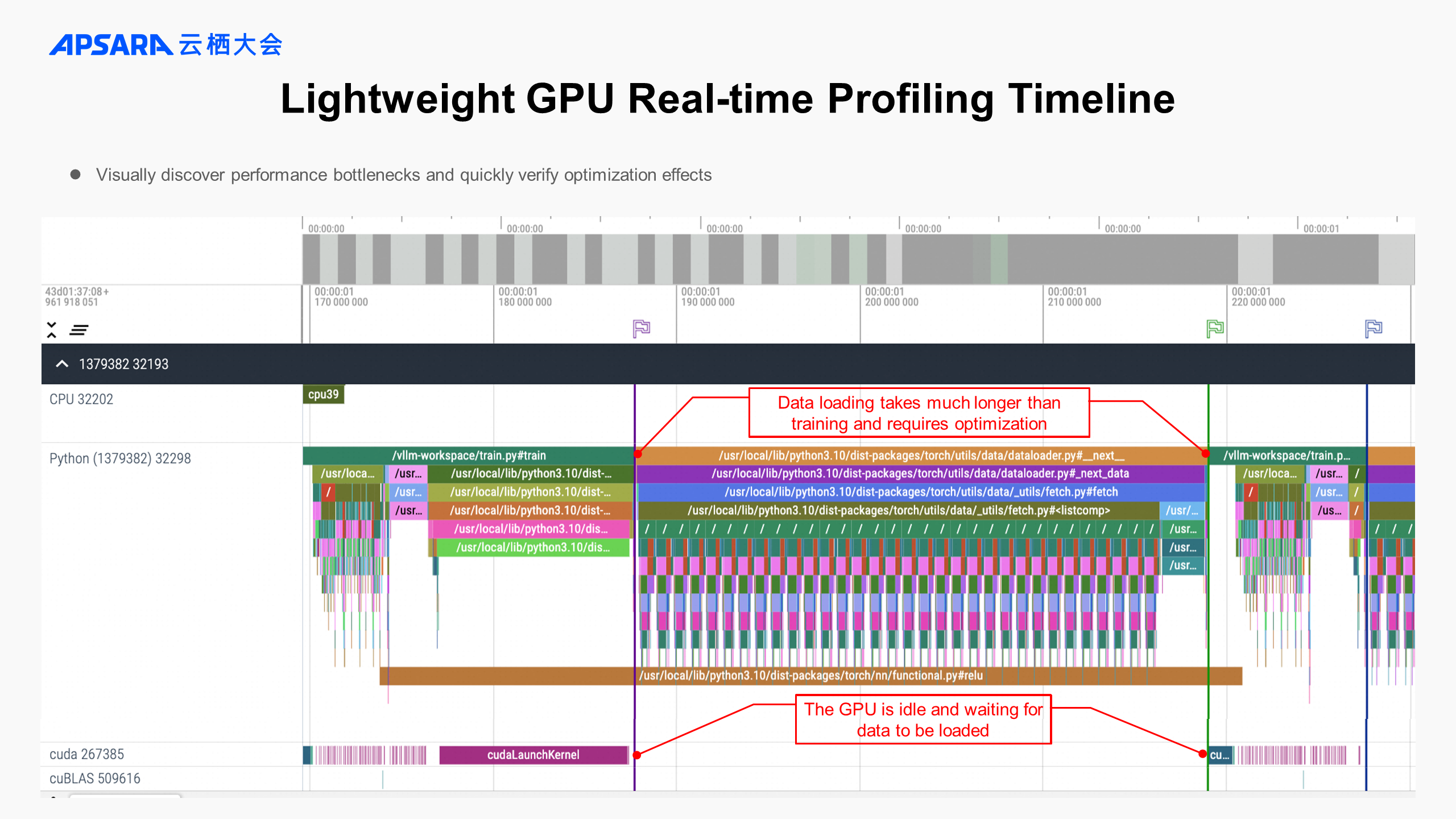

For diagnostics, ACK adds real-time GPU profiling based on eBPF. While an AI task is running, profiling can be enabled dynamically to trace the task's execution continuously and non-intrusively.

CPU processes, system calls, Python and PyTorch method calls, CUDA operators, NCCL communication, and data I/O operations are correlated and analyzed on a single timeline. This lets you locate performance bottlenecks in online AI tasks more intuitively and quickly.

Compared with offline diagnostic tools such as Nsight and PyTorch Profiler, eBPF-based real-time GPU profiling is more lightweight and can be enabled dynamically without interrupting tasks.

This makes it easier to start a diagnosis as soon as a problem occurs online and to find optimization points promptly, improving the diagnosis efficiency of AI tasks on ACK by 20%.

As the preceding example shows, analyzing the call times of CUDA operators and the torch training code reveals that loading training data takes much longer than training computation, which points to a clear optimization opportunity.
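The kind of conclusion drawn above can be reproduced with a simple aggregation over profiling spans. The sketch below is not ACK's profiler: it assumes a hypothetical list of already-collected, non-overlapping spans tagged with a category, sums the time spent per category, and reports which category dominates a training step.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Span:
    category: str  # hypothetical tags such as "data_io", "cuda_kernel", "nccl"
    start_ms: float
    end_ms: float


def time_by_category(spans: List[Span]) -> Dict[str, float]:
    """Sum span durations per category (assumes spans in one category do not overlap)."""
    totals: Dict[str, float] = defaultdict(float)
    for s in spans:
        totals[s.category] += s.end_ms - s.start_ms
    return dict(totals)


def dominant_category(spans: List[Span]) -> str:
    """Category with the largest total time."""
    totals = time_by_category(spans)
    return max(totals, key=totals.get)


# Hypothetical trace of one training step in which data loading dominates.
step = [Span("data_io", 0, 120), Span("cuda_kernel", 120, 150), Span("nccl", 150, 160)]
print(time_by_category(step))  # {'data_io': 120.0, 'cuda_kernel': 30.0, 'nccl': 10.0}
print(dominant_category(step))  # data_io
```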

The stability of AI intelligent computing clusters greatly affects the efficiency and cost of model training and inference.

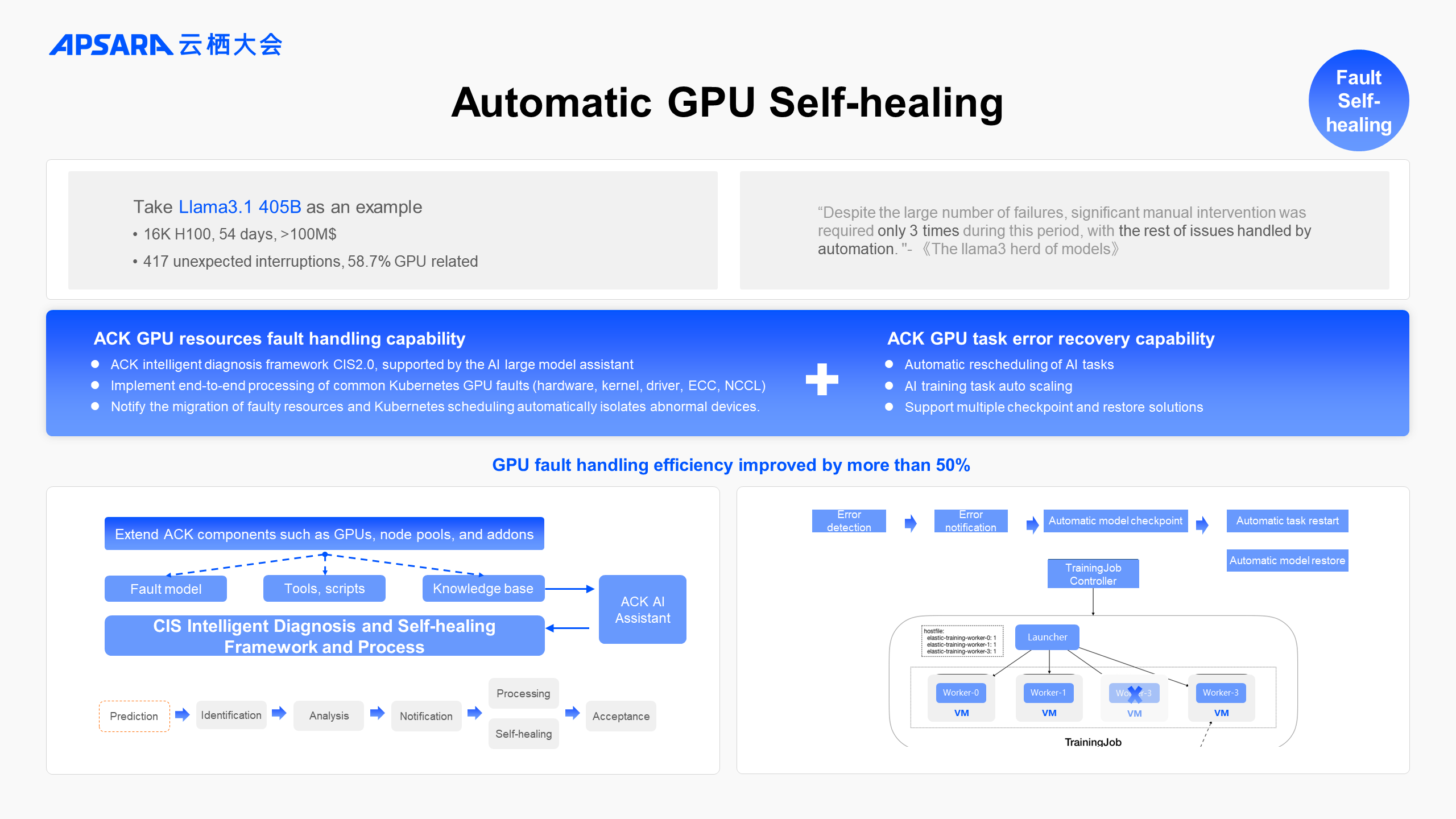

When Meta trained the Llama 3.1 405B model over 54 days, there were 417 unexpected interruptions, 58.7% of which were caused by GPU-related failures.

Automatic fault handling was crucial to completing Llama 3.1 training. According to Meta's technical report, all faults except three that required manual intervention were handled automatically.
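The figures quoted above show why automation matters. The short calculation below uses only those numbers (54 days, 417 interruptions, 58.7% GPU-related, three manual interventions); results are rounded.

```python
days = 54
interruptions = 417
gpu_share = 0.587
manual_interventions = 3

hours = days * 24                                # 1296 hours of training
mean_hours_between = hours / interruptions       # one interruption roughly every 3.1 hours
gpu_related = round(interruptions * gpu_share)   # roughly 245 GPU-related interruptions
automated_share = (interruptions - manual_interventions) / interruptions

print(f"{mean_hours_between:.1f} h between interruptions")  # 3.1 h between interruptions
print(gpu_related)                                          # 245
print(f"{automated_share:.1%} handled automatically")      # 99.3% handled automatically
```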

In container-based intelligent computing scenarios, ACK provides the CIS intelligent diagnosis and self-healing system. Components such as GPUs, node pools, and Kubernetes add-ons can extend it with specific fault types and custom fault diagnosis and recovery tools and scripts. Root cause analysis is accelerated by integrating the ACK AI assistant with an LLM knowledge base. As a result, the entire process from fault discovery to self-healing is handled automatically.

ACK combines automatic handling of GPU resource faults with the error recovery capabilities of AI tasks. When a GPU fault is detected, the underlying IaaS is notified to diagnose, migrate, and repair the resource, while Kubernetes scheduling automatically isolates the faulty resource so that it does not affect new tasks. At the same time, ACK works with the training framework to trigger fast model checkpointing and reschedules the task under appropriate conditions to resume training.
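The workflow described above can be sketched as a small planner that maps a detected fault to an ordered list of actions, returned as strings rather than executed. Everything here is hypothetical: the fault type names, the taint key `example.com/gpu-fault`, and the rule that transient faults skip node isolation are assumptions, not ACK's actual CIS policies. The taint uses the standard Kubernetes `NoSchedule` effect, which keeps new pods off the node.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical fault catalogue: fault type -> whether the node must be isolated.
ISOLATE_NODE: Dict[str, bool] = {
    "gpu_fallen_off_bus": True,
    "ecc_uncorrectable": True,
    "nccl_timeout": False,  # assumed transient: restart the job but keep the node
}


@dataclass
class FaultEvent:
    node: str
    fault_type: str
    job: str


def isolation_taint(fault_type: str) -> Dict[str, str]:
    """Kubernetes taint (hypothetical key) that keeps new pods off the faulty node."""
    return {"key": "example.com/gpu-fault", "value": fault_type, "effect": "NoSchedule"}


def plan_remediation(ev: FaultEvent) -> List[str]:
    """Return the ordered remediation steps for one fault event."""
    steps: List[str] = []
    # Unknown fault types are treated conservatively and isolate the node.
    if ISOLATE_NODE.get(ev.fault_type, True):
        t = isolation_taint(ev.fault_type)
        steps.append(f"notify-iaas {ev.node}")
        steps.append(f"kubectl taint nodes {ev.node} {t['key']}={t['value']}:{t['effect']}")
    steps.append(f"checkpoint {ev.job}")
    steps.append(f"reschedule {ev.job}")
    return steps


for action in plan_remediation(FaultEvent("node-a", "ecc_uncorrectable", "llm-train")):
    print(action)
```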

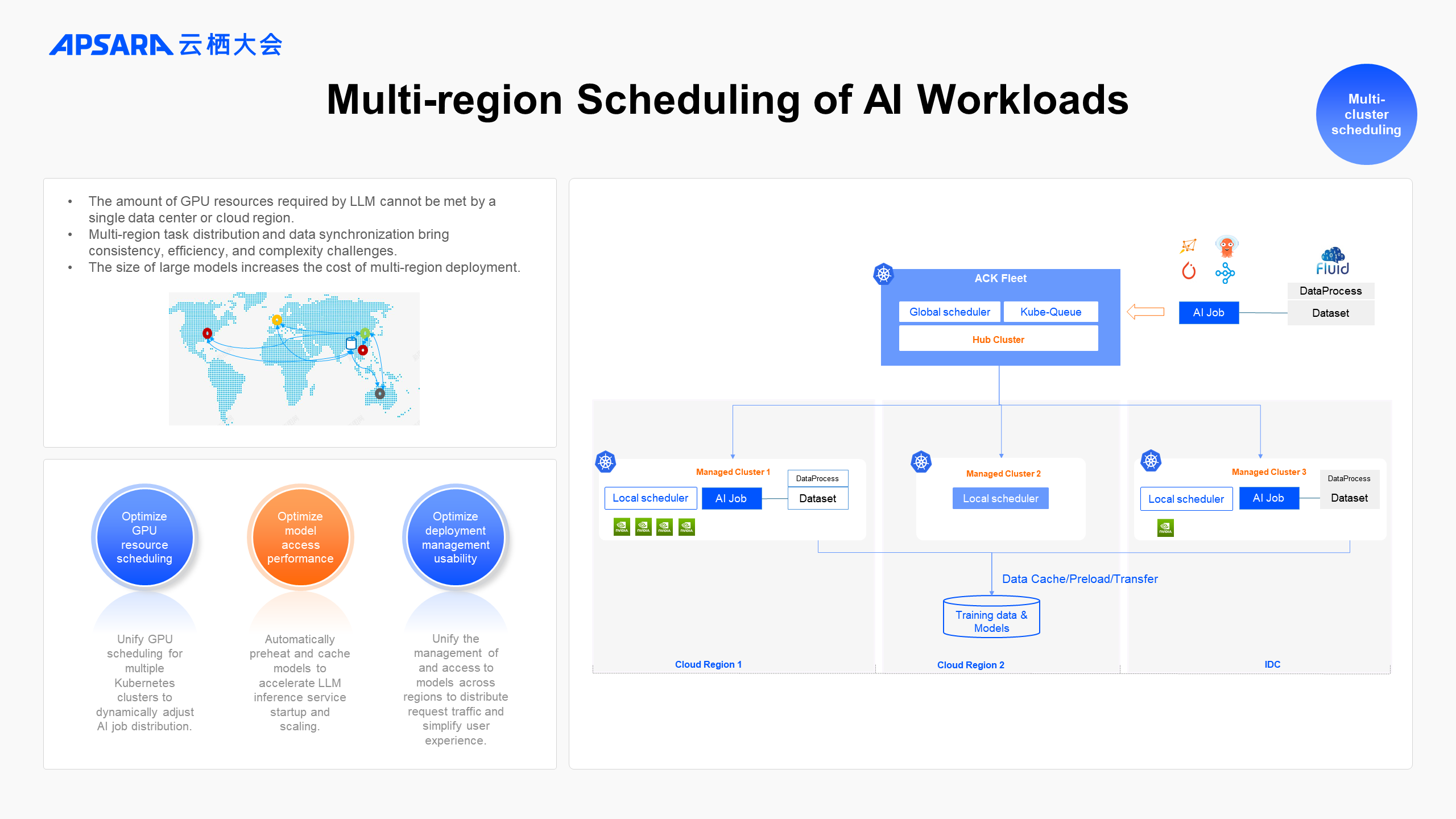

The scaling laws for large models drive continuous growth in GPU computing requirements. A single data center or cloud region may not be able to meet the large-scale GPU demands of big tasks, so the industry has been experimenting with unified scheduling of AI tasks across multi-region, multi-cluster resources.

However, multi-region task distribution and data synchronization bring consistency, efficiency, and complexity challenges.

ACK enhances the compute and data co-scheduling capabilities of ACK One, which lets you schedule AI workloads across regions in multiple Kubernetes clusters. It supports strategies such as inventory priority and cost optimization to ease GPU resource provisioning challenges. In addition, the distribution of AI jobs must be observed and adjusted dynamically to keep GPU resources and AI workloads optimally matched.
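The two placement strategies named above can be illustrated with a toy selector. This is a hypothetical sketch, not ACK One's scheduler: it assumes each cluster reports its free GPUs and a per-GPU-hour price, and that a job must fit entirely inside one cluster.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ClusterOffer:
    name: str
    free_gpus: int
    price_per_gpu_hour: float


def pick_cluster(offers: List[ClusterOffer], gpus_needed: int,
                 strategy: str = "inventory") -> Optional[str]:
    """Return the chosen cluster name, or None if no single cluster can host the job."""
    feasible = [o for o in offers if o.free_gpus >= gpus_needed]
    if not feasible:
        return None
    if strategy == "inventory":
        # Inventory priority: prefer the cluster with the most spare GPUs.
        best = max(feasible, key=lambda o: o.free_gpus)
    elif strategy == "cost":
        # Cost optimization: prefer the cheapest cluster that fits.
        best = min(feasible, key=lambda o: o.price_per_gpu_hour)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return best.name


# Hypothetical clusters, inventory, and prices.
offers = [
    ClusterOffer("cluster-a", 16, 2.0),
    ClusterOffer("cluster-b", 64, 2.5),
    ClusterOffer("cluster-c", 8, 1.5),
]
print(pick_cluster(offers, 16, "inventory"))  # cluster-b
print(pick_cluster(offers, 16, "cost"))       # cluster-a
```

The dynamic rebalancing mentioned above would amount to rerunning a decision like this as inventory and prices change.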

Note that the sheer size of large models affects the cost and performance of deploying model services in multiple regions. Automatically preheating and caching models in different regions accelerates the startup and auto scaling of LLM inference services across clusters and improves the efficiency and cost of GPU resource usage.

At the same time, managing cross-region model service request traffic in a unified manner simplifies the user experience in multi-cluster environments.

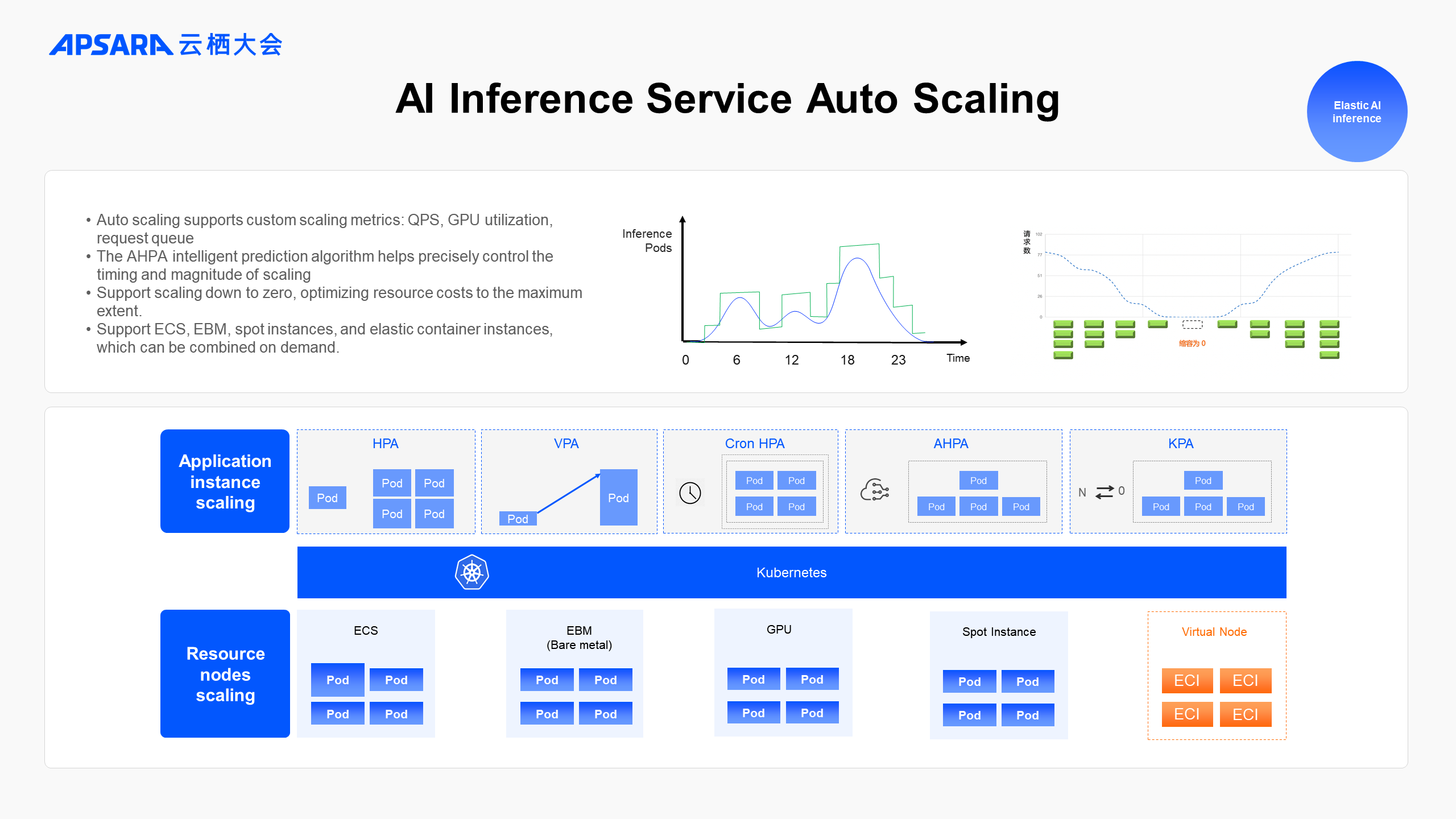

Like many online businesses, AI inference services have peak and off-peak traffic hours. On the cloud, auto scaling is a best practice for handling changes in service traffic.

ACK supports automatic scaling of AI inference services based on custom metrics such as QPS, request latency, GPU utilization, and token request queue length.
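Scaling on such metrics follows the standard Kubernetes Horizontal Pod Autoscaler rule, `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`, with no change when the ratio is within a tolerance (0.1 by default). The sketch below reimplements that rule in Python for illustration; the choice of metric (here, average pending requests per replica) and the replica bounds are assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     tolerance: float = 0.1, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """HPA-style replica calculation for a single metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas, average of 12 pending requests per replica, target 10.
print(desired_replicas(4, 12, 10))    # 5
print(desired_replicas(4, 30, 10))    # 10 (12 is capped by max_replicas)
print(desired_replicas(4, 10.5, 10))  # 4 (within tolerance)
```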

To provide more timely and accurate resource elasticity, ACK offers AHPA, an intelligent elasticity policy driven by time series prediction algorithms, to precisely control the timing and magnitude of scaling.
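AHPA's prediction algorithm is not described here, so the sketch below swaps in the simplest seasonal forecaster, a seasonal-naive model that assumes traffic repeats with a fixed period, to illustrate sizing replicas for predicted rather than current load. The season length, lead time, per-replica capacity, and traffic samples are hypothetical.

```python
import math
from typing import List, Sequence


def seasonal_naive_forecast(history: Sequence[float], season: int, horizon: int) -> List[float]:
    """Forecast the next `horizon` points by repeating the last observed season."""
    if len(history) < season:
        raise ValueError("need at least one full season of history")
    n = len(history)
    return [history[n - season + (h % season)] for h in range(horizon)]


def replicas_ahead(history: Sequence[float], season: int, lead_steps: int,
                   capacity_per_replica: float, min_replicas: int = 1) -> int:
    """Provision now for the peak expected within the next `lead_steps` intervals."""
    peak = max(seasonal_naive_forecast(history, season, lead_steps))
    return max(min_replicas, math.ceil(peak / capacity_per_replica))


# Hypothetical QPS samples covering two "days" of four intervals each.
qps = [10, 50, 90, 30, 12, 55, 95, 35]
print(seasonal_naive_forecast(qps, season=4, horizon=3))  # [12, 55, 95]
print(replicas_ahead(qps, season=4, lead_steps=2, capacity_per_replica=20))  # 3
```

Provisioning for the peak over a lead window gives slow-starting instances, such as LLM servers, time to become ready before the traffic arrives.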

Combined with Knative, ACK also supports scaling AI inference instances down to zero to minimize resource costs.
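A minimal sketch of a Knative Service that is allowed to scale to zero is shown below as a Python dict. It uses Knative's `autoscaling.knative.dev/min-scale` and `autoscaling.knative.dev/max-scale` annotations and assumes scale to zero is enabled cluster-wide (the Knative default); the service name, image, and GPU request are hypothetical, and ACK-specific settings are not shown.

```python
import json


def knative_service(name: str, image: str, min_scale: int = 0, max_scale: int = 4,
                    gpus: int = 1) -> dict:
    """Knative Service manifest whose revisions may scale down to zero instances."""
    return {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "template": {
                "metadata": {
                    "annotations": {
                        # Annotation values must be strings.
                        "autoscaling.knative.dev/min-scale": str(min_scale),
                        "autoscaling.knative.dev/max-scale": str(max_scale),
                    }
                },
                "spec": {
                    "containers": [
                        {"image": image, "resources": {"limits": {"nvidia.com/gpu": gpus}}}
                    ]
                },
            }
        },
    }


# Hypothetical service name and image.
print(json.dumps(knative_service("llm-demo", "registry.example.com/llm:latest"), indent=2))
```

With `min-scale` set to "0", the first request after an idle period pays the full cold start cost, which is why model loading speed matters so much for this mode.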

However, auto scaling inference services brings additional challenges at scale, especially in large model scenarios. For example:

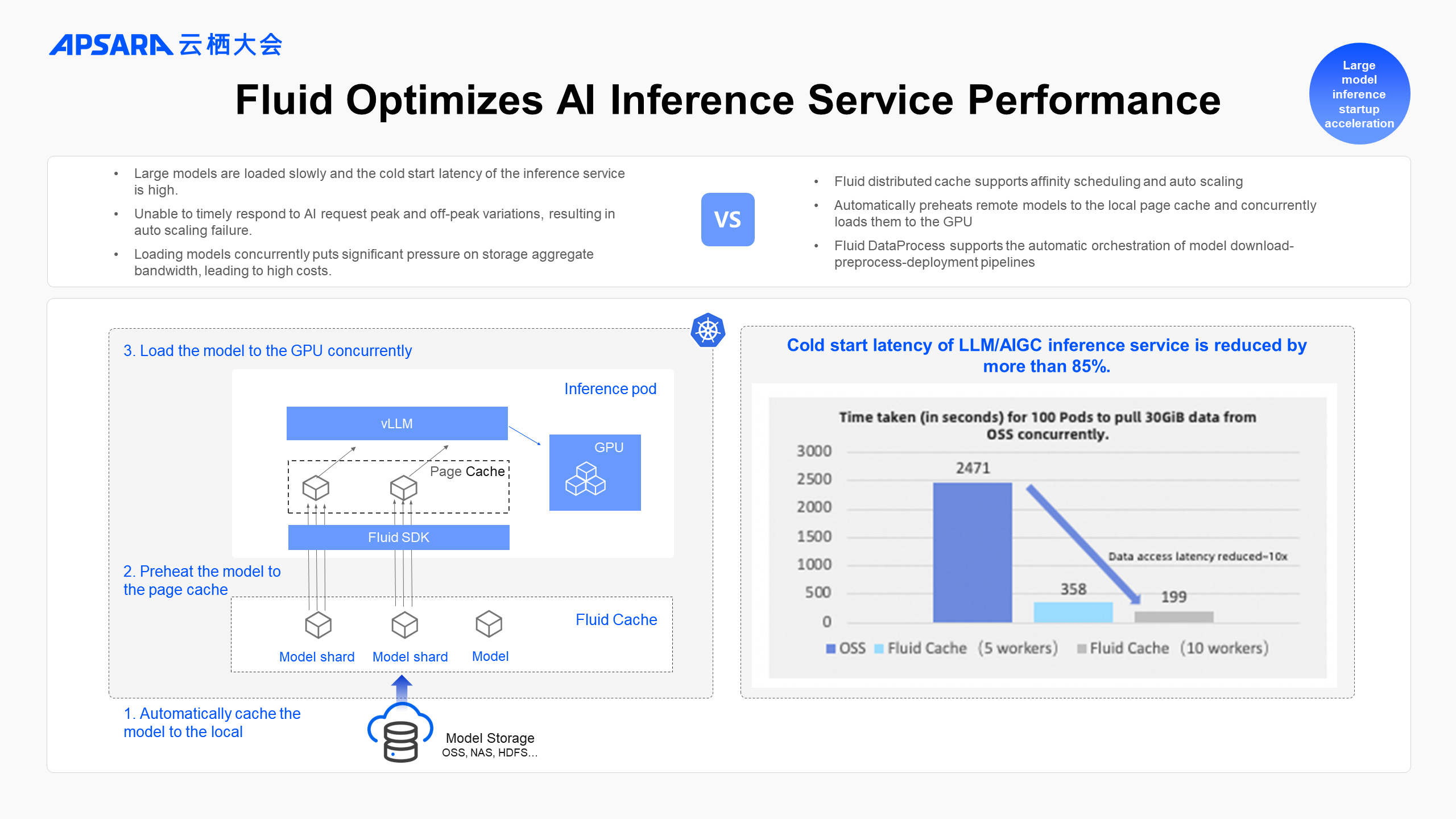

Large models load slowly, so the cold start latency of inference services is high.

Scaling cannot keep up with peak and off-peak changes in AI requests, so auto scaling fails to take effect in time.

Loading models concurrently puts heavy pressure on aggregate storage bandwidth, which drives up costs.
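A rough calculation shows why concurrent loading strains storage. The numbers are hypothetical: a 70B-parameter model stored in FP16 (2 bytes per parameter) is about 140 GB, and if 20 new replicas must each pull the full model from remote storage within 60 seconds, the storage backend must sustain the aggregate rate computed below.

```python
def model_size_gb(params: float, bytes_per_param: int) -> float:
    """Approximate weight size in GB (10^9 bytes)."""
    return params * bytes_per_param / 1e9


def required_aggregate_bandwidth(replicas: int, size_gb: float, load_seconds: float) -> float:
    """GB/s of storage throughput needed if every replica reads the whole model at once."""
    return replicas * size_gb / load_seconds


size = model_size_gb(70e9, 2)                    # 140.0 GB (hypothetical 70B FP16 model)
bw = required_aggregate_bandwidth(20, size, 60)  # about 46.7 GB/s (hypothetical scale-out)
print(f"{size:.0f} GB per replica, {bw:.1f} GB/s aggregate")
```

Serving repeated reads from a local or distributed cache instead of remote storage is what relieves this pressure.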

The ACK cloud-native AI suite extends the cache acceleration of Fluid Datasets to large model inference. You can use Fluid Dataset and Preload to automatically preheat remote large model parameters into the local page cache, and use the Fluid SDK to load the parameters into GPU memory concurrently.
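The Fluid SDK's own API is not shown here. As a generic stand-in, the sketch below reads several model shard files in parallel with Python threads, which suit this job because file reads release the GIL. It assumes the shards are already visible on a local path (for example, a Fluid-mounted volume whose data was preheated into the page cache); the shard paths are hypothetical and the final copy into GPU memory is omitted.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def read_shard(path: str, chunk_size: int = 8 << 20) -> bytes:
    """Read one shard sequentially in large chunks (8 MiB by default)."""
    chunks = []
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:
                break
            chunks.append(chunk)
    return b"".join(chunks)


def load_shards_concurrently(paths: List[str], workers: int = 8) -> Dict[str, bytes]:
    """Read all shards in parallel and return their contents keyed by path.

    A real loader would copy each buffer into GPU memory as soon as it arrives.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(zip(paths, pool.map(read_shard, paths)))


# Hypothetical usage with safetensors-style shard names on a mounted model volume:
# weights = load_shards_concurrently([f"/models/llm/model-{i:05d}.safetensors" for i in range(1, 5)])
```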

End-to-end model caching and preheating optimizations reduce the cold start latency of LLM/AIGC inference services by more than 85%. They accelerate model service containers not only on ECS/EGS and bare metal instances but also on ECI/ACS serverless instances, making auto scaling of large model inference services both feasible and practical.

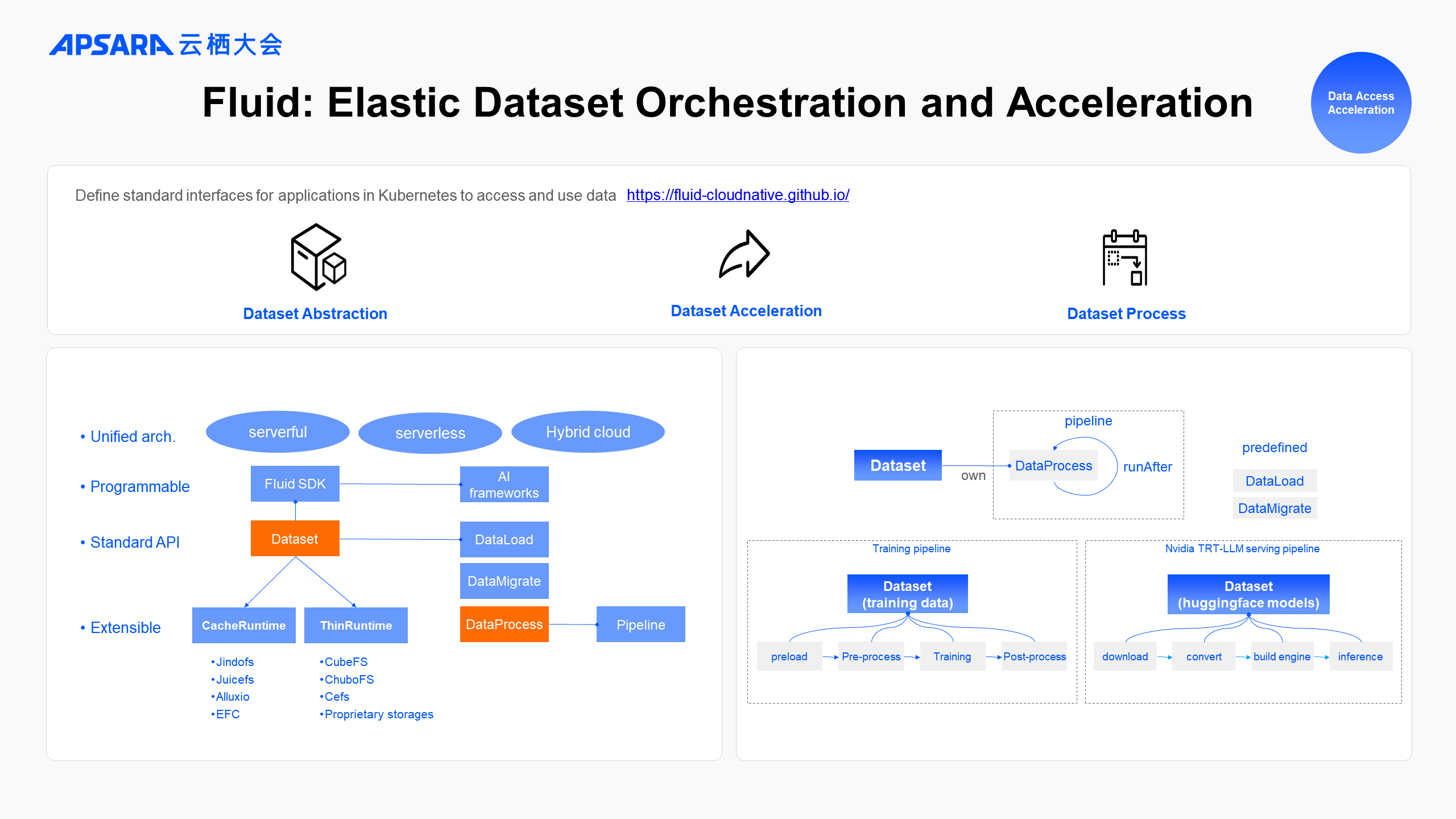

Fluid is an open-source project for cloud-native dataset orchestration and acceleration, co-founded by Alibaba Cloud, Nanjing University, Alluxio, and other organizations. Fluid defines a standard interface through which applications in Kubernetes access and consume diverse data sources. Its Dataset abstraction models how tasks use data, and it supports distributed caching to accelerate dataset access as well as minimalist, dataset-centric data processing pipelines.
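To make the Dataset abstraction concrete, the sketch below builds three Fluid resources as Python dicts: a `Dataset` that mounts a remote model directory, a `JindoRuntime` with the same name that provides the distributed cache, and a `DataLoad` that preheats the cache. The bucket URI, names, cache size, and choice of JindoRuntime are assumptions; object store credentials are omitted, and field names should be checked against the Fluid version in use.

```python
import json
from typing import List


def fluid_model_cache(name: str, remote_uri: str, cache_quota: str = "100Gi",
                      replicas: int = 2, namespace: str = "default") -> List[dict]:
    """Dataset + cache runtime + preload job for one model directory."""
    dataset = {
        "apiVersion": "data.fluid.io/v1alpha1",
        "kind": "Dataset",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"mounts": [{"mountPoint": remote_uri, "name": name}]},
    }
    runtime = {
        "apiVersion": "data.fluid.io/v1alpha1",
        "kind": "JindoRuntime",  # binds to the Dataset that has the same name
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "replicas": replicas,
            "tieredstore": {
                "levels": [{"mediumtype": "MEM", "path": "/dev/shm", "quota": cache_quota}]
            },
        },
    }
    dataload = {
        "apiVersion": "data.fluid.io/v1alpha1",
        "kind": "DataLoad",
        "metadata": {"name": f"{name}-warmup", "namespace": namespace},
        "spec": {
            "dataset": {"name": name, "namespace": namespace},
            "target": [{"path": "/", "replicas": 1}],  # preload the whole dataset once
        },
    }
    return [dataset, runtime, dataload]


# Hypothetical dataset name and bucket.
for obj in fluid_model_cache("llm-weights", "oss://example-bucket/models/llm/"):
    print(json.dumps(obj))
```

Pods then typically mount the cached data through the PersistentVolumeClaim that Fluid creates with the Dataset's name.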

Fluid can accelerate both the reading of training data by distributed training tasks and the startup of large model inference services. You can use Fluid DataProcess to customize the orchestration of the download, preprocess, and deploy workflow for inference service models. For example, for TensorRT-LLM, the convert model > build engine > serving steps can be executed automatically.
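The download, convert, build, and serve flow is an ordered pipeline in which each step consumes the previous step's output. The sketch below expresses that dependency in plain Python; it is not the Fluid DataProcess API, and the hypothetical step functions only derive paths instead of calling TensorRT-LLM tools.

```python
from typing import Callable, Dict, List, Tuple

Context = Dict[str, str]
Step = Tuple[str, Callable[[Context], Context]]


def run_pipeline(steps: List[Step], ctx: Context) -> Tuple[List[str], Context]:
    """Run steps in order; each returns new keys that later steps can read."""
    completed: List[str] = []
    for name, fn in steps:
        ctx = {**ctx, **fn(ctx)}  # a missing upstream key raises KeyError and stops the run
        completed.append(name)
    return completed, ctx


# Hypothetical steps that only derive paths; real steps would run conversion and build jobs.
steps: List[Step] = [
    ("download", lambda c: {"checkpoint_dir": f"/cache/{c['model']}/hf"}),
    ("convert", lambda c: {"converted_dir": c["checkpoint_dir"].replace("/hf", "/trtllm-ckpt")}),
    ("build_engine", lambda c: {"engine_dir": c["converted_dir"].replace("-ckpt", "-engine")}),
    ("serve", lambda c: {"endpoint": f"http://{c['model']}-svc:8000"}),
]

done, final = run_pipeline(steps, {"model": "llama-demo"})
print(done)                 # ['download', 'convert', 'build_engine', 'serve']
print(final["engine_dir"])  # /cache/llama-demo/trtllm-engine
```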

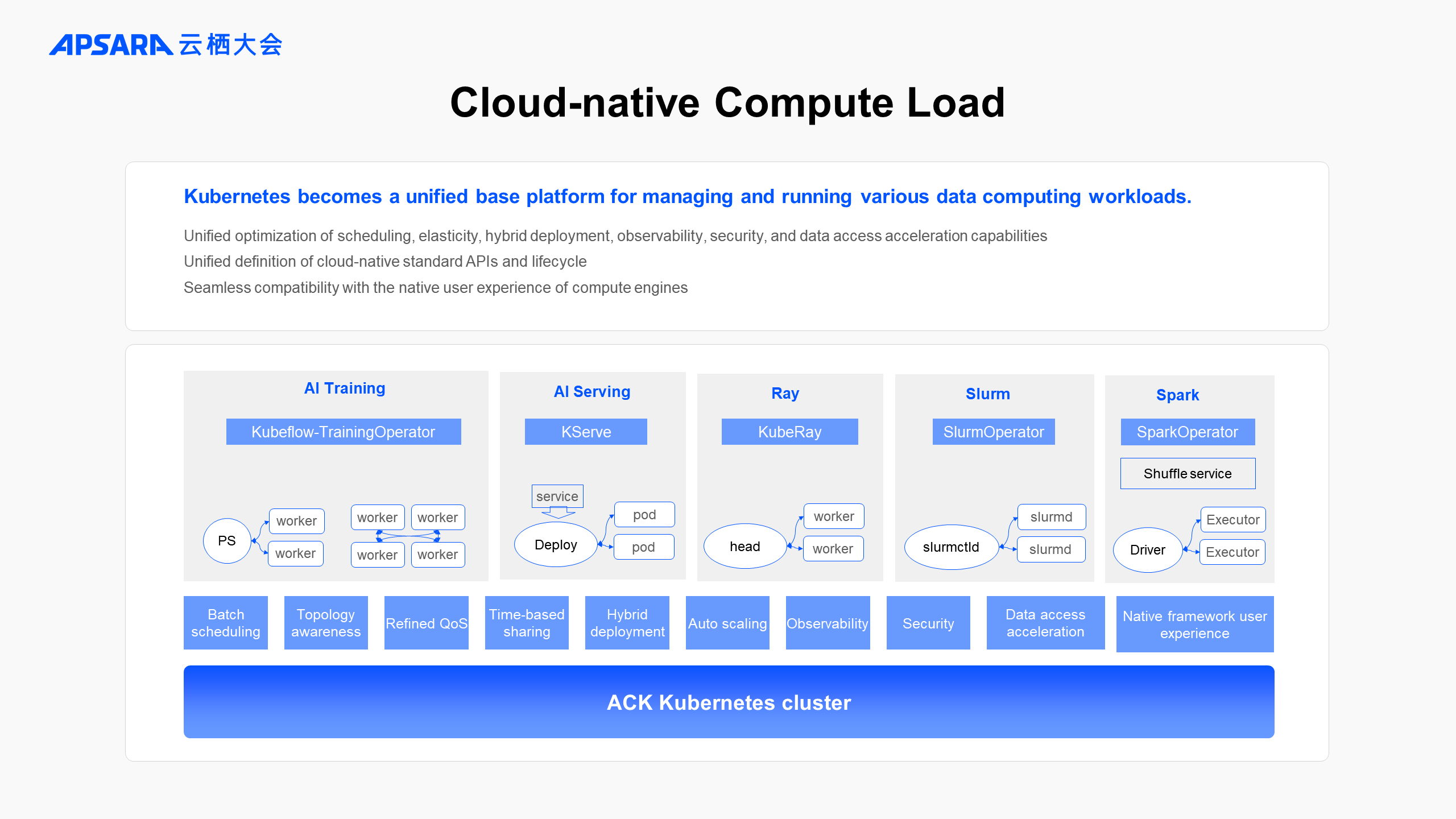

As many customers' IT architectures evolve, the integration of intelligent computing and big data applications with cloud-native technologies is becoming a clear trend. The community is working to make Kubernetes a unified platform for managing and running all kinds of data computing workloads.

In ACK Kubernetes clusters, you can easily and efficiently run AI training tasks, inference services, and Spark/Flink big data tasks.

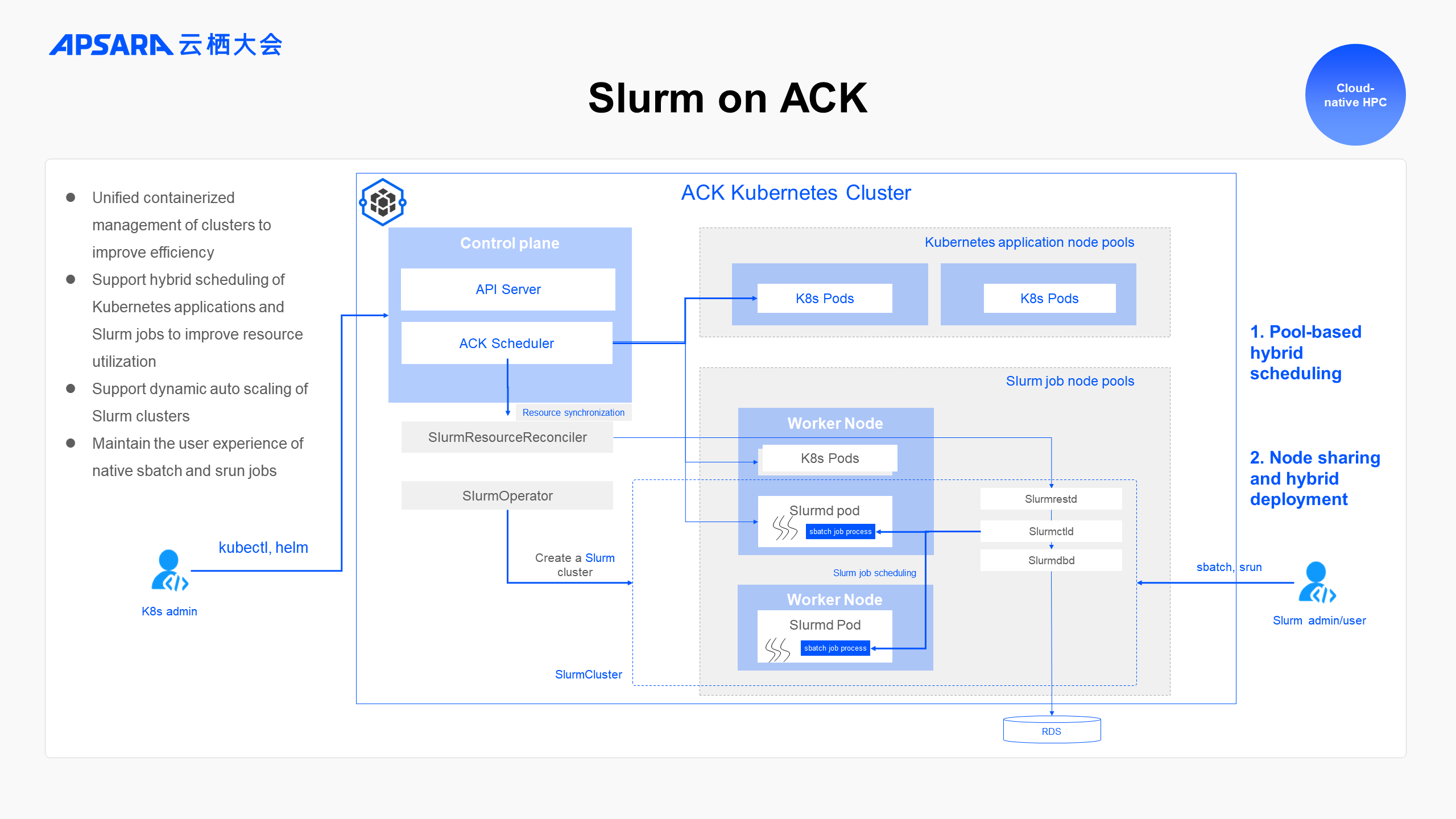

In addition, ACK adds support for the Ray distributed computing framework and for Slurm, the task scheduler from the traditional HPC domain. The Ray on ACK and Slurm on ACK solutions manage Ray and Slurm clusters and jobs through the standard Operator mechanism and use the strengths of the cloud and containers to optimize scheduling, elasticity, hybrid deployment, observability, security, data access acceleration, and other capabilities, while remaining seamlessly compatible with the native Ray and Slurm user experience.
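In the open-source community, Ray clusters on Kubernetes are usually declared through the KubeRay operator's `RayCluster` resource. Assuming a KubeRay-compatible operator (whether Ray on ACK uses exactly this resource is not stated here), a minimal cluster with one head pod and an autoscalable GPU worker group can be built as follows; the image tag, group name, and sizes are hypothetical.

```python
import json


def ray_cluster(name: str, image: str = "rayproject/ray:2.9.0-gpu", workers: int = 2,
                max_workers: int = 8, gpus_per_worker: int = 1) -> dict:
    """Minimal KubeRay RayCluster manifest: one head pod plus a GPU worker group."""
    return {
        "apiVersion": "ray.io/v1",
        "kind": "RayCluster",
        "metadata": {"name": name},
        "spec": {
            "headGroupSpec": {
                "rayStartParams": {"dashboard-host": "0.0.0.0"},
                "template": {"spec": {"containers": [{"name": "ray-head", "image": image}]}},
            },
            "workerGroupSpecs": [
                {
                    "groupName": "gpu-workers",  # hypothetical group name
                    "replicas": workers,
                    "minReplicas": 0,
                    "maxReplicas": max_workers,
                    "rayStartParams": {},
                    "template": {
                        "spec": {
                            "containers": [
                                {
                                    "name": "ray-worker",
                                    "image": image,
                                    "resources": {"limits": {"nvidia.com/gpu": gpus_per_worker}},
                                }
                            ]
                        }
                    },
                }
            ],
        },
    }


print(json.dumps(ray_cluster("ray-demo"), indent=2))
```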

ACK is also actively contributing to the upstream open-source community by defining, within the Kubernetes ecosystem, standard cloud-native task APIs and lifecycles that support various computing frameworks and task types. This lets you manage and schedule all types of data computing workloads in Kubernetes clusters through unified standards and interfaces.

ACK extends the Kube-scheduler framework to integrate with the Slurm scheduling system, supporting both node-level scheduling within node pools and mixed scheduling of shared node resources. With Kube-queue, you can manage the queuing of Kubernetes jobs and Slurm jobs in a unified manner.
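Unified queuing can be pictured as a single priority queue fed by both systems. The sketch below is a hypothetical illustration, not Kube-queue's implementation: jobs from Kubernetes and Slurm share one queue ordered by priority and then by submission order, and they are admitted strictly in that order while free GPUs remain (no backfilling). Job names and sizes are made up.

```python
import heapq
import itertools
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Job:
    name: str
    source: str  # "k8s" or "slurm"
    priority: int
    gpus: int


class UnifiedQueue:
    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, Job]] = []
        self._seq = itertools.count()  # FIFO tie-break among equal priorities

    def submit(self, job: Job) -> None:
        # heapq is a min-heap, so negate priority to pop the highest first.
        heapq.heappush(self._heap, (-job.priority, next(self._seq), job))

    def admit(self, free_gpus: int) -> List[str]:
        """Admit jobs in queue order until the head job no longer fits."""
        admitted: List[str] = []
        while self._heap and self._heap[0][2].gpus <= free_gpus:
            _, _, job = heapq.heappop(self._heap)
            free_gpus -= job.gpus
            admitted.append(f"{job.source}/{job.name}")
        return admitted


q = UnifiedQueue()
q.submit(Job("train-a", "k8s", priority=1, gpus=4))
q.submit(Job("sim-b", "slurm", priority=5, gpus=8))
q.submit(Job("infer-c", "k8s", priority=5, gpus=2))
print(q.admit(free_gpus=10))  # ['slurm/sim-b', 'k8s/infer-c']
print(q.admit(free_gpus=4))   # ['k8s/train-a']
```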

ACK also synchronizes resource states bidirectionally between Kubernetes and Slurm. Customizable scaling policies dynamically adjust the number of Slurm nodes to auto scale the Slurm cluster, so traditional HPC cluster scheduling can also take advantage of cloud elasticity.
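A customizable scaling policy of this kind might size the Slurm node pool from pending demand. The sketch below is an illustration under stated assumptions: pending CPU demand and the busy node count are taken as inputs (in practice they would come from the synchronized Slurm state), and all function and parameter names are hypothetical.

```python
import math


def target_slurm_nodes(pending_cpus: int, busy_nodes: int, cpus_per_node: int,
                       min_nodes: int, max_nodes: int) -> int:
    """Keep busy nodes, add enough nodes for pending work, and clamp to pool limits."""
    extra = math.ceil(pending_cpus / cpus_per_node)
    return max(min_nodes, min(max_nodes, busy_nodes + extra))


print(target_slurm_nodes(pending_cpus=200, busy_nodes=3, cpus_per_node=64,
                         min_nodes=2, max_nodes=10))  # 7
print(target_slurm_nodes(pending_cpus=0, busy_nodes=1, cpus_per_node=64,
                         min_nodes=2, max_nodes=10))  # 2
```

Scaling in would also require draining idle nodes in Slurm before removing them, which this sketch leaves out.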

HPC users do not need to care about the underlying scheduling details and keep the native experience of submitting sbatch and srun jobs from the login node.

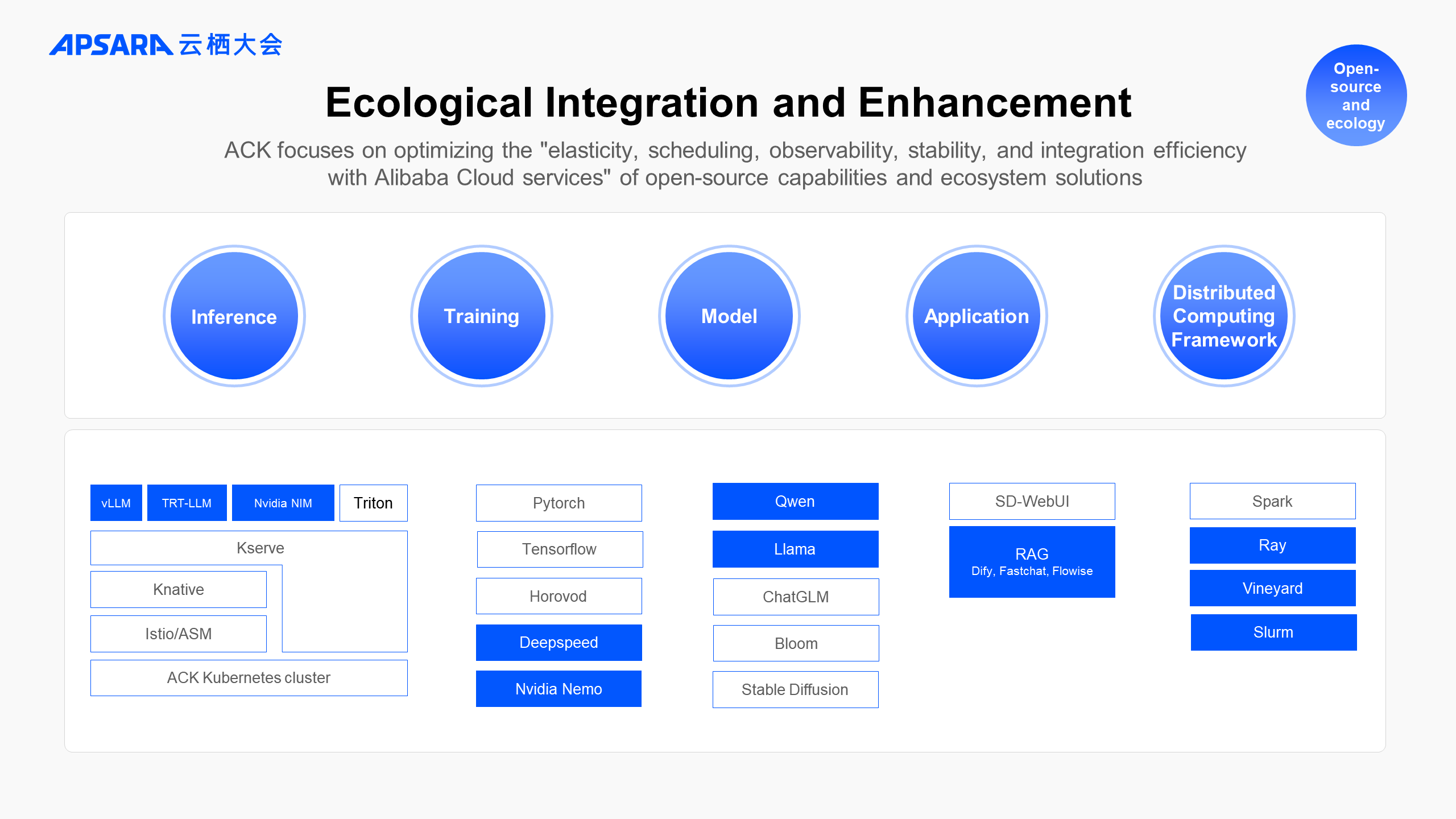

Open source and openness are the most important development paths and drivers for both the cloud-native and AI communities. Whether for training frameworks, inference engines, distributed computing software, open-source models, or new AI application architectures, ACK helps users integrate them into Kubernetes clusters quickly and efficiently through an open architecture and open-source standards. More importantly, ACK focuses on optimizing the elasticity, scheduling, observability, stability, and security of these open-source capabilities and ecosystem solutions, and integrates them with Alibaba Cloud services to make them more mature and easier to adopt.

We have built an open cloud-native AI reference architecture on the innovative capabilities and practical experience that ACK has accumulated in AI intelligent computing scenarios. Through the ACK cloud-native AI suite, we help customers continuously improve heterogeneous resource efficiency and AI engineering efficiency while reducing the cost of implementing and using cloud-native AI. With an open architecture and implementation, we give customers' AI businesses maximum flexibility, so that their cloud-native AI systems can evolve continuously.
