This article is based on Liu Jiaxu's speech at the Apsara Conference 2024.

As cloud-native technology matures and is adopted more deeply in enterprise IT, high-availability architecture in cloud-native scenarios becomes increasingly important for the availability, stability, and security of enterprise services. With sound architecture design and technical support from the cloud platform, a cloud-native high-availability architecture offers elastic scalability, simpler O&M, and better reliability and security, giving enterprises a more reliable and efficient application runtime environment.

As a core cloud-native technology, Kubernetes provides container orchestration and management capabilities, including infrastructure automation, elastic scalability, microservice architecture, and automated O&M. The high-availability architecture of Kubernetes applications is the cornerstone of cloud-native high availability. This article uses Alibaba Cloud Container Service for Kubernetes (ACK) as an example to describe best practices for the high-availability architecture and governance of applications that run on ACK.

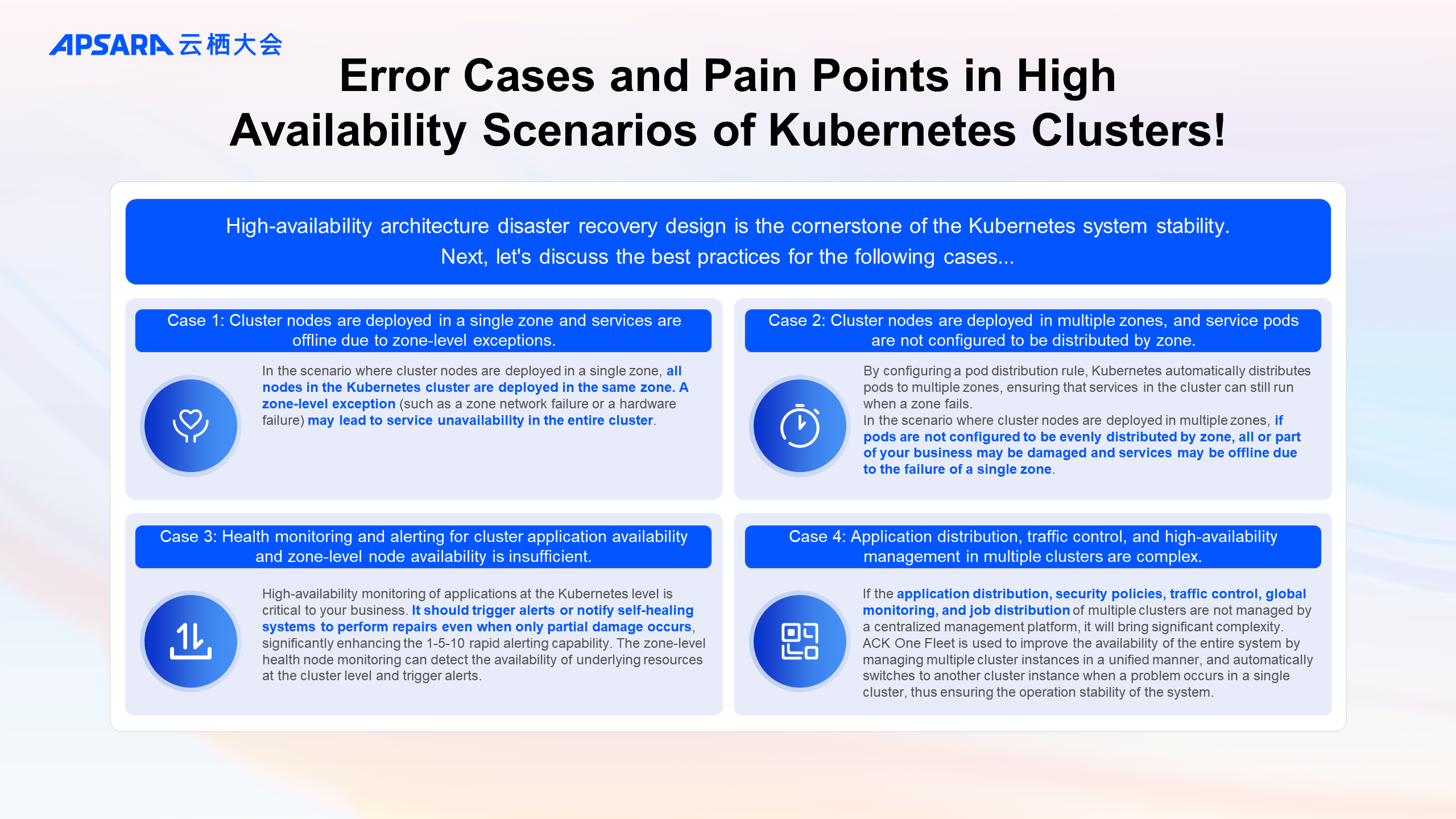

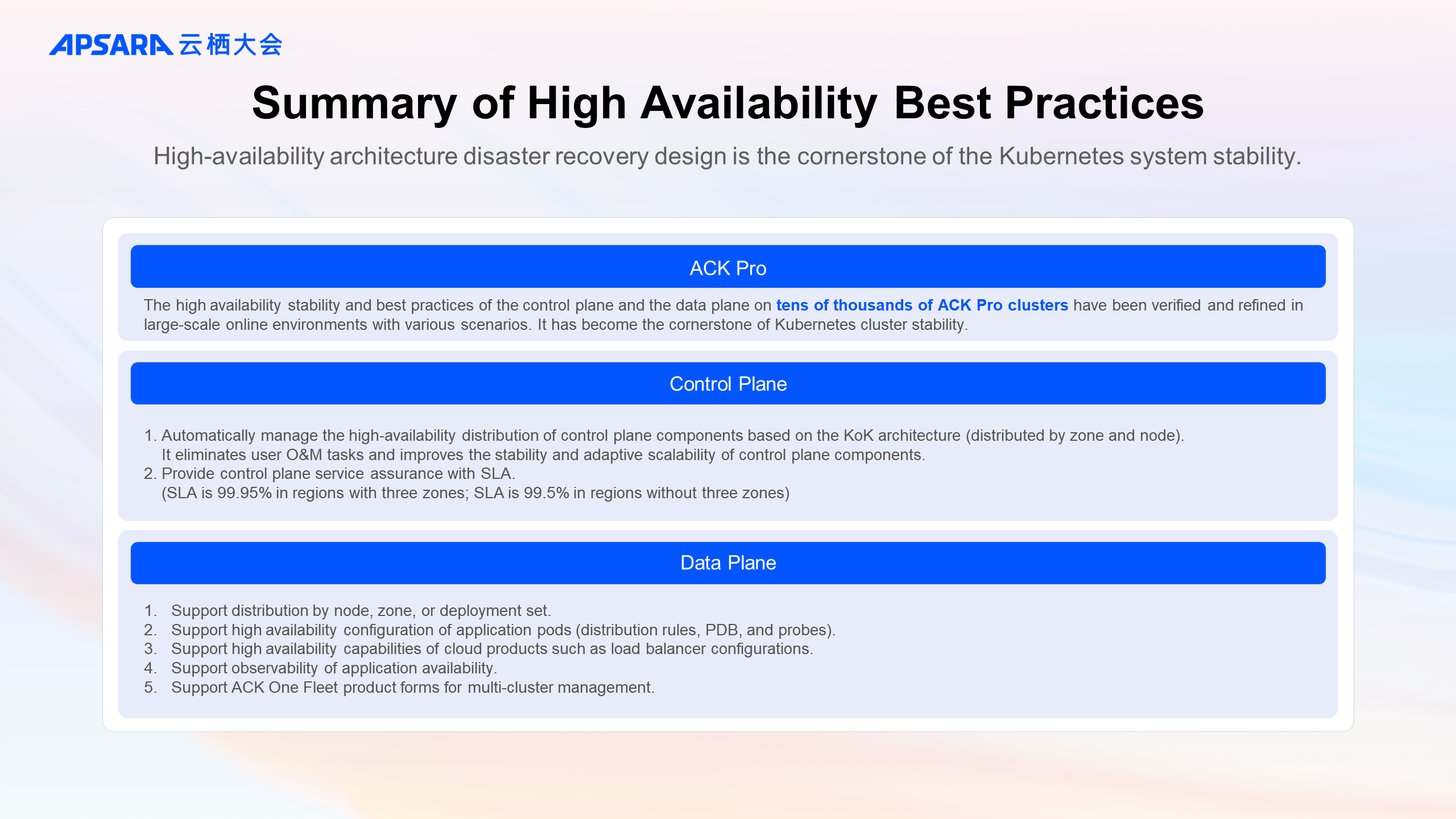

Disaster recovery design for high availability is the cornerstone of Kubernetes system stability and is critical in production environments.

Let's first take a look at the error cases and pain points of Kubernetes clusters in high availability scenarios and how ACK addresses these issues through architecture design, product capabilities, and best practices.

When all nodes of a Kubernetes cluster are deployed in a single zone, a zone-level exception, such as a network or hardware failure in that zone, can make every service in the cluster unavailable.

When nodes span multiple zones and pod distribution rules are configured, Kubernetes automatically spreads pods across those zones, so that services in the cluster keep running when one zone fails.

When cluster nodes are deployed in multiple zones but pods are not configured to be evenly distributed by zone, the failure of a single zone can still take part or all of your services offline.

High-availability monitoring of applications at the Kubernetes level is crucial to your business. Monitoring should trigger alerts or notify self-healing systems even when a service is only partially affected, which substantially improves rapid response toward the 1-5-10 target (detect an issue within 1 minute, locate it within 5 minutes, and recover within 10 minutes). Zone-level node health monitoring detects the availability of underlying resources at the cluster level and triggers alerts.

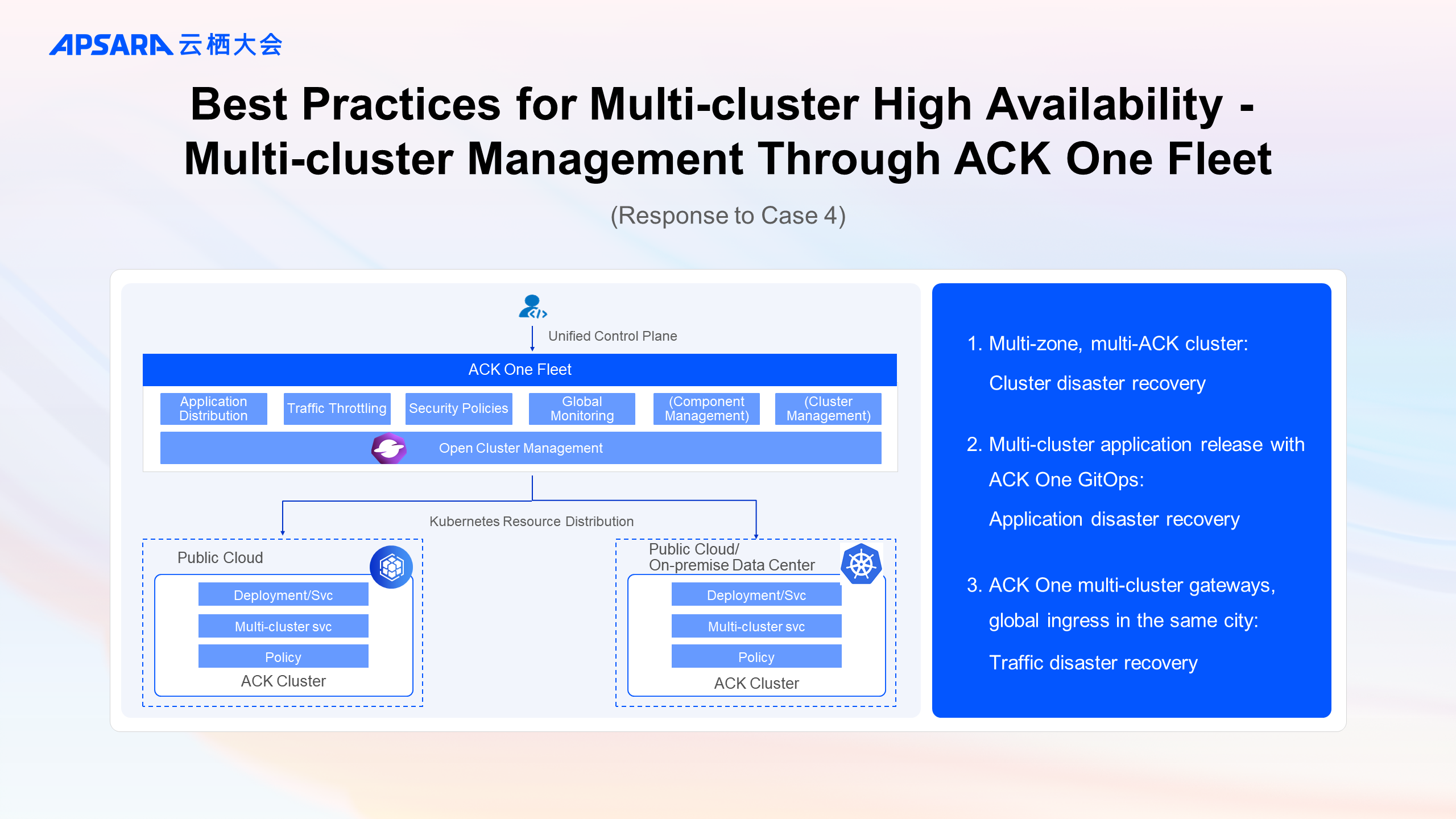

When multiple clusters are not managed by a centralized platform, application distribution, security policies, traffic control, global monitoring, and job distribution across them become significantly more complex. With ACK One Fleet, you can manage multiple cluster instances in a unified way and improve overall system availability. When a problem occurs in one cluster, the system can automatically switch to another cluster instance to keep running stably.

After summarizing common misconfigurations and pain points in Kubernetes scenarios, let's take a look at the single-cluster high-availability architecture of ACK and how to deal with high-availability stability risks.

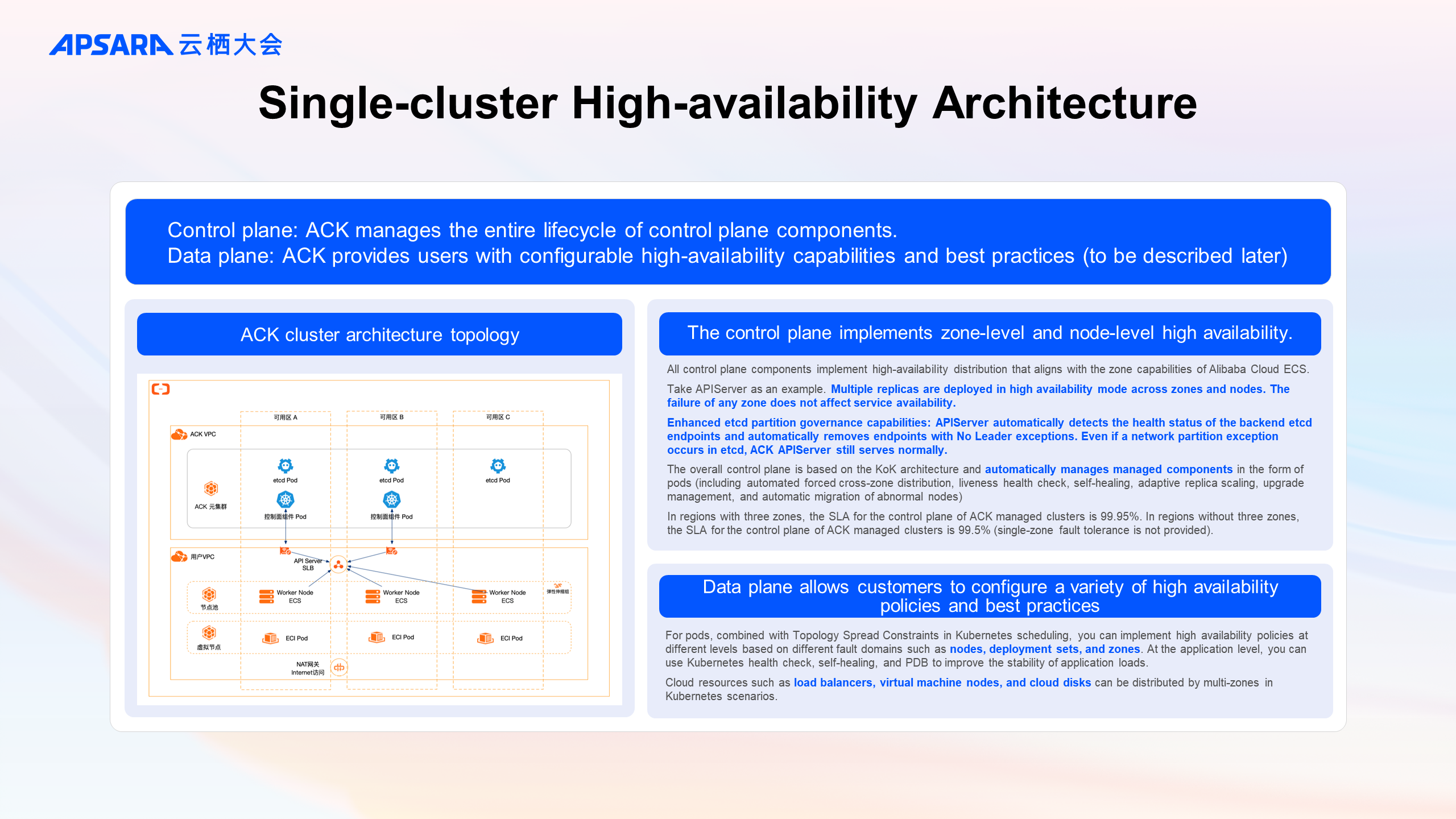

The left side of the picture shows the high-availability architecture of an ACK cluster. The upper part shows the resources in the ACK VPC, including the ACK meta cluster, an ACK dedicated cluster that hosts the control plane components and managed components of ACK managed clusters. The nodes of the ACK meta cluster, and the managed components that run on them as pods, are distributed across multiple zones for highly available disaster recovery. The lower part shows the resources in your VPC, including ECS, SLB, and ECI.

An ACK managed cluster consists of a control plane and a data plane. Control plane components run as pods in ACK meta clusters and are managed by using the Kubernetes-on-Kubernetes (KoK) architecture. ACK manages the entire lifecycle of control plane components. Data plane resources are deployed in your VPC, where ACK provides configurable high-availability capabilities and best practices.

All control plane components implement high-availability distribution that aligns with the zone capabilities of Alibaba Cloud ECS. In regions with three zones, the SLA for the control plane of ACK Pro-managed clusters is 99.95%. In regions without three zones, the SLA for the control plane of ACK Pro-managed clusters is 99.5% (single-zone fault tolerance is not provided).

Take APIServer as an example. Multiple replicas are deployed in high-availability mode across zones and nodes, so the failure of any single zone does not affect service availability. ACK also enhances etcd partition handling: APIServer automatically detects the health of its backend etcd endpoints and removes endpoints that report No Leader exceptions, so ACK APIServer keeps serving even if a network partition occurs in etcd. The overall control plane is based on the KoK architecture and automatically manages the managed components that run as pods, including enforced cross-zone distribution, liveness health checks, self-healing, adaptive replica scaling, upgrade management, and automatic migration away from abnormal nodes.

In the data plane, ACK combines native Kubernetes scheduling capabilities, such as topology spread constraints, with Alibaba Cloud service capabilities to place pods across different failure domains, including nodes, deployment sets, and zones, and thereby implement high-availability strategies at several levels. For application workloads, you can use Kubernetes health checks, self-healing, and pod disruption budgets (PDBs) to improve stability. Cloud resources such as load balancers, virtual machine nodes, and cloud disks support multi-zone high-availability configurations in Kubernetes scenarios and expose corresponding containerized configuration interfaces. The following section describes the best practices for data plane high availability.

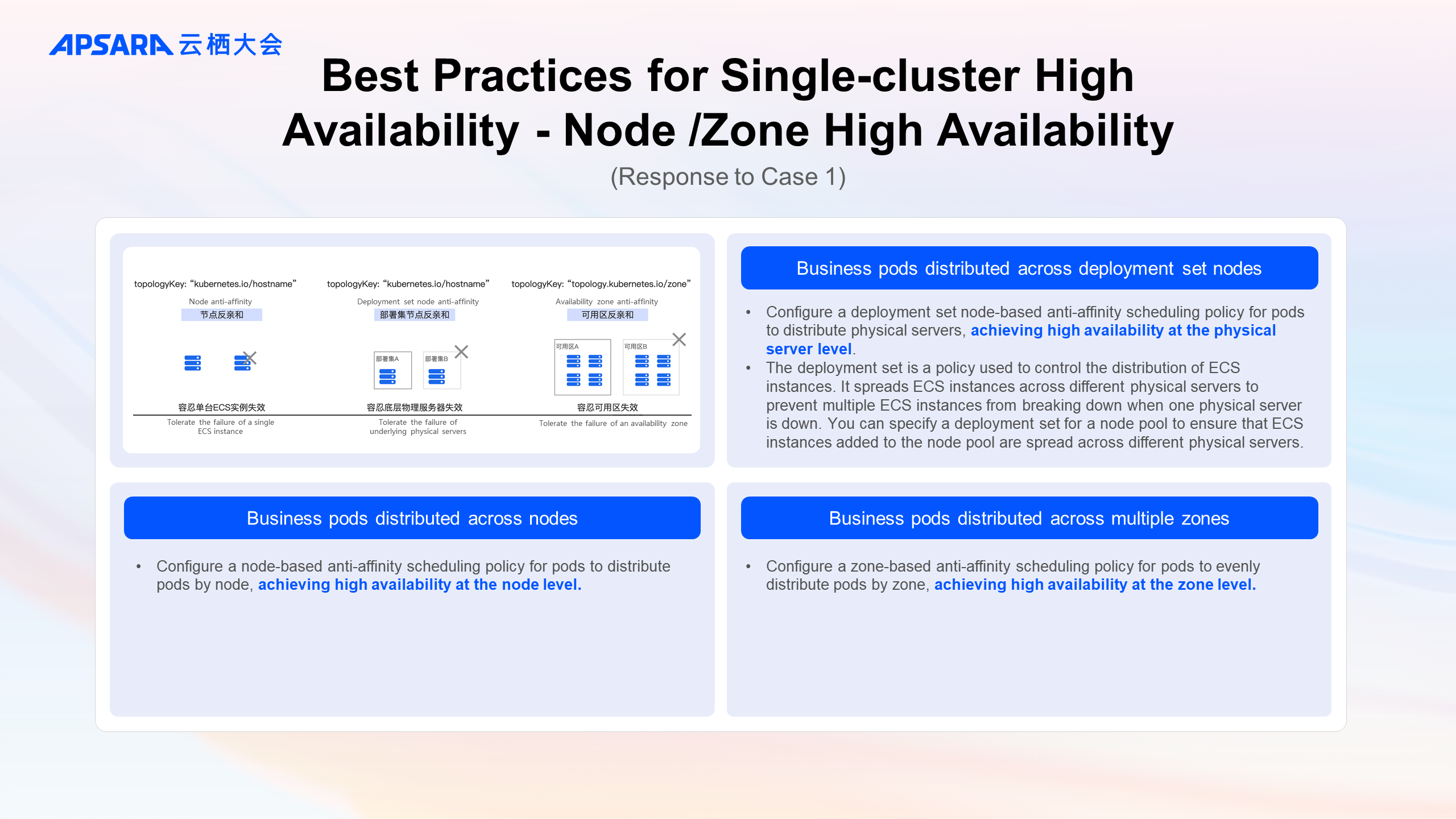

The upper-left part is a diagram that illustrates the distribution scheduling of pods across nodes, deployment sets, and availability zones, and their disaster recovery capabilities. Pods should be distributed by node and zone as much as possible. If needed, they can be more strictly distributed across nodes within deployment sets.

Configure a node-based anti-affinity scheduling policy for pods to distribute pods by node, achieving high availability at the node level.

Configure a deployment set-based anti-affinity scheduling policy for pods to distribute pods across nodes that run on different physical servers, achieving high availability at the physical server level.

The deployment set is a policy used to control the distribution of ECS instances. It spreads ECS instances across different physical servers to prevent multiple ECS instances from breaking down when one physical server is down. You can specify a deployment set for a node pool to ensure that ECS instances added to the node pool are spread across different physical servers.

Configure a zone-based anti-affinity scheduling policy for pods to evenly distribute pods by zone, achieving high availability at the zone level.

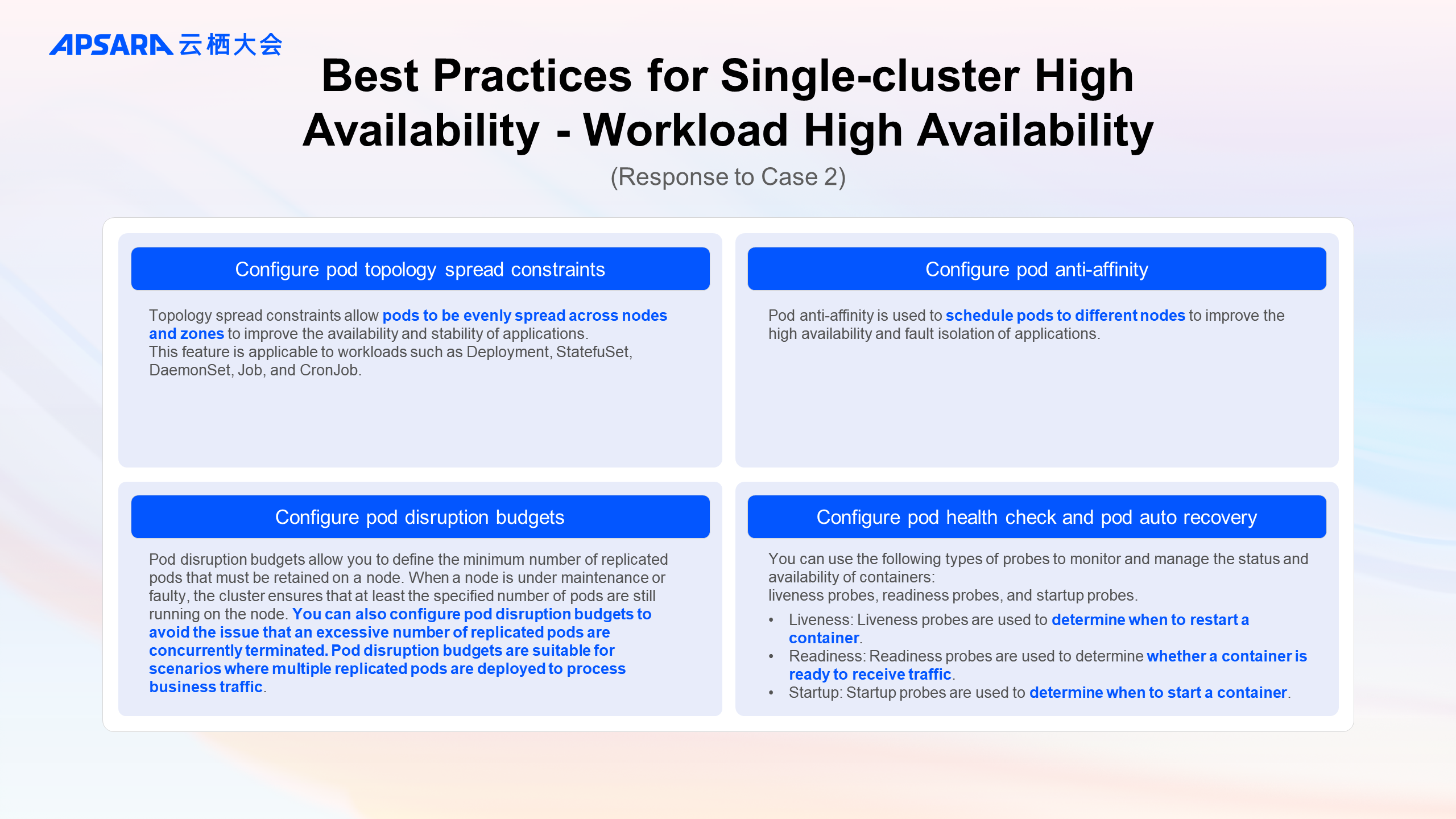

Based on the features of Kubernetes, you can refer to the following best practices to enhance the availability of application loads.

Topology spread constraints allow pods to be evenly spread across nodes and zones to improve the availability and stability of applications.

This feature is applicable to workloads such as Deployment, StatefulSet, DaemonSet, Job, and CronJob.
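As a minimal sketch of such a constraint, the Deployment below spreads its replicas strictly across zones and, on a best-effort basis, across nodes. The workload name `web`, the label `app: web`, the replica count, and the image are placeholders chosen for illustration and do not come from ACK.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web                      # hypothetical workload name
spec:
  replicas: 6
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      topologySpreadConstraints:
        # Zone level: the pod count per zone may differ by at most 1;
        # a pod that would violate this stays Pending.
        - maxSkew: 1
          topologyKey: topology.kubernetes.io/zone
          whenUnsatisfiable: DoNotSchedule
          labelSelector:
            matchLabels:
              app: web
        # Node level: prefer an even spread, but still schedule if it is not possible.
        - maxSkew: 1
          topologyKey: kubernetes.io/hostname
          whenUnsatisfiable: ScheduleAnyway
          labelSelector:
            matchLabels:
              app: web
      containers:
        - name: web
          image: nginx:1.25      # placeholder image
```

`DoNotSchedule` trades schedulability for a strict spread, so it suits the zone level when every zone has spare capacity. `ScheduleAnyway` keeps the workload running when nodes are scarce.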

Pod anti-affinity is used to schedule pods to different nodes to improve the high availability and fault isolation of applications.
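The fragment below is one way to express this, assuming the same hypothetical `app: web` label. It belongs under `spec.template.spec` of a workload. The required rule forbids two replicas on the same node, and the preferred rule additionally nudges replicas into different zones.

```yaml
# Pod template fragment; place under spec.template.spec of a workload.
affinity:
  podAntiAffinity:
    # Hard rule: never place two pods labeled app=web on the same node.
    requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchLabels:
            app: web             # hypothetical label
        topologyKey: kubernetes.io/hostname
    # Soft rule: prefer placing replicas in different zones.
    preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100
        podAffinityTerm:
          labelSelector:
            matchLabels:
              app: web
          topologyKey: topology.kubernetes.io/zone
```

With the hard rule, the replica count cannot exceed the number of schedulable nodes, so extra replicas stay Pending until nodes are added.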

Pod disruption budgets (PDBs) allow you to define the minimum number of replicated pods of an application that must remain available during voluntary disruptions. When a node is drained for maintenance or an upgrade, evictions that would drop the application below that number are refused, which prevents an excessive number of replicated pods from being terminated at the same time. Pod disruption budgets are suitable for scenarios where multiple replicated pods are deployed to process business traffic.
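A minimal sketch of a pod disruption budget follows, assuming a workload with six replicas labeled `app: web`. The names and numbers are illustrative only.

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: web-pdb                  # hypothetical name
spec:
  # Evictions (for example, from a node drain) are refused if they would
  # leave fewer than 4 matching pods available.
  minAvailable: 4
  selector:
    matchLabels:
      app: web
```

Instead of `minAvailable`, you can set `maxUnavailable`, as a number or a percentage. Only one of the two fields may be specified in a single budget.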

You can use the following probes to monitor and manage the status and availability of containers, including liveness probes, readiness probes, and startup probes.

Liveness: Liveness probes are used to determine when to restart a container.

Readiness: Readiness probes are used to determine whether a container is ready to receive traffic.

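Startup: Startup probes are used to determine whether the application in a container has started. Liveness and readiness probes do not run until the startup probe succeeds.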
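The container fragment below sketches how the three probes can be combined. The image, the port, and the `/healthz` and `/ready` paths are assumptions about a hypothetical application, so adjust them to the endpoints your service actually exposes.

```yaml
# Container fragment; place under spec.template.spec.containers of a workload.
- name: web
  image: registry.example.com/web:1.0   # hypothetical image
  ports:
    - containerPort: 8080
  # Gives a slow-starting application up to 30 x 5s = 150s to come up.
  # Liveness and readiness probes are held back until this succeeds.
  startupProbe:
    httpGet:
      path: /healthz
      port: 8080
    periodSeconds: 5
    failureThreshold: 30
  # Restarts the container after 3 consecutive failures.
  livenessProbe:
    httpGet:
      path: /healthz
      port: 8080
    periodSeconds: 10
    failureThreshold: 3
  # Removes the pod from Service endpoints while it is not ready.
  readinessProbe:
    httpGet:
      path: /ready
      port: 8080
    periodSeconds: 5
    failureThreshold: 3
```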

High Availability Configuration of Container Registry Enterprise Edition

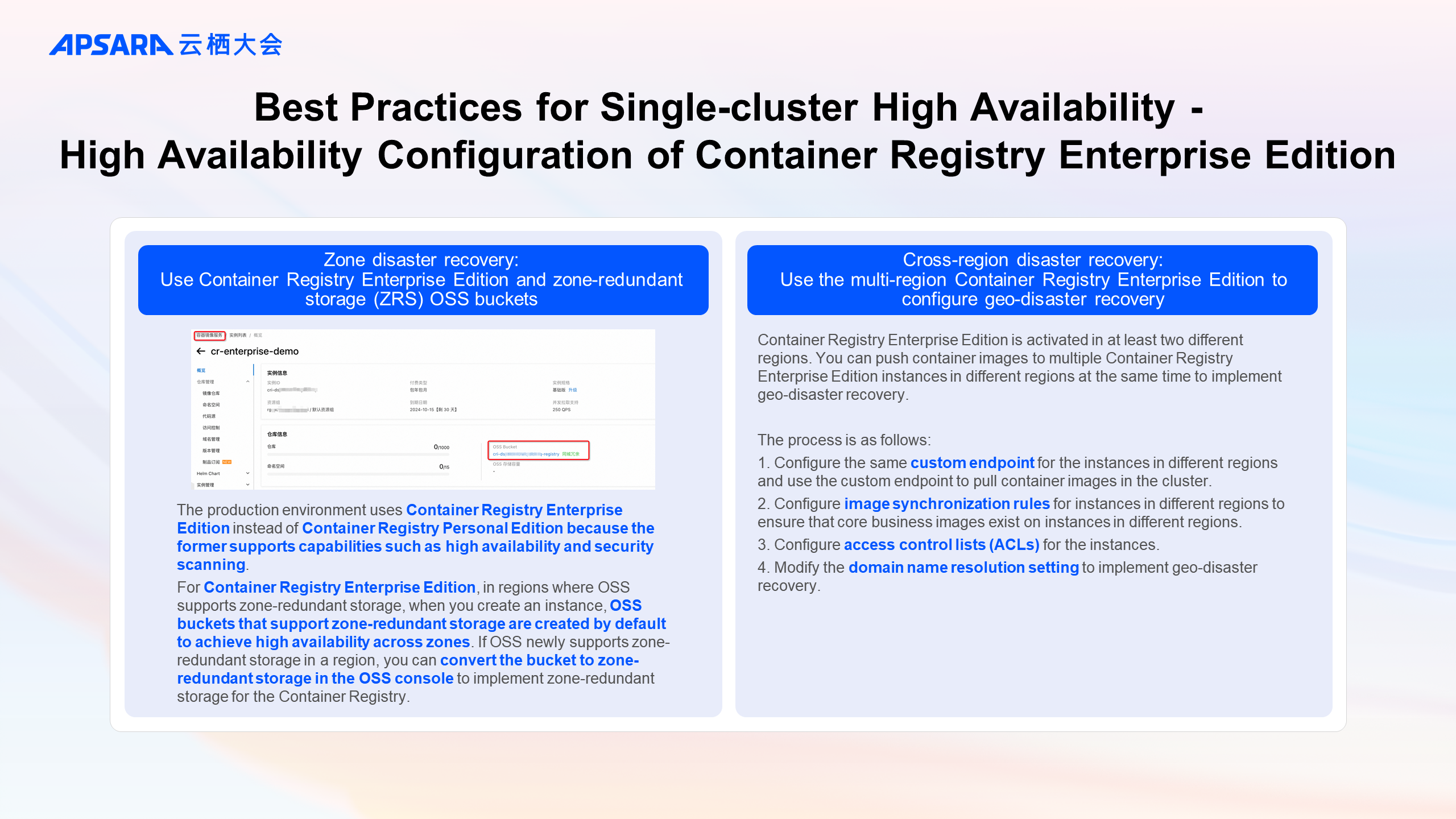

The high availability configuration of Container Registry Enterprise Edition includes two best practices: zone disaster recovery and cross-region disaster recovery.

In production environments, use Container Registry Enterprise Edition instead of Container Registry Personal Edition, because the Enterprise Edition supports capabilities such as high availability and security scanning.

For Container Registry Enterprise Edition, in regions where OSS supports zone-redundant storage, when you create an instance, OSS buckets that support zone-redundant storage are created by default to achieve high availability across zones. If OSS newly supports zone-redundant storage in a region, you can convert the bucket to zone-redundant storage in the OSS console to implement zone-redundant storage for the Container Registry.

For cross-region disaster recovery, activate Container Registry Enterprise Edition in at least two different regions. You can then push container images to the Container Registry Enterprise Edition instances in those regions at the same time to implement geo-disaster recovery.

The process is as follows:

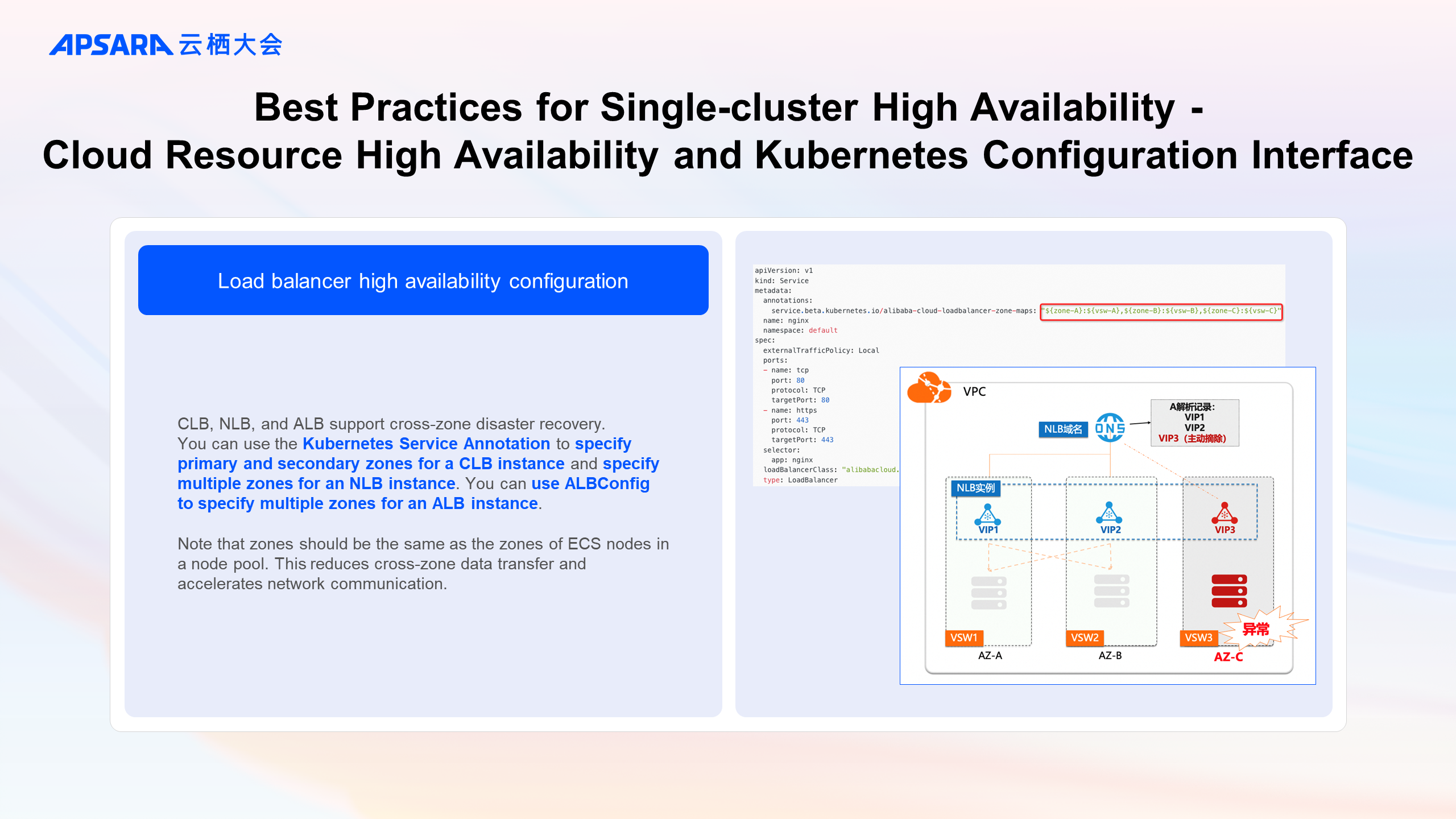

The high availability capabilities of Alibaba Cloud products provide a Kubernetes configuration interface so that users can flexibly configure high availability capabilities as needed.

Take the high-availability configuration of load balancers as an example of how cloud product capabilities are exposed through container interfaces. Load balancer products, including CLB, NLB, and ALB, support cross-zone disaster recovery. You can use Kubernetes Service annotations to specify primary and secondary zones for a CLB instance and to specify multiple zones for an NLB instance, and you can use ALBConfig to specify multiple zones for an ALB instance. Note that these zones should match the zones of the ECS nodes in the node pool, which reduces cross-zone data transfer and improves network access performance. You can search for the containerized configuration methods of cloud products on the Alibaba Cloud official website.
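For the CLB case, a Service along the following lines is a rough sketch. The two annotation keys are written as they are commonly documented for the Alibaba Cloud cloud controller manager, but treat them as an assumption and verify them against the current ACK documentation. The zone IDs, Service name, and ports are placeholders.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web                      # hypothetical name
  annotations:
    # Assumed annotation keys for the CLB primary and secondary zones;
    # confirm them in the ACK documentation before use.
    service.beta.kubernetes.io/alibaba-cloud-loadbalancer-master-zoneid: "cn-hangzhou-h"
    service.beta.kubernetes.io/alibaba-cloud-loadbalancer-slave-zoneid: "cn-hangzhou-i"
spec:
  type: LoadBalancer
  selector:
    app: web
  ports:
    - port: 80
      targetPort: 8080
```

Choose zone IDs that match the zones of the nodes that run the backend pods, in line with the note above about cross-zone traffic.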

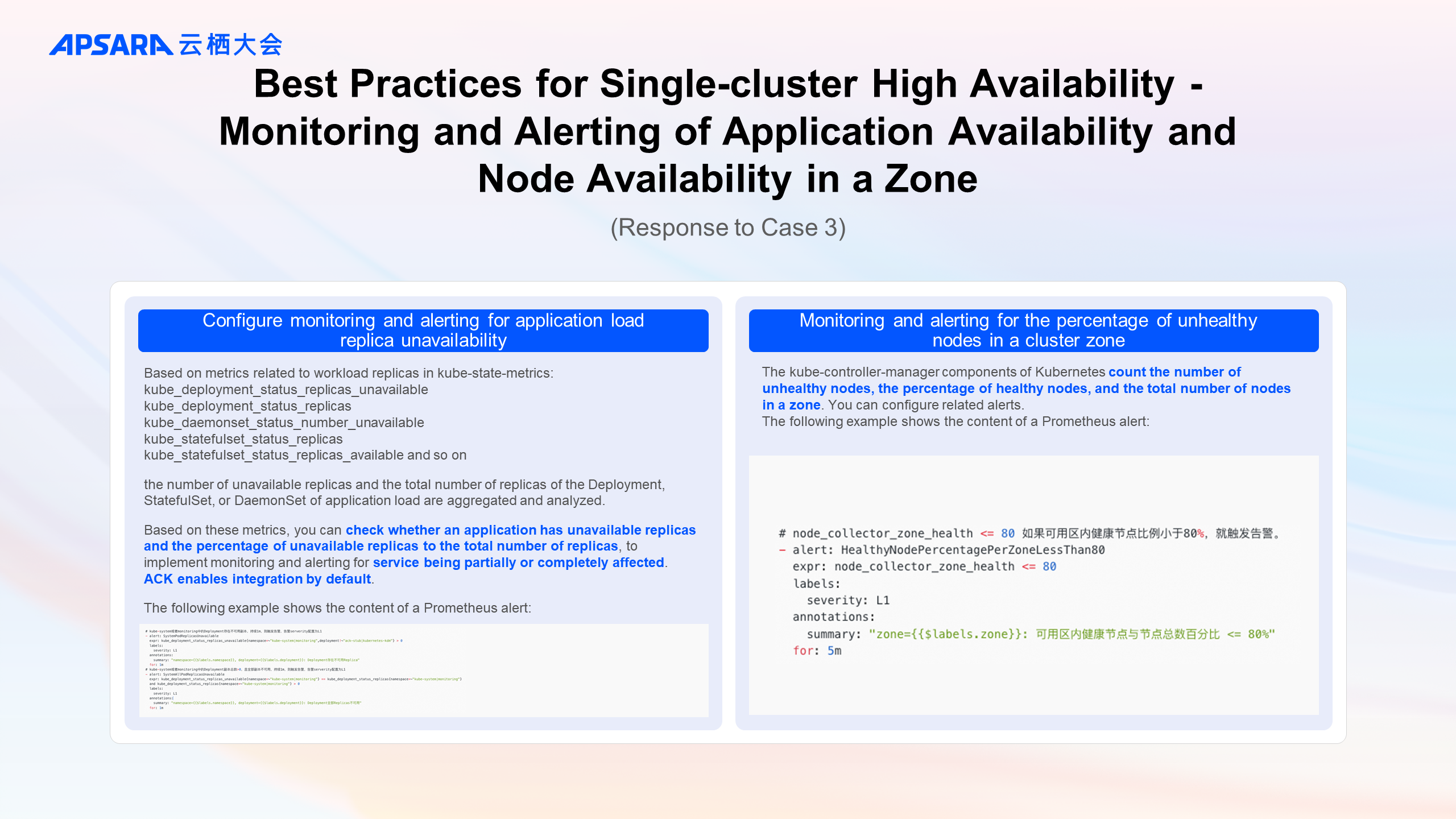

The number of unavailable replicas and the total number of replicas of each Deployment, StatefulSet, or DaemonSet are aggregated and analyzed based on the workload replica metrics in kube-state-metrics, including:

kube_deployment_status_replicas_unavailable

kube_deployment_status_replicas

kube_daemonset_status_number_unavailable

kube_statefulset_status_replicas

kube_statefulset_status_replicas_available

Based on these metrics, you can check whether an application has unavailable replicas and what percentage of its total replicas is unavailable, and then alert when a service is partially or completely affected. ACK integrates this monitoring by default.

The following example shows the content of a Prometheus alert:

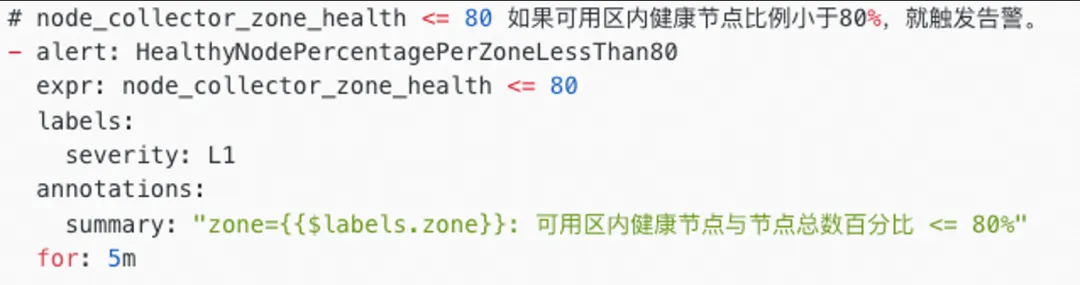
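In text form, a rule of this kind might look like the following sketch. It assumes the kube-state-metrics series listed above are scraped into Prometheus. The group name, alert names, the 30% threshold, and the durations are illustrative choices, not the rules that ACK ships.

```yaml
groups:
  - name: workload-availability            # hypothetical group name
    rules:
      # Partial impact: more than 30% of a Deployment's replicas are unavailable.
      - alert: DeploymentPartiallyUnavailable
        expr: |
          kube_deployment_status_replicas_unavailable
            / kube_deployment_status_replicas > 0.3
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "{{ $labels.namespace }}/{{ $labels.deployment }}: {{ $value | humanizePercentage }} of replicas unavailable"
      # Complete impact: every replica of the Deployment is unavailable.
      - alert: DeploymentFullyUnavailable
        expr: |
          kube_deployment_status_replicas_unavailable
            / kube_deployment_status_replicas >= 1
        for: 2m
        labels:
          severity: critical
        annotations:
          summary: "{{ $labels.namespace }}/{{ $labels.deployment }}: all replicas unavailable"
```

The same ratio pattern can be applied to StatefulSets with `1 - kube_statefulset_status_replicas_available / kube_statefulset_status_replicas`.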

The kube-controller-manager component of Kubernetes reports the number of unhealthy nodes, the percentage of healthy nodes, and the total number of nodes in each zone. You can configure alerts based on these metrics.

The following example shows the content of a Prometheus alert:
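A minimal sketch of such a rule is shown below. It assumes the upstream kube-controller-manager metrics `node_collector_zone_health` (percentage of healthy nodes per zone, 0 to 100) and `node_collector_unhealthy_nodes_in_zone` are scraped into Prometheus under these names. The thresholds, durations, and alert names are illustrative and are not ACK defaults.

```yaml
groups:
  - name: zone-node-health                 # hypothetical group name
    rules:
      # Fewer than 80% of the nodes in a zone are healthy.
      - alert: ZoneNodeHealthDegraded
        expr: node_collector_zone_health < 80
        for: 5m
        labels:
          severity: critical
        annotations:
          summary: "Zone {{ $labels.zone }}: only {{ $value }}% of nodes are healthy"
      # At least one node in a zone has been unhealthy for 10 minutes.
      - alert: ZoneHasUnhealthyNodes
        expr: node_collector_unhealthy_nodes_in_zone > 0
        for: 10m
        labels:
          severity: warning
        annotations:
          summary: "Zone {{ $labels.zone }}: {{ $value }} unhealthy node(s)"
```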

With the wide adoption of Kubernetes clusters, enterprises may need to run and manage multiple Kubernetes clusters. This brings challenges such as how to manage multiple clusters, how to use a unified external ingress to access the clusters, and how to schedule resources for the clusters. The Fleet instances of Distributed Cloud Container Platform for Kubernetes (ACK One) are managed by Container Service for Kubernetes (ACK). You can use the Fleet instances to manage Kubernetes clusters that are deployed in different environments in a centralized manner. Fleet instances create a consistent experience in cloud-native application management for enterprises.

The unified control plane of ACK One Fleet provides application distribution, traffic control, security policies, global monitoring, component management, and cluster management across multiple clusters, so that public cloud and IDC clusters can be managed efficiently.

Cluster disaster recovery is achieved through multiple zones and multiple clusters. Application disaster recovery is achieved through ACK One GitOps multi-cluster application deployment. Traffic disaster recovery is achieved through ACK One multi-cluster gateway and global ingress in the same city.
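For the application layer, the sketch below assumes that ACK One GitOps is backed by Argo CD and that the member clusters are registered with it. It uses an Argo CD ApplicationSet to deploy the same manifests to every registered cluster. The repository URL, path, and names are hypothetical.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: web                      # hypothetical name
  namespace: argocd
spec:
  generators:
    # Generates one Application per cluster known to Argo CD.
    - clusters: {}
  template:
    metadata:
      name: '{{name}}-web'
    spec:
      project: default
      source:
        repoURL: https://example.com/org/web-manifests.git   # hypothetical repository
        targetRevision: main
        path: deploy
      destination:
        server: '{{server}}'
        namespace: web
      syncPolicy:
        automated:
          prune: true
          selfHeal: true
        syncOptions:
          - CreateNamespace=true
```

Because every cluster receives the same revision from Git, a standby cluster already runs the application when traffic is switched to it.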

High-availability architecture design and best practices in cloud-native scenarios are critical to the availability, stability, and security of enterprise services. They can effectively improve application availability and user experience, and provide fault isolation and fault tolerance capabilities.

This article shares the high-availability and stability architecture of ACK and its best practices. These practices draw on the experience of operating the control plane and data plane of tens of thousands of ACK Pro clusters, and they have been validated and refined in a large-scale production environment with diverse scenarios. We hope they serve as a useful reference for enterprises with similar requirements. ACK's high-availability architecture, product capabilities, and best practices are now the cornerstone of ACK cluster stability. ACK will continue to provide customers with cloud-native products and services that are continuously optimized for security, stability, performance, and cost.
