Large language models (LLMs) have developed rapidly in recent years and opened up new use cases across many industries. As open-source models keep improving, more enterprises are deploying them in production and connecting them to existing infrastructure to optimize latency and throughput and to strengthen monitoring and security. However, deploying a model inference service in production is complex and time-consuming. To simplify the process and help enterprises accelerate the deployment of generative AI models, this article combines NVIDIA NIM (a set of easy-to-use, pre-built containerized microservices designed for secure, reliable deployment of high-performance AI model inference) with Alibaba Cloud products such as Container Service for Kubernetes (ACK). The step-by-step guide in this article shows how to quickly build a high-performance, observable, and elastic LLM inference service.

ACK is one of the first services to participate in the Certified Kubernetes Conformance Program in the world. ACK provides high-performance containerized application management services and supports lifecycle management for enterprise-class containerized applications. By using ACK, you can run and manage containerized applications on the cloud with ease and efficiency.

The cloud-native AI suite is a cloud-native AI technology and product solution provided by Alibaba Cloud Container Service for Kubernetes. It helps you make full use of cloud-native architectures and technologies to quickly build an AI-assisted production system in ACK, and it provides full-stack optimization for AI and machine learning applications and systems. The suite includes the command-line tool Arena, an open-source project in the Kubeflow community, which simplifies the core production tasks of deep learning (data management, model training, model evaluation, and inference service deployment) and hides much of the complexity of Kubernetes concepts. With Arena, you can quickly submit distributed training tasks and manage their lifecycle. The suite also provides scheduling policies optimized for distributed scenarios. For example, the binpack algorithm allocates GPUs so as to improve GPU utilization. Custom task priority management and elastic resource quotas for tenants are also supported, so that resource sharing improves overall cluster utilization while each user's resource allocation remains guaranteed.

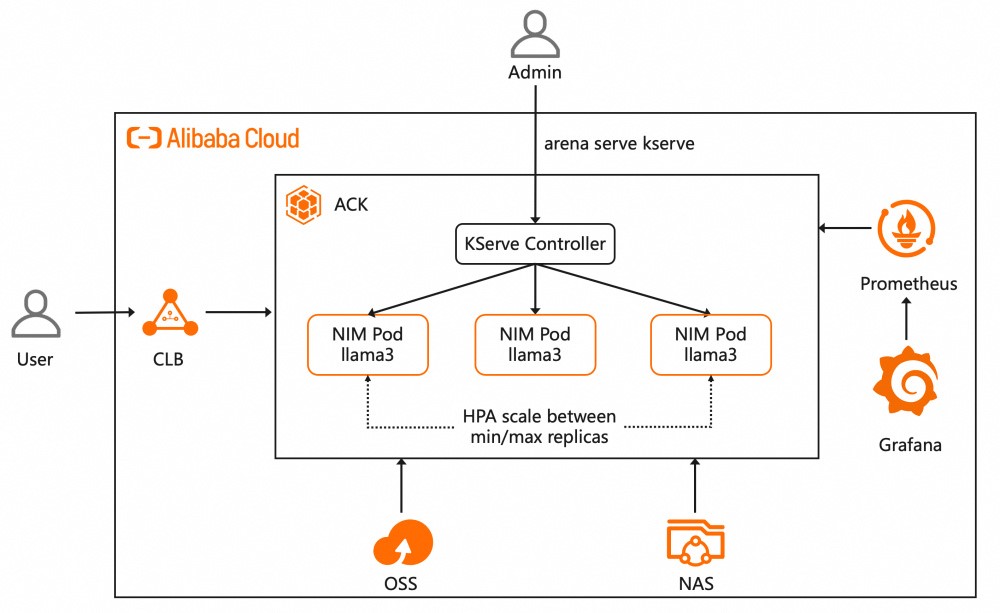

This article describes how to use the cloud-native AI suite to integrate the open-source inference service framework KServe and quickly deploy NVIDIA NIM in an ACK cluster. You can use Managed Service for Prometheus and Managed Service for Grafana to build a monitoring dashboard that shows the inference service status in real time. You can also use the monitoring metrics provided by NVIDIA NIM, such as num_requests_waiting, to configure elastic scaling policies for the inference service. When a traffic burst causes requests to queue at the inference service, new instances are automatically scaled out to handle the peak traffic. The overall solution architecture is as follows:

In this article, we will introduce the steps to deploy NVIDIA NIM in an ACK cluster. The steps are as follows:

Create an ACK cluster with GPU nodes and deploy the cloud-native AI suite. To use KServe to manage the inference service in the cluster, you must also install ack-kserve. After the cluster environment is ready, perform the following steps to deploy the service.

Note: You shall abide by the user agreements, usage specifications, and relevant laws and regulations of the third-party models. You agree that your use of the third-party models is at your sole risk.

1. Refer to the NVIDIA NIM documentation to generate an NVIDIA NGC API key and request access to the model image that you want to deploy, such as the Llama3-8b-instruct image used in this article. Please read and comply with the Custom commercial open-source agreement of the Llama Model.

2. Create an imagePullSecret to pull NIM images from the NGC private repository.

export NGC_API_KEY=<your-ngc-api-key>

kubectl create secret docker-registry ngc-secret \
  --docker-server=nvcr.io \
  --docker-username='$oauthtoken' \
  --docker-password="${NGC_API_KEY}"

3. Create a secret named nvidia-nim-secrets so that the container can access the NGC private repository. For more information, please refer to the nim-deploy deployment documentation.

kubectl apply -f - <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: nvidia-nim-secrets
stringData:
  NGC_API_KEY: <your-ngc-api-key>
EOF

4. Configure PVs and PVCs in the target cluster. The model files will later be downloaded to this shared storage. For more information, please refer to Use NAS volumes.

The following table describes the parameters of the PV.

| Parameter | Description |
|---|---|
| PV type | NAS |
| Name | nim-model |
| Access mode | ReadWriteMany |
| Domain name of the mount target | You can select a mount target or enter a custom mount target. For more information about how to view the domain name of the mount target, please refer to View the domain name of the mount target. |
| Mount path | Specify the path where the model is located, such as /nim-model. |

The following table describes the parameters of the PVC.

| Parameter | Description |
|---|---|
| PVC type | NAS |
| Name | nim-model |
| Allocation mode | Set allocation mode to existing volumes. |
| Existing volumes | Click the existing volumes hyperlink and select the PV that you created. |

5. Run the following command to deploy a KServe inference service. The command uses the image provided by NVIDIA NIM, requests one NVIDIA GPU, and mounts the PVC to the /mnt/models directory in the container to store the model files. It also sets autoscalerClass=external so that a custom HPA policy can be used, and enables Prometheus to collect the inference service metrics for the Grafana monitoring dashboard that is built later. After the container starts, the Llama3-8b-instruct model serves inference requests.

arena serve kserve \
  --name=llama3-8b-instruct \
  --image=nvcr.io/nim/meta/llama3-8b-instruct:1.0.0 \
  --image-pull-secret=ngc-secret \
  --gpus=1 \
  --cpu=8 \
  --memory=32Gi \
  --share-memory=32Gi \
  --port=8000 \
  --security-context runAsUser=0 \
  --annotation=serving.kserve.io/autoscalerClass=external \
  --env NIM_CACHE_PATH=/mnt/models \
  --env-from-secret NGC_API_KEY=nvidia-nim-secrets \
  --enable-prometheus=true \
  --metrics-port=8000 \
  --data=nim-model:/mnt/models

Expected output:

INFO[0004] The Job llama3-8b-instruct has been submitted successfully
INFO[0004] You can run `arena serve get llama3-8b-instruct --type kserve -n default` to check the job status

The preceding output indicates that the inference service is deployed.

6. After the inference service is deployed, you can run the following command to view the deployment of the KServe inference service:

arena serve get llama3-8b-instruct

Expected output:

Name:       llama3-8b-instruct
Namespace:  default
Type:       KServe
Version:    1
Desired:    1
Available:  1
Age:        24m
Address:    http://llama3-8b-instruct-default.example.com
Port:       :80
GPU:        1

Instances:
  NAME                                           STATUS   AGE  READY  RESTARTS  GPU  NODE
  ----                                           ------   ---  -----  --------  ---  ----
  llama3-8b-instruct-predictor-545445b4bc-97qc5  Running  24m  1/1    0         1    ap-southeast-1.172.16.xx.xxx

The preceding output indicates that the KServe inference service is deployed and the model access address is:

http://llama3-8b-instruct-default.example.com

7. You can access the inference service through the obtained IP address of the NGINX Ingress.

# Obtain the IP address of the NGINX Ingress.
NGINX_INGRESS_IP=$(kubectl -n kube-system get svc nginx-ingress-lb -ojsonpath='{.status.loadBalancer.ingress[0].ip}')

# Obtain the hostname of the inference service.
SERVICE_HOSTNAME=$(kubectl get inferenceservice llama3-8b-instruct -o jsonpath='{.status.url}' | cut -d "/" -f 3)

# Send a request to access the inference service.
curl -H "Host: $SERVICE_HOSTNAME" -H "Content-Type: application/json" \
  http://$NGINX_INGRESS_IP:80/v1/chat/completions \
  -d '{"model": "meta/llama3-8b-instruct", "messages": [{"role": "user", "content": "Once upon a time"}], "max_tokens": 64, "temperature": 0.7, "top_p": 0.9, "seed": 10}'

Expected output:

{"id":"cmpl-70af7fa8c5ba4fe7b903835e326325ce","object":"chat.completion","created":1721557865,"model":"meta/llama3-8b-instruct","choices":[{"index":0,"message":{"role":"assistant","content":"It sounds like you're about to tell a story! I'd love to hear it. Please go ahead and continue with \"Once upon a time...\""},"logprobs":null,"finish_reason":"stop","stop_reason":128009}],"usage":{"prompt_tokens":14,"total_tokens":45,"completion_tokens":31}}

The preceding output indicates that the inference service can be accessed through the IP address of the NGINX Ingress and that the inference result is returned.
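The same request can also be sent from a script. The following is a minimal Python sketch that uses only the standard library and assumes the request and response shapes shown in the curl example. The function names build_chat_request and extract_reply are hypothetical helpers introduced for illustration, not part of NVIDIA NIM or KServe.

```python
import json
import urllib.request


def build_chat_request(ingress_ip, service_hostname, prompt, max_tokens=64):
    """Build the same POST request that the curl command sends (hypothetical helper)."""
    payload = {
        "model": "meta/llama3-8b-instruct",
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"http://{ingress_ip}:80/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        # The Host header routes the request to the inference service behind the Ingress.
        headers={"Host": service_hostname, "Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body):
    """Return the assistant text from a chat.completion response body (str or bytes)."""
    return json.loads(response_body)["choices"][0]["message"]["content"]
```

To send the request, pass the values of $NGINX_INGRESS_IP and $SERVICE_HOSTNAME to build_chat_request, open the result with urllib.request.urlopen, and pass the bytes returned by read() to extract_reply.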

NVIDIA NIM provides a variety of Prometheus monitoring metrics, such as the first token latency, the number of currently running requests, the number of tokens requested, and the number of tokens generated. You can use Managed Service for Prometheus and Managed Service for Grafana to quickly build a monitoring dashboard in Grafana to observe the inference service status in real time.
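To illustrate what such metrics look like before they reach Prometheus, the following Python sketch reads a gauge from text in the Prometheus exposition format. The sample text, its label names, and the num_requests_running metric name are assumptions made up for illustration and are not captured from a real NIM container. Only num_requests_waiting is named in this article. The read_metric helper is hypothetical and handles only simple lines without timestamps.

```python
# Illustrative sample only: labels and values are assumptions, not real NIM output.
SAMPLE_METRICS = """\
# HELP num_requests_waiting Number of requests waiting to be processed.
# TYPE num_requests_waiting gauge
num_requests_waiting{model_name="meta/llama3-8b-instruct"} 12.0
# TYPE num_requests_running gauge
num_requests_running{model_name="meta/llama3-8b-instruct"} 4.0
"""


def read_metric(metrics_text, metric_name):
    """Sum all samples of metric_name; return None if the metric is absent."""
    total, found = 0.0, False
    for raw_line in metrics_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        # Assumes "name{labels} value" with no trailing timestamp.
        series, _, value = line.rpartition(" ")
        if series.split("{", 1)[0] == metric_name:
            total += float(value)
            found = True
    return total if found else None


waiting = read_metric(SAMPLE_METRICS, "num_requests_waiting")
```

In a real setup, Managed Service for Prometheus scrapes the metrics port (8000 in the arena command) directly, so a parser like this is only useful for quick manual checks.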

1. Enable Managed Service for Prometheus for the ACK cluster. For more information, please refer to Enable Managed Service for Prometheus.

2. Create a Grafana workspace and log on to the Dashboards page of Grafana.

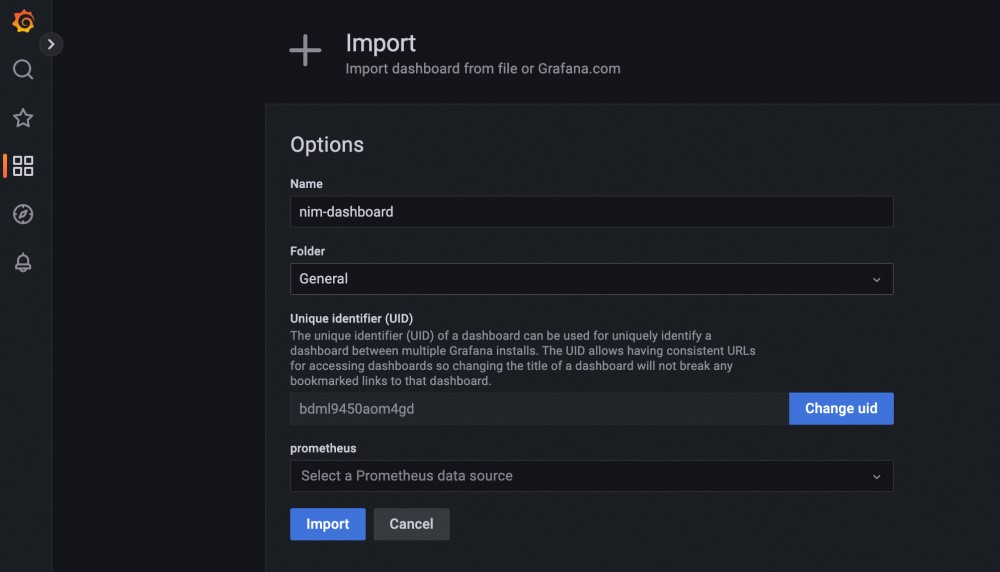

3. Import the dashboard example provided by NVIDIA NIM.

4. After the import is complete, the dashboard looks as follows:

A model that is deployed as an inference service by using KServe must handle loads that fluctuate dynamically. KServe integrates the Kubernetes-native Horizontal Pod Autoscaler (HPA) and a scaling controller to automatically scale the pods of a model based on CPU utilization, memory usage, GPU utilization, and custom performance metrics. This ensures the performance and stability of the service.

Configure an auto-scaling policy for custom metrics based on the number of queued requests

Custom metric-based auto-scaling is implemented based on the ack-alibaba-cloud-metrics-adapter component and the Kubernetes HPA mechanism provided by ACK. For more information, please refer to Horizontal pod scaling based on Managed Service for Prometheus metrics.

The following example demonstrates how to configure a scaling policy based on the num_requests_waiting metric provided by NVIDIA NIM.

1. Deploy Managed Service for Prometheus and ack-alibaba-cloud-metrics-adapter. For more information, please refer to Horizontal pod scaling based on Managed Service for Prometheus metrics.

2. On the Helm page, click Update in the Actions column of ack-alibaba-cloud-metrics-adapter. Add the following rules to the custom field:

- seriesQuery: num_requests_waiting{namespace!="",pod!=""}
  resources:
    overrides:
      namespace: {resource: "namespace"}
      pod: {resource: "pod"}
  metricsQuery: sum(<<.Series>>{<<.LabelMatchers>>}) by (<<.GroupBy>>)

3. Perform auto-scaling based on custom metrics. The following example triggers scaling when the average number of waiting requests per pod exceeds 10.

Create a file named hpa.yaml and add the following content to the file:

apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: llama3-8b-instruct-hpa
  namespace: default
spec:
  # Specify the maximum and minimum number of pods.
  minReplicas: 1
  maxReplicas: 3
  # Specify the metrics based on which HPA performs auto-scaling. You can specify different types of metrics at the same time.
  metrics:
  - pods:
      metric:
        name: num_requests_waiting
      target:
        averageValue: 10
        type: AverageValue
    type: Pods
  # Describe the object that you want the HPA to scale. The HPA can dynamically change the number of pods that are deployed for the object.
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: llama3-8b-instruct-predictor

Run the following command to create the HPA:

kubectl apply -f hpa.yaml

Run the following command to view the HPA:

kubectl get hpa llama3-8b-instruct-hpa

Expected output:

NAME                     REFERENCE                                 TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
llama3-8b-instruct-hpa   Deployment/llama3-8b-instruct-predictor   0/10      1         3         1          34s

4. Run the following command to perform a stress test on the service.

For more information about the Hey stress testing tool, please refer to Hey.

hey -z 5m -c 400 -m POST -host $SERVICE_HOSTNAME -H "Content-Type: application/json" -d '{"model": "meta/llama3-8b-instruct", "messages": [{"role": "user", "content": "Once upon a time"}], "max_tokens": 64}' http://$NGINX_INGRESS_IP:80/v1/chat/completions

5. Open another terminal during stress testing and run the following command to check the scaling status of the service.

kubectl describe hpa llama3-8b-instruct-hpa

The expected output includes the following content:

Events:
  Type    Reason             Age   From                       Message
  ----    ------             ----  ----                       -------
  Normal  SuccessfulRescale  52s   horizontal-pod-autoscaler  New size: 3; reason: pods metric num_requests_waiting above target

The preceding output indicates that the service is scaled out during stress testing (to 3 pods in this event). After about 5 minutes, the number of pods decreases to 1. That is, the pods can be scaled out and in automatically.
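To see why a backlog of waiting requests produces a rescale event such as New size: 3, the following Python sketch applies the replica formula documented for the Kubernetes HPA: desired replicas = ceil(current replicas × current metric value / target value), with a default tolerance of 0.1 and the result clamped between minReplicas and maxReplicas. The function name desired_replicas and the sample metric values are hypothetical. The sketch ignores stabilization windows and unready pods, so it approximates the controller's behavior rather than reproducing it.

```python
import math


def desired_replicas(current_replicas, average_metric, target_average,
                     min_replicas, max_replicas, tolerance=0.1):
    """Approximate the HPA replica calculation for a Pods metric with an AverageValue target."""
    ratio = average_metric / target_average
    # Within the tolerance band the HPA leaves the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the minReplicas/maxReplicas bounds from hpa.yaml.
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical reading: 1 pod with 25 waiting requests against a target of 10.
scaled_out = desired_replicas(1, 25, 10, min_replicas=1, max_replicas=3)
# Hypothetical reading after the test: 3 pods with no waiting requests.
scaled_in = desired_replicas(3, 0, 10, min_replicas=1, max_replicas=3)
```

With the values from hpa.yaml (target 10, at most 3 replicas), any sustained average above 20 waiting requests on a single pod is enough to reach the maximum of 3 pods.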

In this article, NVIDIA NIM is deployed on Alibaba Cloud Container Service for Kubernetes, and Managed Service for Prometheus and Managed Service for Grafana are used to quickly build a Grafana monitoring dashboard that shows the inference service status in real time. To handle the fluctuating load on the inference service, an auto-scaling policy for a custom metric is configured based on the number of queued requests. This enables the inference service instances to scale dynamically with the number of waiting requests. You can use the preceding methods to quickly build a high-performance model inference service that is observable and highly elastic.
