By Zhishi

In today's digital era, rapid business growth places higher demands on IT infrastructure. Traditional data centers, however, struggle to handle business peaks and troughs. Because they cannot scale resources dynamically, scale-out is slow and scale-in is difficult, and the lack of flexible, efficient solutions has held enterprises back.

To address this, Alibaba Cloud has introduced ACK One registered clusters, whose features include auto scaling of cloud node pools (CPU/GPU). Auto scaling of cloud node pools dynamically allocates cloud computing resources to respond to changes in business demand in real time. You can use cloud node pools to automatically scale out resources during business peaks to keep services stable, and to reduce resources during business troughs to save costs. This lets enterprises keep costs low while meeting demand, so that they can focus on expanding their core business.

Cloud elasticity provided by ACK One registered clusters can be applied in the following scenarios:

Periodic business peaks or sudden growth: The number of computing resources in the on-premises data center is relatively fixed, making it difficult to cope with periodic business peaks or sudden growth in business traffic. Examples include hot searches and large-scale e-commerce promotions.

Rapid business growth: Kubernetes clusters deployed in on-premises data centers often cannot be dynamically expanded due to the limited computing resources in the data center. The procurement and deployment of computing resources often take a long time, which cannot keep up with the rapid growth of business traffic.

AI inference and training: A variety of GPU-accelerated instance types are provided to support multiple types of AI tasks.

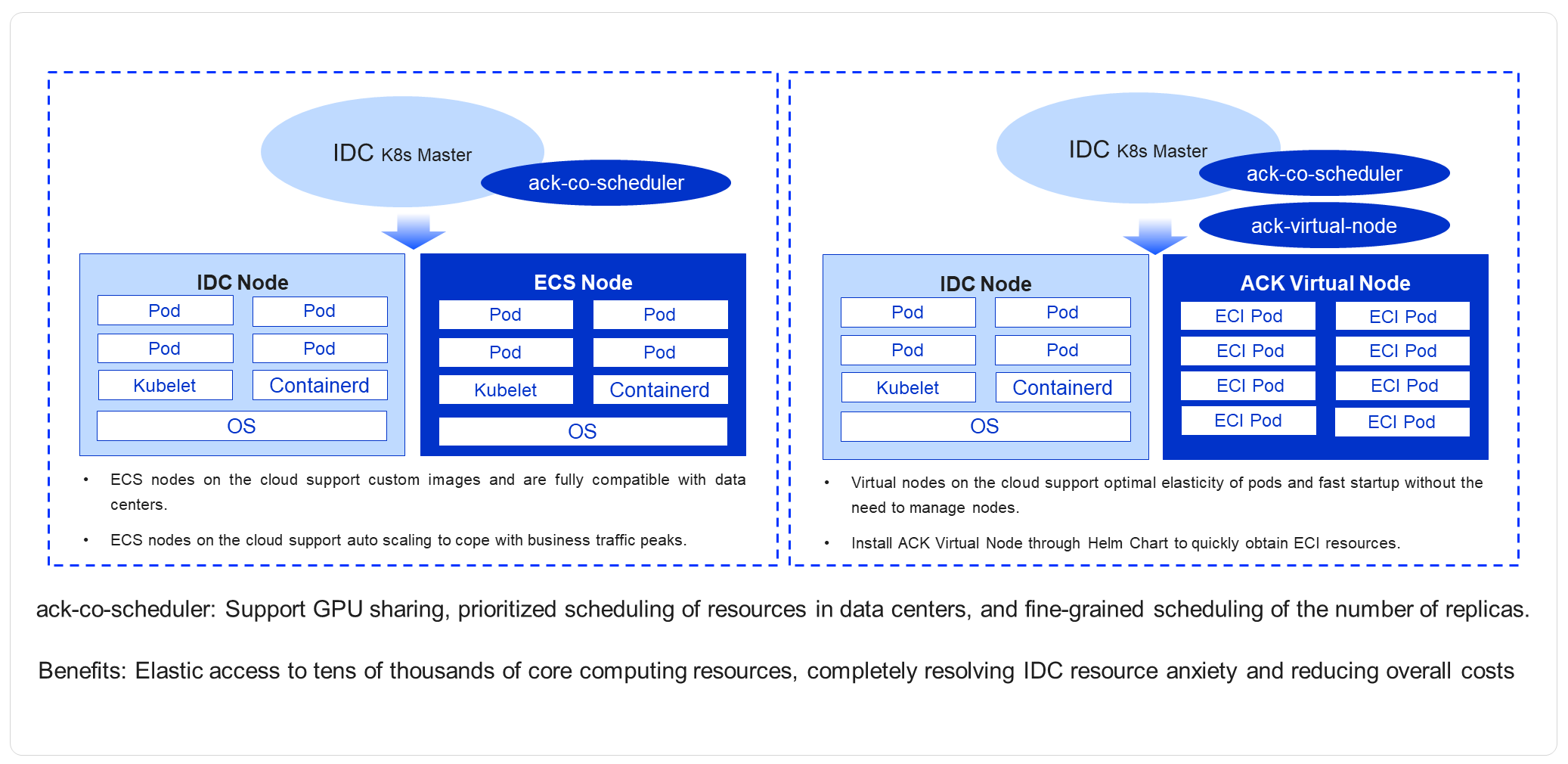

The following figure shows the architecture of cloud elasticity provided by ACK One registered clusters.

Through ACK One registered clusters, the Kubernetes clusters in an on-premises data center can elastically scale out the ECS node pools (CPU/GPU). With the extreme elasticity of Alibaba Cloud Container Service, the node pool automatically scales out nodes when business demand increases, and reduces nodes when demand decreases. The auto scaling method can effectively address customers' demands for elasticity while offering significant advantages in cost and efficiency.
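As a rough illustration of the scale-out decision described above, the following sketch estimates how many nodes must be added so that a batch of pending pods fits. It is simplified arithmetic, not how the ACK autoscaler is implemented: it assumes that all pods have identical requests and that all nodes have identical allocatable capacity. The function names and the sample numbers are hypothetical.

```shell
#!/bin/sh
# Simplified estimate of the number of nodes a scale-out must add.
# Assumption: identical pod requests and identical node capacity.
# A real autoscaler simulates scheduling instead of doing this arithmetic.

# ceil_div A B: prints ceil(A / B) for positive integers.
ceil_div() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

# nodes_needed PENDING_PODS POD_CPU_MILLI POD_MEM_MIB NODE_CPU_MILLI NODE_MEM_MIB
nodes_needed() {
  pods=$1; pod_cpu=$2; pod_mem=$3; node_cpu=$4; node_mem=$5
  # The number of pods per node is limited by the scarcer resource.
  by_cpu=$(( node_cpu / pod_cpu ))
  by_mem=$(( node_mem / pod_mem ))
  if [ "$by_cpu" -lt "$by_mem" ]; then per_node=$by_cpu; else per_node=$by_mem; fi
  if [ "$per_node" -le 0 ]; then
    echo "pod does not fit on this node type" >&2
    return 1
  fi
  ceil_div "$pods" "$per_node"
}

# Two pending pods requesting 1 CPU and 2 GiB each, on hypothetical nodes
# with 3800m CPU and 7000 MiB allocatable:
nodes_needed 2 1000 2048 3800 7000   # prints 1
```

With the same hypothetical node size, ten such pods would need four nodes, because only three pods fit on each node.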

The cloud elasticity architecture figure shows that two types of elastic computing power are available: ECS elastic computing power and Serverless elastic computing power. The following section describes their features, advantages, and applicable scenarios.

ECS elastic computing power has the following features:

• Controllability: You have full control over instance configurations, networks, and security.

• Multiple instance types: A wide range of CPU and GPU instance types are provided to meet the needs of common applications and also applications in AI scenarios.

• Persistent storage: You can mount different types of storage volumes to meet the needs for data persistence.

ECS elastic computing power is suited to the following scenarios:

• Long-term applications and services: ECS computing power is suitable for applications that require long-term operation and have stable resource requirements.

• High-performance computing tasks: ECS computing power is suitable for tasks that require dedicated high-performance resources (such as GPUs). Such tasks include AI training and inference.

Serverless elastic computing power has the following features:

• Serverless architecture: You do not need to manage the underlying virtual machines or servers; you can focus solely on running the containers.

• Quick start: Business containers can be started within seconds, which is suitable for scenarios that require rapid capacity expansion.

• Pay-as-you-go: Billing is based on the actual usage time, which makes costs easy to manage.

Serverless elastic computing power is suited to the following scenarios:

• Short-term tasks or batch processing tasks: Serverless computing power is well suited to scenarios where a large amount of data needs to be processed in a short period.

• On-demand scaling applications: Serverless computing power is suitable for scenarios like e-commerce promotions and hot news, where a quick response to business peaks is necessary.

The two types of computing power differ in the following respects:

Management level: Serverless computing power is a serverless container service that does not require infrastructure management. ECS computing power is a customizable virtual machine service that allows you to manage server configurations and maintenance.

Startup speed: The startup speed of serverless computing power is extremely fast, making it suitable for scenarios that require quick response. The startup of ECS computing power is relatively slow.

Flexibility: Serverless computing power is more flexible and thus suitable for short-term tasks. ECS computing power is suitable for applications that require long-term operation and complex settings.

Cost: Serverless computing power is billed based on the actual usage time and therefore is more suitable for short-term and volatile tasks. ECS computing power is suitable for long-term stable loads and is more cost-effective for instances that run for a long term.
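The comparison above can be condensed into a small rule of thumb. The following sketch only encodes the guidance given in this article; the function name, its parameters, and their values are hypothetical, and a real decision would also weigh pricing and instance availability.

```shell
#!/bin/sh
# choose_capacity RUNTIME LOAD CONTROL
#   RUNTIME: long | short     how long the workload runs
#   LOAD:    stable | bursty  how predictable its resource demand is
#   CONTROL: yes | no         whether you must manage instance, network, and security settings
choose_capacity() {
  runtime=$1; load=$2; control=$3
  if [ "$control" = "yes" ]; then
    echo "ecs"          # the article lists full control as an ECS feature
  elif [ "$runtime" = "long" ] && [ "$load" = "stable" ]; then
    echo "ecs"          # long-running, steady load is more cost-effective on ECS
  else
    echo "serverless"   # short or volatile work benefits from fast startup and usage-based billing
  fi
}

choose_capacity long stable no    # prints ecs
choose_capacity short bursty no   # prints serverless
```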

This section primarily describes the cloud ECS elasticity of ACK One registered clusters.

Before you start, check that the ack-cluster-agent pods are running in the cluster:

    kubectl -n kube-system get pod | grep ack-cluster-agent

Expected output:

    ack-cluster-agent-5f7d568f6-6fc4k   1/1   Running   0   9s
    ack-cluster-agent-5f7d568f6-tf6fp   1/1   Running   0   9s

1. Log on to the ACK console. In the left-side navigation pane, click Clusters.

2. On the Clusters page, click the name of the cluster that you want to manage. In the left-side navigation pane, choose Nodes > Node Pools.

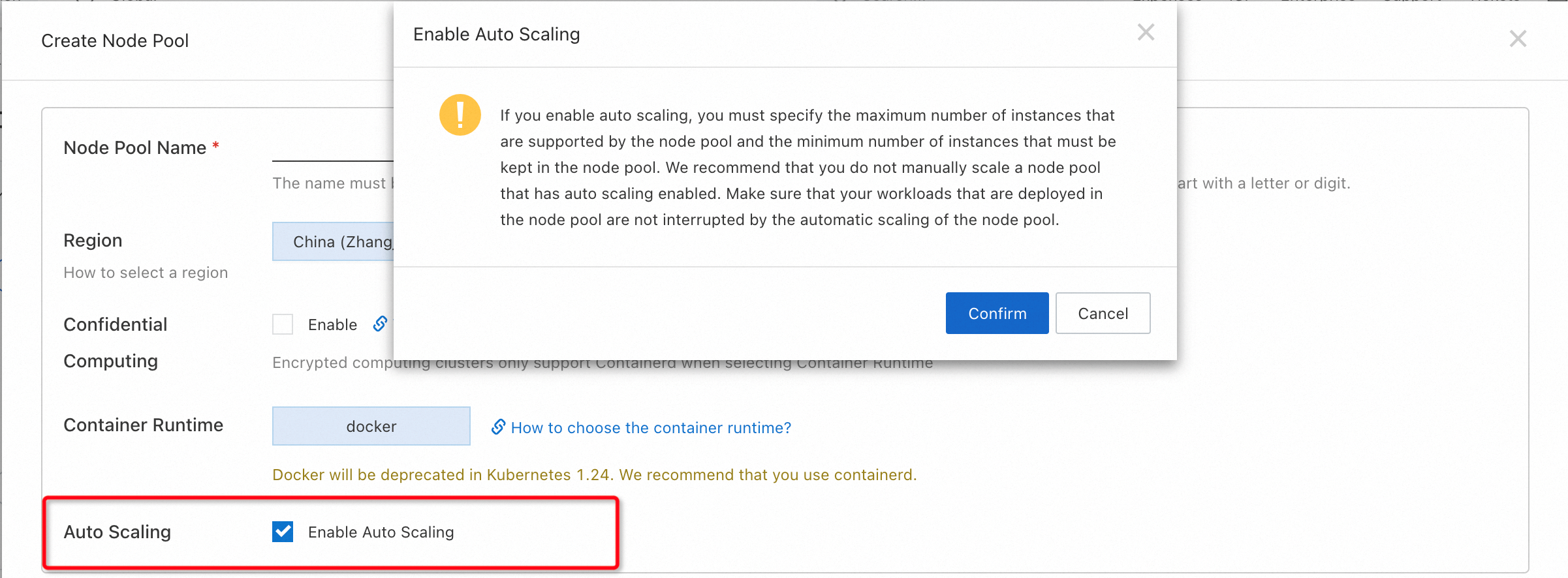

3. On the Node Pools page, create a node pool and add ECS instances to the node pool. For more information, see Create a Node Pool.

Select Enable Auto Scaling.

4. Run the following command to view the resources in the node pools:

    kubectl get no -l alibabacloud.com/nodepool-id=<NodePoolID> # This is the ID of the elastic node pool.

Expected output:

    No resources found

1. Run the following command to create a pod in the elastic node pools of the registered clusters:

    kubectl apply -f - <<EOF
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: nginx-autoscaler-cloud
      name: nginx-deployment-autoscaler-cloud
      namespace: default
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nginx-autoscaler-cloud
      template:
        metadata:
          labels:
            app: nginx-autoscaler-cloud
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                - matchExpressions:
                  - key: alibabacloud.com/nodepool-id
                    operator: In
                    values:
                    - <NodePoolID> # Enter the ID of the elastic node pool created above.
          containers:
          - image: 'registry.cn-hangzhou.aliyuncs.com/eci_open/nginx:1.14.2'
            imagePullPolicy: IfNotPresent
            name: nginx-autoscaler-cloud
            ports:
            - containerPort: 80
              protocol: TCP
            resources:
              limits:
                cpu: '2'
                memory: 4Gi
              requests:
                cpu: '1'
                memory: 2Gi
    EOF

2. Pods cannot be scheduled because there are no nodes in the node pools by default. Run the following command to view the pending pods:

    kubectl get po -owide | grep nginx-deployment-autoscaler-cloud

Expected output:

    nginx-deployment-autoscaler-cloud-567d69ddb8-78szz   0/1   Pending   0   85s   <none>   <none>   <none>   <none>
    nginx-deployment-autoscaler-cloud-567d69ddb8-8c6h2   0/1   Pending   0   85s   <none>   <none>   <none>   <none>

3. At this point, wait for the auto scaling to be triggered. Run the following command again to view the resources in the node pools:

    kubectl get no -l alibabacloud.com/nodepool-id=<NodePoolID> # This is the ID of the elastic node pool.

Expected output:

    NAME                            STATUS   ROLES    AGE    VERSION
    cn-zhangjiakou.192.168.XX.XXX   Ready    <none>   2m2s   v1.28.2

4. When the nodes in the node pools are running normally, run the following command again:

    kubectl get po -owide | grep nginx-deployment-autoscaler-cloud

Expected output:

    nginx-deployment-autoscaler-cloud-66db9cb877-8r6bc   1/1   Running   0   5m29s   192.168.XX.XXX   cn-zhangjiakou.192.168.XX.XXX   <none>   <none>
    nginx-deployment-autoscaler-cloud-66db9cb877-s44b8   1/1   Running   0   5m29s   192.168.XX.XXX   cn-zhangjiakou.192.168.XX.XXX   <none>   <none>

5. After the application is scaled in, the node resources are automatically reclaimed.
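The manual checks in the steps above can also be scripted. The following is a minimal sketch, assuming that kubectl is already configured for the registered cluster. The helper names (count_in_state, wait_until, pods_running) and the timeout values are hypothetical and not part of ACK One.

```shell
#!/bin/sh
# count_in_state STATE: reads `kubectl get` table output on stdin and prints
# how many rows have a column exactly equal to STATE (Pending, Running, Ready, ...).
count_in_state() {
  awk -v state="$1" '
    { for (i = 1; i <= NF; i++) if ($i == state) { n++; break } }
    END { print n + 0 }
  '
}

# wait_until TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND...
# Re-runs COMMAND until it succeeds or the timeout elapses.
wait_until() {
  timeout=$1; interval=$2; shift 2
  elapsed=0
  until "$@"; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s" >&2
      return 1
    fi
    sleep "$interval"
    elapsed=$(( elapsed + interval ))
  done
}

# pods_running: succeeds once both replicas of the sample Deployment are Running.
pods_running() {
  running=$(kubectl get po -o wide | grep nginx-deployment-autoscaler-cloud | count_in_state Running)
  [ "$running" -ge 2 ]
}

# Example (requires access to the cluster):
#   wait_until 600 10 pods_running && echo "scale-out finished"
#   kubectl scale deployment nginx-deployment-autoscaler-cloud --replicas=0   # scale in
```

Polling with a timeout avoids waiting forever if the node pool cannot provide an instance. Scaling the Deployment to zero replicas is one way to trigger the scale-in described in step 5.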

The cloud node pools provided by ACK One registered clusters offer enterprises unprecedented flexibility and scalability with their abundant elastic resource options and simple operation procedures. Alibaba Cloud can quickly respond to business requirements, whether for general computing, container instances, or high-performance computing tasks, helping enterprises more efficiently address the challenge of rapid elasticity in their operations.

Visit the Alibaba Cloud ACK One Official Website right now to learn more and start your journey of intelligent scaling.
