Author | Dongwei Xiang, Senior Quantitative Engineer at JoinQuant and Yuchen Tao, Senior Quantitative Engineer at JoinQuant

In ever-changing financial markets, quantitative investment is emerging as a new force in the investment community because of its strength in data-driven decision-making. JoinQuant is a technology company and a pioneer of big-data-based quantitative research in the domestic financial market. We use cutting-edge technologies such as quantitative research and artificial intelligence to continuously explore market patterns, optimize algorithms and models, and automate strategy execution through programmatic trading. JoinQuant's typical quantitative investment research process consists of several key steps.

Each of these steps is a data-intensive computing task driven by massive amounts of data. Using Alibaba Cloud products such as ECS, ECI, ACK, NAS, and OSS, JoinQuant's technical team has quickly built a complete quantitative investment research platform over the past few years.

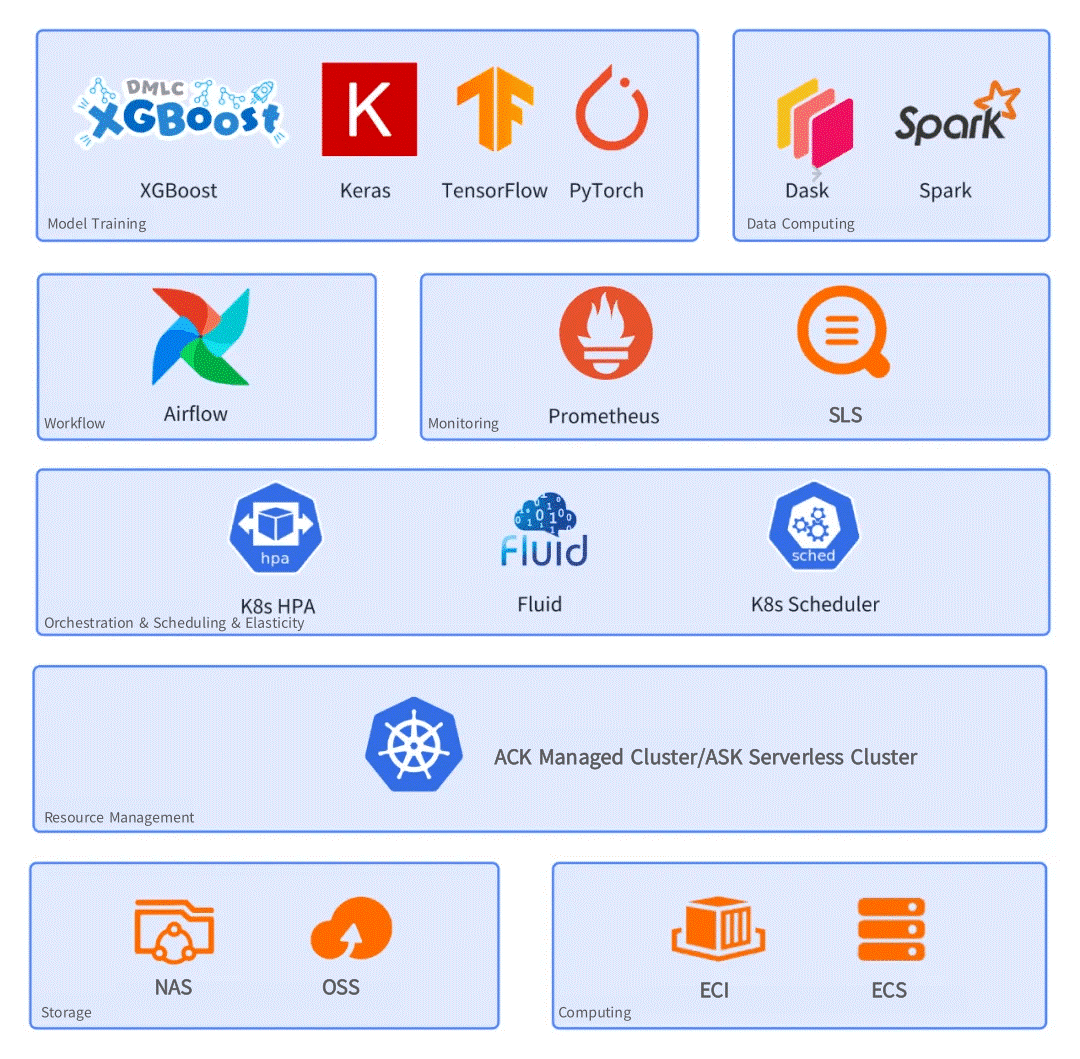

Software Technology Stack Applied in the JoinQuant Investment Research Platform

We build on Kubernetes and combine it with Alibaba Cloud services and cloud-native tools such as NAS, OSS, SLS, GPU sharing, HPA, Prometheus, and Airflow. The cloud offers low computing cost and easy scalability, and containerization enables efficient deployment and agile iteration. Together, these have let us cover more computing scenarios, such as factor calculation over massive historical financial data, quantitative model training, and strategy backtesting.

However, in practice we found that the cloud still fell short in supporting real-world quantitative research workloads that are data-intensive and require high elasticity.

During quantitative research, the platform faced multiple challenges, including performance bottlenecks, cost control, complex dataset management, data security, and user experience. In high-concurrency access and dataset management in particular, traditional NAS and OSS storage solutions no longer met our dual requirements for performance and cost-effectiveness. Data scientists who wanted to process data more efficiently and flexibly were often held back by the existing technology.

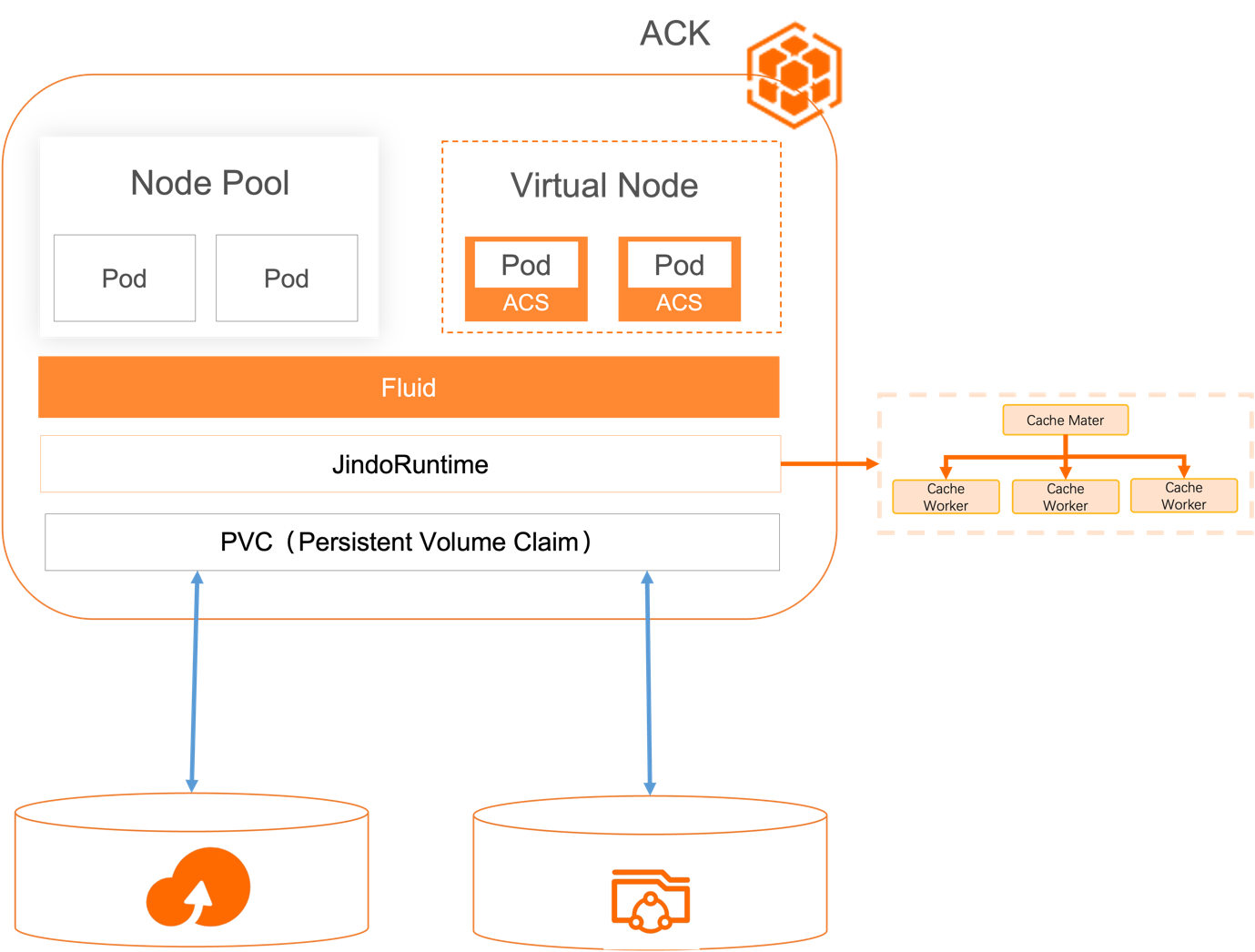

We found that the CSI system of Kubernetes alone could not meet our demand for accelerating multiple data sources, while the CNCF Sandbox project, Fluid, provides a simple way to manage and accelerate data from multiple PVCs, including data from OSS and NAS.

Fluid supports a variety of runtimes backed by distributed cache systems, including JindoRuntime, AlluxioRuntime, JuiceFSRuntime, and VineyardRuntime. After comparing them, we found that JindoRuntime stood out in scenario fit, performance, and stability. JindoRuntime is built on JindoCache, a distributed cache acceleration engine. JindoCache, formerly known as JindoFSx, is a cloud-native data lake acceleration service provided by the Alibaba Cloud data lake management team. It supports multiple storage protocols, including OSS, HDFS, standard S3, and POSIX. It also provides features such as data caching and metadata caching.

After further research and practical use, we found that Fluid effectively solved many problems that had plagued our researchers, including caching costs and the need to manage datasets securely and use them flexibly. The Fluid-based system has now been running smoothly for nearly a year and has been a great help to the quantitative research team. Here we share our experience and gains:

Issue: When you perform different types of data processing tasks, a single data storage configuration cannot meet your requirements. For example, the dataset of the training task needs to be set as read-only, while the feature data and checkpoints generated in the middle require read-write permissions. Traditional PVCs do not have the flexibility to handle data sources from different storages at the same time.

Solution: You can use Fluid to set different storage policies for each data type. For example, within the same storage system, training data can be configured as read-only, while feature data and checkpoints can be configured as read-write. In this way, Fluid helps customers flexibly manage different data types in the same PVC, improving resource utilization and performance.

Issue: The demand for computing resources fluctuates markedly when you run a quantitative analysis. During peak hours, a large number of compute instances are scheduled in a short period of time, while during off-peak hours, resources are hardly required. Fixed reserved resources not only cause high costs but also lead to resource waste.

Solution: Fluid supports multiple auto scaling policies to allow customers to dynamically scale resources based on business requirements. Through application prefetching and scaling operations based on business patterns, Fluid helps customers quickly expand computing resources during peak hours. Meanwhile, it implements dynamic and elastic control of data cache throughput by maintaining a self-managed data cache. This auto scaling policy improves resource utilization efficiency and effectively reduces operating costs.

The following Dataset configuration illustrates the first scenario. Training data is mounted read-only, while checkpoints are mounted read-write:

```yaml
apiVersion: data.fluid.io/v1alpha1
kind: Dataset
metadata:
  name: training-data
spec:
  mounts:
    - mountPoint: "pvc://nas/training-data"
      path: "/training-data"
  accessModes: ["ReadOnlyMany"]
---
apiVersion: data.fluid.io/v1alpha1
kind: Dataset
metadata:
  name: checkpoint
spec:
  mounts:
    - mountPoint: "pvc://nas/checkpoint"
      path: "/checkpoints"
  accessModes: ["ReadWriteMany"]
```

Issue: Quantitative researchers use GPUs for high-density data computing. High data access latency each time a task is scheduled hurts overall computing performance. Because GPU resources are expensive, the team wants the data to be as close as possible to the GPU compute nodes.

Solution: With data cache-aware scheduling, Fluid passes the location of the data cache to the Kubernetes scheduler when applications are scheduled. Applications can then be placed on the cache nodes themselves or on nodes close to the cache. This reduces data access latency and maximizes GPU utilization.
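The sketch below gives a rough idea of what cache-aware scheduling amounts to. It builds a soft node-affinity preference toward nodes that hold a Dataset's cache. As we understand it, Fluid can inject such a preference automatically through its webhook when a pod uses a Dataset's PVC, so this only shows the shape of the result. The label key `fluid.io/s-<namespace>-<dataset>` is an assumption based on Fluid's node-labeling convention; confirm it with `kubectl get nodes --show-labels` on your cluster. The helper name is hypothetical.

```python
from typing import Any, Dict


def cache_affinity(namespace: str, dataset: str, weight: int = 100) -> Dict[str, Any]:
    """Return a pod `affinity` block that prefers nodes holding the dataset's cache.

    Assumption: Fluid labels cache nodes as fluid.io/s-<namespace>-<dataset>=true.
    """
    if not 1 <= weight <= 100:
        # Kubernetes only accepts preferred-term weights in the range 1-100.
        raise ValueError("weight must be between 1 and 100")
    label_key = f"fluid.io/s-{namespace}-{dataset}"
    return {
        "nodeAffinity": {
            # A soft preference: the pod still schedules if no cache node fits.
            "preferredDuringSchedulingIgnoredDuringExecution": [
                {
                    "weight": weight,
                    "preference": {
                        "matchExpressions": [
                            {"key": label_key, "operator": "In", "values": ["true"]}
                        ]
                    },
                }
            ]
        }
    }


if __name__ == "__main__":
    # Hypothetical GPU training pod that reads the "mydata" Dataset.
    pod_spec = {"containers": [{"name": "train", "image": "example/train:latest"}]}
    pod_spec["affinity"] = cache_affinity("default", "mydata")
    print(pod_spec)
```

A preferred (soft) term rather than a required one keeps GPU jobs schedulable when every cache node is full. The trade-off is that such jobs may then read over the network.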

Issue: Quantitative researchers use heterogeneous data sources, and existing solutions cannot accelerate datasets from different storage systems at the same time. Differences in how each source is used also add compatibility complexity for the O&M team.

Solution: Fluid provides unified PVC acceleration. You can accelerate OSS data and NAS data in the same way: create a Dataset, scale out the Runtime, and run a DataLoad. The PVC acceleration feature of JindoRuntime is easy to use and meets our performance requirements.

Issue: Data must be both isolated and shared. To protect sensitive data, computing tasks and data need access control and isolation. At the same time, relatively public data should remain easy for researchers to access and use.

Solution: The Fluid Dataset implements dataset access control among different teams by using the namespace resource isolation mechanism of Kubernetes. This not only protects data privacy but also achieves data isolation. In addition, Fluid supports data access across namespaces, enabling public datasets to be reused in multiple teams. This facilitates one-time caching and dataset sharing among multiple teams, greatly improving data utilization and ease of management.
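Here is a minimal sketch of cross-namespace sharing. It assumes a Fluid version that supports `dataset://<namespace>/<name>` mount points for referencing a Dataset in another namespace. A team creates a lightweight Dataset in its own namespace that points at the public Dataset, so the cache is built once and reused. The helper name and the namespaces `team-a` and `public` are hypothetical.

```python
import json
from typing import Any, Dict


def shared_dataset_manifest(name: str, namespace: str,
                            source_namespace: str, source_name: str) -> Dict[str, Any]:
    """Build a Dataset in `namespace` that references a Dataset owned by another team.

    Assumptions: the referenced Dataset already exists and is bound to a runtime,
    and the installed Fluid version supports dataset://<namespace>/<name> mounts.
    """
    for value in (name, namespace, source_namespace, source_name):
        if not value:
            raise ValueError("names and namespaces must be non-empty")
    return {
        "apiVersion": "data.fluid.io/v1alpha1",
        "kind": "Dataset",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Reuse the source Dataset's cache instead of caching the data twice.
            "mounts": [{"mountPoint": f"dataset://{source_namespace}/{source_name}"}]
        },
    }


if __name__ == "__main__":
    manifest = shared_dataset_manifest("market-data", "team-a", "public", "market-data")
    # kubectl also accepts JSON manifests, e.g. piped to `kubectl apply -f -`.
    print(json.dumps(manifest, indent=2))
```

Access control stays with Kubernetes namespaces. Only teams whose namespace contains such a reference Dataset can mount the shared data.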

Issue: Quantitative researchers mainly develop in Python, but working in a Kubernetes environment requires them to learn and write YAML. Many researchers report that YAML is hard to master. The learning cost is high and slows development.

Solution: Fluid provides the DataFlow feature. You can use Fluid APIs to define automated data processing, including cache scaling, prefetching, migration, and custom data processing. Most notably, these operations are available through a Python interface. Researchers can therefore use the same code to develop and train predictive models in both local development and production environments.

Issue: Researchers working in a Jupyter container often need to mount new storage data sources dynamically. Traditionally this requires restarting the pod, which wastes time and interrupts the workflow. This has long been a pain point.

Solution: A Fluid Dataset can describe multiple data sources, and you can dynamically add new mount points or remove old ones. The changes are immediately visible to your containers without a restart. This addresses data scientists' biggest concern about working in containers.
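The sketch below shows one way to script such changes from a notebook. It computes the new `spec.mounts` list and applies it as a JSON merge patch, for example with `kubectl patch dataset <name> --type merge -p '<patch>'`. The helper names are hypothetical. Custom resources do not support strategic merge patches, and a JSON merge patch replaces lists wholesale, so each patch carries the complete mounts list rather than only the changed entry.

```python
import copy
import json
from typing import Any, Dict, List


def _mounts(dataset: Dict[str, Any]) -> List[Dict[str, Any]]:
    # Deep-copy so the caller's Dataset object is never modified.
    return copy.deepcopy(dataset.get("spec", {}).get("mounts", []))


def add_mount_patch(dataset: Dict[str, Any], mount_point: str, name: str) -> Dict[str, Any]:
    """Return a JSON merge patch that appends a mount to a Dataset."""
    mounts = _mounts(dataset)
    if any(m.get("name") == name or m.get("mountPoint") == mount_point for m in mounts):
        raise ValueError(f"mount {name!r} or {mount_point!r} already exists")
    mounts.append({"name": name, "mountPoint": mount_point})
    # A merge patch replaces the whole list, so send every mount, not just the new one.
    return {"spec": {"mounts": mounts}}


def remove_mount_patch(dataset: Dict[str, Any], name: str) -> Dict[str, Any]:
    """Return a JSON merge patch that drops the mount called `name`."""
    mounts = _mounts(dataset)
    kept = [m for m in mounts if m.get("name") != name]
    if len(kept) == len(mounts):
        raise ValueError(f"no mount named {name!r}")
    return {"spec": {"mounts": kept}}


if __name__ == "__main__":
    current = {"spec": {"mounts": [{"name": "train", "mountPoint": "pvc://nas/train"}]}}
    # Hypothetical new source: a factor library on the same NAS PVC.
    print(json.dumps(add_mount_patch(current, "pvc://nas/factors", "factors")))
```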

Despite the many advantages of open-source Fluid, in practice, we have found that it does not fully meet our needs:

We looked for solutions and found ack-fluid in the ACK cloud-native AI suite, which can solve these problems well:

For JindoRuntime cache workers, we prefer ECS and ECI instances with high network I/O and large memory. ECS network capability has improved steadily, and current network bandwidth far exceeds the I/O capability of Standard SSDs. For example, the ecs.g8i.16xlarge instance type on Alibaba Cloud has a baseline network bandwidth of 32 Gbit/s and 256 GiB of memory. Two such instances provide an aggregate bandwidth of 64 Gbit/s (8 GB/s), so in theory they can read 32 GB of data in about 4 seconds.
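The theoretical figure above is simple bandwidth arithmetic, and the bits-to-bytes conversion is the step that is easiest to get wrong. The hypothetical helper below computes the ideal read time from per-instance bandwidth in Gbit/s. It ignores protocol overhead and assumes the readers are never the bottleneck.

```python
def ideal_read_seconds(data_gb: float, per_instance_gbit_s: float, instances: int) -> float:
    """Lower-bound time to read `data_gb` gigabytes from `instances` cache workers.

    Ignores protocol overhead and assumes the readers never become the bottleneck.
    """
    if instances < 1 or per_instance_gbit_s <= 0:
        raise ValueError("need at least one instance with positive bandwidth")
    aggregate_gbyte_s = per_instance_gbit_s * instances / 8  # 8 bits per byte
    return data_gb / aggregate_gbyte_s


if __name__ == "__main__":
    # Two ecs.g8i.16xlarge workers at a 32 Gbit/s baseline each, reading 32 GB.
    print(f"{ideal_read_seconds(32, 32, 2):.1f} s")
```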

In fault-tolerant scenarios such as batch processing, you can use Spot Instances as cache workers by adding the Kubernetes annotation "k8s.aliyun.com/eci-spot-strategy": "SpotAsPriceGo". This lets you benefit from the lower cost of Spot Instances while keeping stability high.

Because of the nature of the business, the throughput of the investment research platform follows a clear tidal pattern. A scheduled auto scaling policy for the cache nodes therefore pays off in both cost control and performance. For datasets that individual researchers need, you can also expose an interface that lets them scale the cache manually.
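To see what such a schedule does over a week, you can model the rule that the most recently triggered job determines the cache size. The toy model below is hypothetical and is not part of CronHPA. Its rules encode the CronHPA manifest shown next, assuming the controller's six-field cron format (seconds first) counts Sunday as day 0. Under that assumption, "1" means Monday and "5-6" means Friday and Saturday. The model also ignores any manual scaling between jobs.

```python
from datetime import datetime, timedelta
from typing import FrozenSet, Optional, Sequence, Tuple

# (weekdays with Monday=0, hour of day, target number of cache workers)
Rule = Tuple[FrozenSet[int], int, int]

# Hypothetical rules mirroring the manifest, assuming Sunday=0 in the cron
# day-of-week field (so "1" is Monday and "5-6" is Friday-Saturday).
RULES: Sequence[Rule] = (
    (frozenset({0}), 7, 10),      # "scale-down": Monday 07:00 -> 10 workers
    (frozenset({4, 5}), 18, 20),  # "scale-up": Friday/Saturday 18:00 -> 20 workers
)


def target_replicas(now: datetime, rules: Sequence[Rule], default: int) -> int:
    """Return the size set by the most recent rule that fired at or before `now`."""
    latest: Optional[Tuple[datetime, int]] = None
    midnight = now.replace(hour=0, minute=0, second=0, microsecond=0)
    for days_back in range(8):  # a weekly rule always fires within the last 8 days
        day = midnight - timedelta(days=days_back)
        for weekdays, hour, target in rules:
            fired_at = day + timedelta(hours=hour)
            if day.weekday() in weekdays and fired_at <= now:
                if latest is None or fired_at > latest[0]:
                    latest = (fired_at, target)
    # Fall back to the runtime's configured size if no rule has fired yet.
    return default if latest is None else latest[1]


if __name__ == "__main__":
    start = datetime(2024, 1, 1)  # a Monday
    for offset_hours in range(0, 7 * 24, 12):
        moment = start + timedelta(hours=offset_hours)
        print(moment.strftime("%a %H:%M"), target_replicas(moment, RULES, default=15))
```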

```yaml
apiVersion: autoscaling.alibabacloud.com/v1beta1
kind: CronHorizontalPodAutoscaler
metadata:
  name: joinquant-research
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: data.fluid.io/v1alpha1
    kind: JindoRuntime
    name: joinquant-research
  jobs:
    - name: "scale-down"
      schedule: "0 0 7 ? * 1"
      targetSize: 10
    - name: "scale-up"
      schedule: "0 0 18 ? * 5-6"
      targetSize: 20
```

JindoRuntime supports prefetching data while it is being read, but running both at the same time incurs high performance overhead. In our experience, it works better to prefetch first while monitoring the cache ratio, and to trigger the task only once the ratio reaches a threshold. This avoids the I/O latency that highly concurrent tasks would suffer if they started before the cache was warm.
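The prefetch-then-trigger approach above can be sketched as a polling gate. `wait_for_cache` and `kubectl_reader` are hypothetical helpers. The JSONPath `.status.cacheStates.cachedPercentage` is our assumption about where the Dataset reports the value shown in the CACHED PERCENTAGE column of `kubectl get dataset`, so check it against your Fluid version. The sleep and clock functions are injectable, which lets the loop be tested without a cluster.

```python
import subprocess
import time
from typing import Callable


def parse_percentage(value: str) -> float:
    """Turn a status string such as '52.3%' into 52.3 (empty status counts as 0)."""
    text = value.strip().rstrip("%")
    return float(text) if text else 0.0


def wait_for_cache(read_percentage: Callable[[], str],
                   threshold: float = 90.0,
                   poll_seconds: float = 10.0,
                   timeout_seconds: float = 3600.0,
                   sleep: Callable[[float], None] = time.sleep,
                   clock: Callable[[], float] = time.monotonic) -> bool:
    """Poll the cache ratio until it reaches `threshold` percent.

    Returns True once the threshold is met, or False if `timeout_seconds`
    elapse first, leaving the caller to decide whether to start anyway.
    """
    deadline = clock() + timeout_seconds
    while True:
        if parse_percentage(read_percentage()) >= threshold:
            return True
        if clock() >= deadline:
            return False
        sleep(poll_seconds)


def kubectl_reader(dataset: str, namespace: str = "default") -> Callable[[], str]:
    """Read the cache ratio through kubectl (the JSONPath is an assumption)."""
    cmd = ["kubectl", "get", "dataset", dataset, "-n", namespace,
           "-o", "jsonpath={.status.cacheStates.cachedPercentage}"]
    return lambda: subprocess.run(cmd, check=True, capture_output=True, text=True).stdout
```

In a pipeline, a call such as `wait_for_cache(kubectl_reader("mydata"), threshold=95)` would run after the DataLoad is submitted, and the high-concurrency tasks would be launched only when it returns True.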

Regularly update metadata in the cache to accommodate periodic changes in the backend storage data

To speed up data loading, we keep metadata in the JindoRuntime master for a long time, so that it is reused across many reads instead of being pulled again. However, business data is often collected and written directly to the backend storage system without going through the cache, so Fluid cannot detect these changes. To address this, we configure Fluid to prefetch data at regular intervals, which synchronizes changes from the underlying storage system.

The relevant settings in the Dataset and DataLoad configurations are as follows:

apiVersion: data.fluid.io/v1alpha1

kind: Dataset

metadata:

name: joinquant-dataset

spec:

...

# accessModes: ["ReadOnlyMany"] ReadOnlyMany为默认值

---

apiVersion: data.fluid.io/v1alpha1

kind: DataLoad

metadata:

name: joinquant-dataset-warmup

spec:

...

policy: Cron

schedule: "0 0 * * *" # 每日0点执行数据预热

loadMetadata: true # 数据预热时同步底层存储系统数据变化

target:

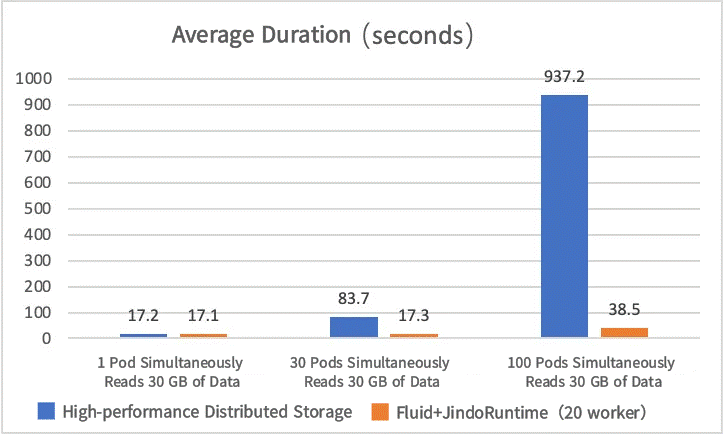

- path: /path/to/warmupIn the actual evaluation, we used 20 ECIs of the ecs.g8i.16xlarge specification as worker nodes to build a JindoRuntime cluster. The maximum bandwidth of a single ECI is 32 Gbit/s; the task node is selected as ecs.gn7i-c16g1.4xlarge with a maximum bandwidth of 16 Gbit/s. The file size downloaded is 30 GiB. The peak bandwidth of high-performance distributed storage is 3 GB/s, and this bandwidth only increases with the increase in storage capacity. Additionally, to improve the data reading speed, we choose to use memory for data caching.

For comparison, we measured the data access time with traditional storage and with Fluid. Sample result:

When the number of concurrent pods is small, the bandwidth of traditional high-performance distributed storage can meet the demand, and Fluid shows no obvious advantage. As the number of concurrent pods grows, Fluid's performance advantage becomes more pronounced. At 10 concurrent pods, the average access time drops to 1/5 of that of the traditional method. At 100 concurrent pods, the data access time drops from 15 minutes to 38.5 seconds, and the computing cost falls to 1/10. This significantly speeds up task processing and reduces the ECI cost incurred while tasks wait on I/O.

More importantly, the read bandwidth of the Fluid system grows with the size of the JindoRuntime cluster. If more pods need to run concurrently, you can increase the replicas of the JindoRuntime to raise the data bandwidth. Traditional distributed storage cannot scale dynamically in this way.
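A simple bandwidth model explains this trend. Each pod can read no faster than its task node's NIC allows. All pods together can read no faster than the source, which is either the shared storage or the aggregate of the cache workers. The hypothetical sketch below takes the larger of those two bounds. It is an idealized model under our own assumptions: each pod reads the whole 30 GiB file on its own task node, with no protocol, contention, or cache-miss overhead. It therefore illustrates the scaling behavior rather than reproducing the measured numbers.

```python
GB_PER_GIB = 1024 ** 3 / 1e9  # 1 GiB is about 1.074 GB


def transfer_seconds(pods: int, file_gib: float,
                     source_gbyte_s: float, node_gbit_s: float) -> float:
    """Idealized time for `pods` concurrent pods to each read a `file_gib` GiB file.

    Assumes one pod per task node and no protocol, contention, or cache-miss overhead.
    """
    if pods < 1 or source_gbyte_s <= 0 or node_gbit_s <= 0:
        raise ValueError("pods and bandwidths must be positive")
    file_gb = file_gib * GB_PER_GIB
    per_pod_bound = file_gb / (node_gbit_s / 8)     # limited by the node's own NIC
    shared_bound = pods * file_gb / source_gbyte_s  # all pods share the source
    return max(per_pod_bound, shared_bound)


if __name__ == "__main__":
    NODE_GBIT_S = 16             # ecs.gn7i-c16g1.4xlarge task node
    STORAGE_GBYTE_S = 3.0        # peak of the distributed storage
    CACHE_GBYTE_S = 20 * 32 / 8  # 20 JindoRuntime workers at 32 Gbit/s each
    for pods in (1, 10, 100):
        storage = transfer_seconds(pods, 30, STORAGE_GBYTE_S, NODE_GBIT_S)
        cache = transfer_seconds(pods, 30, CACHE_GBYTE_S, NODE_GBIT_S)
        print(f"{pods:>3} pods: storage {storage:7.1f} s, Fluid cache {cache:6.1f} s")
```

The cache-side bound is divided by the aggregate bandwidth of the JindoRuntime workers, so doubling `replicas` halves it once the shared bound dominates. The storage-side bound stays fixed at 3 GB/s no matter how many pods are added.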

By using Fluid, we gain more flexibility and possibilities in read-only dataset scenarios. Fluid helps us realize that in addition to computing resources, data cache can also be regarded as a stateless elastic resource, which can be combined with Kubernetes' auto scaling policy to adapt to changes in workloads and meet our demand for peak and off-peak periods.

All in all, Fluid makes the data cache elastic, improves work efficiency, and brings substantial benefits to our work.

In the future, we will continue to cooperate with the Fluid community to better solve the problems we encounter every day and promote improvements in the community:

• Install the management component of ACK Fluid. For more information, see https://www.alibabacloud.com/help/en/ack/cloud-native-ai-suite/user-guide/overview-of-fluid

• Create a NAS-based PVC and check the output. For more information, see https://www.alibabacloud.com/help/en/eci/user-guide/mount-a-nas-file-system

```
$ kubectl get pvc,pv
NAME                                   STATUS   VOLUME    CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/static-pvc-nas   Bound    demo-pv   5Gi        RWX                           19h

NAME                             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                    STORAGECLASS   REASON   AGE
persistentvolume/static-pv-nas   30Gi       RWX            Retain           Bound    default/static-pvc-nas                           19h
```

• Use the Fluid SDK for Python to create a dataset, then scale out the cache and prefetch data in sequence. You can also create the dataset with YAML files.

```python
import fluid
from fluid import constants

# Connect to Fluid using the default kubeconfig file and create a Fluid client instance
client_config = fluid.ClientConfig()
fluid_client = fluid.FluidClient(client_config)

# Create a Dataset named mydata in the default namespace.
# It is read-only by default.
fluid_client.create_dataset(
    dataset_name="mydata",
    mount_name="/",
    mount_point="pvc://static-pvc-nas/mydata",
)

# Get a handle to the Dataset that was just created
dataset = fluid_client.get_dataset(dataset_name="mydata")

# Initialize a JindoRuntime with 1 replica and a 30 GiB in-memory cache,
# and bind the Dataset to that runtime.
# Then scale out the elastic cache to 2 replicas and preload the data under /train.
dataflow = dataset.bind_runtime(
    runtime_type=constants.JINDO_RUNTIME_KIND,
    replicas=1,
    cache_capacity_GiB=30,
    cache_medium="MEM",
    wait=True,
).scale_cache(replicas=2).preload(target_path="/train")

# Submit the data flow and wait for it to complete
run = dataflow.run()
run.wait()
```

• View the status of the dataset. Once the data is cached, you can start using it.

```
$ kubectl get dataset mydata
NAME     UFS TOTAL SIZE   CACHED     CACHE CAPACITY   CACHED PERCENTAGE   PHASE   AGE
mydata   52.95GiB         52.95GiB   60.00GiB         100.0%              Bound   2m19s
```

JoinQuant is a technology company based on big data in the domestic financial market. Through quantitative research, artificial intelligence, and other technologies, it constantly explores patterns, optimizes algorithms, and refines models to conduct programmatic trading.

The company currently has a team of over 70 members, more than 40 of whom are investment research and IT professionals. All team members graduated from well-known universities in China and abroad. The team brings together experts in mathematics, physics, computer science, statistics, fluid mechanics, financial engineering, and other fields, and more than 70% of them hold a master's or doctoral degree. JoinQuant efficiently combines state-of-the-art research and technology with financial investment to create sound, long-term investment value for investors.
