By Biran

Cloud-native architecture lets you run tasks such as AI and big data workloads on the cloud and benefit from elastic computing resources. However, the separation of computing and storage brings data access latency and high bandwidth overhead for pulling remote data. In GPU deep learning training in particular, iteratively reading large volumes of training data from remote storage significantly reduces GPU computing efficiency.

On the other hand, Kubernetes only provides a standard interface for accessing and managing heterogeneous storage services, the Container Storage Interface (CSI). It does not define how applications use and manage data in container clusters. When running training tasks, data scientists need to manage dataset versions, control access permissions, preprocess datasets, accelerate reads of heterogeneous data, and more. Kubernetes has no standard solution for these needs, which is one of the important capabilities missing from the cloud-native container community.

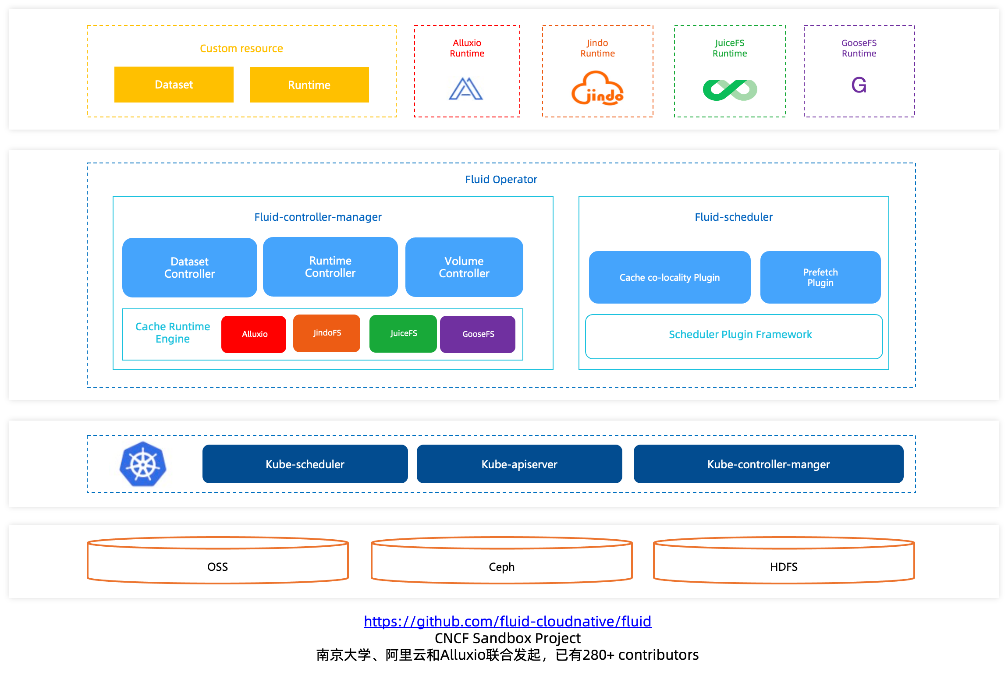

Fluid abstracts the process by which computing tasks use data and proposes the concept of an elastic Dataset, which is implemented in Kubernetes as a first-class citizen. Around the elastic Dataset, Fluid builds a data orchestration and acceleration system that provides capabilities such as Dataset management (CRUD operations), permission control, and access acceleration.

Fluid has two core concepts: Dataset and Runtime.

In its earliest and default mode, Fluid binds each Dataset to its own Runtime, which can be understood as a dataset accelerated by a dedicated cache cluster. The cache can be customized and optimized for the characteristics of the dataset, such as single file size, number of files, and number of clients. Because each dataset has a separate caching system, this mode provides the best performance and stability, and datasets do not interfere with each other. However, it wastes hardware resources because a cache system must be deployed for every dataset, and it is complex to maintain because multiple cache runtimes must be managed. This mode is essentially a single-tenant architecture and suits scenarios with high requirements for data access throughput and latency.

As Fluid has been adopted more widely, different requirements have emerged.

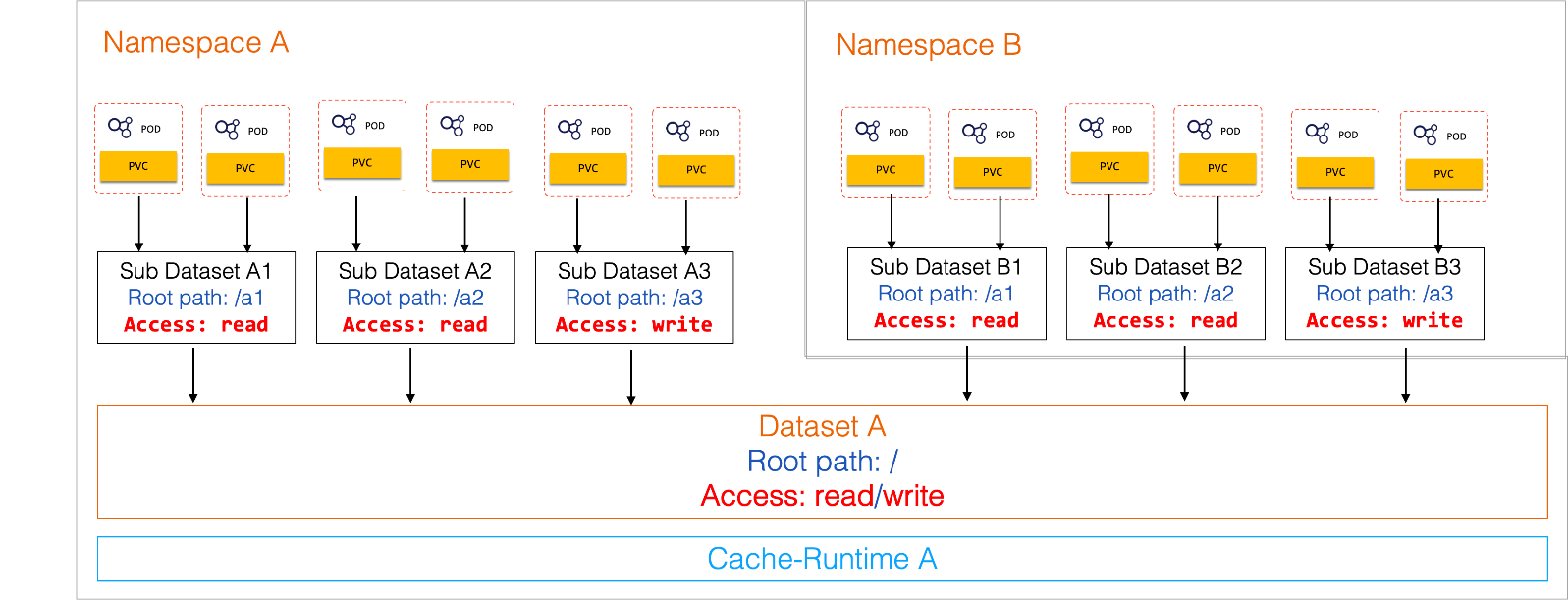

JuiceFS users tend to point a Dataset at the root directory of JuiceFS. They then assign different subdirectories to different groups of data scientists as separate datasets and want these datasets to be invisible to each other. They also want to tighten permissions on a SubDataset. For example, the root dataset supports reading and writing, while a SubDataset can be restricted to read-only.
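The idea of tightening permissions can be illustrated with a short bash sketch. It only compares the two access modes used in this article and is not Fluid's own validation logic; the function names `mode_rank` and `is_tightened_or_equal` are hypothetical.

```shell
# Rank an access mode by how much it allows.
# Illustrative ordering that covers only the two modes used in this article.
mode_rank() {
  case "$1" in
    ReadOnlyMany)  echo 1 ;;
    ReadWriteMany) echo 2 ;;
    *) echo "unknown access mode: $1" >&2; return 1 ;;
  esac
}

# Succeeds when the SubDataset mode is the same as, or stricter than,
# the mode of the dataset it refers to.
# Arguments: <parent access mode> <SubDataset access mode>
is_tightened_or_equal() {
  local parent sub
  parent=$(mode_rank "$1") || return 2
  sub=$(mode_rank "$2") || return 2
  [ "$sub" -le "$parent" ]
}
```

For example, `is_tightened_or_equal ReadWriteMany ReadOnlyMany` succeeds, while swapping the two arguments fails.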

This article uses AlluxioRuntime as an example to explain how to use Fluid to support SubDataset.

Suppose user A creates a dataset named spark in the Kubernetes namespace spark. The dataset contains three Spark versions, and user A wants team A to see only spark-3.1.3 and team B to see only spark-3.3.1:

    .
    |-- spark-3.1.3
    |   |-- SparkR_3.1.3.tar.gz
    |   |-- pyspark-3.1.3.tar.gz
    |   |-- spark-3.1.3-bin-hadoop2.7.tgz
    |   |-- spark-3.1.3-bin-hadoop3.2.tgz
    |   |-- spark-3.1.3-bin-without-hadoop.tgz
    |   `-- spark-3.1.3.tgz
    |-- spark-3.2.3
    |   |-- SparkR_3.2.3.tar.gz
    |   |-- pyspark-3.2.3.tar.gz
    |   |-- spark-3.2.3-bin-hadoop2.7.tgz
    |   |-- spark-3.2.3-bin-hadoop3.2-scala2.13.tgz
    |   |-- spark-3.2.3-bin-hadoop3.2.tgz
    |   |-- spark-3.2.3-bin-without-hadoop.tgz
    |   `-- spark-3.2.3.tgz
    `-- spark-3.3.1
        |-- SparkR_3.3.1.tar.gz
        |-- pyspark-3.3.1.tar.gz
        |-- spark-3.3.1-bin-hadoop2.tgz
        |-- spark-3.3.1-bin-hadoop3-scala2.13.tgz
        |-- spark-3.3.1-bin-hadoop3.tgz
        |-- spark-3.3.1-bin-without-hadoop.tgz
        `-- spark-3.3.1.tgz

This setup lets different teams access different SubDatasets while keeping their data invisible to each other.

1. Before you run the sample code, refer to the installation document and check that the Fluid components are running properly:

    $ kubectl get po -n fluid-system
    NAME                                         READY   STATUS    RESTARTS   AGE
    alluxioruntime-controller-5c6d5f44b4-p69mt   1/1     Running   0          149m
    csi-nodeplugin-fluid-bx5d2                   2/2     Running   0          149m
    csi-nodeplugin-fluid-n6vbv                   2/2     Running   0          149m
    csi-nodeplugin-fluid-t5p8c                   2/2     Running   0          148m
    dataset-controller-797868bd7f-g4slx          1/1     Running   0          149m
    fluid-webhook-6f6b7dfd74-hmsgw               1/1     Running   0          149m
    fluidapp-controller-79c7b89c9-rtdg7          1/1     Running   0          149m
    thinruntime-controller-5fdbfd54d4-l9jcz      1/1     Running   0          149m

2. Create a namespace spark:

    $ kubectl create ns spark

3. Create a Dataset and an AlluxioRuntime in the namespace spark. The dataset is read-write:

    $ cat<<EOF >dataset.yaml
    apiVersion: data.fluid.io/v1alpha1
    kind: Dataset
    metadata:
      name: spark
      namespace: spark
    spec:
      mounts:
        - mountPoint: https://mirrors.bit.edu.cn/apache/spark/
          name: spark
          path: "/"
      accessModes:
        - ReadWriteMany
    ---
    apiVersion: data.fluid.io/v1alpha1
    kind: AlluxioRuntime
    metadata:
      name: spark
      namespace: spark
    spec:
      replicas: 1
      tieredstore:
        levels:
          - mediumtype: MEM
            path: /dev/shm
            quota: 4Gi
            high: "0.95"
            low: "0.7"
    EOF
    $ kubectl create -f dataset.yaml

4. View the status of the dataset:

    $ kubectl get dataset -n spark
    NAME    UFS TOTAL SIZE   CACHED   CACHE CAPACITY   CACHED PERCENTAGE   PHASE   AGE
    spark   3.41GiB          0.00B    4.00GiB          0.0%                Bound   61s

5. Create a Pod in the namespace spark to access the dataset:

    $ cat<<EOF >app.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
      namespace: spark
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - mountPath: /data
              name: spark
      volumes:
        - name: spark
          persistentVolumeClaim:
            claimName: spark
    EOF
    $ kubectl create -f app.yaml

6. View the data that the application can access through the dataset. You can see three folders: spark-3.1.3, spark-3.2.3, and spark-3.3.1. The mount is read-write (rw), which matches the ReadWriteMany access mode of the dataset.

    $ kubectl exec -it -n spark nginx -- bash
    root@nginx:/# mount | grep /data
    alluxio-fuse on /data type fuse.alluxio-fuse (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other,max_read=131072)
    root@nginx:/# ls -ltr /data/
    total 2
    dr--r----- 1 root root 6 Dec 16 07:25 spark-3.1.3
    dr--r----- 1 root root 7 Dec 16 07:25 spark-3.2.3
    dr--r----- 1 root root 7 Dec 16 07:25 spark-3.3.1
    root@nginx:/# ls -ltr /data/spark-3.1.3/
    total 842999
    -r--r----- 1 root root  25479200 Feb  6  2022 spark-3.1.3.tgz
    -r--r----- 1 root root 164080426 Feb  6  2022 spark-3.1.3-bin-without-hadoop.tgz
    -r--r----- 1 root root 231842529 Feb  6  2022 spark-3.1.3-bin-hadoop3.2.tgz
    -r--r----- 1 root root 227452039 Feb  6  2022 spark-3.1.3-bin-hadoop2.7.tgz
    -r--r----- 1 root root 214027643 Feb  6  2022 pyspark-3.1.3.tar.gz
    -r--r----- 1 root root    347324 Feb  6  2022 SparkR_3.1.3.tar.gz
    root@nginx:/# ls -ltr /data/spark-3.2.3/
    total 1368354
    -r--r----- 1 root root  28375439 Nov 14 18:47 spark-3.2.3.tgz
    -r--r----- 1 root root 209599610 Nov 14 18:47 spark-3.2.3-bin-without-hadoop.tgz
    -r--r----- 1 root root 301136158 Nov 14 18:47 spark-3.2.3-bin-hadoop3.2.tgz
    -r--r----- 1 root root 307366638 Nov 14 18:47 spark-3.2.3-bin-hadoop3.2-scala2.13.tgz
    -r--r----- 1 root root 272866820 Nov 14 18:47 spark-3.2.3-bin-hadoop2.7.tgz
    -r--r----- 1 root root 281497207 Nov 14 18:47 pyspark-3.2.3.tar.gz
    -r--r----- 1 root root    349762 Nov 14 18:47 SparkR_3.2.3.tar.gz

Next, give team A access to only the spark-3.1.3 subdirectory.

1. Create a namespace spark-313:

    $ kubectl create ns spark-313

2. The administrator creates a Dataset in the spark-313 namespace that references the spark dataset. The mountPoint format is dataset://${namespace of the initial dataset}/${name of the initial dataset}/${subdirectory}. In this example, the value is dataset://spark/spark/spark-3.1.3.

Note: A dataset that references another dataset currently supports only one mount, and the mountPoint must use the dataset:// format. (Dataset creation fails if a dataset:// mount is combined with mounts in other formats.) The dataset permission is read-write.
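The mountPoint format described above can be sketched as a small bash helper. This is a minimal illustration, not part of Fluid: the function names `subdataset_mountpoint` and `is_dataset_reference` are hypothetical, and the check only looks at the dataset:// prefix.

```shell
# Build a SubDataset mountPoint of the form
#   dataset://<namespace>/<dataset name>/<subdirectory>
subdataset_mountpoint() {
  local ns="$1" name="$2" subdir="$3"
  # Drop one leading and one trailing slash so the parts join with a single "/".
  subdir="${subdir#/}"
  subdir="${subdir%/}"
  if [ -z "$ns" ] || [ -z "$name" ] || [ -z "$subdir" ]; then
    echo "usage: subdataset_mountpoint <namespace> <dataset> <subdirectory>" >&2
    return 1
  fi
  printf 'dataset://%s/%s/%s\n' "$ns" "$name" "$subdir"
}

# Succeeds only if the value starts with the dataset:// scheme.
is_dataset_reference() {
  case "$1" in
    dataset://?*) return 0 ;;
    *) return 1 ;;
  esac
}
```

Calling `subdataset_mountpoint spark spark spark-3.1.3` prints the value used in this example, dataset://spark/spark/spark-3.1.3.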

    $ cat<<EOF >spark-313.yaml
    apiVersion: data.fluid.io/v1alpha1
    kind: Dataset
    metadata:
      name: spark
      namespace: spark-313
    spec:
      mounts:
        - mountPoint: dataset://spark/spark/spark-3.1.3
      accessModes:
        - ReadWriteMany
    EOF
    $ kubectl create -f spark-313.yaml

3. View the dataset status:

    $ kubectl get dataset -n spark-313
    NAME    UFS TOTAL SIZE   CACHED   CACHE CAPACITY   CACHED PERCENTAGE   PHASE   AGE
    spark   3.41GiB          0.00B    4.00GiB          0.0%                Bound   108s

4. The user creates a Pod in the spark-313 namespace:

    $ cat<<EOF >app-spark313.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
      namespace: spark-313
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - mountPath: /data
              name: spark
      volumes:
        - name: spark
          persistentVolumeClaim:
            claimName: spark
    EOF
    $ kubectl create -f app-spark313.yaml

5. When you access data in the spark-313 namespace, you can see only the contents of the spark-3.1.3 folder, and the PVC access mode is RWX (ReadWriteMany):

    $ kubectl get pvc -n spark-313
    NAME    STATUS   VOLUME            CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    spark   Bound    spark-313-spark   100Gi      RWX            fluid          21h
    $ kubectl exec -it -n spark-313 nginx -- bash
    root@nginx:/# mount | grep /data
    alluxio-fuse on /data type fuse.alluxio-fuse (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other,max_read=131072)
    root@nginx:/# ls -ltr /data/
    total 842999
    -r--r----- 1 root root  25479200 Feb  6  2022 spark-3.1.3.tgz
    -r--r----- 1 root root 164080426 Feb  6  2022 spark-3.1.3-bin-without-hadoop.tgz
    -r--r----- 1 root root 231842529 Feb  6  2022 spark-3.1.3-bin-hadoop3.2.tgz
    -r--r----- 1 root root 227452039 Feb  6  2022 spark-3.1.3-bin-hadoop2.7.tgz
    -r--r----- 1 root root 214027643 Feb  6  2022 pyspark-3.1.3.tar.gz
    -r--r----- 1 root root    347324 Feb  6  2022 SparkR_3.1.3.tar.gz

Then, give team B read-only access to only the spark-3.3.1 subdirectory.

1. Create a namespace spark-331:

    $ kubectl create ns spark-331

2. The administrator creates a Dataset in the spark-331 namespace that references the spark dataset. The mountPoint format is dataset://${namespace of the initial dataset}/${name of the initial dataset}/${subdirectory}. In this example, the value is dataset://spark/spark/spark-3.3.1.

Note: A dataset that references another dataset currently supports only one mount, and the mountPoint must use the dataset:// format. (Dataset creation fails if a dataset:// mount is combined with mounts in other formats.) The access mode is set to ReadOnlyMany.
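The access mode names used in these manifests appear in abbreviated form in the output of `kubectl get pvc` (RWX and ROX in this walkthrough). A minimal bash lookup of the standard Kubernetes abbreviations is sketched below; the function name `access_mode_abbrev` is hypothetical.

```shell
# Map a Kubernetes access mode name to the abbreviation shown by kubectl.
access_mode_abbrev() {
  case "$1" in
    ReadWriteOnce)    echo RWO ;;
    ReadOnlyMany)     echo ROX ;;
    ReadWriteMany)    echo RWX ;;
    ReadWriteOncePod) echo RWOP ;;
    *) echo "unknown access mode: $1" >&2; return 1 ;;
  esac
}
```

For the Dataset in this step, `access_mode_abbrev ReadOnlyMany` prints ROX.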

    $ cat<<EOF >spark-331.yaml
    apiVersion: data.fluid.io/v1alpha1
    kind: Dataset
    metadata:
      name: spark
      namespace: spark-331
    spec:
      mounts:
        - mountPoint: dataset://spark/spark/spark-3.3.1
      accessModes:
        - ReadOnlyMany
    EOF
    $ kubectl create -f spark-331.yaml

3. The user creates a Pod in the spark-331 namespace:

    $ cat<<EOF >app-spark331.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
      namespace: spark-331
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - mountPath: /data
              name: spark
      volumes:
        - name: spark
          persistentVolumeClaim:
            claimName: spark
    EOF
    $ kubectl create -f app-spark331.yaml

4. When you access data in the spark-331 namespace, you can see only the contents of the spark-3.3.1 folder, and the PVC access mode is ROX (ReadOnlyMany):

    $ kubectl get pvc -n spark-331
    NAME    STATUS   VOLUME            CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    spark   Bound    spark-331-spark   100Gi      ROX            fluid          14m
    $ kubectl exec -it -n spark-331 nginx -- bash
    root@nginx:/# mount | grep /data
    alluxio-fuse on /data type fuse.alluxio-fuse (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other,max_read=131072)
    root@nginx:/# ls -ltr /data/
    total 842999
    -r--r----- 1 root root  25479200 Feb  6  2022 spark-3.1.3.tgz
    -r--r----- 1 root root 164080426 Feb  6  2022 spark-3.1.3-bin-without-hadoop.tgz
    -r--r----- 1 root root 231842529 Feb  6  2022 spark-3.1.3-bin-hadoop3.2.tgz
    -r--r----- 1 root root 227452039 Feb  6  2022 spark-3.1.3-bin-hadoop2.7.tgz
    -r--r----- 1 root root 214027643 Feb  6  2022 pyspark-3.1.3.tar.gz
    -r--r----- 1 root root    347324 Feb  6  2022 SparkR_3.1.3.tar.gz

In this example, we demonstrated how a SubDataset exposes a subdirectory of a Dataset as a Dataset in its own right. This lets different data scientists use the same dataset according to different partitioning strategies.
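The two SubDataset manifests in this walkthrough differ only in the target namespace, the subdirectory, and the access mode. As a minimal sketch, a bash function can render them from a template. The function name `render_subdataset` is hypothetical, and the source dataset (name spark in namespace spark) is hard-coded to match this example.

```shell
# Print a SubDataset manifest that references the spark dataset
# in the spark namespace (both hard-coded for this example).
# Arguments: <target namespace> <subdirectory> <access mode>
render_subdataset() {
  local target_ns="$1" subdir="$2" mode="$3"
  cat <<EOF
apiVersion: data.fluid.io/v1alpha1
kind: Dataset
metadata:
  name: spark
  namespace: ${target_ns}
spec:
  mounts:
    - mountPoint: dataset://spark/spark/${subdir}
  accessModes:
    - ${mode}
EOF
}
```

For example, `render_subdataset spark-331 spark-3.3.1 ReadOnlyMany` prints the manifest used for the spark-331 namespace, and the output can be piped to `kubectl create -f -` in place of writing an intermediate file.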
