By Rong Gu

Cloud-native technology offers resource cost efficiency, easy deployment and maintenance, and flexible computing power. As a result, more enterprises and developers are running data-intensive applications, especially those in the AI and big data fields, in cloud-native environments. While the cloud-native computing and storage separation architecture provides benefits in resource economy and scalability, it also increases data access latency and bandwidth costs.

Kubernetes provides traditional data access interfaces, such as the Container Storage Interface (CSI), for integrating and managing heterogeneous storage services. However, it does not define how applications can efficiently use and manage data within a container cluster. Many data-intensive applications need higher-level data access and management interfaces. For example, data scientists running AI model training tasks need to manage dataset versions and access permissions, preprocess datasets, update dynamic data sources, and accelerate heterogeneous data reads. Before the Fluid open source project was introduced, the Kubernetes ecosystem had no standard solution for these needs. This was a critical missing piece for cloud-native environments to fully support big data and AI applications.

To address these challenges, Nanjing University, the Alibaba Cloud Container Service for Kubernetes (ACK) team, and the Alluxio open source community jointly initiated the Fluid open source project. By abstracting the process of data usage in computing tasks, we proposed the concept of cloud-native elastic data abstraction (such as DataSet). Around DataSet, we created Fluid, a cloud-native data orchestration and acceleration system that provides capabilities such as dataset management (CRUD operations), permission management, and access acceleration. After joining the Cloud Native Computing Foundation (CNCF) in April 2021 and undergoing 36 months of continuous R&D iteration and production environment validation, we officially released the mature and stable Fluid 1.0.

The testing framework of Fluid includes daily unit tests, functional tests, compatibility tests, security tests, and real-world scenario tests. Before each release, Fluid undergoes compatibility testing across different Kubernetes versions.

Fluid originated from the cooperation in scientific research between universities and enterprises. Since its open source release, Fluid has been widely applied by community users of different sizes from various industries in scenarios such as AIGC, large models, big data, hybrid cloud, cloud-based development machine management, and autonomous driving data simulation. After continuous iteration and improvement in real cloud applications, the system has matured in stability, performance, and scalability.

According to statistics from both public and private cloud environments, thousands of Kubernetes clusters are using Fluid. It supports up to tens of thousands of nodes in machine learning platforms. Daily users of Fluid in cloud-native environments come from diverse fields such as the Internet, technology, finance, telecommunications, education, autonomous driving and robotics, and smart manufacturing.

Some users have also shared their experiences with Fluid in various scenarios within the open source community.

Fluid 1.0 boasts several key features:

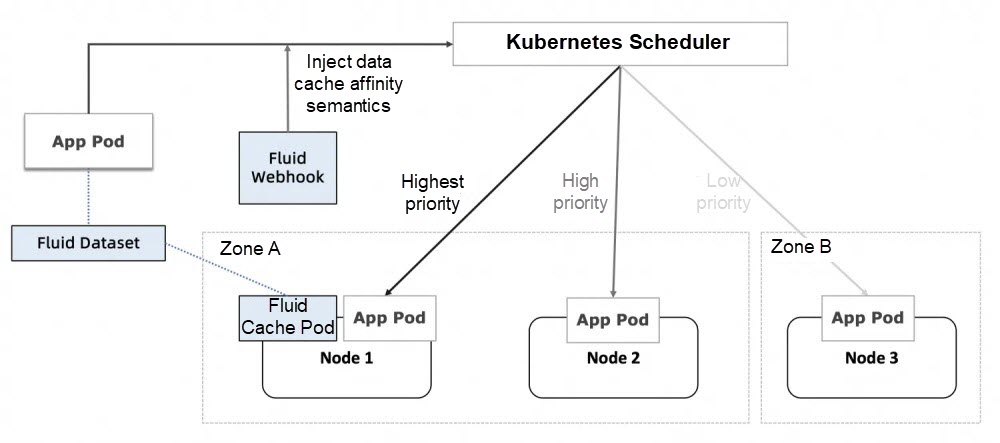

Fluid allows users to schedule tasks based on where dataset caches are located, without the need to understand the detailed layout of the data caches. This scheduling strategy works in the following way:

a. Data Cache Locality Levels: Fluid classifies data access into different levels based on how close the data cache is to the computing tasks. These levels include "on the same node", "in the same rack", "in the same availability zone", and "in the same region".

b. Priority Scheduling Strategy: Fluid first tries to schedule computing tasks onto the nodes where the data cache is located, to ensure optimal data locality. If the best locality cannot be achieved, Fluid falls back level by level, preferring nodes with the shortest data transmission distance.

c. Flexible Configuration: To accommodate the different definitions of affinity and various cloud service providers, Fluid supports custom configurations based on labels. Users can adjust the scheduling strategy according to their specific cloud environment and cluster setup to meet different needs.

For more information, see Fluid Supports Tiered Locality Scheduling.
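The tiered fallback described above can be illustrated with a minimal Python sketch. This is not Fluid's scheduler code: the `Location` class, its field names, and the `pick_node` helper are hypothetical, and the sketch assumes that rack, zone, and region labels are unique across the cluster.

```python
from dataclasses import dataclass
from typing import List

# Locality levels from closest to farthest, as named in the list above.
LOCALITY_LEVELS = ["node", "rack", "zone", "region"]


@dataclass(frozen=True)
class Location:
    """Hypothetical topology labels for a node (names are illustrative)."""
    node: str
    rack: str
    zone: str
    region: str


def locality_rank(candidate: Location, cache: Location) -> int:
    """Return the index of the closest level shared with the cache.

    0 means same node; len(LOCALITY_LEVELS) means nothing is shared.
    """
    for rank, level in enumerate(LOCALITY_LEVELS):
        if getattr(candidate, level) == getattr(cache, level):
            return rank
    return len(LOCALITY_LEVELS)


def pick_node(candidates: List[Location], cache: Location) -> Location:
    """Prefer the candidate with the smallest data transmission distance."""
    return min(candidates, key=lambda c: locality_rank(c, cache))


cache = Location("node-a", "rack-1", "zone-1", "region-1")
candidates = [
    Location("node-x", "rack-9", "zone-2", "region-1"),  # shares only the region
    Location("node-b", "rack-1", "zone-1", "region-1"),  # shares the rack
]
best = pick_node(candidates, cache)
```

Here `best` is the candidate in the same rack as the cache, because no candidate sits on the cache node itself. In a real cluster the levels would be driven by configurable node labels rather than fixed fields.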

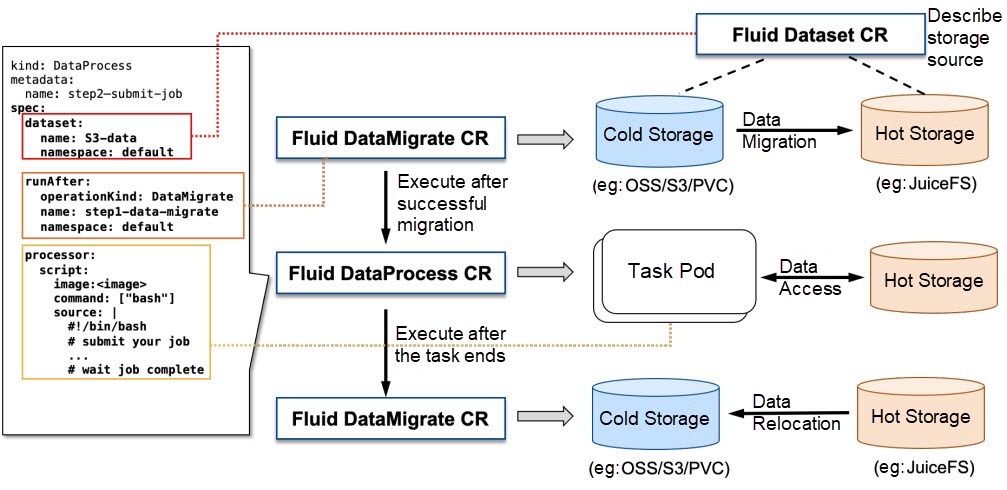

Fluid orchestrates both data and computing tasks within Kubernetes, in both spatial and temporal dimensions. Spatial orchestration means scheduling computing tasks to nodes that hold cached data or are close to the caches, which boosts the performance of data-intensive applications. Temporal orchestration lets users submit data operations and computing tasks at the same time, while Fluid ensures that data migration and preheating complete before the tasks start. This enables smooth, unattended task execution and improves engineering efficiency.

The latest version of Fluid introduces a new type of data operation called DataProcess. This provides data scientists with a way to define custom data processing logic. Fluid also offers various trigger mechanisms for all data operations, including once, onEvent, and Cron.

For example, the following setup runs a data preheating operation every two minutes:

    apiVersion: data.fluid.io/v1alpha1
    kind: DataLoad
    metadata:
      name: cron-dataload
    spec:
      dataset:
        name: demo
        namespace: default
      policy: Cron
      schedule: "*/2 * * * *" # Run every 2 min

Fluid introduces the DataFlow feature, enabling users to define automated data processing workflows with its API. DataFlow supports all Fluid operations, such as cache preheating (DataLoad), data migration (DataMigrate), data backup (DataBackup), and custom data processing (DataProcess). This makes it easier for both O&M engineers and data scientists to manage data operations.

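For example, consider a sequence of four operations: a data migration, a cache preheat, a custom processing step, and a second data migration in the opposite direction.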

Note: DataFlow only supports sequential execution of data operations, following the defined order. It does not support advanced features such as parallel execution, iterative execution, or conditional execution. For such requirements, users should use Argo Workflow or Tekton.
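To make the sequential-only model concrete, here is a minimal Python sketch of a runner that executes steps strictly in their defined order. It is an illustration, not Fluid's implementation: the `run_sequential` function and the step names are hypothetical, and stopping at the first failed step is an assumption that the text above does not confirm.

```python
from typing import Callable, List, Tuple

# Each step is a (name, operation) pair; the operation returns True on success.
Step = Tuple[str, Callable[[], bool]]


def run_sequential(steps: List[Step]) -> List[str]:
    """Run steps in the defined order and stop at the first failure.

    Returns the names of the steps that completed successfully.
    """
    completed: List[str] = []
    for name, operation in steps:
        if not operation():
            break  # assumed behavior: later steps never start
        completed.append(name)
    return completed


steps: List[Step] = [
    ("migrate-in", lambda: True),
    ("preheat", lambda: True),
    ("process", lambda: False),  # simulate a failed processing step
    ("migrate-out", lambda: True),
]
completed = run_sequential(steps)
```

With the simulated failure in the third step, only the first two steps complete. There is no branching, looping, or fan-out in this model, which is why workflows that need those features are better served by a general-purpose workflow engine.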

In practice, data scientists prefer to use code (Python) instead of YAML to define their workflows. Therefore, Fluid offers a higher-level Python interface, making it easier to automate dataset operations and create data flows. The following code is the Python implementation of the previously described workflow:

    flow = (
        dataset.migrate(path="/data/",
                        migrate_direction=constants.DATA_MIGRATE_DIRECTION_FROM)
        .load("/data/1.txt")
        .process(processor=create_processor(train))
        .migrate(path="/data/",
                 migrate_direction=constants.DATA_MIGRATE_DIRECTION_TO)
    )

    run = flow.run()

For more information, visit https://github.com/fluid-cloudnative/fluid-client-python/blob/master/examples/02_ml_train_pipeline/pipeline.ipynb

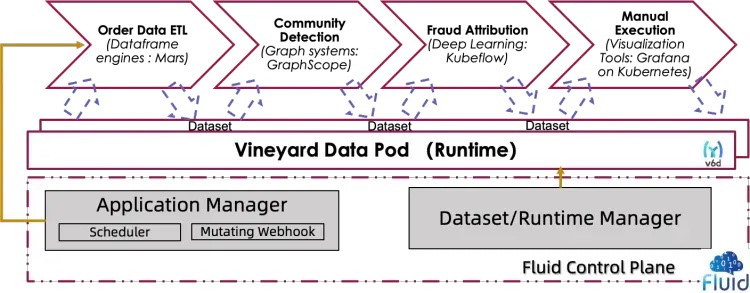

Through its plugin system, Fluid integrates distributed cache engines designed for file systems, such as Alluxio, JindoFS, and JuiceFS. Fluid 1.0 also integrates Vineyard, a distributed in-memory data manager, combining the efficient data sharing capabilities of Vineyard with the task orchestration feature of Fluid. It also provides data scientists with a Python interface, allowing them to manage intermediate data in Kubernetes by using familiar tools.

For more information, see Fluid and Vineyard Team Up for Efficient Intermediate Data Management in Kubernetes.

In production environments, stability, scalability, and security are crucial for open source software. These are the areas where Fluid continues to improve.

The performance and scalability of Fluid have been validated in large-scale Kubernetes production environments. Fluid reliably supports clusters with over 10,000 nodes. During 24/7 operation, Fluid manages the full lifecycle of over 2,500 datasets and supports more than 6,000 AI workloads accessing data via Fluid-mounted datasets, totaling around 120,000 pods.

Given the widespread deployment of Fluid in large-scale production-grade Kubernetes clusters, we conducted stress tests on the control plane components of Fluid. The results show that with three replicas, the Fluid Webhook can handle pod scheduling requests at a rate of 125 queries per second (QPS), with 90% of requests processed in less than 25 milliseconds. The Fluid Controller supports configuring over 500 custom Fluid datasets per minute. These results demonstrate the ability of Fluid to meet the demands of large-scale cluster scenarios.
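As a rough back-of-the-envelope reading of these figures, the short Python calculation below derives per-replica and per-second rates. The even spread of requests across webhook replicas and the sustained request rate are assumptions made for illustration; the 120,000-pod figure comes from the production numbers quoted earlier.

```python
# Figures quoted in the text above.
webhook_qps = 125          # pod scheduling requests per second, all replicas
webhook_replicas = 3
datasets_per_minute = 500  # lower bound reported for the Fluid Controller
total_pods = 120_000       # pods reported in the large-scale production case

# Assumption: requests are spread evenly across the webhook replicas.
qps_per_replica = webhook_qps / webhook_replicas

# Convert the controller figure to a per-second rate.
datasets_per_second = datasets_per_minute / 60

# Assumption: the webhook sustains its measured rate for the whole burst.
seconds_for_all_pods = total_pods / webhook_qps

print(f"{qps_per_replica:.1f} requests/s per replica")
print(f"{datasets_per_second:.1f} datasets/s")
print(f"{seconds_for_all_pods / 60:.0f} minutes to process {total_pods} pod requests")
```

Under these assumptions, each replica handles roughly 42 requests per second, and the webhook could process scheduling requests for all 120,000 pods in about 16 minutes.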

In large model training and inference tasks, FUSE processes may crash and restart due to insufficient memory resources or other issues. This will cause FUSE mount points to disconnect, disrupt data access, and impact online business availability. This issue is more common with FUSE-based storage clients. If these problems cannot be automatically resolved, tasks and services may be interrupted, and manual recovery can be complex and time-consuming. To address this issue, Fluid 1.0 has optimized the self-recovery mechanism, which has been successfully deployed in multiple large-scale user scenarios.
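The text does not describe how the self-recovery mechanism is implemented, so the following Python sketch only illustrates the symptom it has to detect. On Linux, accessing a mount point whose FUSE process has died typically fails with `ENOTCONN` ("Transport endpoint is not connected"). The `is_mount_broken` helper is hypothetical, and treating `EIO` as a second indicator is an assumption.

```python
import errno
import os

# errno values that typically indicate a dead FUSE process behind a mount point.
# ENOTCONN is reported as "Transport endpoint is not connected" on Linux.
BROKEN_MOUNT_ERRNOS = {errno.ENOTCONN, errno.EIO}


def is_mount_broken(path: str) -> bool:
    """Return True if stat() fails in a way that suggests the FUSE process is gone.

    Other errors, such as a path that does not exist, are not treated as a
    broken mount.
    """
    try:
        os.stat(path)
    except OSError as exc:
        return exc.errno in BROKEN_MOUNT_ERRNOS
    return False
```

A recovery loop could call such a check periodically for each mount point and trigger a remount when it returns `True`, so that running tasks regain data access without manual intervention.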

Adhering to the principle of least privilege, Fluid 1.0 has removed unnecessary RBAC resource access and permissions.

For more information, visit https://github.com/fluid-cloudnative/fluid/releases/tag/v1.0.0

The goal of the Fluid open source project is to help AI and big data users use data more efficiently, flexibly, economically, and securely in Kubernetes.

Fluid 1.0 further breaks down the barriers between data and computing. Users can flexibly use different data sources (object storage, traditional distributed storage, and programmable memory objects) from various Kubernetes environments (including runC and Kata Containers). Fluid also uses distributed cache engines (such as Alluxio, JuiceFS, JindoFS, and Vineyard) and data affinity scheduling to improve application data access efficiency.

In future versions, Fluid will continue to integrate with the Kubernetes cloud-native ecosystem and focus on improving data scientists' efficiency and experience. We plan to address the following issues:

We thank all the open source contributors who worked hard on the Fluid 1.0 release. For more information about contributions and contributors, see the Fluid 1.0 release note at https://github.com/fluid-cloudnative/fluid/releases/tag/v1.0.0

We also appreciate the feedback and support from the Fluid open source community. For a list of registered Fluid community users, visit https://github.com/fluid-cloudnative/fluid/blob/master/ADOPTERS.md
